Collision Avoidance of Intelligent Vehicle based on Networked High-speed Vision System

Masahiro Hirano¹, Akihito Noda², Yuji Yamakawa¹ and Masatoshi Ishikawa¹

¹ Graduate School of Information Science and Technology, The University of Tokyo, 7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

² Graduate School of Frontier Science, The University of Tokyo, 5-1-5 Kashiwanohara, Kashiwa-shi, Chiba 277-8561, Japan

Keywords:

Networked High-speed Vision, Collision Avoidance, Intelligent Vehicle.

Abstract:

We propose a driving safety support system (DSSS) that employs a high-speed vision system installed in

the environment surrounding, for instance, highways, urban roads, and intersections. The aim of the system

is to recognize potentially dangerous traffic situations, including those that are undetectable from a moving

vehicle, and to use this information for supporting safe driving. The system consists of a vision network

of synchronized high-speed cameras that are capable of acquiring images at one-millisecond intervals, and

vehicles that are capable of communicating with this network through communication hubs. We conducted

collision avoidance experiments and demonstrated that, by introducing high-speed vision, the proposed system

can resolve the issue of slow reaction time, which is common to environmental vision systems.

1 INTRODUCTION

Intelligent transport systems (ITSs) have been de-

veloped rapidly in response to the increasing need

for safe, economical, and environment-friendly trans-

portation around the world. In Europe for instance,

the “Europe 2020” action plan drawn up by the Commission of the European Community includes

modernization of transport systems, utilization of in-

formation and communication technology, and so

forth. Also in Japan, the government has been a driv-

ing force towards realizing the world’s safest transport

systems and has outlined several quantitative indica-

tors that will be used for evaluating the efficiency of

these systems.

To meet such demands, a large number of systems

have been proposed for various applications, like driving safety support. We, however, do not believe that

this approach, i.e. to design a system for solving ex-

isting problems, is the right one. On the contrary, we

believe that a better approach is to have the design of

an ITS as the starting point.

Driving safety support systems based on vision systems installed on vehicles have been proposed (Cherng et al., 2009). However, it is impractical to require that all vehicles be equipped with vision units. A more practical and feasible approach is to consider a vision system installed in the environment, for example, roadsides.

Figure 1: The purpose of this research. (Axes: readiness, evaluated by maximum delay, and field-of-view of vision system. Labels: standard environmental vision, high-speed environmental vision, this research, onboard vision.)

It is widely considered that the introduction of

driving safety support systems based on interac-

tions between vehicles and the environment will con-

tribute in a major way to achieving the goals men-

tioned above (Papadimitratos and Evenssen, 2009;

Kim and Kim, 2009). For instance, studies have

shown that vehicle-based vision systems are inferior

to environment-based vision systems in some cases,

for instance, when detecting potential dangers around

539

Hirano M., Noda A., Yamakawa Y. and Ishikawa M..

Collision Avoidance of Intelligent Vehicle based on Networked High-speed Vision System.

DOI: 10.5220/0005100105390544

In Proceedings of the 11th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2014), pages 539-544

ISBN: 978-989-758-040-6

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

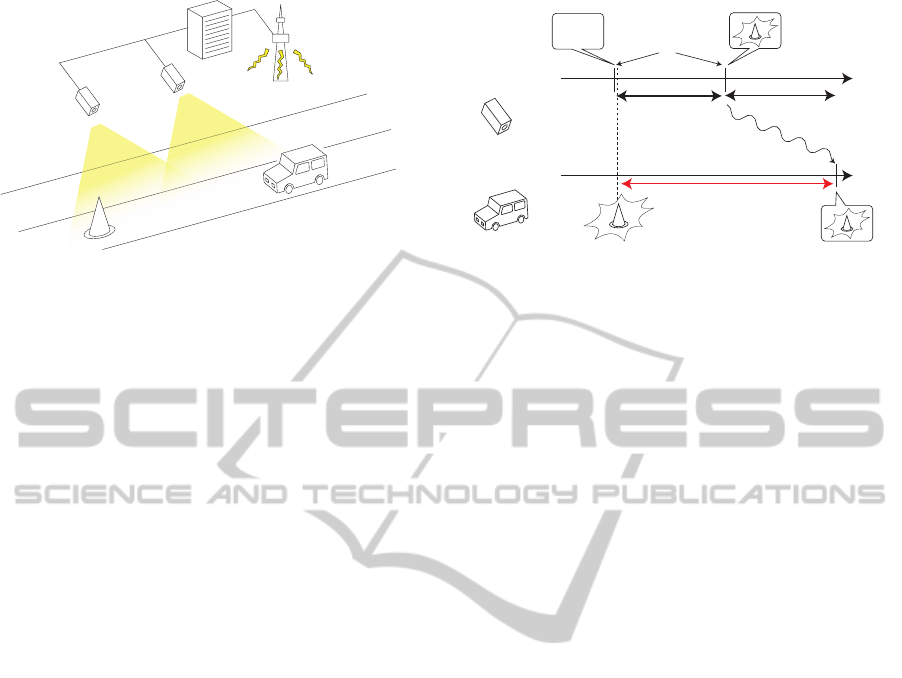

high-speed

camera

Communication

Station

Vehicle

Analysis

Server

Figure 2: Proposed driving safety support system for colli-

sion avoidance.

corners, or on highways when the driver’s sight is lim-

ited by large trucks (Vceraraghavan et al., 2002).

However, an environment-based system providing

information about potential obstacles involves a long

reaction time, which is a serious drawback in time-

critical situations like collision avoidance. Consider-

ing a situation in which a pedestrian runs out in front

of a vehicle from behind a parked vehicle, it is diffi-

cult to avoid a collision with the pedestrian by using

an environment-based vision system alone.

We propose a novel system employing environ-

mental high-speed cameras installed in areas with

frequent traffic accidents, such as blind intersections

and highways. This camera network forms a vi-

sion system synchronized for sub-millisecond com-

munication, a system that we call the “Networked

High-speed Vision System” (Noda et al., 2014). This

system can overcome the deficiencies in standard

environment-based vision systems, as detailed in Sec-

tion 2. The concept of our study is illustrated in Fig-

ure 1.

The system used in this research consists of two

high-speed cameras connected to image processing

workstations, which are connected via a network.

Furthermore, there is a communication station for

broadcasting information to vehicles about the sur-

rounding traffic situation, including obstacles around

them and other vehicles. The cameras are installed

in such a way that the field-of-view of one camera

slightly overlaps with that of the other.

With this set-up it is possible to have a large de-

tection range for vehicles moving at high speed and

obstacles, even if they are not visible by the same

camera. The high-speed vehicles are equipped to re-

ceive information about obstacles detected by the vi-

sion system, and to take appropriate action, like colli-

sion avoidance.

In the remainder of this paper, we describe the

proposed driving safety support system designed for

installation on a highway and present results of ex-

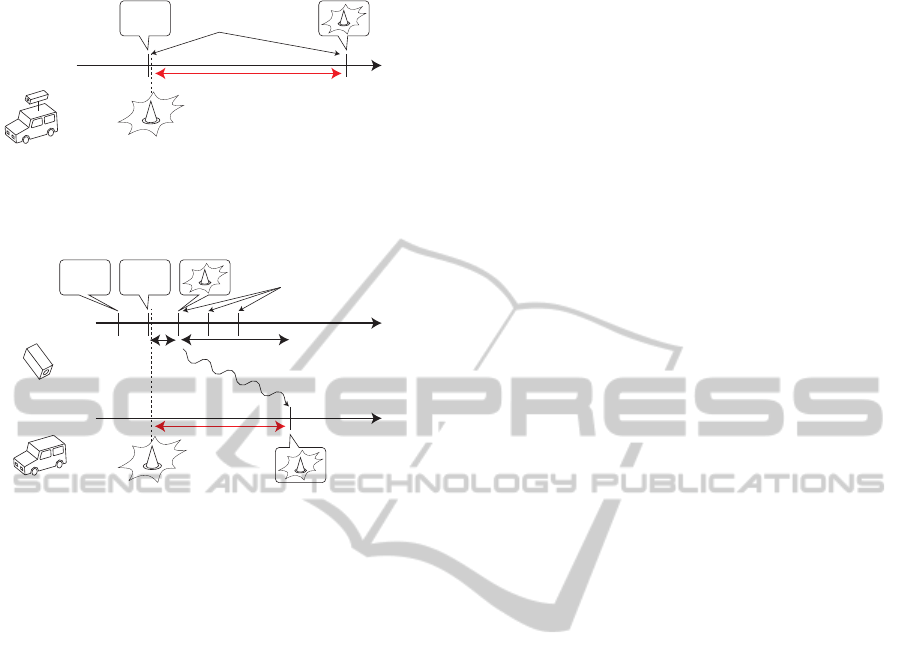

Obstacle recognition & transmission

Frame acquisition

33 [ms] 15 [ms]

48 [ms]

Obstacle emergence

Environmental

vision

Vehicle

Time [ms]

Obstacle information reception

Figure 3: Timing diagram of environmental vision system

using standard camera (30 fps).

periments conducted for verifying the effectiveness of

this system, specifically, collision avoidance experi-

ments using vision systems that capture images at dif-

ferent frame rates (30 frames per second (fps) and 600

fps).

2 PROPOSED SYSTEM

2.1 Networked High-speed Vision System

Networked high-speed vision systems have previ-

ously been proposed (Noda et al., 2013; Noda et al.,

2014). In this subsection, we give a short overview of

the system.

The networked high-speed vision system con-

sists of multiple high-speed cameras and PCs which

are connected via a network. These cameras have

partly overlapping fields-of-view and observe the en-

tire space of interest. The PCs are synchronized to

sub-millisecond order by using the software-based

precision time protocol (PTP). When applying this

system to object tracking, the data transmitted in the

network includes the position of the tracked target and

the timestamp when the frame is acquired.

Thanks to the high acquisition rate, target track-

ing can be realized by a simple computer algorithm.

This system is designed to be used in a number of sit-

uations, including highways and intersections. The

main target of the system is to track objects mov-

ing in the field of view. For this purpose, back-

ground subtraction is an appropriate algorithm be-

cause it can be executed with low computational load.

Since the cameras are calibrated, homography matrices for transforming the cameras’ coordinate systems into a common world coordinate system can be obtained. Each target can be identified by its position in the previous frame due to the small movement of the target during the short frame interval of the high-speed cameras. The main tasks of the CPU include background subtraction, binarization, calculating the image centroid, and so on, which enables tracking to be completed within a 1-millisecond frame interval.

Figure 4: Timing diagram of onboard vision system using standard camera (30 fps). (Timeline in ms from obstacle emergence: frame acquisition, 33 ms, followed by obstacle recognition in the vehicle with onboard vision.)

Figure 5: Timing diagram of environmental high-speed vision system using high-speed camera (600 fps). (Timeline in ms from obstacle emergence: frame acquisition, 1.6 ms; obstacle recognition and transmission, 15 ms; obstacle information reception by the vehicle at 16.6 ms.)
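The per-frame processing described above (background subtraction, binarization, centroid calculation, and mapping to world coordinates with a homography) can be sketched as follows. This is a minimal illustration in plain Python, not the implementation used in the system; the function names, the intensity threshold of 30, and the list-of-lists image representation are assumptions made for the example.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale image as rows of 0-255 intensities


def track_centroid(frame: Image, background: Image,
                   threshold: int = 30) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of pixels that differ from the background."""
    count = 0
    sum_x = 0
    sum_y = 0
    for y, (row, bg_row) in enumerate(zip(frame, background)):
        for x, (pixel, bg_pixel) in enumerate(zip(row, bg_row)):
            # Background subtraction followed by binarization
            # (threshold value is an assumption for this sketch).
            if abs(pixel - bg_pixel) > threshold:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None  # no foreground pixels, so no target in this frame
    return (sum_x / count, sum_y / count)


def to_world(h: List[List[float]], x: float, y: float) -> Tuple[float, float]:
    """Map an image point to world coordinates with a 3x3 homography h."""
    wx = h[0][0] * x + h[0][1] * y + h[0][2]
    wy = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (wx / w, wy / w)
```

Because each step is a single pass over the image with no search or matching, the cost per frame is small, which is consistent with the low computational load mentioned in the text.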

2.2 Proposed Driving Safety Support System

Networked High-speed Vision Systems have a broad

range of applications in ITS. As a result of the con-

figuration detailed in the previous section, the system

enables us to recognize every traffic situation at a high

frame rate. One of the advantages of this system is

that, since it can robustly track vehicles on a highway,

we can obtain abundant traffic data with high accu-

racy even for vehicles that are not equipped with spe-

cial sensors. Therefore, this system can be applied to

efficient traffic planning and control by surveillance

and analysis of traffic congestion or accidents.

The networked vision system is designed to have

high-speed cameras with overlapping fields-of-view

in order to provide full coverage of the area in ques-

tion. The function of the system is to simultaneously

detect vehicles and obstacles on the road. As a re-

sult, it provides vehicles with traffic information and

“obstacle maps”, which represent the locations of ob-

stacles in relation to the target vehicle, through the

communication station. The vehicles can then decide

whether to take appropriate action, like sudden brak-

ing or steering, in response to the incoming informa-

tion.

In general, an environment-based vision system

can monitor a larger area compared with an onboard

vision system. It is possible to recognize, at an early

stage, different traffic situations involving surround-

ing vehicles and obstacles. However, an additional

delay is inevitably introduced in the wireless commu-

nication. Consider for instance a situation where a

child suddenly runs in front of a vehicle. In this case,

the low responsiveness is a limiting factor in tradi-

tional environment-based vision systems.

Moreover, if an obstacle emerges just after a frame

is acquired, there is a 33 ms delay in detecting the ob-

stacle, assuming the standard frame rate of 30 fps for

standard cameras. Figure 3 illustrates the total delay between the emergence of an obstacle and the vehicle receiving this information: up to 33 ms for detection and 15 ms for system-to-vehicle communication, 48 ms in total.

On the other hand, when a standard camera is in-

stalled in the vehicle, there is no delay due to commu-

nication. Therefore, as illustrated in Figure 4, there

can be a delay of up to 33 ms in such a system.

In contrast, by introducing high-speed cameras capable of image acquisition at 600 fps, the detection

delay can be reduced to 1.6 ms. Figure 5 illustrates

that vehicles can recognize obstacles within 16.6 ms

after an obstacle emerges, including the delay due to

communication.
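The three worst-case delays compared above follow from one simple model: a full frame interval passes before the obstacle can be seen, and environmental systems then add the communication delay. The sketch below reproduces that arithmetic; the function name is hypothetical, and neglecting processing time is a simplifying assumption of this example.

```python
def worst_case_delay_ms(frame_rate_fps: float, comm_delay_ms: float = 0.0) -> float:
    """Worst-case time from obstacle emergence to the vehicle knowing about it.

    Assumes the obstacle appears just after a frame was acquired, so one full
    frame interval passes before it is captured, and that processing time is
    negligible (an assumption of this sketch).
    """
    frame_interval_ms = 1000.0 / frame_rate_fps
    return frame_interval_ms + comm_delay_ms


if __name__ == "__main__":
    cases = {
        "standard environmental (30 fps + 15 ms)": (30.0, 15.0),
        "onboard (30 fps, no communication)": (30.0, 0.0),
        "high-speed environmental (600 fps + 15 ms)": (600.0, 15.0),
    }
    for name, (fps, comm) in cases.items():
        print(f"{name}: {worst_case_delay_ms(fps, comm):.1f} ms")
```

The exact frame intervals are 33.3 ms and 1.67 ms, which the text and the timing diagrams state as 33 ms and 1.6 ms.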

To summarize, the proposed driving safety sup-

port system for high-speed vehicles based on an en-

vironmental high-speed vision system can recognize

traffic situations in the entire area of coverage, and at

the same time can achieve the same high responsive-

ness as standard on-board vision systems.

3 COLLISION AVOIDANCE RULES

The networked high-speed vision system installed on

a highway provides information about objects, like

other vehicles and obstacles, to each of the vehicles

in the covered area simultaneously. It generates an

obstacle map from the viewpoint of each target vehi-

cle. In the case of a highway, the obstacle map will

include distances from the target vehicle to obstacles,

including an indication of which traffic lanes the ob-

stacles are in. The system generates one obstacle map

per vehicle on the highway. Since the proposed sys-

tem uses high-speed vision, the distances that the ve-

CollisionAvoidanceofIntelligentVehiclebasedonNetworkedHigh-speedVisionSystem

541

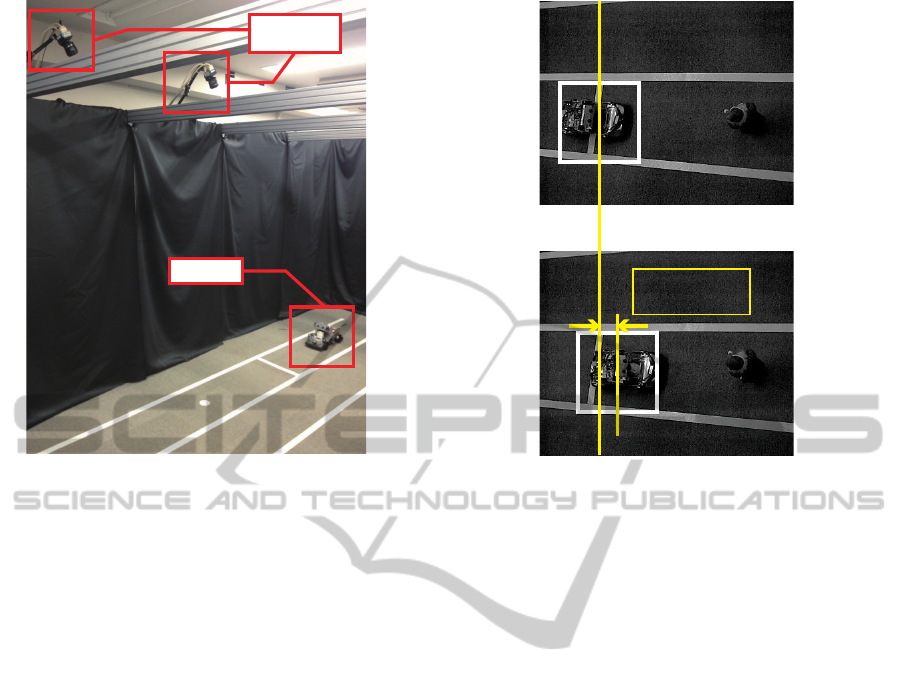

Robocar

High-speed

camera

Figure 6: Experimental setup.

hicles move between frames can be significantly re-

duced (Ishii and Ishikawa, 1999).
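To make the reduction in inter-frame movement concrete, the distance a vehicle travels between two consecutive frames is its speed divided by the frame rate. The short sketch below evaluates this for the two frame rates considered in this paper; the speed of 100 km/h is an illustrative assumption and does not come from the experiments.

```python
def displacement_per_frame_m(speed_kmh: float, frame_rate_fps: float) -> float:
    """Distance in meters that a vehicle moves between consecutive frames."""
    speed_m_per_s = speed_kmh / 3.6  # 1 km/h = 1/3.6 m/s
    return speed_m_per_s / frame_rate_fps


if __name__ == "__main__":
    speed_kmh = 100.0  # illustrative highway speed (assumption)
    for fps in (30.0, 600.0):
        d = displacement_per_frame_m(speed_kmh, fps)
        print(f"{fps:.0f} fps: {d:.3f} m per frame")
```

At this assumed speed the vehicle moves roughly 0.93 m per frame at 30 fps but under 0.05 m per frame at 600 fps, a factor of 20.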

Obstacle maps generated by the networked high-

speed vision system are distributed to the correspond-

ing vehicles through a wireless network. Each ve-

hicle selects collision avoidance rules from a set of

pre-programmed rules, based on the received obsta-

cle map. When an obstacle suddenly appears in front

of a vehicle, it is commonly known that collision

avoidance by steering is more effective than a sudden

stop (Keller et al., 2011).

In the work described in this paper, we assumed

such a situation and adopted collision avoidance

by steering. More precisely, the vehicle was pro-

grammed to start the avoidance maneuver by steering

in a certain direction when the distance to the obstacle

is less than a pre-defined threshold. The vehicle then

drives in a straight line for a fixed time period and fi-

nally steers back in the opposite direction, which will

place it in the neighboring lane.

4 EXPERIMENTAL SETUP

We conducted an experiment to show the effectiveness of the proposed driving safety support system. The system used in this experiment consisted of a 1/10-scale vehicle, two networked high-speed cameras attached to workstations, and a communication station. The system is shown in Figure 6. The vehicle used in this experiment was a robotic car research platform called “RoboCar 1/10”, provided by ZMP (ZMP, 2014). RoboCar has a real-time operating system (a customized version of Linux), controlling its steering and communication modules. It communicates with the communication station via a Wi-Fi connection (IEEE 802.11g).

Figure 7: The upper figure shows an image captured by one of the high-speed environmental cameras when the system recognized that the distance to the obstacle fell below the threshold. The lower figure shows the same situation for a standard environmental camera. (Annotation in the figure: 55 [mm].)

The obstacle map contained two 32-bit integer

variables per obstacle, where one represented the dis-

tance from the vehicle to the obstacle, and the other

represented the number of the lane that the obstacle

resided in. We limited one obstacle map to contain-

ing at most 50 obstacles, meaning that the size of one

packet (including header and payload) was 512 bytes,

since the payload was 4*2*50 bytes = 400 bytes.
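The payload layout described above (two 32-bit integers per obstacle, at most 50 obstacles, 400 bytes in total) can be sketched with Python's `struct` module. The byte order, the distance unit, the fixed-size padding, and the sentinel used for unused slots are assumptions of this example, and the packet header is omitted because its format is not specified here.

```python
import struct
from typing import List, Tuple

MAX_OBSTACLES = 50
# Two signed 32-bit integers per obstacle: distance and lane number.
# Network byte order and millimeter units are assumptions of this sketch.
ENTRY = struct.Struct("!ii")
EMPTY = (-1, -1)  # hypothetical sentinel marking an unused slot


def pack_obstacle_map(obstacles: List[Tuple[int, int]]) -> bytes:
    """Pack (distance, lane) pairs into a fixed 400-byte payload."""
    if len(obstacles) > MAX_OBSTACLES:
        raise ValueError("an obstacle map holds at most 50 obstacles")
    padded = list(obstacles) + [EMPTY] * (MAX_OBSTACLES - len(obstacles))
    return b"".join(ENTRY.pack(distance, lane) for distance, lane in padded)


def unpack_obstacle_map(payload: bytes) -> List[Tuple[int, int]]:
    """Recover the (distance, lane) pairs, skipping unused slots."""
    entries = [ENTRY.unpack_from(payload, i * ENTRY.size)
               for i in range(MAX_OBSTACLES)]
    return [entry for entry in entries if entry != EMPTY]
```

For example, `pack_obstacle_map([(800, 1)])` yields a 400-byte payload, matching the 4*2*50 bytes computed in the text.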

We conducted a preliminary experiment to mea-

sure the round-trip time of a 512-byte packet between

RoboCar and the communication station. The mea-

sured times ranged from 5 ms to 30 ms, indicating a

one-way time of 2.5 ms to 15 ms. This result is in

agreement with the assumptions made in Section 2.

5 EXPERIMENTS

We conducted an experiment to validate the effective-

ness of the proposed system. The setup is illustrated

in Figure 6. In this experiment, we assumed a situ-

ation where a person suddenly jumps out in front of

the vehicle from the roadside onto the highway. Since

ICINCO2014-11thInternationalConferenceonInformaticsinControl,AutomationandRobotics

542

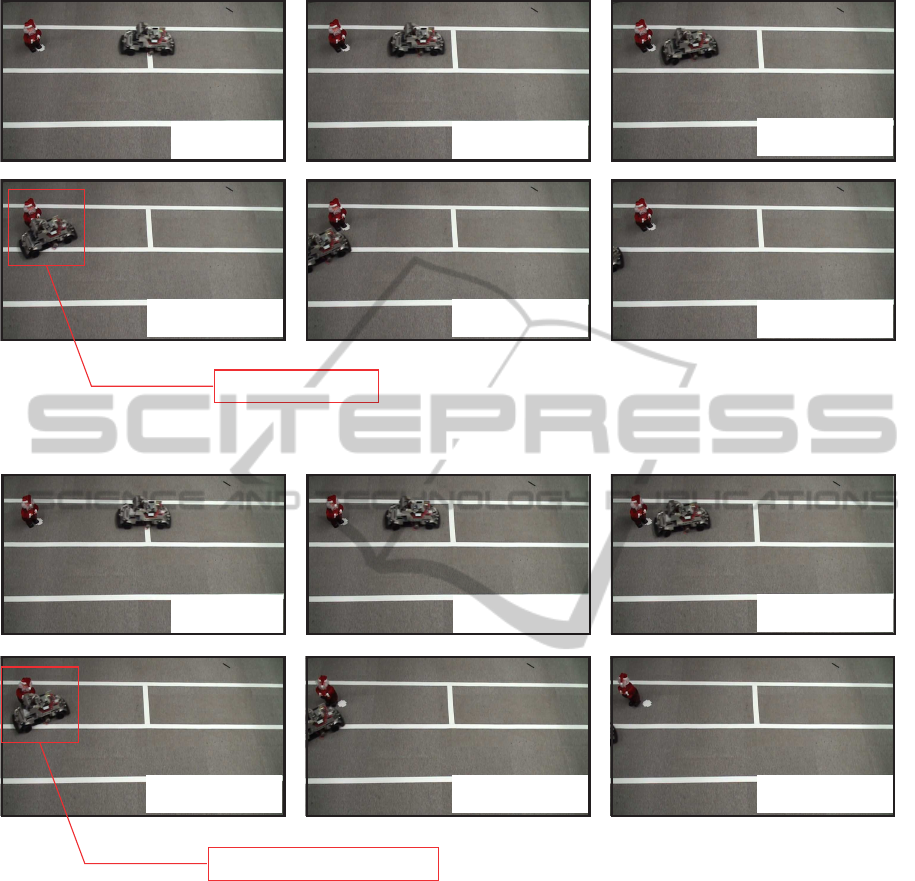

T = 0.4 [s]

T = 0.5 [s]

T = 0.1 [s]

T = 0.2 [s]

T = 0 [s]

T = 0.3 [s]

Avoid obstacleAvoid obstacle

Figure 8: Experimental results for the high-speed environmental vision system.

T = 0.4 [s] T = 0.5 [s]

T = 0.1 [s]

T = 0.2 [s]

T = 0 [s]

T = 0.3 [s]

Collide with obstacleCollide with obstacle

Figure 9: Experimental results for the standard environmental vision system.

the speed of a person on the highway can be consid-

ered to be considerably lower than the vehicles on that

highway, we modeled the person as a static obstacle.

In the experiment, we assumed a situation in which

the person (obstacle) that suddenly appeared was de-

tected by the system around 800 mm in front of the

vehicle.

To illustrate the effectiveness of the proposed sys-

tem, we conducted two experiments. In the first ex-

periment, we used standard environmental cameras

with standard frame rates of 30 fps. In the second

experiment, we introduced a high-speed environmen-

tal vision system with a frame rate of around 600 fps.

The results of the experiments are shown in Figures 8

and 9. The vehicle received the obstacle map trans-

mitted by the networked vision system, after which

it took action based on a preprogrammed collision

avoidance rule. The collision avoidance rule adopted

in this experiment was as follows:

1. Start steering at an angle of 30 degrees when the distance from the vehicle to the obstacle falls below the threshold (800 mm) and keep that heading for time T_1.

2. Keep going straight for time T_2.

3. Steer at an angle of -30 degrees for time T_1 in order to come back to the neighboring driving lane.
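The three-step rule above can be written as a trigger condition plus a piecewise steering schedule. The sketch below is only an illustration of that rule: the function names are hypothetical, and the durations T_1 and T_2 are left as parameters because their values are not given in the text.

```python
THRESHOLD_MM = 800.0  # trigger distance from the rule above
STEER_DEG = 30.0      # steering angle from the rule above


def should_start_avoidance(distance_mm: float) -> bool:
    """True once the distance to the obstacle falls below the threshold."""
    return distance_mm < THRESHOLD_MM


def steering_angle_deg(t: float, t1: float, t2: float) -> float:
    """Steering angle t seconds after the avoidance maneuver starts.

    t1 and t2 correspond to T_1 and T_2; their values are not stated in the
    text, so the caller must supply them.
    """
    if t < 0.0:
        return 0.0          # maneuver has not started yet
    if t < t1:
        return STEER_DEG    # step 1: steer away from the obstacle
    if t < t1 + t2:
        return 0.0          # step 2: go straight
    if t < 2.0 * t1 + t2:
        return -STEER_DEG   # step 3: steer back to align with the lane
    return 0.0              # maneuver finished
```

Using the same duration T_1 for the first and third steps makes the two turns cancel, so the vehicle ends up parallel to its original heading in the neighboring lane.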


Considering the time required for an evasive maneuver, it is even more important to detect the obstacle at an early stage. The standard environmental vision system recognized the vehicle only after it had overshot the threshold by 55 mm. In Figure 7, this threshold is indicated with a white line drawn 800 mm from the obstacle. In contrast, the proposed system could recognize the vehicle just after it reached the line.

When a collision occurred in the case of the stan-

dard environmental vision system, the vehicle had a

velocity of 7.2 kilometers per hour (km/h) (=1.8 mil-

limeters per millisecond (mm/ms)). Therefore, the

delay for recognition in the standard vision system

can be estimated to be 55/1.8 ≃ 30.5 ms. For this

reason, collision avoidance fails with the standard vi-

sion system, whereas it succeeds with the proposed

system.
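The delay estimate above is the overshoot distance divided by the vehicle speed. The sketch below reproduces that calculation and also shows the unit conversion from km/h to mm/ms; the function names are hypothetical. With the 1.8 mm/ms used in the text the result is about 30.6 ms. Converting 7.2 km/h directly gives 2.0 mm/ms, which would yield 27.5 ms. Either value is below the 33 ms frame interval of a 30 fps camera, so the conclusion is unchanged.

```python
def kmh_to_mm_per_ms(speed_kmh: float) -> float:
    """Convert km/h to mm/ms (1 km/h = 1e6 mm per 3.6e6 ms)."""
    return speed_kmh / 3.6


def recognition_delay_ms(overshoot_mm: float, speed_mm_per_ms: float) -> float:
    """Time the vehicle needs to travel the overshoot distance."""
    return overshoot_mm / speed_mm_per_ms


if __name__ == "__main__":
    overshoot_mm = 55.0
    # Speed as stated in the text.
    print(f"{recognition_delay_ms(overshoot_mm, 1.8):.1f} ms")
    # Speed obtained by converting 7.2 km/h.
    print(f"{recognition_delay_ms(overshoot_mm, kmh_to_mm_per_ms(7.2)):.1f} ms")
```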

In this experiment, the vehicle started the evasive

maneuver when the distance from the vehicle to the

obstacle fell below 800 mm. This distance is equiva-

lent to 8 m at actual scale. It is known that vehicles

must maintain at least 58 m intervals between them

for safe driving, which is around 7 times longer than

8 m.

6 CONCLUSION AND FUTURE WORK

In this research, we aim to construct a driving safety

support system based on networked high-speed vision

cameras. We constructed a system employing two

high-speed environmental cameras attached to work-

stations, which were connected via a network and

synchronized to sub-millisecond order, and a com-

munication station. We also conducted comparative

experiments of collision avoidance. One experiment

using standard cameras (30 fps) failed to avoid a col-

lision with an obstacle. In contrast, the other experi-

ment using high-speed cameras (600 fps) succeeded

in avoiding a collision with the obstacle. Through

fundamental experiments, we demonstrated the effec-

tiveness of the proposed system when applied to a

driving safety support system and showed that such

a system can overcome the low responsiveness that is

common in standard environmental vision systems.

To further reinforce the effectiveness of the pro-

posed system, we are planning to carry out additional

experiments to compare it with an onboard vision sys-

tem for collision avoidance. We also aim to introduce

the proposed system in other situations. For instance,

it should be possible to apply it to intersections in ur-

ban areas. Although vehicles generally drive at lower

speeds in urban areas, the speed relative to vehicles

driving in the opposite direction is twice as fast as

that of the vehicle in question. We expect that our

proposed system will be effective even in such situa-

tions where the driving speed is not high.

ACKNOWLEDGEMENTS

This work was supported in part by the Strategic In-

formation and Communications R&D Promotion Pro-

gramme (SCOPE) 121803013.

REFERENCES

Cherng, S., Fang, C. Y., Chen, C. P., and Chen, S. W.

(2009). Critical motion detection of nearby moving

vehicles in a vision-based driver-assistance system. In

IEEE Transactions on Intelligent Transportation Sys-

tems, volume 10, pages 70–82.

Ishii, I. and Ishikawa, M. (1999). Self windowing for high-

speed vision. In IEEE International Conference on

Robotics and Automation, pages 1916–1921.

Keller, C. G., Dang, T., Fritz, H., Joos, A., Rabe, C., and

Gavrila, D. M. (2011). Active pedestrian safety by au-

tomatic braking and evasive steering. In IEEE Trans-

actions on Intelligent Transportation Systems, pages

1292–1304.

Kim, J. and Kim, J. (2009). Intersection collision avoid-

ance using wireless sensor network. In IEEE In-

ternational Conference on Vehicular Electronics and

Safety, pages 68–73.

Noda, A., Hirano, M., Yamakawa, Y., and Ishikawa, M.

(2014). A networked high-speed vision system for

vehicle tracking. In Sensors Applications Symposium,

pages 343–348.

Noda, A., Yamakawa, Y., and Ishikawa, M. (2013). High-

speed object tracking across multiple networked cam-

eras. In IEEE/SICE International Symposium on Sys-

tem Integration, pages 913–918.

Papadimitratos, P. and Evenssen, K. (2009). Vehicular com-

munication systems: Enabling technologies, applica-

tions, and future outlook on intelligent transportation.

In IEEE Communications Magazine, volume 47, pages

84–95.

Veeraraghavan, H., Masoud, O., and Papanikolopoulos, N.

(2002). Vision-based monitoring of intersections. In

IEEE International Conference on Intelligent Trans-

portation Systems, pages 7–12.

ZMP (2014). 1/10 scale robocar 1/10. http://www.zmp.co.jp/wp/products/robocar-110?lang=en.
