Development of Therapeutic Expression for a Cat Robot in the Treatment of Autism Spectrum Disorders

Bo Hee Lee¹, Ju-young Jang¹, Keum-hi Mun², Ja Young Kwon³ and Jin Soun Jung⁴

¹ Department of Electrical Engineering, Semyung University, Jecheon-si, R.O. Korea

² Department of Industrial Design, Semyung University, Jecheon-si, R.O. Korea

³ Department of Social Welfare, Semyung University, Jecheon-si, R.O. Korea

⁴ Department of Fashion Design, Semyung University, Jecheon-si, R.O. Korea

Keywords: Autism Spectrum Disorder, Social Interactions, Treatment Behaviour, Face Mechanism, Therapeutic Expression, Hand Gesture, Controller and Sensor.

Abstract: The purpose of this research is to develop therapeutic expressions for use in early treatment that improves the social interactions of children with autism spectrum disorders (ASD). To this end, we chose a cat character after surveying requirements and summarized the actions required for treatment as hugging, eye contact, imitation of body movements and reactions to negative behaviours. The robot has a face mechanism that can express emotion and a body mechanism that performs hand gestures. We also designed a system controller and sensor interfaces to control its body and interact with children. The full usage history of the robot is stored in a memory device so that the play patterns of the patient can be analyzed and used to build a treatment program for specialized clinics. In this study, therapeutic expressions for the treatment of ASD are proposed, ported to a cat robot, and verified through experiments with the real robot. This study is a preliminary result that precedes the development of a therapy for ASD children using a complete cat robot with an outer skin and furry coat; it will be followed by research on expressions for a suitable program that can be applied in the real treatment field.

1 INTRODUCTION

ASD is reportedly the fastest-spreading childhood disorder; about one in 150 children is diagnosed with ASD. The main characteristics of children with ASD are social interaction disabilities, communication disabilities, repetitive stereotyped behaviours, and limited attention spans. Existing methods of treatment and education are greatly limited because these children cannot properly express their own emotional states, and consequently a new treatment system needs to be developed. Initiatives that use robots to treat ASD children have consistently reported positive results in improved interaction, eye contact, and concentration (Cho et al., 2009; Feil-Seifer and Mataric, 2008; Lee et al., 2010; Robins et al., 2005; Scassellati et al., 2012). ASD children have actively reacted to robots, initiated interactions, and increasingly explored stimuli that they liked (Kim et al., 2011). In a previous study, we investigated the effect of robot-based treatment and how to design such a robot (Kwon et al., 2014).

A commercially available robot can also be used to treat ASD by creating custom scenarios and revising or training the robot program (Gillesen et al., 2011), and the results showed that robots have the potential to be used as a treatment and educational medium for ASD children. In this study we conducted focus group interviews (FGIs) with professional therapists and parents of ASD children to identify the motions and abilities that robots need in order to be used in treating ASD children; the backgrounds of the participants are given in Tables 1 and 2. According to the analysis of the FGI results, robots need the following motions and abilities. First, the robot requires “individualization”: its abilities need to be developed at various levels depending on the development of the ASD child so that individualized treatment can be conducted. The robot should respond to the child’s actions instead of leading. Second, it should have the “ability to stimulate the senses”, that is, the ability to express itself through various senses according to the situation. This is accomplished with visual, tactile, olfactory, and auditory sensors that measure touch, temperature, sound, light, color, smell, etc.

Lee B., Jang J., Mun K., Kwon J. and Jung J. Development of Therapeutic Expression for a Cat Robot in the Treatment of Autism Spectrum Disorders. DOI: 10.5220/0005123106400647. In Proceedings of the 11th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2014), pages 640-647. ISBN: 978-989-758-040-6. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Table 1: Background of FGI participants (therapists).

Table 2: Background of FGI participants (parents).

Third, it should have “interactive motion”. Since eye contact is an important measure of interaction, the robot should be able to move and react positively according to the child’s gaze and eye-contact range during the interaction. It should also be able to respond negatively to behaviors of the child that need correcting, such as aggressive behavior. This also includes the ability to imitate the child’s actions, a hugging motion, a motion requesting the child to play, and an eating motion as if it were a real pet.

Finally, this kind of robot should be able to “manage all of the behavior” or the results of the treatment using its operation history. It should allow family members or therapists to control the robot with a remote control, or to select from a range of programs according to the child’s condition using swappable memory cards. In addition, it should be able to notice changes in the child’s behavior during use of the robot. The therapeutic program should include a user interface for controlling and monitoring the robot. In this paper, we suggest an effective treatment method based on feedback from the field and a robot design suitable for producing such actions.

2 ROBOT DESIGN

2.1 Required Action and Expression

After reviewing the FGI results, we found that the actions of a cat robot for treatment must include hugging, making eye contact, imitating body movements and reacting to negative behaviours, as shown in Figure 1. To perform these actions, the robot must have a proper physical structure: the head should be able to move in all directions, and the legs should have a structure suitable for hugging and negative reactions.

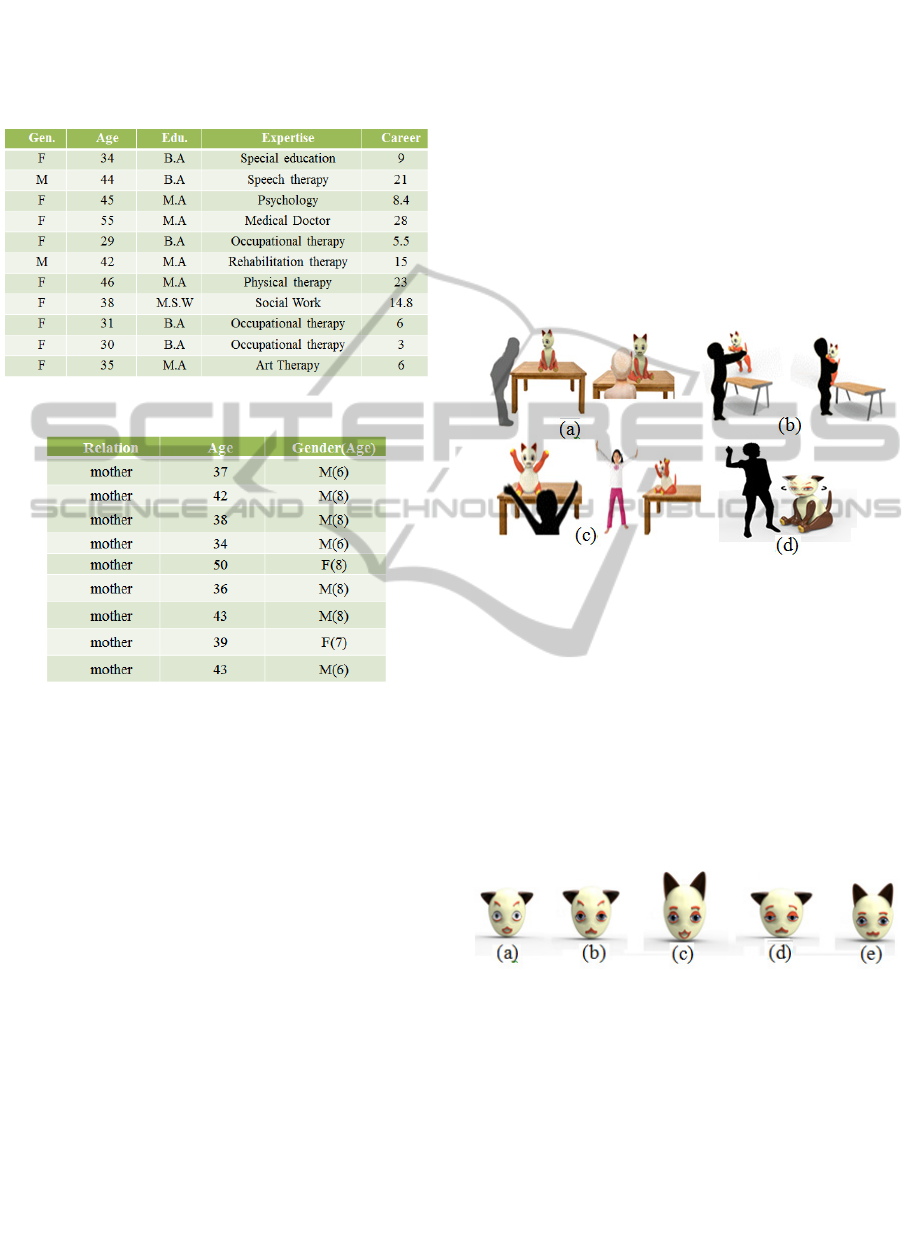

Figure 1: Actions for treatment: (a) eye contact, (b) hugging, (c) imitating body movements, (d) reaction to negative acts.

At the same time, the treatment of ASD children requires actions that express happiness, sadness, anger, and surprise. The robot can make facial expressions that show its emotional state, just like a human. It should be able to show emotion to children by moving its ears, eyebrows, and eyelids, and by changes such as raising and lowering the corners of its lips.

Figure 2: Facial expression: (a) surprise, (b) anger, (c) happiness, (d) sadness, (e) absence of expression.

2.2 Character and Mechanical Design

The cat robot is designed to be covered by a furry coat with the same fur length as a real cat. The ratio between the face and the body is set to 1:2 to convey the cuteness of a kitten. To show changes in facial expression more clearly, the eyebrows are emphasized, the eyes are larger than normal size according to the result of a preference survey, and the tails (outer corners) of the eyes are centered. The cat robot is 50 cm tall so that it sits at the children’s eye level, as shown in Figure 3.

Figure 3: Internal mechanism.

The shapes of the face, ears and body are designed to look more like a cat. The eyes, mouth and ears are designed with more emphasis on shape than in a real cat so that facial expressions appear more clearly. A supporting structure is needed inside for the electronic circuits. Aluminum is used for the body and skeleton structures to keep them strong and lightweight. Each joint that moves to a set angle is equipped with a servomotor.

Figure 4: Outward appearance design.

As a whole, the robot has 21 joints, including those for the legs and tail. The outward appearance is designed to protect the inner parts and structure and to fit tightly to the inner structure. So that the parts can be assembled using the holes prepared in the inner structure, the outer shell was designed as two separate parts.

2.3 Joint and Electrical System Design

A cat robot for ASD treatment must take into account its reaction to the user's abnormal behavior. Its structure must also withstand strong external forces, so a strong material such as aluminum alloy is used. In addition, to reduce the weight, the skeleton should contain as many empty spaces as possible. To support emotional expression in the face, intelligent controller units related to movement should also be considered in the body and tail portions. The robot should resemble a real cat and be able to make similar movements. The head unit is very important for representing emotion. It has eyes with a forehead, eyebrows, a mouth, and ears like a cat, which are operated by 10 motors. Furthermore, the parts of the face are arranged separately and controlled by individual motors, so they can move independently and express the required emotions. In the upper leg, three joints are placed for shoulder and elbow motion that can be used to make human-friendly gestures. In the right and left hip portions, high-torque legs are designed to stand down or stretch. One joint is placed in the tail to express pleasure by wagging it. Between the head and the body, the neck implements two degrees of freedom for shaking and nodding, so the cat robot has a total of 21 degrees of freedom.

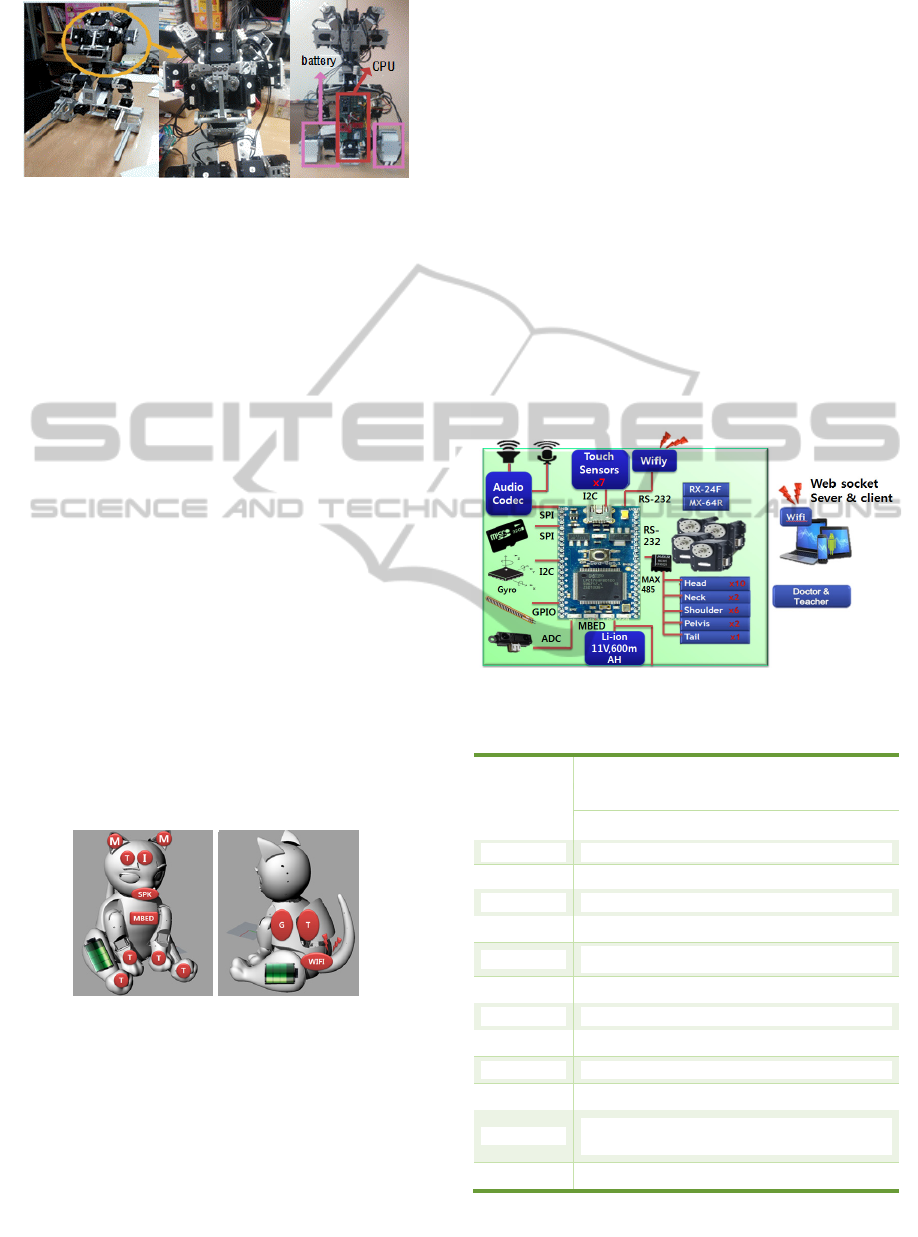

Figure 5: Motor movements in body and face.

In general, the cat robot has an initial posture of sitting at a table, and the walking function of a real cat is excluded. It only bends its main body using the hip joints to move the body up and down. The hip motors in particular are powerful enough to drive the whole body and withstand variations in external load. In addition, various types of sensors were installed on or under the skin to support robot-human interaction such as intelligent behavior and sympathetic action.


Figure 6: Cat robot skeleton and motor installation.

2.4 Controller Design

The operation of each part of the body and the interactions between the robot and ASD children are detected by a wide range of internal and external sensors. Several capacitive contact sensors were installed on the head, back, belly, and upper legs to detect the user's touch. Touch information from the sensors is stored on a micro SD card plugged into the main controller and made available for analysis and for building treatment programs in the future. In addition, a sound-generating device is designed to produce cat sounds according to various situations. The cat sound is reproduced through an audio codec and a speaker. Sound effects are essential for interaction, especially in ASD treatment. An infrared sensor detects motion when somebody moves within a certain distance. It can measure the distance between the user and the robot as well as the direction of movement, so it can be used to increase intimacy with the robot. In the waist, a 3-axis accelerometer was installed to measure the robot's orientation and check whether the robot is tilted or lifted, or whether the user is trying to hug it.
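The touch-logging step described in this paragraph can be sketched as follows. This is a minimal illustration, assuming the touch controller reports a 12-bit status word with one bit per electrode (as the MPR121 listed in Table 3 does); the electrode-to-body-part mapping, the function names and the CSV layout are hypothetical and are not taken from the robot's firmware.

```python
# Hypothetical mapping from electrode index to body location (an assumption,
# chosen only to mirror the sensor sites named in the text).
ELECTRODE_LOCATIONS = {
    0: "head",
    1: "back",
    2: "belly",
    3: "left_upper_leg",
    4: "right_upper_leg",
}


def decode_touch(status: int) -> list[str]:
    """Return the body locations whose bit is set in a 12-bit touch status word."""
    return [
        name
        for bit, name in sorted(ELECTRODE_LOCATIONS.items())
        if status & (1 << bit)
    ]


def format_log_line(timestamp_s: float, status: int) -> str:
    """Format one CSV record: time in seconds, then touched locations joined by '|'."""
    return f"{timestamp_s:.2f},{'|'.join(decode_touch(status))}"


if __name__ == "__main__":
    # Bits 0 and 2 set: head and belly touched at t = 12.5 s.
    print(format_log_line(12.5, 0b00101))
```

Records of this shape could be appended to a file on the micro SD card and later aggregated per location to study play patterns.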

Figure 7: Sensor deployment (T: touch, G: gyro, I: infrared).

All of the proposed motions are determined by commands issued by an operator on the web through wireless Internet (Wi-Fi), and the robot's behaviors and actions are set by downloading commands from the web. In this way, many robots working in different fields can be operated and data on their therapeutic effect can easily be collected, and the teacher or operator can control the robot remotely as a medium of treatment. The motors that drive the joints are of two types, ROBOTIS MX-64 (6.0Nm) or AX-32 (2.6Nm), which are controlled over RS485 communication, using only two lines to control multiple motors (Robotis, 2014). The main MCU was designed to make all of the control easy to implement. It is based on the Mbed, a 32-bit ARM® Cortex™-M3 (LPC1768) board interfaced with various sensors and drivers to control the actuators (MBED, 2014). Since all 21 motors can be driven at the same time, the total power consumption in this critical situation must be taken into account. 3000mAh, 3.7V lithium-ion cells are combined to produce 6000mAh at 11.2V. The connections among the actuators, the controller and the sensors are shown in the block diagram in Figure 8, and the specification is summarized in Table 3.
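To illustrate how several motors can share one two-wire bus, the sketch below builds a position command frame. It assumes the motors speak Dynamixel Protocol 1.0 (header bytes 0xFF 0xFF, ID, length, instruction, parameters, and a checksum equal to the inverted low byte of the sum) and that Goal Position is the two-byte register at address 30, as documented in the ROBOTIS e-manual for this servo family; the paper does not state which protocol version was used. The 0 to 4095 count range over 360 degrees is the MX-series resolution and is likewise an assumption here, as are the function names.

```python
GOAL_POSITION_ADDR = 30  # Goal Position register (2 bytes, little-endian); assumed Protocol 1.0 table
INST_WRITE = 0x03        # WRITE instruction


def checksum(body: bytes) -> int:
    """Protocol 1.0 checksum: inverted low byte of the sum of ID..last parameter."""
    return (~sum(body)) & 0xFF


def write_goal_position_packet(servo_id: int, position: int) -> bytes:
    """Build a WRITE packet that sets the goal position of one servo."""
    params = bytes([GOAL_POSITION_ADDR, position & 0xFF, (position >> 8) & 0xFF])
    body = bytes([servo_id, len(params) + 2, INST_WRITE]) + params
    return b"\xff\xff" + body + bytes([checksum(body)])


def degrees_to_mx_position(angle_deg: float) -> int:
    """Convert an angle to MX-series counts (assumed 4096 counts per 360 degrees)."""
    return max(0, min(4095, round(angle_deg * 4096 / 360.0)))


if __name__ == "__main__":
    packet = write_goal_position_packet(servo_id=1, position=512)
    print(packet.hex(" "))
```

Because every frame carries the servo ID, all motors can listen on the same pair of lines and only the addressed one acts on the command.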

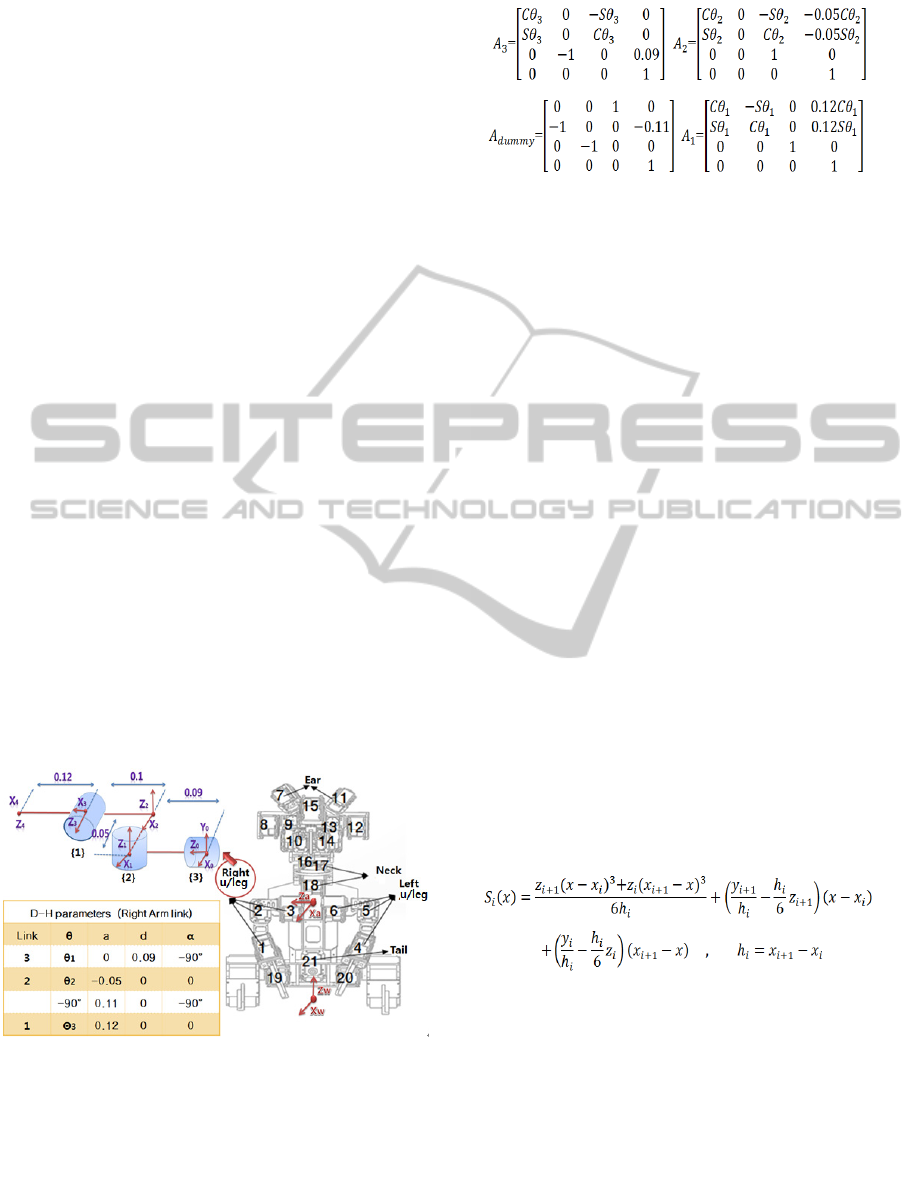

Figure 8: Block diagram of the controller.

Table 3: Robot specification.

Actuator: 21 motors in total; Dynamixel RX-24F (2.6Nm): 14; Dynamixel MX-64 (5.3Nm): 7

Weight: 5 kg (including battery)

Height: 50 cm

Inner skin: plastic

Outer skin: artificial fiber (antibiotic, flavor)

CPU: Mbed (based on NXP LPC1768, Cortex-M3)

Sensors: Touch, MPR121 based (I2C); Sound, VS1053 based (SPI); Balance, L3GD20 based (I2C); Speaker, AS04008CO-WR-R, 8Ω

Battery: LG Chemistry 18650, 11V, 6000mAh (3.7V 3000mAh Li-ion, 6 cells)

Network: WiFly (RN-171)
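The battery figures can be checked with a short calculation. The sketch below assumes the six cells are wired as three in series and two in parallel; that arrangement is inferred from the stated cell and pack ratings and is not given in the source. With 3.7 V, 3000 mAh cells it yields a nominal 11.1 V and 6000 mAh, close to the pack values quoted in the text and in Table 3.

```python
def pack_rating(cell_voltage_v: float, cell_capacity_mah: float,
                series: int, parallel: int) -> tuple[float, float]:
    """Nominal pack voltage (V) and capacity (mAh) for a series/parallel cell layout."""
    return cell_voltage_v * series, cell_capacity_mah * parallel


def pack_energy_wh(voltage_v: float, capacity_mah: float) -> float:
    """Nominal stored energy in watt-hours."""
    return voltage_v * capacity_mah / 1000.0


if __name__ == "__main__":
    # Assumed 3S2P layout of six 3.7 V, 3000 mAh cells.
    voltage, capacity = pack_rating(3.7, 3000, series=3, parallel=2)
    print(f"{voltage:.1f} V, {capacity:.0f} mAh, {pack_energy_wh(voltage, capacity):.1f} Wh")
```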


3 ANALYSIS OF ROBOT

To move the robot joints properly, the motor control system is designed on the basis of an analysis of the operation. At the same time, since various sensors are attached to the robot, an intelligent system should be implemented to identify each operation. The picture below shows the overall appearance of the cat robot without the skin, which will be implemented in the near future. To produce leg motion, the motion of each joint must be planned in advance. Based on this concept, the kinematic analysis of each link and joint is performed as follows. First, the kinematic parameters of the 21 motors are defined after setting the joint coordinate systems using the Denavit-Hartenberg (D-H) representation. Ten of the 21 joints are placed in the head for emotional expression and require only predefined actions, but the motions of the others, in the body and legs, must be designed on the basis of this analysis. The joint analysis starts by setting coordinate systems at the upper legs and the neck separately, so the robot is divided into three individual coordinate systems. The origin of the overall world coordinate system is placed at the center of the sitting robot, the second is located midway between the two upper legs, and the last is at the center point of the neck. In the analysis, we consider only the upper-leg coordinate system because it is used to make many gestures, whereas the others need only predefined functions. The link allocation and kinematic analysis of the leg can be expressed with the coordinate system shown in Figure 9.

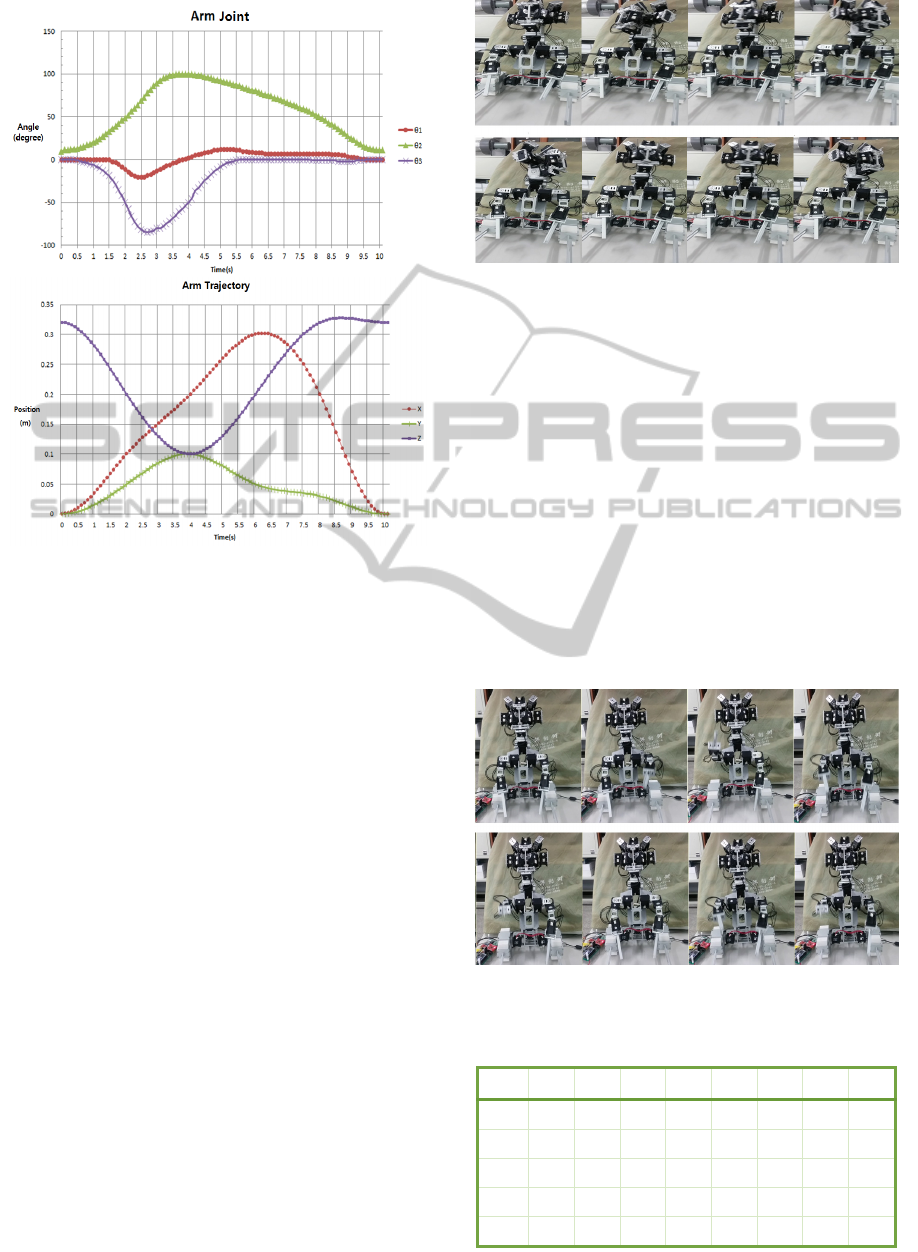

Figure 9: Coordinate system.

Forward kinematics is calculated as follows, based on the upper legs and shoulders with D-H parameters:

(1)
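As a generic illustration of forward kinematics with D-H parameters, the sketch below chains the standard D-H homogeneous transform over a three-joint limb. The link table is hypothetical (the robot's actual D-H parameters are not reproduced in this text), and the function names are chosen only for this example.

```python
import math


def dh_transform(theta, d, a, alpha):
    """Standard D-H homogeneous transform from frame i-1 to frame i."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(joint_angles, links):
    """Chain the per-joint transforms; links holds (d, a, alpha) for each joint."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, (d, a, alpha) in zip(joint_angles, links):
        T = matmul(T, dh_transform(theta, d, a, alpha))
    return T


# Hypothetical link table (metres, radians) for a 3-joint limb; NOT the robot's values.
EXAMPLE_LINKS = [(0.0, 0.0, math.pi / 2), (0.0, 0.10, 0.0), (0.0, 0.08, 0.0)]


def tip_position(joint_angles, links=EXAMPLE_LINKS):
    """Return the (x, y, z) position of the limb tip in the base frame."""
    T = forward_kinematics(joint_angles, links)
    return T[0][3], T[1][3], T[2][3]


if __name__ == "__main__":
    print(tip_position([0.0, math.pi / 2, 0.0]))
```

With all joints at zero the example limb lies along the base x-axis; rotating the second joint by 90 degrees swings both links up along the base z-axis.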

Using this kinematic result, the leg joint angles are derived by inverse kinematics and used to create the joint movements. The Euler angle representation is commonly used in planning the trajectory of an object. To obtain distal positions, the Jacobian for each posture is derived together with the velocities of the hand gesture between the robot and the human. The inverse kinematics procedure was ported to the main controller, an MBED module, to run in real time. When the robot performs a task, it is given several discrete points that it passes through in sequence. The intermediate points between the given discrete points are obtained by interpolation, and the robot can move smoothly to the target points using them. We use cubic-spline interpolation passing through each point, which keeps position, velocity, and acceleration continuous so that the robot can be controlled smoothly. In this analysis, the robot trajectory is derived at positions from the start to 10 seconds during a hand gesture.

To obtain the trajectory points, we applied spline-curve interpolation known as natural cubic spline interpolation. The coefficients of the polynomial are determined as follows (Hazewinkel, 2001).

(2)

Here, the coefficient values z are determined by the boundary conditions. Figure 10 shows the result of trajectory planning under the conditions of Table 4. The trajectory is smooth over the entire working time. After the planned joint movements for each gesture have been checked, the therapeutic operation can be carried out on the real robot.
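A natural cubic spline through a handful of via points can be sketched as below. This is a textbook formulation in which z holds the second derivatives at the knots and the natural boundary condition sets z to zero at both ends; it is offered as an illustration and is not claimed to match the paper's Equation (2) term by term. The knot times (evenly spaced over 10 seconds) are an assumption, and the sample angles are the motor 8 column of Table 4.

```python
import bisect


def natural_spline_second_derivs(t, y):
    """Solve for knot second derivatives z with natural ends (z[0] = z[-1] = 0)."""
    n = len(t) - 1
    h = [t[i + 1] - t[i] for i in range(n)]
    diag = [2.0 * (h[i - 1] + h[i]) for i in range(1, n)]
    rhs = [6.0 * ((y[i + 1] - y[i]) / h[i] - (y[i] - y[i - 1]) / h[i - 1])
           for i in range(1, n)]
    # Forward elimination of the tridiagonal system (Thomas algorithm).
    for k in range(1, n - 1):
        w = h[k] / diag[k - 1]
        diag[k] -= w * h[k]
        rhs[k] -= w * rhs[k - 1]
    # Back substitution; z[n] stays 0, so the last row needs no special case.
    z = [0.0] * (n + 1)
    for k in range(n - 2, -1, -1):
        z[k + 1] = (rhs[k] - h[k + 1] * z[k + 2]) / diag[k]
    return z


def spline_eval(t, y, z, x):
    """Evaluate the spline at x (clamped to the first or last segment)."""
    i = max(0, min(len(t) - 2, bisect.bisect_right(t, x) - 1))
    h = t[i + 1] - t[i]
    a, b = t[i + 1] - x, x - t[i]
    return ((z[i] * a ** 3 + z[i + 1] * b ** 3) / (6.0 * h)
            + (y[i] / h - z[i] * h / 6.0) * a
            + (y[i + 1] / h - z[i + 1] * h / 6.0) * b)


# Assumed knot times in seconds; angles are motor 8 from Table 4 (start, via1..via3, end).
TIMES = [0.0, 2.5, 5.0, 7.5, 10.0]
MOTOR8 = [145.6, 125.4, 127.1, 127.7, 145.3]

if __name__ == "__main__":
    z = natural_spline_second_derivs(TIMES, MOTOR8)
    for step in range(0, 11):
        print(step, round(spline_eval(TIMES, MOTOR8, z, float(step)), 2))
```

Sampling the spline at the servo update rate gives intermediate joint targets whose position, velocity and acceleration are continuous across the via points.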


Figure 10: Trajectory planning.

4 EXPERIMENT

The required operations of the robot, based on the analysis above, are implemented to show usability. To do this, we read the current angle of each motor, stored the values on the micro SD card, and generated the required operation from the stored data. To move smoothly, the trajectory of each action was generated using via points recorded during offline operation. Some of the operations needed for treatment were performed.

4.1 Expression of Negative Feedback

First, the expression of negative behavior is tested as a way to give negative feedback to ASD children. The behavior is predefined with the required actions. Depending on the sensor input, the head turns from side to side in the middle of the action; this expression of unpleasant emotion allows patients to be guided more reliably. The history of negative behavior is recorded and reported to the doctor as an aid to treatment. As shown in Figure 11, the robot shakes its head from side to side with a hand gesture, and a negative sound is generated to accompany the action.

Figure 11: Behavior of negative feedback.

4.2 Imitation and Gesture

Some typical behaviors of a cat are implemented using the body and hands together according to the user's action. Hand or back movement serves as a check of whether the action has been completed. Lifting up the hands makes it easier for the patient to handle or hug the robot. At the same time, the robot makes a crying sound in the middle of the action and sometimes changes the shape of the eyebrows or the mouth to imitate the actual animal. These actions are not yet complete because the outer skin has not been implemented on the cat robot. Figure 12 shows the imitation gesture using the hands and legs with the joint angles in Table 4.

Figure 12: Imitation behavior.

Table 4: Joint angles of the gesture.

Motor 3 4 6 7 8 12 15 16

start 202.2 141.8 147.3 157.6 145.6 160.8 99.3 157

via1 192.9 150.9 250.6 136.2 125.4 170.8 115.9 192.8

via2 192.9 150.9 250.6 136.2 127.1 175.2 115.9 155.6

via3 192.9 150.9 250.6 136.2 127.7 171.7 115.9 155.6

end 192.9 150.9 250.6 136.2 145.3 155 100.6 155.6


If somebody rubs its belly, the robot responds by alternating its hands to express a good feeling and also performs hand gestures with facial expressions. At the same time, it opens its mouth and makes a typical cat sound. During this operation, the shoulder joints 3 and 6 were lifted up and down most of the time and the elbow joints 6 and 7 worked intermittently.

4.3 Facial Expression

Several expressions can be made with the face, such as folding the ears, lifting the eyebrows and changing the lip shape. In this experiment, movements of the upper and lower lips, eyebrows, and ears were designed and implemented as sequential actions with various emotional combinations. Furthermore, once the entire face is covered with fur and skin, more precise facial expressions could be produced.

Figure 13: Eyebrow movement.

A structure is designed so that the eyelids can lower, raise, and work separately for winking. The eyebrows are an important part of the cat robot's emotional expression and are given 2 degrees of freedom so that they can express different looks. The eye behavior is shown in Figure 14.

Figure 14: Eye movement.

The frame structure and the outer covering of the eye, together with their connection structure, are carefully designed so that the eyebrows can move in various ways according to emotion. From the right, the figure shows eye expressions such as closed, fully open and half-open eyes. The lip movements are produced by the middle structure of the face and the sides of the lips. The mouth can be closed or open, and can also form shapes that express anger, sadness, surprise, etc.

Figure 15: Lip movement.

The ears have only two functions, raising and folding; no other action is deemed necessary. The two ears are designed to move independently. Emotions such as surprise or contentment can be expressed with the ears.

4.4 Eye Contact

Another method of expressing emotion is eye contact, which can be useful for expanding communication with the user.

Figure 16: Eye contact.

To perform this operation, the robot first detects a human body with a position and direction sensor on the body frame. In this experiment, the user moves from left to right and the robot's head moves accordingly, following the user.
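The head-following behavior can be sketched as a rate-limited pan update. The example assumes the sensor yields the user's bearing in degrees relative to the robot's front; the pan limit, the step size and the function name are hypothetical values chosen for illustration and are not taken from the robot.

```python
def next_pan_angle(current_deg: float, target_bearing_deg: float,
                   max_step_deg: float = 5.0, limit_deg: float = 60.0) -> float:
    """Move the neck pan joint one bounded step toward the user's bearing."""
    # Keep the target inside the (assumed) mechanical range of the neck.
    target = max(-limit_deg, min(limit_deg, target_bearing_deg))
    # Limit the change per update so the head turns smoothly.
    error = target - current_deg
    step = max(-max_step_deg, min(max_step_deg, error))
    return current_deg + step


if __name__ == "__main__":
    pan = -40.0
    # User walks from left (-40 degrees) to right (+40 degrees) in 10-degree jumps.
    for bearing in range(-40, 50, 10):
        pan = next_pan_angle(pan, float(bearing))
        print(bearing, pan)
```

Calling the update at a fixed rate makes the head lag slightly behind a fast-moving user instead of snapping to each new reading.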

4.5 Hug

Hugs are the most intense action between the robot and ASD children as a way of self-expression. Because of the complexity of the operation, the robot responds step by step with partial reactions, each chosen from what can suitably be carried out. First, when the intention to embrace is detected, the robot stretches out its legs. After that, it adjusts the shape of its upper legs to the body shape of the patient. The upper left of Figure 17 shows the user approaching the robot; the on-board sensor then detects the intention when the user touches the robot. Finally, the robot controls its legs and upper legs to fit the user's body. At present, the hug is unnatural because the robot has no outer skin or furry coat. In the near future, a more comfortable hugging method will be developed with the support of the outer skin.
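The step-by-step hug reaction can be sketched as a small state machine. The state names, the input flags and the release transition back to idle are assumptions made for this illustration; the text only specifies the order of detecting the intention, stretching the legs and fitting the upper legs to the user.

```python
from enum import Enum, auto


class HugState(Enum):
    IDLE = auto()            # waiting for the user
    STRETCH_LEGS = auto()    # intention detected, legs stretching out
    FIT_UPPER_LEGS = auto()  # shaping the upper legs around the user
    HOLD = auto()            # hug in progress


def next_state(state: HugState, user_near: bool, touched: bool,
               motion_done: bool) -> HugState:
    """Advance the hug sequence by one step based on sensor flags."""
    if state is HugState.IDLE:
        # Hug intention: the user has approached and is touching the robot.
        return HugState.STRETCH_LEGS if (user_near and touched) else state
    if state is HugState.STRETCH_LEGS:
        return HugState.FIT_UPPER_LEGS if motion_done else state
    if state is HugState.FIT_UPPER_LEGS:
        return HugState.HOLD if motion_done else state
    # HOLD: return to idle once the user lets go (assumed release condition).
    return HugState.IDLE if not touched else state


if __name__ == "__main__":
    s = HugState.IDLE
    for flags in [(True, False, False), (True, True, False),
                  (True, True, True), (True, True, True), (False, False, False)]:
        s = next_state(s, *flags)
        print(s.name)
```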

Figure 17: Hugging.

5 CONCLUSIONS

The design of a robot with a cat character is proposed in order to produce positive treatment effects for ASD children. Operations involving various facial expressions and body gestures, with appropriate interaction between robot and patient, are proposed and implemented together with an analysis of the engineering issues. Suitable motors and an intelligent sensor system that can measure and control the robot are also designed. Basic emotional expressions using facial movements are performed to express anger, sadness, and surprise for treatment. Various types of treatment actions using the body and legs, such as hugging, eye contact, and other behaviors, are also proposed. As the results show, the cat robot can express therapeutic actions with proper interaction. In the future, more realistic problems in controlling the robot will be studied with the outer skin and an appropriate artificial furry coat. In addition, a realistic therapy program will be designed after feedback is obtained from real treatment sites. Studies of this kind using the face and body parts of an animal robot are expected to diversify robot usage in the future.

ACKNOWLEDGEMENTS

This work was supported by the National Research

Foundation of Korea Grant funded by the Korean

Government (NRF-2013R1A2A2A04014808).

REFERENCES

Cho, K., Kwon, J., Shin, D., 2009. Trends of cognitive robot based intervention for autism spectrum disorder. Journal of Korean Association for person with autism, 9(2), pp. 45-60.

Feil-Seifer, D., Matarić, M.J., 2008. B3IA: a control architecture for autonomous robot-assisted behavior intervention for children with autism spectrum disorders. Proc. 17th IEEE Int. Symp. Robot Hum. Interact. Commun. (RO-MAN 2008), Aug. 1-3, Munich, Ger., pp. 328-33. Piscataway, NJ: IEEE.

Kim, K.H., Lee, H.S., Jang, S.J., Bae, M.J., Ku, H.J., 2011. Exploring the Responses of Children Labeled with Autism Through Interactions with Robotic Toys. Journal of Special Education & Rehabilitation Science, 50(1), pp. 181-209.

Lee, H.S., Baek, S.S., Ku, H.J., Kang, W.S., Kim, Y.D., Hong, J.W., An, J.U., 2010. Experimental Research of Interactions Between Children with Autism and Robots. Journal of Emotional & Behavioral Disorders, 26(2), pp. 141-168.

Robins, B., Dautenhahn, K., Te Boekhorst, R., Billard, A., 2005. Robotic assistants in therapy and education of children with autism: Can a small humanoid robot help encourage social interaction skills? Univers. Access Inf. Soc., 4(2), pp. 105-120.

Scassellati, B., Admoni, H., Mataric, M., 2012. Robots for use in autism research. Annu. Rev. Biomed. Eng., 14, pp. 275-294.

Kwon, J.Y., Mun, K.H., Lee, B.H., Jung, J.S., 2014. Developing an initial model for an eco-friendly cat robot for the use of early treatment of autism spectrum disorder. Proc. 4th Int. Conf. on Pervasive and Embedded Comp. and Commun. (PECCS 2014), Jan. 7-9, Lisbon, Por., pp. 186-191.

Gillesen, J.C.C., Barakova, E.I., Huskens, B.E.B.M., Feijs, L.M.G., 2011. From training to robot behavior: Towards custom scenarios for robotics in training programs for ASD. 2011 IEEE Int. Conf. on Rehabilitation Robotics, July 1, ETH Zurich Science City, Switzerland.

Robotis, 2014. e-manual, http://support.robotis.com/en

MBED, 2014. https://mbed.org/platforms/mbed-LPC1768/

Hazewinkel, M., ed., 2001. "Spline interpolation", Encyclopedia of Mathematics, Springer, ISBN 978-1-55608-010-4.
