An Insect Inspired Object Tracking Mechanism for Autonomous Vehicles

Zahra Bagheri¹,², Benjamin S. Cazzolato¹, Steven D. Wiederman², Steven Grainger¹ and David C. O'Carroll³

¹School of Mechanical Engineering, The University of Adelaide, Adelaide, Australia
²Adelaide Centre for Neuroscience Research, The University of Adelaide, Adelaide, Australia
³Department of Biology, Lund University, Lund, Sweden

1 PROJECT SUMMARY

Target tracking is a complicated task from an engineering perspective, especially where targets are seen against complex natural scenery. Because of the high demand for robust target tracking algorithms, much research has focused on this area. However, most engineering solutions developed for this purpose are either unreliable in real-world conditions or too computationally expensive to be used in many real-time applications. Insects such as the dragonfly solve this task when chasing tiny prey, despite their low-resolution eyes and small brains, suggesting that nature has evolved an efficient solution to the target detection and tracking problem.

This project aims to develop a robust, closed-loop model inspired by the physiology of insect neurons that solves this problem, and to integrate this model into an autonomous robot. The system is being tested in software simulations using MATLAB/Simulink. In the near future it will be integrated with a robotic platform to examine its performance in real-world environments and to demonstrate the usefulness of this approach for applications such as wildlife monitoring.

2 STAGE OF THE RESEARCH

This project started in April 2013 as a PhD project and is at an intermediate stage. The computational model inspired by the physiology of insects has been tested and optimized using MATLAB/Simulink. The simulation results show that the model can robustly detect and pursue targets of varying contrast against complex natural backgrounds. The current stage of the project involves implementing this model on a hardware platform and testing its performance in real-world conditions.

3 OUTLINE OF OBJECTIVES

The aim of this project is to develop a robust, efficient and cost-effective closed-loop algorithm to track and chase targets for autonomous ground robots. This prototype will be designed to track small objects based on recent findings from flying-insect behavior and electrophysiological recordings of their nervous system. The objectives of this project can be categorized into two primary goals:

1- To develop a robust closed-loop algorithm to track and chase targets even against cluttered backgrounds and in the presence of other distracters.

2- To implement the model on a hardware platform to provide a basis for applications such as surveillance and wildlife monitoring.

4 RESEARCH PROBLEM

Detecting and tracking a small moving object against a cluttered background is one of the most challenging tasks for both natural and artificial visual systems. Due to the increasing demand for automation, developing a robust tracking algorithm has been the focus of much research during the last decade (Cannons, 2008). Potential applications of such visual target tracking systems include autonomous vehicle navigation, map building, surveillance systems, wildlife study, human-assistance mobile robots and bionic vision. All these applications, and many others, share a common requirement for technology that can successfully extract features of interest and track them robustly within complex environments, through long trajectories and even in the presence of other distractions.

Bagheri Z., Cazzolato B. S., Wiederman S. D., Grainger S. and O'Carroll D. C.
An Insect Inspired Object Tracking Mechanism for Autonomous Vehicles.
Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Many algorithms have been developed over the last decade to address the problem of object detection and tracking in different scenarios. Most of these methods rely on simplifying assumptions to make the tracking problem tractable. For instance, smoothness of motion, a minimal amount of occlusion, constant illumination and high contrast with respect to the background are common simplifications in most of the developed algorithms (Yilmaz et al., 2006). Consequently, most of these methods fail when tracking objects in real-world situations, within a distracting environment or in the absence of relative background motion. Moreover, most of these methods involve complex and time-consuming computations that require substantial processing capacity, which makes them impractical in many applications. This identifies a clear need for an alternative and more efficient approach to solving at least a subset of the target tracking problem.

While engineering methods try to solve the problem of target detection and tracking by using high-resolution cameras, fast processors and computationally expensive methods, studies of insect visual systems and flight behavior suggest that there is a simpler solution. Insects are an ideal group to draw inspiration from in this context, since they have a visual system of low spatial acuity, with an angular resolution of approximately 1° (Stavenga, 2003), and a small, lightweight, low-power neuronal architecture. Nonetheless, they show remarkable visually guided behavior in chasing other insects, e.g. for predation, territorial or mating behavior, even against complex moving backgrounds (Collett and Land, 1975, Wehrhahn, 1979) or in the presence of distracting stimuli (Corbet, 1999, Wiederman and O'Carroll, 2013). These features have motivated extensive research into the neural systems that underlie such a complex task.

Electrophysiological recordings have been used to examine cells sensitive to small moving objects in species such as the blowfly (Wachenfeld, 1994), dragonfly (O'Carroll, 1993), fleshfly (Gilbert and Strausfeld, 1991) and hoverfly (Collett and Land, 1975).

Moreover, flying insects uncouple their eyes from their bodies to actively control their gaze direction and stabilize the image during flight. This active gaze control may simplify and improve tracking strategies for many real-world applications, yet it is little used in existing artificial vision systems, which face many of the same problems of limited spatial and temporal resolution as insects.

Figure 1: Insects must have evolved a relatively simple

and efficient solution to a task that challenges the most

sophisticated robotic vision systems - the detection,

selection and pursuit of moving features in cluttered

environments.

Fortunately, as a result of the recent breakthroughs

in understanding biological vision we are now at a

point where modeling and implementing similar

strategies in an autonomous system is a practical

possibility. This project therefore aims to adopt a

bio-inspired approach to target tracking and pursuit,

based largely on recent research on the insect visual

system, and will implement it on a ground robotic

platform, complete with an active gaze control

system.

5 STATE OF THE ART

Traditionally, computer vision techniques divide the problem of target tracking into two subproblems: detection of moving objects and tracking of moving objects (Ren et al., 2003). Depending on the method, object detection might be required in every frame or only when the object first appears in the video (Yilmaz et al., 2006). The object tracker locates the position of the target in every frame of the video and generates its trajectory (Yilmaz et al., 2006). In recent years, some research has shown that integrating image-based target detection and tracking improves the robustness of the overall system (Wang et al., 2008, Kalal et al., 2012).

5.1 Detection of Moving Objects

In the literature, three typical approaches are used in

object motion detection: optical flow, temporal

difference and background subtraction:

5.1.1 Optical Flow

Optical flow methods estimate local motion in an image, i.e. the displacement of each pixel between sequential image frames. Most optical flow methods use spatial and temporal partial derivatives of image intensity to determine the velocity of each pixel in successive images. This approach can detect moving objects even in the presence of camera motion and background changes, though these changes should be relatively small because of a ‘smoothness constraint’ (Lu et al., 2008). One common assumption in optical flow algorithms that limits their applicability in real-world scenarios is uniform illumination (Zelek, 2002). Furthermore, the computational complexity of these methods makes them less suitable for real-time applications.
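The gradient-based idea above can be illustrated with a minimal Lucas-Kanade-style sketch in Python/NumPy. It assumes brightness constancy, a single uniform motion over the whole window and small (sub-pixel) displacements; the function name `estimate_window_flow` is hypothetical, and this is not the implementation of any of the cited authors.

```python
import numpy as np


def estimate_window_flow(frame0, frame1):
    """Least-squares velocity (u, v) in pixels/frame for one window.

    Solves Ix*u + Iy*v + It = 0 (brightness constancy) over all pixels,
    assuming the whole window moves with a single small velocity.
    """
    f0 = np.asarray(frame0, dtype=float)
    f1 = np.asarray(frame1, dtype=float)
    # Spatial derivatives of the mean frame; np.gradient returns d/drow (y) first.
    Iy, Ix = np.gradient(0.5 * (f0 + f1))
    It = f1 - f0  # temporal derivative (frames one time step apart)
    A = np.stack([Ix.ravel(), Iy.ravel()], axis=1)
    b = -It.ravel()
    (u, v), *_ = np.linalg.lstsq(A, b, rcond=None)
    return float(u), float(v)


# Usage: a smooth blob shifted 0.5 pixel to the right between two frames.
rows, cols = np.mgrid[0:64, 0:64]
blob = lambda cx: np.exp(-((cols - cx) ** 2 + (rows - 32) ** 2) / (2 * 4.0 ** 2))
u, v = estimate_window_flow(blob(32.0), blob(32.5))
print(round(u, 2), round(v, 2))  # u should be near 0.5 and v near 0
```

Fitting one velocity per window is what makes this cheap; dense optical flow repeats such a solve around every pixel, which is where the computational cost mentioned above comes from.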

5.1.2 Temporal Difference

These methods find the contours of moving objects from the difference between two successive frames of an image sequence, assuming that illumination is constant and the background is stationary. A threshold is applied to the absolute temporal difference of two adjacent frames to identify moving objects. The temporal difference method can effectively accommodate environmental changes, but it is usually unable to represent the complete shapes of moving objects. Its main advantages are simplicity and low computational complexity; however, it is very sensitive to the choice of threshold. A small threshold produces noisy outcomes, while a large one discards essential information about the objects (Yi and Liangzhong, 2010). Moreover, in temporal difference methods a very fast-moving object might be detected as two distinct objects (Yi and Liangzhong, 2010).
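A minimal NumPy sketch of the thresholded frame difference described above follows; the function name and threshold values are illustrative assumptions. The usage lines reproduce the two weaknesses just mentioned: the result depends on the threshold, and an object that jumps far between frames leaves two separate blobs (its old and its new position).

```python
import numpy as np


def temporal_difference_mask(prev_frame, curr_frame, threshold):
    """Boolean motion mask |I_t - I_(t-1)| > threshold (assumes a static camera)."""
    prev = np.asarray(prev_frame, dtype=np.int32)  # avoid uint8 wrap-around
    curr = np.asarray(curr_frame, dtype=np.int32)
    return np.abs(curr - prev) > threshold


prev = np.zeros((8, 8), dtype=np.uint8)
curr = np.zeros((8, 8), dtype=np.uint8)
prev[3:5, 0:2] = 200   # object at its old position
curr[3:5, 5:7] = 200   # same object after a large, fast jump
curr[0, 0] = 10        # a small sensor-noise fluctuation

strict = temporal_difference_mask(prev, curr, threshold=20)
loose = temporal_difference_mask(prev, curr, threshold=5)
print(strict.sum(), loose.sum())  # 8 9: the low threshold also flags the noise pixel
```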

5.1.3 Background Subtraction

This is the most popular and most developed method for detecting moving objects. It uses a reference frame as a “background image”, and this reference frame is continually updated to represent the effect of varying luminance and geometry settings (Piccardi, 2004). Moving objects are then detected by finding where the current frame deviates from the background image.

Background subtraction provides high-quality motion information and has lower computational complexity than optical flow. Nevertheless, like the temporal difference method, it requires a background scene that is stationary with respect to the viewpoint, and it is sensitive to scene changes caused by light, weather, etc.
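As an illustration only, the sketch below keeps a running-average background, one simple way of keeping the reference frame updated. The class name and the `alpha` and `threshold` values are our assumptions, not parameters taken from Piccardi (2004). Pixels flagged as foreground are excluded from the update so that the moving object does not bleed into the background model.

```python
import numpy as np


class RunningAverageBackground:
    """Background subtraction with a selectively updated running average."""

    def __init__(self, first_frame, alpha=0.05, threshold=25.0):
        self.background = np.asarray(first_frame, dtype=float).copy()
        self.alpha = alpha          # adaptation rate of the background model
        self.threshold = threshold  # minimum deviation counted as foreground

    def apply(self, frame):
        frame = np.asarray(frame, dtype=float)
        foreground = np.abs(frame - self.background) > self.threshold
        still = ~foreground
        # Only background pixels adapt, following slow illumination drift.
        self.background[still] += self.alpha * (frame[still] - self.background[still])
        return foreground


model = RunningAverageBackground(np.full((10, 10), 100.0))
frame = np.full((10, 10), 110.0)   # slow global brightening (below threshold)
frame[4:6, 4:6] = 200.0            # a bright moving object
mask = model.apply(frame)
print(mask.sum(), model.background[0, 0])
```

The usage shows both behaviours described in the text: a global luminance change is absorbed gradually, while a deviation larger than the threshold is reported as a moving object. A moving camera would make every pixel deviate, which is why a stationary viewpoint is required.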

5.2 Tracking of Moving Objects

Methods for tracking of moving objects can be

categorized as (i) discrete feature trackers, (ii)

contour trackers, and (iii) region-based trackers:

5.2.1 Discrete Feature Trackers

Discrete feature trackers use image features such as

discrete points, edges and lines to track an object.

Point trackers match the object frame-to-frame

based on the previous object position and motion.

Both edge trackers and 3D model trackers focus on

line elements of the object as many man-made

objects are composed of numerous straight lines.

The difference between these two classes is whether

or not the tracker uses a three dimensional object

model.
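To make "match the object frame-to-frame based on the previous object position and motion" concrete, here is a hypothetical single-target point-tracker step: a constant-velocity prediction followed by gated nearest-neighbour association. The function name and the gate radius are illustrative assumptions; published point trackers solve the correspondence problem over many points and frames.

```python
import numpy as np


def point_tracker_step(prev_pos, prev_vel, detections, gate=2.0):
    """One update of a single-point tracker.

    Predicts the next position with a constant-velocity model and associates
    the nearest detection inside a gating radius (pixels). Returns
    (index of matched detection or None, updated velocity).
    """
    prev_pos = np.asarray(prev_pos, dtype=float)
    prev_vel = np.asarray(prev_vel, dtype=float)
    if len(detections) == 0:
        return None, prev_vel
    detections = np.asarray(detections, dtype=float)
    predicted = prev_pos + prev_vel
    dist = np.linalg.norm(detections - predicted, axis=1)
    best = int(np.argmin(dist))
    if dist[best] > gate:
        return None, prev_vel          # no plausible match: coast on old velocity
    return best, detections[best] - prev_pos


idx, vel = point_tracker_step([10, 10], [2, 0], [[11, 13], [12.5, 10], [30, 30]])
print(idx, vel)
```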

Many successful tracking methods have been developed based on point trackers (Veenman et al., 2001, Shafique and Shah, 2003). The work of Shafique and Shah (2003) shows a high level of accuracy despite a significant level of noise in the scene. Although some proposed point tracking methods can cope with occlusions and foreground clutter, these methods have not effectively addressed the effect of illumination changes (Cannons, 2008).

The other groups of discrete feature trackers, edge trackers (Zhang and Faugeras, 1992, Jonk et al., 2001, Mörwald et al., 2009) and 3D model trackers (Leng and Wang, 2004, Lepetit et al., 2005), are less developed than point trackers. These trackers are mostly robust to illumination changes, since spatiotemporal filtering is applied at their front end, and they can handle some degree of occlusion. Unlike edge trackers, 3D model-based trackers can deal with scale changes. However, both types have mostly been examined only in simple and controlled environments. Hence, their performance under real-world conditions and in cluttered environments is, as yet, largely unknown (Cannons, 2008).

5.2.2 Contour Trackers

A contour tracker is defined as any system that follows a target from frame to frame and represents the target with an open or closed curve that adheres to its outline. Although both contour trackers and line trackers track the boundaries of targets within the scene, line trackers are limited to following straight line segments. Since the boundaries represented by contour trackers can be drastically different from a straight line (e.g., a circle), the techniques used for these two types of trackers are quite different.

ICINCO 2014 - Doctoral Consortium

Contour trackers have improved significantly since their inception. Different contour trackers have been proposed (Paragios and Deriche, 2000, Li et al., 2006, Mansouri, 2002, Yilmaz et al., 2004, Bibby and Reid, 2008, Bibby and Reid, 2010) to address issues related to object tracking such as automatic initialization and occlusion. Although these approaches have successfully solved some issues, none is truly robust to background clutter.

5.2.3 Region-based Trackers

A region-based tracker represents the target by maintaining feature information across an area. The features used in region trackers include color, texture, gradient, spatiotemporal energies, filter responses and even combinations of these modalities. Research on region trackers has shown very robust results with respect to occlusion (Comaniciu et al., 2000, Cannons and Wildes, 2007). This class of trackers can handle background clutter more robustly than other classes, as long as the clutter in the background is stationary and occlusion is not significant (Comaniciu et al., 2000, Birchfield and Rangarajan, 2005, Cannons and Wildes, 2007, Yin and Collins, 2007). Nonetheless, these trackers are very sensitive to changes in illumination.
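The sketch below illustrates the region-based idea with a grey-level histogram as the region feature. Comaniciu et al. (2000) locate the best-matching region with mean-shift iterations; for clarity we substitute an exhaustive search over a small neighbourhood, scoring each candidate window with the Bhattacharyya coefficient. Window size, bin count and search radius are illustrative assumptions.

```python
import numpy as np


def intensity_histogram(patch, bins=16):
    """Normalised grey-level histogram of an 8-bit image patch."""
    hist, _ = np.histogram(patch, bins=bins, range=(0, 256))
    return hist / max(hist.sum(), 1)


def bhattacharyya(p, q):
    """Similarity of two normalised histograms (1.0 means identical)."""
    return float(np.sum(np.sqrt(p * q)))


def track_region(frame, prev_centre, half, target_hist, search=5, bins=16):
    """Return the centre (row, col) within +/-search pixels whose
    (2*half+1)-square window best matches target_hist, and its score."""
    rows, cols = frame.shape
    best, best_score = prev_centre, -1.0
    r0, c0 = prev_centre
    for r in range(r0 - search, r0 + search + 1):
        for c in range(c0 - search, c0 + search + 1):
            if r - half < 0 or c - half < 0 or r + half >= rows or c + half >= cols:
                continue  # candidate window would fall outside the image
            patch = frame[r - half:r + half + 1, c - half:c + half + 1]
            score = bhattacharyya(intensity_histogram(patch, bins), target_hist)
            if score > best_score:
                best, best_score = (r, c), score
    return best, best_score


frame0 = np.full((64, 64), 50, dtype=np.uint8)
frame0[27:34, 27:34] = 200                       # 7x7 target centred at (30, 30)
target = intensity_histogram(frame0[27:34, 27:34])

frame1 = np.full((64, 64), 50, dtype=np.uint8)
frame1[30:37, 29:36] = 200                       # target moved to (33, 32)
centre, score = track_region(frame1, (30, 30), half=3, target_hist=target)
print(centre, score)
```

Because the model is a histogram of raw intensities, a global brightness change shifts every bin and lowers the match score, which is the illumination sensitivity noted above.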

5.3 Estimation Tools

In some tracking research, estimation tools such as the Kalman filter, extended Kalman filter, unscented Kalman filter and particle filter have been employed to enhance the accuracy of target tracking (Boykov and Huttenlocher, 2000, Li and Chellappa, 2000, Rui and Chen, 2001, Li et al., 2003).

5.3.1 Kalman Filter and its Variations

The Kalman filter is a prediction-correction tool that uses the state estimate from the previous time step and the current observable measurements to compute a statistically optimal estimate of the hidden states of a system (optimal in the minimum mean-square-error sense when the system is linear and the noise is Gaussian). Although the Kalman filter is a powerful estimation tool, it has limitations. Its mathematical model assumes that the system dynamics are linear, but some systems are not well described by linear equations. Another limitation arises from modeling the measurement uncertainties as white Gaussian noise processes. There are many instances where this simplified model is not appropriate, such as tracking a target through a cluttered environment, where the measurement distribution might not be a unimodal Gaussian.
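The prediction-correction cycle can be sketched for a target moving along one image axis with a constant-velocity model. The process-noise and measurement-noise values (`q`, `r`) are arbitrary assumptions chosen for illustration; the cited tracking works use their own models.

```python
import numpy as np


def kalman_constant_velocity(measurements, dt=1.0, q=1e-3, r=0.25):
    """Filter noisy 1-D positions; returns an (N, 2) array of [position, velocity]."""
    F = np.array([[1.0, dt], [0.0, 1.0]])        # state transition
    H = np.array([[1.0, 0.0]])                   # only position is observed
    Q = q * np.array([[dt**3 / 3, dt**2 / 2],    # white-acceleration process noise
                      [dt**2 / 2, dt]])
    R = np.array([[r]])                          # measurement noise variance
    x = np.array([measurements[0], 0.0])         # initial state guess
    P = np.eye(2)                                # initial uncertainty
    estimates = []
    for z in measurements:
        # Predict
        x = F @ x
        P = F @ P @ F.T + Q
        # Correct
        innovation = z - H @ x
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)           # Kalman gain
        x = x + K @ innovation
        P = (np.eye(2) - K @ H) @ P
        estimates.append(x.copy())
    return np.array(estimates)


rng = np.random.default_rng(0)
t = np.arange(50)
true_pos = 2.0 * t                               # 2 pixels per frame
z = true_pos + rng.normal(0.0, 0.5, t.size)      # noisy detections
est = kalman_constant_velocity(z)
print(est[-1])  # final [position, velocity]; velocity should be close to 2
```

The Gaussian, single-hypothesis form of `R` is exactly the assumption that breaks down in clutter, where several detections may compete for the same target.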

The 'Extended Kalman Filter' (EKF) is a variation of the Kalman filter developed to provide prediction and correction for nonlinear models. In the EKF framework, a first-order Taylor series expansion is used to linearize the nonlinear models around the current estimate. The strength of the EKF lies in its simplicity and computational efficiency. Nonetheless, unlike the Kalman filter, the EKF is in general not an optimal estimator. In addition, because of its sensitivity to linearization errors and covariance calculations, the filter may quickly diverge.

The 'Unscented Kalman Filter' (UKF) is another popular nonlinear variation of the Kalman filter. The UKF uses a deterministic sampling technique (the unscented transform), propagating a small set of carefully chosen sample points through the nonlinear models to represent the distributions of the state and measurement variables. The UKF tends to be more robust and more accurate than the EKF in its error estimates. However, neither the EKF nor the UKF handles cases where white Gaussian noise is an inadequate description of the measurement uncertainties.
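The deterministic sampling behind the UKF is the unscented transform. The minimal sketch below uses Julier-style sigma points with a single scaling parameter `kappa` (the parameter choice is our assumption) and shows why it can outperform EKF-style linearisation: for y = x² with x ~ N(2, 0.5), linearising about the mean predicts E[y] ≈ f(2) = 4, whereas the exact mean is m² + P = 4.5, which the sigma points recover.

```python
import numpy as np


def unscented_transform(mean, cov, f, kappa=None):
    """Propagate N(mean, cov) through f using 2n+1 sigma points."""
    mean = np.atleast_1d(np.asarray(mean, dtype=float))
    cov = np.atleast_2d(np.asarray(cov, dtype=float))
    n = mean.size
    if kappa is None:
        kappa = 3.0 - n          # common heuristic; gives a negative centre weight for n > 3
    L = np.linalg.cholesky((n + kappa) * cov)   # matrix square root
    sigma_points = [mean]
    sigma_points += [mean + L[:, i] for i in range(n)]
    sigma_points += [mean - L[:, i] for i in range(n)]
    weights = np.full(2 * n + 1, 1.0 / (2.0 * (n + kappa)))
    weights[0] = kappa / (n + kappa)
    Y = np.array([np.atleast_1d(f(s)) for s in sigma_points])
    y_mean = weights @ Y
    dY = Y - y_mean
    y_cov = (weights[:, None] * dY).T @ dY
    return y_mean, y_cov


y_mean, y_cov = unscented_transform(2.0, 0.5, lambda x: x ** 2)
print(y_mean, y_cov)   # analytically, mean 4.5 and variance 8.5 for this case
```

Like the EKF, the result is still summarised as a mean and covariance, so a multimodal measurement distribution cannot be represented.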

5.3.2 Particle Filter

A particle filter, or sequential Monte Carlo filter, maintains a probability distribution over the state of the object being tracked by using a set of weighted samples, or particles. Each 'particle' represents a possible instantiation of the state of the object. In other words, each particle is a guess representing one possible location of the tracked object, and the denser the particles are at a location, the more likely the target is to be there.

The main advantage of particle filters over the Kalman filter and its variations is their applicability to nonlinear models and non-Gaussian noise processes. Although with a sufficient number of samples particle filters are more accurate than either the EKF or the UKF, when the number of samples is not sufficiently large they may suffer from sample impoverishment.
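A bootstrap (sampling-importance-resampling) particle filter for a 1-D random-walk target gives a feel for the predict, weight and resample steps. The motion model, the Gaussian likelihood and the particle count are illustrative assumptions; a non-Gaussian likelihood could be substituted in the weighting line without changing the rest of the loop, which is the flexibility discussed above.

```python
import numpy as np


def bootstrap_particle_filter(measurements, n_particles=500, motion_std=0.5,
                              meas_std=1.0, seed=0):
    """Return the weighted-mean position estimate at each step."""
    rng = np.random.default_rng(seed)
    particles = rng.normal(measurements[0], meas_std, n_particles)
    estimates = []
    for z in measurements:
        # Predict: propagate every particle through a random-walk motion model.
        particles = particles + rng.normal(0.0, motion_std, n_particles)
        # Weight: likelihood of the measurement given each particle.
        w = np.exp(-0.5 * ((z - particles) / meas_std) ** 2)
        total = w.sum()
        w = w / total if total > 0 else np.full(n_particles, 1.0 / n_particles)
        estimates.append(float(np.sum(w * particles)))
        # Systematic resampling: duplicate heavy particles, drop light ones.
        positions = (rng.random() + np.arange(n_particles)) / n_particles
        idx = np.minimum(np.searchsorted(np.cumsum(w), positions), n_particles - 1)
        particles = particles[idx]
    return np.array(estimates)


rng = np.random.default_rng(1)
truth = np.cumsum(rng.normal(0.0, 0.5, 100))
z = truth + rng.normal(0.0, 1.0, truth.size)
est = bootstrap_particle_filter(z)
print(np.sqrt(np.mean((est - truth) ** 2)), np.sqrt(np.mean((z - truth) ** 2)))
```

Resampling is also where sample impoverishment originates: with too few particles, repeated duplication of the heaviest ones collapses the set onto a handful of distinct values.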

Adding these filters to tracking algorithms reduces the effect of measurement noise and produces a more accurate estimate of the position of a target within the scene. Although the robustness of the tracking algorithms described in Section 5.2 increases when they are combined with these filters, their computational complexity remains an unsolved issue.

5.3.3 Physiological Approaches

As an alternative to traditional engineering approaches, recent research has used biologically inspired approaches for detection and tracking. Wiederman et al. (2008) developed a size-selective, velocity-tuned, contrast-sensitive biomimetic model based on electrophysiological recordings (Figure 2) of the responses of ‘small target motion detector’ (STMD) neurons to various visual stimuli. This 'elementary small target motion detector' (ESTMD) model emulates the different stages of visual processing in flying insects, consisting of: (i) fly optics, (ii) photoreceptors, (iii) large monopolar cells and (iv) rectifying transient cells.

(i) The optics of the insect compound eye consist of thousands of facet lenses, whose diffraction limits the spatial resolution of the eye to approximately 1°.

(ii) Photoreceptors in the retina dynamically adapt to background luminance (Laughlin, 1994), reducing noise and improving the SNR by altering their contrast gain (Juusola et al., 1994).

(iii) Large Monopolar Cells (LMCs) in the insect lamina remove redundant information (Coombe et al., 1989) by acting as spatiotemporal contrast detectors (Wiederman et al., 2008).

(iv) Rectifying Transient Cells (RTCs) of the medulla adapt their ON and OFF channels (opposite contrast polarities) independently.

Figure 2: Electrophysiological recordings from STMD neurons. Visual stimuli are displayed while the electrical potential (spikes) inside the neuron is recorded.

Recent studies on dragonflies reveal that one type of STMD neuron, CSTMD1, has a facilitatory role in tracking targets. The spiking activity of CSTMD1 builds up over time in response to targets that move along long, continuous trajectories (Nordström et al., 2011). Dunbier et al. (2011, 2012) recorded the responses of these neurons to stimuli traversing interrupted paths. These recordings show that the neurons' responses reset to a naive state when there are large breaks (~7°) in the trajectory. This facilitatory mechanism can enhance the response to weak stimuli. Moreover, it directs attention to the estimated reappearance location of the object, which increases the robustness of pursuit even if the target is temporarily invisible. An analogous idea is found in probabilistic approaches such as Kalman filtering, which provides an optimal estimate of the hidden states of a system by analyzing observable measurements.

We hypothesize this facilitation underlies the

highly robust target tracking observed in dragonflies.

Therefore, a bio-inspired facilitation is proposed in

this research project to enhance the performance of

the existing bio-inspired models.

Moreover, these neurons have shown competitive selection of one target in the presence of other distracters (Wiederman and O'Carroll, 2013). Electrophysiological recordings from the CSTMD1 neuron show that, irrespective of target size, contrast or separation, this neuron selects one target from a pair and perfectly preserves its original response as if the distracter were not present (Wiederman and O'Carroll, 2013). These results provide insight into the robust control of target pursuit in the presence of other distracters.

5.3.4 Insect Gaze Control

Studies of fly flight behavior show that flies control their flight direction and gaze direction through short, fast saccadic movements in which the head and body turn independently (Van Hateren and Schilstra, 1999). This uncoupling of the eye from its support enables the insect to maintain the orientation of its gaze even when disturbances affect its body. Moreover, it reduces temporal blurring and may promote ‘popout’ of a target against the background as a result of the high-pass nature of key stages of visual processing.

During a pursuit, an insect has to control its forward velocity and its distance to the target while fixating the target in the frontal visual field. Two different gaze control strategies have been observed among flying insects (Figure 3): tracking, as described in male houseflies (Wehrhahn et al., 1982), in which the heading is calculated from the error angle between the target and the central axis of the pursuer's gaze (Land and Collett, 1974, Wehrhahn et al., 1982); and interception, as observed in dragonflies (Olberg et al., 2000), which involves calculating the future trajectory of the target in order to intercept it at its anticipated position. It was found that dragonflies steer to minimize the movement of the prey's image on their retina in order to estimate the intersection with the target's flight trajectory (Olberg et al., 2000). Using this strategy, dragonflies chase their target by flying directly to a point in front of the prey (Olberg et al., 2000). The high prey-capture rates of dragonflies seem to be related to the insect's ability to keep its head oriented at a constant angle with respect to the visual field (Olberg et al., 2000). Although the pursuit strategy of the dragonfly is believed to play a key role in its high capture rate, to date the effect of the different pursuit strategies used by flying insects has not been investigated on a robotic platform.

Figure 3: The two main pursuit strategies of flying insects.
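The difference between the two strategies can be explored with a toy 2-D simulation. We model 'tracking' as a heading controller that nulls the error angle to the target, and stand in for 'interception' with proportional navigation, a standard engineering guidance law that turns the pursuer at a multiple of the line-of-sight rate and so drives the target's bearing towards a constant value. Proportional navigation, the speeds and the gains are our assumptions, not a model of fly or dragonfly neural control.

```python
import numpy as np


def wrap(angle):
    """Wrap an angle to the interval (-pi, pi]."""
    return np.arctan2(np.sin(angle), np.cos(angle))


def pursue(strategy, dt=0.01, t_max=20.0, v_pursuer=1.5, v_target=1.0,
           k_track=4.0, n_pn=3.0, capture_radius=0.05):
    """Return the capture time (s) of a 2-D pursuer, or None if no capture."""
    pursuer = np.array([0.0, 0.0])
    heading = np.pi / 2                     # initially facing the target
    target = np.array([0.0, 3.0])
    target_vel = np.array([v_target, 0.0])  # target crosses the line of sight
    d = target - pursuer
    los_prev = np.arctan2(d[1], d[0])
    for k in range(1, int(round(t_max / dt)) + 1):
        target = target + target_vel * dt
        d = target - pursuer
        if np.hypot(d[0], d[1]) < capture_radius:
            return k * dt
        los = np.arctan2(d[1], d[0])        # line-of-sight (bearing) angle
        if strategy == "tracking":
            # Turn so as to null the error angle between heading and target.
            heading += k_track * wrap(los - heading) * dt
        elif strategy == "interception":
            # Proportional navigation: turn N times faster than the line of
            # sight rotates, which drives the bearing towards a constant value.
            heading += n_pn * wrap(los - los_prev)
        else:
            raise ValueError(f"unknown strategy: {strategy}")
        los_prev = los
        pursuer = pursuer + v_pursuer * dt * np.array([np.cos(heading), np.sin(heading)])
    return None


print(pursue("tracking"), pursue("interception"))
```

In this geometry the tracking pursuer is always pointed at where the target is and ends in a tail chase, whereas the interception pursuer builds up a lead angle and heads for a point in front of the target, so it should capture it sooner.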

6 METHODOLOGY

To achieve the objectives of this research project,

both computational and experimental investigations

will be conducted. The performance of the model

under different conditions has initially been

examined using computational simulation. In the

next stage a robotic platform will be used to further

investigate the performance of the model under

different real world conditions (weather, sunlight,

etc.).

6.1 Computational Methods

For the computational part of this project an

extended version of the previously published

ESTMD model (Wiederman et al., 2008) is used.

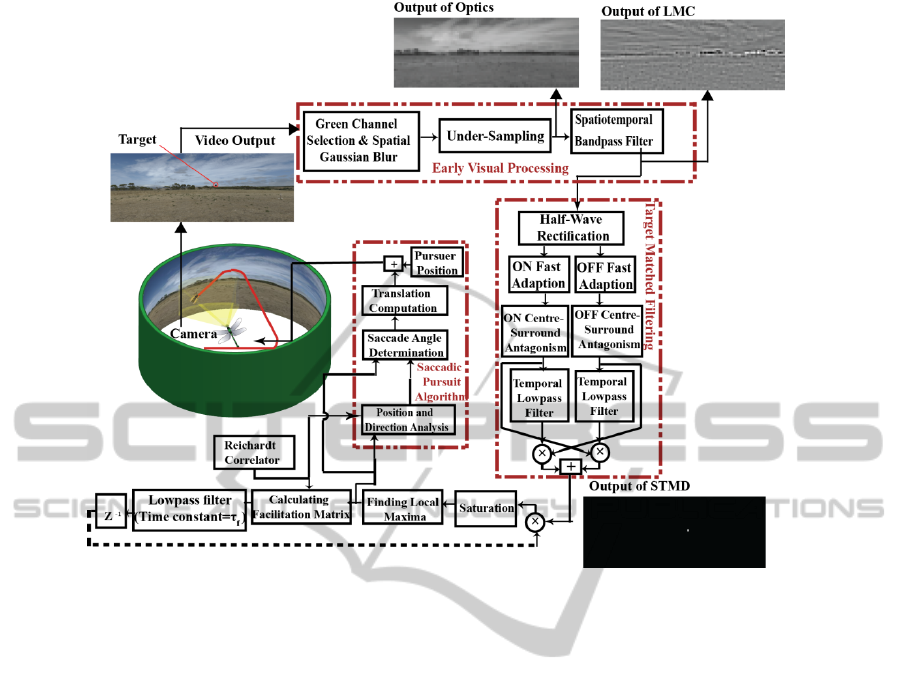

The closed-loop pursuit block diagram presented in

Figure 4 is the insect inspired detection and tracking

model which is utilized in the simulations. Different

stages of this model are described briefly in the

following paragraphs.

To approximate the spectral sensitivity of the fly photoreceptors that subserve motion processing (Srinivasan and Guy, 1990) and the optical blur of an insect compound eye, this model selects only the green channel of the RGB input image and applies a Gaussian spatial blur to it. The output signal then passes through spatiotemporal bandpass filtering, including centre-surround antagonism, to remove redundant information in the image; this stage is inspired by the same mechanisms in photoreceptors and large monopolar cells. Centre-surround antagonism is a spatial feature of LMCs that enables edge detection and contrast enhancement. In the ESTMD model, centre-surround antagonism is implemented by convolving the image with a kernel that applies a negative weighting to the surrounding nearest-neighbor pixels.
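The spatial part of this early-vision stage can be sketched in NumPy as green-channel selection, a separable Gaussian blur and a 3×3 centre-surround kernel. The blur width and the surround weight (1/8 for each of the eight neighbours, so that a uniform region maps to zero) are our assumptions rather than the published ESTMD parameters, and the temporal part of the bandpass filtering is omitted.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Normalised 1-D Gaussian kernel truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_blur(image, sigma):
    """Separable Gaussian blur (zero-padded borders; image must be larger than the kernel)."""
    k = gaussian_kernel_1d(sigma)
    rows_done = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, image)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, rows_done)


def centre_surround(image, surround_weight=1.0 / 8.0):
    """3x3 kernel: +1 at the centre, -surround_weight on each of the 8 neighbours."""
    rows, cols = image.shape
    padded = np.pad(image, 1, mode="edge")
    out = image.astype(float).copy()
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue
            out -= surround_weight * padded[1 + dy:1 + dy + rows, 1 + dx:1 + dx + cols]
    return out


def early_visual_processing(rgb, sigma=1.0):
    """Green channel -> optical blur -> centre-surround antagonism."""
    green = np.asarray(rgb, dtype=float)[..., 1]
    return centre_surround(gaussian_blur(green, sigma))


frame = np.zeros((21, 21, 3))
frame[10, 10, 1] = 255.0                  # a single bright green point
response = early_visual_processing(frame)
print(np.unravel_index(np.argmax(response), response.shape))
```

A uniform region produces no output while a small bright feature produces a positive peak at its location, which is the redundancy removal and contrast enhancement described above.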

The output of early visual processing (Figure 4) goes through half-wave rectification, which imitates the independent ON and OFF channels of insects by separating opposite contrast polarities. Each channel is then processed independently by a fast adaptive mechanism, modeled with a fast lowpass filter (τ = 3 ms) when the input signal increases and a slow lowpass filter (τ = 70 ms) when it decreases. This adaptation process serves to inhibit repeated bursty inputs, such as noise. Both the ON and OFF channels then undergo further centre-surround antagonism, which helps to tune the model selectively to small targets.
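A sketch of the rectification and of the asymmetric ('fast-on, slow-off') lowpass for one pixel's time series sampled at 1 kHz is given below, using the time constants quoted above. We assume that "the input signal increases" means the input exceeds the filter's current state; how the adapted signal is subsequently combined with the channel is not specified here and is left out.

```python
import numpy as np


def half_wave_rectify(signal):
    """Split a contrast signal into ON (positive) and OFF (negative) channels."""
    s = np.asarray(signal, dtype=float)
    return np.maximum(s, 0.0), np.maximum(-s, 0.0)


def fast_adaptive_state(x, dt=0.001, tau_fast=0.003, tau_slow=0.070):
    """Asymmetric first-order lowpass: tau_fast while the input exceeds the
    current state (rising), tau_slow otherwise (falling)."""
    a_fast = 1.0 - np.exp(-dt / tau_fast)
    a_slow = 1.0 - np.exp(-dt / tau_slow)
    y = np.zeros(len(x))
    state = 0.0
    for n, value in enumerate(x):
        a = a_fast if value > state else a_slow
        state += a * (value - state)
        y[n] = state
    return y


on, off = half_wave_rectify([-2.0, 0.0, 3.0])
pulse = np.r_[np.ones(50), np.zeros(50)]     # 50 ms step, then 50 ms of silence
state = fast_adaptive_state(pulse)
print(on, off, state[19], state[69])          # rises within a few ms, decays over tens of ms
```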

The earlier version of the ESTMD model (Wiederman et al., 2008, Halupka et al., 2011) was only sensitive to dark targets. For the purpose of this project, however, it has been modified to respond to both dark and light targets by delaying and multiplying the relevant contrast polarities (ON and OFF channels).
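The dark/light extension can be illustrated with a pure-delay correlator for a single pixel. As a dark target passes over a pixel, its leading edge produces an OFF response and its trailing edge an ON response a moment later, so multiplying ON with a delayed OFF responds to dark targets, and the mirror arrangement responds to light ones. Using a pure sample delay and summing both products is our simplification; the function names are hypothetical and the published model's delay filters may differ.

```python
import numpy as np


def delay(x, n):
    """Shift a time series n samples later, padding the start with zeros."""
    x = np.asarray(x, dtype=float)
    out = np.zeros_like(x)
    if n < len(x):
        out[n:] = x[:len(x) - n]
    return out


def dual_polarity_response(on, off, delay_samples):
    """Return (total, dark, light) responses for one pixel's ON/OFF signals."""
    on = np.asarray(on, dtype=float)
    off = np.asarray(off, dtype=float)
    dark = on * delay(off, delay_samples)    # OFF edge followed by ON edge
    light = off * delay(on, delay_samples)   # ON edge followed by OFF edge
    return dark + light, dark, light


on = np.zeros(30)
off = np.zeros(30)
off[10], on[15] = 1.0, 1.0   # dark target: leading OFF edge, trailing ON edge
total, dark, light = dual_polarity_response(on, off, delay_samples=5)
print(dark.max(), light.max(), int(np.argmax(total)))  # 1.0 0.0 15
```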

In the new computational model developed for this research project, the facilitation mechanism observed in the dragonfly CSTMD1 neuron is implemented by multiplying the output of the ESTMD model by a lowpass-filtered version of a ‘weighted map’ that depends on the location of the winning feature but is offset in the direction of target motion. This facilitation mechanism increases the chance that a repeated winner will also win at the next time step, by enhancing the area around the estimated location of the winning feature. The time constant of the lowpass filter controls how long the facilitation matrix enhances the area around the winning feature. The location of the winning feature in the output of the ESTMD model is used as the target location and fed into the saccadic pursuit algorithm to calculate the pursuer's translation and rotation.

Figure 4: Overview of the closed-loop block diagram of the computational model for simulation and the output of each stage.
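A simplified sketch of this facilitation loop follows. Several details are our assumptions rather than the model's published form: the weighted map is a Gaussian centred on the winner's position shifted by an externally supplied velocity estimate, it is smoothed over time with a first-order lowpass filter, and it multiplies the ESTMD output as a gain of (1 + gain × map) so that unfacilitated locations still respond. The class and helper names are hypothetical.

```python
import numpy as np


def gaussian_map(shape, centre, sigma):
    """2-D Gaussian 'weighted map' with peak 1 at centre (row, col)."""
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    d2 = (rows - centre[0]) ** 2 + (cols - centre[1]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))


class Facilitation:
    def __init__(self, shape, dt=0.01, tau=0.05, sigma=2.0, gain=1.0):
        self.shape = shape
        self.sigma = sigma
        self.gain = gain
        self.alpha = 1.0 - np.exp(-dt / tau)   # first-order lowpass coefficient
        self.fac = np.zeros(shape)             # lowpass-filtered weighted map

    def step(self, estmd_output, velocity):
        """Facilitate one ESTMD frame; velocity is (rows, cols) per step."""
        facilitated = estmd_output * (1.0 + self.gain * self.fac)
        winner = tuple(int(i) for i in np.unravel_index(np.argmax(facilitated), self.shape))
        # Weighted map centred where the winner is expected at the next step.
        predicted = (winner[0] + velocity[0], winner[1] + velocity[1])
        target_map = gaussian_map(self.shape, predicted, self.sigma)
        self.fac += self.alpha * (target_map - self.fac)
        return facilitated, winner


def frame(points, shape=(20, 40)):
    """Toy ESTMD output with point responses {(row, col): strength}."""
    f = np.zeros(shape)
    for (r, c), s in points.items():
        f[r, c] = s
    return f


def run(gain):
    fac = Facilitation((20, 40), gain=gain)
    for k in range(10):                       # target on a continuous path
        fac.step(frame({(10, 5 + k): 1.0}), velocity=(0, 1))
    # A slightly stronger distracter appears far from the predicted location.
    _, winner = fac.step(frame({(10, 15): 1.0, (3, 30): 1.1}), velocity=(0, 1))
    return winner


print(run(gain=1.0), run(gain=0.0))
```

With facilitation the target on its continuous path keeps winning against the slightly stronger distracter; with the gain set to zero the distracter takes over, which is the behaviour the facilitation is meant to prevent.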

In order to simulate this computational model,

the Simulink 3D Animation Toolbox was used to

create a virtual world as the front-end for the bio-

inspired target detection and pursuit control

algorithm. A cylindrical arena with rendered natural

panoramic images was used as a virtual environment

(Figure 4). In order to move a target within this

environment, randomized three dimensional paths

with biologically plausible constraints on ‘saccadic’

turn angles have been generated. Moreover, the 3D

Animation Toolbox provides the possibility of

embedding 3D objects within this environment to act

as occluding obstacles and foreground clutter.
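Randomised target paths with bounded ‘saccadic’ turns could be generated along the lines of the sketch below: straight segments at constant speed, separated by turns of random angle about a random axis perpendicular to the current heading. The maximum turn angle, step size and segment length are placeholders, not the biologically derived constraints used in the simulations, and the function name is hypothetical.

```python
import numpy as np


def random_saccadic_path(n_segments=20, seg_len=10, step=0.05,
                         max_turn_deg=30.0, seed=0):
    """3-D polyline: straight segments joined by bounded random turns."""
    rng = np.random.default_rng(seed)
    direction = np.array([1.0, 0.0, 0.0])
    pos = np.zeros(3)
    points = [pos.copy()]
    max_turn = np.radians(max_turn_deg)
    for _ in range(n_segments):
        # Random rotation axis perpendicular to the current heading.
        axis = np.cross(direction, rng.normal(size=3))
        axis /= np.linalg.norm(axis)
        angle = rng.uniform(-max_turn, max_turn)
        # Rodrigues' formula; the axis-parallel term vanishes because the
        # axis is perpendicular to the current direction.
        direction = direction * np.cos(angle) + np.cross(axis, direction) * np.sin(angle)
        direction /= np.linalg.norm(direction)
        for _ in range(seg_len):
            pos = pos + step * direction
            points.append(pos.copy())
    return np.array(points)


path = random_saccadic_path()
print(path.shape)
```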

In these simulations, a viewpoint was mounted at the pursuer's position within the virtual reality model. The video output of this viewpoint was fed as the input to the closed-loop detection and tracking control model.

6.2 Experimental Methods

For the practical part of the project, a large-payload (70 kg) all-terrain mobile robot developed by Clearpath Robotics™, the Husky A200, will be used as the platform. This robot runs the Robot Operating System (ROS). To test the tracking algorithm, a Ladybug®2 spherical digital video camera (Point Grey Inc.) will be integrated with the Husky to provide a 360° view of the environment. The camera control software runs under Windows, whereas ROS runs on Ubuntu. To overcome this operating-system conflict, virtualization software such as VirtualBox is required to run multiple guest operating systems on a single host operating system (host OS). The output of the camera will be used as the input to the target detection and tracking model and will be processed by an on-board computer (Apple Mac mini).

In the next stage, to test active gaze control, a camera with a limited field of view will be mounted on the robot using Yorick, a real-time pan-tilt-zoom mechanism developed at the University of Oxford (Bradshaw et al., 1994), to actively control the camera gaze. To determine the global position and orientation of the robot, dGPS and IMU systems will be used. In addition, IR sensors and possibly a scanning LiDAR will be used to navigate the robot safely. To test the ability of this robotic platform to track moving objects, a remote-controlled quadrotor helicopter will be used to move a small object, e.g. a ping-pong ball, in different environments and conditions (e.g. weather, sunlight).

Figure 5: The bio-inspired autonomous robot implements

target detection algorithms derived from

electrophysiological recordings.

7 EXPECTED OUTCOME

Because of high target density and maneuverability, high clutter, low visibility arising from terrain masking, etc., ground target tracking presents unique challenges not present in tracking other types of targets. Despite the enormous effort and significant progress in the field of visual target tracking, a robust algorithm capable of tracking objects in the most complex environments is still lacking. Moreover, most of the developed methods are computationally expensive and require high-speed processors and high-spatial-resolution cameras. Recent studies of the insect visual system and gaze control suggest that an effective, real-time and robust bio-inspired method can solve the visual object tracking problem.

Therefore, the expected outcome of this project is a ground robotic platform that can autonomously detect and track small moving objects in the most complex environments. Moreover, the project aims not only to contribute to active research areas that require robust tracking algorithms but also to raise new questions in physiology. A hardware implementation of the proposed tracking algorithm can reveal the limits of the underlying systems for real-world applications and raise new questions for investigating the solutions that have evolved in the insect neural system.

REFERENCES

Bibby, C., & Reid, I., 2010. Real-time tracking of multiple occluding objects using level sets. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1307-1314.

Bibby, C., & Reid, I., 2008. Robust real-time visual

tracking using pixel-wise posteriors. In Computer

Vision–ECCV, Springer Berlin Heidelberg, 831-844.

Birchfield, S.T., & Rangarajan, S., 2005. Spatiograms

versus histograms for region-based tracking. In IEEE

Computer Society Conference on Computer Vision

and Pattern Recognition, 2: 1158-1163.

Boykov, Y., & Huttenlocher, D.P., 2000. Adaptive

Bayesian recognition in tracking rigid objects. In IEEE

Conference on Computer Vision and Pattern

Recognition, 2: 697-704.

Bradshaw, K.J., McLauchlan, P.F., Reid, I.D., & Murray,

D.W., 1994. Saccade and pursuit on an active head/eye

platform. Image and Vision Computing, 12(3): 155-163.

Cannons, K., 2008. A review of visual tracking. Technical Report CSE-2008-07, Department of Computer Science and Engineering, York University, Toronto, Canada.

Cannons, K., & Wildes, R., 2007. Spatiotemporal oriented energy features for visual tracking. In Computer Vision–ACCV, Springer Berlin Heidelberg, 532-543.

Collett, T.S., & Land, M.F., 1975. Visual control of flight behaviour in the hoverfly Syritta pipiens L. Journal of Comparative Physiology, 99(1): 1-66.

Comaniciu, D., Ramesh, V., & Meer, P., 2000. Real-time tracking of non-rigid objects using mean shift. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2: 142-149.

Coombe, P.E., Srinivasan, M.V., & Guy, R.G., 1989. Are the large monopolar cells of the insect lamina on the optomotor pathway? Journal of Comparative Physiology A: Neuroethology, Sensory, Neural, and Behavioral Physiology, 166(1): 23-35.

Corbet, P.S., 1999. Dragonflies: Behavior and Ecology of Odonata. Cornell University Press, Ithaca.

Dunbier, J.R., Wiederman, S.D., Shoemaker, P.A., & O'Carroll, D.C., 2012. Facilitation of dragonfly target-detecting neurons by slow moving features on continuous paths. Frontiers in Neural Circuits, 6: 79.

Dunbier, J.R., Wiederman, S.D., Shoemaker, P.A., & O'Carroll, D.C., 2011. Modelling the temporal response properties of an insect small target motion detector. In IEEE 7th International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), 125-130.

Gilbert, C., & Strausfeld, N.J., 1991. The functional organization of male-specific visual neurons in flies. Journal of Comparative Physiology A, 169(4): 395-411.

Halupka, K.J., Wiederman, S.D., Cazzolato, B.S., & O'Carroll, D.C., 2011. Discrete implementation of biologically inspired image processing for target detection. In Seventh International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), 143-148.

Jonk, A., van den Boomgaard, R., & Smeulders, A.W., 2001. A line tracker. Internal ISIS report, University of Amsterdam.

Juusola, M., Kouvalainen, E., Järvilehto, M., & Weckström, M., 1994. Contrast gain, signal-to-noise ratio, and linearity in light-adapted blowfly photoreceptors. The Journal of General Physiology, 104(3): 593-621.


Kalal, Z., Mikolajczyk, K., & Matas, J., 2012. Tracking-Learning-Detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 34(7): 1409-1422.

Land, M.F., & Collett, T.S., 1974. Chasing behaviour of houseflies (Fannia canicularis). Journal of Comparative Physiology, 89(4): 331-357.

Laughlin, S.B., 1994. Matching coding, circuits, cells, and molecules to signals: general principles of retinal design in the fly's eye. Progress in Retinal and Eye Research, 13(1): 165-196.

Leng, J., & Wang, H., 2004. Tracking as recognition: a stable 3D tracking framework. In 8th IEEE Conference on Control, Automation, Robotics and Vision, 3: 2303-2307.

Lepetit, V., Lagger, P., & Fua, P., 2005. Randomized trees for real-time keypoint recognition. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2: 775-781.

Li, B., & Chellappa, R., 2000. Simultaneous tracking and verification via sequential posterior estimation. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2: 110-117.

Li, K., Miller, E.D., Weiss, L.E., Campbell, P.G., & Kanade, T., 2006. Online tracking of migrating and proliferating cells imaged with phase-contrast microscopy. In IEEE Conference on Computer Vision and Pattern Recognition Workshop, 65.

Li, P., Zhang, T., & Pece, A.E., 2003. Visual contour tracking based on particle filters. Image and Vision Computing, 21(1): 111-123.

Lu, N., Wang, J., Wu, Q.H., & Yang, L., 2008. An improved motion detection method for real-time surveillance. IAENG International Journal of Computer Science, 35(1): 1-10.

Mansouri, A.R., 2002. Region tracking via level set PDEs without motion computation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(7): 947-961.

Mörwald, T., Zillich, M., & Vincze, M., 2009. Edge tracking of textured objects with a recursive particle filter. In Proceedings of the Graphicon.

Nordström, K., Bolzon, D.M., & O'Carroll, D.C., 2011. Spatial facilitation by a high-performance dragonfly target-detecting neuron. Biology Letters, 7(4): 588-592.

O'Carroll, D.C., 1993. Feature-detecting neurons in dragonflies. Nature, 362: 541-543.

Olberg, R.M., Worthington, A.H., & Venator, K.R., 2000. Prey pursuit and interception in dragonflies. Journal of Comparative Physiology A, 186(2): 155-162.

Paragios, N., & Deriche, R., 2000. Geodesic active contours and level sets for the detection and tracking of moving objects. IEEE Transactions on Pattern Analysis and Machine Intelligence, 22(3): 266-280.

Piccardi, M., 2004. Background subtraction techniques: a review. In IEEE International Conference on Systems, Man and Cybernetics, 4: 3099-3104.

Ren, Y., Chua, C.S., & Ho, Y.K., 2003. Motion detection with nonstationary background. Machine Vision and Applications, 13(5-6): 332-343.

Rui, Y., & Chen, Y., 2001. Better proposal distributions: Object tracking using unscented particle filter. In Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2: 786-793.

Shafique, K., & Shah, M., 2003. A non-iterative greedy algorithm for multi-frame point correspondence. In IEEE International Conference on Computer Vision, 110-115.

Srinivasan, M.V., & Guy, R.G., 1990. Spectral properties of movement perception in the dronefly Eristalis. Journal of Comparative Physiology A, 166(3): 287-295.

Stavenga, D.G., 2003. Angular and spectral sensitivity of fly photoreceptors. I. Integrated facet lens and rhabdomere optics. Journal of Comparative Physiology A, 189(1): 1-17.

Van Hateren, J.H., & Schilstra, C., 1999. Blowfly flight and optic flow. II. Head movements during flight. Journal of Experimental Biology, 202(11): 1491-1500.

Veenman, C.J., Reinders, M.J.T., & Backer, E., 2001. Resolving motion correspondence for densely moving points. IEEE Transactions on Pattern Analysis and Machine Intelligence, 23(1): 54-72.

Wachenfeld, A., 1994. Elektrophysiologische Untersuchungen und funktionelle Charakterisierung männchenspezifischer visueller Interneurone der Schmeissfliege Calliphora erythrocephala. Doctoral dissertation, Universität Köln.

Wehrhahn, C., 1979. Sex-specific differences in the chasing behaviour of houseflies (Musca). Biological Cybernetics, 32(4): 239-241.

Wiederman, S.D., & O'Carroll, D.C., 2013. Selective attention in an insect visual neuron. Current Biology, 23: 156-161.

Wiederman, S.D., Shoemaker, P.A., & O'Carroll, D.C., 2008. A model for the detection of moving targets in visual clutter inspired by insect physiology. PLoS ONE, 3(7): 1-11.

Yi, Z., & Liangzhong, F., 2010. Moving object detection based on running average background and temporal difference. In IEEE International Conference on Intelligent Systems and Knowledge Engineering (ISKE), 270-272.

Yilmaz, A., Javed, O., & Shah, M., 2006. Object tracking: A survey. ACM Computing Surveys (CSUR), 38(4): 13.

Yilmaz, A., Li, X., & Shah, M., 2004. Contour-based object tracking with occlusion handling in video acquired using mobile cameras. IEEE Transactions on Pattern Analysis and Machine Intelligence, 26(11): 1531-1536.

Yin, Z., & Collins, R., 2007. Belief propagation in a 3D spatio-temporal MRF for moving object detection. In IEEE Conference on Computer Vision and Pattern Recognition, 1-8.

Zelek, J.S., 2002. Bayesian real-time optical flow. In Vision Interface, 266-273.

Zhang, Z., & Faugeras, O.D., 1992. Three-dimensional motion computation and object segmentation in a long sequence of stereo frames. International Journal of Computer Vision, 7(3): 211-241.
