Task Specification by Demonstration based on the Framework for

Physical Interaction Approach

Angel P. del Pobil

1

and Mario Prats

2

1

Robotic Intelligence Lab, Jaume-I, University, Castellón, Spain

2

Google, Mountain View, CA 94043, U.S.A.

Abstract: We present a new method for building an abstract task representation from a single human-guided

demonstration. We call it the Specification by Demonstration Approach and it is based on our Framework

for Physical Interaction (FPI). Guided by a human instructor, a robot extracts a set of key task references

and relates them to a visual model of the object. A physical interaction task representation is built and stored

for its future use. The robot makes use of visual and force feedback both during the demonstration and in

future autonomous operation. Some experiments are reported.

1 INTRODUCTION

Future robot companions will have to work in

human spaces and deal with objects that they have

never seen before. Many of these objects will be

home appliances such as diswashers, washing

machines, TV sets, etc. that need a specific

interaction procedure for its use. Therefore, methods

for teaching physical interaction tasks are needed.

Most approaches to Programming by Demonstration

(PbD) focus on task representations at the joint level

(Calinon, 2009), which cannot easily adapt to wide

variations in the working scenario. In order to solve

this problem, other approaches focus on abstract task

representations that are independent of the robot

configuration.

Most of these works are based on qualitative

descriptions of the robot environment, e.g. trying to

reach a desired pose of the objects relative to each

other, mostly for applications involving pick and

place actions (Ekvall and Kragic, 2005). In this work

we focus on building abstract task representations

for interacting with home appliances through vision

and force feedback. We introduce the Specification

by Demonstration approach, based on our previous

work on a framework for specification of physical

interaction tasks (FPI) (Prats et al., 2013). The main

idea is to automatically build an abstract robot-

independent representation of the task from a single

user demonstration. First, the user introduces a new

object to the robot and indicates a visual reference

for its localization. Then, the user shows a new task

on this object to the robot, by manually guiding the

robot hand. After that, the robot reproduces the same

motion by its own, and the user validates. Then, a

physical interaction task specification is built from

the position, vision and force feedback logged

during the teaching process. This abstract

information is structured in an XML format and

stored in a database for its future use.

2 HUMAN-GUIDED TASK

SPECIFICATION

The following steps are performed for showing a

new task to the robot:

1) First, the user indicates the name of the object.

If the object already exists in the database, the robot

loads the tasks that have been already specified and

the visual reference used for tracking the object. The

user has the option to add a new task, modify an

existing task, or specify a visual reference. The

visual reference is currently specified by clicking on

four points of a rectangular region with clearly

visible edges. The robot then asks for the dimensions

of the rectangular patch. With this information, a

visual model is built and the patch pose is retrieved

and tracked using the Virtual Visual Servoing

method (Comport et al., 2004), (Sorribes et al.,

2010). By stereo visual processing, the need for

specifying the patch dimensions could be avoided.

2) For teaching a new task on an object, the user first

introduces the task name, and then guides the robot

hand

through the different steps of the task. This is

Del Pobil A. and Del Pobil A.

Task Specification by Demonstration based on the Framework for Physical Interaction Approach.

DOI: 10.5220/0006813000010001

In Proceedings of the 11th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2014), pages 5-7

ISBN: 978-989-758-039-0

Copyright

c

2014 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

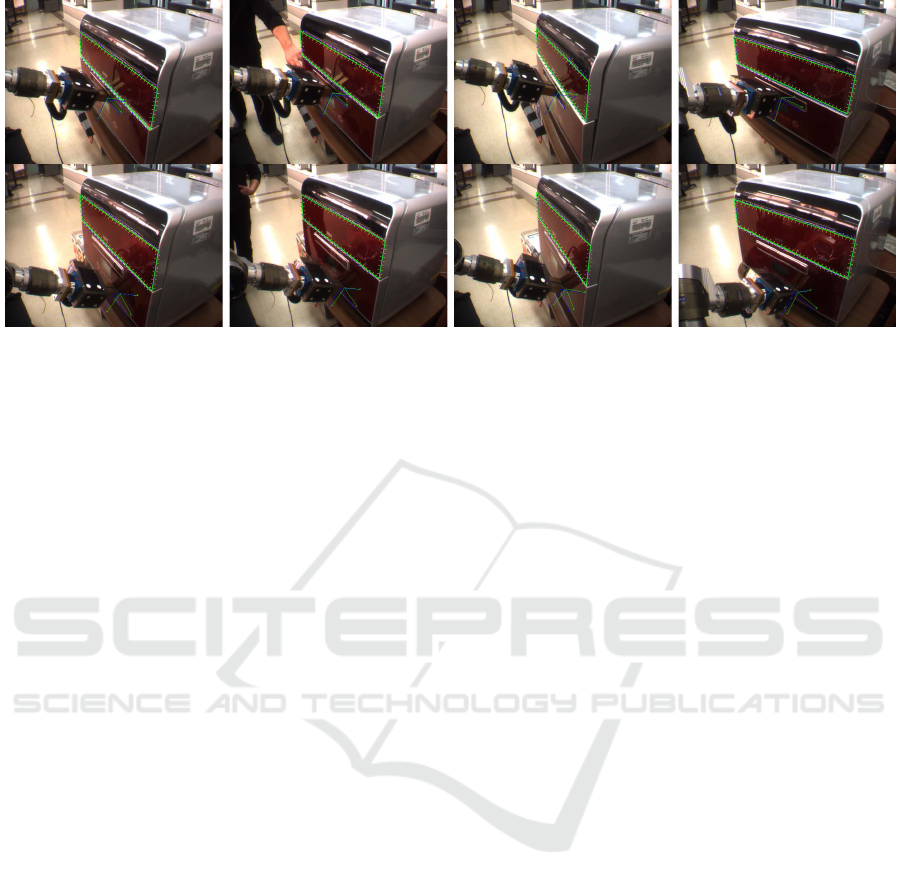

Figure 1: The mobile manipulator reproducing the task under different orientations of the object. The top row shows the

opening task, done by pushing on a large button. The bottom row shows the switch on task, that requires pushing a smaller

button. The images show only the moment in which the robot makes contact.

done thanks to a force sensor placed at the robot

wrist. During this step the robot stores the joint

trajectories. 3) After that, the robot tries on its own

and performs the task by reproducing exactly the

same joint trajectories while logging the end-effector

trajectory, the forces generated and the object pose.

We assume that the object pose does not change

between steps 2 and 3. The goal of this step is to log

the interaction forces, which is not possible in step 2,

due to the fact that the human guidance introduces

forces that cannot be distinguished from the

interaction forces. 4) From the end-effector

trajectory, forces and object pose, a set of frames

and velocity/force references relative to the object

pose are set, and a XML file describing the task is

generated.

Everything is computed relative to the visual

reference pose, and, therefore, the same task can be

always reproduced as long as the visual reference

has been localized. The task is specified according to

our FPI framework (Prats et al., 2013).

3 TASK REPRODUCTION

When the user asks the robot to perform a task on a

object, the corresponding XML file is loaded, and

the following steps are performed:

1) The robot first loads the visual reference model

and asks for a pose initialization. Currently the

user has to click on the visual reference,

although we are making automate this process

automatic. After the visual reference is

initialized, its edges are tracked and the full pose

is continuously estimated.

2) After the pose is estimated, the robot goes to the

contact point (relative to the visual reference)

and applies the required force.

Figure 1 shows four different reproductions of the

two tasks under different poses of the dishwasher.

4 CONCLUSION

This paper describes our work in progress towards

the automatic specification of physical interaction

tasks from a single demonstration assisted by a

human. The main application concerns showing our

future robot companions how to interact with

household appliances in a manner that can be easily

transferred to other robots. We adopt an approach

similar to what is done for showing this kind of

interaction to other humans: first we show how to

perform the task, then we let the other do the task

without our intervention and either approve it or

show the task again. The proposed approach relies

on our previously published physical interaction

framework, that allows to specify grasping and

interaction tasks in a robot-independent manner.

Therefore, tasks shown to one robot can be reused

by other robots, without the need of showing the

task again. This work is still preliminary and needs

further development, specially concerning reducing

the instructor intervention in aspects like reporting

the visual reference dimensions or making the pose

initialization. In addition, further experiments have

to be performed with different objects and

considering tasks that require several sequential

actions.

ACKNOWLEDGEMENTS

Support for this research was provided in part by

Ministerio de Economía y Competitividad

(DPI2011-27846), by Generalitat Valenciana

(PROMETEO/2009/052) and by Universitat Jaume I

(P1-1B2011-54). The authors wish to thank Prof.

Sukhan Lee for many helpful discussions and for

granting access to the Intelligent System Research

Center (Sungkyunkwan University, Korea) for the

experiments shown in Fig. 1.

REFERENCES

S. Calinon. Robot Programming by Demonstration: A

Probabilistic Approach. EPFL/CRC Press, 2009.

A. I. Comport, E. Marchand, and F. Chaumette. Robust

model-based tracking for robot vision. In IEEE/RSJ

Int. Conf. on Intelligent Robots and Systems, IROS04,

pages 692–697, 2004.

S. Ekvall and D. Kragic. Grasp recognition for

programming by demonstration. In IEEE International

Conference on Robotics and Automation, pages 748–

753, 2005.

M. Prats, A.P. del Pobil and P. J. Sanz, , Robot Physical

Interaction through the combination of Vision, Tactile

and Force Feedback , Springer Tracts in Advanced

Robotics, Vol. 84, Springer, Berlin, 2013.

J. Sorribes, M. Prats, and A. Morales. Visual robot hand

tracking based on articulated 3d models for grasping.

In IEEE International Conference on Robotics and

Automation, Anchorage, AK, May 2010.

BRIEF BIOGRAPHY

Angel Pasqual del Pobil is Professor of Computer

Science and Artificial Intelligence at Jaume I

University (Spain), founder director of the UJI

Robotic Intelligence Laboratory, and a Visiting

Professor at Sungkyungkwan University (Korea). He

holds a B.S. in Physics (Electronics, 1986) and a

Ph.D. in Engineering (Robotics, 1991), both from

the University of Navarra. He has been Co-Chair of

two Technical Committees of the IEEE Robotics and

Automation Society and is a member of the

Governing Board of the Intelligent Autonomous

Systems (IAS) Society and EURON. He has over

230 publications, including 11 books the last two

published recently by Springer: Robot Physical

Interaction through the combination of Vision,

Tactile and Force Feedback (2013) and Robust

Motion Detection in Real-life Scenarios (2012).

Prof. del Pobil was co-organizer some 40 workshops

and tutorials at ICRA, IROS, RSS, HRI and other

major conferences.. He was Program Co-Chair of

the 11th International Conference on Industrial and

Engineering Applications of Artificial Intelligence,

General Chair of five editions of the International

Conference on Artificial Intelligence and Soft

Computing (2004-2008) and Program Chair of

Adaptive Behaviour 2014. He is Associate Editor for

ICRA (2009-2013) and IROS (2007-2013) and has

served on the program committees of over 115

international conferences, such as IJCAI, ICPR,

ICRA, IROS, ICINCO, IAS, ICAR, etc. He has been

involved in robotics research for the last 27 years,

his past and present research interests include:

humanoid robots, service robotics, internet robots,

motion planning, mobile manipulation, visually-

guided grasping, robot perception, multimodal

sensorimotor transformations, robot physical and

human interaction, visual servoing, robot learning,

developmental robotics, and the interplay between

neurobiology and robotics. Professor del Pobil has

been invited speaker of 56 tutorials, plenary talks,

and seminars in 14 countries. He serves as associate

or guest editor for eight journals, and as expert for

research evaluation at the European Commission. He

has been Principal Investigator of 28 research

projects. Recent projects at the Robotic Intelligence

Lab funded by the European Commission include:

FP6 GUARDIANS (Group of Unmanned Assistant

Robots Deployed In Aggregative Navigation

supported by Scent detection), FP7 EYESHOTS

(Heterogeneous 3-D Perception Across Visual

Fragments), and FP7 GRASP (Emergence of

Cognitive Grasping through Emulation,

Introspection, and Surprise).