Automatic Tooth Identification in Dental Panoramic Images with Atlas-based Models

Selma Guzel¹, Ayse Betul Oktay² and Kadir Tufan³

¹Department of Computer Engineering, Gebze Institute of Technology, 41400, Kocaeli, Turkey
²Department of Computer Engineering, Istanbul Medeniyet University, 34700, Istanbul, Turkey
³Department of Computer Engineering, Fatih University, 34500, Istanbul, Turkey

Keywords:

Tooth detection, Tooth labeling, Haar, SVM, Atlas-based Model.

Abstract:

After catastrophes and mass disasters, accurate and efficient identification of decedents requires an automatic system that relies on strong biometrics. In this paper, we present an automatic tooth detection and labeling system for panoramic dental radiographs. Although our ultimate objective is to identify decedents by comparing postmortem and antemortem dental radiographs, this paper covers only the tooth detection and tooth labeling stages. In the system, the tooth regions are first determined and the detection module runs on each region individually. Using a sliding window technique, Haar features are extracted from each window and an SVM classifies each window as tooth or non-tooth. The labeling module then labels the candidate tooth positions found by the SVM with an atlas-based model, and the final tooth positions are inferred. The novelty of our system is the combination of the atlas-based model and the SVM within a single framework. We tested our system on 35 panoramic images and the results are promising.

1 INTRODUCTION

Decedent identification after catastrophes is crucial for many reasons, including relieving the family's distress, issuing a death certificate for inheritance, and insurance. Using dental panoramic radiographs (see Figure 1(a)) for decedent identification overcomes the limitations of other biometrics, such as DNA and fingerprints, owing to the durable structure of teeth (Sen, 2010). However, manual identification takes a long time. Moreover, if changeable characteristics are used, the accuracy rate may decrease (Zhou and Abdel-Mottaleb, 2005). Therefore, an automatic dental identification system is important for fast and reliable decedent identification.

Many studies in the literature (Lin and Lai, 2009; Mahoor and Abdel-Mottaleb, 2005; Pushparaj et al., 2013) address identification based on dental radiographs. The Automated Dental Identification System in (Abdel-Mottaleb et al., 2003) isolates the teeth using the integral intensity projection method and is regarded as pioneering in terms of its tooth isolation approach. In (Zhou and Abdel-Mottaleb, 2005), integral intensity projection is first used to determine the initial contours, and the snake method is then employed to isolate the teeth. These two studies are tested on bitewing images. In (Jain et al., 2003), the same method as in (Zhou and Abdel-Mottaleb, 2005) is used for tooth isolation before applying the Bayesian rule to determine the tooth contours. It is tested on both bitewing and panoramic images, but the system is semi-automatic. The system in (Jain et al., 2003) eliminates inaccurate segmentation lines using the dental pulp; the dental pulp, rather than the gaps between the teeth, is also used in (Frejlichowski and Wanat, 2011) to separate adjacent teeth. In (Lin et al., 2010), an SVM classifier uses several geometrical tooth features to classify a tooth, and tooth identification is completed by labeling the teeth according to a particular pattern. This system is tested only on bitewing images. In (Jain and Chen, 2005), a fusion of three SVM classifiers is used for tooth classification and a Markov chain model is used for labeling. That system is tested on only a few panoramic dental radiographs.

In this paper, we propose a novel tooth identifi-

cation system based on machine learning and atlas-

based models (Guzel, 2014). We combine the ap-

pearance information of teeth in panoramic images

with the geometrical information under the atlas-

based model. The appearance of teeth are extracted

with Haar descriptors (Viola and Jones, 2001) and

136

Guzel S., Betul Oktay A. and Tufan K..

Automatic Tooth Identification in Dental Panoramic Images with Atlas-based Models.

DOI: 10.5220/0005179701360141

In Proceedings of the International Conference on Pattern Recognition Applications and Methods (ICPRAM-2015), pages 136-141

ISBN: 978-989-758-077-2

Copyright

c

2015 SCITEPRESS (Science and Technology Publications, Lda.)

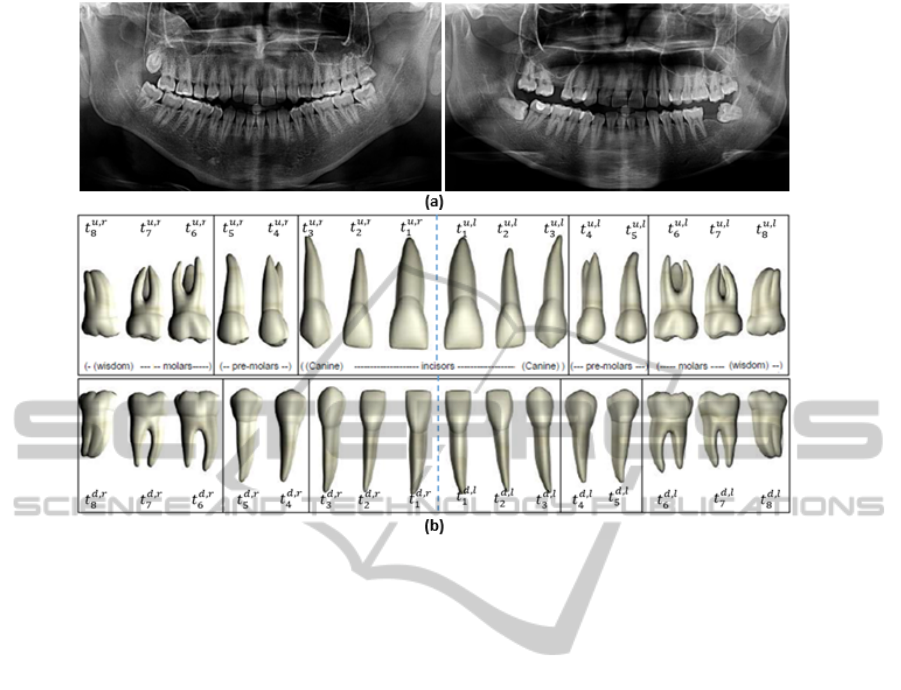

Figure 1: (a) Examples of dental panoramic radiographs, (b) layout of the teeth where t

j,s

i

represents the i

th

tooth on the jaw

j ∈ {up, down} and the side s ∈ {right, le ft}.

the Candidate Tooth Positions (CTP) are found by the

SVM. The final tooth labels are determined accord-

ing to the CTP and their spatial relationship using an

atlas-based framework.

Our method has several advantages. First, the candidate teeth are detected with textural descriptors, without requiring a template for each tooth. Second, the candidate teeth found from local appearance are efficiently combined with atlas-based models that are constructed from geometric information about the teeth. In addition, our system may work on intra-oral X-ray images with small modifications.

The paper is organized as follows. The framework of the proposed system is introduced in Section 2. Section 3 presents the detection module and Section 4 presents the labeling module. Section 5 evaluates the experimental results and Section 6 concludes the paper.

2 THE FRAMEWORK OF THE PROPOSED SYSTEM

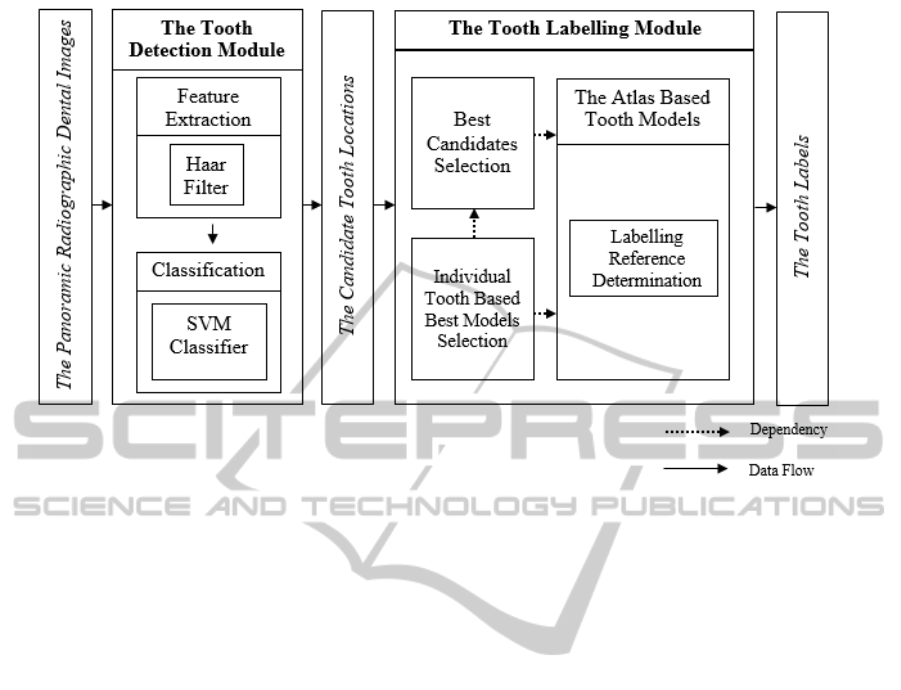

Our system consists of two modules: the candidate tooth detection module and the labeling module (Figure 2). In the candidate tooth detection module, the SVM detects the CTP based on Haar features. Then, in the labeling module, the optimal tooth positions are identified using the atlas-based modeling approach.

3 TOOTH DETECTION MODULE

Each tooth has unique shape and appearance characteristics. Most of the studies in the literature (Jain et al., 2003; Abdel-Mottaleb et al., 2003; Zhou and Abdel-Mottaleb, 2005) use the intensity values directly, via histogram projection, to detect the teeth. However, in panoramic images the gaps between the teeth may disappear and occlusions may occur, because partial X-ray images taken around the curved jaw are stitched into a single 2-D image (Frejlichowski and Wanat, 2011). Therefore, instead of detecting the teeth from intensity-change information, we propose using textural and intensity descriptors together, without requiring a model for each tooth. Note that the intensity-based techniques (Abdel-Mottaleb et al., 2003; Zhou and Abdel-Mottaleb, 2005) use local image intensity information between neighboring teeth, whereas our technique uses non-local information, including the intensity and texture of the teeth themselves.

Figure 2: The proposed tooth identification system.

The Haar descriptors are used for feature extraction. Haar features are similar to Haar basis functions (Papageorgiou et al., 1998) and extract intensity and texture features effectively.
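To illustrate why Haar features are cheap to evaluate, the sketch below builds an integral image and computes one two-rectangle feature from it in constant time. It is a minimal pure-Python sketch, not the system's code: the paper uses the Haar filters of OpenCV, and the image representation (lists of grayscale rows) and the particular left-minus-right feature layout are assumptions chosen for illustration.

```python
# Minimal sketch of Haar-like features on an integral image.
# Assumptions (not from the paper): images are lists of lists of grayscale
# values, and the two-rectangle layout below is just one example feature.

def integral_image(img):
    """ii[y][x] holds the sum of img[0..y-1][0..x-1]; row 0 and column 0 are zero padding."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_two_rect(ii, x, y, w, h):
    """Left half minus right half (w must be even); positive when the left half is brighter."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


if __name__ == "__main__":
    img = [[9, 9, 1, 1] for _ in range(3)]
    ii = integral_image(img)
    print(rect_sum(ii, 0, 0, 4, 3))       # 60
    print(haar_two_rect(ii, 0, 0, 4, 3))  # 54 - 6 = 48
```

A practical detector would evaluate many such features at different positions, sizes, and orientations inside each window and concatenate them into the feature vector passed to the classifier.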

There are 32 teeth in a normal adult mouth: 8 incisors, 4 canines, 8 premolars, and 12 molars. Let $t = \{t^{j,s}_1, t^{j,s}_2, \ldots, t^{j,s}_8\}$ be the tooth labels on a jaw, where $j \in \{\text{up}, \text{down}\}$ is the upper or lower jaw and $s \in \{\text{left}, \text{right}\}$ is the side of the mouth. We divide the teeth on one side of the jaw into 3 subsets: $t^{j,s}_m = \{t^{j,s}_1, t^{j,s}_2, t^{j,s}_3\}$ are the molar teeth on side $s$ of jaw $j$, $t^{j,s}_{pm} = \{t^{j,s}_4, t^{j,s}_5\}$ are the premolar teeth on side $s$ of jaw $j$, and $t^{j,s}_i = \{t^{j,s}_6, t^{j,s}_7, t^{j,s}_8\}$ are the incisor and canine teeth on side $s$ of jaw $j$ (Figure 1b).
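The grouping above can be expressed as a small lookup. The sketch below is a hypothetical helper (the names `subset_of` and `labels` do not come from the paper) that maps a per-quadrant index $i$ to its subset and checks that the four quadrants reproduce the counts stated above: 12 molars, 8 premolars, and 12 canines and incisors.

```python
from collections import Counter

# Hypothetical helper: per-quadrant tooth index i (1..8) -> subset trained by its own SVM,
# following the grouping in the text: 1-3 molars, 4-5 premolars, 6-8 canine and incisors.
def subset_of(i):
    if not 1 <= i <= 8:
        raise ValueError("tooth index must be in 1..8")
    if i <= 3:
        return "m"   # molars
    if i <= 5:
        return "pm"  # premolars
    return "i"       # canine and incisors


# All 32 labels t_i^{j,s} as (jaw, side, index) tuples.
labels = [(j, s, i) for j in ("up", "down") for s in ("right", "left") for i in range(1, 9)]

if __name__ == "__main__":
    counts = Counter(subset_of(i) for _, _, i in labels)
    print(len(labels), dict(counts))  # 32 {'m': 12, 'pm': 8, 'i': 12}
```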

Each tooth subset $\{t^{j,s}_m, t^{j,s}_{pm}, t^{j,s}_i\}$ on a jaw $j$ is trained and tested separately with the SVM. For training, we use manually delineated tooth images as positive samples and non-tooth regions around the tooth locations as negative samples. In testing, we use a sliding window approach. For a pair $\{v, y\}$, let $v$ be the feature vector and $y \in \{0, 1\}$ be the class, where $y = 0$ is the non-tooth class and $y = 1$ is the tooth class. The windows classified as tooth ($y = 1$) are called the CTP, and they are used in the tooth labeling module.
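The test-time procedure can be sketched as follows, assuming a fixed window size and stride. `extract_features` and `classify` are placeholders for the Haar feature extractor and the trained SVM; the `stride` parameter, the toy mean-intensity feature, and the threshold classifier are hypothetical and exist only to make the sketch runnable.

```python
# Sketch of sliding-window detection that yields the Candidate Tooth Positions (CTP).
# Assumptions: `extract_features` stands in for the Haar feature extractor and
# `classify` for the trained SVM (returning y in {0, 1}); `stride` is a hypothetical
# parameter, since the paper does not state one.

def sliding_windows(img_w, img_h, win_w, win_h, stride):
    """Yield (x, y, w, h) for every window position that fits inside the image."""
    for y in range(0, img_h - win_h + 1, stride):
        for x in range(0, img_w - win_w + 1, stride):
            yield (x, y, win_w, win_h)


def candidate_tooth_positions(image, win_w, win_h, stride, extract_features, classify):
    """Return the windows classified as tooth (y = 1)."""
    img_h, img_w = len(image), len(image[0])
    ctp = []
    for win in sliding_windows(img_w, img_h, win_w, win_h, stride):
        v = extract_features(image, win)  # feature vector v
        if classify(v) == 1:              # y = 1: tooth class
            ctp.append(win)
    return ctp


# Toy stand-ins for illustration only (not Haar features and not an SVM).
def mean_intensity(image, win):
    x, y, w, h = win
    vals = [image[r][c] for r in range(y, y + h) for c in range(x, x + w)]
    return [sum(vals) / len(vals)]


def threshold_classifier(v):
    return 1 if v[0] > 5 else 0


if __name__ == "__main__":
    image = [[0, 0, 9, 9, 0, 0] for _ in range(4)]
    print(candidate_tooth_positions(image, 2, 4, 2, mean_intensity, threshold_classifier))
    # [(2, 0, 2, 4)]
```

When the stride is smaller than the window, one tooth usually yields several overlapping CTP; in the described system this redundancy is resolved by the labeling module, which keeps the candidate closest to each atlas node.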

4 LABELING MODULE

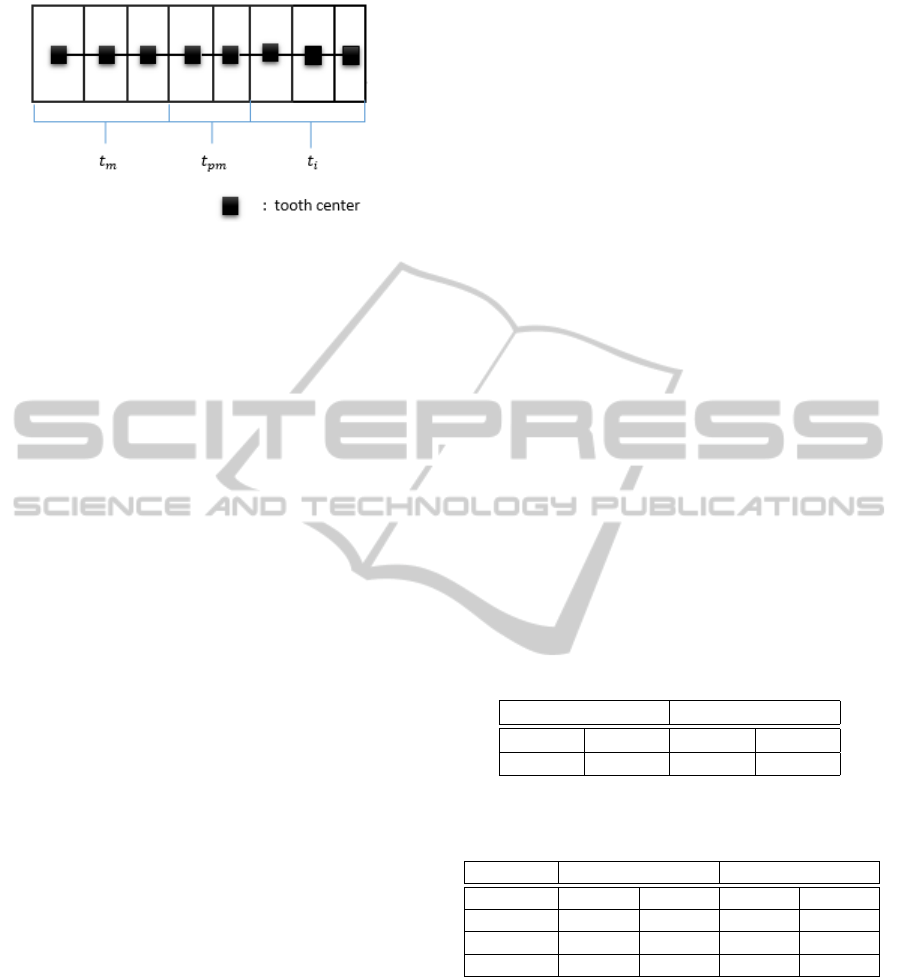

In order to infer the final positions and labels of the teeth, we use an atlas-based model. Let $A_k = \{a^{j,s}_1, a^{j,s}_2, \ldots, a^{j,s}_8\}$ be an atlas (Figure 3), where each node $a_i$ in the atlas represents a tooth $t^{j,s}_i$. We take the center of the mouth gap as the initial reference point for labeling when constructing the model. This is reasonable because, while the panoramic X-ray image is being taken, the patient's movement is restricted by the positioning components of the dental panoramic system (bite fork, forehead support, and chin rest); as a result, the order of the teeth in the image is preserved. We use the integral intensity projection method (Zhou and Abdel-Mottaleb, 2005) to find the center of the mouth gap.
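A minimal sketch of locating the mouth gap by integral intensity projection follows. It assumes that the gap between the jaws appears as the darkest row within a central band of the image; the `band` parameter and the use of the image midline as the horizontal coordinate of the gap center are illustrative assumptions, not details given in the paper.

```python
# Sketch of locating the mouth gap with an integral (horizontal) intensity projection.
# Assumptions: the gap between the jaws is the darkest image row inside a central
# band of rows (`band` is a hypothetical parameter that excludes dark image borders),
# and the horizontal position of the gap center is taken as the image midline.

def mouth_gap_center(image, band=(0.25, 0.75)):
    """Return an (x, y) estimate of the mouth-gap center."""
    h, w = len(image), len(image[0])
    lo, hi = int(h * band[0]), int(h * band[1])
    if hi <= lo:  # band too narrow for a tiny image: search all rows
        lo, hi = 0, h
    # Integral projection: one intensity sum per row of the band.
    projection = [sum(image[y]) for y in range(lo, hi)]
    y_gap = lo + min(range(len(projection)), key=projection.__getitem__)
    return (w // 2, y_gap)


if __name__ == "__main__":
    image = [[200] * 4 for _ in range(8)]
    image[0] = [0] * 4   # dark border row, outside the search band
    image[4] = [10] * 4  # dark gap between the jaws
    print(mouth_gap_center(image))  # (2, 4)
```

Restricting the search band matters in practice: without it, the dark border row in the example would be picked instead of the gap.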

Figure 3: The atlas-based model used for labeling.

Consider $\mathcal{A} = \{A_1, A_2, \ldots, A_n\}$ as the atlas set learned from the training set. Given the CTP, our objective is to infer the optimal atlas $A^* \in \mathcal{A}$ that best matches the geometrical information represented by the CTP. In order to find $A^*$, we first eliminate the inappropriate CTP according to the corresponding search space in the atlas-based models. After that, the appropriate candidates are labeled for each tooth model. Let the cost $g_c(l_k, a_k)$ represent the distance of the candidate tooth position $l_k$ to the corresponding tooth center $a_k$. Among the candidates $l_k$ with the same label, the one closest to the center $a_k$ of the corresponding tooth in the model, namely the one whose $g_c(l_k, a_k)$ is minimum, is selected as the best candidate $l^*_k$. In order to find the best tooth model $A^*$, our approach is to determine the best tooth model per tooth $t_k \in t^{j,s}_d$ in the search space. Let $\|a_{k-1}, a_k\|$ represent the distance between the centers of adjacent teeth in the atlas (the optimum distance) and let $\|l_{k-1}, l_k\|$ represent the distance between the corresponding CTP (the actual distance). The tooth cost $\mathrm{cost}(t_k)$ computes the difference between the optimum and the actual distance as

$$\mathrm{cost}(t_k) = \mathrm{abs}\left(\|a_{k-1}, a_k\| - \|l_{k-1}, l_k\|\right), \tag{1}$$

where abs is the absolute value function. The computation of $\mathrm{cost}(t_k)$ is repeated for all teeth $t_k \in t^{j,s}_d$ and for all atlas models $A_k \in \mathcal{A}$. For each tooth, the tooth costs computed according to the atlas models are summed to find the best model $A^*$ per tooth, and the teeth are labeled according to the atlas models selected for each tooth with the following equation:

$$A^* = \operatorname*{argmin}_{\mathrm{cost}^{j,s}_i} \sum \mathrm{cost}^{j,s}_i(t_k), \tag{2}$$

where $\mathrm{cost}^{j,s}_i$ represents the cost of tooth $t_i$ on jaw $j$ and side $s$.
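The selection procedure of Eqs. (1) and (2) can be sketched as below. This is one reading of the text under stated assumptions: each atlas is a dictionary from tooth index $k$ to the expected center $a_k$ relative to the mouth-gap reference point, the search space is a hypothetical circle of radius `radius` around each $a_k$, and the costs of one quadrant are summed per atlas before taking the minimum. The function names are illustrative, not the authors'.

```python
import math

# Sketch of the atlas-selection step, under stated assumptions:
# - an atlas maps tooth index k -> expected center a_k, relative to the mouth-gap reference;
# - candidates are absolute (x, y) CTP centers; `radius` is a hypothetical search-space size;
# - one reading of Eq. (2): costs are summed over the adjacent tooth pairs of one quadrant.

def dist(p, q):
    return math.hypot(p[0] - q[0], p[1] - q[1])


def best_candidates(atlas, candidates, ref, radius):
    """For each tooth k, keep the candidate l_k* minimizing g_c(l_k, a_k) within `radius`.
    Simplification: the same candidate may be chosen for two teeth."""
    chosen = {}
    for k, a_k in atlas.items():
        best, best_d = None, math.inf
        for cx, cy in candidates:
            l_k = (cx - ref[0], cy - ref[1])  # candidate relative to the reference point
            d = dist(l_k, a_k)                # g_c(l_k, a_k)
            if d <= radius and d < best_d:    # outside the search space -> eliminated
                best, best_d = l_k, d
        if best is not None:
            chosen[k] = best
    return chosen


def atlas_cost(atlas, chosen):
    """Sum of Eq. (1) over adjacent pairs (k-1, k) that both received a candidate."""
    total, pairs = 0.0, 0
    for k in sorted(atlas):
        if k - 1 in chosen and k in chosen:
            optimum = dist(atlas[k - 1], atlas[k])   # ||a_{k-1}, a_k||
            actual = dist(chosen[k - 1], chosen[k])  # ||l_{k-1}, l_k||
            total += abs(optimum - actual)
            pairs += 1
    return total, pairs


def select_atlas(atlases, candidates, ref, radius):
    """Return (index, cost) of the atlas with the lowest summed cost."""
    best_idx, best_cost = None, math.inf
    for idx, atlas in enumerate(atlases):
        cost, pairs = atlas_cost(atlas, best_candidates(atlas, candidates, ref, radius))
        if pairs > 0 and cost < best_cost:
            best_idx, best_cost = idx, cost
    return best_idx, best_cost


if __name__ == "__main__":
    narrow = {1: (-30, 0), 2: (-20, 0), 3: (-10, 0)}  # 10-pixel tooth spacing
    wide = {1: (-45, 0), 2: (-30, 0), 3: (-15, 0)}    # 15-pixel tooth spacing
    ctp = [(55, 50), (70, 50), (85, 50)]
    print(select_atlas([narrow, wide], ctp, ref=(100, 50), radius=8))  # (1, 0.0)
```

In the example the candidates are spaced 15 pixels apart, so the wide atlas matches exactly while the narrow one accumulates a cost of 15. Note that a plain sum favors atlases that match fewer adjacent pairs; dividing the total by `pairs` would be one way to compare atlases with different numbers of matched teeth.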

5 EXPERIMENTAL RESULTS

The proposed system is tested on a dataset of panoramic dental images. The images are taken from 35 different subjects over 18 years of age. The panoramic images include implants, dental work, and missing teeth. In the detection stage, the SVM is trained using 50 positive (tooth) and 100 negative (non-tooth) delineated image samples. The maximum size of the training tooth images is used as the window size.

Our software, written in C++, uses the Haar filters of the OpenCV library for feature extraction and the SVM implementation of the Waikato Environment for Knowledge Analysis (WEKA) (Hall et al., 2009) for classification.

The prediction accuracy of the detection module

is evaluated using the metric which calculates the rate

of the accurate predictions to all the predictions. In

order to measure the accuracy rate, we initially es-

tablish reference images for each test image in which

the contours of the teeth are marked using our system

according to the expert information. A prediction is

evaluated as accurate if a tooth in the reference im-

age is inside the corresponding window at least %70

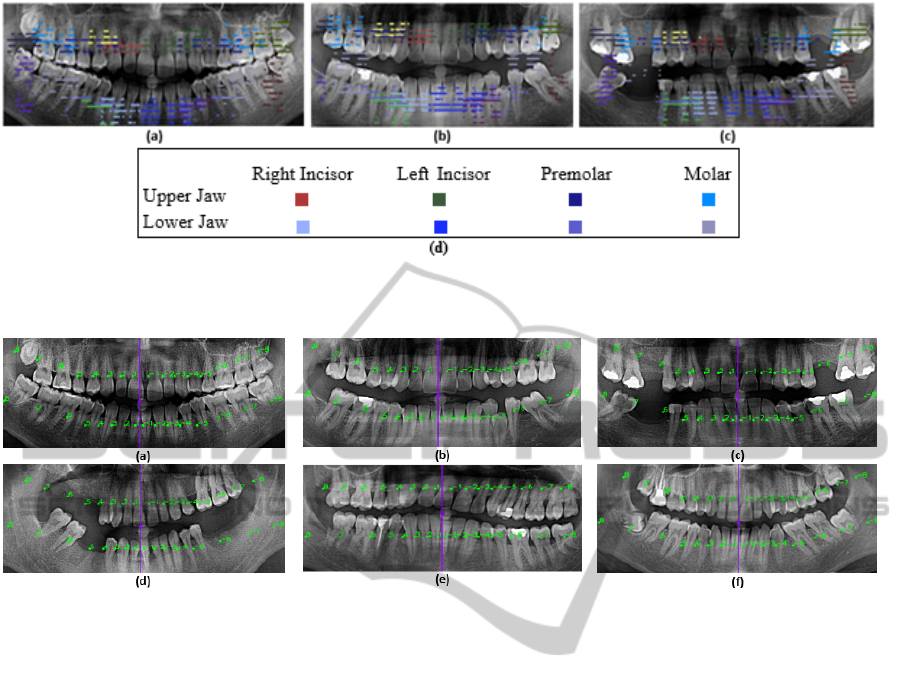

rate. Table 2 shows the numerical results and Figure

4 shows the visual results of the detection module.
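The detection metric can be written down directly. In this sketch, reference teeth are approximated by axis-aligned bounding boxes rather than the marked contours, and every prediction is assumed to be already paired with its corresponding reference tooth; both are simplifications for illustration.

```python
# Sketch of the detection accuracy rate: accurate predictions / all predictions.
# Assumptions: reference teeth are approximated by axis-aligned boxes (x, y, w, h)
# rather than the marked contours, and each prediction is already paired with its
# corresponding reference tooth (None if there is none).

def overlap_fraction(tooth, window):
    """Fraction of the tooth box's area that lies inside the window."""
    tx, ty, tw, th = tooth
    wx, wy, ww, wh = window
    ix = max(0, min(tx + tw, wx + ww) - max(tx, wx))
    iy = max(0, min(ty + th, wy + wh) - max(ty, wy))
    return (ix * iy) / (tw * th)


def detection_accuracy(pairs, threshold=0.7):
    """pairs: list of (predicted_window, reference_tooth_or_None)."""
    if not pairs:
        return 0.0
    accurate = sum(
        1 for window, tooth in pairs
        if tooth is not None and overlap_fraction(tooth, window) >= threshold
    )
    return accurate / len(pairs)


if __name__ == "__main__":
    tooth = (0, 0, 10, 10)
    pairs = [((2, 0, 10, 10), tooth),   # 80% of the tooth inside -> accurate
             ((5, 0, 10, 10), tooth),   # 50% inside -> not accurate
             ((40, 0, 10, 10), None)]   # no corresponding tooth -> not accurate
    print(detection_accuracy(pairs))    # 0.3333333333333333
```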

The accuracy rate of the detection module is low because teeth in different tooth sets look similar. Since the structure of the molars is very similar to that of the premolars, neighboring molars are also detected as premolars. In addition, the extracted features may not be representative enough to detect a particular tooth of a tooth set in a dental panoramic image. Although the accuracy is increased by the labeling module, more robust features may be needed to obtain better results. It should be emphasized that this module only tries to find the CTP, which limit the search space for the labeling module.

In the labeling module, we establish 7 atlas-based models, taking the minimum and maximum tooth widths in the training set into account. We evaluate the labeling module using a metric similar to that of the detection module; however, in this module a prediction is accepted as accurate if the center of the predicted window is inside the corresponding reference tooth contour. The numerical and visual results of the labeling module are presented in Table 1 and Figure 5, respectively.
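The labeling criterion reduces to a point-in-polygon test on the window center. The sketch below uses the even-odd ray-casting test, which is an assumed choice since the paper does not state how the containment test is implemented; contours are assumed to be simple polygons given as vertex lists.

```python
# Sketch of the labeling accuracy criterion: a label is accurate if the center of the
# predicted window falls inside the reference tooth contour.
# Assumptions: contours are simple polygons given as (x, y) vertex lists, windows are
# (x, y, w, h), and the even-odd ray-casting test is used for point-in-polygon.

def point_in_polygon(pt, polygon):
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge crosses the horizontal line through pt
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:       # crossing lies to the right of pt
                inside = not inside
    return inside


def label_is_accurate(window, contour):
    x, y, w, h = window
    return point_in_polygon((x + w / 2, y + h / 2), contour)


def labeling_accuracy(pairs):
    """pairs: list of (predicted_window, reference_contour)."""
    if not pairs:
        return 0.0
    return sum(label_is_accurate(w, c) for w, c in pairs) / len(pairs)


if __name__ == "__main__":
    square = [(0, 0), (10, 0), (10, 10), (0, 10)]
    print(labeling_accuracy([((2, 2, 4, 4), square), ((12, 2, 4, 4), square)]))  # 0.5
```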

Table 1: The average accuracy rates of the tooth labeling module.

| | Upper Jaw, Right | Upper Jaw, Left | Lower Jaw, Right | Lower Jaw, Left |
|---|---|---|---|---|
| Accuracy | 0.813 | 0.808 | 0.835 | 0.800 |

Table 2: The average accuracy rates of the proposed tooth detection module.

| Tooth set | Upper Jaw, Right | Upper Jaw, Left | Lower Jaw, Right | Lower Jaw, Left |
|---|---|---|---|---|
| molar | 0.472 | 0.478 | 0.677 | 0.685 |
| premolar | 0.432 | 0.423 | 0.359 | 0.419 |
| incisor | 0.587 | 0.595 | 0.800 | 0.842 |

The labeling is based on (i) the candidate tooth locations, (ii) the mouth gap, (iii) the labeling model, and (iv) the labeling method. Therefore, the results may be improved if (i) the detection stage is improved, (ii) the image is enhanced before detection, (iii) more complex models, such as Markov chain graphical models, are used to represent the mouth and the teeth better, and (iv) the methods are extended so that both the global and the local relations between the teeth and the mouth are sufficiently considered. The results of the detection module are discussed above. In addition, instead of binary values, probability scores representing the degree of confidence may be produced for the candidate detections and used for labeling. Furthermore, different feature descriptors and classifiers may be fused to determine the most probable candidates.

Figure 4: (a-c) The visual detection results. Each color represents one tooth class $t^{j,s}_c$, where $c \in \{\text{molar}, \text{premolar}, \text{incisor}\}$, jaw $j \in \{\text{up}, \text{down}\}$, and side $s \in \{\text{right}, \text{left}\}$; (d) shows the colors and the corresponding tooth classes.

Figure 5: (a-f) The final labels determined by our system. In images (a-c) the final labels are accurately determined; however, in images (d-f) some of the labels are inaccurately determined because of inaccurate mouth gap prediction in (d) and (f) and obliquity in (e).

6 CONCLUSIONS

In this paper, we introduce a tooth identification system consisting of detection and labeling modules. The system first produces the CTP with an SVM classifier that uses Haar features. Labeling is then performed according to the optimal atlas-based model among the candidate models, which are constructed from geometrical information. The optimal model is selected by minimizing a cost function that compares the candidate locations with the expected locations. The results show that our algorithm is promising for detecting and labeling teeth in panoramic radiographic images.

As future work, we plan to improve both the detection and the labeling approaches by taking the global appearance of the panoramic image into account and by using more salient tooth features for detection. Moreover, the best candidates may be determined by fusing the results of several feature descriptors and classifiers, such that each component that detects a candidate increases that candidate's probability score. In summary, the system can be extended to produce more accurate results.

ACKNOWLEDGEMENTS

This work was supported by TUBITAK project 113E114, “Human Identification using Dental Radiographs as Biometrics”.

REFERENCES

Abdel-Mottaleb, M., Nomir, O., Nassar, D., Fahmy, G., and Ammar, H. (2003). Challenges of developing an automated dental identification system. In 2003 IEEE 46th Midwest Symposium on Circuits and Systems, volume 1, pages 411–414.


Frejlichowski, D. and Wanat, R. (2011). Extraction of teeth shapes from orthopantomograms for forensic human identification. In Real, P., Diaz-Pernil, D., Molina-Abril, H., Berciano, A., and Kropatsch, W., editors, Computer Analysis of Images and Patterns, volume 6855 of Lecture Notes in Computer Science, pages 65–72. Springer Berlin Heidelberg.

Guzel, S. (2014). Automatic tooth detection and labelling from panoramic radiographic images. Master’s thesis, Fatih University.

Hall, M., Frank, E., Holmes, G., Pfahringer, B., Reutemann, P., and Witten, I. H. (2009). The WEKA data mining software: an update. SIGKDD Explorations, 11(1):10–18.

Jain, A., Chen, H., and Minut, S. (2003). Dental biometrics: Human identification using dental radiographs. In Kittler, J. and Nixon, M., editors, Audio- and Video-Based Biometric Person Authentication, volume 2688 of Lecture Notes in Computer Science, pages 429–437. Springer Berlin Heidelberg.

Jain, A. K. and Chen, H. (2005). Registration of dental atlas to radiographs for human identification. In Jain, A. K. and Ratha, N. K., editors, Biometric Technology for Human Identification II, volume 5779 of Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series, pages 292–298.

Lin, P. and Lai, Y. (2009). An effective classification system for dental bitewing radiographs using entire tooth. In 2009 WRI Global Congress on Intelligent Systems (GCIS '09), volume 4, pages 369–373.

Lin, P., Lai, Y., and Huang, P. (2010). An effective classification and numbering system for dental bitewing radiographs using teeth region and contour information. Pattern Recognition, 43(4):1380–1392.

Mahoor, M. H. and Abdel-Mottaleb, M. (2005). Classification and numbering of teeth in dental bitewing images. Pattern Recognition, 38(4):577–586.

Papageorgiou, C., Oren, M., and Poggio, T. (1998). A general framework for object detection. In Sixth International Conference on Computer Vision (ICCV 1998), pages 555–562.

Pushparaj, V., Gurunathan, U., and Arumugam, B. (2013). An effective dental shape extraction algorithm using contour information and matching by Mahalanobis distance. Journal of Digital Imaging, 26(2):259–268.

Sen, D. R. (2010). Forensic Dentistry. CRC Press.

Viola, P. and Jones, M. (2001). Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), volume 1, pages I-511–I-518.

Zhou, J. and Abdel-Mottaleb, M. (2005). A content-based system for human identification based on bitewing dental X-ray images. Pattern Recognition, 38(11):2132–2142.
