Thompson Sampling in the Adaptive Linear Scalarized

Multi Objective Multi Armed Bandit

Saba Q. Yahyaa, Madalina M. Drugan and Bernard Manderick

Department of Computer Science, Vrije Universiteit Brussel, Pleinlaan 2, 1050 Brussels, Belgium

Keywords:

Multi-armed Bandit Problems, Multi-objective Optimization, Linear Scalarized Function, Scalarized Function

Set, Thompson Sampling Policy.

Abstract:

In the stochastic multi-objective multi-armed bandit (MOMAB), arms generate a vector of stochastic normal

rewards, one per objective, instead of a single scalar reward. As a result, there is not only one optimal arm,

but there is a set of optimal arms (Pareto front) using Pareto dominance relation. The goal of an agent is to

find the Pareto front. To find the optimal arms, the agent can use linear scalarization function that transforms

a multi-objective problem into a single problem by summing the weighted objectives. Selecting the weights is

crucial, since different weights will result in selecting a different optimum arm from the Pareto front. Usually,

a predefined weights set is used and this can be computational inefficient when different weights will optimize

the same Pareto optimal arm and arms in the Pareto front are not identified. In this paper, we propose a

number of techniques that adapt the weights on the fly in order to ameliorate the performance of the scalarized

MOMAB. We use genetic and adaptive scalarization functions from multi-objective optimization to generate

new weights. We propose to use Thompson sampling policy to select frequently the weights that identify new

arms on the Pareto front. We experimentally show that Thompson sampling improves the performance of the

genetic and adaptive scalarization functions. All the proposed techniques improves the performance of the

standard scalarized MOMAB with a fixed set of weights.

1 INTRODUCTION

Multi-Objective Optimization (MOO) problem with

conflicting objectives is present everywhere in the

real-world. For instance, in shipping firm, the con-

flicting objectives could be consist of the shipping

time and the cost. At the same time, shorten shipping

time is needed in order to improve customer satisfac-

tion, while also reducing the number of used ships to

reduce the operating cost. It is obvious that adding

more ships will reduce the needed shipping time but

will increase the operating cost. The goal of the MOO

with conflicted objectives is to tradeoff the conflicting

objectives. The Multi-Objective Multi-Armed Ban-

dit (MOMAB) problem (Drugan and Nowe, 2013; S.

Q. Yahyaa and Manderick, 2014b) is the simplest ap-

proach to representing the MOO problem.

MOMAB problem is a sequential stochastic learn-

ing problem. At each time step t, an agent pulls one

arm i from an available set of arms A and receives a

reward vector r

r

r

i

from the arm i with D dimensions

(or objectives) as feedback signal. The reward vec-

tor is drawn from a probability distribution vector,

for example from a normal probability distribution

N(µ

µ

µ

i

,σ

σ

σ

2

i

), where µ

µ

µ

i

is the true mean vector and σ

σ

σ

2

i

is

the covariance matrix parameters of the arm i. The

reward vector r

r

r

i

that the agent receives from the arm

i is independent from all other arms and independent

from the past reward vectors of the selected arm i.

Moreover, the mean vector of the arm i has indepen-

dent D distributions, i.e. σ

σ

σ

2

is a diagonal covariance

matrix. We assume that the true mean vector and co-

variance matrix of each arm i are unknown parame-

ters to the agent. Thus, by drawing each arm i, the

agent maintains estimations of the true mean vector

and the diagonal covariance matrix (or the variance

vector) which are known as

ˆ

µ

µ

µ

i

and

ˆ

σ

σ

σ

2

i

, respectively.

The MOMAB problem has a set of Pareto optimal

arms (Pareto front) A

∗

, that are incomparable, i.e. can

not be classified using a designed partial order rela-

tions (Zitzler and et al., 2002). The agent has to fig-

ure out the optimal arms to minimize the total Pareto

loss of not pulling the optimal arms. At each time

step t, the Pareto loss (or Pareto regret) is the distance

between the set mean of Pareto optimal arms and the

mean of the selected arm (Drugan and Nowe, 2013).

55

Yahyaa S., Drugan M. and Manderick B..

Thompson Sampling in the Adaptive Linear Scalarized Multi Objective Multi Armed Bandit.

DOI: 10.5220/0005184400550065

In Proceedings of the International Conference on Agents and Artificial Intelligence (ICAART-2015), pages 55-65

ISBN: 978-989-758-074-1

Copyright

c

2015 SCITEPRESS (Science and Technology Publications, Lda.)

Thus, the total Pareto regret is the cumulativesumma-

tion of the Pareto regret over t time steps.

The Pareto front A

∗

can be found for example,

by using linear scalarized function (f) (Eichfelder,

2008). Linear scalarized function is simple and in-

tuitive. Given a predefined set weight w

w

w, the linear

scalarized function f weighs each value of the mean

vector of an arm i, converts the multi-objective space

to a single-objective one by summing the weighted

mean values and selects the optimal arm i

∗

that has

the maximum scalarized function. However, solving

a multi-objective optimization problem means find-

ing the Pareto front A

∗

. Thus, we need various lin-

ear scalarized functions F

F

F, each scalarized function

f

s

, f

s

∈ F

F

F, s = 1,·· · ,S has a corresponding set of

weight w

w

w

s

, to generate the variety of elements belong-

ing to the Pareto front. The predefined total weight

setW

W

W, W

W

W = {w

w

w

1

,· ·· ,w

w

w

S

} is uniformly random spread

sampling in the weighted space (Das and Dennis,

1997). However, there is no guarantee that the to-

tal weight set W

W

W can find all the optimal arms in the

Pareto front A

∗

. To improve the performance of the

linear scalarized function (S. Q. Yahyaa and Mander-

ick, 2014a) have used Knowledge Gradient (KG) pol-

icy (I. O. Ryzhov and Frazier, 2011) in the MOMAB

problem, resulting Linear Scalarized Knowledge Gra-

dient Function (LS-KG-F).

In this paper, we improve the performance of

the linear scalarized knowledge gradient function LS-

KG-F by introducing techniques from multi-objective

optimization that redefine the weights for the weight

set w

w

w. We either generate a new weight set w

w

w by

using genetic operators that change the weights di-

rectly (Drugan, 2013) or adapt the weights by using

the arms in the Pareto front like in (J. Dubois-Lacoste

and Stutzle, 2011). We propose also the Thompson

sampling policy (Thompson, 1933) to select from the

total weight set W

W

W, the weight set w

w

w that identifies a

larger set of optimal arms from the Pareto front A

∗

.

The rest of the paper is organized as follows:

In Section 2 we introduce the multi-objective multi-

armed bandit problem. In Section 3 we present the

linear scalarized functions and the scalarized multi-

objective bandits algorithm. In Section 4 we intro-

duce algorithms to determine the weight set, the stan-

dard, the adaptive and the genetic algorithms. In

Section 5 we introduce the adaptive scalarized multi-

objective bandits algorithm that uses Thompson sam-

pling policy to select the appropriate weight set. In

Section 6, we describe the experiments set up fol-

lowed by experimental results. Finally, we conclude

and discuss future work.

2 MULTI OBJECTIVE MULTI

ARMED BANDITS PROBLEM

Let us consider the MOMABs problems with |A| ≥ 2

arms and with independent D objectives per arm. At

each time step t, the agent selects one arm i and

receives a reward vector r

r

r

i

. The reward vector r

r

r

i

is drawn from a corresponding normal probability

distribution N(µ

µ

µ

i

,σ

σ

σ

2

i

) with unknown mean parameter

vector µ

µ

µ

i

, µ

µ

µ

i

= [µ

1

i

,· ·· ,µ

D

i

]

T

and unknown variance

parameter vector σ

σ

σ

2

i

, σ

σ

σ

2

i

= [σ

2,1

i

,· ·· ,σ

2,D

i

]

T

, where T

is the transpose. Thus, by drawing each arm i, the

agent maintains estimate of the mean parameter vec-

tor

ˆ

µ

µ

µ

i

and the variance

ˆ

σ

σ

σ

2

i

parameter vector, and com-

putes the number of times N

i

arm i is drawn. The

agent updates the estimated mean ˆµ

d

i

, the estimated

variance

ˆ

σ

2,d

i

of the selected arm i in each objective

d,d ∈ D and the number of times N

i

arm i has been

selected as follows (Powell, 2007):

N

i+1

= N

i

+ 1 (1)

ˆµ

d

i+1

= (1−

1

N

i+1

) ˆµ

d

i

+

1

N

i+1

r

d

t+1

(2)

ˆ

σ

2,d

i+1

=

N

i+1

− 2

N

i+1

− 1

ˆ

σ

2,d

i

+

1

N

i+1

(r

d

t+1

− ˆµ

d

i

)

2

(3)

where N

i+1

is the updated number of times arm i has

been selected, ˆµ

d

i+1

is the updated estimated mean,

and

ˆ

σ

2,d

i+1

is the updated estimated variance of the arm

i in the objective d and r

d

t+1

is the observed reward of

the arm i in the objective d.

When the objectives are conflicting with one an-

other then the mean component µ

d

i

of arm i corre-

sponding with objective d, d ∈ D, can be better than

the component µ

d

j

of another arm j but worse if we

compare the components for another objective d

′

:

µ

d

i

> µ

d

j

but µ

d

′

i

< µ

d

′

j

for objectives d and d

′

, respec-

tively. The agent has a set of optimal arms (Pareto

front) A

∗

which can be found by the Pareto dominance

relation (or Pareto partial order relation).

The Pareto dominance relation finds the Pareto

front A

∗

directly in the multi-objectiveMO space (Zit-

zler and et al., 2002). It uses the following relations

between the mean vectors of two arms. We use i and

j to refer to the mean vector (estimated mean vector

or true mean vector) of arms i and j, respectively:

Arm i dominates or is better than j, i ≻ j, if there

exists at least one objective d for which i

d

≻ j

d

and

for all other objectives d

′

we have i

d

′

j

d

′

. Arm i is

incomparable with j, i k j, if and only if there exists

at least one objective d for which i

d

≻ j

d

and there

exists another objective d

′

for which i

d

′

≺ j

d

′

. Arm i

is not dominated by j, j ⊁ i, if and only if there exists

ICAART2015-InternationalConferenceonAgentsandArtificialIntelligence

56

at least one objective d for which j

d

≺ i

d

. This means

that either i ≻ j or i k j.

Using the above relations, Pareto front A

∗

,A

∗

⊂ A

be the set of arms that are not dominated by all other

arms. Moreover, the optimal arms in A

∗

are incompa-

rable with each other.

In the MOMAB, the agent has to find the Pareto

front A

∗

, therefore, the performance measure is the

Pareto regret (Drugan and Nowe, 2013). The Pareto

regret measure (R

Pareto

) measures the distance be-

tween a mean vector of an arm i that is pulled at

time step t and the Pareto front A

∗

. Pareto regret

R

Pareto

is calculated by finding firstly the virtual dis-

tance dis

∗

. The virtual distance dis

∗

is defined as

the minimum distance that is added to the mean

vector of the pulled arm µ

µ

µ

t

at time step t in each

objective to create a virtual mean vector µ

µ

µ

∗

t

, µ

µ

µ

∗

t

=

µ

µ

µ

t

+ ε

ε

ε

∗

that is incomparable with all the arms in

Pareto set A

∗

, i.e. µ

µ

µ

∗

t

||µ

µ

µ

i

∀

i∈A

∗

. Where ε

ε

ε

∗

is a vec-

tor, ε

ε

ε

∗

= [dis

∗,1

,· ·· ,dis

∗,D

]

T

. Then, the Pareto regret

R

Pareto

, R

Pareto

= dis(µ

µ

µ

t

,µ

µ

µ

∗

t

) = dis(ε

ε

ε

∗

,0

0

0) is the dis-

tance between the mean vector of the virtual arm µ

µ

µ

∗

t

and the mean vector of the pulled arm µ

µ

µ

t

at time step

t, where dis, dis(µ

µ

µ

t

,µ

µ

µ

∗

t

) = (

∑

D

d=1

(µ

∗,d

t

− µ

d

t

)

2

)

(1/2)

is

the Euclidean distance. Thus, the regret of the Pareto

front is 0 for optimal arms, i.e. the mean of the opti-

mal arm coincides itself.

3 THE SCALARIZED

MULTI-OBJECTIEVE BANDITS

Linear scalarization function converts the multi-

objective into single-objective optimization (Eich-

felder, 2008). However, solving a multi-objective op-

timization problem means finding the Pareto front A

∗

.

Thus, we need a set of scalarized functions F

F

F, F

F

F =

{ f

1

,· ·· , f

s

,· ·· , f

S

} to generate a variety of elements

belonging to the Pareto front A

∗

. Each scalarized

function f

s

, f

s

∈ F

F

F has a corresponding predefined

set of weight w

w

w

s

, w

w

w

s

∈ W

W

W, whereW

W

W = (w

w

w

1

,· ·· ,w

w

w

S

).

The linear scalarization function assigns to each

value of the mean vector of an arm i a weight w

d

and the result is the sum of these weighted mean

values. Given a predefined set of weights w

w

w

s

, w

w

w

s

=

(w

1

,· ·· ,w

D

) such that

∑

D

d=1

w

d

= 1, the linear scalar-

ized across mean vector is:

f

s

(µ

µ

µ

i

) = w

1

µ

1

i

+ ··· + w

D

µ

D

i

(4)

where f

s

(µ

µ

µ

i

) is a linear scalarized function s, s ∈ S

over the mean vector µ

µ

µ

i

of the arm i. After transform-

ing the multi-objective problem to a single-objective

problem, the linear scalarized function f

s

selects the

arm i

∗

f

s

that has the maximum linear scalarized func-

tion value:

i

∗

f

s

= argmax

1≤i≤A

f

s

(µ

µ

µ

i

)

The linear scalarization is very popular because of

its simplicity. However, it can not find all the optimal

arms in the Pareto front A

∗

(Das and Dennis, 1997).

To improve the performance of the linear scalar-

ized function, (S. Q. Yahyaa and Manderick, 2014b)

have extended Knowledge Gradient (KG) policy (I.

O. Ryzhov and Frazier, 2011) to the MOMAB prob-

lem, resulting linear scalarization function knowledge

gradient. (S. Q. Yahyaa and Manderick, 2014b) have

proposed two variants of linear scalarized KG, lin-

ear saclarized KG across arms (LS1-KG) and linear

saclarized KG across dimensions (LS2-KG). Since

LS1-KG performs better than LS2-KG, we will use

linear scalarized KG across arms LS1-KG.

The linear scalarized-KG across arms (LS1-

KG) converts the multi-objective estimated mean

ˆ

µ

µ

µ

i

,

ˆ

µ

µ

µ

i

= [ˆµ

1

i

,· ·· , ˆµ

D

i

]

T

and estimated variance

ˆ

σ

σ

σ

2

i

,

ˆ

σ

σ

σ

2

i

=

[

ˆ

σ

2,1

i

,· ·· ,

ˆ

σ

2,D

i

]

T

of each arm to one-objective, then

computes the corresponding bound (or term) ExpB

i

.

At each time step t, LS1-KG weighs both the esti-

mated mean vector

ˆ

µ

µ

µ

i

and estimated variance vector

ˆ

σ

σ

σ

2

i

of each arm i, converts the multi-objective vec-

tors to one-objective values by summing the elements

of each vector. Thus, we have one-objective multi-

armed bandits problem. The KG policy calculates

for each arm, a bound which depends on all avail-

able arms and selects the arm that has the maximum

estimated mean plus the bound. The LS1-KG is as

follows:

eµ

i

= f

s

(

ˆ

µ

µ

µ

i

) = w

1

ˆµ

1

i

+ ··· + w

D

ˆµ

D

i

∀

i

(5)

e

σ

2

i

= f

s

(

ˆ

σ

σ

σ

2

i

) = w

1

ˆ

σ

2,1

i

+ ··· + w

D

ˆ

σ

2,D

i

∀

i

(6)

e

¯

σ

2

i

=

e

σ

2

i

/N

i

∀

i

(7)

v

i

=

e

¯

σ

i

g

−|

eµ

i

− max

j6=i, j∈A

eµ

j

e

¯

σ

i

|

∀

i

(8)

where f

s

is a linear scalarization function that has a

predefined set of weight w

w

w

s

= (w

1

,· ·· ,w

D

), eµ

i

, and

e

σ

2

i

are the modified estimated mean, and the modi-

fied estimated variance of an arm i, respectively which

are one-objective values and

e

¯

σ

i

is the modified Root

Mean Square Error (RMSE) of an arm i. The v

i

is

the KG index of an arm i. The function g(ζ), g(ζ) =

ζΦ(ζ) + φ(ζ), where Φ, and φ are the cumulative

distribution, and the density of the standard normal

density N(0,1), respectively. Linear scalarized-KG

ThompsonSamplingintheAdaptiveLinearScalarizedMultiObjectiveMultiArmedBandit

57

across arms selects the optimal arm i

∗

LS

1

KG

according

to:

i

∗

LS

1

KG

= argmax

i=1,···,|A|

(eµ

i

+ ExpB

i

) (9)

= argmax

i=1,···,|A|

(eµ

i

+ (L− t) ∗ |A|D ∗ v

i

) (10)

where ExpB

i

is the bound of arm i, |A| is the number

of arms, D is the number of objectives, L is the hori-

zon of an experiments, i.e. length of trajectories and t

is the current time step.

The algorithm. The pseudocode of the Scalarized

Multi-objectieve Multi-Armed Bandit (SMOMAB)

algorithm is given in Figure 1. The linear scalarized-

KG across arms LS1-KG function is f. The scalar-

ized function set isF

F

F = ( f

1

,· ·· , f

S

), where each LS1-

KG function f

s

has a predefined weight set, w

w

w

s

=

(w

1,s

,· ·· ,w

D,s

) and the number of scalarized function

is |S|, |S| = D+ 1.

The algorithm in Figure 1 plays each arm for each

scalarized function s, Initial plays (step: 2)

1

. N

s

is the

number of times the scalarized function s is pulled and

N

s

i

is the number of times the arm i under the scalar-

ized function s is pulled. (r

r

r

i

)

s

is the reward of the

pulled arm i under the scalarized function s which is

drawn from a normal distribution N(µ

µ

µ,σ

σ

σ

2

r

), whereµ

µ

µ is

the unknown true mean vector and σ

σ

σ

2

r

is the unknown

true variance vector of the reward. (

ˆ

µ

µ

µ

i

)

s

and (

ˆ

σ

σ

σ

i

)

s

are the estimated mean and standard deviation vectors

of the arm i under the scalarized function s, respec-

tively. After initial playing, the algorithm chooses

uniformly at random one of the scalarized function

s (step: 4). The algorithm determines the correspond-

ing weight set w

w

w

s

such that

∑

D

d=1

w

d,s

= 1 (step: 5).

The weight set w

w

w

s

can be specified by using stan-

dard algorithm (Das and Dennis, 1997), adaptive al-

gorithm (J. Dubois-Lacoste and Stutzle, 2011), or ge-

netic algorithm (Drugan, 2013), we refer to Section 4

for more details. If the SMOMAB algorithm uses the

standard algorithm to set the weights, then the total

weight set W

W

W = (w

w

w

1

,· ·· ,w

w

w

S

) is fixed until the end of

playing L steps. However, if the SMOMAB algorithm

uses the adaptive or the genetic algorithm, then the

total weight setW

W

W = (w

w

w

1

,· ·· ,w

w

w

S

) will change at each

time step. The SMOMAB algorithm uses the prede-

fined total weight set W

W

W till the end of playing Initial

steps, then at each time step the adaptive and the ge-

netic algorithms generate new weights. The algorithm

selects the optimal arm (i

∗

)

s

that maximizes the LS1-

KG function (step: 6) and simulates the selected arm

(i

∗

)

s

to observe the reward vector (r

r

r

i

∗

)

s

(step: 7). The

1

We use s to refer the scalarized function f

s

that has a

predefined weight set w

w

w

s

= (w

1

,·· · ,w

d

,·· · , w

D

).

estimated mean vector (

ˆ

µ

µ

µ

i

∗

)

s

, estimated standard de-

viation vector (

ˆ

σ

σ

σ

i

∗

)

s

, and the number N

s

i

∗

of the se-

lected arm and the number of the pulled scalarized

function N

s

are updated (step: 8). Finally, the al-

gorithm computes the Pareto regret (step: 9). This

procedure is repeated until the end of playing L steps

which is the horizon of an experiment.

Note that, the algorithm in Figure 1 is an adapted

version of the scalarized MOMABs from (Drugan and

Nowe, 2013), but here the reward is drawn from nor-

mal distribution and the weight set w

w

w

s

is determined.

1.

Input:

Horizon of an experiment

L

;number

of arms

|A|

;number of objectives

D

;number of

scalarized functions

|S| = D+ 1

;reward vector

r

r

r ∼ N(µ

µ

µ,σ

σ

σ

2

r

)

.

2.

Initialize:

Total Weight set

W

W

W = (w

w

w

1

,· ··,w

w

w

D+1

)

For each scalarized function

s = 1

to

S

Play: each arm

i

,

Initial

steps

Observe:

(r

i

)

s

Update:

N

s

← N

s

+ 1

;

N

s

i

← N

s

i

+ 1

;

(ˆµ

i

)

s

;

(

ˆ

σ

i

)

s

End

3.

Repeat

4. Select a function

s

uniformly at random

5. Compute the weight set

w

s

← Weight

6. Select the optimal arm

(i

∗

)

s

that maximizes

the scalarized function

f

s

7. Observe: reward vector

(r

r

r

i

∗

)

s

,

(r

r

r

i

∗

)

s

= ([r

1

i

∗

,· ··, r

D

i

∗

]

T

)

s

8. Update:

(

ˆ

µ

µ

µ

i

∗

)

s

;

(

ˆ

σ

σ

σ

i

∗

)

s

;

N

s

i

∗

← N

s

i

∗

+ 1

;

N

s

← N

s

+ 1

9. Compute: Pareto regret

10.

Until L

11. Output: Pareto regret

Figure 1: The scalarized multi-objective multi-armed bandit

(SMOMAB) algorithm.

4 ADAPTIVE WEIGHTS FOR

THE SCALARIZED MOMAB

In this section, we provide different algorithms to

identify the weight set w

w

w

s

.

Fixed set of weights. The standard algo-

rithm (Das and Dennis, 1997) defines a fixed to-

tal weight set W

W

W, W

W

W = (w

w

w

1

,· ·· ,w

w

w

s

,· ·· ,w

w

w

S

) that is

uniformly random spread sampling in the weighted

space. For example, the bi-objective multi-armed

bandit with number of scalarized function |S|. The

weight w

1,s

of the scalarized function s in the objec-

tive d, d = 1 is set to 1−

s−1

|S|−1

and the weight w

2,s

of

the scalarized function s in the objective d, d = 2 is

set to 1− w

1,s

.

Note that, this algorithm performs a uniform sam-

pling in the weight space. However, there is no

ICAART2015-InternationalConferenceonAgentsandArtificialIntelligence

58

guarantee that the resulting arms will give a uni-

form spread in the objective space. The weight set

w

w

w

s

, w

w

w

s

∈ W

W

W is sequentially ordered, therefore, when

the scalarized MOMAB algorithm (the algorithm in

Figure 1) is stopped prematurely, it will not have sam-

pled all the part of the weight space, possibly leaving

a part of the Pareto front A

∗

undiscovered.

Adaptive Direction. The adaptive algorithm from

(J. Dubois-Lacoste and Stutzle, 2011) takes into ac-

count the shape of the Pareto front A

∗

in order to

obtain a well-spread set of the non-dominated arms.

For a scalarized function s, this algorithm defines a

norm (Euclidean distance) to specify the largest gap

in the coverage of the Pareto front A

∗

. The largest gap

is the gap between the maximum norm max

i∈A

||

ˆ

µ

µ

µ

s

i

||

and the minimum norm min

i∈A

||

ˆ

µ

µ

µ

s

i

|| of the estimated

mean

ˆ

µ

µ

µ

s

i

of an arm i, i ∈ A under the scalarized func-

tion s. For example, the bi-objective multi-armed

bandit with scalarized function s. The adaptive new

weight w

1,s

of the objective d, d = 1 is perpendic-

ular to the segment defined by the maximum arm

i

max

, i

max

= argmax

i∈A

||

ˆ

µ

µ

µ

s

i

|| and the minimum arm

arm i

min

, i

min

= argmin

i∈A

||

ˆ

µ

µ

µ

s

i

|| in the objective space,

that is:

w

1,s

=

ˆµ

2,s

i

max

− ˆµ

2,s

i

min

ˆµ

2,s

i

max

− ˆµ

2,s

i

min

+ ˆµ

1,s

i

min

− ˆµ

1,s

i

max

where ˆµ

2,s

i

max

and ˆµ

2,s

i

min

are the estimated mean of the

arms i

max

and i

min

in the objective 2 under scalarized

function s, respectively . And, ˆµ

1,s

i

max

and ˆµ

1,s

i

min

are the

estimated mean of the arms i

max

and i

min

in the objec-

tive 1 under scalarized function s, respectively. The

new weight w

2,s

of the second objective d = 2 un-

der scalarized function s is set to 1 − w

1,s

. Note that,

for number of objectives D > 2, the weight w

1

in the

objective d = 1 is calculated by using the estimated

mean of the objectives d = 1 and d = 2. The weight

w

2

in the objective d = 2 is calculated by using the es-

timated mean of the objectives d = 2 and d = 3, and

so on. While the weight w

D

in the objective D is set

to 1− (w

1

+ ··· + w

D−1

).

This algorithm defines new weights based on the

shape of the Pareto front. Therefore, if the shape of

the Pareto front is irregular, then the new weight will

not discover all the optimal arms in the Pareto front.

This operator adapt the weights of only two objectives

at the time.

Genetic Operators. The scalarized local search al-

gorithm (Drugan, 2013) generates new weights for

scalarized function s using real-coded genetic oper-

ators. The new weights are different from the parent-

ing weights, therefore, it could explore the parts of the

Pareto front A

∗

that are undiscovered.

The mutation operator, mutates each weight of a

scalarization function independently using a normal

distribution. The recombination operator generates a

new weight w

w

w from two or more scalarized functions,

each scalarized function has a predefined weight set

w

w

w

s

= (w

1

,· ·· ,w

D

). The translation recombination

operator translates the main set of scalarized function

S with a normally distributed variable. The rotation

recombination operator, considers that the scalarized

functions S are positioned on a S-dimensional hyper-

sphere. The generated new scalarized function s also

belongs to this hypersphere around the main scalar-

ized functions S, that is rotated with a small normally

distributed angle. For more details, we refer to (Dru-

gan and Thierens, 2010).

Since the mutation operator is the easiest one to

implement, we will use it in our comparison. Given

the weight setw

w

w

s

(t) of the scalarized function s at time

step t, the mutated new set of weight w

w

w

s

(t + 1) at time

step t + 1 is calculated as follows;

w

w

w

s

(t + 1) = w

w

w

s

(t) + I

I

I1

1

1

where I

I

I is a diagonal matrix of size D × D with nor-

mally distributed variables and 1

1

1 is a vector of size

D with 1 variables. After calculating the new weight

set w

w

w

s

(t + 1), we can either replace the old weight set

w

w

w

s

(t) with the new weight set, i.e. w

w

w

s

(t) ← w

w

w

s

(t + 1)

(mutation) or at each time step t, we generate new set

of weight that is independent from the previous one

(mutation without replacement).

5 THOMPSON SAMPLING IN

THE SCALARIZED MOMAB

ALGORITHM

In this section, we design an algorithm that frequently

selects the appropriated scalarized function set of

weights w

w

w

s

, w

w

w

s

∈ W

W

W, where the total weight set W

W

W is

either determined by using standard algorithm, adap-

tive algorithm or genetic algorithm. The appropriate

scalarized function is the one that improves the per-

formance of the algorithm by identifying new Pareto

optimal arms.

In the Bernoulli one-objective,Multi-Armed Ban-

dits (MABs), the reward is a stochastic scalar value,

and there is only one optimal arm. The reward r

i

, r

i

∼

B(p

i

) for an arm i is either 0, or 1 and generated from

a Bernoulli distribution B with unknown probability

of success p

i

. The goal of an agent is to minimize the

loss of not pulling the best arm i

∗

over L time steps.

The loss (or the total regret) is R

L

= Lp

∗

−

∑

L

t=1

p

i

(t),

where p

∗

= max

i=1,···,A

p

i

is the probability of suc-

ThompsonSamplingintheAdaptiveLinearScalarizedMultiObjectiveMultiArmedBandit

59

cess of the best arm i

∗

, and p

i

is the probability of

success of the selected arm i at time step t. To mini-

mize the total regret, at each time step t, the agent has

to trade-off between selecting the optimal arm i

∗

(ex-

ploitation) to minimize the regret

2

and selecting one

of the non-optimal arm i to increase the confidence in

the estimated probability of success ˆp

i

, ˆp

i

=

α

i

/(α

i

+ β

i

)

of the arm i (exploration). Where α

i

is the number of

successes (the number of receiving reward equals 1)

and β

i

is the number of failures (the number of receiv-

ing reward equals 0) of the arm i.

Thompson Sampling Policy. (Thompson, 1933) as-

signs to each arm i, i ∈ A a random probability of

selection P

i

to trade-off between exploration and ex-

ploitation. The random probability of selection P

i

of

each arm i is generated from Beta distribution, i.e.

P

i

= Beta(α

i

,β

i

), where α

i

is the number of successes

and β

i

is the number of failures of the arm i. The ran-

dom probability of selection P

i

of an arm i depends

on the performance of the arm i, i.e. the unknown

probability of success p

i

of the arm i. It will be high

value if the arm i has high probability of success p

i

value. With Bayesian priors on the Bernoulli proba-

bility of success p

i

of each arm i, Thompson sampling

assumes initially the number of successes, α

i

and the

number of failures, β

i

for each arm i is 1. At each

time t, Thompson sampling samples the probability

of selection P

i

for each arm i, i ∈ A (the probability

that an arm i is optimal) from Beta distribution, i.e.

P

i

= Beta(α

i

,β

i

). Beta distribution generates random

values, therefore, probably, at time step t, the optimal

arm i

∗

, i

∗

= argmax

i∈A

p

i

has high probability of se-

lection P

i

∗

, while at time step t +1 the suboptimal arm

j, j ∈ A, j 6= i

∗

has high probability of selection P

j

.

Thompson sampling selects the optimal arm i

∗

TS

that has the maximum probability of selection P

i

∗

TS

,

i.e. i

∗

TS

= argmax

i∈A

P

i

and observes the reward r

i

∗

TS

.

If r

i

∗

TS

= 1, then Thompson sampling updates the num-

ber of successes α

i

∗

TS

= α

i

∗

TS

+ 1 for the arm i

∗

TS

. As

a result, the estimated probability of success ˆp

i

∗

TS

of

the arm i

∗

TS

will increase. If r

i

∗

TS

= 0, then Thomp-

son sampling updated the number of failures β

i

∗

TS

=

β

i

∗

TS

+1 for the arm i

∗

. As a result, the estimated prob-

ability of success ˆp

i

∗

TS

of the arm i

∗

TS

will decrease.

Since, Thompson sampling is very easy to imple-

ment, we will use it to select the scalarized function

s, s ∈ S. We assume that each scalarized function s

has unknown probability of success p

s

and when we

select s, we either receive reward 1 or 0. We call

the algorithm that uses Thompson sampling to select

the weight set ”Adaptive Scalarized Multi-Objective

Multi-Armed Bandit” (adaptive-SMOMAB). Note

2

At each time step t, the regret equals p

∗

− p

i

(t).

that, adaptive-SMOMAB uses Thompson sampling to

select the weight set, while scalarized multi-objective

multi-armed bandit (MOMAB) selects uniformly at

random one of the weight set w

w

w

s

, w

w

w

s

∈ W

W

W.

The Adaptive-SMOMAB Algorithm. As in the case

of MABs, Thompson sampling uses random of beta

distribution Beta(α

s

,β

s

) to assign a probability of se-

lection P

s

for each scalarized function s. Where α

s

is the number of successes of the scalarized function

s and β

s

is the number of failures of the scalarized

function s. We consider that each scalarized func-

tion s has unknown probability of success p

s

and by

playing each scalarized function s, we can estimate

the corresponding probability of success. At each

time step t, we maintain value V

s

(t) for each scalar-

ized function s, where V

s

(t) = max

i∈A

f

s

((

ˆ

µ

µ

µ

i

)

s

) is the

value of the optimal arm i

∗

, i

∗

= argmax

i∈A

f

s

((

ˆ

µ

µ

µ

i

)

s

)

under scalarized function s and (

ˆ

µ

µ

µ

i

)

s

is the estimated

mean vector of the arm i under the scalarized func-

tion s. If we select the scalarized function s at time

step t and the value of this scalarized function V

s

(t) is

greater or equal than the value at the previous selec-

tion, V

s

(t) ≥ V

s

(t − 1), then this scalarized function s

performs well because it has the ability to select the

same optimal arm or to select another optimal arm

that has higher value. Otherwise, the scalarized func-

tion s does not perform well.

The pseudocode of the adaptive-SMOMAB algorithm

is given in Figure 2. The linear scalarized-KG across

arms LS1-KG function f is used to convert the multi-

objective to a single one. The number of scalarized

function is |S|, |S| = D + 1, where D is the number

of objectives. The horizon of an experiment is L

steps. The algorithm in Figure 2 plays each arm for

each scalarized function s, Initial plays. The scalar-

ized function set is F

F

F = ( f

1

,· ·· , f

|S|

), each scalarized

function s has a corresponding predefined weight set,

w

w

w

s

= (w

1,s

,· ·· ,w

D,s

). N

s

is the number of times the

scalarized function s is pulled and N

s

i

is the number

of times the arm i under the scalarized function s is

pulled. (r

r

r

i

)

s

is the reward vector of the pulled arm i

under the scalarized function s which is drawn from a

normal distribution N(µ

µ

µ,σ

σ

σ

2

r

), whereµ

µ

µ is the true mean

vector andσ

σ

σ

2

r

is the true variance vector of the reward.

(

ˆ

µ

µ

µ

i

)

s

and (

ˆ

σ

σ

σ

i

)

s

are the estimated mean and standard

deviation vectors of the arm i under the scalarized

function s, respectively. V

s

, V

s

= max

i∈A

f

s

((

ˆ

µ

µ

µ

i

)

s

) is

the value of each scalarized function s after playing

each arm i Initial steps, where f

s

((

ˆ

µ

µ

µ

i

)

s

) is the value

of the LS-KG for the arm i under scalarized function

s. The number of successes α

s

, and the number of

failures β

s

for each scalarized function s are set to 1

as (Thompson, 1933), therefore, the estimated proba-

bility ˆp

s

, ˆp

s

=

α

s

/(α

s

+ β

s

) of success is 0.5. The prob-

ICAART2015-InternationalConferenceonAgentsandArtificialIntelligence

60

1.

Input:

Horizon of an experiment

L

;number

of arms

|A|

;number of objectives

D

;number of

scalarized functions

|S| = D+ 1

;reward vector

r

r

r ∼ N(µ

µ

µ,σ

σ

σ

2

r

)

.

2.

Initialize:

Total wight set

W

W

W = (w

w

w

1

,· ··,w

w

w

D+1

)

For each scalarized function

s = 1

to

|S|

Play: each arm

i

,

Initial

steps

Observe:

(r

r

r

i

)

s

Update:

N

s

← N

s

+ 1

;

N

s

i

← N

s

i

+ 1

(

ˆ

µ

µ

µ

i

)

s

;

(

ˆ

σ

σ

σ

i

)

s

Compute:

V

s

(t) = max

1≤ i≤ A

f

s

((ˆµ

ˆµ

ˆµ

i

)

s

)

Set:

α

s

= 1

;

β

s

= 1

;

ˆp

s

= 0.5

;

P

s

=

1

|S|

End

3.

Repeat

4. For each scalarized function

s = 1,· ··, S

5. Sample

P

s

from Beta

(α

s

,β

s

)

6. End for

7.

s

∗

= argmax

s

P

s

8. Compute: the new weight set

w

w

w

s

∗

← Weight

9. Select: the optimal arm

i

∗

that maximizes

scalarized function

f

s

∗

that has

the new weight set

w

w

w

s

∗

10. Compute:

V

s

∗

(t) = max

1≤ i≤ A

f

s

∗

((ˆµ

ˆµ

ˆµ

i

)

s

∗

)

11. If

V

s

∗

(t) ≥ V

s

∗

(t −1)

12.

α

s

∗

= α

s

∗

+ 1

13. Else

14.

β

s

∗

= β

s

∗

+ 1

15. End

16.

V

s

∗

(t −1) ← V

s

∗

(t)

17. Observe: reward vector

(r

r

r

i

∗

)

s

∗

= ([r

1

i

∗

,· ··, r

D

i

∗

]

T

)

s

∗

18. Update:

(

ˆ

µ

µ

µ

i

∗

)

s

∗

;

(

ˆ

σ

σ

σ

i

∗

)

s

∗

;

N

s

∗

i

∗

← N

s

∗

i

∗

+ 1

;

N

s

∗

← N

s

∗

+ 1

19. Compute: Pareto regret

20.

Until L

21.

Output:

Pareto regret

Figure 2: Adaptive Scalarized MOMAB.

ability of selection P

s

each scalarized function s is

1

|S|

(step: 2).

After initial playing, the algorithm computes the

probability of selection P

s

of each scalarized function

s, the probability of selection P

s

is sampled from beta

distribution Beta(α

s

,β

s

)(step: 4). The algorithm se-

lects the optimal scalarized function s

∗

, the one that

has a max probability of success (step: 7). The al-

gorithm determines the weight set w

w

w

s

∗

for the optimal

scalarized function s

∗

(step: 8). The weight set w

w

w

s

∗

is determined either by using adaptive algorithm, ge-

netic algorithm or standard algorithm, Section 4. The

algorithm selects the optimal arm i

∗

under the optimal

scalarized function s (step: 9) and computes the value

of the optimal scalarized function s

∗

(step: 10) which

is the value of the optimal arm i

∗

. If the value V

s

∗

(t)

of the optimal scalarized function s

∗

at time step t, is

greater or equal than the value of the of the optimal

scalarized function s

∗

at time step t − 1, then the opti-

mal scalarized function s

∗

performs well. The number

of successes α

s

∗

is increased. Other wise, the number

of failures β

s

∗

is increased (steps: 11-15). Then, the

algorithm updates the value V

s

∗

of the optimal scalar-

ized function s

∗

(step: 16). The algorithm simulates

the optimal arm i

∗

of the optimal scalarized function

s

∗

, observes the corresponding reward vector (r

r

r

i

∗

)

s

∗

and updates the estimated mean vector (ˆµ

ˆµ

ˆµ

i

∗

)

s

∗

, the es-

timated standard deviation vector (

ˆ

σ

ˆ

σ

ˆ

σ

i

∗

)

s

∗

of the arm

i

∗

and updates the number N

s

∗

i

∗

of the selected arm

and the number of the pulled scalarized function N

s

∗

(steps: 17-18). Finally, the algorithm computes the

Pareto regret (step: 19). This procedure is repeated

until the end of playing L steps.

Note that, if the adaptive-SMOMAB algorithm

uses the standard algorithm to set the weights, then

the total weight set W

W

W = (w

w

w

1

,· ·· ,w

w

w

S

) is fixed un-

til the end of playing L steps. However, if the

adaptive-SMOMAB algorithm uses the adaptive or

the genetic algorithm, then the total weight set W

W

W =

(w

w

w

1

,· ·· ,w

w

w

S

) will change at each time step. The

adaptive-SMOMAB algorithm uses a predefined to-

tal weight set W

W

W till the end of playing Initial steps,

then at each time step the adaptive and the genetic al-

gorithms generate new weights.

6 EXPERIMENTS

In this section, we firstly compare the performance of

the adaptive scalarized multi-objective, multi-armed

bandit (adaptive-SMOMAB)algorithm, the algorithm

in Figure 2 and the performance of the scalarized

multi-objective multi-armed bandit (SMOMAB) al-

gorithm, the algorithm in Figure 1. We use the

genetic, the adaptive, and the standard algorithms,

Section 4 to set the weight set w

w

w

s

for each linear

scalarized knowledge gradient across arms (LS1-KG)

s. Secondly, we experimentally compare the stan-

dard, the adaptive and the genetic algorithms, using

the adaptive-SMOMAB algorithm. The performance

measures are:

1. The Pareto regret, Section 2 at each time step t

which is the average of M experiments.

2. The cumulative Pareto regret, Section 2 at each

time step t which is the average of M experiments.

The number of experiments M is 1000. The hori-

zon of each experiment L is 1000. The reward vectors

r

r

r

i

of each arm i are drawn from corresponding normal

distribution N(µ

µ

µ

i

,σ

σ

σ

2

i,r

) where µ

µ

µ

i

= [µ

1

i

,· ·· ,µ

D

i

]

T

is the

true mean vector and σ

σ

σ

i,r

= [σ

1

i,r

,· ·· ,σ

D

i,r

]

T

is the true

ThompsonSamplingintheAdaptiveLinearScalarizedMultiObjectiveMultiArmedBandit

61

standard deviation vector of the reward of the arm i.

The true means and the true standard deviations of

arms are unknown parameters to the agent. The LS1-

KG needs the estimated variance

ˆ

σ

σ

σ

2

i

for each arm i,

therefore, each arm is played initially 2 times which

is the minimum number to estimate the variance.

At each time step t, the mutation operator mu-

tates the weight set w

w

w

s

(t) of each scalarized function

s at time step t independently using a normal distri-

bution N(µ, σ

2

) to generate new weight set w

w

w

s

(t + 1),

we set the mean µ to 0 and the variance σ

2

to 0.05

as (Drugan, 2013). We can either replace the old

weight w

w

w

s

(t) set with the new weight set w

w

w

s

(t + 1),

i.e. w

w

w

s

(t) ← w

w

w

s

(t + 1) or at each time step t, we gen-

erate new set of weight that is independent from the

previous one. We compare the two different setting

and we find out that the replacement setting performs

better, therefore, we use this in our comparison.

6.1 Bi-Objective

Example 1. We used the same example in (Dru-

gan and Nowe, 2013) because it simple to understand

and contains non-convex mean vector set. The num-

ber of arms |A| equals 6, the number of objectives D

equals 2. The standard deviation for arms in each

objective is 0.1. The true mean set vector is (µ

µ

µ

1

=

[0.55, 0.5]

T

, µ

µ

µ

2

= [0.53,0.51]

T

, µ

µ

µ

3

= [0.52,0.54]

T

,

µ

µ

µ

4

= [0.5,0.57]

T

, µ

µ

µ

5

= [0.51,0.51]

T

, µ

µ

µ

6

= [0.5, 0.5]

T

).

Note that, the Pareto front is A

∗

= (a

∗

1

,a

∗

2

,a

∗

3

,a

∗

4

),

where a

∗

i

refers to the optimal arm i

∗

. The subopti-

mal a

5

is not dominated by the two optimal arms a

∗

1

and a

∗

4

, but a

∗

2

and a

∗

3

dominates a

5

while a

6

is domi-

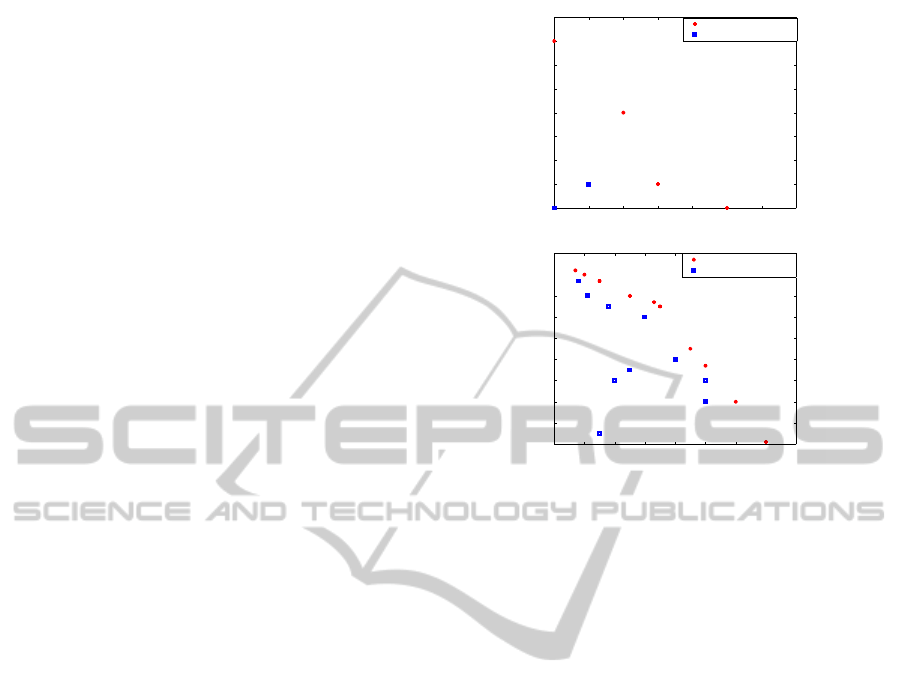

nated by all the other mean vectors. Figure 3 shows a

set of 2-objective true mean with a non-convex set.

First, we compare the performance of the

SMOMAB and adaptive-SMOMAB algorithms. We

use either the standard algorithm, the adaptive algo-

rithm, or the genetic algorithm to set the weights.

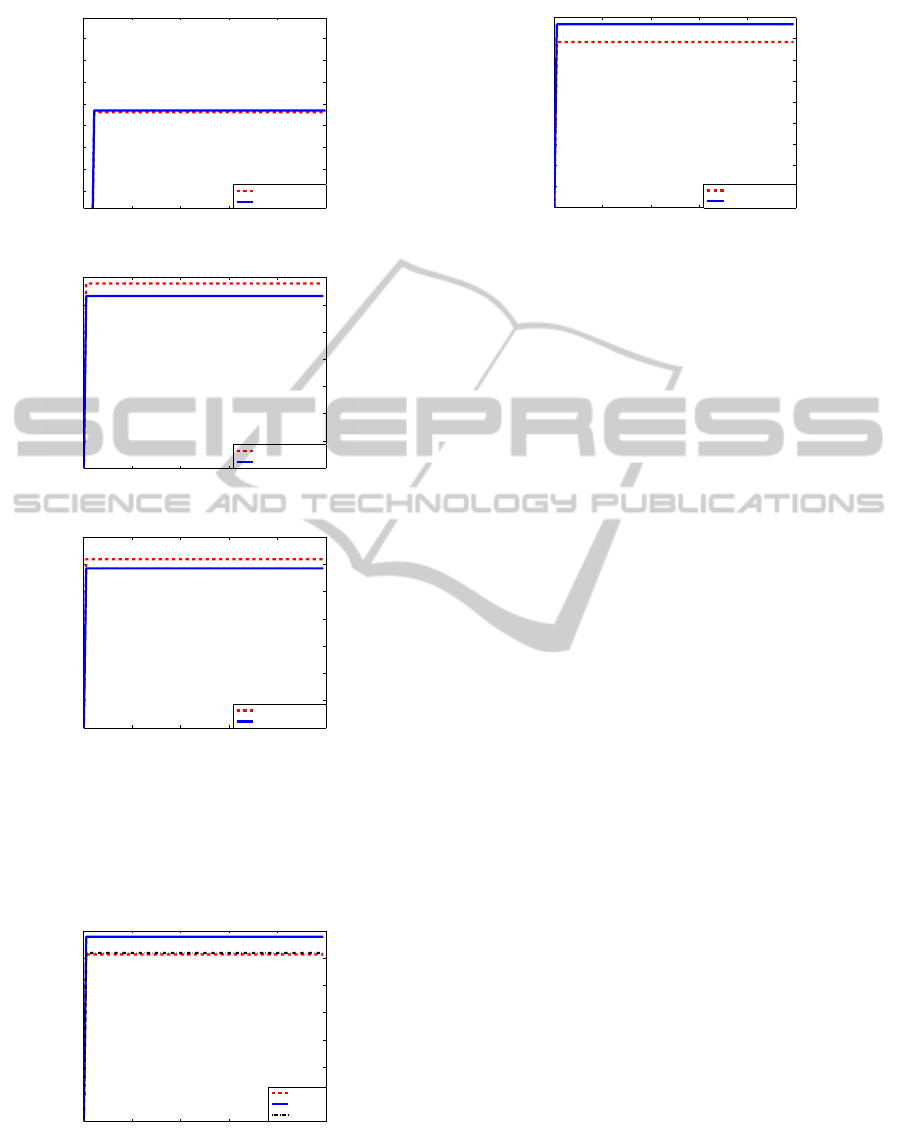

Figure 4 gives the comparison of the SMOMAB and

adaptive-SMOMAB algorithms using the standard,

the adaptive, and the genetic algorithms. The stan-

dard deviation σ

d

i,r

of reward for each arm i in each

objective d is set to 0.1. The x-axis gives the time

step. The y-axis is the cumulative Pareto regret which

is the average of M experiments at each time step t.

Figure 4 shows that the SMOMAB algorithm per-

forms better than the adaptive-SMOMAB algorithm

using the adaptive, and the genetic algorithms, since

the cumulative Pareto regret is decreased. While, the

adaptive-SMOMAB algorithm performs slightly bet-

ter than the SMOMAB algorithm using the standard

algorithm.

Second, we compare the performance of the stan-

0.5 0.51 0.52 0.53 0.54 0.55 0.56 0.57

0.5

0.51

0.52

0.53

0.54

0.55

0.56

0.57

0.58

Objective

1

Objective

2

mean of optimal arm

mean of non optimal arm

µ

1

*

µ

2

*

µ

3

*

µ

4

*

µ

5

µ

6

0.49 0.5 0.51 0.52 0.53 0.54 0.55 0.56 0.57

0.49

0.5

0.51

0.52

0.53

0.54

0.55

0.56

0.57

0.58

Objective

1

Objective

2

mean of optimal arm

mean of non optimal arm

µ

10

*

µ

1

*

µ

2

*

µ

3

*

µ

5

*

µ

6

*

µ

4

*

µ

7

*

µ

8

*

µ

9

*

µ

13

µ

15

µ

16

µ

20

µ

19

µ

18

µ

12

µ

11

µ

14

µ

17

Figure 3: Non-convex and convex mean vector sets with

bi-objective. Upper figure shows a non-convex set with 6-

armed. Lower figure shows a convex set with 20-armed.

dard, the adaptive, and the genetic algorithms using

the adaptive-SMOMAB algorithm. Figure 5 gives the

cumulative Pareto regret. The x-axis is the time step.

The y-axis is the cumulative Pareto regret which is the

average of M experiments at each time step t. Figure 5

shows that the standard algorithm is the best algo-

rithm and the adaptivealgorithm is the worst one. The

mutation algorithm performs better than the adaptive

algorithm and slightly worse than the standard algo-

rithm.

In Example 1, the Pareto front A

∗

contains optimal

arms that are far from the non-optimal arms accord-

ing to the Euclidean distance and the number of the

optimal arms |A

∗

|, |A

∗

| = 4 is larger than the number

of the non-optimal arms which is equal 2. Therefore,

the SMOMAB algorithm almost performs better than

the adaptive-SMOMAB because it selects uniformly

at random one of the scalarized function. And, the

standard algorithm performs better than the adaptive

and the genetic algorithms because they generate new

weights that are nearest to each other to explore more

the optimal arms.

6.2 Triple-Objective

Example 2. With number of objectives D equals

2, number of arms |A| equals 20 and convex

Pareto mean set, (µ

µ

µ

1

= [.56,.491]

T

,µ

µ

µ

2

= [.55,

.51]

T

,µ

µ

µ

3

= [.54,.527]

T

,µ

µ

µ

4

= [.535, .535]

T

,µ

µ

µ

5

=

[.525, .555]

T

,µ

µ

µ

6

= [.523, .557]

T

,µ

µ

µ

7

= [.515, .56]

T

,

ICAART2015-InternationalConferenceonAgentsandArtificialIntelligence

62

0 50 100 150 200 250

5.4

5.6

5.8

6

6.2

6.4

6.6

6.8

x 10

−3

Time step

Cumulative Pareto Regret

adaptive−SMOMAB

SMOMAB

a. Using the standard algorithm

0 200 400 600 800 1000

0

1

2

3

4

5

6

7

x 10

−3

Time step

Cumulative Pareto Regret

adaptive−SMOMAB

SMOMAB

b. Using the adaptive algorithm

0 200 400 600 800 1000

0

1

2

3

4

5

6

7

x 10

−3

Time step

Cumulative Pareto Regret

adaptive−SMOMAB

SMOMAB

c. Using the genetic algorithm

Figure 4: Performance comparison of the SMOMAB

algorithm with the adaptive-SMOMAB algorithm on 2-

objective, 6-armed problem. The weights are set using the

standard algorithm in figure a, using the adaptive algorithm

in figure b, and using the genetic algorithm in figure c.

0 200 400 600 800 1000

0

1

2

3

4

5

6

7

x 10

−3

Time step

Cumulative Pareto Regret

Standard

Adaptive

Mutation

Figure 5: Performance comparison of the standard, the

adaptive, and the genetic algorithms on 2-objective, 6-

armed problem using the adaptive-SMOMAB algorithm.

0 200 400 600 800 1000

0

1

2

3

4

5

6

7

8

9

x 10

−3

Time step

Cumulative Pareto Regret

adaptive−SMOMAB

SMOMAB

a. Using the adaptive algorithm

Figure 6: Performance comparison of the SMOMAB

algorithm with the adaptive-SMOMAB algorithm on 3-

objective, 20-armed problem. The weights are set using the

adaptive algorithm.

µ

µ

µ

8

= [.505,.567]

T

,µ

µ

µ

9

= [.5, .57]

T

,µ

µ

µ

10

= [.497,

.572]

T

,µ

µ

µ

11

= [.498,.567]

T

,µ

µ

µ

12

= [.501,.56]

T

,µ

µ

µ

13

=

[.505, .495]

T

,µ

µ

µ

14

= [.508, .555]

T

,µ

µ

µ

15

= [.51,.52]

T

,

µ

µ

µ

16

= [.515,.525]

T

,µ

µ

µ

17

= [.52,.55]

T

,µ

µ

µ

18

=

[.53,.53]

T

,µ

µ

µ

19

= [.54,.52]

T

,µ

µ

µ

20

= [.54,.51]

T

),

the standard deviation for arms in each objective is

set to 0.1. The Pareto front A

∗

contains 10 optimal

arms, A

∗

= (a

∗

1

,a

∗

2

,a

∗

3

,a

∗

4

,a

∗

5

,a

∗

6

,a

∗

7

,a

∗

8

,a

∗

9

,a

∗

10

) (S.

Q. Yahyaa and Manderick, 2014c). Note that, the

number of the optimal arms |A

∗

|, |A

∗

| = 10 is equal

to the number of non-optimal arms and the optimal

arms are close to the non-optimal arm according

to the Euclidean distance. Figure 3 shows a set of

2-objective convex true mean vector set. We add

extra objective to Example 2, resulting in 3-objective,

20-armed bandit problem. The Pareto front A

∗

still

contains 10 optimal arms and the optimal arms are

closer to non-optimal arms compared to Example 2

according to the Euclidean distance.

First, we compare the performance of the

SMOMAB and adaptive-SMOMAB algorithms. We

use either the standard algorithm, the adaptive al-

gorithm, or the genetic algorithm to determine the

weight set. The adaptive-SMOMAB algorithm per-

forms better than the SMOMAB algorithm for the

standard, adaptive and genetic algorithms. Figure 6

gives the comparison of the SMOMAB and adaptive-

SMOMAB algorithms using the adaptive algorithm.

Figure 6 shows that the adaptive-SMOMAB algo-

rithm performs better than the SMOMAB algorithm

using the adaptive algorithm to set the weight set.

Second, we compare the performance of the stan-

dard, the adaptive, and the genetic algorithms using

the adaptive-SMOMAB algorithm. Figure 7 gives

the cumulative Pareto regret. Figure 7 shows that the

standard algorithm is the worst algorithm. The adap-

tive algorithm is the best one and performs slightly

better than the mutation algorithm. The mutation al-

ThompsonSamplingintheAdaptiveLinearScalarizedMultiObjectiveMultiArmedBandit

63

0 100 200 300 400 500

4.5

5

5.5

6

6.5

7

7.5

8

8.5

9

x 10

−3

Time step

Cumulative Pareto Regret

Standard

Adaptive

Mutation

Figure 7: Performance comparison of the standard, the

adaptive, and the genetic algorithms on 3-objective, 20-

armed problem using the adaptive-SMOMAB algorithm.

gorithm performs better than the standard algorithm

and worse than the adaptive algorithm.

From the above experiment, we see that when

we increase the number of objectives, the adaptive-

SMOMAB algorithm performs better than the

SMOMAB algorithm. Also, we see that the adap-

tive and the genetic algorithms perform better than the

standard algorithm.

6.3 5-Objective

We add extra 2 objectives to Example 2, resulting

in 5-objective, 20-armed bandit problem, leaving the

Pareto front A

∗

unchanged. The optimal arms in the

Pareto front A

∗

are still closer to the non-optimal arms

according to the Euclidean distance. The standard de-

viation for arms in each objective is set to 0.01.

First, we compare the performance of the

SMOMAB and adaptive-SMOMAB algorithms. We

use either the standard algorithm, the adaptive algo-

rithm, or the genetic algorithm to set the weights. The

adaptive-SMOMAB algorithm performs better than

the SMOMAB algorithm for all the weight setting.

Figure 8 gives the comparison of the SMOMAB and

adaptive-SMOMAB algorithms using the standard al-

gorithm. Figure 8 shows that the adaptive-SMOMAB

algorithm performs better than the SMOMAB algo-

rithm using the standard algorithm.

Second, we compare the performance of the stan-

dard, the adaptive, and the genetic algorithms using

the adaptive-SMOMAB algorithm. Figure 9 gives

the cumulative Pareto regret. Figure 9 shows that the

standard algorithm is the worst algorithm and the mu-

tation algorithm is the best algorithm. The adaptive

algorithm performs better than the standard algorithm

and worse than the mutation algorithm.

From the above experiment, we see that when we

increase the number of objectives, i.e. D > 3 the per-

formance of the adaptive-SMOMAB algorithm is in-

creased. We also see the performance of the genetic

0 200 400 600 800 1000

0

1

2

3

4

5

6

7

8

9

x 10

−3

Time step

Cumulative Pareto Regret

adaptive−SMOMAB

SMOMAB

a. Using the adaptive algorithm

Figure 8: Performance comparison of the SMOMAB

algorithm with the adaptive-SMOMAB algorithm on 5-

objective, 20-armed problem. The weights are set using the

adaptive algorithm.

0 200 400 600 800 1000

0

1

2

3

4

5

6

7

8

x 10

−3

Time step

Cumulative Pareto Regret

Standard

Adaptive

Mutation

Figure 9: Performance comparison of the standard, the

adaptive, and the genetic algorithms on 5-objective, 20-

armed problem using the adaptive-SMOMAB algorithm.

and adaptive algorithms are increased, where the cu-

mulative Pareto regret is decreased.

Discussion. from the above experiments, we see

that with minimum number of objectives D, D = 2,

the SMOMAB algorithm performs better than the

adaptive-SMOMAB algorithm. While, for number of

objectives D, D > 2 larger than 2, the performance

of the adaptive-SMOMAB algorithm is better than

the performance of the SMOMAB algorithm. As the

number of objectives is increased, the performance of

the adaptive-SMOMAB algorithm increases. We also

see that, for small number of objectives D, D = 2,

the standard algorithm performs better than the adap-

tive and the genetic algorithms using the adaptive-

SMOMAB algorithm. While as the number of ob-

jectives is increased, the adaptive and genetic algo-

rithms perform better than the standard algorithm us-

ing the adaptive-SMOMAB algorithm. Where, the

adaptive algorithm performs better than the genetic

algorithm for number of objectives equals 3 and the

genetic algorithm performs better than the adaptive

algorithm for number of objectives equals 5. The in-

tuition is that for small number of objectives D, D = 2

and small number of arms |A|, |A| = 6, the small num-

ICAART2015-InternationalConferenceonAgentsandArtificialIntelligence

64

ber of scalarized functions S, |S| = D+ 1 was able to

identify almost all the optimal arm in the Pareto front

A

∗

. While, for large number of objectives D, D ≥ 2

and large number of arms |A|, |A| = 20, the adaptive

and genetic algorithms generate new weights, and the

new weights explore all the optimal arms in the Pareto

front A

∗

. We also see that the figures have a flat per-

formance and this is because of the calculation of the

Pareto regret. Pareto regret adds minimum distance

(virtual distance) to the selected suboptimal arm to

create an optimal arm, therefore the added distance

will be the same if the suboptimal arms are close to

each other.

7 CONCLUSIONS

We presented multi-objective, multi-armed bandit

(MOMAB) problem and the regret measures in the

MOMAB. We presented the linear scalarized function

and the linear scalarized-KG that transform the multi-

objective problem into a single problem by summing

the weighted objectivesto find the optimal arms. Usu-

ally, the scalarized multi-objective (SMOMAB) algo-

rithm selects uniformly at random the scalarized func-

tion s. We proposed to use techniques from the multi-

objective optimization in the SMOMAB algorithm to

adapt the weights online. We use the genetic opera-

tors to generate new weights in the proximity of the

current weight sets, and we adapt the weights to be

perpendicular on the set of Pareto optimal solutions.

We propose the adaptive scalarized multi-armed ban-

dit (adaptive-SMOMAB) algorithm that uses Thomp-

son sampling policy to select the scalarized s. We

experimentally compared the SMOMAB algorithm

and the adaptive-SMOMAB algorithm using the pro-

posed algorithms: the fixed weights, the adaptive

weights, and the genetic weights. We conclude that

when the number of objective D is increased D >

2, the adaptive-SMOMAB performs better than the

SMOMAB algorithm. The adaptive and the mutation

algorithms perform better than the standard algorithm

(fixed weights).

REFERENCES

Das, I. and Dennis, J. E. (1997). A closer look at drawbacks

of minimizing weighted sums of objectives for pareto

set generation in multicriteria optimization problems.

Structural Optimization, 14(1):63–69.

Drugan, M. (2013). Sets of interacting scalarization func-

tions in local search for multi-objective combinatorial

optimization problems. In IEEE Symposium Series on

Computational Intelligence (IEEE SSCI).

Drugan, M. and Nowe, A. (2013). Designing multi-

objective multi-armed bandits algorithms: A study. In

Proceedings of the International Joint Conference on

Neural Networks (IJCNN).

Drugan, M. and Thierens, D. (2010). Geometrical recombi-

nation operators for real-coded evolutionary mcmcs.

Evolutionary Computation, 18(2):157–198.

Eichfelder, G. (2008). Adaptive Scalarization Methods in

Multiobjective Optimization. Springer-Verlag Berlin

Heidelberg, 1st edition.

I. O. Ryzhov, W. P. and Frazier, P. (2011). The knowledge-

gradient policy for a general class of online learning

problems. Operation Research.

J. Dubois-Lacoste, M. L.-I. and Stutzle, T. (2011). Improv-

ing the anytime behavior of two-phase local search. In

Annals of Mathematics and Artificial Intelligence.

Powell, W. B. (2007). Approximate Dynamic Program-

ming: Solving the Curses of Dimensionality. John

Wiley and Sons, New York, USA, 1st edition.

S. Q. Yahyaa, M. D. and Manderick, B. (2014a). Empir-

ical exploration vs exploitation study in the scalar-

ized multi-objective multi-armed bandit problem. In

International Joint Conference on Neural Networks

(IJCNN).

S. Q. Yahyaa, M. D. and Manderick, B. (2014b). Knowl-

edge gradient for multi-objective multi-armed bandit

algorithms. In International Conference on Agents

and Artificial Intelligence (ICAART), France. Inter-

national Conference on Agents and Artificial Intelli-

gence (ICAART).

S. Q. Yahyaa, M. D. and Manderick, B. (2014c). Multi-

variate normal distribution based multi-armed bandits

pareto algorithm. In the European Conference on Ma-

chine Learning and Principles and Practice of Knowl-

edge Discovery in Databases (ECML/PKDD).

Thompson, W. R. (1933). On the likelihood that one un-

known probability exceeds another in view of the evi-

dence of two samples. In Biometrika.

Zitzler, E. and et al. (2002). Performance assessment

of multiobjective optimizers: An analysis and re-

view. IEEE Transactions on Evolutionary Computa-

tion, 7:117–132.

ThompsonSamplingintheAdaptiveLinearScalarizedMultiObjectiveMultiArmedBandit

65