Beyond Onboard Sensors in Robotic Swarms

Local Collective Sensing through Situated Communication

Tiago Rodrigues, Miguel Duarte, Sancho Moura Oliveira and Anders Lyhne Christensen

Instituto de Telecomunicações, 1049-001 Lisbon, Portugal

Instituto Universitário de Lisboa (ISCTE-IUL), 1649-026 Lisbon, Portugal

BioMachines Lab, 1649-026 Lisbon, Portugal

Keywords:

Swarm Robotics, Evolutionary Robotics, Situated Communication, Local Collective Sensing.

Abstract:

The constituent robots in swarm robotics systems are typically equipped with relatively simple, onboard sensors of limited quality and range. When robots have the capacity to communicate with one another, communication has so far been exclusively used for coordination. In this paper, we present a novel approach in which local, situated communication is leveraged to overcome the sensory limitations of the individual robots. In our approach, robots share sensory inputs with neighboring robots, thereby effectively extending each other’s sensory capabilities. We evaluate our approach in a series of experiments in which we evolve controllers for robots to capture mobile preys. We compare the performance of (i) swarms that use our approach, (ii) swarms in which robots use only their limited onboard sensors, and (iii) swarms in which robots are equipped with ideal sensors that provide extended sensory capabilities without the need for communication. Our results show that swarms in which local communication is used to extend the sensory capabilities of the individual robots outperform swarms in which only onboard sensors are used. Our results also show that in certain experimental configurations, the performance of swarms using our approach is close to the performance of swarms with ideal sensors.

1 INTRODUCTION

Robots in large-scale decentralized multirobot systems, or swarm robotics systems, typically have simple and inexpensive sensors. This design principle allows the unit cost to be kept low, but limits the sensory capabilities of the individual robots (see, for instance, Correll and Martinoli (2006)).

Many simulation-based studies have disregarded the limitations of real sensors (Turgut et al., 2008), used simple communication to facilitate cooperation (Fredslund and Matarić, 2002), or relied on indirect coordination through stigmergy (Beckers et al., 1994). While unrealistic sensors can be used to study certain aspects of biological systems, such as the evolution of particular behaviors observed in nature (Trianni et al., 2003; Duarte et al., 2011), the resulting controllers cannot be used on real robotic systems. Simple means of communication, such as sound and color, on the other hand, are relatively straightforward to implement in real hardware (Floreano et al., 2007), but they are also limited in terms of the amount of information they allow robots to exchange. Bio-inspired approaches such as quorum sensing in bacteria (Bassler, 1999), trophallaxis (Schmickl and Crailsheim, 2008), and hormone-based communication (Stamatis et al., 2009) have been shown to be simple, yet effective strategies to achieve coordination through communication in multirobot systems.

Robots can alternatively be equipped with more complex, wireless communication hardware that enables direct transmission of binary data. In such scenarios, robots are able to transmit packets with relatively large amounts of information. Yet, in swarm robotics systems, such means of communication are typically only used to broadcast simple information, such as the heading, location or speed of each robot (Cianci et al., 2007), in order to facilitate behaviors such as aggregation (Garnier et al., 2008) and flocking (Turgut et al., 2008).

In this paper, we show how the use of communication can extend the limited sensory capabilities of the constituent robots in a swarm. Our approach relies on local, situated communication (Støy, 2001), where the signal that carries information also contains context, namely the relative direction and distance from the sender to the receiver. This type of communication can be achieved using widely available and relatively inexpensive equipment, such as the e-puck (Mondada et al., 2009) equipped with the range & bearing board extension (Gutiérrez et al., 2008).

Rodrigues T., Duarte M., Oliveira S. and Lyhne Christensen A. Beyond Onboard Sensors in Robotic Swarms - Local Collective Sensing through Situated Communication.
DOI: 10.5220/0005215401110118
In Proceedings of the International Conference on Agents and Artificial Intelligence (ICAART-2015), pages 111-118
ISBN: 978-989-758-074-1
Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

In our approach, each robot in the swarm shares readings from its onboard sensors with neighboring robots. By combining information from multiple sources, it becomes possible to obtain knowledge about the environment that would otherwise be unavailable to any single robot. Robots can effectively use the sensory information received to extend their own sensing capabilities through the implementation of virtual sensors. We call such virtual sensors collective sensors.

Our goal is to maintain the desirable properties of natural swarm systems while simultaneously exploiting some of the unique capabilities of machines. In this way, we can combine features such as scalability and robustness, which stem from the exclusive reliance on decentralized control, with robots’ capacity for low-latency and high-bandwidth communication in order to overcome limitations of the individual units’ onboard sensory hardware.

We demonstrate our approach in a predator-prey task, in which a swarm of robots must locate and consume preys. Each robot has two short-range onboard sensors that can detect preys that the robot is facing, and four collective sensors that are implemented based on sensory information shared by neighboring robots. By knowing the neighboring robots’ relative range and bearing, a robot that receives the information can estimate a prey’s position within its own local frame of reference. The estimated position of the prey is then used to compute the readings of the receiving robot’s collective sensors, thereby effectively extending the robot’s sensory range. We compare the performance of robots using collective sensors with robots that rely exclusively on onboard sensors, and with robots equipped with ideal sensors, that is, sensors that allow robots to sense preys directly and independently at ranges equal to those of the collective sensors. In our experiments, we use evolutionary robotics techniques (Nolfi and Floreano, 2000) to evolve artificial neural network-based controllers in scenarios with up to 20 robots and 10 preys.

2 RELATED WORK

Communication systems in nature have been widely studied by biologists, and have served as inspiration to roboticists. The process of communication in bacteria, known as quorum sensing (Bassler, 1999; Einolghozati et al., 2012), relies on producing signaling molecules that can be perceived by neighbors. In this way, it is possible for individuals to estimate population density based on the concentration of signaling molecules, and to modify their behavior accordingly. Quorum sensing has also been used in robots: Chandrasekaran and Hougen (2006), for instance, used quorum sensing to give nano-scaled robots, constructed using biological components such as proteins and DNA structures, the ability to communicate and coordinate goal-seeking strategies.

Duarte et al. (2011) studied the emergence of complex macroscopic behaviors observed in colonies of social insects, such as task allocation, communication, and synchronization. In a foraging scenario, robots were given explicit visual communication capabilities through changes in the robots’ body color. The authors observed that explicit communication enabled complex behaviors to emerge, and that the performance of the swarm was significantly higher than in scenarios in which robots could not communicate.

Inspired by mound-building termites, Werfel et al. (2014) implemented a system in which a group of robots was able to coordinate in a construction task. A set of rules was defined, allowing the robots to incrementally construct a particular structure in a decentralized way. The robots did not communicate, and instead had to rely on stigmergy to coordinate.

Turgut et al. (2008) studied self-organized flocking in a swarm of robots with inter-robot communication. Their robots were equipped with a wireless communication module, which allowed the robots in the swarm to sense the headings of neighboring robots. By taking into account the robots’ mean orientation, the swarm was able to achieve a robust flocking behavior. In a related study, Fredslund and Matarić (2002) studied formation tasks in a swarm of robots. The authors used robots that were equipped with a panning camera and IR sensors. The robots’ sensors allowed them to estimate the orientation of and distance to other robots in the formation. No global coordinate system was used, and therefore only relative distances were taken into account. The authors enforced formation sorting through each robot’s unique ID using local sensing and minimal communication.

In our study, we go beyond simple communication of each robot’s own parameters, such as heading, distance to other robots, or speed. We process onboard sensory information, for example to estimate the position of a target, and transmit the result to neighboring robots. Collective sensors then use the received information to allow the robot to sense particular environmental features that would otherwise be beyond the range of the robot’s onboard sensors.

ICAART 2015 - International Conference on Agents and Artificial Intelligence

3 METHODOLOGY

In this study, we explore the potential benefits of sharing sensory information to extend the capabilities of the individual robots in swarm robotics systems. The proposed approach is based on the mutual sharing of readings from onboard sensors between neighboring robots. The shared information is then used to compute readings for collective sensors, which can give the individual robot information that would not be available through its onboard sensors.

In our approach, robots can share either preprocessed information, such as the location of interesting features in the environment, or raw sensory readings, such as readings from a proximity sensor. The shared information is broadcast to nearby robots using situated communication, where the receiving robot knows the relative location and orientation of the transmitting robot. The location and orientation of the transmitting robot can either be included in the messages based on GPS and compass information, or be obtained through communication means that implicitly embed such relative position information in the transmitted signals (Gutiérrez et al., 2008).

Our collective sensors calculate the appropriate readings taking into account the robot’s own location and orientation. This local sensor fusion can provide robots with longer-range sensing, more accurate sensing, or both. For instance, two or more robots observing an object from different angles may be able to estimate its volume by combining their sensory inputs, something that would not be possible based on readings from a single robot. In this study, robots exchange information regarding the relative position of preys, effectively extending the sensory range of each robot in the swarm.
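Combining views from two robots can be illustrated with the simplest case: locating a target from two bearings (the experimental setup later mentions triangulating a prey’s position when several robots report it). The paper does not say how this fusion is computed, so the following is only one possible method, not the authors’ implementation. It assumes both observers’ positions and their bearings to the target are already expressed in one common frame, for instance the receiving robot’s frame after applying range-and-bearing data; `triangulate` is a hypothetical helper.

```python
import math


def triangulate(p1, a1, p2, a2, eps=1e-9):
    """Intersect two bearing rays p + t * (cos a, sin a) with t >= 0.

    p1, p2: observer positions (x, y) in a common frame.
    a1, a2: bearings from each observer to the target, in radians, same frame.
    Returns the estimated target position, or None if the rays are (nearly)
    parallel or would only meet behind one of the observers.
    """
    u1 = (math.cos(a1), math.sin(a1))
    u2 = (math.cos(a2), math.sin(a2))
    denom = u1[0] * u2[1] - u1[1] * u2[0]  # cross(u1, u2)
    if abs(denom) < eps:
        return None
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    # Solve p1 + t1 * u1 = p2 + t2 * u2 using 2D cross products.
    t1 = (dx * u2[1] - dy * u2[0]) / denom  # cross(d, u2) / cross(u1, u2)
    t2 = (dx * u1[1] - dy * u1[0]) / denom  # cross(d, u1) / cross(u1, u2)
    if t1 < 0 or t2 < 0:
        return None
    return p1[0] + t1 * u1[0], p1[1] + t1 * u1[1]
```

For example, observers at (0, 0) and (2, 0) looking at 45° and 135° place the target at (1, 1). With only bearing information a single robot cannot fix the distance, which is why the setup falls back on an a priori distance when only one robot reports a prey.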

4 EXPERIMENTAL SETUP

We evaluate our approach in a predator-prey task in which a group of robots (the predators) must locate and consume a number of moving preys. The environment is square-shaped, measures 10 m x 10 m, and is surrounded by walls. The robots start each experiment in the center of the environment, while the preys are placed at random locations sampled from a uniform distribution. A robot consumes a prey by touching it. Whenever a prey is consumed, a new prey is placed randomly in the environment, thereby keeping the number of preys constant.

We use small (10 cm diameter) differential-drive

robots, loosely modeled after the e-puck (Mondada

et al., 2009). The speed of the robots is limited to

10 cm/s. The set of sensors is composed of (i) two

onboard prey sensors with a range of 0.8 m, (ii) four

collective prey sensors with a range of 3 m, (iii) four

robot sensors with a range of 3 m, and (iv) four wall

sensors with a range of 0.5 m. All the sensors have an

opening angle of 90

◦

. The collective prey sensors, the

robot sensors, and the wall sensors are all distributed

evenly around the robot, at the angles 0

◦

, 90

◦

, 180

◦

and 270

◦

, while the two onboard prey sensors are lo-

cated on the front of the robot at angles of 15

◦

and

-15

◦

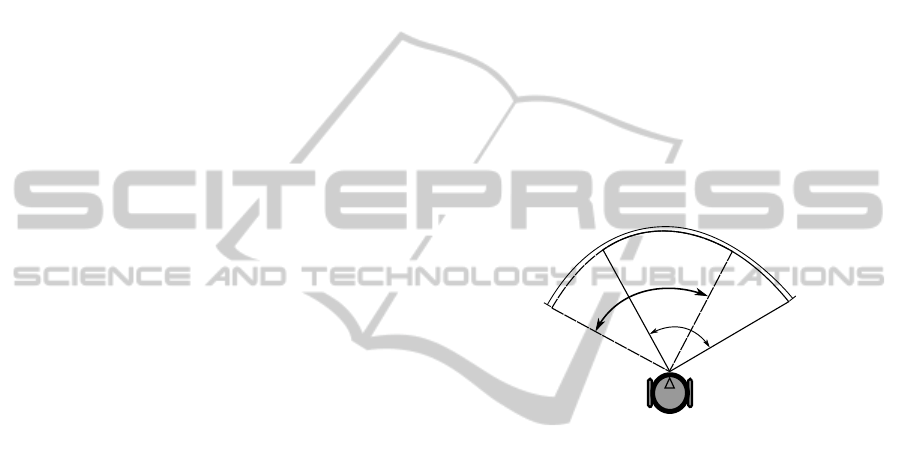

(see Figure 1). Consequently, the onboard prey

sensors overlap by 60

◦

and cover a section of 120

◦

.

The overlap between the two onboard prey sensors was found to help the robot locate and pursue preys. The two onboard prey sensors could be implemented on real robots based on inputs from a camera, for instance, by segmenting the camera’s field of view into two overlapping regions.
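The onboard sensor geometry can be checked with a few lines of Python. This is a minimal sketch under stated assumptions: angles are measured counter-clockwise from the robot’s heading, so the left sensor (PL) points at +15° and the right sensor (PR) at -15°, and a reading falls off linearly from 1 at contact to 0 at the 0.8 m range; the paper does not specify the reading function. The helper names are hypothetical.

```python
def cone_reading(bearing_deg, dist_m, axis_deg, opening_deg=90.0, range_m=0.8):
    """Reading of a cone-shaped sensor for a target at (bearing, distance).

    ASSUMED reading model: linear falloff, 1.0 at contact and 0.0 at max range.
    """
    # Smallest angle between the target direction and the sensor axis, in [0, 180].
    diff = abs((bearing_deg - axis_deg + 180.0) % 360.0 - 180.0)
    if dist_m > range_m or diff > opening_deg / 2:
        return 0.0
    return 1.0 - dist_m / range_m


def onboard_prey_readings(bearing_deg, dist_m):
    """Return (PL, PR) for a prey at the given bearing and distance."""
    return (cone_reading(bearing_deg, dist_m, 15.0),
            cone_reading(bearing_deg, dist_m, -15.0))


def onboard_coverage(axes_deg=(15.0, -15.0), opening_deg=90.0):
    """Angular overlap and total coverage of two cones (assumes the cones intersect)."""
    half = opening_deg / 2
    lo = [a - half for a in axes_deg]  # left edges: -30 and -60 degrees
    hi = [a + half for a in axes_deg]  # right edges: 60 and 30 degrees
    return min(hi) - max(lo), max(hi) - min(lo)
```

`onboard_coverage()` returns `(60.0, 120.0)`, matching the stated 60° overlap and 120° section. A prey straight ahead at 0.4 m is seen by both sensors, whereas a prey at 50° to the left is seen only by PL.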

Figure 1: Location and field of view of the two onboard prey sensors, where PL indicates the area sensed by the left onboard prey sensor and PR the area sensed by the right onboard prey sensor. The onboard prey sensors have a range of 0.8 m and an opening angle of 90°. Together they cover a 120° wide section, and overlap by 60°.

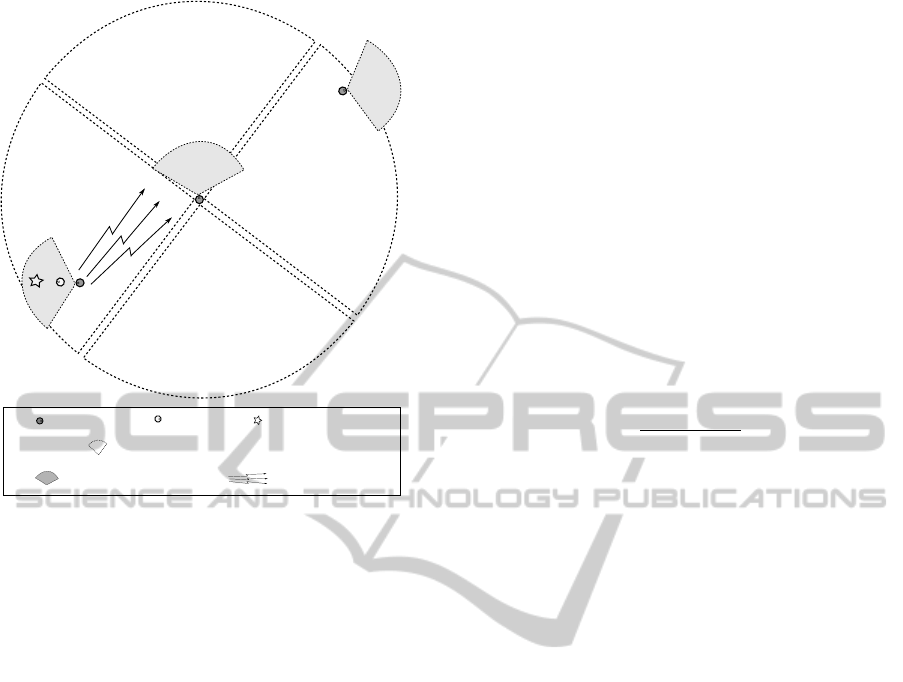

Readings for the collective sensors are computed based on estimates received from nearby robots that are detecting a prey with their onboard sensors. The relative position of the prey is calculated taking into account the relative distance and orientations of the two robots, as well as the prey’s relative location with respect to the robot that is detecting the prey. If estimates are received from multiple robots, it becomes possible to triangulate the position of the prey. Otherwise, an a priori estimate of how close the prey is to the robot that is detecting it is used. We use an a priori estimate of 50 cm between a prey and a robot, which corresponds to 10 times the radius of the robot. The sharing of information is limited to the range of the local, situated communication technology. In this study, the range of both the collective sensors and of local, situated communication is 3 m. An illustration of the collective sensors can be seen in Figure 2.
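The coordinate transformation described above can be sketched as follows. This is an illustrative sketch, not the authors’ code. It assumes that the receiver knows the sender’s range and bearing (as a range & bearing board provides) and the sender’s heading relative to its own, that the sender reports the prey’s bearing in its own frame, that the reported distance defaults to the 0.5 m a priori estimate, and that collective sensor readings fall off linearly with distance, a reading model the paper does not specify. `Report`, `prey_in_receiver_frame` and `collective_readings` are hypothetical names, and only single reports are handled (no triangulation).

```python
import math
from dataclasses import dataclass


@dataclass
class Report:
    """One prey estimate received from a neighbour (hypothetical message layout).

    range_m, bearing_rad: sender position as measured by the receiver.
    heading_rad: sender heading minus receiver heading (assumed known).
    prey_bearing_rad: prey direction in the sender's own frame.
    prey_dist_m: prey distance in the sender's frame; defaults to the
        0.5 m a priori estimate used when no better estimate is available.
    """
    range_m: float
    bearing_rad: float
    heading_rad: float
    prey_bearing_rad: float
    prey_dist_m: float = 0.5


def prey_in_receiver_frame(rep: Report) -> tuple[float, float]:
    """Express the reported prey position in the receiver's local frame."""
    # Sender position in the receiver's frame.
    sx = rep.range_m * math.cos(rep.bearing_rad)
    sy = rep.range_m * math.sin(rep.bearing_rad)
    # Prey position in the sender's frame.
    px = rep.prey_dist_m * math.cos(rep.prey_bearing_rad)
    py = rep.prey_dist_m * math.sin(rep.prey_bearing_rad)
    # Rotate by the relative heading, then translate by the sender position.
    c, s = math.cos(rep.heading_rad), math.sin(rep.heading_rad)
    return sx + c * px - s * py, sy + s * px + c * py


def collective_readings(reports, sensor_range=3.0,
                        centres_deg=(0.0, 90.0, 180.0, 270.0), half_angle_deg=45.0):
    """Readings of four 90-degree collective prey sensors (closest prey wins)."""
    readings = [0.0] * len(centres_deg)
    for rep in reports:
        x, y = prey_in_receiver_frame(rep)
        dist = math.hypot(x, y)
        if dist > sensor_range:
            continue
        angle = math.degrees(math.atan2(y, x))
        for i, centre in enumerate(centres_deg):
            # Smallest angle between the prey direction and the sensor axis.
            diff = abs((angle - centre + 180.0) % 360.0 - 180.0)
            if diff <= half_angle_deg:
                # ASSUMED reading model: 1 at contact, falling linearly to 0 at range.
                readings[i] = max(readings[i], 1.0 - dist / sensor_range)
    return readings
```

For instance, a neighbour 1 m straight ahead, facing the same way, that sees a prey dead ahead places the prey 1.5 m in front of the receiver, so under the assumed model the front collective sensor reads 0.5 and the other three read 0.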

[Figure 2: robots A, B and C with their onboard prey sensors, a prey, the estimated prey position, robot B’s collective prey sensors, and the communication link from robot A to robot B.]

Figure 2: An illustration of how collective sensors work. When robot A senses a prey with its onboard sensors, it processes the sensed information, generating an estimate of the prey’s position, and broadcasts the estimate to nearby robots, in this case robot B. Since robot C is outside robot A’s communication range, robot A’s estimates do not reach robot C.

The preys are able to move at a speed of 15 cm/s, which is 1.5 times faster than the robots. The preys’ sensors consist of (i) two wall sensors in front of the prey with a range of 0.5 m, located at the same positions as the prey sensors of the robots, and (ii) four robot sensors, located around the prey’s body, with a range of 0.5 m. The prey remains still whenever no nearby robot is detected. If a robot is detected, but it is not directly in front of the prey, the prey moves forward at full speed. If a prey detects a robot in front of it, the prey randomly turns to either side until it is able to move forward. After a prey escapes from the robots, it remains still until a nearby robot is detected again.
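The prey’s reactive rules translate into a small controller. In this sketch the sensor inputs are reduced to three booleans, the prey keeps turning toward the side it picked until its front is clear, and a wall detected in front is treated like a robot in front; these are assumptions made for illustration, as are the names `PreyAction` and `PreyController`.

```python
import random
from enum import Enum


class PreyAction(Enum):
    STAY = "stay"
    FORWARD = "forward"  # full speed, 15 cm/s in the setup described above
    TURN_LEFT = "turn_left"
    TURN_RIGHT = "turn_right"


class PreyController:
    def __init__(self, rng=None):
        self.rng = rng if rng is not None else random.Random()
        self.turn = None  # side chosen for the current turning manoeuvre, if any

    def step(self, robot_detected, robot_in_front, wall_in_front):
        if not robot_detected:
            # No robot within range of the 0.5 m robot sensors: stay still.
            self.turn = None
            return PreyAction.STAY
        if robot_in_front or wall_in_front:
            # Blocked: pick a random side once, then keep turning that way.
            if self.turn is None:
                self.turn = self.rng.choice([PreyAction.TURN_LEFT, PreyAction.TURN_RIGHT])
            return self.turn
        # A robot is nearby but not in front: flee forward.
        self.turn = None
        return PreyAction.FORWARD
```

Keeping the chosen side for the whole manoeuvre avoids the prey oscillating between left and right turns; whether the original simulation does this is not stated.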

We evolved controllers for the robots to solve the proposed task using a simple generational evolutionary algorithm. Each generation was composed of 100 genomes, and each genome encoded an artificial continuous-time recurrent neural network (Beer and Gallagher, 1992) with a reactive layer of input neurons, one hidden layer with 10 neurons, and one layer of output neurons (see Rodrigues et al. (2014) for a detailed description of the artificial neural network topology used in this study). The fitness of a genome was sampled 9 times and the mean fitness was used for selection. Each sample lasted 5,000 time steps, which is equivalent to 500 seconds. The numbers of robots and preys were varied across samples in order to promote the evolution of general behavior: one sample was conducted for each possible combination of number of robots and number of preys. The number of robots was 5, 10 or 20, and the number of preys was 2, 5 or 10. After all the genomes had been evaluated, an elitist approach was used: the top five genomes were selected to populate the next generation. Each of the top five genomes became the parent of 19 offspring. An offspring was created by applying Gaussian noise to each gene with a probability of 10%. The 95 mutated offspring and the original five genomes constituted the next generation.
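The generational loop can be sketched as follows. It is a simplified illustration rather than the JBotEvolver implementation: `simulate(genome, n_robots, n_preys)` is a hypothetical stand-in for one simulated trial, genomes are plain lists of floats, and the standard deviation of the Gaussian mutation is not given in the text, so `MUTATION_SIGMA` is an assumed value.

```python
import itertools
import random
import statistics

ROBOT_COUNTS = (5, 10, 20)  # numbers of robots per sample
PREY_COUNTS = (2, 5, 10)    # numbers of preys per sample
POP_SIZE = 100
N_ELITE = 5
OFFSPRING_PER_PARENT = 19   # 5 parents x 19 offspring = 95 offspring
MUTATION_PROB = 0.10        # per-gene probability of adding Gaussian noise
MUTATION_SIGMA = 1.0        # ASSUMPTION: the noise magnitude is not given in the text


def evaluate(genome, simulate):
    """Mean fitness over one sample per (robots, preys) combination: 3 x 3 = 9 samples."""
    return statistics.mean(
        simulate(genome, n_robots, n_preys)
        for n_robots, n_preys in itertools.product(ROBOT_COUNTS, PREY_COUNTS)
    )


def mutate(genome, rng):
    """Copy the genome, perturbing each gene with probability MUTATION_PROB."""
    return [g + rng.gauss(0.0, MUTATION_SIGMA) if rng.random() < MUTATION_PROB else g
            for g in genome]


def next_generation(population, simulate, rng):
    """Elitist generational step: keep the top 5 and add 19 mutants of each."""
    ranked = sorted(population, key=lambda g: evaluate(g, simulate), reverse=True)
    elite = ranked[:N_ELITE]
    offspring = [mutate(parent, rng) for parent in elite for _ in range(OFFSPRING_PER_PARENT)]
    return elite + offspring  # 5 + 95 = 100 genomes
```

A toy objective such as `lambda g, n_r, n_p: -sum(x * x for x in g)` is enough to exercise the loop; in the paper’s setting each genome would encode the network weights and each call would run a 5,000-step trial.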

In order to evaluate the controllers, we rewarded robots for moving close to and consuming preys, according to the following equations:

$$F = \frac{N_p + \sum_{i=0}^{T} B_i}{N_r} \qquad (1)$$

$$B_i = \sum_{r=0}^{N_r} \max(PL_r, PR_r) \cdot 10^{-5} \qquad (2)$$

where $N_p$ is the total number of preys consumed, $T$ is the total number of time steps, $N_r$ is the number of robots in the environment in each sample, and $\max(PL_r, PR_r)$ gives the maximum of the readings of the left and right prey sensors of robot $r$ at each time step. Fitness is divided by the number of robots, $N_r$, in order to prevent biasing evolution toward local optima in setups with many robots. $B_i$ is a bootstrapping term used to guide evolution toward behaviors that result in robots being close to preys.
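Equations (1) and (2) map directly onto code. The sketch assumes that prey-sensor readings lie in [0, 1] and are logged as one list of `(PL_r, PR_r)` pairs per time step; the function names are hypothetical.

```python
def bootstrap_term(readings_at_step):
    """B_i: sum over robots of max(PL_r, PR_r), scaled by 1e-5 (Equation 2)."""
    return sum(max(pl, pr) for pl, pr in readings_at_step) * 1e-5


def fitness(preys_consumed, readings, n_robots):
    """F = (N_p + sum_i B_i) / N_r (Equation 1).

    readings: one list of (PL_r, PR_r) pairs per time step.
    """
    return (preys_consumed + sum(bootstrap_term(step) for step in readings)) / n_robots
```

Under the [0, 1] assumption, each $B_i$ is at most about $N_r \cdot 10^{-5}$, so over the 5,000 time steps of a sample the bootstrapping term adds at most about $5000 \cdot 10^{-5} = 0.05$ to $F$ once divided by $N_r$. Each consumed prey adds $1/N_r \geq 0.05$ with at most 20 robots, so the bootstrapping term mainly separates genomes that consume the same number of preys.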

We ran experiments for three different setups: (i) the collective sensors setup, which represents our approach, (ii) the onboard sensors setup, in which robots do not share any information, and (iii) the ideal sensors setup, in which robots have sensors that let them sense preys up to a range of 3 m, which is equal to the range of the collective sensors. We ran 20 evolutionary runs in every setup, each lasting 500 generations. After all evolutionary runs had finished, we post-evaluated the genome that had obtained the highest fitness in each run with a total of 900 samples, 100 for each combination of numbers of robots and preys.

For our experiments we used JBotEvolver (Duarte et al., 2014), an open-source multirobot simulation platform and neuroevolution framework.


5 RESULTS AND DISCUSSION

5.1 Performance

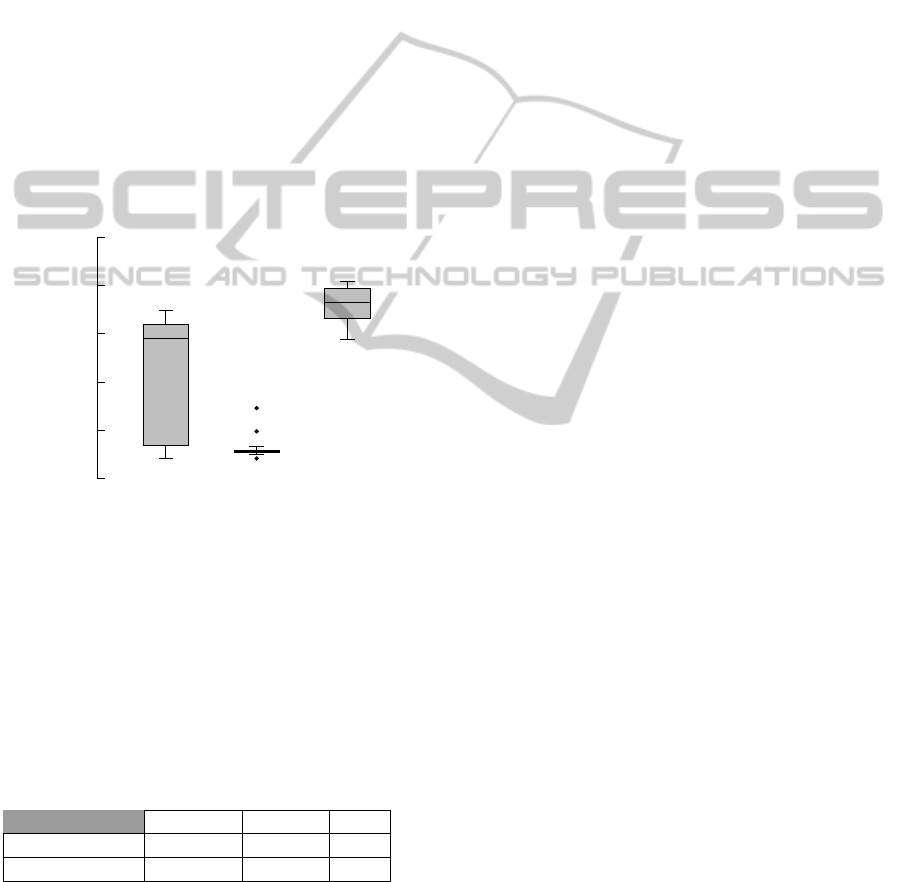

Figure 3 shows the average fitness scores of the highest-scoring controllers of the collective, onboard and ideal sensors setups. Each boxplot aggregates the results of 900 post-evaluation samples from 9 different configurations of number of robots and preys. The results show that the highest-performing controllers evolved in the collective sensors setup outperformed the controllers in the onboard sensors setup, and underperformed the controllers in the ideal sensors setup. The average fitness obtained by the highest-performing controllers in post-evaluation of the collective, onboard and ideal sensors setups is 0.43 ± 0.24, 0.12 ± 0.04 and 0.72 ± 0.07, respectively, which in terms of preys consumed corresponds to 5.37, 1.61 and 8.81, respectively (see Table 1).

[Figure 3: boxplots of fitness (y-axis, 0 to 1) for the Collective, Onboard and Ideal setups.]

Figure 3: Boxplot of the post-evaluated fitness scores achieved by the highest-scoring controllers in 20 evolutionary runs conducted in each of the setups. Each boxplot summarizes results from 900 post-evaluation samples, and comprises observations ranging from the first to the third quartile. The median is indicated by a bar, dividing the box into the upper and lower part. The whiskers extend to the farthest data points that are within 1.5 times the interquartile range, and the dots represent outliers.

Table 1: Mean fitness obtained and number of preys consumed by the highest-performing controllers in post-evaluation of the controllers evolved in the collective, onboard and ideal sensors setups.

                    Collective   Onboard   Ideal
  Fitness           0.43         0.12      0.72
  Preys consumed    5.37         1.61      8.81

When comparing the performance of the controllers evolved in the collective sensors setup with those evolved in the ideal sensors setup, the latter achieved a higher fitness. This can be explained by the fact that the collective sensors require at least one robot to detect a prey with its front prey sensors before that prey’s relative position can be shared with other nearby robots. In the ideal sensors setup, no communication is needed, since the prey sensors have a range of 3 m instead of 0.8 m, and detect preys in all directions. These differences between the collective and the ideal sensors translate into a mean difference in preys consumed in post-evaluation of 2.68 preys, which corresponds to 30%.

In order to evaluate the robustness, adaptivity and scalability of the evolved solutions, we evaluated the controllers from the highest-performing evolutionary run using collective sensors in an environment where the principal factors (the size of the arena and the numbers of preys and robots) were scaled by a factor of five, resulting in an arena of 22.3 m x 22.3 m (500 m²), 50 preys and 100 robots. The evolved controllers were able to disperse well, and to locate and consume an average of 44.6 preys in post-evaluation, three times the average number of preys consumed by the controller in the original setup (14.8). The number of preys consumed was only three times higher, and not five times higher, because the average distance from a robot to the walls is longer in the enlarged arena, and robots often need to trap preys in corners or along walls before they can catch them.
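The scaled setup can be checked with a short calculation. Dividing 50 preys and 100 robots by five identifies the original configuration as 10 preys and 20 robots, and the stated 500 m² shows that the factor of five applies to the arena’s area rather than its side. In the calculation below, $A$ denotes area, $P$ perimeter, and $\rho$ density; these symbols are introduced here for illustration. Both densities are preserved, while the wall length per unit area shrinks, which is consistent with the explanation that robots rely on walls and corners to trap preys:

```latex
\begin{aligned}
A_{\text{orig}} &= 10\,\text{m} \times 10\,\text{m} = 100\,\text{m}^2,
  \qquad A_{\text{scaled}} = 5 \times 100\,\text{m}^2 = 500\,\text{m}^2,
  \qquad \sqrt{500\,\text{m}^2} \approx 22.36\,\text{m} \\
\rho_{\text{prey}} &= \frac{10}{100\,\text{m}^2} = \frac{50}{500\,\text{m}^2} = 0.1\,\text{m}^{-2},
  \qquad \rho_{\text{robot}} = \frac{20}{100\,\text{m}^2} = \frac{100}{500\,\text{m}^2} = 0.2\,\text{m}^{-2} \\
\frac{P_{\text{orig}}}{A_{\text{orig}}} &= \frac{40\,\text{m}}{100\,\text{m}^2} = 0.4\,\text{m}^{-1},
  \qquad \frac{P_{\text{scaled}}}{A_{\text{scaled}}} \approx \frac{89.4\,\text{m}}{500\,\text{m}^2} \approx 0.18\,\text{m}^{-1} \\
\frac{44.6}{14.8} &\approx 3.01
\end{aligned}
```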

5.2 Behavior

The evolved behaviors can be divided into two sub-behaviors: a search behavior and a trap/consume behavior. The preys are faster than the robots, which means that the robots often have to trap a prey before they can catch it. A prey can become trapped if it moves close to a wall or into a corner while two or more robots are following it closely. Alternatively, three or more robots can trap a prey without the aid of walls by approaching from different directions.

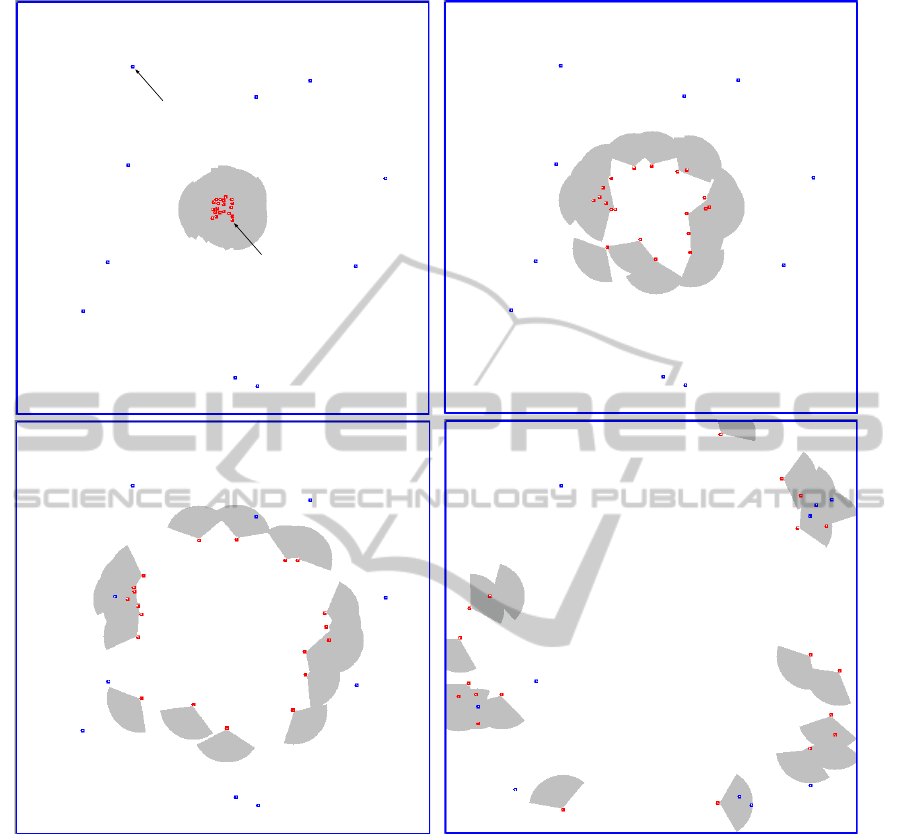

In the collective sensors setup, 15 out of 20 runs evolved the same type of behavior: at the start of a trial, the robots disperse in an outward circular motion in order to find preys. The robots then try to chase preys toward the corners or a wall, either in groups or alone. When a prey is consumed or escapes, the robots disperse again to cover a larger area. An example of this behavior can be seen in Figure 4.

The most significant difference in the behaviors is found between the extremes of robot density, that is, between samples where 20 robots are present and samples where only five robots are present. When the density of robots is high, they tend to disperse evenly, and when a prey is seen, they quickly aggregate with nearby robots at the location of the prey. On the other hand, when the density of robots is low, the aggregation near the prey is slower, since the robots tend to be distant from each other. This forces each robot either to try to trap a prey alone, or to try to keep the prey in view and wait until another robot gets within range of the collective sensors. In the other five evolutionary runs, the highest-performing controllers of the collective sensors setup display a behavior in which the robots move backwards. Moving backwards represents a poor local optimum in which evolution became stuck in early generations. In this case, the robots tend to have a relatively fixed motion pattern that, by chance, can cause preys to be trapped in corners and then consumed.

Figure 4: Example of a high-performing controller evolved in the collective sensors setup, in a sample with 20 robots and 10 preys. The robots start at the center of the arena (A), and then disperse in an outward circular motion in order to find preys (B). When preys are detected (C), the robots chase the preys toward the corners or walls, either in groups or alone (D).

In the highest-performing behaviors evolved in the onboard sensors setup, the robots start with a behavior similar to that of the collective sensors setup, dispersing in different directions to find preys. When a prey is found, the robots attempt to pursue it until another robot is able to detect the same prey with its onboard sensors. The highest-performing controllers of the ideal sensors setup display a different behavior. Since robots with ideal sensors are almost always capable of seeing a prey, they simply follow and try to consume the closest prey, without the sometimes extensive periods of searching.

Controllers from the collective sensors setup tend to have a performance closer to that of controllers from the ideal sensors setup when the number of preys is higher than the number of robots (Figure 5). Since the robots in the ideal sensors setup can sense preys at a distance of 3 m, they often tend to follow different preys, which makes it difficult to trap and consume them. The robots in the collective sensors setup, on the other hand, tend to follow fewer preys with more robots, due to their ability to share a prey’s location with a limited number of neighboring robots.

[Figure 5: boxplots of preys consumed (y-axis, 0 to 30) for the collective, onboard and ideal setups in each configuration, labeled 2p:5r, 5p:5r, 10p:5r, 2p:10r, 5p:10r, 10p:10r, 2p:20r, 5p:20r and 10p:20r, where p denotes preys and r robots.]

Figure 5: The number of preys consumed by the highest-performing controllers evolved in the onboard, collective and ideal sensors setups in different combinations of number of preys and robots. Each boxplot represents the average number of preys consumed in 100 post-evaluation samples.

6 CONCLUSIONS

In this paper, we explored a novel approach in which robots share readings from their sensors with neighboring robots to overcome the often limited capabilities of the individual robots’ onboard sensory hardware. We evaluated our approach in a predator-prey scenario, in which the estimated positions of detected preys are communicated to neighboring robots. Robots use the received estimates to compute the readings of their collective sensors, thereby effectively allowing robots to sense preys at greater distances and to converge on the preys more quickly.

Our experimental results showed that swarms using our approach achieve a higher performance than swarms in which the robots have to rely exclusively on their onboard sensors. In certain cases, the performance of swarms using collective sensors even approaches the performance of swarms in which robots are equipped with ideal sensors.

The concept of collective sensors proposed in this paper opens several new avenues of research. Observations made by different robots can be integrated to allow more precise information to be obtained about the environment. It might be beyond the capability of a single robot to, for instance, estimate the velocity, shape, or size of a particular object, but such estimates could be obtained by combining the sensory readings of multiple robots. Moreover, the sharing of sensory information potentially introduces redundancy in a swarm robotics system. Such redundancy could be used to detect faults, and in case of failure in onboard sensors, a robot could continue to contribute by relying on its collective sensors.

ACKNOWLEDGEMENTS

This work was supported by Fundação para a Ciência e a Tecnologia (FCT) under the grants SFRH/BD/76438/2011, PEst-OE/EEI/LA0008/2013, and EXPL/EEI-AUT/0329/2013.

REFERENCES

Bassler, B. L. (1999). How bacteria talk to each other: regulation of gene expression by quorum sensing. Current Opinion in Microbiology, 2(6):582–587.

Beckers, R., Holland, O. E., and Deneubourg, J.-L. (1994). From local actions to global tasks: Stigmergy and collective robotics. In 4th International Workshop on the Synthesis and Simulation of Living Systems (ALIFE IV), pages 181–189. MIT Press, Cambridge, MA.


Beer, R. D. and Gallagher, J. C. (1992). Evolving dynamical neural networks for adaptive behavior. Adaptive Behavior, 1(1):91–122.

Chandrasekaran, S. and Hougen, D. F. (2006). Swarm intelligence for cooperation of bio-nano robots using quorum sensing. In 2006 Bio Micro and Nanosystems Conference (BMN), pages 104–104. IEEE Press, Piscataway, NJ.

Cianci, C., Raemy, X., Pugh, J., and Martinoli, A. (2007). Communication in a swarm of miniature robots: The e-puck as an educational tool for swarm robotics. In Swarm Robotics, pages 103–115. Springer, Berlin, Germany.

Correll, N. and Martinoli, A. (2006). Collective inspection of regular structures using a swarm of miniature robots. In Experimental Robotics IX, pages 375–386. Springer, Berlin, Germany.

Duarte, M., Oliveira, S., and Christensen, A. L. (2011). Towards artificial evolution of complex behavior observed in insect colonies. In 15th Portuguese Conference on Artificial Intelligence (EPIA), pages 153–167. Springer, Berlin, Germany.

Duarte, M., Silva, F., Rodrigues, T., Oliveira, S. M., and Christensen, A. L. (2014). JBotEvolver: A versatile simulation platform for evolutionary robotics. In 14th International Conference on the Synthesis & Simulation of Living Systems (ALIFE), pages 210–211. MIT Press, Cambridge, MA.

Einolghozati, A., Sardari, M., and Fekri, F. (2012). Collective sensing-capacity of bacteria populations. In 2012 IEEE International Symposium on Information Theory (ISIT), pages 2959–2963. IEEE Press, Piscataway, NJ.

Floreano, D., Mitri, S., Magnenat, S., and Keller, L. (2007). Evolutionary Conditions for the Emergence of Communication in Robots. Current Biology, 17(6):514–519.

Fredslund, J. and Matarić, M. J. (2002). A general algorithm for robot formations using local sensing and minimal communication. IEEE Transactions on Robotics and Automation, 18(5):837–846.

Garnier, S., Jost, C., Gautrais, J., Asadpour, M., Caprari, G., Jeanson, R., Grimal, A., and Theraulaz, G. (2008). The embodiment of cockroach aggregation behavior in a group of micro-robots. Artificial Life, 14(4):387–408.

Gutiérrez, A., Campo, A., Dorigo, M., Amor, D., Magdalena, L., and Monasterio-Huelin, F. (2008). An open localization and local communication embodied sensor. Sensors, 8(11):7545–7563.

Mondada, F., Bonani, M., Raemy, X., Pugh, J., Cianci, C., Klaptocz, A., Magnenat, S., Zufferey, J.-C., Floreano, D., and Martinoli, A. (2009). The e-puck, a robot designed for education in engineering. In 9th IEEE International Conference on Autonomous Robot Systems and Competitions (ROBOTICA), pages 59–65. IPCB: Instituto Politécnico de Castelo Branco.

Nolfi, S. and Floreano, D. (2000). Evolutionary robotics: The biology, intelligence, and technology of self-organizing machines. MIT Press, Cambridge, MA.

Rodrigues, T., Duarte, M., Oliveira, S. M., and Christensen, A. L. (2014). What you choose to see is what you get: An experiment with learnt sensory modulation in a robotic foraging task. In 16th European Conference on the Applications of Evolutionary Computation (EvoApplications), pages 789–801. Springer, Berlin, Germany.

Schmickl, T. and Crailsheim, K. (2008). Trophallaxis within a robotic swarm: bio-inspired communication among robots in a swarm. Autonomous Robots, 25(1-2):171–188.

Stamatis, P. N., Zaharakis, I. D., and Kameas, A. D. (2009). A study of bio-inspired communication scheme in swarm robotics. In 7th International Conference on Practical Applications of Agents and Multi-Agent Systems (PAAMS), pages 383–391. Springer, Berlin, Germany.

Støy, K. (2001). Using situated communication in distributed autonomous mobile robotics. In 7th Scandinavian Conference on Artificial Intelligence (SCAI), pages 44–52. IOS Press, Amsterdam, The Netherlands.

Trianni, V., Groß, R., Labella, T. H., Şahin, E., and Dorigo, M. (2003). Evolving aggregation behaviors in a swarm of robots. In 7th European Conference on Artificial Life, pages 865–874. Springer, Berlin, Germany.

Turgut, A. E., Çelikkanat, H., Gökçe, F., and Şahin, E. (2008). Self-organized flocking with a mobile robot swarm. In 7th International Joint Conference on Autonomous Agents and Multiagent Systems (AAMAS), pages 39–46. IFAAMAS, Richland, SC.

Werfel, J., Petersen, K., and Nagpal, R. (2014). Designing collective behavior in a termite-inspired robot construction team. Science, 343(6172):754–758.
