Resource-aware State Estimation in Visual Sensor Networks with Dynamic Clustering

Melanie Schranz and Bernhard Rinner

Institute of Networked and Embedded Systems, Alpen-Adria-Universität Klagenfurt, Klagenfurt, Austria

Keywords: Visual Sensor Network, Resource Distribution, Wireless Communication, Negotiation Theory, Decentralized Processing.

Abstract:

Resource-awareness plays a key role in wireless sensor networks due to their limited capabilities in processing, storage and communication. In this paper we present a resource-aware cooperative state estimation facilitated by a dynamic cluster-based protocol in a visual sensor network (VSN). The VSN consists of smart cameras, which process and analyze the captured data locally. We apply a state estimation algorithm to improve the tracking results of the cameras. To keep the protocol lightweight, the final aggregation of the observations and the state estimation are performed only by the cluster head. Our protocol follows a market-based approach in which the cluster head is elected based on the available resources and a visibility parameter of the object reported by the cluster members. We show in simulations that our approach reduces the costs for state estimation and communication compared to a fully distributed approach. As resource-awareness is the focus of the cluster-based protocol, we accept a slight degradation in the accuracy of the object's state estimation, with a standard deviation of about 1.48 length units relative to the available ground truth.

1 INTRODUCTION

In wireless sensor networks (WSNs), cooperative control and distributed processing have opened up a wide research field. It is a popular topic in, e.g., mobile robots, unmanned vehicles, automated highway systems, and industrial process or environmental monitoring (Ren and Beard, 2005). WSNs consist of spatially distributed sensor nodes that retrieve information from the environment and react to it. The individual sensor nodes in such a network communicate wirelessly and act autonomously on the received information. Furthermore, the sensor nodes in a WSN are able to learn from their environment, especially by exchanging locally retrieved information among themselves. A typical characteristic of sensor nodes used in an ad-hoc WSN is their limited resources. They are usually battery powered and have a bounded communication range and limited on-board processing and storage capabilities.

Throughout this paper, we consider only networks consisting of visual sensors communicating wirelessly. Visual sensor networks (VSNs) consist of autonomous low-power image sensors with storage and communication capabilities as well as an on-board processing unit (Soro and Heinzelman, 2009). Thus, they are able to analyze and process the data locally. A typical task of a VSN is to identify and track objects for surveillance and identification applications.

The object is usually described by a state, including the position, the velocity or other characteristics of the object. A VSN with overlapping fields of view (FOVs), and thus multiple simultaneous observations of the same target, calls for aggregating these observations into a joint, improved observation. To compute a global state, the individual observations are exchanged among the cameras and aggregated locally. These aggregated observations serve as input to state estimation algorithms.

A typical approach for state estimation in a VSN is to forward the observations to a central unit. This unit aggregates the observations and performs a global state estimation algorithm. Another possibility is a fully distributed approach, in which each camera exchanges its observations with the other cameras in the VSN and performs global state estimation itself. In this paper we propose a lightweight resource-aware cluster-based protocol. Using a modified version of the market-based approach proposed by Esterle et al. (2014), we elect a cluster head responsible for


Schranz M. and Rinner B..

Resource-aware State Estimation in Visual Sensor Networks with Dynamic Clustering.

DOI: 10.5220/0005239200150024

In Proceedings of the 4th International Conference on Sensor Networks (SENSORNETS-2015), pages 15-24

ISBN: 978-989-758-086-4

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

state estimation incorporating the observations from its cluster members. The role of the cluster head is handed over to a cluster member if the available local resources decrease. Compared to the centralized approach, the cluster-based approach increases scalability and removes the risk of a single point of failure. Further, in resource-aware VSNs the fully distributed approach strains the cameras' communication and processing capabilities due to the large number of messages to be exchanged. In addition, the observations are processed simultaneously on every camera, which leads to redundant results on each camera.

This paper provides two scientific contributions with a focus on resource-awareness: (i) Using the market-based approach, we perform dynamic cluster management including cluster head election and handover. (ii) With evaluations in a simulation environment, we show the advantages in terms of resource-awareness of the proposed cluster-based protocol over the fully distributed approach. A state estimation algorithm is incorporated in both approaches.

The paper is organized as follows: Section 2 presents the related work on state estimation and clustering methods in VSNs. In Section 3 we define the system model for the cluster-based approach. Section 4 describes the underlying market-based approach as well as the state-space model. Section 5 presents the cluster-based protocol and the incorporation of the state estimation algorithm. In Section 6 we evaluate the proposed protocol and discuss the simulation results. Finally, Section 7 concludes the paper and gives an outlook on future work.

2 RELATED WORK

We focus on related work concerning cooperative state estimation and clustering methods in VSNs.

Cooperative state estimation is a well-known research topic in VSNs for improving the estimate of an object state. Approaches already exist for fully distributed systems with an underlying linear state-space model (Olfati-Saber and Sandell, 2008). Several authors (Ding et al., 2012), (Song et al., 2011) and (Soto and Roy-Chowdhury, 2009) propose the distributed Kalman-Consensus Filter (KCF) for distributed state estimation in camera networks. For a non-linear state-space model, there exist other filters such as the Extended Kalman Filter, the Particle Filter or the more recently proposed Cubature Kalman Filter. In (Bhuvana et al., 2013) we compared these three filters for distributed state estimation in VSNs. The best trade-off between computational complexity and estimation accuracy when modeling non-linear states is achieved with the Cubature Kalman Filter.

Nevertheless, in a VSN the limited resources of the cameras need to be managed accordingly. One approach to reduce the number of participating nodes, and thus save resources, is clustering. The literature describes two main strategies for clustering: (i) In a static cluster the nodes are assigned offline to a specific cluster and do not change over the network's lifetime (Chaurasiya et al., 2011), (Zahmati et al., 2007). (ii) In a dynamic approach clustering is triggered by events arising in the network as in (Medeiros et al., 2008), (Taj and Cavallaro, 2011), (Mallett, 2006) and (Qureshi and Terzopoulos, 2008). The authors of (Mallett, 2006) and (Qureshi and Terzopoulos, 2008) use the term grouping instead of clustering. Nevertheless, their task is to form clusters based on a qualifying parameter. In (Qureshi and Terzopoulos, 2008) this qualifying parameter describes the extrinsic parameters of a PTZ camera to examine the camera's coverage of the object of interest. Thus, they focus on distributing tracking performance among the cameras. Further, in (Medeiros et al., 2008) and (Qureshi and Terzopoulos, 2008) it is necessary to exchange various messages among the cluster members, e.g., to log in to or log off from the cluster. The coverage problem also plays a role in VSNs for air space surveillance as in (Hooshmand et al., 2013) and (Torshizi and Ghahremanlu, 2013), although there the clustering process is directed by a central unit. For resource management of the nodes in VSNs in particular, there are several ideas: (Chen et al., 2008) propose a handoff algorithm with adaptive resource management that automatically and dynamically allocates resources to objects with different priority ranks. Their resource management approach is to decrease the frame rate. A similar approach is taken in (Dieber et al., 2011), which focuses on coverage as well. In (Monari and Kroschel, 2010) a cluster head selects cluster members to deliver tracking responsibilities. Further, (Younis and Fahmy, 2004) propose HEED (hybrid, energy-efficient distributed clustering approach) for sensor networks. They select the cluster head based on the residual energy of the node as well as neighbor proximity. However, the termination of the clustering approach depends on the number of neighbors. A similar approach is realized in (SanMiguel and Cavallaro, 2014). However, the communication overhead produced by this clustering protocol is quite high and its use for battery-powered devices is questionable.

In contrast to the existing research directions, our objective is to establish a resource-aware approach for smart cameras in VSNs. We adapt a market-based approach proposed in (Esterle et al., 2014) to design a dynamic cluster-based protocol focusing on available resources and a visibility parameter in order to elect a single camera for state estimation. Contrary to (Song et al., 2011), we reduce the communication overhead by designing a lightweight cluster-based protocol with a minimal number of messages to be exchanged, and thus spare a node's resources.

3 SYSTEM MODEL

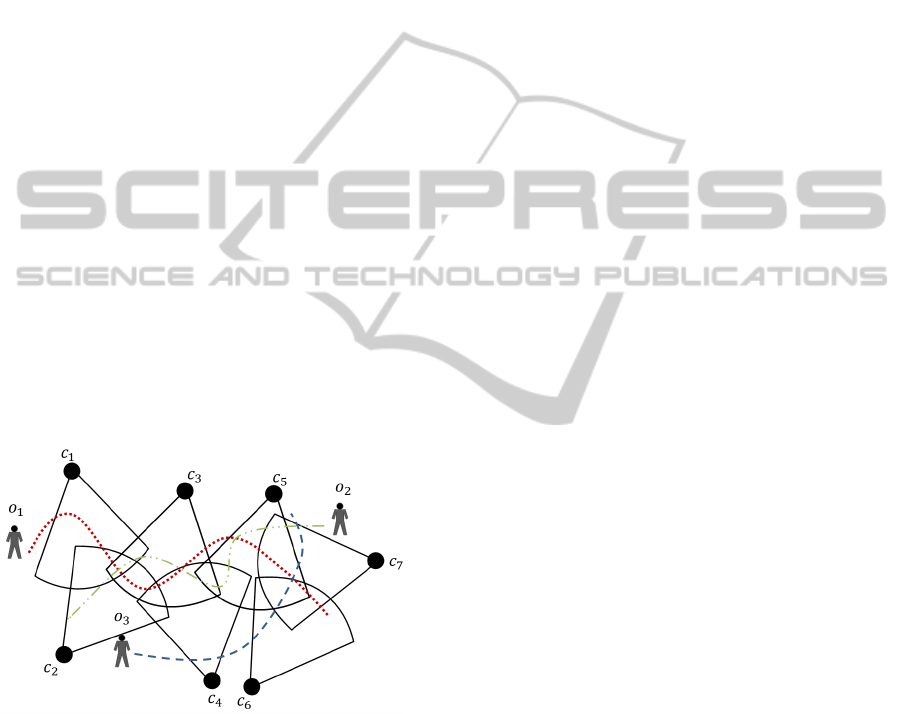

In this paper we consider a VSN consisting of a fixed set of calibrated smart cameras $c_i \in C$ as illustrated in Figure 1. The task of the VSN is to monitor the given environment and thus to identify and track one or more specific objects $o_k \in O$. We assume perfect object re-identification. Thus, each $c_i \in C$ is aware of the object's global identifier. As the cameras are calibrated, they are able to calculate the object's position on the ground plane by applying a homography to the object's image plane coordinates. The object position is referred to as the observation. Since the cameras in Figure 1 have overlapping FOVs, they are able to track a specific object $o_k$ simultaneously. This enables cooperative work in the VSN. Cooperation is achieved by exchanging the individual observations and processing them accordingly.

Figure 1: VSN with spatially distributed smart cameras performing multiple object tracking.

The objective of this paper is to present a resource-aware protocol for cooperative state estimation in a VSN by forming dynamic clusters, one per object in the scene. A cluster is a subset of all cameras in the network, $C^k \subset C$, whereby a camera $c_i^k$ is a cluster member of the cluster $C^k$ if the camera has the object $o_k$ in its FOV. Thus, this cluster is given as

$$C^k := \{\, c_i^k \in C \mid c_i \in C \wedge o_k \text{ in FOV} \,\} \qquad (1)$$

and $c_h^k$ represents the cluster head of $C^k$.
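As a minimal sketch of Equation 1, the cluster for an object can be derived from a per-camera visibility map. The names `build_cluster` and `visible_objects_by_camera` as well as the string identifiers are hypothetical and serve only as illustration; they are not part of the original protocol.

```python
def build_cluster(visible_objects_by_camera, object_id):
    """Return the set of camera ids that currently have `object_id` in their FOV.

    `visible_objects_by_camera` maps a camera id to the set of object ids
    that the camera currently detects (an assumed data layout).
    """
    return {
        camera_id
        for camera_id, objects in visible_objects_by_camera.items()
        if object_id in objects
    }


# Hypothetical example: three cameras, two objects.
fov = {"c1": {"o1"}, "c2": {"o1", "o2"}, "c3": set()}
cluster_o1 = build_cluster(fov, "o1")  # cameras c1 and c2 form the cluster for o1
```

Because membership depends only on visibility, the cluster changes automatically as the object moves through the network.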

The dynamics of the clustering are illustrated in Figure 2. The individual panels show subsequent time slots of a specific cluster $C^k$. The camera marked with an x is the cluster head $c_h^k$, which is responsible for estimating the state of the specific object $o_k$. Cameras with gray colored FOVs have the object in their FOV. Cameras without an x, but with a colored FOV, are the cluster members.

4 RESOURCE-AWARE STATE ESTIMATION

In our approach the cluster head is responsible for collecting the object's observations from all cluster members and for performing cooperative state estimation on them. For its election we propose a market-based approach based on the work in (Esterle et al., 2014). In that approach the tracking responsibility for a specific object is autonomously distributed among the cameras in the network. We use this market-based approach to elect a single camera as cluster head out of all cluster members. Contrary to the approach in (Esterle et al., 2014), all cluster members continuously track the objects in their FOV.

4.1 Market-based Dynamic Clustering

Within the market-based approach we have two different interacting components: a camera owning the object and cameras bidding for the object. In our case the owner is the cluster head $c_h^k \in C^k$, which is responsible for auction initiation. The bidders are the cluster members $c_i^k \in C^k$, whose task is to bid for an object.

The primary step of the market-based approach is the initiation of an auction by the owner for a specific object $o_k$. The auction initiation is necessary to elect the owner of the object $o_k$ for the next round. The possibilities for auction initiation are described in Section 4.1.2. Subsequently, the cluster members $c_i^k$ track the object in their FOV. Based on a set of parameters, they bid for the object with a utility $\alpha_i^k$, which they send to the owner. The composition of the utility $\alpha_i^k$ is discussed in Section 4.1.1.

In market-based clustering each camera (cluster head $c_h^k$ as well as cluster member $c_i^k$) tries to maximize its local utility $A_i$, which is given by

$$A_i = \sum_{k \in O} \alpha_i^k - p + r. \qquad (2)$$

The parameter $p$ describes all payments made and the parameter $r$ all payments received in this iteration.


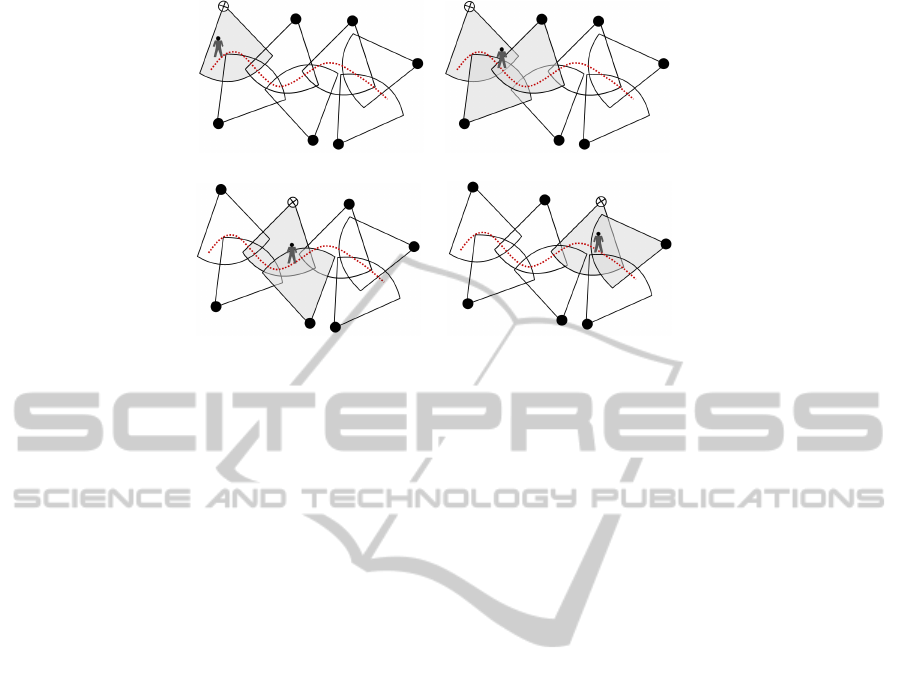


Figure 2: Dynamic clustering in a VSN. Figure 2(a) to Figure 2(d) show the clustering in specific time steps.

According to the Vickrey auction mechanism (Vickrey, 1961), the state estimation responsibility is transferred to the highest bidder, but at the price offered by the second-highest bidder. This mechanism encourages the cameras to bid their truthful valuations instead of speculating. If $A_i$ can be increased by selling $o_k$, the owner chooses the highest bidder to be the next owner of the object $o_k$ and thus to become the next cluster head $c_h^k$. The estimation process, and thus the dynamic cluster, "follows" the object's trajectory through the network, as illustrated in Figure 2.
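The second-price rule described above can be sketched as follows. This is an illustrative sketch only: the function name `vickrey_auction`, the dictionary layout, and the handling of a single bidder (who pays its own bid) are assumptions and are not specified in the paper.

```python
def vickrey_auction(bids):
    """Select the winner of a sealed-bid second-price (Vickrey) auction.

    `bids` maps a bidder id to its bid (here: the utility sent by a camera).
    Only positive bids are treated as valid. Returns (winner, price), or None
    if there is no valid bid. With a single valid bidder the price equals its
    own bid (an assumption made for this sketch).
    """
    valid = [(bidder, value) for bidder, value in bids.items() if value > 0]
    if not valid:
        return None
    # Sort by bid, highest first; ties keep insertion order.
    ranked = sorted(valid, key=lambda item: item[1], reverse=True)
    winner = ranked[0][0]
    price = ranked[1][1] if len(ranked) > 1 else ranked[0][1]
    return winner, price


# Hypothetical bids from three cluster members.
result = vickrey_auction({"c1": 0.4, "c2": 0.9, "c3": 0.7})
```

In this example the camera with bid 0.9 wins and the price is set by the second-highest bid of 0.7, so over- or underbidding brings no advantage to a bidder.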

4.1.1 Utility Definition

The utility $\alpha_i^k$ is used as the value to bid for an object $o_k$ in the FOV if an auction is initiated by the current cluster head $c_h^k$. The utility is an election criterion that can be defined with different parameters. In our approach, the utility is based on two parameters: the available resources on the camera and the confidence in the tracking performance.

Storage, communication and processing power are the most critical resources in VSNs. Especially in VSNs with heterogeneous camera systems, the individual distribution of resources can express how many tasks can be fulfilled by a specific camera. The available resources are denoted by $R_{\mathrm{total},i}$, normalized to the range $0 \le R_{\mathrm{total},i} \le 1$, and can describe any resources the designer considers relevant. As already mentioned, we typically pay attention to exchanging, processing and storing the observations retrieved by the visual sensor. The task of sensing is ignored in the resource model because it is executed continuously. The total available resources are described by

$$R_{\mathrm{total},i} = \sum \lambda r = \lambda_0 \sum \left[ r_{WL}^k \right] + \lambda_1 r_{E,i} + \lambda_2 r_{MEM,i} + \lambda_3 r_{COMM,i}. \qquad (3)$$

The parameter $\lambda = [\lambda_0, \ldots, \lambda_3]$ with $\sum \lambda = 1$ indicates the weights of the resources we pay attention to. The individual resources are denoted by i) $r_{WL}^k$ as the workload for each object $o_k$ in terms of processing power, ii) $r_{E,i}$ as the total energy available on the node, iii) $r_{MEM,i}$ as the total memory available on the node and iv) $r_{COMM,i}$ as the amount of communication performed. Each parameter is a normalized value between 0 and 1. For the cluster-based protocol presented in Section 5 and the corresponding evaluation in Section 6 we use solely the parameter $r_{E,i}$. Therefore, we set $\lambda_1 = 1$ and all other weights to 0. Thus, we only consider the total energy available on each camera $c_i$, as we focus on battery-powered smart cameras.
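The weighted sum in Equation 3 can be illustrated with a short sketch. The function name `total_resources` and the argument layout are hypothetical; the example reproduces the energy-only setting used in this paper ($\lambda_1 = 1$, all other weights 0).

```python
def total_resources(weights, workloads, r_energy, r_mem, r_comm):
    """Evaluate Equation 3 for one camera.

    weights   -- (lambda_0, lambda_1, lambda_2, lambda_3), expected to sum to 1
    workloads -- per-object workload values r_WL^k (each normalized to [0, 1])
    r_energy, r_mem, r_comm -- normalized energy, memory and communication terms
    """
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    l0, l1, l2, l3 = weights
    return l0 * sum(workloads) + l1 * r_energy + l2 * r_mem + l3 * r_comm


# Energy-only configuration: the result equals the normalized energy term.
r_total = total_resources((0.0, 1.0, 0.0, 0.0), [0.2, 0.3], 0.75, 0.5, 0.1)
```

With this configuration the remaining resource terms have no influence, so a camera's total resources track its remaining battery energy only.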

The other parameter used for calculating the utility is the local confidence in the tracking performance of the camera on the object $o_k$. The confidence, denoted by $\zeta_i^k$, can be obtained in various ways. One approach is to derive the confidence from the matched features when comparing the tracked object to a given model. Another possibility is to use $\zeta_i^k$ as a visibility parameter described as a binary value in $\{0, 1\}$. A 1 indicates that the object $o_k$ can be detected in the FOV of camera $c_i$. A 0 expresses that the object is not detected. Thus, $\zeta_i^k$ can be considered a membership function indicating whether the camera is part of the cluster or not. The utility $\alpha_i^k$ is then defined as

$$\alpha_i^k = \zeta_i^k \, R_{\mathrm{total},i}. \qquad (4)$$


The utility $\alpha_i^k$ is only positive, and thus a valid bid, if the object $o_k$ is visible to camera $c_i$.
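A minimal sketch of Equation 4 with the binary visibility parameter could look as follows. The function names `utility` and `is_valid_bid` are hypothetical and chosen for illustration only.

```python
def utility(object_visible, r_total):
    """Compute alpha_i^k = zeta_i^k * R_total,i with a binary visibility zeta."""
    zeta = 1 if object_visible else 0
    return zeta * r_total


def is_valid_bid(alpha):
    """A bid is valid only if the utility is strictly positive."""
    return alpha > 0


# A camera that sees the object and has 60% of its resources left bids 0.6.
alpha_visible = utility(True, 0.6)
# A camera that does not see the object has zero utility and cannot bid.
alpha_hidden = utility(False, 0.6)
```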

4.1.2 Auction Initiation

With the auction initiation by the cluster head we start the election of the cluster head for the next time step. The election is necessary to select a camera with sufficient resources and confidence in its tracking performance. If the cluster head sells the responsibility for object $o_k$, it hands over the object ID together with the current state, which serves as the initial state for processing by the new cluster head. If the cluster head can maximize its own utility by keeping $o_k$, it remains the cluster head for the next time step as well.

Selecting a proper time for the handover is essential to limit the communication overhead produced by the market-based approach itself. As can be seen in Figures 2(a) to 2(d), a new cluster head is elected after an auction initiation. We identified three possibilities for when an auction can be initiated: (i) $\alpha_h^k = 0$: The utility of $c_h^k$ is equal to zero. This is the worst case, in which either no resources are available at all or the object is no longer in the camera's FOV. (ii) $\alpha_h^k < \alpha_{thr}$: The utility of $c_h^k$ is smaller than a given threshold. (iii) We initiate an auction at regular intervals to check whether $\alpha_h^k < \alpha_i^k$, i.e., whether the utility of $c_h^k$ is smaller than the utility of a cluster member $c_i^k$.

For the cluster-based protocol presented in Section 5 and the corresponding evaluation in Section 6 we apply auction initiation condition (ii).
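The three auction initiation conditions can be summarized in a small decision function. This is a sketch under assumptions: the function name, the rule labels `"zero"`, `"threshold"` and `"periodic"`, and the `interval_elapsed` flag are hypothetical and not taken from the paper.

```python
def should_initiate_auction(alpha_head, rule, alpha_thr=0.0, interval_elapsed=False):
    """Decide whether the cluster head should start an auction.

    rule == "zero"      -- condition (i): the head's utility dropped to zero
    rule == "threshold" -- condition (ii): the head's utility is below alpha_thr
    rule == "periodic"  -- condition (iii): auctions are started at regular
                           intervals, signalled here by `interval_elapsed`
    """
    if rule == "zero":
        return alpha_head == 0
    if rule == "threshold":
        return alpha_head < alpha_thr
    if rule == "periodic":
        return interval_elapsed
    raise ValueError("unknown rule: " + str(rule))


# Condition (ii) with an assumed threshold of 0.3.
start = should_initiate_auction(0.2, "threshold", alpha_thr=0.3)
```

Condition (ii) subsumes condition (i) whenever the threshold is positive, since a utility of zero is then always below the threshold.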

4.2 State-space Model

The objective of a VSN is to detect and track objects. As we assume overlapping FOVs in the VSN, we can perform cooperative state estimation on the object's state. In our approach we choose a continuous state describing the position and the velocity of the object moving in the VSN. Equation 5 describes the state $s$ consisting of position $(x, y)$ and velocity $(\dot{x}, \dot{y})$ of an object $o_k$ determined by camera $c_i$ at time step $t$.

$$s_i^k(t) = [\, x_i^k(t),\; y_i^k(t),\; \dot{x}_i^k(t),\; \dot{y}_i^k(t) \,] \qquad (5)$$

The state is modeled in a linear state-space model. As an approach for cooperative state estimation, Song et al. (2011) designed a Kalman Consensus model for fully distributed processing in VSNs. Their approach serves as the reference system in Section 6. Furthermore, we apply the Kalman Consensus Filter of (Song et al., 2011) in the cluster-based protocol.

5 THE CLUSTER-BASED PROTOCOL

In our cluster-based protocol (cf. Algorithm 1) a camera can take on either of the following two roles for each object in its FOV.

Cluster Head $c_h^k$. The cluster head is an elected camera in the VSN. It has the task of collecting the observations and the bids from the cluster members. Further, it performs a state estimation algorithm and initiates an auction to trigger cluster head election if necessary.

Cluster Member $c_i^k$. First, a cluster member waits for a defined timeout to receive a request for auction initiation. After receiving the request, the cluster member sends the cluster head its observation and its bid for the corresponding object. If no request for auction initiation is received within the timeout, the camera assigns itself as cluster head. This procedure is referred to as the initialization phase of the cluster-based protocol.

Algorithm 1: The cluster-based protocol for a resource-aware state estimation.

    ObjectDetection();
    for all $o_k$ in FOV do
        role($k$) = role($\tilde{k}$);
        GetObservation();
        switch (role($k$)) do
            case ($c_h^k$):
                InitiateAuction();
                ReceiveInformation();
                PerformStateEstimation();
                if ($\alpha_i^k > \alpha_h^k$) then
                    Handover($s_i^k$);
                    role($\tilde{k}$) = $c_i^k$;
            case ($c_i^k$):
                WaitTimeout();
                if (ReceiveRequest()) then
                    SendInformation();
                else
                    role($\tilde{k}$) = $c_h^k$;
                if (ReceiveHandover()) then
                    role($\tilde{k}$) = $c_h^k$;

In each iteration of the cluster-based protocol in Algorithm 1, the initial task for all cameras in the VSN is the detection of the objects. As already mentioned, we assume a global identifier for each object known by the cameras in the VSN. With ObjectDetection() we identify all objects $o_k$ in the FOV of a camera. For each such object $o_k$, the camera first takes over its role from the previous time step, stored in role($\tilde{k}$). For all detected objects $o_k$ the camera retrieves its local observations $z_i^k$ in GetObservation() and calculates the corresponding utility $\alpha_i^k$.

If the camera is a cluster head $c_h^k$, it initiates an auction with InitiateAuction(). Thereafter, it receives the observations $z_i^k$ (in our case the object position on the ground plane) as well as the utility $\alpha_i^k$ from the cluster members with ReceiveInformation(). In the next routine, PerformStateEstimation(), it performs state estimation to optimize the object's state by integrating the received observations from the cluster members. If one of the received utilities $\alpha_i^k$ is larger than the local utility of the cluster head $\alpha_h^k$, in line with the condition $\alpha_i^k > \alpha_h^k$ in Algorithm 1, the cluster head performs a handover and transmits the current estimated state $s_i^k$ using Handover($s_i^k$) to the new cluster head $c_i^k$. Thereby, it assigns itself as cluster member.

If the camera is a cluster member $c_i^k$, it first waits for a defined timeout in WaitTimeout(). If $c_i^k$ receives a request from the cluster head in ReceiveRequest(), it transfers the object's observation $z_i^k$ as well as the corresponding utility $\alpha_i^k$ to the cluster head in SendInformation(). If, on the other hand, $c_i^k$ has not received a request, it assigns itself as cluster head $c_h^k$. Further, if $c_i^k$ receives a handover message in ReceiveHandover(), it assigns itself as cluster head $c_h^k$ and adopts its tasks in the next time step. With this self-nomination, multiple cluster heads may be assigned to a single object. Nevertheless, in the next iteration of the algorithm the auction initiation process elects a cluster head through the exchanged utilities. Thus, after the first bidding process the issue of multiple cluster heads is resolved. In this process, each self-nominated cluster head initiates an auction, in which the cluster head with the highest utility keeps its role.
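The role transitions of Algorithm 1 can be sketched as two small functions, one per role. This is a simplified illustration that ignores messaging and state estimation; the names `head_step`, `member_step`, `HEAD` and `MEMBER` are hypothetical.

```python
HEAD = "cluster_head"
MEMBER = "cluster_member"


def head_step(alpha_head, member_bids):
    """Role update of a cluster head after collecting bids.

    `member_bids` maps a member id to its utility. Returns the tuple
    (next_role, new_head_id); new_head_id is None if the head keeps its role.
    A handover happens only if the best bid is strictly larger than the
    head's own utility, as in the condition of Algorithm 1.
    """
    if member_bids:
        best_member = max(member_bids, key=member_bids.get)
        if member_bids[best_member] > alpha_head:
            return MEMBER, best_member
    return HEAD, None


def member_step(request_received, handover_received):
    """Role update of a cluster member after waiting for the timeout."""
    if handover_received:
        return HEAD   # elected by the previous cluster head
    if not request_received:
        return HEAD   # self-nomination: no auction request within the timeout
    return MEMBER     # bid was sent, the camera stays a member


# A head with utility 0.3 hands over to the member bidding 0.8.
next_role, new_head = head_step(0.3, {"c2": 0.8, "c5": 0.5})
```

The self-nomination branch in `member_step` is what can temporarily create several cluster heads for one object, which the next auction round resolves.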

5.1 Additional Settings to the Cluster-Based Protocol

To keep the dynamic cluster head allocation as lightweight as possible, cluster members do not know each other; only the cluster head knows them. Joining a cluster is straightforward. If a camera detects an object, it waits for a predefined timeout to receive an auction initiation message from an already existing cluster head. Since it is able to detect the object, it also has information about the object's state and the related utility. A camera leaves the cluster only if it is no longer able to detect the object in its FOV. In this case, the camera simply does not react to auction initiation messages. Thus, the cluster head does not receive any further information related to the object from this camera. Further, a camera failure or a camera being added to the network does not disturb the clustering protocol.

5.2 State Estimation

In this work we apply the Kalman Consensus Filter (KCF) proposed by (Song et al., 2011) as state estimator. The major steps are summarized in Algorithm 2. In the information form of the Kalman Filter, prediction and update are done in one step,

$$s_i^k(t+1) = A_i(t)\, s_i^k(t) + K_i^k(t)\left[ z_i^k(t+1) - H_i(t)\, s_i^k(t) \right] \qquad (6)$$

The observations of the cameras are denoted by $z_i^k(t+1)$ and are described identically to the state in Equation 5. Further, $A_i$ denotes the state transition for each time step $t$ and $H_i$ the observation matrix, which maps the true state space into the observed space. The Kalman gain $K_i^k$ defines how much the difference between the previous estimate and the current measurement influences the current estimate. Algorithm 2 summarizes the main steps in KCF state estimation. The inputs to PerformStateEstimation() are the current observations $z_i^k(t+1)$, the state $s_i^k(t)$ from the last time step $t$ and the corresponding covariance matrix $P_i^k(t)$. First, the information matrix and the information vector are built in BuildInformation(). The information vector $u_i$ in Equation 7 is a statistical generalization of the observation, whereas the information matrix $U_i$ in Equation 8 builds the covariance matrix expressing the uncertainty in the estimated values of the system state.

Algorithm 2: PerformStateEstimation().

    input : current observations $z_i^k(t+1)$ from all participants ($c_i^k$ and $c_h^k$); state $s_i^k(t)$ and covariance matrix $P_i^k(t)$ from the last time step $t$.
    output: updated state $s_i^k(t+1)$ and covariance matrix $P_i^k(t+1)$.
    BuildInformation();
    StateEstimation();
    StateUpdate();


$$u_i^k = \sum_i H_i^T R_i^{-1} z_i^k \qquad (7)$$

$$U_i^k = \sum_i H_i^T R_i^{-1} H_i \qquad (8)$$
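The sums in Equations 7 and 8 can be illustrated with a small dependency-free sketch. Several simplifications are assumptions made for this example and are not stated in the paper: all cameras share the same observation matrix `H` (here selecting only the position from the state) and the same inverse noise covariance `R_inv`, and the helper names are hypothetical.

```python
def transpose(m):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def build_information(observations, H, R_inv):
    """Accumulate u = sum(H^T R^-1 z) and U = sum(H^T R^-1 H) over all cameras."""
    Ht_Rinv = matmul(transpose(H), R_inv)   # shape: state_dim x obs_dim
    Ht_Rinv_H = matmul(Ht_Rinv, H)          # shape: state_dim x state_dim
    n = len(Ht_Rinv)
    u = [0.0] * n
    U = [[0.0] * n for _ in range(n)]
    for z in observations:
        contribution = matmul(Ht_Rinv, [[v] for v in z])  # column vector
        for r in range(n):
            u[r] += contribution[r][0]
            for c in range(n):
                U[r][c] += Ht_Rinv_H[r][c]
    return u, U


# Assumed setup: state [x, y, vx, vy], position-only observations,
# measurement noise variance 4 per axis (so R^-1 = 0.25 * I).
H = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
R_inv = [[0.25, 0.0], [0.0, 0.25]]
u, U = build_information([[4.0, 8.0], [8.0, 4.0]], H, R_inv)
```

In the cluster-based protocol only the cluster head would run such an aggregation, using the observations received from its cluster members.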

Within StateEstimation() the state is estimated as described in Equation 6. The state $s_i^k$ as well as the error covariance $P_i^k$ are updated in StateUpdate() with

$$\begin{aligned} P_i^k(t+1) &= A_i(t)\, M_i^k(t)\, A_i(t)^T + B_i(t)\, Q_i(t)\, B_i(t)^T \\ s_i^k(t+1) &= A_i(t)\, s_i^k(t) \end{aligned} \qquad (9)$$

where $M_i^k(t) = (P_i^k(t)^{-1} + U_i^k)^{-1}$. Finally, we return $P_i^k$ and $s_i^k$ as inputs for the iteration in the next time step.

6 SIMULATION AND EXPERIMENTAL RESULTS

We evaluate the proposed resource-aware state esti-

mation with a dynamic clustering approach by sim-

ulation studies. For these evaluations we use a new

VSN-Simulator (Schranz and Rinner, 2014), a graph-

ical simulator built in the game engine Unity3D. The

reason for developing a new simulator beside the ex-

isting ones, as presented in (Qureshi and Terzopoulos,

2008), was to create a tool that i) is easy in installa-

tion, use and extension of the simulation environment,

ii) can model multiple cameras, iii) having a simulator

close to real-time performance (up to now all 2 sec-

onds a measurement is made), and iv) getting a fancy

looking and thus motivating environment with mul-

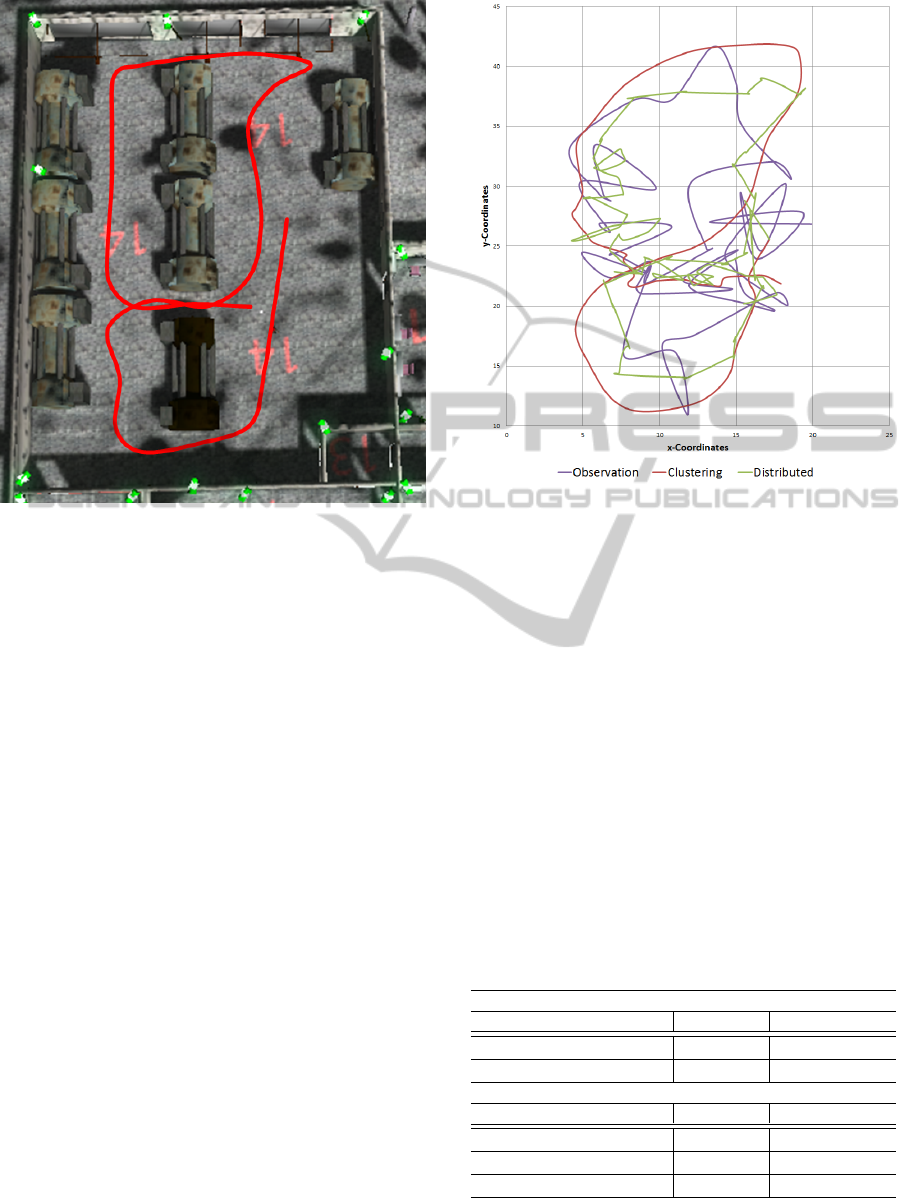

tiple GUI elements. The VSN-simulator provides 26

smart cameras set to 14 emulated office rooms. Figure

3 shows a screenshot of the simulator, with 3 chosen

camera views and buttons to interact with (add ob-

jects, delete objects, switch tracking of objects on/off

and save the observations). In the simulation environ-

ment the cameras have overlapping FOVs and the ob-

ject identification as well as all other processing task

concerning clustering and state estimation run locally

on the cameras. The corresponding scripts to the pro-

cessing tasks were written in C#. The tracking of the

object is realized by the so-called raycast method pro-

vided by Unity3D. In simple terms, if a camera has a

sustained sight on the object, it gets the object’s coor-

dinates.

Figure 3: A screenshot of the VSN-Simulator.

Figure 4 shows the scenario for the underlying simulation results together with the trajectory along which the object moves. In our evaluation we consider a single room of the VSN-Simulator equipped with 9 cameras. The object follows pre-defined waypoints. The coordinates on the object's trajectory are hereafter denoted as ground truth. Within a simulation environment, the cameras need individual observations of the object. Thus, each camera's tracking output is a random modification of the ground truth. In this evaluation the random modification has a standard deviation of 3 length units.
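One way to generate such perturbed observations is sketched below. The paper only states the standard deviation of 3 length units; the zero-mean Gaussian distribution, the independence of the x and y perturbations, and the function name `simulate_observation` are assumptions made for this illustration.

```python
import random


def simulate_observation(ground_truth, sigma=3.0, rng=random):
    """Return a noisy (x, y) observation of a ground-truth position.

    Each coordinate is perturbed by independent zero-mean Gaussian noise
    with standard deviation `sigma` (an assumed noise model).
    """
    x, y = ground_truth
    return (x + rng.gauss(0.0, sigma), y + rng.gauss(0.0, sigma))


# Each camera draws its own perturbation of the same ground-truth point.
rng = random.Random(1)
camera_outputs = [simulate_observation((10.0, 20.0), 3.0, rng) for _ in range(5)]
```

Passing a seeded `random.Random` instance makes a simulation run reproducible, which helps when comparing two estimation approaches on identical inputs.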

6.1 Performance Measure

A first evaluation concerns the accuracy of the object state obtained by the proposed resource-aware dynamic clustering protocol. To compare the cluster-based protocol with the fully distributed approach of (Song et al., 2011), both were implemented in the simulator using C#. For this evaluation we present the results for $t = 1, \ldots, 52$ measurement points on the object's trajectory of Figure 4. On average 5 of the 9 cameras have the object in their FOV. Thus, for the cluster-based protocol, we have 5 participants (consisting of cluster head and cluster members) on average as well.

Figure 5 shows the estimated x- and y-coordinates of a single object for the fully distributed approach and the cluster-based protocol, together with the camera output, which is a random modification of the ground truth of the observed object. The difference between the object states estimated by the filters in the cluster-based protocol and in the fully distributed approach is evaluated in Table 1. The distributed approach estimates the actual object state more accurately. Comparing the root-mean-square errors (RMSE) and the standard deviations σ in x and y, the distributed approach reduces the error to roughly half of that of the cluster-based protocol (between 49% and 62%, depending on the measure). The reason is that in the distributed approach the cameras exchange full states and error covariance matrices, whereas in the cluster-based protocol they exchange only single observations.

Table 1: Comparison of the RMSE and the standard deviation σ with respect to the ground truth of the tracked object for the fully distributed and the clustering approach.

Method                 RMSE x   RMSE y   σ x    σ y
Fully distributed      1.06     0.93     0.64   0.54
Clustering approach    1.71     1.88     1.24   1.08

Figure 4: A screenshot of the considered scenario in the VSN-Simulator including the object’s trajectory.
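The two accuracy measures of Table 1 can be illustrated with a minimal sketch. It assumes that σ denotes the standard deviation of the estimation error with respect to the ground truth, which is an interpretation and not stated explicitly here. The function names and the sample coordinates are hypothetical and are not taken from the simulation.

```python
import math


def rmse(estimates, truth):
    """Root-mean-square error between estimated and true coordinates."""
    errors = [e - g for e, g in zip(estimates, truth)]
    return math.sqrt(sum(err * err for err in errors) / len(errors))


def error_std(estimates, truth):
    """Population standard deviation of the estimation error (assumed meaning of sigma)."""
    errors = [e - g for e, g in zip(estimates, truth)]
    mean = sum(errors) / len(errors)
    return math.sqrt(sum((err - mean) ** 2 for err in errors) / len(errors))


# Hypothetical x-coordinates in length units, for illustration only.
truth_x = [0.0, 1.0, 2.0, 3.0]
estimate_x = [0.5, 0.5, 2.5, 2.5]

print(rmse(estimate_x, truth_x))
print(error_std(estimate_x, truth_x))
```

In the evaluation, both measures would be computed separately for the x- and the y-coordinate over the 52 measurement points.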

6.2 Resource Measure

Further evaluations concern the resource consumption of the VSN within the simulation environment. To this end, we record the exchanged messages as well as the operations for state estimation in the cluster-based protocol and the fully distributed approach. Both of the following evaluations include the initialization phase of the cluster-based protocol.

The simulator settings for this evaluation are as follows. The communication channel is wireless, and thus the messages are exchanged by broadcasting. We record the message exchange for both approaches in one office room with 9 cameras with overlapping FOVs (see Figure 4). Further, we consider t = 1, . . . , 52 measurement points, as for the performance measure in Section 6.1. As before, on average 5 of the 9 cameras have the object in their FOV.

Figure 5: Comparing the results of state estimation in the

cluster-based protocol, the fully distributed approach and

the camera observation.

6.2.1 Communication Effort

Table 2 lists the message types of both approaches together with their content. As can be seen, the message payloads of the fully distributed approach exceed those of the cluster-based protocol. The message payload is based on the standard C data types short int (2 Bytes) and float (4 Bytes). Table 3 shows the average number of messages exchanged per camera for 52 measurement points. Multiplying the number of messages of each type by its payload in Bytes from Table 2 yields, on average per camera, 165.48 Bytes for the distributed approach but only 14.14 Bytes for the cluster-based protocol.

Table 2: Message types in the distributed approach and the cluster-based protocol together with content and total payload size in Bytes.

Fully distributed approach
Message Type             Content                      Payload (Bytes)
ExchangeInformation()    $u_i^k$, $U_i^k$, $s_i^k$    80
ExchangeFinalState()     $s_i^k$, $P_i^k$             72

Cluster-based protocol
Message Type             Content                      Payload (Bytes)
InitiateAuction()        $c_h^k$                      2
SendInformation()        $z_i^k$, $\alpha_i^k$        8
Handover()               $s_i^k$, $P_i^k$             48


Table 3: Number of messages exchanged in the fully distributed and the clustering approach for 52 measurement points on average per camera.

Fully distributed approach
Message Type             Avg. Number per $c_i$
ExchangeInformation()    1.09
ExchangeFinalState()     1.09

Cluster-based protocol
Message Type             Avg. Number per $c_i$
InitiateAuction()        0.19
SendInformation()        0.94
Handover()               0.13
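The communication effort per camera can be reproduced from Tables 2 and 3 with the following minimal sketch. The dictionary and function names are hypothetical. With the rounded message counts of Table 3, the sketch gives about 165.68 Bytes for the fully distributed approach and 14.14 Bytes for the cluster-based protocol. The small difference to the reported 165.48 Bytes is presumably due to rounding of the averages in Table 3, which is an assumption.

```python
# Payload per message type in Bytes (Table 2).
PAYLOAD_BYTES = {
    "ExchangeInformation": 80,
    "ExchangeFinalState": 72,
    "InitiateAuction": 2,
    "SendInformation": 8,
    "Handover": 48,
}

# Average number of messages per camera (Table 3, rounded values).
AVG_MESSAGES = {
    "ExchangeInformation": 1.09,
    "ExchangeFinalState": 1.09,
    "InitiateAuction": 0.19,
    "SendInformation": 0.94,
    "Handover": 0.13,
}


def average_bytes_per_camera(message_types):
    """Sum of (average message count * payload) over the given message types."""
    return sum(AVG_MESSAGES[m] * PAYLOAD_BYTES[m] for m in message_types)


distributed = average_bytes_per_camera(["ExchangeInformation", "ExchangeFinalState"])
clustered = average_bytes_per_camera(["InitiateAuction", "SendInformation", "Handover"])

print(f"fully distributed: {distributed:.2f} Bytes")
print(f"cluster-based:     {clustered:.2f} Bytes")
```

Under these numbers the cluster-based protocol transmits less than a tenth of the payload of the fully distributed approach per camera.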

6.2.2 Computational Effort

In a further analysis, we compare the number of operations, i.e., the additions and multiplications, required by the two approaches. Table 4 shows the average number of additions and multiplications per camera for 52 measurement points. The simulation results in Table 4 show that the clustering approach needs far fewer operations on average.

Table 4: Number of operations for state estimation in the fully distributed approach and the cluster-based protocol for 52 measurement points on average per camera.

Method               Additions   Multiplications
Fully distributed    399.02      356.77
Cluster-based        82.42       89.45

For the cluster-based protocol, the average number of operations $N_o$ is given by

$$N_o^k(t) = t \cdot (82.42 + 89.45) \qquad (10)$$

with t as the measurement point index. The first number is the number of additions, the second the number of multiplications for state estimation and the clustering process.

In the distributed approach, each camera executes on average the same number of operations, given by

$$N_o^k(t) = t \cdot (399.02 + 356.77) \qquad (11)$$

again with t as the measurement point index. The first number is the number of additions, the second the number of multiplications.

In the cluster-based protocol the state estimation is calculated only on the cluster head. In contrast, in the fully distributed approach the operations are executed on each participating camera. Thus, the cluster-based approach considerably reduces processing and storage consumption compared to the fully distributed approach: according to Table 4, a camera in the cluster-based protocol executes on average 171.87 operations where the fully distributed approach requires 755.79, a reduction of about 77%.
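Equations (10) and (11) can be evaluated with a short sketch that uses the averages of Table 4. The function and dictionary names are hypothetical. Because both equations are linear in t, the relative saving of the cluster-based protocol does not depend on the measurement point index.

```python
# Average additions and multiplications per camera (Table 4).
ADDITIONS = {"fully_distributed": 399.02, "cluster_based": 82.42}
MULTIPLICATIONS = {"fully_distributed": 356.77, "cluster_based": 89.45}


def operations(method, t):
    """Average operation count up to measurement point t, following Eqs. (10) and (11)."""
    return t * (ADDITIONS[method] + MULTIPLICATIONS[method])


def relative_saving(t):
    """Fraction of operations saved by the cluster-based protocol at index t."""
    return 1.0 - operations("cluster_based", t) / operations("fully_distributed", t)


for t in (1, 26, 52):
    print(t, operations("cluster_based", t), operations("fully_distributed", t))

print(f"relative saving: {relative_saving(52):.1%}")
```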

7 CONCLUSION AND FUTURE WORK

In this paper we propose resource-aware state estimation with a cluster-based protocol for VSNs with limited capacities in storage, processing and communication. Our simulation results show that the accuracy of the state estimation in the cluster-based protocol is lower than in fully distributed systems. Nevertheless, the achieved reduction of communication and storage consumption confirms that the cluster-based protocol is a highly applicable resource-aware approach for VSNs. Thus, a trade-off between accuracy and resource-awareness exists for object tracking applications in low-power systems. The next step is to integrate the validated approach into a VSN of real cameras. As a low-cost development platform we use the PandaBoard¹ extended with a standard webcam.

An open issue concerns the assumption of object identification and of a global identifier for the object. This assumption is necessary for exchanging the observations among the cameras, so that each camera knows which object the incoming observations refer to. Nevertheless, we are working on relaxing the assumption of object identification. One approach is to define a much more complex state that also includes the features of the tracked object. Another approach could be to define an entirely new state consisting solely of object features, and thus to restructure the current state estimation into an identification problem.

REFERENCES

Bhuvana, V., Schranz, M., Huemer, M., and Rinner, B. (2013). Distributed object tracking based on cubature Kalman filter. In Signals, Systems and Computers, 2013 Asilomar Conference on, pages 423–427.

Chaurasiya, S. K., Pal, T., and Bit, S. D. (2011). An enhanced energy-efficient protocol with static clustering for WSN. In Proceedings of the International Conference on Information Networking, pages 58–63.

Chen, C.-H., Yao, Y., Page, D., Abidi, B., Koschan, A., and Abidi, M. (2008). Camera handoff with adaptive resource management for multi-camera multi-target surveillance. In Fifth IEEE International Conference on Advanced Video and Signal Based Surveillance, pages 79–86.

Dieber, B., Micheloni, C., and Rinner, B. (2011). Resource-aware coverage and task assignment in visual sensor networks. IEEE Transactions on Circuits and Systems for Video Technology, 21:1424–1437.

¹ http://pandaboard.org/


Ding, C., Song, B., Morye, A., Farrell, J., and Roy-Chowdhury, A. (2012). Collaborative sensing in a distributed PTZ camera network. IEEE Transactions on Image Processing, 21(7):3282–3295.

Esterle, L., Lewis, P. R., Yao, X., and Rinner, B. (2014). Socio-economic vision graph generation and handover in distributed smart camera networks. Transactions on Sensor Networks, 10(2):20:1–20:24.

Hooshmand, M., Soroushmehr, S. M. R., Khadivi, P., Samavi, S., and Shirani, S. (2013). Visual sensor network lifetime maximization by prioritized scheduling of nodes. Journal of Network and Computer Applications, 36:409–419.

Mallett, J. (2006). The Role of Groups in Smart Camera Networks. PhD thesis, Massachusetts Institute of Technology.

Medeiros, H., Park, J., and Kak, A. (2008). Distributed object tracking using a cluster-based Kalman filter in wireless camera networks. IEEE Journal of Selected Topics in Signal Processing, 2(4):448–463.

Monari, E. and Kroschel, K. (2010). Task-oriented object tracking in large distributed camera networks. In IEEE Seventh International Conference on Advanced Video and Signal Based Surveillance, pages 40–47.

Olfati-Saber, R. and Sandell, N. (2008). Distributed tracking in sensor networks with limited sensing range. In Proceedings of the American Control Conference, pages 3157–3162. IEEE.

Qureshi, F. and Terzopoulos, D. (2008). Smart camera networks in virtual reality. Proceedings of the IEEE, 96(10):1640–1656.

Ren, W. and Beard, R. (2005). Consensus seeking in multi-agent systems under dynamically changing interaction topologies. IEEE Transactions on Automatic Control, 50(5):655–661.

SanMiguel, J. and Cavallaro, A. (2014). Cost-aware coalitions for collaborative tracking in resource-constrained camera networks. IEEE Sensors Journal, PP(99):12.

Schranz, M. and Rinner, B. (2014). Demo: VSNsim - a simulator for control and coordination in visual sensor networks. In Eighth ACM/IEEE International Conference on Distributed Smart Cameras, page 3.

Song, B., Ding, C., Kamal, A. T., Farrell, J. A., and Roy-Chowdhury, A. K. (2011). Distributed camera networks. IEEE Signal Processing Magazine, 28(3):20–31.

Soro, S. and Heinzelman, W. (2009). A survey of visual sensor networks. Advances in Multimedia, 2009:21.

Soto, C. and Roy-Chowdhury, A. (2009). Distributed multi-target tracking in a self-configuring camera network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1486–1493.

Taj, M. and Cavallaro, A. (2011). Distributed and decentralized multi-camera tracking: a survey. IEEE Signal Processing Magazine, 28(3):46–58.

Torshizi, E. S. and Ghahremanlu, E. S. (2013). Energy efficient sensor selection in visual sensor networks based on multi-objective optimization. International Journal on Computational Sciences and Applications, 3:37–46.

Vickrey, W. (1961). Counterspeculation, auctions, and competitive sealed tenders. The Journal of Finance, 16(1):8–37.

Younis, O. and Fahmy, S. (2004). HEED: A hybrid, energy-efficient, distributed clustering approach for ad hoc sensor networks. IEEE Transactions on Mobile Computing, 3(4):366–379.

Zahmati, A. S., Abolhassani, B., Asghar, A. B. S., and Bakhtiari, A. S. (2007). An energy-efficient protocol with static clustering for wireless sensor networks. International Journal of Electronics, Circuits and Systems, 1(2):135–138.
