Emotion Recognition based on Heart Rate and Skin Conductance

Mickaël Ménard¹, Paul Richard¹, Hamza Hamdi¹, Bruno Daucé² and Takehiko Yamaguchi³

¹ LARIS, University of Angers, 62 Avenue Notre-Dame du Lac, Angers, France

² GRANEM, University of Angers, 4 Allée François Mitterrand, Angers, France

³ Faculty of Industrial Science and Technology, Tokyo University of Science, Tokyo, Japan

Keywords: Physiological Signal, Classification, Models, Emotions, Platform, SVM.

Abstract: Information on a customer’s emotional state concerning a product or an advertisement is a very important aspect of marketing research. Most studies have aimed at identifying emotions through speech or facial expressions. However, these two channels vary greatly with people’s talking habits and are not continuously available. Furthermore, bio-signal data is required in some cases in order to fully assess a user’s emotional state. We focused on recognising the six basic primary emotions proposed by Ekman using biofeedback sensors that measure heart rate and skin conductance. Participants were shown a series of 12 video-based stimuli that had been validated by a subjective rating protocol. Experimental results showed that the collected signals allow us to identify the user's emotional state with a good classification rate (85.57% for skin conductance and 89.58% for heart rate). In addition, a partial correlation between objective and subjective data was observed.

1 INTRODUCTION

Scientific research in the area of emotion extends back to the 19th century, when Charles Darwin (Darwin, 1872) and William James (James, 1884) proposed theories of emotion that continue to influence our way of thinking today. During most of the 20th century, research in emotion assessment gained popularity thanks to the realisation of the presence of feelings in social situations (Picard, 1995) (Croucher and Sloman, 1981) (Pfeifer et al., 1988). One of the major problems in emotion recognition is related to defining emotion and distinguishing between emotions. Ekman proposed a model which relies on universal emotional expressions to distinguish six primary emotions (joy, sadness, anger, fear, disgust, surprise) (Friesen and Ekman, 1978) (Cowie et al., n.d.).

The majority of research has concentrated on analysing speech or facial expressions in order to identify emotions (Rothkrantz and Pantic, 2003). Nonetheless, it is easy to mask a facial expression or to simulate a particular tone of voice (Pun et al., 2007). Additionally, these channels are not continuously available, since users are not always facing the camera or constantly speaking. We believe that the use of physiological signals, such as electrodermal activity (EDA), electromyography (EMG) or electrocardiography (ECG), is the key to solving these problems. For example, heart rate has proved effective for recognising anger, fear, disgust, and sadness in both young and elderly participants (Levenson, 2003).

Psychological researchers have applied diverse methods to examine emotion expression and perception in their laboratories, ranging from imagery inductions to film clips and static pictures. A particular set of video stimuli (Bartolini, 2011), developed by the University of Louvain, is the most widely used. It is based on a categorical model of emotion and contains various videos depicting, among others, mutilations, murders, attack scenes and accidents.

The purpose of our work is to recognise the six basic primary emotions proposed by Ekman, using widely available and low-cost biofeedback sensors that measure heart rate (Nonin Oxymeter (I-Maginer, n.d.)) and skin conductance (TEA, n.d.). The latter provides physiological data which reveals stress-related behaviours. We designed an experiment based on video clips from the University of Louvain.


Ménard M., Richard P., Hamdi H., Daucé B. and Yamaguchi T..

Emotion Recognition based on Heart Rate and Skin Conductance.

DOI: 10.5220/0005241100260032

In Proceedings of the 2nd International Conference on Physiological Computing Systems (PhyCS-2015), pages 26-32

ISBN: 978-989-758-085-7

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

The goal of the experiment is to elicit and identify emotions by studying physiological data collected from the heart-rate and skin conductance sensors. The video set was validated using an evaluation system based on the emotional reactions that viewers reported for the different clips. The results of this experiment contribute to the development of a real-time platform for emotion recognition using bio-signals (Hamdi, 2012). This platform is part of the EMOTIBOX project, developed jointly by three laboratories: LARIS, GRANEM and LPPL. It is intended to be part of a complex system, such as a job interview simulator, which establishes face-to-face communication between a human being and an Embodied Conversational Agent (ECA) (Saleh et al., 2011), (Hamdi et al., 2011), (Herbelin et al., 2004). In this context, we take into account the six primary emotions.

The rest of the paper is organised as follows: in the next section, we review work on the modelling and classification of emotions, with a particular emphasis on emotion assessment from physiological signals. In Section 3, we present the experimental study along with the data acquisition procedure and the experimental design used to recognise emotions related to videos. The results are presented in Section 4 and discussed in Section 5. The paper ends with a conclusion and provides ideas for future research.

2 RELATED WORK

2.1 The Modelling and Classification of Emotions

Theories of emotion have integrated other components such as cognitive and physiological changes, as well as action tendencies and motor expressions. Each of these components has different functions. Darwin postulated the existence of a finite number of emotions present in all cultures and theorised that they have an adaptive function (Darwin, 1872). Although several theoretical models of emotions exist, the most commonly used are the dimensional and categorical models (Mauss and Robinson, 2009). The categorical approach labels emotions in discrete categories, i.e. experiment participants have to select from a prescribed list of word labels, e.g. joy, sadness, surprise, anger, love, fear. This view was subsequently confirmed by Ekman, who divided emotions into two classes: the primary emotions (joy, sadness, anger, fear, disgust, surprise), which are natural responses to a given stimulus and ensure the survival of the species, and the secondary emotions, which evoke a mental image that correlates with the memory of primary emotions (Ekman, 1999). Later on, several other lists of basic emotions were adopted by different researchers (see Table 1 (Ortony and Turner, 1990)).

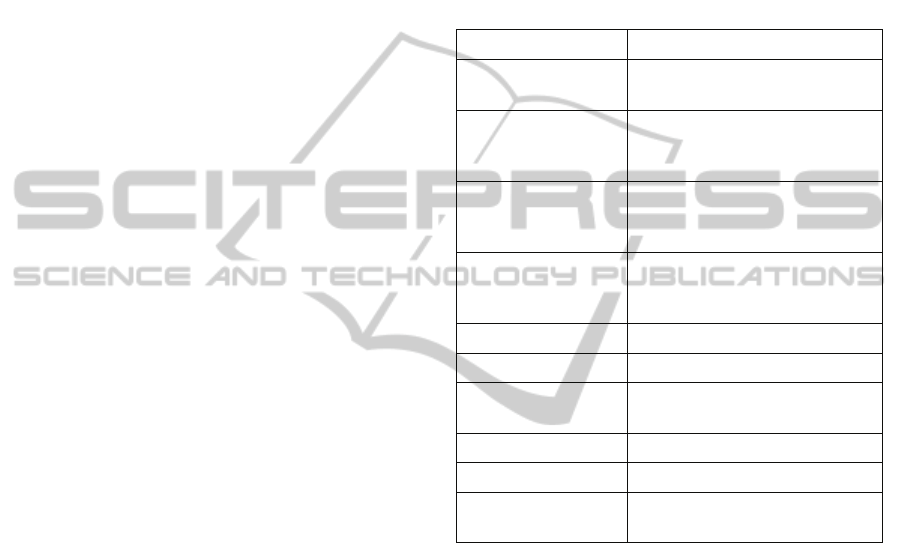

Table 1: Lists of basic emotions from different authors (Ortony & Turner, 1990).

| Reference | Basic emotions |
|---|---|
| (Ekman & Friesen, 1982) | Anger, disgust, fear, joy, sadness, surprise |
| (Izard, 1971) | Anger, contempt, disgust, distress, fear, guilt, interest, joy, shame, surprise |
| (Plutchik, 1980) | Acceptance, joy, anticipation, anger, disgust, sadness, surprise, fear |
| (Tomkins, 1984) | Anger, interest, contempt, disgust, distress, fear, joy, shame, surprise |
| (Gray, 1982) | Rage and terror, anxiety, joy |
| (Panksepp, 1982) | Expectancy, fear, rage, panic |
| (McDougall, 1926) | Anger, disgust, elation, fear, subjection, tender-emotion |
| (Mower, 1930) | Pain, pleasure |
| (James, 1884) | Fear, grief, love, rage |
| (Oatley & Johnson-Laird, 1987) | Anger, disgust, anxiety, happiness, sadness |

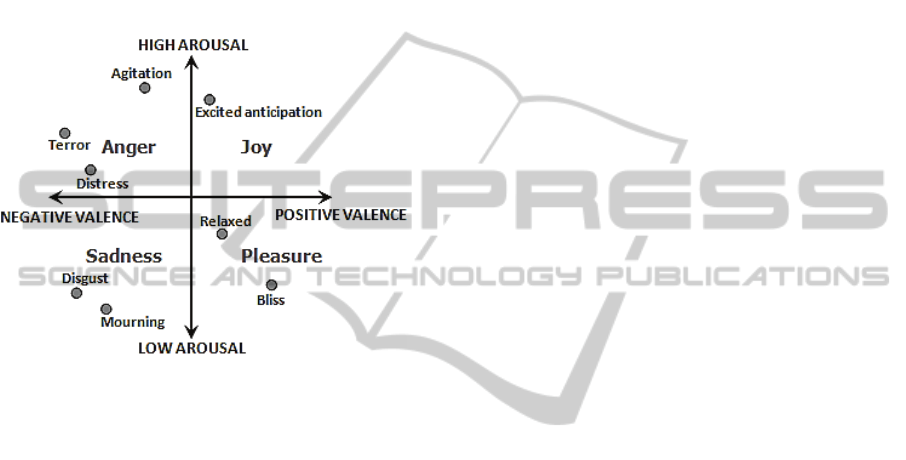

Another way of classifying emotions is to use multiple dimensions or scales based on arousal and valence (Figure 1). This method was advocated by Russell (Russell, 1979). The arousal dimension varies from “not-aroused” to “excited”, and the valence dimension goes from negative to positive. The different emotional labels can be plotted at various positions on a two-dimensional plane spanned by these two axes to construct a 2D emotion model. For example, happiness has a positive valence, whereas disgust has a negative valence; sadness has low arousal, while surprise triggers high arousal (Lang, 1995).

Each approach, whether based on discrete categories or continuous dimensions, has its advantages and disadvantages. In the categorical approach, each affective display is classified into a single category, so complex mental/affective states or blended emotions may be too difficult to classify (Barreto and Zhai, 2006).

Despite exhibiting advantages that cover this blind


spot, the dimensional approach, in which each stimulus can be placed on several continuous scales, has received a number of criticisms. Firstly, theorists working on discrete emotions, for example Silvan Tomkins, Paul Ekman, and Carroll Izard, have questioned the usefulness of the theory, arguing that the reduction of emotional space to two or three dimensions is extreme and results in a loss of information. Secondly, some emotions may lie outside the space of two or three dimensions (e.g., surprise) (Pantic and Gunes, 2010).

Figure 1: Illustration of the two-dimensional model based

on valence and arousal (Russell, 1979).

In our study, we aim to identify the six basic emotions proposed by Ekman and Friesen (Friesen and Ekman, 1978) (joy, anger, surprise, disgust, fear, sadness) because they are widely accepted in the study of human emotional states.

2.2 Emotion Recognition by Physiological Signals

The analysis of physiological signals is a possible approach to emotion recognition (Healey et al., 2001). Several types of physiological signals have been used to measure emotions, based on the recordings of electrical signals produced by the brain (EEG), the muscles (EMG) and the heart (ECG).

These measures include signals derived from the Autonomic Nervous System (ANS) of the human body (fear, for example, increases heartbeat and respiration rate) (Ang et al., 2010), the Electromyogram (EMG), which measures muscle activity, the Electrocardiogram (EKG or ECG), which measures heart activity, Electrodermal Activity (EDA), which measures the electrical conductivity of the skin as governed by organs such as the sweat glands, the Electrooculogram (EOG), which measures eye movement, and the Electroencephalogram (EEG) (Moradi and Khalili, 2009) (Ang et al., 2010).

3 EXPERIMENTAL STUDY

The experiment was designed to collect enough data to build models for each of our sensors. We followed a simple concept: one stimulus, corresponding to one particular emotion, is applied to the subject, and the sensors collect the physiological activity data associated with this emotion.

3.1 Stimulus Material

We selected visual and dynamic stimuli for this experiment in order to capture and model the evolution of our signals over time. Emotions such as “anger” and “fear” surface slowly, and dynamic stimuli proved to be more efficient during the experiment. Concretely, video clips stimulate two sensory modalities (visual and auditory), which is close to the conditions of a job interview simulator, a video game or an interactive virtual reality system.

Given that the majority of our subjects were of French origin, we had to find video clips in the French language. Several sets of videos exist in the literature (Carvalho et al., 2012) (Bednarski, March 29, 2012), and the one developed at the University of Louvain (Schaefer et al., 2004) was particularly interesting.

The total length of the experiment was then the most important criterion. At least two videos for each emotion were necessary to assess the signals accurately. Therefore, for our six emotions, we had twelve videos plus one neutral video between each emotion category. As we chose to limit the total experiment to 30 min so that it did not take too much of our subjects' time, we selected videos lasting 30 s to 2 min.

The neutral video is intended to ensure the independence of the variables by giving our subjects a break between each category of emotions. Subjects were also asked to grade each emotion felt on a scale from 1 to 10 after the video. This helped to qualify our experimental material according to the induced emotional stimulus.

3.2 Experimental Set-up

We collected data from 35 participants, mostly between 18 and 30 years old; 11.4% of the subjects were older than 30. The gender split was close to parity, with


54% men and 46% women. They were either students or employees of the University of Angers. The experiment took place in a room equipped with nothing other than the screen, with the shutters closed so that there were no distractions.

Only two sensors were used during the experiment: an EDA sensor (TEA, n.d.) and a heart-rate sensor (Nonin) (I-Maginer, n.d.). Since the Nonin sensor had previously been calibrated for use on the platform, building models with another classifier allowed us to validate the existing models.

The experiment proceeded as follows. The subject was first asked to read and sign a consent form to participate in the study. Then he/she received a copy of the instructions and was informed of the purpose of the study and the exact experimental procedure. Once the person sat in front of the screen, the researcher set up the sensors and checked that everything was functioning.

As soon as the subject was ready, the program started and the researcher left the room to ensure a genuine reaction from the subject. Twelve videos, with a neutral one between each emotion category, were displayed. After watching each video, the participant had to rate it on the following dimensions: joy, anger, surprise, disgust, fear, sadness.

The duration of the transitory window between two videos, which triggers the automatic affect analysis, depends on the sensory modality and the targeted emotion. A study published by Levenson and his co-workers (Levenson, 1988) demonstrated that the duration of emotional displays varies from 0.5 to 4 seconds. However, some researchers suggested using a different window length that depends on the modality, e.g., 2-6 seconds for speech, and 3-15 seconds for bio-signals (Kim, 2007). In our experiment, each trial included a 5-second rest between video presentations, and a 15-second rating interval during which the video was not displayed on the screen. In this interval, the participant indicated on a 10-point scale the degree to which he/she felt each emotion, by pressing a visual analogue scale (0-10), with 0 indicating not at all and 10 a great amount. This procedure allowed the participant to specify multiple labels for a given video.

At the end of the experiment, the researcher came back into the room to check the subject’s emotional state, remove the sensors and collect feedback.

4 RESULTS

4.1 Affective Rating of Videos

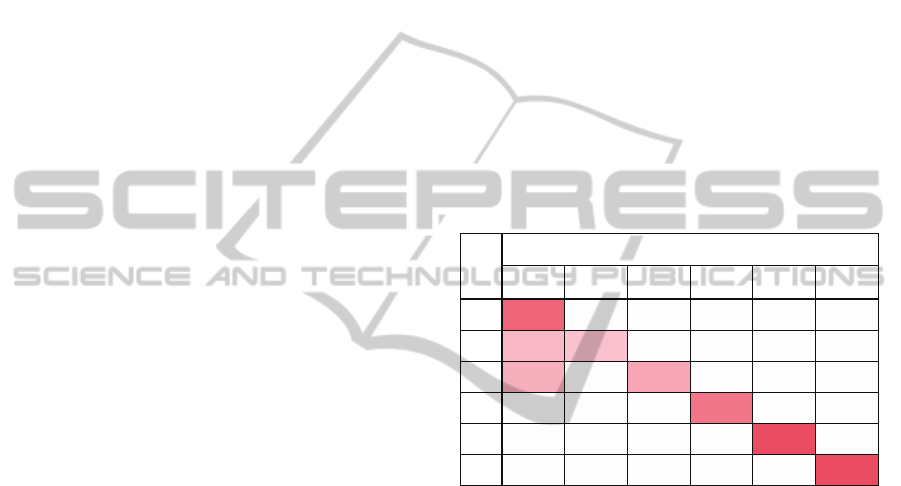

Table 2 shows the level of emotions perceived by the participants from the videos. For example, the participants rated the two videos related to joy, on average, as 86.9% joy and 8.3% sadness. Similarly, the videos related to anger were rated as 38.9% anger and 30.6% disgust. We can see that subjects recognised every emotion except Anger (38.9%) and Fear (26.1%). This could be attributed to the general atmosphere of the stimuli (horrifying, appalling and uneasy) that generate such emotions: it is easy to mix up Fear and Anger with Disgust. These results thus partially validated the selected videos as being representative of the studied emotions.

Table 2: Confusion matrix obtained as the result of the classification of the videos regarding each emotion.

| IN \ OUT | An | Di | Fe | Jo | Su | Sa |
|---|---|---|---|---|---|---|
| An | 38.9% | 30.6% | 6.2% | 1.6% | 5.2% | 17.5% |
| Di | 0.4% | 63.5% | 15.8% | 8.4% | 10.4% | 1.4% |
| Fe | 1.5% | 47.4% | 26.1% | 5.0% | 18.1% | 1.9% |
| Jo | 0.0% | 1.0% | 0.0% | 86.9% | 3.8% | 8.3% |
| Su | 10.8% | 6.5% | 21.6% | 8.4% | 51.0% | 1.6% |
| Sa | 15.5% | 27.9% | 2.5% | 1.0% | 2.8% | 50.2% |

Abbreviations: Anger (An), Disgust (Di), Fear (Fe), Joy (Jo), Surprise (Su), Sadness (Sa)
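The reading of Table 2 given in the text can be checked with a few lines of Python. The sketch below stores the matrix with the target emotion (IN) as rows and the rated emotion (OUT) as columns, then lists the targets whose own rating stays below 50% and the most-rated emotion for each target. The 50% cut-off and the names `RATINGS`, `weakly_recognised` and `dominant` are illustrative assumptions, not part of the original study.

```python
# Table 2: target emotion (IN) -> mean rating in % for each rated emotion (OUT).
LABELS = ["An", "Di", "Fe", "Jo", "Su", "Sa"]
ROWS = {
    "An": [38.9, 30.6, 6.2, 1.6, 5.2, 17.5],
    "Di": [0.4, 63.5, 15.8, 8.4, 10.4, 1.4],
    "Fe": [1.5, 47.4, 26.1, 5.0, 18.1, 1.9],
    "Jo": [0.0, 1.0, 0.0, 86.9, 3.8, 8.3],
    "Su": [10.8, 6.5, 21.6, 8.4, 51.0, 1.6],
    "Sa": [15.5, 27.9, 2.5, 1.0, 2.8, 50.2],
}
RATINGS = {target: dict(zip(LABELS, values)) for target, values in ROWS.items()}

# Hypothetical cut-off: a target counts as "recognised" if at least half
# of the rating mass falls on the intended emotion.
THRESHOLD = 50.0

# Targets whose diagonal entry is below the cut-off.
weakly_recognised = [t for t in LABELS if RATINGS[t][t] < THRESHOLD]

# For each target, the emotion that received the highest mean rating.
dominant = {t: max(RATINGS[t], key=RATINGS[t].get) for t in LABELS}

print("Below threshold:", weakly_recognised)
print("Dominant rating for Fear videos:", dominant["Fe"])
```

Under this assumed cut-off, the two targets that fall short are Anger and Fear, and the Fear videos are rated mostly as Disgust, which is consistent with the confusion described in the text.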

4.2 Objective Data Analysis

After checking the results of the subjective assessment by the participants, we turned our attention to evaluating the capacity to correctly estimate the emotional state of the user from the sensor data. The subjects were given tasks that should trigger emotional reactions. The skin conductivity and the heart rate, measured respectively by the TEA sensor and the Nonin sensor, were recorded at a frequency of 2 Hz. Each measure corresponds to one video (and its associated emotion), with the heart rate and the skin conductivity. Measures for the neutral video were taken only once.

We decided to first process the collected signals with a Fourier Transform to obtain the Fourier coefficients, and then to classify these coefficients with a Support Vector Machine (SVM) (Lin and Chang, 2011).

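For each recording $i$ ($i = 1, \dots, n$), the function $X_i$ is supposed to belong to $L^2(\mathbb{R})$. We then introduce $(\varphi_j)_{j \geq 1}$, the Fourier basis of $L^2(\mathbb{R})$, i.e. for $j = 1, 2, \dots$:

$$\varphi_1(t) = 1, \tag{1}$$

$$\varphi_{2j}(t) = \sqrt{2}\cos(2\pi j t), \tag{2}$$

$$\varphi_{2j+1}(t) = \sqrt{2}\sin(2\pi j t). \tag{3}$$

For each $i$, the function $X_i$ can be decomposed into a Fourier series (Bercher & Baudoin, 2001):

$$X_i = \sum_{j \geq 1} a_{i,j}\,\varphi_j, \tag{4}$$

with

$$a_{i,j} = \int_{\mathbb{R}} X_i(t)\,\varphi_j(t)\,dt. \tag{5}$$

In practice, the Fourier coefficients $a_{i,j}$ are computed by Fast Fourier Transform (FFT) for $j = 1, \dots, k$, where $k$ is chosen by the user.

For each of our recordings, we have $X_i^k = (a_{i,1}, \dots, a_{i,k})$, the vector of the first $k$ Fourier coefficients corresponding to $X_i$.

By observing the set $X_i^k$, $i = 1, \dots, n$, we wish to build a discriminative rule allowing us to predict the label associated with the next recording $X_{n+1}$.

We build an input table of size $n \times (k+1)$, with the recordings $i$ in rows and, in columns, for each $i$: the emotion $Y_i$, then the computed Fourier coefficients $a_{i,1}, \dots, a_{i,k}$, i.e.:

$$\begin{pmatrix} Y_1 & a_{1,1} & \cdots & a_{1,k} \\ \vdots & \vdots & \ddots & \vdots \\ Y_n & a_{n,1} & \cdots & a_{n,k} \end{pmatrix}$$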

We then used this table as an input for the SVM to build a model. With the two SVM parameters set to 1.0 and 1.0×10^[…], the

best classification rate was computed for both

signals at =200 (33% of the whole dataset was

put aside for the validation). For the skin

conductivity, the computed classification rate was

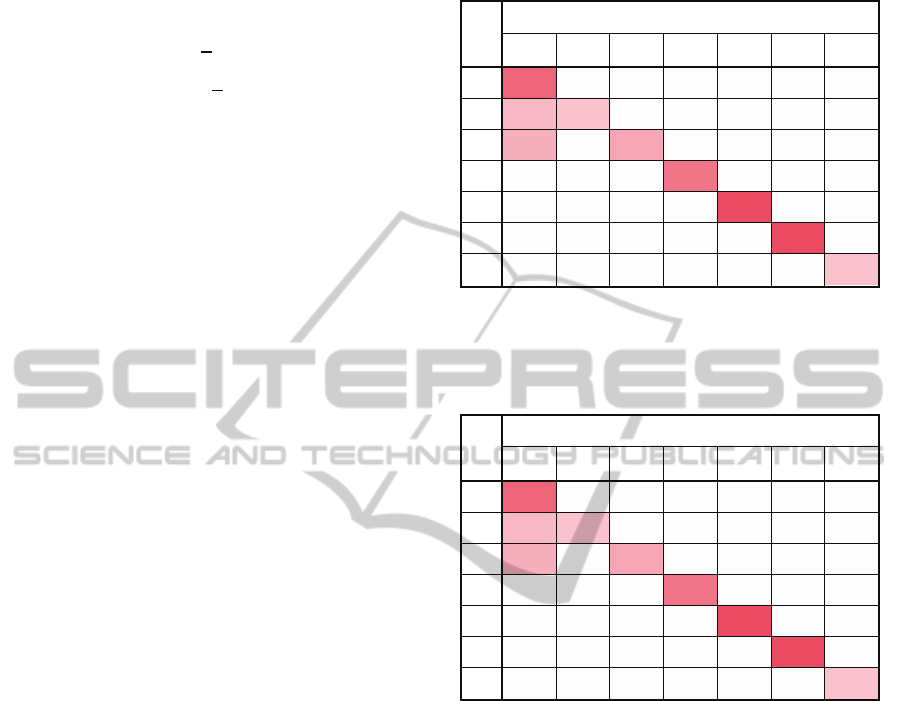

85.57%. The table 3 is the confusion matrix related.

For the heart rate, the computed classification

rate was 89.58%, to which the confusion matrix is

shown on table 4. Classification rates (85% and

89%) were sufficient enough for further exploitation.

In another word, any model built under a 70%

classification rate would direct the exploitation to an

incorrect result.
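The feature-extraction step described in this section (projecting each recording onto the first Fourier basis functions and placing the emotion label in front of the coefficients) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the paper computes the coefficients by FFT, whereas the sketch uses a direct numerical projection onto the basis (1, √2 cos(2πjt), √2 sin(2πjt)) with time rescaled to [0, 1). The function names and the synthetic signal are hypothetical.

```python
import math


def fourier_basis(j, t):
    """Value at time t in [0, 1) of the j-th basis function (j = 1, 2, ...).

    j = 1 is the constant function; even j gives a cosine and odd j > 1
    gives a sine, both at frequency j // 2.
    """
    if j == 1:
        return 1.0
    freq = j // 2
    angle = 2.0 * math.pi * freq * t
    if j % 2 == 0:
        return math.sqrt(2.0) * math.cos(angle)
    return math.sqrt(2.0) * math.sin(angle)


def fourier_features(samples, k):
    """First k Fourier coefficients of a uniformly sampled recording.

    The integral of X(t) * phi_j(t) is approximated by a Riemann sum
    over the samples, with the recording rescaled to the interval [0, 1).
    """
    n = len(samples)
    return [
        sum(x * fourier_basis(j, m / n) for m, x in enumerate(samples)) / n
        for j in range(1, k + 1)
    ]


def build_row(label, samples, k):
    """One row of the n x (k + 1) input table: label, then k coefficients."""
    return [label] + fourier_features(samples, k)


# Synthetic recording (not experimental data): a constant level of 3
# plus one cosine component of amplitude 2 on the normalised basis.
n_samples = 64
signal = [
    3.0 + 2.0 * math.sqrt(2.0) * math.cos(2.0 * math.pi * m / n_samples)
    for m in range(n_samples)
]
row = build_row("Jo", signal, k=5)
print(row)
```

Stacking one such row per recording gives a table that a classifier such as an SVM can take as input; the direct projection is slower than an FFT but makes the link with the basis functions explicit.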

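Table 3: Confusion matrix for the skin conductance data classification.

| IN \ OUT | An | Di | Fe | Jo | Su | Sa | Ne |
|---|---|---|---|---|---|---|---|
| An | 16 | 0 | 0 | 0 | 0 | 0 | 0 |
| Di | 7 | 6 | 0 | 0 | 0 | 0 | 0 |
| Fe | 8 | 0 | 9 | 0 | 0 | 0 | 0 |
| Jo | 0 | 0 | 0 | 14 | 0 | 0 | 0 |
| Su | 0 | 0 | 0 | 0 | 19 | 0 | 0 |
| Sa | 0 | 0 | 0 | 0 | 0 | 19 | 0 |
| Ne | 0 | 0 | 0 | 0 | 0 | 0 | 6 |

Abbreviations: Anger (An), Disgust (Di), Fear (Fe), Joy (Jo), Surprise (Su), Sadness (Sa), Neutral (Ne)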

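Table 4: Confusion matrix for the heart-rate data classification.

| IN \ OUT | An | Di | Fe | Jo | Su | Sa | Ne |
|---|---|---|---|---|---|---|---|
| An | 22 | 0 | 0 | 0 | 0 | 0 | 0 |
| Di | 5 | 13 | 0 | 0 | 0 | 0 | 0 |
| Fe | 10 | 0 | 12 | 0 | 0 | 0 | 0 |
| Jo | 0 | 0 | 0 | 27 | 0 | 0 | 0 |
| Su | 0 | 0 | 0 | 0 | 19 | 0 | 0 |
| Sa | 0 | 0 | 0 | 0 | 0 | 25 | 0 |
| Ne | 0 | 0 | 0 | 0 | 0 | 0 | 11 |

Abbreviations: Anger (An), Disgust (Di), Fear (Fe), Joy (Jo), Surprise (Su), Sadness (Sa), Neutral (Ne)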
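The reported classification rates can be recovered from Tables 3 and 4 as the sum of the diagonal divided by the total number of classified recordings. The short Python sketch below does this and also gives a per-class rate; the function names are illustrative, and the rows are assumed to correspond to the true emotion (IN) and the columns to the predicted one (OUT).

```python
LABELS = ["An", "Di", "Fe", "Jo", "Su", "Sa", "Ne"]

# Table 3 (skin conductance) and Table 4 (heart rate), rows = IN, columns = OUT.
SKIN_CONDUCTANCE = [
    [16, 0, 0, 0, 0, 0, 0],
    [7, 6, 0, 0, 0, 0, 0],
    [8, 0, 9, 0, 0, 0, 0],
    [0, 0, 0, 14, 0, 0, 0],
    [0, 0, 0, 0, 19, 0, 0],
    [0, 0, 0, 0, 0, 19, 0],
    [0, 0, 0, 0, 0, 0, 6],
]
HEART_RATE = [
    [22, 0, 0, 0, 0, 0, 0],
    [5, 13, 0, 0, 0, 0, 0],
    [10, 0, 12, 0, 0, 0, 0],
    [0, 0, 0, 27, 0, 0, 0],
    [0, 0, 0, 0, 19, 0, 0],
    [0, 0, 0, 0, 0, 25, 0],
    [0, 0, 0, 0, 0, 0, 11],
]


def classification_rate(matrix):
    """Share of recordings on the diagonal, i.e. correctly classified."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_class_rate(matrix):
    """For each true emotion, the share of its recordings classified correctly."""
    return {
        label: matrix[i][i] / sum(matrix[i])
        for i, label in enumerate(LABELS)
    }


for name, matrix in [("skin conductance", SKIN_CONDUCTANCE), ("heart rate", HEART_RATE)]:
    print(name, f"{100 * classification_rate(matrix):.2f}%", per_class_rate(matrix))
```

The overall rates agree with the reported 85.57% and 89.58% up to rounding. The per-class view shows that all errors come from Disgust and Fear recordings being classified as Anger, for both sensors.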

4.3 Synthesis

This work aimed to add new sensors to an emotion recognition platform. The previous analyses enabled us to integrate an EDA sensor and to improve the efficiency of the heart-rate sensor. The SVM let us build new models that are more robust and more accurate for our set of emotions. A new C++ plug-in was created for the platform in order to process the data collected by these sensors. It works as a solver in which the SVM (integrated through the LibSVM library (Lin and Chang, 2011)) classifies the data according to the previously built model and outputs the emotional state of the subject.


5 DISCUSSION

Several points of this work could be discussed. First of all, the platform targets fields such as marketing and medical research, which means its users could come from the general population. Yet, our experiments were conducted on subjects aged from 20 to 30 years old. Models built for this population are certainly not accurate for subjects aged between 40 and 50 years old. What is more, most of the subjects came because they were intrigued by the experiment: half of the sample had a psychology background, making them interested in research concerning emotions. These people, knowing the research topic, might introduce a certain social desirability bias. The experimental conditions also play an important role in how people react: subjects might not have the same physiological reactions at different times of the day.

6 CONCLUSIONS

Today, emotion recognition from physiological signals collected by easy-to-handle, embedded electronic equipment is seen as an alternative way of discriminating between different human feelings. In this paper, we showed the use of different types of physiological signals to assess emotions. Electrodermal activity was collected by an EDA sensor (TEA), and heart activity was measured through a biofeedback sensor (Nonin). An experiment involving 35 subjects was carried out to identify the physiological signals corresponding to the six basic emotions proposed by Ekman (joy, sadness, fear, surprise, anger, disgust). Participants were exposed to a set of 12 videos (2 for each emotion). The video set was partially validated through a subjective rating system. In future work, we will focus on confirming the benefits of multimodality for emotion recognition with bio-signals, as well as on integrating an electrocardiogram (ECG) sensor, which could make the system faster.

REFERENCES

Ang, K., Wahab, A., Quek, H. & Khosrowabadi, R., 2010. EEG-based emotion recognition using self-organizing map for boundary detection. In: I. C. Society, ed. Proceedings of the 2010 20th International Conference on Pattern Recognition. Washington, DC, USA: s.n., p. 4242–4245.

Barreto, A. & Zhai, J., 2006. Stress detection in computer

users through noninvasive monitoring of physiological

signals. In: Biomedical Science Instrumentation.

s.l.:s.n., p. 495–500.

Bartolini, E. E., 2011. Eliciting Emotion with Film:

Development of a Stimulus Set, Wesleyan University:

s.n.

Bednarski, J. D., March 29, 2012. Eliciting Seven Discrete

Positive Emotions Using Film Stimuli, Vanderbilt

University: s.n.

Bercher, J.-F. & Baudoin, G., 2001. Transformée de Fourier Discrète. École Supérieure d’Ingénieurs en Électrotechnique et Électronique. s.l.:s.n.

Carvalho, S., Leite, J., Galdo-Álvarez, S. & Gonçalves,

O., 2012. The Emotional Movie Database (EMDB): a

self-report and psychophysiological study.,

Neuropsychophysiology Lab Cipsi, School of

Psychology, University of Minho, Campus de Gualtar,

Braga, Portugal.: s.n.

Cowie, R. et al., n.d. Emotion recognition in human-

computer interaction. Signal Processing Magazine,

IEEE.

Croucher, M. & Sloman, A., 1981. Why robots will have

emotions. Originally appeared in Proceedings IJCAI

1981 ed. Vancouver, Sussex University as Cognitive

Science: s.n.

Darwin, C., 1872. The expression of emotion in man and

animal. Chicago: University of Chicago Press

(reprinted in 1965).

Ekman, P., 1999. In: Basic emotions. New York: Sussex

U.K.: John Wiley and Sons, Ltd, p. 301–320.

Ekman, P. & Friesen, W., 1982. Measuring facial

movement with the facial action coding system. In:

Emotion in the human face (2nd ed.). s.l.:New York:

Cambridge University Press.

Friesen, W. & Ekman, P., 1978. Facial Action Coding

System: A Technique for Measurement of Facial

Movement. Palo Alto, California: Consulting

Psychologists Press.

Gray, J., 1982. The neuropsychology of anxiety - an

inquiry into the functions of the septo-hippocampal

system. s.l.:s.n.

Hamdi, H., 2012. Plate-forme multimodale pour la

reconnaissance d’émotions via l’analyse de signaux

physiologiques : Application à la simulation

d’entretiens d’embauche, Université d’Angers, France:

s.n.

Hamdi, H., Richard, P., Suteau, A. & Saleh, M., 2011.

Virtual reality and affective computing techniques for

face-to-face communication. In: GRAPP 2011 -

Proceedings of the International Conference on

Computer Graphics Theory and Applications. Algarve,

Portugal: s.n., p. 357–360.

Healey, J., Vyzas, E. & Picard, R., 2001. Toward machine

emotional intelligence: Analysis of affective

physiological state. In: IEEE Transactions on Pattern

Analysis and Machine Intelligence. s.l.:s.n., p. 1175–

1191.

Herbelin, B. et al., 2004. Using physiological measures for

emotional assessment: a computer-aided tool for


cognitive and behavioural therapy. In: ICDVRAT.

Oxford, England: s.n., p. 307–314.

I-Maginer, n.d. Nonin medical wristox2. [Online]

Available at: http://www.nonin.com/

Izard, C. E., 1971. The face of emotion. New York:

Appleton-Century-Crofts.

James, W., 1884. What is an emotion? s.l.:s.n.

Kim, J., 2007. Bimodal emotion recognition using speech

and physiological changes, s.l.: s.n.

Lang, P., 1995. The emotion probe: Studies of motivation

and attention. In: American psychologist. s.l.:s.n., p.

372–385.

Levenson, R., 1988. Emotion and the autonomic nervous

system: a prospectus for research on autonomic

specificity. Social Psychophysiology and Emotion:

Theory and Clinical Applications, p. 17–42.

Levenson, R. W., 2003. Autonomic specificity and

emotion. s.l.:s.n.

Lin, C.-J. & Chang, C.-C., 2011. LIBSVM : a library for

support vector machines. ACM Transactions on

Intelligent Systems and Technology.

Mauss, I. & Robinson, M., 2009. Measures of emotion: A

review. In: Cognition & Emotion. s.l.:s.n., p. 209–237.

McDougall, W., 1926. An introduction to social

psychology. s.l.:s.n.

Moradi, M. & Khalili, Z., 2009. Emotion recognition

system using brain and peripheral signals: using

correlation dimension to improve the results of EEG.

In: I. Press, ed. Proceedings of the 2009 international

joint conference on Neural Networks. Piscataway, NJ,

USA: s.n., p. 1571–1575.

Mower, O., 1930. Learning theory and behavior. s.l.:s.n.

Oatley, K. & Johnson-Laird, P., 1987. Towards a

cognitive theory of emotions. s.l.:s.n.

Ortony, A. & Turner, W., 1990. What’s basic about basic

emotions. In: Psychological Review. s.l.:s.n., p. 315–

331.

Panksepp, J., 1982. Toward a general psycho-biological

theory of emotions. In: Behavioral and Brain Sciences.

s.l.:s.n., p. 407–422.

Pantic, M. & Gunes, H., 2010. Automatic, dimensional

and continuous emotion recognition. In: Int’l Journal

of Synthetic Emotion. s.l.:s.n., p. 68–99.

Transactions on Affective Computing, Special Issue on

Naturalistic Affect Resources for System Building and

Evaluation. s.l.:s.n.

Pfeifer, R., Kaiser, S. & Wehrle, T., 1988. In Cognitive

Perspectives on Emotion and Motivation. Artificial

intelligence models of emotions, Volume 44, p. 287–

320.

Picard, R., 1995. Affective Computing, rapport interne du

MIT Media Lab, TR321, Massachusetts Institute of

Technology, Cambridge, USA: s.n.

Plutchik, R., 1980. A general psychoevolutionary theory

of emotion. In: Emotion: Theory, research, and

experience. s.l.:New York: Academic, pp. 3-33.

Pun, T., Ansari-Asl, K. & Chanel, G., 2007. Valence-

arousal evaluation using physiological signals in an

emotion recall paradigm. In: Systems, Man and

Cybernetics. Montreal, Que, 2007: IEEE International

Conference, p. 2662–2667.

Rothkrantz, L. & Pantic, M., 2003. Toward an affect-

sensitive multimodal human-computer interaction.

Proceedings of the IEEE, Volume 91, p. 1370–1390.

Russell, A., 1979. Affective space is bipolar. In:

Personality and Social Psychology. s.l.:s.n., p. 345–

356.

Saleh, M., Suteau, A., Richard, P. & Hamdi, H., 2011. A

multi-modal virtual environment to train for job

interview. In: PECCS 2011 - Proceedings of the

International Conference on Pervasive and Embedded

Computing and Communication Systems. Algarve,

Portugal: s.n., p. 551–556.

Schaefer, A., Nils, F., Sanchez, X. & Philippot, P., 2004.

A multi-criteria assessment of emotional films,

University of Louvain, Louvain-La-Neuve, Belgium.:

s.n.

TEA, n.d. [Online], Available at: http://www.teaergo.com/

Tomkins, S., 1984. Chapter Affect Theory. In: Approaches

to emotion. Erlbaum, Hillsdale, NJ: s.n., p. 163–195.

Westerink, J. H. & van den Broek, E. L., 2009. Guidelines

for affective signal processing (asp): from lab to life.

In: I. C. Society, ed. In Proceedings of the Interaction

and Workshops, ACII 2009. Amsterdam: s.n., p. 704–

709.
