Creation of Emotion-inducing Scenarios using BDI

Pierre-Olivier Brosseau and Claude Frasson

Département d’informatique et de recherche opérationnelle, Université de Montréal, 2920 Chemin de la Tour,

Montréal, H3C 3J7, Quebec, Canada

Keywords: Inducing Emotions, BDI Agents, EEG Headset, Emotional State, Scenarios, Simulation.

Abstract: Automated analysis of human affective behavior has attracted increasing attention from researchers in psychology, computer science, linguistics, neuroscience, and related disciplines. However, existing methods to induce emotions are mostly limited to audio and visual stimulation. This study tested the induction of emotions in a virtual environment with scenarios designed using the Belief-Desire-Intention (BDI) model, which is well known in the agent community. The first objective of the study was to design the virtual environment and a set of scenarios set in driving situations, which can generate various emotional conditions or reactions. The design was followed by a testing phase in which an EEG headset assessed the resulting emotions (frustration, boredom and excitement) of 30 participants, to verify how accurately the predicted emotion could be induced. The testing phase supported the reliability of the BDI model, with over 70% of our scenarios working as expected. Finally, we outline some possible uses of inducing emotions in a virtual environment for correcting negative emotions.

1 INTRODUCTION

The Belief-Desire-Intention (BDI) model is well known in the agent community and is often used because its structure is close to the human reasoning pattern. This pattern is known as practical reasoning: using a set of beliefs, we decide what we want to achieve (desires), then we decide how to do it (intentions) (Puica et al., 2013). Emotions are another component of human behaviour and, according to a growing part of the Artificial Intelligence research community, they represent an essential characteristic of intelligent behaviour, particularly for decision making. In driving situations, for instance, emotions place the driver in a cerebral state that will or will not allow him/her to react adequately to a specific situation, sometimes one requiring an immediate reaction. For instance, anger and excitement can lead to sudden driving reactions, often involving collisions. Sadness or an excess of joy can lead to a loss of attention. Generally, emotions that increase the reaction time in driving situations are the most dangerous. Several questions arise. How do emotions appear when a driver is exposed to a specific traffic situation? How can we measure or estimate the emotion of the driver in these situations? Can we use the BDI model to design emotion-inducing scenarios in a virtual environment? How can we train the driver to react differently and control his/her emotions? By creating unexpected events in a virtual environment that go against the user’s desires and intentions, we have seen that we can induce controlled primary emotions. Several techniques have been used over

the years to generate emotions, such as emotion-inducing audio and video sequences (O’Toole et al., 2005; Pantic and Bartlett, 2007; Sebe et al., 2004). The American Automobile Association (AAA) estimates that the proportion of serious injuries on the road associated with aggressive behavior is between one-third and two-thirds of all road accidents. In addition, for every serious accident, there are several thousand angry drivers, if not more, who behave recklessly without reaching the point of committing an assault. Strong emotions are at the root of this anger, which gives rise to these dangerous situations on the road.

In our study we have decided to push this process further by adding human interaction in a virtual environment. The goal of our study is to design multiple scenarios in a virtual driving environment, each one with scenes able to induce a specific primary emotion.

Different technologies can be used to assess emotions. We can use sensors that capture seat position, facial expressions, voice, heart rate, blood pressure, sweating and the amount of pressure applied to the computer mouse. Galvanic skin conductivity is a good indicator of emotional change, but its evaluation is not precise. Electroencephalogram (EEG) sensors are more precise and have come into use more recently (Chaouachi et al., 2011). In fact, EEG signals can reveal emotions and cerebral states which, synchronized with the driving scene, can highlight what happens in the brain and when.

Brosseau P. and Frasson C.

Creation of Emotion-inducing Scenarios using BDI.

DOI: 10.5220/0005278505230529

In Proceedings of the International Conference on Agents and Artificial Intelligence (ICAART-2015), pages 523-529

ISBN: 978-989-758-074-1

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

The paper is organized as follows: Section 2 presents a brief review of previous work in similar fields. Section 3 presents the design of emotional scenarios based on the BDI model. In Section 4, we present the details of the experimental procedure. Finally, Section 5 presents the results and a discussion of the impact of our findings on the design of emotionally-based environments.

2 PREVIOUS WORK

Recently, a large body of research has been directed towards improving learners’ experience and interaction in learning environments. Affective and social dimensions were considered in these environments to provide learners with intelligent and adaptive interaction (Picard et al., 2001; D’Mello et al., 2009). Audio and video sequences have long been used to induce emotions (O’Toole et al., 2005; Pantic and Bartlett, 2007; Sebe et al., 2004): the subjects and their reactions were recorded while they were watching the emotion-inducing videos. Multiple emotions can be observed by recording the subjects’ voices and faces (Schuller et al., 2007) and comparing them with audio and visual databases of human affective behavior (Zeng et al., 2009; Neiberg et al., 2006).

The techniques used over the years to label emotions include self-report (Sebe et al., 2004), observers’ or listeners’ judgment (O’Toole et al., 2005) when analyzing voice and facial expressions (Pantic and Bartlett, 2007; Bartlett et al., 2005; O’Toole et al., 2005), and other biometric means. Jones and Jonsson (Jones et al., 2005) built a system to recognize the emotion of a driver inside the car from traits analyzed in his/her voice. Their system maps acoustic features such as tone and volume to emotions such as boredom, sadness and anger. Research on emotion detection is currently being conducted by Toyota. Their system, which is still at the prototype stage, can identify the emotional state of the driver with a camera that tracks 238 points on his/her face. The car can then make suggestions to the driver, or simply adjust the music to help the driver relax. In our study we have decided to use the EPOC EEG headset from Emotiv to detect the emotions of a driver, as this system is relatively easy to use and control.

From the EPOC we collect three main emotions: boredom, excitement and frustration. Frustration is an emotion that occurs in situations where a person is blocked from reaching a desired outcome. In general, whenever we reach one of our goals, we feel pleased, and whenever we are prevented from reaching our goals, we may succumb to frustration and feel irritable, annoyed and angry. Typically, the more important the goal, the greater the frustration and the resulting anger or loss of confidence. Boredom creeps up on us silently: we are lifeless and have no interest in anything, due perhaps to a build-up of disappointments or, on the contrary, to an excess of stimuli that takes away our ability to be amazed or startled when things happen. Excitement is a state of great enthusiasm, while calm is a state of tranquillity, free from excitement or passion.

The BDI model is a model developed for

programming intelligent agents. It originates from a

respectable philosophical model of human practical

reasoning (originally developed by Michael

Bratman) (Georgeff et al., 1999); the first references

of a practical implementation appeared as early as

1995 (Rao et al., 1995).

This model was developed because of its similarity to the human reasoning process (Georgeff et al., 1999). In our research, we have decided to use this model to develop scenarios in a virtual environment that will induce strong emotions in the users by going against one of their beliefs or preventing one of their intentions (Puica et al., 2013).

Following the OCC model of emotions (Ortony et al., 1988), an event can be appraised in terms of beliefs, desires and intentions, returning a certain score regarding its valence (positive or negative) and arousal (the intensity of the emotion felt). In our case we will use the three emotions detected by the EPOC to assess the impact of specific driving situations on emotional reactions.


3 CREATION OF SCENARIOS

3.1 Scenarios based on BDI Model

Scenarios

The scenarios are designed using the BDI model,

associating a desire in the form of an objective that

the user has to complete. The goal of the scenario is

to induce a primary emotion with an intensity

varying depending on the user. A complete

simulation system has been developed and

implemented to design and create scenarios.

Objective

The objective of the scenario is defined by a text that the participant reads before entering the simulation. It gives the participant a general idea of what he/she has to accomplish, thus generating a belief and preparing the intentions that will be generated during the simulation.

Percepts

The percepts are anything that comes from the environment: stimuli or messages from the simulator. They are also influenced by the emotions of the user (Puica et al., 2013). In our simulator the percepts are the textual objective for each scenario, the visual stimulation and the controls of the car.

Beliefs

The beliefs represent the information that the user holds while trying to complete a scenario. They are acquired from percepts. When a human is placed in a situation forcing him/her to make urgent decisions, he/she will try to grasp the situation by sensing current information in the environment (Joo et al., 2013). These beliefs are also influenced by the user’s emotions (Puica et al., 2013).

Desires

A desire is a goal perceived by the user when

attempting a scenario. We suppose that the desire for

the user is linked to what will lead him/her to a

success in the scenario by fulfilling the objective.

The desire to complete the objective for a scenario

never changes and it is portrayed through the user’s

intentions throughout the scenario.

Intentions

An intention is one of the different actions that the user will choose in order to fulfil a certain desire. Intentions are constantly revised by the user based on his/her current desires, beliefs and emotions (Puica et al., 2013) and on the different ways to reach the goal. Users are generally committed to an intention until it is achieved or until it is shown that it can no longer be accomplished. In our environment we generate events, which we call opposition events, that prevent the user from accomplishing the most obvious intentions in the scenarios.

The Expected Intention

The expected intention is the most obvious and easiest way to complete the scenario. It is this intention that the opposition event will prevent the user from carrying out.

Expected Intention = f(Beliefs, Desires)

3.2 Using BDI to Induce an Emotion

Opposition event

Our goal is to induce emotions by generating

opposition and calming events in the simulation that

will prevent the subject from realizing his current

intention. The emotion-induction is provoked when

the event occurs and prevents the user from

accomplishing his intention. The user now has to

find a new intention to fulfill his desire if he wants

to succeed in the scenario. Opposition and calming

events are at the origin of emotions generated.

Emotion Generated

The primary emotions generated come from instinctual behavior, and the secondary emotions influence the cognitive processes (Puica et al., 2013). In our study, each scenario is designed to induce one targeted primary emotion by creating an opposition to the expected intention.

Emotion Generated = g(Expected Intention, Opposition)

In the following section we will go through each

scenario and describe what the Belief and Desires

associated with each scenario are and the expected

intention of the subject that we will use to plan the

opposition event. After that, we will verify if the

primary emotion induced by each scenario is the

expected one. For the experiment we have defined

eight scenarios.

4 EXPERIMENTATION

To collect the data we used the EPOC headset built

by Emotiv. Emotiv EPOC is a high resolution,

multi-channel, wireless neuroheadset. The EPOC

uses a set of 14 sensors plus 2 references to tune into

electric signals produced by the brain in order to

detect the user’s thoughts, feelings and expressions

in real time. Using the Affectiv Suite we can

monitor the player’s emotional states in real-time.


This method was used to measure the emotions

throughout the whole simulation process. The

emotions are rated between 0 and 100%, where

100% is the value that represents the highest

power/probability for this emotion.

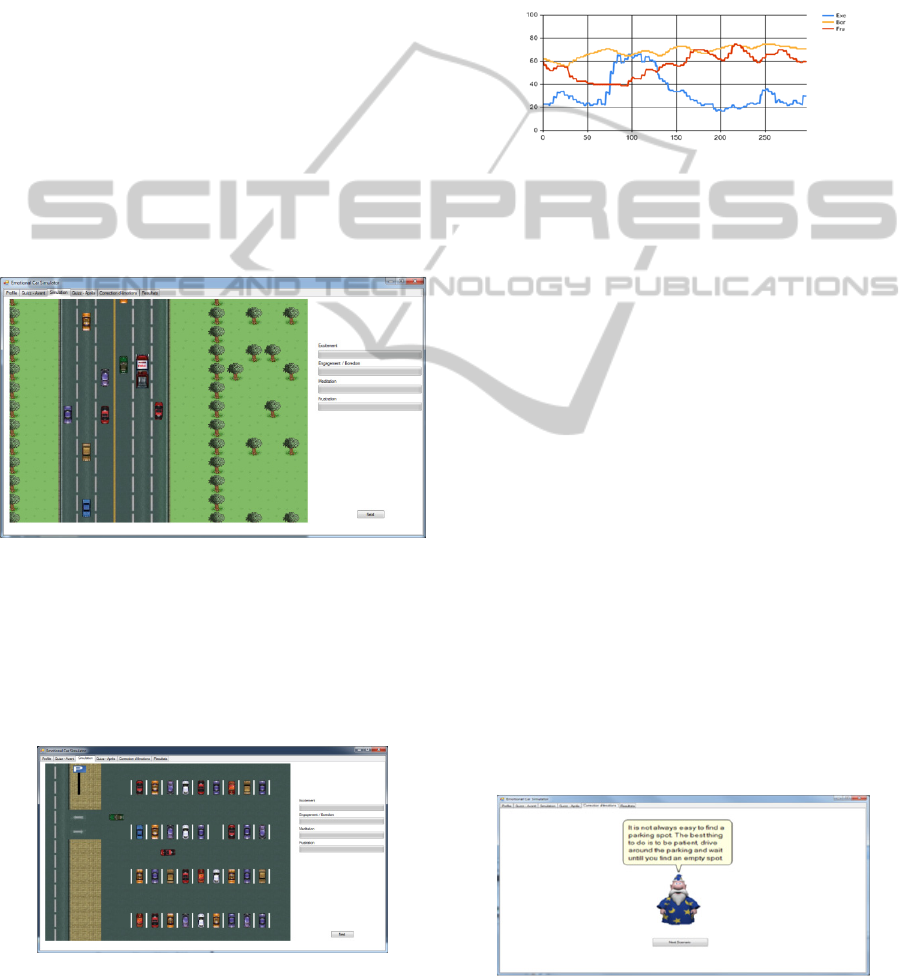

The virtual environment takes the form of a game in which the player drives a car seen from a bird’s-eye view (Figure 1, the red car is the player’s car) and is subjected to a variety of realistic situations that anybody could experience. We have developed several scenarios which can happen in everyday driving situations and can generate emotions. The user goes through a sequence of eight scenarios, and the emotions generated during each scenario are recorded through the EPOC system. Figure 1 shows an example of such a scenario. The user is driving his/her red car on the right side of the road when a fire truck suddenly approaches at high speed with its siren on. The emotions generated are indicated on the right. Here we detect excitement, engagement, boredom, meditation and frustration.

Figure 1: The Fire Truck scenario running in the Virtual

Environment.

In the following scenario the user has to find a space in a public parking lot. There is only one space left and, before the participant can reach it, another car takes it. The participant has to look around to find another space.

Figure 2: The parking place.

In another stressful scenario the user is driving normally when the brakes suddenly stop working.

In the following scenarios we will use only excitement, frustration and boredom. We have also built an interface to plot the curves resulting from the data recorded from the EPOC, showing the evolution of emotions over time together with the opposition events. Figure 3 shows the increase in excitement due to a specific event.

Figure 3: Evolution of emotions with time and events.

For the experiment, the user first has to register and provide personal information in the virtual environment; then he/she goes through a test scenario to become acquainted with the simulator. This test scenario is also used as our baseline to identify the emotions of the user using the EPOC headset. After the test scenario, the user goes through the eight scenarios mentioned earlier. Thirty participants were involved in the experiment. They were aged between 17 and 33, and included 6 women and 24 men. The system is coded in ActionScript 3.0.

For each scenario the opposition event takes place at a certain time or is triggered when the user arrives at a specific place. When the opposition event is triggered, we use the Emotiv EPOC headset to record the probability of each of the three emotions that the user is feeling. These values are recorded at a rate of 10 samples per second and are saved in the database at the end of the scenario. We then proceed to analyze the results.
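The two ways of triggering an opposition event, by elapsed time or by position, can be sketched as follows. This is only an illustration in Python (the original system is written in ActionScript 3.0, and its code is not given in the paper): the class `OppositionTrigger`, the function `first_trigger_tick`, the rectangular zone and the sample positions are all hypothetical. Only the rate of 10 samples per second comes from the text.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

SAMPLE_RATE_HZ = 10  # from the text: values are recorded 10 times per second


@dataclass
class OppositionTrigger:
    """Hypothetical trigger: fires once, by elapsed time or by position."""
    at_time_s: Optional[float] = None
    zone: Optional[Tuple[float, float, float, float]] = None  # x_min, y_min, x_max, y_max
    fired: bool = False

    def should_fire(self, elapsed_s: float, x: float, y: float) -> bool:
        if self.fired:
            return False
        by_time = self.at_time_s is not None and elapsed_s >= self.at_time_s
        by_place = False
        if self.zone is not None:
            x_min, y_min, x_max, y_max = self.zone
            by_place = x_min <= x <= x_max and y_min <= y <= y_max
        self.fired = by_time or by_place
        return self.fired


def first_trigger_tick(trigger: OppositionTrigger,
                       positions: List[Tuple[float, float]]) -> Optional[int]:
    """Return the sample index at which the event fires, or None.

    `positions` holds one (x, y) car position per sample (0.1 s apart).
    """
    for tick, (x, y) in enumerate(positions):
        if trigger.should_fire(tick / SAMPLE_RATE_HZ, x, y):
            return tick
    return None


# Time-based trigger: fires 2 seconds into the scenario.
timed = OppositionTrigger(at_time_s=2.0)
print(first_trigger_tick(timed, [(0.0, 0.0)] * 50))

# Place-based trigger: fires when the car enters a rectangular zone.
placed = OppositionTrigger(zone=(5.0, 0.0, 10.0, 1.0))
print(first_trigger_tick(placed, [(float(i), 0.5) for i in range(10)]))
```

The returned sample index marks the point from which the emotion values would be examined in the analysis.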

Correcting Agent:

To correct the negative emotions generated in

the scenarios we created an agent in charge of

neutralizing player’s emotions (Figure 4).

Figure 4: The correcting agent.


Its soothing voice, combined with its funny appearance, is there to reassure the player, tell him/her the proper behavior to handle the scenario correctly, and reduce his/her emotions. In this first implementation we have created texts specific to each scenario. They contain calming advice and further explanations of the scenario in order to reduce the importance of the emotion in that context.

For example the BDI components of the above

scenarios are the following:

Fire Truck: Beliefs (he/she is on the highway

and must go forward), Desire (to reach the end of the

highway), Expected Intention (to keep going

forward until he/she reaches the end).

Parking Spot: beliefs (he/she has to park the car

in the parking spot), Desire (to find an empty spot

and park the car), Expected Intention (to drive the

car and park it in the empty spot that is visible from

the start).

The corresponding opposition events and

expected emotions are the following:

Fire Truck: Opposition event (after a while there

is a loud siren coming from behind and the subject

has to move away for the fire truck to pass),

expected emotion (excitement).

Parking Spot: Opposition event (when the car

arrives near an empty parking spot, another car takes

the place), expected emotion (frustration).

In the BDI approach these events create a

contradiction to the initial beliefs and desire and so

generate negative emotions.
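As an illustration, the BDI components, opposition events and expected emotions listed for the Fire Truck and Parking Spot scenarios can be held in a small record type. This is a sketch under assumptions: the `Scenario` class and its field names are hypothetical and not taken from the authors’ simulator; only the field contents are paraphrased from the descriptions in this section.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Scenario:
    """Hypothetical container for one emotion-inducing scenario."""
    name: str
    beliefs: Tuple[str, ...]
    desire: str
    expected_intention: str   # Expected Intention = f(Beliefs, Desires)
    opposition_event: str
    expected_emotion: str     # Emotion Generated = g(Expected Intention, Opposition)


FIRE_TRUCK = Scenario(
    name="Fire Truck",
    beliefs=("the driver is on the highway and must go forward",),
    desire="reach the end of the highway",
    expected_intention="keep going forward until the end is reached",
    opposition_event="a loud siren comes from behind; the driver must move away",
    expected_emotion="excitement",
)

PARKING_SPOT = Scenario(
    name="Parking Spot",
    beliefs=("the driver has to park the car in the parking spot",),
    desire="find an empty spot and park the car",
    expected_intention="drive to the empty spot visible from the start and park",
    opposition_event="another car takes the spot as the driver approaches",
    expected_emotion="frustration",
)

for scenario in (FIRE_TRUCK, PARKING_SPOT):
    print(f"{scenario.name}: expect {scenario.expected_emotion}")
```

Keeping the expected intention and the opposition event side by side makes it easy to check that each event actually blocks the intention it targets.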

5 RESULTS

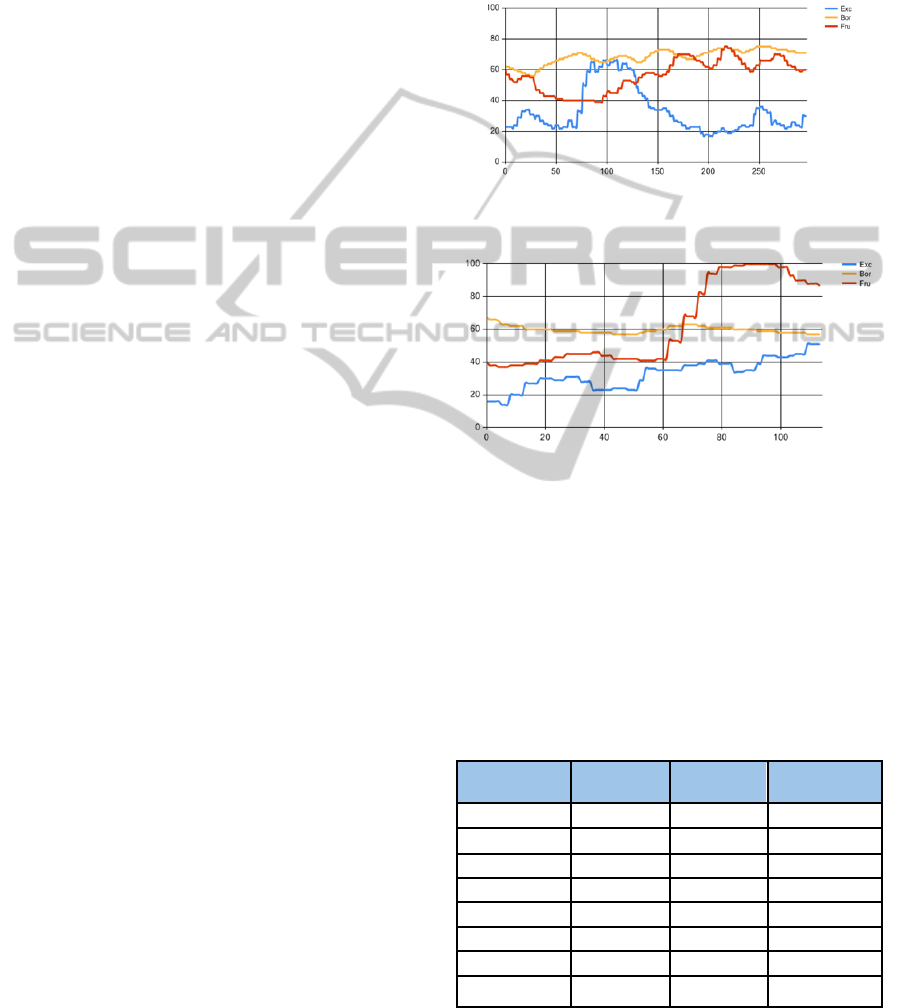

After the completion of the eight scenarios, we produce graphs showing the variations in probability and intensity of the user’s emotions over time, and determine whether the opposition event induced the emotion as planned. The x-axis represents the time in tenths of a second and the y-axis represents the probability that the user is feeling a certain emotion. We consider that an emotion has been induced by the event if the probability that the emotion is felt by the user increases by at least 20% within the 5 seconds following the opposition event. We only look at the variation, as the base value can vary depending on the user. As we can see in Figure 5, the probability of excitement increases by 40% in the 5 seconds following the moment when the fire truck starts its siren; we can therefore conclude that excitement has been induced by the opposition event as expected.
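The induction criterion can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ implementation: the function name `emotion_induced`, its parameters and the sample trace are hypothetical, and the criterion is read here as a rise of at least 20 percentage points above the value at the moment of the event, which is an assumption. The 5-second window and the rate of 10 samples per second come from the text.

```python
from typing import Sequence

SAMPLE_RATE_HZ = 10      # the paper records emotion values 10 times per second
WINDOW_SECONDS = 5       # window after the opposition event, as stated in the text
THRESHOLD_POINTS = 20.0  # assumed to mean percentage points on the 0-100 scale


def emotion_induced(
    values: Sequence[float],
    event_index: int,
    threshold: float = THRESHOLD_POINTS,
    window_seconds: float = WINDOW_SECONDS,
    sample_rate_hz: int = SAMPLE_RATE_HZ,
) -> bool:
    """Return True if the emotion rose by at least `threshold` points
    above its value at the event within the window after the event."""
    if not 0 <= event_index < len(values):
        raise ValueError("event_index is outside the recorded series")
    baseline = values[event_index]
    window_len = int(window_seconds * sample_rate_hz)
    window = values[event_index + 1 : event_index + 1 + window_len]
    if not window:
        return False
    return max(window) - baseline >= threshold


# Hypothetical trace: flat at 30%, then a steady ramp after the event at index 20.
trace = [30.0] * 21 + [30.0 + 0.8 * i for i in range(1, 51)]
print(emotion_induced(trace, event_index=20))
```

Comparing against the value at the event, rather than against a fixed level, follows the remark that only the variation matters because the base value differs between users.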

In the following scenario (Figure 6, parking spot) the participant arrives in a public parking lot. There is only one space left and, before the participant can reach it, another car takes it. We see the impact in terms of growing frustration. The participant has to look around to find another space and, if no other space is available, the frustration stays high.

Figure 5: Rise of excitement after the fire truck starts its siren.

Figure 6: Rise of frustration after the user notices that the only parking spot left was taken by a passing car.

For each scenario we calculated the percentage of participants who experienced each emotion and chose the emotion experienced by the highest number of participants as the observed emotion. In six of the eight scenarios, the observed emotion matched the predicted emotion (Table 1).

Table 1: Strongest emotion generated after the opposition

event.

| Scenario | Expected Emotion | Observed Emotion | Percentage of participants |
|---|---|---|---|
| Highway | Frustration | Frustration | 64.7% |
| School Zone | Boredom | Excitement | 70.6% |
| Intersection | Frustration | Frustration | 73.3% |
| Pedestrian | Frustration | Frustration | 52.9% |
| Parking Spot | Frustration | Frustration | 76.5% |
| Brakes Failure | Excitement | Frustration | 66.6% |
| Fire Truck | Excitement | Excitement | 58.8% |
| Late for Work | Frustration | Frustration | 64.7% |
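The summary drawn from Table 1 can be reproduced with a short Python sketch. The rows are transcribed from the table; the helper `observed_emotion` and the per-emotion shares passed to it are hypothetical and serve only to illustrate the rule of choosing the emotion experienced by the largest share of participants.

```python
from typing import Dict

# Rows transcribed from Table 1: (scenario, expected, observed, percentage).
TABLE_1 = [
    ("Highway", "Frustration", "Frustration", 64.7),
    ("School Zone", "Boredom", "Excitement", 70.6),
    ("Intersection", "Frustration", "Frustration", 73.3),
    ("Pedestrian", "Frustration", "Frustration", 52.9),
    ("Parking Spot", "Frustration", "Frustration", 76.5),
    ("Brakes Failure", "Excitement", "Frustration", 66.6),
    ("Fire Truck", "Excitement", "Excitement", 58.8),
    ("Late for Work", "Frustration", "Frustration", 64.7),
]


def observed_emotion(shares: Dict[str, float]) -> str:
    """Pick the emotion experienced by the largest share of participants."""
    return max(shares, key=shares.get)


matches = [row[0] for row in TABLE_1 if row[1] == row[2]]
mismatches = [row[0] for row in TABLE_1 if row[1] != row[2]]
match_rate = 100.0 * len(matches) / len(TABLE_1)

print(f"{len(matches)} of {len(TABLE_1)} scenarios matched ({match_rate:.0f}%)")
print("Mismatched scenarios:", ", ".join(mismatches))

# Hypothetical shares for one scenario, for illustration only.
print(observed_emotion({"Excitement": 20.0, "Boredom": 5.0, "Frustration": 60.0}))
```

The two mismatches are School Zone and Brakes Failure, the scenarios whose expected and observed emotions differ in the table.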

Strong emotions generated during the scenarios

are corrected with the correcting agent. The


efficiency is the percentage of reduction. In Figure 7 we see the impact of the correcting agent on the excitement resulting from scenario 5 (parking spot). The agent mainly affects the excitement, which decreases while the other emotions remain stable. We see that the decrease occurs while the agent is talking.

Figure 7: The effect of the correcting agent on the high

excitement of the user (while the agent is talking).

The correcting agent reached its best efficiency of 70.0% for the frustrated participants in scenario 8 (Table 2), and 66.7% for the excited participants in scenario 3.

Boredom is a particular case. It seems that when the user is in this emotional state, which can last for hours, he/she cannot evolve and change, except under special conditions that the correcting agent should create. This emotion can appear in educational situations, for instance when a learner is no longer interested in the course he/she is following. However, in driving situations this emotion is less relevant than excitement or frustration.

Table 2: Efficiency of the correcting agent for all the

participants.

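| Scenario | Excitement | Boredom | Frustration |
|---|---|---|---|
| 1 | 66.7% | 21.1% | 50.0% |
| 2 | 50.0% | 8.6% | 45.5% |
| 3 | 66.7% | 4.8% | 62.5% |
| 4 | 42.9% | 10.3% | 66.7% |
| 5 | 66.7% | 19.7% | 57.1% |
| 6 | 46.2% | 7.5% | 41.6% |
| 7 | 57.1% | 0.0% | 58.3% |
| 8 | 55.6% | 0.0% | 70.0% |
| 9 | 44.4% | 4.3% | 36.4% |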
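To read Table 2 more easily, the highest efficiency per emotion can be extracted with a short Python sketch. The values are transcribed from the table; the function `best_scenarios` is a hypothetical helper, and scenarios that share the same highest value are returned together.

```python
from typing import Dict, List, Tuple

# Rows transcribed from Table 2: scenario -> (excitement, boredom, frustration), in %.
TABLE_2: Dict[int, Tuple[float, float, float]] = {
    1: (66.7, 21.1, 50.0),
    2: (50.0, 8.6, 45.5),
    3: (66.7, 4.8, 62.5),
    4: (42.9, 10.3, 66.7),
    5: (66.7, 19.7, 57.1),
    6: (46.2, 7.5, 41.6),
    7: (57.1, 0.0, 58.3),
    8: (55.6, 0.0, 70.0),
    9: (44.4, 4.3, 36.4),
}
EMOTIONS = ("excitement", "boredom", "frustration")


def best_scenarios(
    table: Dict[int, Tuple[float, float, float]],
) -> Dict[str, Tuple[float, List[int]]]:
    """For each emotion, return the highest efficiency and the scenarios reaching it."""
    result = {}
    for col, emotion in enumerate(EMOTIONS):
        best = max(row[col] for row in table.values())
        scenarios = [s for s, row in table.items() if row[col] == best]
        result[emotion] = (best, scenarios)
    return result


for emotion, (value, scenarios) in best_scenarios(TABLE_2).items():
    print(f"{emotion}: {value}% in scenario(s) {scenarios}")
```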


6 CONCLUSIONS

Using the Belief-Desire-Intention model we have been able to design emotion-inducing scenarios in a virtual environment that induced emotions as intended. We considered an emotion as generated if we saw an increase of at least 20% in the intensity of the emotion within the few seconds following the event. In six out of eight scenarios the primary induced emotion was the expected one. Our study suggests that combining the BDI model with the Emotiv EPOC is a good solution for inducing a specific emotion.

After generating a strong emotion in a participant, we tested whether it was possible to reduce it using our corrective emotional agent. An emotion is considered reduced if the observed probability and intensity of that emotion have declined by more than 20% a few seconds after the advice of our correcting agent. Again, the results obtained were positive.

Virtual environments that are designed to correct emotional behavior would benefit from creating scenarios that use our system to target dangerous emotions (frustration for a driver, for instance) and repeatedly training the user to reduce the intensity of these emotions. Further research could use a more realistic virtual environment to fully immerse the user and compare the intensity of the induced emotions. It would also be useful to test several calming scenarios to determine which one leads to the greatest reduction.

The advantage of our system is that it is now very easy to generate scenarios that provoke a driver’s emotions and to correct these same emotions. An emotional corrective agent could easily be implemented in a car to advise the driver in relation to the emotions felt. However, in this case the major limitation of the system would be its inability to detect the exact origin of the emotion, as the emotional correcting agent would have no information on what caused the strong emotion of the driver. It would be much more difficult to give specific advice, such as not to mind and to find a new parking spot when the intended one is taken by another driver.

ACKNOWLEDGEMENTS

We would like to thank NSERC for funding this work. Thanks also to Thi Hong Dung Tran for


having helped with and participated in the experiments.

REFERENCES

Bartlett, M. S., Littlewort, G., Frank, M., Lainscsek, C.,

Fasel, I., and J. Movellan, “Recognizing Facial

Expression: Machine Learning and Application to

Spontaneous Behavior,” Proc. IEEE Int’l Conf.

Computer Vision and Pattern Recognition (CVPR

’05), pp. 568-573, 2005.

Chaouachi, M., Jraidi, I., Frasson, C.: Modeling Mental

Workload Using EEG Features for Intelligent

Systems. User Modeling and User-Adapted

Interaction, Girona, Spain, 50-61(2011).

D'Mello, S., and Graesser, A., 2009. ‘Automatic Detection

of Learner's Affect From Gross Body Language’,

Applied Artificial Intelligence, 23, (2), pp. 123-150.

Georgeff, M., Pell, B., Pollack, M., Tambe, M., Wooldridge, M. The Belief-Desire-Intention Model of Agency, 1999.

Joo, J., Perception and BDI Reasoning Based Agent Model for Human Behavior Simulation in Complex System, Human-Computer Interaction, Part V, HCII 2013, LNCS 8008, pp. 62-71, 2013.

Jones, C., Jonsson, I. M.: Automatic recognition of

affective cues in the speech of car drivers to allow

appropriate responses. In: Proc. OZCHI (2005).

Neiberg, D., Elenius, K. and Laskowski, K. “Emotion

Recognition in Spontaneous Speech Using GMM,”

Proc. Int’l Conf. Spoken Language Processing (ICSLP

’06), pp. 809-812, 2006.

Ortony, A., Clore, G., and Collins, A. (1988). The

cognitive structure of emotions. Cambridge University

Press.

O’Toole, A.J. et al., “A Video Database of Moving Faces

and People,” IEEE Trans. Pattern Analysis and

Machine Intelligence, vol. 27, no. 5, pp. 812-816, May

2005.

Pantic, M., and Bartlett, M. S., “Machine Analysis of

Facial Expressions,” Face Recognition, K. Delac and

M. Grgic, eds., pp. 377-416, I-Tech Education and

Publishing, 2007.

Picard, R. W., Vyzas, E., and Healey, J., 2001. ‘Toward

machine emotional intelligence: analysis of affective

physiological state’, Pattern Analysis and Machine

Intelligence, IEEE Transactions on, 23, (10), pp.

1175-1191.

Puica, M-A, Florea, A-M. Emotional Belief-Desire-

Intention Agent Model: Previous Work and Proposed

Architecture, (IJARAI) International Journal of

Advanced Research in Artificial Intelligence, Vol. 2,

No. 2, 2013.

Rao, A. S., Georgeff, M. P., BDI Agents: From Theory to Practice, Proceedings of the First International Conference on Multiagent Systems, AAAI, 1995.


Schuller, B., Müller, R., Hörnler, B., Höthker, A., Konosu, H., and Rigoll, G. “Audiovisual Recognition of Spontaneous Interest within Conversations,” Proc. Ninth ACM Int’l Conf. Multimodal Interfaces (ICMI ’07), pp. 30-37, 2007.

Sebe, N., Lew, M. S., Cohen, I., Sun, Y., Gevers, T., and

Huang, T.S. “Authentic Facial Expression Analysis,”

Proc. IEEE Int’l Conf. Automatic Face and Gesture

Recognition (AFGR), 2004.


Zeng, Z., Pantic, M., Roisman, G. I., and Huang, T. S. “A Survey of Affect Recognition Methods: Audio, Visual, and Spontaneous Expressions,” IEEE Trans. Pattern Analysis and Machine Intelligence, vol. 31, no. 1, pp. 39-57, January 2009.
