Impressions of Size-Changing in a Companion Robot

Martin Cooney and Stefan M. Karlsson

Intelligent Systems Lab, Halmstad University, Box 823, 301 18 Halmstad, Sweden

Keywords: Adaptive Interfaces, Physiology-driven Robotics, Human-Robot Interaction, Size-Changing.

Abstract: Physiological data such as head movements can be used to intuitively control a companion robot to perform

useful tasks. We believe that some tasks such as reaching for high objects or getting out of a person’s way

could be accomplished via size changes, but such motions should not seem threatening or bothersome. To

gain insight into how size changes are perceived, the Think Aloud Method was used to gather typical

impressions of a new robotic prototype which can expand in height or width based on a user’s head

movements. The results indicate promise for such systems, also highlighting some potential pitfalls.

1 INTRODUCTION

The current paper reports on the first phase of our

work in designing a novel physiological computing

system involving a companion robot which can

adapt its size. Size-changing functionality in a robot

will allow useful behaviour such as stretching to

reach for objects or attract attention, as well as

shrinking to be carried, get out of the way in urgent

situations, or operate in narrow spaces. This concept

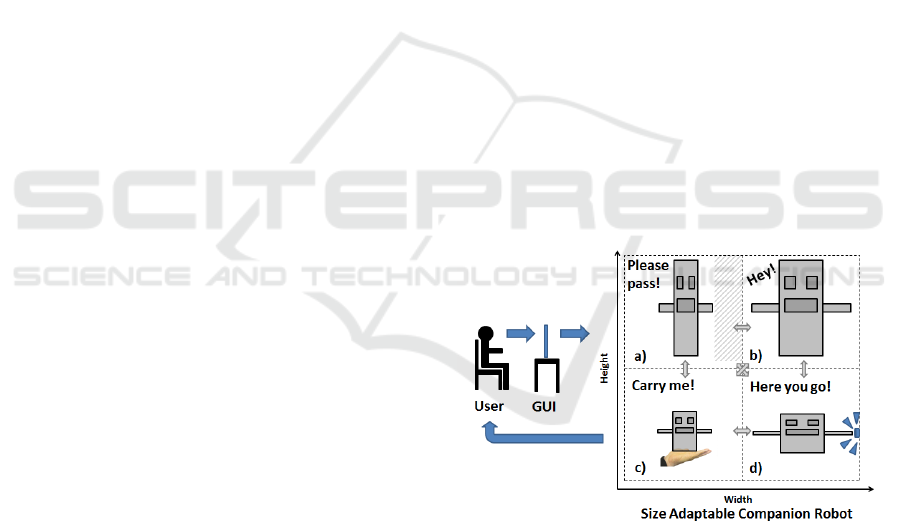

is shown in Figure 1.

One challenge is that size strongly influences our

impressions: we can feel reverence toward high

mountains, trepidation toward a large predator,

affection toward a small infant, and disregard for a

mote of dust. When a robot changes size based on a

user’s commands, it should be perceived as fun and

helpful and not as dangerous or bothersome.

Thus, the goal of the current study was to learn how people perceive a robot's size changes. The investigated scenario involved a humanoid prototype as a familiar interface (simplified to avoid eliciting unrealistic expectations) and basic size changes in height and width, which could be perceived differently. To capture user impressions, the Think Aloud Method (Lewis and Rieman, 1993) was used. The acquired knowledge, a list of size changes and typical associated impressions, will be extended in the next step of our work, in which we will focus on specific size-changing tasks, thereby informing the next generation of size-adaptive robot systems.

Figure 1: The basic concept for our system: a user's physiological signals are transformed via a Graphical User Interface (GUI) into commands for a robot to change size, e.g., becoming (a) thin to let someone pass, (b) large to attract attention, (c) small to be more easily held, or (d) wide in order to access a far object. Such changes should be perceived by the user as pleasant and unthreatening.

2 RELATED WORK

This work touches on three areas: physiology-driven robotic interfaces, size-changing artifacts, and the perception of size-changing cues.

2.1 Physiological Interfaces

Fairclough et al. (2011) described various forms of physiological computing, including mouse and keyboard, body tracking, muscle or gaze, and Brain Computer Interfaces (BCI), which have also been used to control robotic devices. For example, a BCI was used to control a wheelchair and devices in an intelligent environment (Kanemura et al., 2013). Fiber optic sensors detecting finger motions were used to control two extra fingers (Wu and Asada, 2014). And a head tracker was used to control the humanoid robot PR2, allowing a tetraplegic person to scratch himself and wipe his face (Cousins and Evans, 2014). We selected the latter kind of approach, which is easy to use and inclusive because arms are not required.

Cooney, M. and Karlsson, S. M.

Impressions of Size-Changing in a Companion Robot.

DOI: 10.5220/0005328801180123

In Proceedings of the 2nd International Conference on Physiological Computing Systems (PhyCS-2015), pages 118-123

ISBN: 978-989-758-085-7

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

For head pose estimation, promising work is being conducted using active appearance models (AAM) (Cootes et al., 1992), but challenges with lighting and a lack of temporal consistency can lead to jitter in the estimates. An approach intended for controlling a cursor, “eViacam”, estimates overall head position in video using the Viola-Jones detector (eviacam.sourceforge.net). The approach we propose is faster and smoother: it uses fewer computational resources, and it uses optical flow to track the face between detected frames.
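The idea of detecting the face only occasionally and tracking it with optical flow in between can be sketched as follows. This is a minimal illustration, not the authors' Matlab code: it swaps in the basic single-window Lucas-Kanade least-squares step (one translation per face box), whereas the system described in Section 3.1 uses a dense, regularized flow. `detect_face` is a hypothetical stand-in for a Viola-Jones detector, and all function names are introduced here for illustration.

```python
import numpy as np


def lucas_kanade_translation(prev, curr, box):
    """Least-squares (Lucas-Kanade style) estimate of one (dx, dy) shift.

    prev, curr: consecutive grayscale frames as 2-D float arrays, indexed [row, col].
    box: (x0, y0, x1, y1) face region in pixel coordinates (may be non-integer).
    """
    x0, y0, x1, y1 = (int(round(v)) for v in box)
    # Spatial gradients of the average of both frames; np.gradient returns
    # the derivative along axis 0 (rows, y) first, then axis 1 (columns, x).
    iy, ix = np.gradient(0.5 * (prev + curr))
    it = curr - prev  # temporal derivative over one frame
    a = np.stack([ix[y0:y1, x0:x1].ravel(), iy[y0:y1, x0:x1].ravel()], axis=1)
    b = -it[y0:y1, x0:x1].ravel()
    # Brightness constancy ix*dx + iy*dy + it = 0, solved over the whole window.
    (dx, dy), *_ = np.linalg.lstsq(a, b, rcond=None)
    return float(dx), float(dy)


def shift_box(box, flow):
    """Move a box by the estimated flow, keeping sub-pixel precision."""
    dx, dy = flow
    x0, y0, x1, y1 = box
    return (x0 + dx, y0 + dy, x1 + dx, y1 + dy)


def track_sequence(frames, detect_face, detect_every=30):
    """Detect on every `detect_every`-th frame and track with flow in between.

    detect_face is a hypothetical callback (e.g. wrapping a Viola-Jones
    detector) that returns a box (x0, y0, x1, y1) for a frame.
    """
    boxes = []
    box = None
    for k, frame in enumerate(frames):
        if box is None or k % detect_every == 0:
            box = detect_face(frame)
        else:
            box = shift_box(box, lucas_kanade_translation(frames[k - 1], frame, box))
        boxes.append(box)
    return boxes
```

With video at 30 frames per second, `detect_every=30` corresponds to running the detector once per second, so the comparatively expensive detector runs on only one frame in thirty.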

2.2 Size-Changing Artifacts

The need for adaptive companion robots has been

voiced (Dautenhahn, 2004). Already, simple size

changes such as becoming longer or taller can be

enacted by some factory and tele-operated robots;

more complex changes can also be achieved using

multiple modules (Alonso-Mora et al., 2012; Revzen

et al., 2011), objects (Brodbeck and Iida, 2012), and

approaches such as “jamming” (Steltz et al., 2009)

or programmable matter/4D printing (An et al.,

2014). Outside of robotics, size-changing

mechanisms involving (1) elastic/absorbent

materials, (2) telescopic cylinders, (3) scissor

linkages, (4) folding, and (5) rack and pinions, have

been used to build artifacts such as (1) balloons; (2)

construction vehicles; (3) furniture, elevators,

architectural displays and toys (e.g., Hoberman’s

combinable Expandagon blocks); (4) maps, satellites (Miura, 1985) and medical devices (You and Kuribayashi, 2003); as well as (5) locomotive devices. However, it remained unknown how to build a robot that can expand in height or width.

2.3 Perception of Size Changes

Some pioneering studies showed that tall robots can

appear more conscientious and human-like and less

neurotic (Walters et al., 2009), and more dominant (Rae et al., 2013) than short robots. Also, in a comparison of simulated shape changes in a cell phone, growing larger evoked the strongest emotional response in terms of both pleasure and arousal, as well as the highest sense of animacy, and seemed highly desirable (Pedersen et al., 2014). These results suggested the usefulness of investigating how size changes are perceived in a robot.

Human and animal cues offered some additional

insight. Height-wise expansion can seem dominant

with an erect posture (Carney et al., 2005), draw

attention as in hand-raising, or indicate curiosity if

conducted to see over an obstacle. Height-wise

contraction expressed through a downcast face can

indicate shame or dejection (Darwin, 1872), or

focused tension, like a crouched runner ready to

spring forward. Width-wise expansion can indicate

dominance when an expansive open posture with

extended limbs is adopted (Carney et al., 2005), or

happiness from a full belly, through satisfying an

important need (Maslow, 1943). Width-wise

contraction can indicate tension via a closed posture

(Burgoon, 1991), or suffering through a metaphor of

malnourishment or dehydration. Becoming small

can express fear as cowering (Darwin, 1872), and

possibly cuteness because small size is a

characteristic of children (Lorenz, 1971). Many predictions could thus be made, each requiring investigation to determine whether it would apply to a robot.

The contribution of the current work is therefore to acquire some first knowledge of how people perceive a robot's size changes, using a new humanoid prototype capable of expanding and contracting in height, width, or both.

3 SUICA: A SIZE-CHANGING HUMANOID PROTOTYPE

Design of our new prototype, Suica, involved (1) a

proof-of-concept physiological computing interface

and (2) an embodiment with a cover and size-

changing mechanism.

3.1 Control Interface

Our requirements for an interface were that it be robust, simple, and fast, and that it provide a natural mode of interaction without the use of arms. We also restricted ourselves to the scenario of a single user controlling one robot with a computer.

The designed interface uses a standard low-end

web camera to acquire video data at 30 frames per

second. An elliptic region containing a frontal face


was detected at 1 Hz (once every 30 processed frames) using the Viola-Jones detection algorithm provided with the Matlab Computer Vision Toolbox (www.mathworks.se). Optical flow was calculated similarly to the Lucas-Kanade approach (Lucas and Kanade, 1981), yielding a dense flow field by regularization (Karlsson and Bigun, 2012). Optical flow was used for two purposes. First, it provided smooth tracking of the face region in frames for which the Viola-Jones algorithm was not invoked. Second, optical flow computed within the tracked face region was used to control the computer's mouse cursor. To achieve smooth, natural cursor motions, a concept of momentum was also implemented, in which the optical flow acts as a force on a particle of small mass (the cursor) moving in a high-friction surrounding.
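The momentum concept can be illustrated with a small sketch, assuming a per-axis equation of motion m·dv/dt = F − c·v, where F is derived from the optical flow. The class name, the default mass and friction values, and the exact integration scheme are assumptions for illustration; the paper does not report its parameters or how flow is scaled into a force.

```python
import math
from dataclasses import dataclass


@dataclass
class MomentumCursor:
    """Cursor modelled as a small mass moving under a force with high friction.

    Per axis: m * dv/dt = F - c * v. Default mass/friction are assumed values.
    """
    x: float = 0.0
    y: float = 0.0
    vx: float = 0.0
    vy: float = 0.0
    mass: float = 0.05
    friction: float = 5.0

    def _integrate(self, pos, vel, force, dt):
        # Exact solution for a force held constant over the step: velocity
        # relaxes exponentially toward the terminal value F / c, which stays
        # stable for any dt (unlike explicit Euler with high friction).
        v_inf = force / self.friction
        tau = self.mass / self.friction
        decay = math.exp(-dt / tau)
        new_vel = v_inf + (vel - v_inf) * decay
        new_pos = pos + v_inf * dt + (vel - v_inf) * tau * (1.0 - decay)
        return new_pos, new_vel

    def step(self, fx, fy, dt=1.0 / 30.0):
        """Advance one video frame (30 fps by default) given a force (fx, fy)."""
        self.x, self.vx = self._integrate(self.x, self.vx, fx, dt)
        self.y, self.vy = self._integrate(self.y, self.vy, fy, dt)
```

In use, each frame would call something like `cursor.step(gain * dx, gain * dy)`, where `(dx, dy)` is the mean flow in the face region and `gain` is a hypothetical scaling constant. The small mass lets the cursor respond within a few frames, while the high friction makes it stop almost immediately once the head stops moving, which suppresses jitter.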

The system was implemented entirely in Matlab and worked well at resolutions as low as 200 by 200 pixels. At that resolution, the application used roughly 14% of the CPU without skipping any frames, whereas the well-written C implementation of “eViacam” used roughly 25% (as measured on a Lenovo ThinkPad X1 Carbon laptop with an Intel Core i5 processor at 1.80 GHz and 4 GB RAM).

VoxCommando (voxcommando.com) was also used to quickly associate a speech command with a mouse click event. Using this system and a GUI we built, a user can turn their head in any direction (e.g., left, right, up, or down) and say “right click” to issue commands for a robot to change size.

3.2 Embodiment

When changing size, a robot should be covered to

protect nearby persons from potential injury. Our

requirements for a cover were: robustness, ease of

changing and retaining sizes (low energy and

“multistability”), light weight, and compactness.

(Water-proofing was not felt to be a priority for the

current study.) We also focused on the scenario of a (1) flat, (2) rectangular area that can be scaled (3) along its principal axes. (1) Curvature was not considered, for simplicity. Also, because we wished to consider the simplest case of a plane for our initial investigation, we did not require our prototype to stand; instead, it could be laid on the floor in front of a user. (2) Although triangles are also important because they can be combined to build all other polygons, a rectangle was chosen due to its relevance in engineering for modeling objects (box modeling) and in psychology, because many fabricated objects are rectangular, and because a single rectangle better approximates the frontal humanoid form in two dimensions. (3) Shear transformations were not considered because they do not preserve the rectangular shape.
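Why shear is excluded can be seen from a small worked example using standard 2-D linear maps; the symbols $s_x$, $s_y$, $k$, $w$, and $h$ are introduced here for illustration only. Apply an axis-aligned scaling $S$ and a shear $H$ to the edge vectors $\mathbf{e}_1$, $\mathbf{e}_2$ of a $w \times h$ rectangle and compare the angle between the transformed edges:

$$
S = \begin{pmatrix} s_x & 0 \\ 0 & s_y \end{pmatrix}, \qquad
H = \begin{pmatrix} 1 & k \\ 0 & 1 \end{pmatrix}, \qquad
\mathbf{e}_1 = \begin{pmatrix} w \\ 0 \end{pmatrix}, \quad
\mathbf{e}_2 = \begin{pmatrix} 0 \\ h \end{pmatrix}
$$

$$
(S\mathbf{e}_1)^\top (S\mathbf{e}_2) = (s_x w)(0) + (0)(s_y h) = 0, \qquad
(H\mathbf{e}_1)^\top (H\mathbf{e}_2) = (w)(k h) + (0)(h) = k w h
$$

Scaling keeps the corner angle at 90°, so the result is still a rectangle of size $s_x w \times s_y h$, whereas any nonzero shear $k$ turns the rectangle into a parallelogram.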

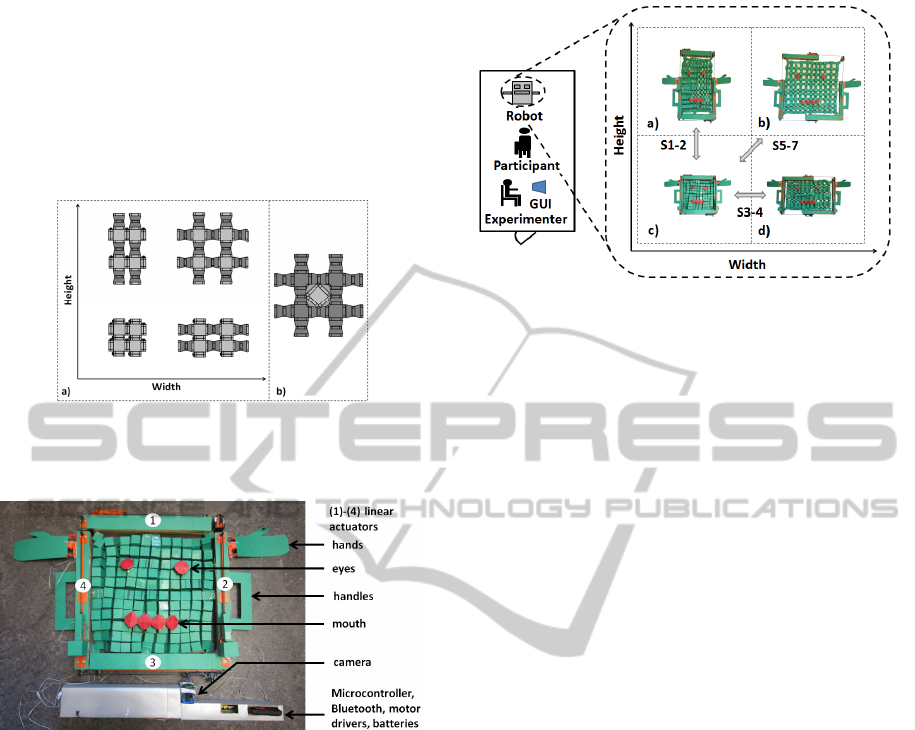

To create a cover, a simple solution involving an

elastic material such as latex could have problems

such as tearing, deterioration, force required to

maintain an expanded state, slack in the contracted

state, and allergies in some interacting persons.

Therefore, we instead designed and implemented a folding pattern, shown in Figure 2, composed of squares connected by V-shaped strips (contracted: 27.5 cm, expanded: 77.5 cm). This plane can be made arbitrarily flat, and its holes too small for a person's fingers to pass through, by reducing the side lengths of the squares and connecting strips, or by covering it with a top layer.
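As a rough indication of the cover's range, assume the two lengths above are its extent along one axis in the contracted and expanded states (the paper does not say which dimension they refer to). The linear expansion ratio, and the area ratio if both axes expand together, would then be:

$$
\frac{77.5\,\text{cm}}{27.5\,\text{cm}} = \frac{31}{11} \approx 2.82, \qquad
\left(\frac{31}{11}\right)^{2} = \frac{961}{121} \approx 7.94
$$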

In addition to a cover, a size-changing frame was

constructed using four rack and pinion style linear

actuators, as can be seen in Figure 3. Bluetooth (for issuing motor commands) and a camera (for capturing video) were included. Typical features of a humanoid companion robot, such as a simplified face with a neutral expression and moving hands, were also added to provide a feeling of human-likeness and familiarity. Although Suica is only a prototype with various limitations, unlike previous artifacts it can expand and contract in height, width, or both, while presenting a safe, complete appearance.

4 IMPRESSIONS OF A SIZE-CHANGING ROBOT

To gain insight into how changes in height and

width are perceived in a robot, a study was

conducted with Suica using the Think Aloud Method

(Lewis and Rieman, 1993). This method was useful

because we did not know how people would

perceive size changes.

4.1 Participants

Eight participants (age: M = 33.5 years, SD = 9.6, 2

female, 6 male) working at a university lab in

Sweden participated for approximately 30 minutes

each and were not remunerated.

4.2 Procedure

Each participant sat in front of Suica in a small room

and the door was closed, leaving them alone with the

experimenter, as in Figure 4. A simple handout

introduced our robot and stated that they could


freely speak any thoughts that came to mind. When

ready, participants imagined interacting and watched

the prototype change size, describing aloud what

they saw. Suica was controlled by the experimenter

to reduce participants’ workload and ensure the

same stimuli were witnessed by all. Afterwards, short interviews were conducted, and the transcribed protocols were coded by the experimenter.

Figure 2: Size-changing cover: (a) a main layer which

enables changes in height and width, and (b) an optional

top layer (shown lightly shaded over the dark main layer).

Figure 3: Actual photo of our humanoid prototype, Suica,

indicating component locations.

4.3 Conditions

Seven size changes were shown in random order:

S1 Tall: the robot became tall from a small state

(a transition from state “c” to “a” in Figure 4)

S2 Short: the robot became small from a tall state

(Figure 4 “a” to “c”)

S3 Wide: the robot became wide from a small

state (Figure 4 “c” to “d”)

S4 Thin: the robot became small from a wide state

(Figure 4 “d” to “c”)

S5 Large: the robot became tall and wide from a

small state (Figure 4 “c” to “b”)

S6 Small: the robot became short and thin from a

large state (Figure 4 “b” to “c”)

S7 Repeated: the robot expanded and contracted three times (Figure 4 “c” to “b” and back).

Figure 4: Experiment layout and depiction of the seven

size changes which participants observed (S1-7) using

actual photos of our prototype, Suica, changing between

four states: (a) tall, (b) large, (c) small, and (d) wide.
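The seven conditions can be written down as transitions between the four states of Figure 4, which makes explicit which axis each condition changes. The sketch below encodes each state as a hypothetical (height level, width level) pair with 0 for contracted and 1 for expanded, and uses a per-participant seeded shuffle for the random order; both choices are assumptions for illustration, since the paper only states that the changes were shown "in random order".

```python
import random

# Each state of Figure 4 as (height level, width level): 0 = contracted, 1 = expanded.
# This 0/1 encoding is an illustrative assumption, not a measurement.
STATES = {"a": (1, 0), "b": (1, 1), "c": (0, 0), "d": (0, 1)}  # tall, large, small, wide

# Conditions as (label, start state, end state, number of repetitions).
CONDITIONS = {
    "S1": ("Tall", "c", "a", 1),
    "S2": ("Short", "a", "c", 1),
    "S3": ("Wide", "c", "d", 1),
    "S4": ("Thin", "d", "c", 1),
    "S5": ("Large", "c", "b", 1),
    "S6": ("Small", "b", "c", 1),
    "S7": ("Repeated", "c", "b", 3),  # expand to "b" and contract back, three times
}


def axis_changes(cond):
    """Return (height change, width change) for one transition of a condition."""
    _, start, end, _ = CONDITIONS[cond]
    (h0, w0), (h1, w1) = STATES[start], STATES[end]
    return h1 - h0, w1 - w0


def presentation_order(seed):
    """A shuffled order of S1-S7; seeding per participant makes it reproducible."""
    order = list(CONDITIONS)
    random.Random(seed).shuffle(order)
    return order
```

For example, `axis_changes("S5")` gives `(1, 1)` (both axes expand), while `axis_changes("S4")` gives `(0, -1)` (width only contracts).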

4.4 Predictions

We had some expectations of how size changes

would be perceived based on the literature in Section

2.3 and our own ideas (the term “intimidating” was

used in place of “dominant” to try to avoid

specialized words which participants might not use).

P1 Tall: Height-wise expansions would appear

intimidating, show a desire for attention, or

indicate curiosity.

P2 Short: Height-wise contractions would

indicate shame or focused tension.

P3 Wide: Width-wise expansions would appear

intimidating or show contentment.

P4 Thin: Width-wise contractions would show

tension or suffering.

P5 Large: Becoming large in both height and

width would appear intimidating.

P6 Small: Becoming small in both height and

width would appear cute or indicate fear.

P7 Repeated: Repeated changes would be

perceived as playful.

4.5 Results

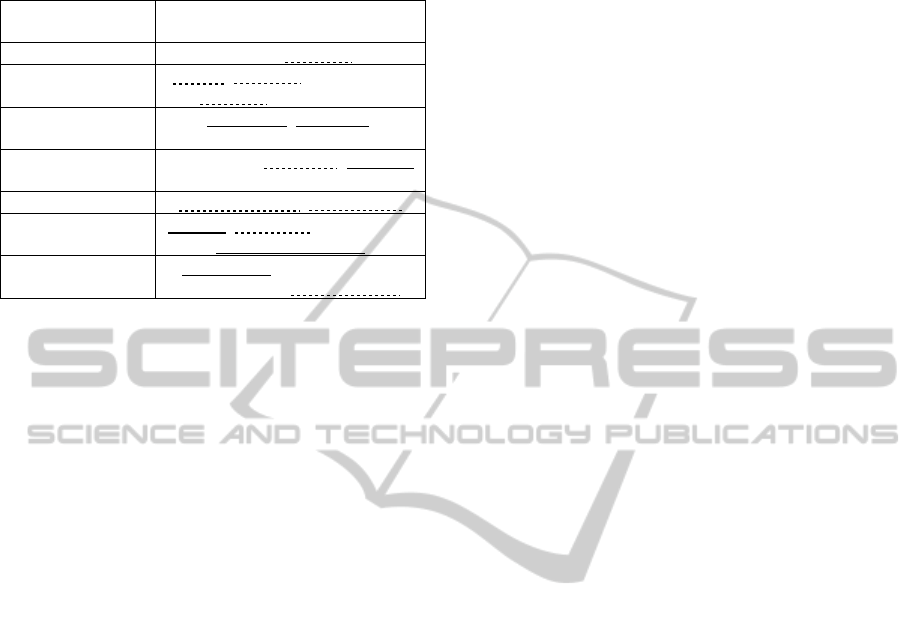

Typical impressions common to more than one participant, shown in Table 1, were analyzed with regard to (1) positivity, (2) consistency, and (3) agreement with our predictions. (1) A chi-squared test did not reveal a difference in the prevalence of positive, neutral, and negative impressions: χ²(2, N = 54) = 0.8, p = .7, indicating promise for communicating various cues. (2) Some consistency was also observed: expansions were reported as showing incredulity, similar to eyebrows rising or eyes opening wide, and contractions were perceived as cute, fearful, or attentive. (3) Predictions were partially supported in only 4 of 7 cases, with only 28% and 57% of impressions, respectively, directly predicted or related to predictions. We attribute this to the high complexity of human signalling (many related cues share similar expressions) and to the interpretation required to associate robot size changes with biological motions. We feel that the number of unanticipated impressions confirmed that it had been useful to attempt to gain insight into the kinds of impressions which result from observing size changes.

Table 1: Typical impressions of size changes (bold font and italics show predicted and related impressions; solid and dotted lines show positive and negative impressions).

| Size change (prediction) | Impressions (no. of participants) |
|---|---|
| S1 Tall (P1) | incredulous (3), angry (2) |
| S2 Short (P2) | sad (3), fearful (2), attentive (2), angry (2), responding (2) |
| S3 Wide (P3) | smiling (3), happy (2), incredulous (2) |
| S4 Thin (P4) | attentive (3), fearful (2), attractive (2) |
| S5 Large (P5) | intimidating (4), unnatural (2) |
| S6 Small (P6) | cute (4), fearful (2), attentive (2), face more visible (2) |
| S7 Repeated (P7) | ebullient (3), wanting to show something (3), disagreeing (2) |
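The reported test can be partly checked against Table 1. The counts in Table 1 sum to 54, matching N, and with df = 2 the chi-squared survival function has the closed form exp(−x/2), so χ² = 0.8 gives p = e^−0.4 ≈ 0.67, consistent with the reported p = .7. The sketch below assumes a goodness-of-fit test against equal expected frequencies for the three categories (df = 2 fits this assumption). The actual positive/neutral/negative split is not reported in the text, so the counts used for `chi_square_uniform` in any example are illustrative only.

```python
import math

# Impression counts per size change, transcribed from Table 1.
TABLE1_COUNTS = {
    "S1": [3, 2],
    "S2": [3, 2, 2, 2, 2],
    "S3": [3, 2, 2],
    "S4": [3, 2, 2],
    "S5": [4, 2],
    "S6": [4, 2, 2, 2],
    "S7": [3, 3, 2],
}


def chi_square_uniform(counts):
    """Goodness-of-fit statistic against equal expected counts in every category."""
    expected = sum(counts) / len(counts)
    return sum((o - expected) ** 2 / expected for o in counts)


def p_value_df2(stat):
    """Upper-tail p-value of a chi-squared statistic with 2 degrees of freedom.

    For df = 2 the chi-squared survival function is exactly exp(-x / 2).
    """
    return math.exp(-stat / 2.0)


# Total number of impressions listed in Table 1 (the N of the reported test).
n_total = sum(sum(v) for v in TABLE1_COUNTS.values())
```

Given the actual counts of positive, neutral, and negative impressions, `chi_square_uniform` would reproduce the statistic, and `p_value_df2` its p-value; for other degrees of freedom a general survival function (e.g. from a statistics library) would be needed.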

Some impressions were not directly predicted but

related to our predictions. For P1, the robot was

described as angry rather than intimidating, which

could have been because anger displays intimidate

(Clark, Pataki and Carver, 1996). For S2 and S4, impressions of fearfulness were related to P6, which concerned becoming smaller. For P2, P4 and P6, although one participant was reminded of the focused tension of a player in a Judo match, attentiveness was attributed in place of tension; these two constructs have been grouped as “activation” (Tonn, 1984). For P4, fear was perceived in place of suffering; the two are related, as fear exerts unpleasant physical effects. For P7, repeated changes were described not as playful but as happy and excited, like jumping for joy or a dog wagging its tail; these concepts are related, as “cheerful” and “joyous” have been listed as synonyms for playfulness (www.thesaurus.com/browse/playful).

Some results were unanticipated. For P1, only one participant reported feeling that Suica desired attention by becoming taller, presumably because participants had already been asked to watch the robot; curiosity was not perceived, as there was no obstacle to see over. For P2, impressions of shame were not reported, possibly because shame is conveyed by gaze directed toward the ground (whereas Suica's eyes always looked forward). For P3, the widening robot seemed to be smiling, laughing, finding humor in something, and otherwise happy; however, this was due to a Cheshire Cat-like widening of the robot's mouth and not to the robot seeming to have a full belly, as we had predicted.

5 DISCUSSION AND CONCLUSIONS

Results of the current study were encouraging. The

main contribution of the study was to identify a rich

range of positive and negative impressions which

people associate with size changes in a robot.

Positive impressions included that our robot

appeared cute and attentive when contracting, and

happy when widening or repeatedly expanding and

contracting. Negative impressions included that the

robot seemed intimidating when expanding, angry

when thin, and fearful when contracting. This

suggests that to present a fun impression when being

controlled, a robot could seek to show mostly

positive signals, and accompany negative signals

with positive ones: e.g., a robot could smile while

expanding to accomplish a task then contract again.

Additionally, we built a physiological computing interface based on head motion, which could be used by elderly or disabled persons and requires fewer computational resources than the previous approach we compared it with (eViacam). We

also reported on the construction of a new prototype

companion robot, Suica, which can expand in height

or width (a video is available online, e.g., at the first

author’s homepage: martin-cooney.com).

These results are limited by the prototype used

and exploratory data acquisition design, including

the participants (a small number of researchers at a

university in Sweden). Our next step will be to conduct a more rigorous experiment with a robot capable of performing practical tasks such as reaching for objects and getting out of the way. This

will indicate if perceptions differ based on a robot’s

task: e.g., will becoming large intimidate even if it is

known that a robot is reaching for a high object?

Physiological measurements such as skin conductance and salivary hormone tests could provide objective evidence of unpleasant impressions. We also aim to design a new GUI in which a user can control the area and symmetry of a robot online by bringing their face closer or further away and by tilting their head. Furthermore, we will investigate impressions of changes in speed and depth (e.g., swiftness could indicate arousal, and a thick or


paper-thin embodiment could indicate solid resolve or indecision), as well as of shear and local size changes (e.g., skew could indicate discord, a large head could seem intelligent or cute, and large eyes or ears could express interest). Sensors and mechanisms to improve symmetry, reduce drooping due to gravity, and compensate for friction should allow cues to be transmitted more accurately.

ACKNOWLEDGEMENTS

We’d like to thank all those who helped! We

received funding from the Center for Applied

Intelligent Systems Research (CAISR) program,

Swedish Knowledge Foundation.

REFERENCES

Alonso-Mora, J., et al., 2012. Object and Animation Display with Multiple Aerial Vehicles. In Proc. IROS, 1078-1083. DOI: 10.1109/IROS.2012.6385551.

An, B., et al., 2014. An End-to-End Approach to Making Self-Folded 3D Surface Shapes by Uniform Heating. In Proc. ICRA, 1466-1473. DOI: 10.1109/ICRA.2014.6907045.

Brodbeck, L., Iida, F., 2012. Enhanced Robotic Body Extension with Modular Units. In Proc. IROS, 1428-1433. DOI: 10.1109/IROS.2012.6385516.

Burgoon, J. K., 1991. Relational Message Interpretations of Touch, Conversational Distance, and Posture. Journal of Nonverbal Behavior 15 (4), 233-259. DOI: 10.1007/BF00986924.

Carney, D. R., Hall, J. A., Smith LeBeau, L., 2005. Beliefs about the nonverbal expression of social power. Journal of Nonverbal Behavior 29 (2), 105-123. DOI: 10.1007/s10919-005-2743-z.

Clark, M. S., Pataki, S. P., Carver, V. H., 1996. Some thoughts and findings on self-presentation of emotions in relationships. In Knowledge structures in close relationships: A social psychological approach, 247-274.

Cootes, T. F., Taylor, C. J., Cooper, D. H., Graham, J., 1992. Training models of shape from sets of examples. In Proc. BMVC'92, 266-275.

Cousins, S., Evans, H., 2014. ROS Expands the World for Quadriplegics. IEEE Robotics & Automation Magazine 21 (2), 14-17.

Darwin, C. R., 1872. The expression of the emotions in man and animals. London: John Murray. 1st edition. Retrieved Nov. 4, 2014 from http://darwin-online.org.uk/content/frameset?itemID=F1142&viewtype=text&pageseq=1.

Dautenhahn, K., 2004. Robots We Like to Live With? – A Developmental Perspective on a Personalized, Life-Long Robot Companion. In Proc. RO-MAN, 17-22. DOI: 10.1109/ROMAN.2004.1374720.

Fairclough, S., Gilleade, K., Nacke, L. E., Mandryk, R. L., 2011. Brain and Body Interfaces: Designing for Meaningful Interaction. In Proc. CHI EA, 65-68. DOI: 10.1145/1979742.1979591.

Kanemura, A., et al., 2013. A Waypoint-Based Framework in Brain-Controlled Smart Home Environments: Brain Interfaces, Domotics, and Robotics Integration. In Proc. IROS, 865-870. DOI: 10.1109/IROS.2013.6696452.

Karlsson, S. M., Bigun, J., 2012. Lip-motion events analysis and lip segmentation using optical flow. In Proc. CVPRW 2012, 138-145. DOI: 10.1109/CVPRW.2012.6239228.

Lewis, C., Rieman, J., 1993. Task-Centered User Interface Design: A Practical Introduction. Technical Document.

Lorenz, K., 1971. Studies in Animal and Human Behavior. Harvard Univ. Press, Cambridge, MA.

Lucas, B. D., Kanade, T., 1981. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proc. IJCAI '81, 674-679.

Maslow, A. H., 1943. A theory of human motivation. Psychological Review 50 (4), 370-396. DOI: 10.1037/h0054346.

Miura, K., 1985. Method of Packaging and Deployment of Large Membranes in Space. Inst. of Space and Astronaut. Science report 618, 1-9.

Pedersen, E. W., Subramanian, S., Hornbæk, K., 2014. Is my phone alive?: A large-scale study of shape change in handheld devices using videos. In Proc. CHI, 2579-2588. DOI: 10.1145/2556288.2557018.

Rae, I., Takayama, L., Mutlu, B., 2013. The influence of height in robot-mediated communication. In Proc. HRI, 1-8. DOI: 10.1109/HRI.2013.6483495.

Revzen, S., Bhoite, M., Macasieb, A., Yim, M., 2011. Structure synthesis on-the-fly in a modular robot. In Proc. IROS, 4797-4802. DOI: 10.1109/IROS.2011.6094575.

Steltz, E., et al., 2009. JSEL: Jamming Skin Enabled Locomotion. In Proc. IROS, 5672-5677. DOI: 10.1109/IROS.2009.5354790.

Tonn, B. E., 1984. A sociopsychological contribution to the theory of individual time-allocation. Environment and Planning A 16 (2), 201-223.

Walters, M. L., Koay, K. L., Syrdal, D. S., Dautenhahn, K., Boekhorst, R., 2009. Preferences and Perceptions of Robot Appearance and Embodiment in Human-Robot Interaction Trials. In Proc. New Frontiers in Human-Robot Interaction: Symposium at AISB09, 136-143.

Wu, F. Y., Asada, H. H., 2014. Bio-artificial synergies for grasp posture control of supernumerary robotic fingers. In Proc. Robotics: Science and Systems.

You, Z., Kuribayashi, K., 2003. A Novel Origami Stent. In Proc. Summer Bioengineering Conf.
