Design, Implementation and Simulation of an Experimental Processing Architecture for Enhancing Real-time Video Services by Combining VANET, Cloud Computing System and Onboard Navigation System

K. Hammoudi (1,2), N. Ajam (1,2), M. Kasraoui (1), F. Dornaika (3,4), K. Radhakrishnan (2,*), K. Bandi (2,*), Q. Cai (2,*) and S. Liu (2,*)

(1) Research Institute on Embedded Electronic Systems (IRSEEM), IIS Group, St-Etienne-du-Rouvray, France

(2) ESIGELEC School of Engineering, Department of ICT (* MS Students), St-Etienne-du-Rouvray, France

(3) Department of Computer Science and Artificial Intelligence, University of the Basque Country, San Sebastián, Spain

(4) IKERBASQUE, Basque Foundation for Science, Bilbao, Spain

Keywords: Vehicular Network (VANET), Vehicular Cloud Computing (VCC), Image-based Recognition, Fusion of Multi-source Imagery, Real-time Video Services, Cooperative Monitoring System, Sensor Networks.

Abstract:

In this paper, we propose the design of a novel, experimental cloud computing system. The proposed system aims at enhancing the computational, communication and analytic capabilities of road navigation services by merging several independent technologies, namely vision-based embedded navigation systems, prominent Cloud Computing Systems (CCSs) and Vehicular Ad-hoc NETworks (VANETs). This work presents our initial investigations by describing the design of a global generic system. The designed system has been tested with various scenarios of video-based road services. Moreover, the associated architecture has been implemented on a small-scale simulator of an in-vehicle embedded system. The implemented architecture has been tested on a simulated road service that assists police agencies. The goal of this service is to recognize and track wanted individuals and vehicles in a real-time monitoring system remotely connected to moving cars. The presented work demonstrates the potential of our system for efficiently enhancing and diversifying real-time video services in road environments.

1 INTRODUCTION AND MOTIVATION

In this work, we propose to exploit cloud computing systems for developing real-time road video services from embedded navigation systems and VANETs (Vehicular Ad-hoc NETworks). The ultimate objective is to test the proposed system on a vehicle fleet. More particularly, this paper presents the design, implementation and simulation of a cloud-based recognition system for extending real-time road video services. The proposed global generic system will exploit cloud-based embedded recognition systems and VANET technologies, on the one hand for analyzing road traffic (e.g., vehicular or navigation information), and on the other hand for pooling computational resources and sharing relevant visually extracted information. Notably, the designed system will be useful for identifying dynamic Points Of Interest (POIs) from embedded cameras (e.g., traffic-based POIs) and then sharing the identified POIs with potentially interested external stakeholders (e.g., surrounding vehicles or road agencies).

For instance, these technologies can be exploited to improve road traffic, emergency mapping or citizen security by cooperatively analyzing acquired georeferenced road images. Below, we present some scenarios based on the detection of dynamic POIs:

• Sc. 1: a vehicle can detect an available parking area and transmit its GPS location within a predefined neighborhood to inform surrounding drivers, by exploiting a cloud computing system and VANET,

• Sc. 2: each vehicle can similarly transmit images for analyzing and mapping road meteorology in real time. Thus, drivers can define an itinerary based on meteorological criteria, notably to avoid driving through areas with bad weather (e.g., snowy roads),

• Sc. 3: a vehicle can extract on-the-fly the license

plate of preceding vehicles and then, sending

the extracted plate characters to police services

174

Hammoudi K., Ajam N., Kasraoui M., Dornaika F., Radhakrishnan K., Bandi K., Cai Q. and Liu S..

Design, Implementation and Simulation of an Experimental Processing Architecture for Enhancing Real-time Video Services by Combining VANET,

Cloud Computing System and Onboard Navigation System.

DOI: 10.5220/0005330201740179

In Proceedings of the 5th International Conference on Pervasive and Embedded Computing and Communication Systems (PECCS-2015), pages

174-179

ISBN: 978-989-758-084-0

Copyright

c

2015 SCITEPRESS (Science and Technology Publications, Lda.)

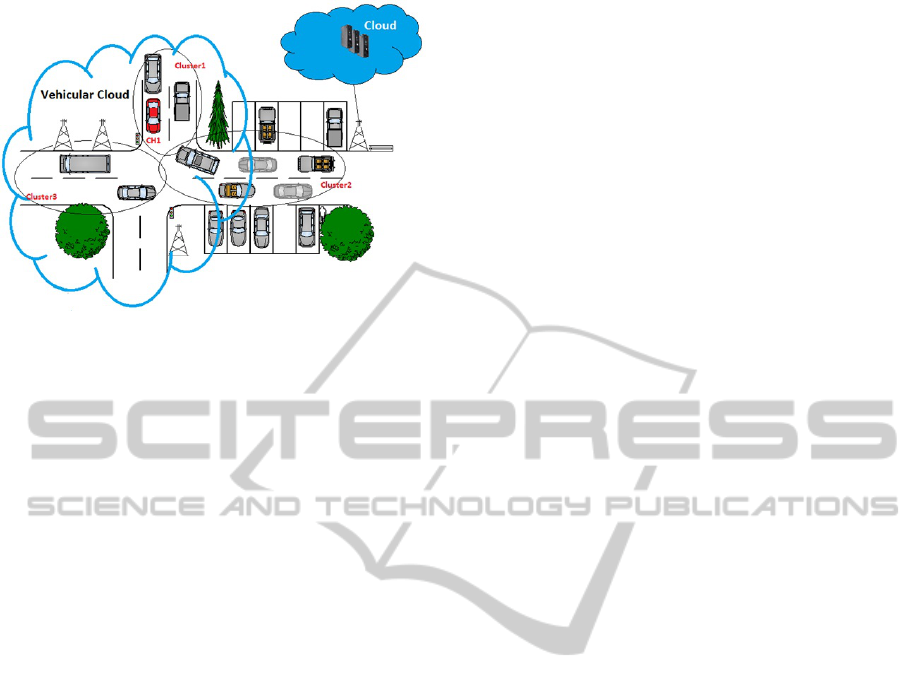

Figure 1: Illustration of the designed cloud computing sys-

tem.

searching to localize stolen vehicles by matching

extracted data with their reference databases,

• Sc. 4: similarly, a vehicle can extract on-the-fly

people faces from the streets and then, sending the

extracted face images to police services that aim

to localize searched individuals.

In this study, we tested the proposed cloud-based system on the last two scenarios (Sc. 3 and Sc. 4), which relate to police service applications.

2 RELATED WORK

Nowadays, cloud computing developments are revolutionizing the world by providing companies with increasingly powerful services. In particular, many companies tend to store their data on external servers or in data centers. Indeed, this technology improves the Quality of Service (QoS), notably for data management, data security and data distribution. In this way, cloud computing providers allow many companies to develop services specifically focused on their core activities. More precisely, cloud computing can be defined as a technology providing resources at three levels, namely infrastructure, software platforms and services (Whaiduzzaman et al., 2014). Cloud computing was initially deployed over wired Internet networks and has progressively been extended to mobile networks (e.g., through cellular networks). Notably, cloud computing technologies facilitate the development of hybrid systems as well as the pooling of computational resources.

In this work, we are particularly interested in the development of cloud computing systems based on VANETs for enhancing and diversifying real-time road services.

VANETs are ad-hoc networks: they are self-organizing, in the sense that each node can communicate with the others without relying on a predefined infrastructure. VANETs were primarily developed to support Intelligent Transport Systems through Vehicle-to-Infrastructure (V2I) and Vehicle-to-Vehicle (V2V) communications (e.g., (Maslekar, 2011)).

Besides, the new generation of mass-market vehicles is equipped with computer-aided embedded navigation and vision systems such as Advanced Driver Assistance Systems (ADAS). In particular, ADAS are increasingly employed for detecting road obstacles (e.g., self-parking) or for assessing the visibility of roads (e.g., automatic lighting systems). In parallel, experimental multi-camera vehicle systems are actively being developed for research in cartography and machine vision, in order to reconstruct urban environments in 3D and to develop fully autonomous vehicles (Hammoudi and McDonald, 2013; Hammoudi et al., 2013).

To the best of our knowledge, video services in vehicular clouds are not yet well developed. In (Gerla et al., 2013), Gerla et al. presented an image-on-demand service named “Pics-on-wheels” in which vehicles send their acquired images, for example when an accident is detected. These images can then be used for insurance claims. In our case, we present a generic cloud computing system that could be used for developing various real-time video services by exploiting a distributed computing system. Notably, this system will be employed for sharing traffic information (e.g., in aided navigation or road safety) by exploiting embedded vision-based systems (e.g., recognition systems), CCSs and VANETs (see Figure 1). First results of our work were presented at a French-speaking seminar on vision-based processing (CORESA). This paper presents an extended work that describes in more detail the design of the simulator as well as the proposed processing architecture and use cases.

3 PROPOSED GLOBAL GENERIC SYSTEM FOR REAL-TIME ROAD VIDEO SCENARIOS

In our case, it is assumed that the vehicles will be

equipped with embedded camera system, a GPS mod-

ule and a VANET connecting system (802.11p). No-

tably, new generation vehicles are equipped with var-

Design,ImplementationandSimulationofanExperimentalProcessingArchitectureforEnhancingReal-timeVideo

ServicesbyCombiningVANET,CloudComputingSystemandOnboardNavigationSystem

175

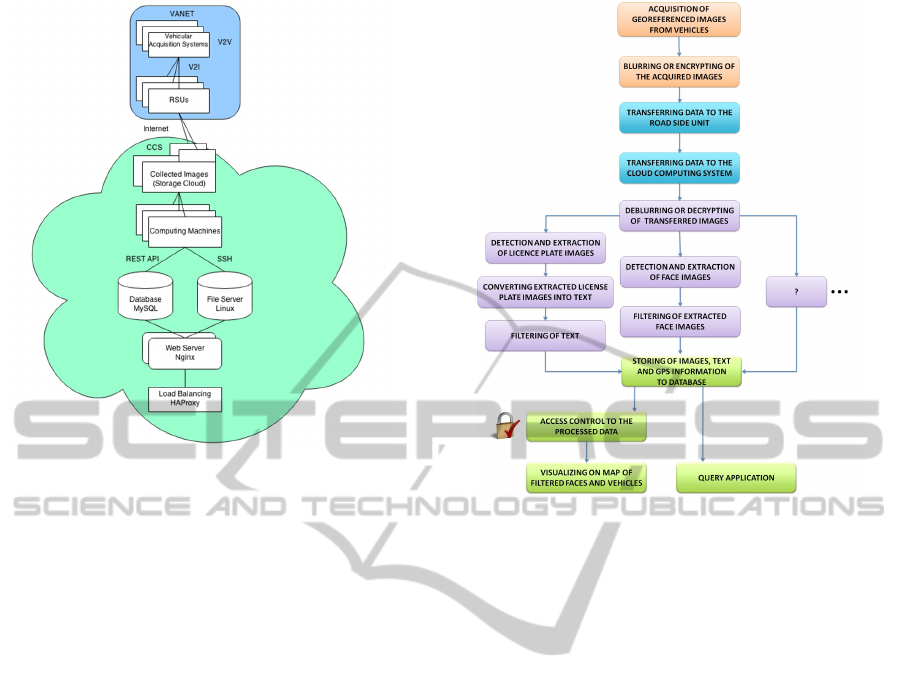

Figure 2: Proposed processing architecture of a global

generic system.

ious types of sensors such as cameras located at the

front and rear end. The proposed vision-based cloud

computing system will take advantage of distributed

computing and storing capabilities of conventional

CCS and VANET (see Figure 1) for providing video

services requiring high resources in term of data pro-

cessing. In particular, the proposed system will be

useful for visually recognizing dynamical objects of

interest such as, for the search of stolen vehicles or

individuals.

More precisely, the proposed system will exploit vehicular networks or an external data center according to needs. Yu et al. classify cloud-based systems related to VANETs into three categories (Yu et al., 2013). First, the vehicular cloud is exclusively composed of vehicles; it allows vehicles to dynamically schedule computational and storage resources on demand. Second, the roadside cloud is composed of dedicated servers and RSUs (Road Side Units), the latter providing access to the cloud. This cloud is used exclusively by vehicles located within the radio coverage of an RSU; vehicles roam between successive RSUs to continuously benefit from the service. Third, the central cloud is based either on dedicated servers on the Internet or on data centers within the VANET itself. In our case, we use the concept of a Hybrid Vehicular Cloud (HVC), which shares the processing between the Vehicular Cloud (VC) and the central cloud.
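The paper does not specify how the HVC decides whether a task stays in the vehicular cloud or goes to the central cloud. The Python sketch below illustrates one possible placement rule under explicit assumptions: the `Tier` and `Task` types, the idle-vehicle count and the 2-second threshold are hypothetical, and the example timings are the workstation measurements reported in Table 1, not measurements taken on vehicles.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """Cloud tiers following the classification of Yu et al. (2013)."""
    VEHICULAR = "vehicular cloud"
    ROADSIDE = "roadside cloud"  # listed for completeness; unused by the HVC rule below
    CENTRAL = "central cloud"


@dataclass
class Task:
    name: str
    est_seconds: float  # estimated processing time on one node (hypothetical input)


def place_task(task: Task, idle_vehicles: int, max_local_seconds: float = 2.0) -> Tier:
    """Hypothetical HVC rule: keep short tasks in the vehicular cloud when
    neighbouring vehicles have spare capacity, otherwise offload to the central cloud."""
    if idle_vehicles > 0 and task.est_seconds <= max_local_seconds:
        return Tier.VEHICULAR
    return Tier.CENTRAL


if __name__ == "__main__":
    for t in (Task("face extraction", 1.08), Task("license plate extraction", 3.29)):
        print(t.name, "->", place_task(t, idle_vehicles=2).value)
```

With two idle neighbours, face extraction stays in the vehicular cloud while license plate extraction is offloaded. A real policy would also account for link quality and how long neighbours remain in radio range.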

Moreover, Figure 2 shows the architecture developed to support the various data transfer and data processing steps. First, vehicles communicate with an Internet access point using vehicle-to-infrastructure (V2I) or vehicle-to-vehicle (V2V) communications. RSUs are exploited to remove redundancy in the captured images and GPS information. Second, the collected georeferenced raw data are sent to a customized storage cloud (e.g., Amazon cloud). Computing machines continuously run the face extraction, GPS extraction and license plate recognition algorithms in parallel. The extracted license plate numbers and GPS information are saved in a database (textual information), while the extracted images are copied to file servers. Users access the service by connecting to a load-balancing server, which distributes the requests to several working web servers.

Figure 3: Proposed global dataflow diagram.

Figure 3 shows the global dataflow diagram of the tested scenarios (Sc. 3 and Sc. 4). It is worth mentioning that our architecture can also be used to process other scenarios related to new real-time road video services (e.g., Sc. 1 and Sc. 2).
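The RSU stage of the architecture (removing redundancy, then forwarding data to the cloud) can be illustrated with a minimal Python sketch. The paper does not describe how redundancy is detected, so the rule used here is an assumption: drop byte-identical images, and drop frames taken less than a few metres after the previous kept frame of the same vehicle (the `Frame` type and the 5 m threshold are hypothetical). The round-robin dispatcher mirrors the even distribution over cloud nodes described for the implemented prototype, which actually used a bash script over SSH.

```python
import hashlib
import itertools
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, List, Tuple


@dataclass(frozen=True)
class Frame:
    vehicle_id: str
    lat: float
    lon: float
    jpeg: bytes


def approx_distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    # Equirectangular approximation: adequate for the short gaps between consecutive frames.
    r = 6371000.0
    x = math.radians(lon2 - lon1) * math.cos(math.radians((lat1 + lat2) / 2))
    y = math.radians(lat2 - lat1)
    return r * math.hypot(x, y)


def drop_redundant(frames: Iterable[Frame], min_move_m: float = 5.0) -> Iterator[Frame]:
    """Yield only frames that are new in content and in position (hypothetical rule)."""
    seen_hashes = set()
    last_pos: Dict[str, Tuple[float, float]] = {}
    for f in frames:
        digest = hashlib.sha256(f.jpeg).hexdigest()
        if digest in seen_hashes:
            continue  # byte-identical image already forwarded
        prev = last_pos.get(f.vehicle_id)
        if prev is not None and approx_distance_m(prev[0], prev[1], f.lat, f.lon) < min_move_m:
            continue  # vehicle has barely moved since its last kept frame
        seen_hashes.add(digest)
        last_pos[f.vehicle_id] = (f.lat, f.lon)
        yield f


def dispatch_round_robin(frames: Iterable[Frame], nodes: List[str]) -> Dict[str, List[Frame]]:
    """Assign frames to cloud nodes in turn so the load is spread evenly."""
    queues: Dict[str, List[Frame]] = {n: [] for n in nodes}
    for node, frame in zip(itertools.cycle(nodes), frames):
        queues[node].append(frame)
    return queues
```

Chaining the two (`dispatch_round_robin(drop_redundant(frames), ["node-1", "node-2"])`) reproduces the two-node setup of the simulator. Content hashing only catches exact duplicates; near-duplicate detection would need perceptual hashing or image comparison.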

4 EXPERIMENTAL RESULTS

4.1 Developed Indoor Vehicular Monitoring Simulator

Figure 4 shows a drawing of the designed small-scale car model, i.e., the chassis of the indoor simulator (Sub-figure 4(a)), as well as the corresponding built car model (Sub-figure 4(b)). The drawing in Sub-figure 4(a) includes 2D views of the major car pieces and their associated dimensions (drawn with a Computer Aided Design software named CadStd¹). The annotated dimensions correspond to those of a standard sedan scaled down by a factor of approximately 7. Sub-figure 4(b) shows the painted car model (rigid mock-up) equipped with its embedded monitoring system.

(a) Drawing of the designed car model (2D views).

(b) Built car model with embedded devices.

Figure 4: Designed small-scale car pieces and the corresponding built car model (final chassis of the simulator).

Figure 5 shows the developed embedded monitoring simulator in more detail. This vehicular monitoring simulator is composed of the car model and its associated vision-based embedded system. More precisely, the embedded system is equipped with a Logitech HD camera (see Sub-figure 5(a)) connected to a Raspberry Pi micro-computer (see Sub-figure 5(b)). The micro-computer includes an SD card for storing the acquired images. To simulate the motion of the car prototype, a screen was placed in front of the webcam, which filmed a video of a vehicle path acquired by an external Mobile Mapping System and displayed on that screen (e.g., videos from the KITTI research dataset² (Geiger et al., 2012; Fritsch et al., 2013)). For such datasets, the GPS information associated with the images is provided. An overall view of the vehicular monitoring simulator is depicted in Sub-figure 5(c). Moreover, the micro-computer includes a WiFi adapter that was used to simulate the VANET network. This embedded car prototype is connected to three workstations: one simulating the RSU and the other two simulating the cloud nodes.

(a) Acquisition part. (b) Processing part.

(c) Vehicular monitoring simulator (overall view).

Figure 5: Detailed parts of the developed vision-based embedded system and indoor configuration of the test bench for experimenting with road video services in real time.

¹ http://www.cadstd.com/

² http://www.cvlibs.net/datasets/kitti/
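On the vehicle side, the Raspberry Pi geotags each captured frame and pushes it to the RSU. The Python sketch below shows one way to do this with the standard `ftplib` module. It assumes the GPS comes from KITTI raw recordings, where each frame has an OXTS text line whose first three values are latitude, longitude (degrees) and altitude (metres). The JSON sidecar format, the function names and the FTP credentials are hypothetical; the paper only states that one script geotags images and another transfers them by FTP.

```python
import json
from ftplib import FTP
from pathlib import Path


def read_kitti_oxts(oxts_line: str) -> dict:
    """Parse one KITTI raw OXTS line; the first three fields are lat, lon, alt."""
    values = [float(v) for v in oxts_line.split()]
    return {"lat": values[0], "lon": values[1], "alt": values[2]}


def write_geotag(image_path: Path, oxts_line: str, timestamp: str) -> Path:
    """Write a hypothetical JSON sidecar (<image name>.json) holding the geotag."""
    tag = read_kitti_oxts(oxts_line)
    tag["time"] = timestamp
    sidecar = image_path.parent / (image_path.name + ".json")
    sidecar.write_text(json.dumps(tag))
    return sidecar


def upload_to_rsu(host: str, user: str, password: str, *paths: Path) -> None:
    """Send the image and its sidecar to the RSU over plain FTP."""
    with FTP(host) as ftp:
        ftp.login(user, password)
        for p in paths:
            with p.open("rb") as fh:
                ftp.storbinary(f"STOR {p.name}", fh)
```

A capture loop would call `write_geotag` for each saved frame and then `upload_to_rsu(rsu_host, user, password, image, sidecar)`. Embedding the position as EXIF GPS tags would be an alternative to a sidecar file, at the cost of an extra imaging library.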

4.2 Implemented Architecture of the Proposed Global Generic System

More precisely, two Python scripts run on the Raspberry Pi: one captures images and geotags them, and the other transfers the images to the RSU by FTP. On the RSU, a bash script sends these images to the two simulated cloud nodes using SSH. In this way, the data flow is evenly distributed to the cloud nodes through WiFi. The computing machines (i.e., the cloud nodes) process the images on the storage servers and obtain the extracted faces, license plates and GPS information by running a Python script that invokes the corresponding algorithms. On the web server, we implemented a RESTful API to access the database. The extracted images are archived on file servers, while the license numbers, GPS coordinates and times are updated in the database, so that the updated information can be visualized. Moreover, new extraction algorithms can be developed for various query applications.

Table 1: Time associated with data transfer and data processing for one image. Data is processed on Intel Core i5 workstations (2.4 GHz) running Windows 8.1 64-bit with 4 GB of RAM.

| Image transfer | Time (s) | Image processing | Time (s) |
|---|---|---|---|
| Raspberry Pi to RSU | 1.33 | Face extraction | 1.08 |
| RSU to cloud nodes | 1.12 | License plate extraction | 3.29 |
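Read as a per-image budget, Table 1 gives a rough end-to-end delay from capture to extracted features. The short Python sketch below adds the two transfer times to either the slower of the two extractions (if they run concurrently, as the architecture description suggests) or their sum (if they run one after the other). It assumes no queuing and ignores database updates and map display; the dictionary keys are illustrative names.

```python
# Per-image timings from Table 1, in seconds.
TRANSFER = {"raspberry_pi_to_rsu": 1.33, "rsu_to_cloud_nodes": 1.12}
PROCESSING = {"face_extraction": 1.08, "license_plate_extraction": 3.29}


def end_to_end_latency(parallel_processing: bool) -> float:
    """Transfer time plus processing time for one image, assuming no queuing."""
    transfer = sum(TRANSFER.values())
    if parallel_processing:
        processing = max(PROCESSING.values())  # both extractors run concurrently
    else:
        processing = sum(PROCESSING.values())  # extractors run one after another
    return transfer + processing


if __name__ == "__main__":
    print(f"parallel:   {end_to_end_latency(True):.2f} s")   # parallel:   5.74 s
    print(f"sequential: {end_to_end_latency(False):.2f} s")  # sequential: 6.82 s
```

License plate extraction dominates in both cases, so it is the natural first target when trying to move the service closer to real time.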

4.3 System Application and Evaluation

In this study, the police-related applications mentioned previously (Scenarios 3 and 4) were tested by deploying, on the proposed generic processing architecture (one simulated mobile node), computer vision approaches well known for their efficiency. Notably, open-source C# Emgu CV routines³ were used to carry out face extraction as well as OCR-based license plate extraction. Data matching was tested by comparing the extracted features with a reference database generated by an operator. The proposed experimentation pipeline distributes the flow of collected images, and the extracted features are localized and labeled on a Google Maps-based application in quasi real time. The time associated with data transfer and data processing for one image of 16.5 Kb (resolution of 640x480) is reported in Table 1.
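The matching step compares extracted features with an operator-built reference database. For license plates, a minimal Python sketch using the standard `sqlite3` module could look as follows. The `searched_vehicles` table, the normalization rule and the tolerance of one character edit (to absorb typical OCR confusions such as `0`/`D`) are assumptions, not details given in the paper; face matching is not covered here.

```python
import re
import sqlite3


def normalize_plate(text: str) -> str:
    """Uppercase and keep only letters and digits (drops spaces and dashes)."""
    return re.sub(r"[^A-Z0-9]", "", text.upper())


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def match_plate(conn: sqlite3.Connection, ocr_text: str, max_errors: int = 1) -> list:
    """Return reference plates within max_errors edits of the OCR output."""
    query = normalize_plate(ocr_text)
    rows = conn.execute("SELECT plate FROM searched_vehicles").fetchall()
    return [p for (p,) in rows if edit_distance(query, normalize_plate(p)) <= max_errors]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE searched_vehicles (plate TEXT)")
    conn.executemany("INSERT INTO searched_vehicles VALUES (?)",
                     [("AB-123-CD",), ("XY-987-ZT",)])
    print(match_plate(conn, "ab 123 c0"))  # ['AB-123-CD']
```

Scanning the whole table is fine for a small operator list; a larger database would need an index on the normalized plate plus a candidate-generation step before computing edit distances.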

5 CONCLUSIONS AND FUTURE WORK

This paper presents our initial investigations into the design, implementation and simulation of a cloud computing system for enhancing and diversifying real-time video services through VANET and Onboard Navigation Systems. A vehicular monitoring simulator has been developed for carrying out indoor experiments, and a generic hardware and software architecture is proposed for experimenting with new video service applications. The next stage will consist of transferring this technology onto two modular chassis that will be fixed on vehicle windshields for experiments in real mobile conditions (i.e., two moving nodes). Moreover, indoor research will be pursued to improve the architecture of the developed simulator, and simulations of the network architecture will be implemented with the ns2⁴ and ns3⁵ Network Simulators. Furthermore, we will investigate imagery-based detection of available parking areas in order to develop parking services. A corresponding target application was described in Scenario 1 and is illustrated in Figure 6.

Figure 6: Illustration of a scenario related to the detection of available parking areas by exploiting VANET.

³ http://www.emgu.com/

⁴ http://nsnam.isi.edu/nsnam/

⁵ http://www.nsnam.org/
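In the parking scenario (Scenario 1), a vehicle that detects a free spot shares its GPS location within a predefined neighborhood. A minimal Python sketch of the neighborhood test uses the haversine great-circle distance on a spherical Earth. The 500 m radius, the vehicle-position dictionary and the function names are hypothetical; in practice the positions would come from VANET beacons or from the cloud.

```python
import math
from typing import Dict, List, Tuple

EARTH_RADIUS_M = 6371000.0


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def vehicles_to_notify(spot: Tuple[float, float],
                       vehicles: Dict[str, Tuple[float, float]],
                       radius_m: float = 500.0) -> List[str]:
    """Sorted IDs of vehicles within the (hypothetical) notification radius of a free spot."""
    lat, lon = spot
    return sorted(v for v, (vlat, vlon) in vehicles.items()
                  if haversine_m(lat, lon, vlat, vlon) <= radius_m)


if __name__ == "__main__":
    spot = (49.4431, 1.0993)
    cars = {"car-a": (49.4431, 1.1000), "car-b": (49.4531, 1.0993), "car-c": (49.4441, 1.0993)}
    print(vehicles_to_notify(spot, cars))  # ['car-a', 'car-c']
```

A straight-line radius is the simplest neighborhood definition; a deployed service would more likely use driving distance along the road network.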

In addition to research activities, it is worth mentioning that the described processing architecture can also support pedagogical activities on embedded systems, notably in project-based learning.

ACKNOWLEDGEMENTS

This work is part of the SAVEMORE project⁶. The SAVEMORE project was selected in the context of the INTERREG IVA France (Channel) - England European cross-border co-operation programme, which is co-financed by the ERDF.

⁶ http://www.savemore-project.eu/

REFERENCES

Fritsch, J., Kuehnl, T., and Geiger, A. (2013). A new performance measure and evaluation benchmark for road detection algorithms. In International Conference on Intelligent Transportation Systems (ITSC).

Geiger, A., Lenz, P., and Urtasun, R. (2012). Are we ready for autonomous driving? The KITTI vision benchmark suite. In Conference on Computer Vision and Pattern Recognition (CVPR).

Gerla, M., Weng, J. T., and Pau, G. (2013). Pics-on-wheels: Photo surveillance in the vehicular cloud. In IEEE International Conference on Computing, Networking and Communications (ICNC), pages 1123–1127.

Hammoudi, K., Dornaika, F., Soheilian, B., Vallet, B., McDonald, J., and Paparoditis, N. (2013). A synergistic approach for recovering occlusion-free textured 3D maps of urban facades from heterogeneous cartographic data. International Journal of Advanced Robotic Systems, 10:10p.

Hammoudi, K. and McDonald, J. (2013). Design, implementation and simulation of an experimental multi-camera imaging system for terrestrial and multi-purpose mobile mapping platforms: A case study. Applied Mechanics and Materials, Trans Tech Publications, Selected papers from the International Conference on Optimization of the Robots, 332:139–144.

Maslekar, N. (2011). Adaptative traffic signal control system based on inter-vehicular communication. Ph.D. thesis, University of Rouen, ESIGELEC School of Engineering.

Whaiduzzaman, M., Sookhak, M., Gani, A., and Buyya, R. (2014). A survey on vehicular cloud computing. Journal of Network and Computer Applications, 40:325–344.

Yu, R., Zhang, Y., Gjessing, S., Xia, W., and Yang, K. (2013). Toward cloud-based vehicular networks with efficient resource management. IEEE Network, pages 48–55.