The EPOC Project

Energy Proportional and Opportunistic Computing System

Nicolas Beldiceanu¹, Bárbara Dumas Feris², Philippe Gravey², Sabbir Hasan³, Claude Jard⁴, Thomas Ledoux¹, Yunbo Li¹, Didier Lime⁵, Gilles Madi-Wamba¹, Jean-Marc Menaud¹, Pascal Morel⁶, Michel Morvan², Marie-Laure Moulinard², Anne-Cécile Orgerie⁷, Jean-Louis Pazat³, Olivier Roux⁵ and Ammar Sharaiha⁶

¹ Mines de Nantes, LINA, Nantes, France

² Telecom Bretagne, Brest, France

³ INSA de Rennes, IRISA, Rennes, France

⁴ Université de Nantes, LINA, Nantes, France

⁵ Ecole Centrale de Nantes, IRCCyN, Nantes, France

⁶ ENIB, Lab-STICC, Brest, France

⁷ CNRS, IRISA, Rennes, France

Keywords:

Data-center, Energy-efficiency, Virtualization, Task Placement, Optical Network.

Abstract:

With the emergence of the Future Internet and the dawning of new IT models such as cloud computing, the usage of data centers (DC), and consequently their power consumption, is increasing dramatically. Besides the ecological impact, energy consumption is a predominant criterion for DC providers since it determines the daily cost of their infrastructure. As a consequence, power management has become one of the main challenges for DC infrastructures and more generally for large-scale distributed systems. In this paper, we present the EPOC project, which focuses on optimizing the energy consumption of mono-site DCs connected to the regular electrical grid and to renewable energy sources.

1 INTRODUCTION

A data center (DC) is a facility used to house tens to thousands of computers and their associated components. These servers host applications available on the Internet, from simple web servers to multi-tier applications, as well as some batch jobs. With the explosion of online services, particularly driven by the expansion of cloud computing, DCs are consuming more and more energy. The growth of energy consumption by DCs is at the same time a technical, environmental and financial problem. Technically, in some areas (like Paris), the electrical grid is already saturated, which prevents the installation of new DCs or the expansion of existing ones. From an environmental point of view, electricity production causes substantial CO2 emissions, whereas financially OPEX (Operational Expenditure) has exceeded CAPEX (Capital Expenditure). Although computer servers have become less expensive and far more energy efficient over the last few years, the price of electricity has significantly increased, even in countries known for low electricity prices (e.g. France). To some extent, these operating costs are mainly related to power consumption. Several actions are possible to reduce these impacts and costs. One of them consists in using local power generation based on renewable energy, as Microsoft, Google, and Yahoo have done by building new DCs close to large and cost-efficient hydroelectric power sources.

However, the extension of hydroelectric power plants is severely limited by environmental issues, and other renewable energy sources provide only intermittent electricity. In the EPOC project, we focus on energy-aware task execution, from the hardware to the application's components, in the context of a small, mono-site DC (all resources are in the same physical location), which is connected to the regular electrical grid and to local renewable energy sources (such as windmills or solar cells). EPOC is a collaborative project between the Brittany and Pays de la Loire regions¹.

¹ This work has received French state support granted to the CominLabs excellence laboratory and managed by the National Research Agency in the "Investing for the Future" program under reference Nb. ANR-10-LABX-07-01.

Beldiceanu N., Dumas Feris B., Gravey P., Hasan S., Jard C., Ledoux T., Li Y., Lime D., Madi-Wamba G., Menaud J., Morel P., Morvan M., Moulinard M., Orgerie A., Pazat J., Roux O. and Sharaiha A.

The EPOC Project - Energy Proportional and Opportunistic Computing System.

DOI: 10.5220/0005487403880394

In Proceedings of the 4th International Conference on Smart Cities and Green ICT Systems (SMARTGREENS-2015), pages 388-394

ISBN: 978-989-758-105-2

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

Pioneering solutions have recently been proposed to tackle the challenge of powering small-scale DCs with only renewable energies (Goiri et al., 2014). In the context of EPOC, we are considering a hybrid approach relying on both the regular grid and a renewable energy source, such as sun or wind.

On the generation side, it is estimated that 10% of the electric energy produced by power plants is currently lost during transmission and distribution to consumers, with 40% of these losses occurring on the distribution network (Feng et al., 2009). For instance, in the United States in 2006, the total energy and distribution losses were about 1,638 billion and 655 billion kWh, respectively (Feng et al., 2009). Most of the energy-efficient Cloud frameworks proposed in the literature do not consider electricity availability and renewable energy in their models. This is a major drawback since significant amounts of electricity are lost during transportation and storage.

In the EPOC project, the first challenge consists in developing an energy proportional computing (EPC) distributed system that is transparent for users (from system to service-oriented runtime) and mainly based on hardware and virtualization capabilities. The second challenge addresses the energy issue through a strong synergy inside the infrastructure-software stack, and more precisely between applications and the resource management systems designed to tackle the first challenge. This approach must allow adapting the Service Level Agreement (SLA) by seeking the best trade-off between energy cost (from the regular electrical grid), energy availability (from renewable energy), and service degradation (from application reconfiguration to job suspension). The third challenge is to establish energy-efficient optical networks as key enablers of future internet and cloud-networking service deployment through the convergence of the optical infrastructure layer with the upper layers. Another strength of the EPOC project is that it integrates all research results into a common prototype named EpoCloud. This approach allows the pooling of development efforts and validates solutions on common and reproducible use cases. EPOC is an ongoing project, and the aim of this paper is to present the DC architecture designed in this context, from the hardware layer to the middleware layer.

2 EpoCloud PRINCIPLES

Our first goal is to design an energy-proportional computing system (EPCS), which implies no energy consumption whenever there is no activity. To date, dynamic power management has been widely used in embedded systems as an effective energy saving method, with a policy that attempts to adjust the power mode according to workload variations (Sridharan and Mahapatra, 2010). Unfortunately, servers consume energy even when they are idle. For an efficient EPCS, we need the capability to turn servers on and off dynamically. The vary-on/vary-off (VOVO) policy reduces the aggregate power consumption of a server cluster during periods of reduced workload.

The VOVO policy turns off servers so that only the

minimum number of servers that can support the

workload are kept alive.
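As a rough illustration of the VOVO idea, the following sketch computes how many servers must stay powered on for a given aggregate load. The function name, the per-server capacity and the load values are hypothetical and are not taken from the EpoCloud prototype; a real policy would also account for boot delays and migration costs.

```python
import math

def servers_to_keep_on(total_load: float, capacity_per_server: float,
                       total_servers: int) -> int:
    """Smallest number of powered-on servers able to carry total_load."""
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    needed = math.ceil(total_load / capacity_per_server)
    # The cluster cannot power on more servers than it owns.
    return min(max(needed, 0), total_servers)

# Hypothetical cluster: 32 servers, each able to serve 100 load units.
hourly_load = [250, 90, 0, 1200, 3100]
plan = [servers_to_keep_on(load, 100, 32) for load in hourly_load]
print(plan)
```

With these assumed numbers, all servers can be switched off during the idle hour, which is the behaviour an energy-proportional system aims for.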

However, many of the applications running in a data center must be online constantly. To solve this problem, dynamic placement using live migration of applications makes it possible to keep using the VOVO policy in the online application context. Live migration moves a running application between different physical machines without disconnecting the client or the application. Memory, storage, and network connectivity are transferred from the original host machine to the destination. Currently, the most efficient way to perform live migration is through virtualization. Virtualization refers to the creation of a virtual machine (VM) that acts like a real computer with an operating system, while the software executed on the VM is separated from the underlying hardware resources. Virtualization also allows snapshots and fail-over, and globally reduces IT energy consumption by consolidating VMs on a physical machine (i.e. increasing server utilization and thus reducing the energy footprint). Furthermore, dynamic consolidation uses live migration for effective placement of VMs on the pool of DC servers to reduce energy, increase security, etc. However, live migration requires significant network resources.

Our first main objective concerns the workload-driven approach: EpoCloud adapts the power consumption of the DC to the application workload. Our second objective concerns the power-driven SLA. The power-driven approach implies shifting or scheduling postponable workloads to the time periods when electricity is available (from the renewable energy sources) or at its best price. For online applications, the power-driven approach implies degrading services when energy is at an insufficient level, while maintaining SLAs. In addition, EpoCloud takes advantage of the available energy to perform some tasks. Some of these tasks make it possible to limit application degradation. We describe the EpoCloud architecture and the EpoCloud manager in Sections 3 and 4, respectively.


3 HIGH THROUGHPUT OPTICAL NETWORKS FOR VM MIGRATION WITHOUT SAN

Recent studies on companies' data centers show that a VM consumes an average of 4 GB of memory and 128 GB of storage. Thus, a complete VM migration takes a minimum of 17.5 minutes (resp. 1.75 minutes) with a 1 Gb/s (resp. 10 Gb/s) network. Moreover, a classical consolidation ratio in virtualized data centers is 50 VMs per server. According to the approach that we are considering in EPOC (VOVO policy), our data center needs to be able to migrate all the VMs running on a server (7.5 TB) whenever the hypervisor requests to turn this server off in order to save power. Having one optical port per rack means that its bandwidth may be shared by the servers located in this rack. Is this bandwidth then sufficient to migrate all the VMs of one server? Using 10 Gb/s, this operation takes around 2 hours. However, if we consider, for example, 32 servers per rack, the same operation would take about 53 hours, since the bandwidth is now shared by the 32 servers. Consequently, increasing the bit rate of the interconnection network becomes a must.
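The orders of magnitude quoted above can be reproduced with a short back-of-the-envelope calculation. The sketch below assumes decimal units (1 GB = 8 Gb), an ideal link with no protocol overhead, and equal sharing of the port among the servers of a rack; the helper name `transfer_time_s` is ours.

```python
def transfer_time_s(size_gb: float, link_gbps: float, sharers: int = 1) -> float:
    """Ideal time in seconds to move size_gb gigabytes over a link of
    link_gbps gigabits per second shared equally by `sharers` servers."""
    return size_gb * 8 * sharers / link_gbps

vm_gb = 4 + 128      # memory + storage of an average VM, from the text
server_gb = 7500     # 7.5 TB per server, figure taken as given from the text

one_vm_1g_min = transfer_time_s(vm_gb, 1) / 60                   # ~17.6 min
one_vm_10g_min = transfer_time_s(vm_gb, 10) / 60                 # ~1.76 min
server_10g_h = transfer_time_s(server_gb, 10) / 3600             # ~1.67 h
rack_10g_h = transfer_time_s(server_gb, 10, sharers=32) / 3600   # ~53.3 h

print(round(one_vm_1g_min, 2), round(one_vm_10g_min, 2),
      round(server_10g_h, 2), round(rack_10g_h, 2))
```

These idealized values are lower bounds and are consistent with the rounded figures in the text (about 17.5 minutes, 1.75 minutes, 2 hours and 53 hours). The 7.5 TB volume is used as stated rather than derived from the per-VM sizes.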

To overcome the aforementioned problem, classical dynamic consolidation systems use live migration with a Storage Area Network (SAN), which is a dedicated network providing access to consolidated data storage. In this case, the VM storage is shared between all servers and live migration is limited to transferring the VMs' memory. Nevertheless, adding a SAN increases the global DC energy consumption. EpoCloud therefore proposes to remove the SAN.

Among the various components of a data center, storage is one of the biggest consumers of energy. An industry report (Inc, 2002) shows that storage devices account for almost 27% of the total energy consumed by a DC. By removing the SAN, we optimize the energy consumption, but we introduce a strong hypothesis on the technical architecture: application and system data can only be accessed on the servers' local disks. Turning off a server therefore involves transferring 7.5 TB on average. Given this scenario, a high-bandwidth network is required, but is a 100 Gb/s network card really exploitable with current server technologies? In this article, we present an innovative network architecture in Section 3.1 and a preliminary study in Section 3.2. Finally, in Section 3.3, we describe the architectural motives and principles for the integration of renewable energy.

3.1 Network Architecture

A classical interconnection architecture is based on a 3-tier fat-tree topology, as presented in (Kachris and Tomkos, 2012). Each of the three main switching layers (core, aggregation, and top-of-rack (ToR)) uses Electrical Packet Switches (EPS). Servers accommodated in racks are connected through the ToR switches to the aggregation layer, and from there to the core layer through the aggregation switches. Finally, the core switches provide interconnection to the internet (or outside the DC).

The introduction of optical communications seems to be crucial, because it can achieve very high data rates, low latency and low power consumption (Kachris and Tomkos, 2013). This has recently become a hot research topic inside the optical networking community. Some authors propose a direct migration to all-optical architectures, most of them based on Optical Circuit Switching (OCS) (Singla et al., 2010), which does not meet the needs of traffic that varies over time. Some hybrid architectures, involving several hierarchy levels, could have the potential to connect millions of servers in giant DCs (Gumaste and Bheri, 2013). As already noted, EPOC focuses on small and medium-sized data centers.

For transferring 7.5 TB, implementing a fully optical interconnection architecture could be an attractive option in terms of latency, power consumption and control complexity. This implies using Optical Packet Switching (OPS) technology, whose maturity is still highly questionable in spite of several decades of investigation for telecom network applications (Yoo, 2006). Nevertheless, several techniques relying on fast wavelength-tunable optical emitters have recently gained renewed attention, in particular for metropolitan area network applications. These techniques include TWIN (Time-domain Wavelength Interleaved Networks), originally proposed by Lucent (Sanjee and Widjaja, 2004), and POADM (Packet Optical Add and Drop Multiplexer), proposed by Alcatel-Lucent (Chiaroni, 2008).

In the EPOC project, we decided to investigate

a third option, derived from TWIN, which was pre-

sented in (Indre et al., 2014) under the name of POPI

(Passive Optical Pod Interconnect). The main moti-

vation for this choice is that POPI uses a purely pas-

sive optical network, with power consumption con-

centrated at networks edge. Note that the passive

nature of the POPI network provides a high relia-

bility. This architecture is simpler than the classical

EPS one (Kachris and Tomkos, 2012), in the sense

that there is no ToR switch and the existence of racks

will depend on the bandwidth assigned per server (see

SMARTGREENS2015-4thInternationalConferenceonSmartCitiesandGreenICTSystems

390

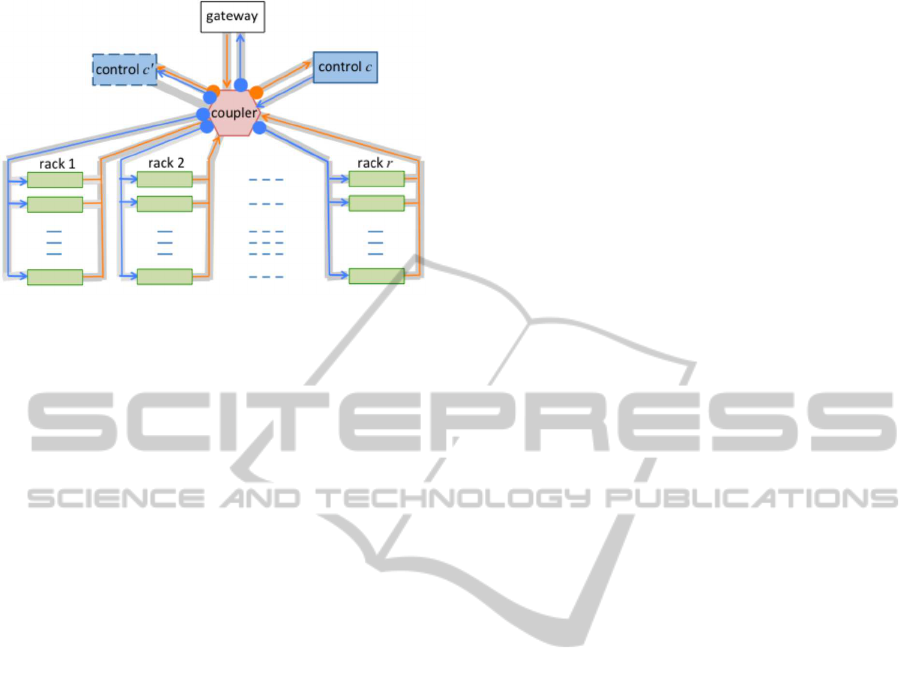

Figure 1: POPI architecture (from (Indre et al., 2014)).

POPI scheme depicted at Figure 1). Therefore, there

is no difference between inter- or intra-rack commu-

nications. Servers are independent and can connect to

each other by means of a passive coupler. Each server

i has a transmitter constituted by a tunable laser, and

a receiver adjusted to wavelength i. Moreover, POPI

consumes around 5 times less power than the classical

EPS architecture (Indre et al., 2014).

The capacity of POPI (with one wavelength per server) is limited by the components used, the tunable laser and the coupler being the most limiting ones. Considering a fast tunable laser with 50 GHz-spaced channels (Chiaroni et al., 2010), the maximum number of servers would be around 60. Nevertheless, this number could be increased by using spectrally efficient modulation formats that enable more closely spaced channels. Concerning the coupler losses, we could use around 140 channels (with the 10 Gb/s NRZ format) using moderate-power (0 dBm) sources without optical amplification.
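To give a feel for why coupler losses bound the number of channels, the sketch below computes the ideal splitting loss of a passive 1-to-N coupler, 10·log10(N) dB. This is only the textbook lower bound: excess loss, connector loss and the receiver sensitivity are not given in the text, so `excess_loss_db` is a hypothetical parameter defaulting to zero.

```python
import math

def ideal_splitting_loss_db(ports: int) -> float:
    """Ideal power-splitting loss of a passive coupler with `ports` outputs."""
    if ports < 1:
        raise ValueError("ports must be at least 1")
    return 10 * math.log10(ports)

def received_power_dbm(launch_dbm: float, ports: int,
                       excess_loss_db: float = 0.0) -> float:
    """Power reaching one receiver, ignoring fiber and connector losses."""
    return launch_dbm - ideal_splitting_loss_db(ports) - excess_loss_db

# 0 dBm sources, as in the text; 60 and 140 ports are the counts quoted above.
p60 = received_power_dbm(0.0, 60)     # about -17.8 dBm
p140 = received_power_dbm(0.0, 140)   # about -21.5 dBm
print(round(p60, 2), round(p140, 2))
```

Under these assumptions, a receiver would need a sensitivity of roughly -21.5 dBm or better for 140 ports; any real budget would have to add the losses omitted here.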

3.2 Server Throughput Capacity

In the EPOC project, the DC periodically consolidates the VMs by using live migration. Each server has a 100 Gb/s transmission capacity, and live migration transfers the whole VM, including its storage. This requires substantial network resources so that the performance level is maintained and service degradation during the process is limited. In order to achieve a read/write speed of 100 Gb/s, we prefer SSDs (Solid-State Drives) over HDDs (Hard Disk Drives), since SSDs are faster and consume less energy than HDDs. A single SSD of 800 GB capacity with a PCI-E 2.1 x8 (Peripheral Component Interconnect Express) interface can achieve a 2 GB/s reading speed and a 1 GB/s writing speed. This implies that we still need several SSDs in a RAID (Redundant Array of Inexpensive Disks) configuration in order to attain the 100 Gb/s data rate. In the near future, a PCI-E 3.0 x16 interface should offer a 15.75 GB/s data rate, so this rate could be achieved by a single SSD.
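The number of drives needed can be estimated with the sketch below. It assumes ideal striping, that is, throughput scaling linearly with the number of SSDs and no controller overhead, which real RAID arrays do not fully reach; the function name is ours.

```python
import math

def ssds_needed(target_gbit_s: float, per_ssd_gbyte_s: float) -> int:
    """Drives required to sustain target_gbit_s under ideal striping."""
    target_gbyte_s = target_gbit_s / 8   # 100 Gb/s is 12.5 GB/s
    return math.ceil(target_gbyte_s / per_ssd_gbyte_s)

read_ssds = ssds_needed(100, 2.0)      # source server reads at 2 GB/s per SSD
write_ssds = ssds_needed(100, 1.0)     # destination writes at 1 GB/s per SSD
future_ssds = ssds_needed(100, 15.75)  # PCI-E 3.0 x16 rate quoted in the text
print(read_ssds, write_ssds, future_ssds)
```

Under this assumption, the write side is the bottleneck: the destination server needs about twice as many drives as the source to absorb a 100 Gb/s stream.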

In addition to offering higher speed, an SSD consumes much less energy than an HDD: about 2 W compared to 6 W. Consequently, an SSD generates less heat than an HDD, making SSDs more suitable for our project.

3.3 Integrating Local Renewable Energy

Although several research efforts have been made to reduce energy consumption through server consolidation, hardware with better power/performance trade-offs, workload migration and software techniques for energy-aware scheduling, the goal of reducing the carbon footprint has still not been achieved. Given the circumstances, explicit or implicit integration of renewable energy into the DC may be the only way to reduce the carbon footprint to an acceptable level. Besides, the demand for green services is ever increasing, so integrating renewable sources into the data center has become unavoidable. A few green cloud providers, e.g. GreenQloud (GreenQloud, 2010) and Green House Data (Green House Data, 2007), and academic researchers (Goiri et al., 2014) have explicitly integrated renewable sources into the data center, offering green computing services with partial SLA fulfillment.

As renewable power sources are very intermittent in nature, predicting the amount of renewable energy production ahead of real time can introduce significant errors into DC power management. Moreover, excessive production of renewable energy can unbalance the Grid, since renewable energy is connected to the Grid via a grid-tie device, which combines the electricity produced by renewable sources with that of the Grid. One way to overcome this challenge is to use energy storage, such as batteries, to store the superfluous green energy, which can be discharged later for peak shaving of the DC power demand or for fulfilling an energy-aware SLA between Infrastructure-as-a-Service (IaaS) and Software-as-a-Service (SaaS) providers when renewable energy is needed but not available. However, energy storage adds to the DC's CAPEX and OPEX, and incurs energy losses due to the battery's limited efficiency and finite capacity. Therefore, it is not an attractive solution for small-scale data centers.

In order to avoid using storage or batteries in a small-scale DC, we could virtualize the green energy. Virtualization of energy implies offsetting the degraded intervals (lack of green energy) with the surplus intervals (more green energy than demand). Thus, whenever surplus green/renewable energy is present, we use the whole of the available green energy and draw the remaining portion from the grid. In this way, the abundant green energy is fully utilized and the surplus intervals can neutralize the intervals without green energy, if there are any. From the perspective of clients or SaaS providers, both kinds of interval are perceived as ideal intervals (in which supply meets demand), even though the green energy was not present instantaneously but only virtually. As a result, energy storage is not needed and no portion of the renewable energy is wasted. Furthermore, the total expenditure on energy purchases can be reduced, since no energy goes to waste and there is no additional cost for storage. Even an energy-aware SLA between IaaS and SaaS providers can be fulfilled.
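One possible reading of this virtual green energy accounting is a simple ledger: surplus green energy is banked as a credit, and later deficits are covered by grid energy that is counted as green up to the available credit. The sketch below is our interpretation only, with hypothetical names and values; it is not the accounting scheme implemented in EpoCloud.

```python
def virtual_green_ledger(green, demand):
    """Return (energy counted as green per interval, unused credit).

    green, demand: energy per interval, in the same unit (e.g. kWh).
    """
    credit = 0.0
    counted_green = []
    for g, d in zip(green, demand):
        if g >= d:
            credit += g - d                # surplus interval: bank the excess
            counted_green.append(d)
        else:
            deficit = d - g
            offset = min(deficit, credit)  # grid energy relabelled as virtual green
            credit -= offset
            counted_green.append(g + offset)
    return counted_green, credit

# Hypothetical trace: constant demand, intermittent green production.
green = [5.0, 12.0, 3.0, 0.0]
demand = [8.0, 8.0, 8.0, 8.0]
counted, leftover = virtual_green_ledger(green, demand)
print(counted, leftover)
```

In this trace, the surplus of the second interval raises the green share of the third one from 3 to 7 units, while the first and last intervals remain uncovered because no credit is available at that point.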

4 EpoCloud MANAGER

Architectural principles for small data centers were defined in the previous sections. They rely on an innovative infrastructure in which a limited number of servers (without SAN) are connected by a high-speed optical network and supplied by local sources of renewable energy.

To take advantage of this architecture, the EPOC project develops an innovative task management system: the EpoCloud Manager, which includes a smart task scheduler (Section 4.1) and an energy-aware, SLA-oriented management system (Section 4.2).

4.1 Opportunistic Energy-aware Resource Allocation

In the EPOC project, we propose a disruptive approach to Cloud resource management that takes advantage of renewable energy availability to perform opportunistic tasks. Recall that the EpoCloud considered here is mono-site (i.e. all resources are in the same physical location) and performs tasks (like web hosting or MapReduce tasks) running in virtual machines. The EpoCloud receives a fixed amount of power from the regular electrical grid. This power allows it to run its usual tasks. In addition, the EpoCloud is connected to renewable energy sources (such as windmills or solar cells), and when these sources produce electricity, the EpoCloud uses it to run additional, less urgent tasks.

The proposed resource management system integrates a prediction model in order to forecast these periods of extra power and to schedule more work during them. Given a reliable prediction model, it is possible to design a scheduling heuristic that aims at optimizing resource utilization and energy usage, a problem known to be NP-hard. The proposed heuristics will therefore schedule tasks spatially (on the appropriate servers) and temporally (over time, with tasks that can be planned in the future).

In order to achieve this energy-aware resource allocation, we distinguish two kinds of jobs to be scheduled on the data center: web jobs, which must run continuously (like a web server), and batch jobs, which can be delayed and interrupted but have a deadline constraint. Jobs of the second type are the natural candidates for the opportunistic scheduling algorithm. Additionally, to further reduce energy consumption in the EpoCloud, we take advantage of consolidation algorithms and on/off mechanisms to optimize the number of powered-on resources. These consolidation algorithms also rely on VM suspend/resume mechanisms for the batch jobs and on VM live migration mechanisms for the web jobs. However, such mechanisms have a cost in terms of both time and energy, so the algorithms take these costs into account to optimize the overall energy utilization.
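To make the temporal side of opportunistic scheduling concrete, here is a minimal greedy sketch. It is not the EPOC heuristic: it assumes a perfect per-slot forecast of green power, treats batch jobs as freely interruptible (slots need not be contiguous), ignores server capacity and suspend/resume costs, and uses hypothetical names throughout. Jobs are handled in earliest-deadline-first order and each takes the greenest remaining slots before its deadline.

```python
from dataclasses import dataclass

@dataclass
class BatchJob:
    name: str
    slots_needed: int   # number of time slots of work
    power: float        # power drawn while running (kW)
    deadline: int       # job must finish before this slot index (exclusive)

def schedule(jobs, green_forecast):
    """Greedy placement: map each job name to its chosen slots, or None."""
    remaining = list(green_forecast)   # forecast green power left per slot
    plan = {}
    for job in sorted(jobs, key=lambda j: j.deadline):
        candidates = range(min(job.deadline, len(remaining)))
        if len(candidates) < job.slots_needed:
            plan[job.name] = None      # the deadline cannot be met
            continue
        # Greenest slots first; ties are broken by the earliest slot.
        chosen = sorted(candidates, key=lambda t: (-remaining[t], t))
        chosen = chosen[:job.slots_needed]
        for t in chosen:
            remaining[t] = max(remaining[t] - job.power, 0.0)
        plan[job.name] = sorted(chosen)
    return plan

forecast = [0.0, 4.0, 6.0, 1.0, 5.0, 0.0]   # hypothetical green power (kW)
jobs = [BatchJob("backup", 2, 3.0, deadline=4),
        BatchJob("index", 2, 2.0, deadline=6)]
plan = schedule(jobs, forecast)
print(plan)
```

Any power a job needs beyond the green power left in its slot would be drawn from the grid. A fuller heuristic would also decide the spatial placement on servers and weigh migration and suspension costs.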

4.2 Enforcing Green SLA

While the proliferation of Cloud services have greatly

impacted our society, how green are these services

is yet to be answered. Usually, the traditional Cloud

services are offered to clients having a Service Level

Agreement (SLA), which includes availability and re-

sponse time. Since, the demands for green products,

services as well as social awareness for being green

is ever increasing, its high time for service providers

to consider offering green services using green en-

ergy. Therefore we propose a new paradigm of Ser-

vice level objective (SLO) for SaaS provider, where

service can be provided using proportional green en-

ergy with above mentioned classical objectives (i.e.,

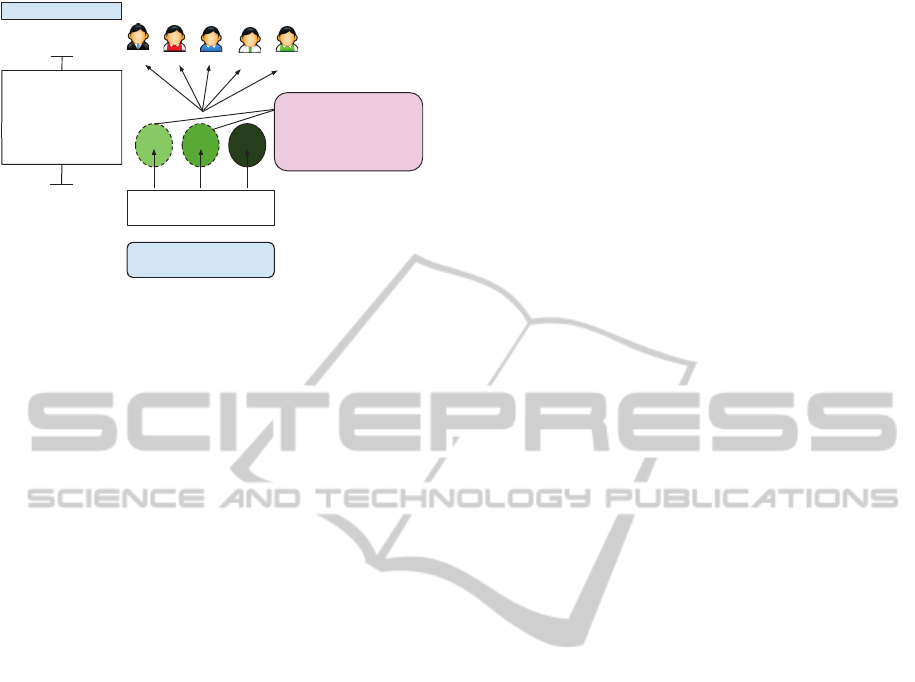

Availability, Response time) in Figure 2.

Figure 2: Example of green SLA. [Labels recovered from the figure: SaaS; Availability of service; Response time; Green energy percentage; End User; SLA flexibility due to fuzziness value; Unavailability of Green energy; Renewable energy powered Datacenter; Service level Objective.]

To date, the problem of offering green services based on green energy has been overlooked, since green energy sources are very intermittent in nature and constantly providing the same amount of green energy cannot be guaranteed. Therefore, reducing energy consumption at the application level is unavoidable if a certain percentage of green energy has to be respected while green energy is unavailable or scarce. In the green SLA contract, providers can introduce some flexibility in the green energy percentage, or adaptability of application performance, in order to provide the agreed Quality of Service (QoS). Adapting an application's performance, functionality or features to run with fewer resources might degrade the service for a certain period, but it can significantly reduce energy consumption, since performance is related to CPU and RAM usage. Therefore, it is necessary to select algorithms according to their time and space complexity and to choose the most energy-efficient components in the software.
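A green SLO of this kind can be checked with very little code. The sketch below is an assumption-laden illustration: the function names, the status labels and the numeric values are ours, and the flexibility is modelled as a simple tolerance subtracted from the target percentage.

```python
def green_slo_status(green_kwh, total_kwh, target_pct, flexibility_pct=0.0):
    """Classify a billing period against a green energy percentage target."""
    if total_kwh <= 0:
        raise ValueError("total_kwh must be positive")
    achieved = 100.0 * green_kwh / total_kwh
    if achieved >= target_pct:
        return "met"
    if achieved >= target_pct - flexibility_pct:
        return "met_within_flexibility"
    return "violated"

def max_consumption_kwh(green_kwh, required_pct):
    """Largest total consumption that still yields required_pct of green energy."""
    return green_kwh * 100.0 / required_pct

# Hypothetical period: 30 kWh of green energy out of 100 kWh consumed,
# with a 50% target and 10 points of flexibility.
status = green_slo_status(30.0, 100.0, target_pct=50.0, flexibility_pct=10.0)
budget = max_consumption_kwh(30.0, required_pct=40.0)
status_after = green_slo_status(30.0, budget, 50.0, 10.0)
print(status, budget, status_after)
```

In this example the application would have to reduce its consumption from 100 kWh to 75 kWh, for instance by degrading some features, to stay within the flexible bound of the green SLO.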

5 CONCLUSION

In this paper, we have presented the EpoCloud principles, architecture and middleware components. EpoCloud is our prototype, which will tackle three major challenges: 1) optimizing the energy consumption of distributed infrastructures and service compositions in the presence of ever more dynamic service applications and ever more stringent availability requirements for services; 2) designing clever Cloud resource management that takes advantage of renewable energy availability to perform opportunistic tasks, and then exploring the trade-off between energy saving and performance in large-scale distributed systems; 3) investigating energy-aware, ultra-high-speed optical interconnection networks to exchange large volumes of data (VM memory and storage) over very short periods of time.

In order to achieve these ambitious goals, we propose: 1) to determine energy-aware SLA management policies that consider energy as a first-class resource and rely on the concept of virtual green energy to better utilize renewable energy; 2) to evaluate energy-aware task scheduling algorithms based on the distinction between two kinds of tasks (web tasks and batch tasks) and leveraging renewable energy availability to perform opportunistic tasks without hampering performance; 3) to assess the ability of a specific OPS-based interconnection architecture to support the exchange of large data volumes (about 7.5 TB for the migration of all VMs hosted by a single server) while allowing background traffic exchange between servers.

REFERENCES

Chiaroni, D. (2008). Optical packet add/drop multiplexers for packet ring networks. In Optical Communication, 2008. ECOC 2008. European Conference on, pages 1–4.

Chiaroni, D., Neilson, D., Simonneau, C., and Antona, J. C. (2010). Novel Optical Packet Switching Nodes for Metro and Backbone Networks. In Optical Network Design and Modeling (ONDM), International Conference on.

Feng, X., Peterson, W., Yang, F., Wickramasekara, G., and Finney, J. (2009). Smarter grids are more efficient. ABB review.

Goiri, I., Katsak, W., Le, K., Nguyen, T., and Bianchini, R. (2014). Designing and managing data centers powered by renewable energy. Micro, IEEE, 34(3):8–16.

Green House Data (2007). Green house data. http://www.greenhousedata.com/green-data-centers.

GreenQloud (2010). Greenqloud. https://www.greenqloud.com.

Gumaste, A. and Bheri, B. (2013). On the architectural considerations of the FISSION (Flexible Interconnection of Scalable Systems Integrated using Optical Networks) framework for data-centers. In Optical Network Design and Modeling (ONDM), International Conference on, pages 23–28.

Inc, M. I. (2002). Power, heat, and sledgehammer. Technical report, University of Zurich, Department of Informatics.

Indre, R.-M., Pesic, J., and Roberts, J. (2014). POPI: A Passive Optical Pod Interconnect for high performance data centers. In Optical Network Design and Modeling, 2014 International Conference on, pages 84–89.

Kachris, C. and Tomkos, I. (2012). A survey on optical interconnects for data centers. Communications Surveys Tutorials, IEEE, 14(4):1021–1036.

Kachris, C. and Tomkos, I. (2013). Power consumption evaluation of all-optical data center networks. Cluster Computing, 16(3):611–623.

Sanjee, I. and Widjaja, I. (2004). A new optical network architecture that exploits joint time and wavelength interleaving. In Optical Fiber Communication Conference, 2004. OFC 2004, volume 1, pages 446–448.

Singla, A., Singh, A., Ramachandran, K., Xu, L., and Zhang, Y. (2010). Proteus: A Topology Malleable Data Center Network. In ACM SIGCOMM Workshop on Hot Topics in Networks (Hotnets), pages 8:1–8:6.

Sridharan, R. and Mahapatra, R. (2010). Reliability aware power management for dual-processor real-time embedded systems. In Proceedings of the 47th Design Automation Conference, DAC '10, pages 819–824, New York, NY, USA. ACM.

Yoo, S. J. B. (2006). Optical Packet and Burst Switching Technologies for the Future Photonic Internet. Lightwave Technology, Journal of, 24(12):4468–4492.
