Rotation-Invariant Image Description from Independent Component Analysis for Classification Purposes

Rodrigo D. C. da Silva, George A. P. Thé and Fátima N. S. de Medeiros

Federal University of Ceara, Dept. of Teleinformatic Engineering, Campus do Pici s/n, Bl 725, Fortaleza, Brazil

Keywords: Independent Component Analysis, Invariant Rotation, Pattern Recognition.

Abstract: Independent component analysis (ICA) is a recent technique used in signal processing for feature description in classification systems, as well as in signal separation, with applications ranging from computer vision to economics. In this paper we propose a preprocessing step that makes the ICA algorithm efficient for rotation-invariant feature description of images. Tests were carried out on five datasets, and the extracted descriptors were used as inputs to the k-nearest neighbor (k-NN) classifier. Results showed an increasing trend in the recognition rate, which approached 100%. Additionally, when low-resolution images acquired from an industrial time-of-flight sensor were used, the recognition rate increased to 93.33%.

1 INTRODUCTION

The human ability to recognize objects regardless of rotation, translation or scaling transformations is one of the most basic and important features for human-environment interaction (Cichy et al., 2013). This recognition ability also allows human beings to sense and act in a wide range of situations. In addition, it enables an object to be labeled wherever it is located and however it is oriented in a scene.

In computer vision applications, a fundamental issue is to recognize objects regardless of viewpoint transformations. In industrial applications in particular, such as object counting and selection on conveyor belts, pattern recognition is used worldwide. In these applications, object recognition implies assigning a label to an object according to its feature description. A good object description is one that uses as little data as possible, thus allowing for fast and, eventually, fully embedded implementations.

Classical methods for 2-D object recognition include the B-spline moment method (Huang and Cohen, 1996), moment methods (Hu, 1962), (Zhao and Chen, 1997), (Mukundan et al., 2001), and Fourier and wavelet transform methods based on the object contour (Oirrak et al., 2002), (Khalil and Bayoumi, 2002), (Huang et al., 2005).

In the last decade, ICA has been claimed to offer powerful feature description from a reduced set of descriptors. Essentially, it is a blind source separation technique, which estimates components that are as independent as possible (Hyvärinen et al., 2001). The pioneering work in this field addressed the separation of two physiological signals (Jutten and Herault, 1991), and ICA has since been established as an interesting research tool. Significant advances have been achieved both in the efficiency of the algorithms and in the range of applications where ICA can be used. Interest in the technique has therefore grown in electrical power systems (Lima et al., 2012), computer vision (Pan et al., 2013), facies recognition (Sanchetta et al., 2013), neuroimaging (Khorshidi et al., 2014), (Tong et al., 2013), neurocomputing (Park et al., 2014), (Rojas et al., 2013), biomedical signal processing (Sindhumol et al., 2013), computational statistics (Chattopadhyay et al., 2013), economic modeling (Lin and Chiu, 2013) and chemistry (Masoum et al., 2013), among others.

ICA solves the problem of suitably representing multivariate data by linearly decomposing a random vector x into components s that are statistically independent, according to Eq. (1) below. The main goal is to estimate the independent components (ICs), or the mixing matrix A, only from the observed data x.

$$x = As \qquad (1)$$


da Silva R., A. P. Thé G. and de Medeiros F..

Rotation-Invariant Image Description from Independent Component Analysis for Classification Purposes.

DOI: 10.5220/0005512802100216

In Proceedings of the 12th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2015), pages 210-216

ISBN: 978-989-758-123-6

Copyright © 2015 SCITEPRESS (Science and Technology Publications, Lda.)

For ICA estimation to be possible, the ICs must be non-gaussian; this non-gaussianity assumption in ICA mixture modeling is probably the main motivation for the research conducted in the field (Hyvärinen and Oja, 2000). Another known restriction is that ICA is not rotation invariant: rotation of the observers affects the estimation of both the mixing matrix and the ICs. Therefore, the ability to represent rotated objects would, in principle, be compromised.

In the literature, works discussing the issue of rotation invariance generally refer to (Huang et al., 2005) and (Ali et al., 2006), whose methods also consider translation and scaling transformations. The former introduces a scheme for affine-invariant description and affine motion estimation based on a contour representation extracted by ICA. The latter is an invariant description method based on normalizing an affine-distorted and noise-corrupted object boundary.

To address the second restriction, in this paper we propose an ordering preprocessing step that makes ICA robust to rotation of the observers, thereby yielding rotation-invariant image descriptors. This ordering step is accomplished by applying a nonlinear transformation to the input vector, expressed here in terms of a matrix λ.

Then, to evaluate the proposal, we applied a simple k-nearest neighbor (k-NN) classifier to various image databases to show that more efficient image descriptors can be obtained when this preprocessing takes place.

This paper is organized as follows: Section 2

briefly describes fundamentals of ICA and the

proposed preprocessing step for ICA-based rotation

invariant image feature extraction. In section 3 the

datasets are described and discussed along with the

experimental results of a classification system.

Finally, in section 4 conclusions are drawn.

2 BASICS OF ICA

ICA is a mathematical technique that reveals hidden

factors that underlie a set of random variables, which

are assumed non-gaussian and mutually statistically

independent. It is also described as a statistical

signal processing technique whose goal is to linearly

decompose a random vector into components that

are not only uncorrelated, but also as independent as

possible (Fan et al., 2002). Thus, ICA can be

considered as a generalization of the principal

component analysis (PCA). PCA generates a

representation of data inputs based on uncorrelated

variables, whereas ICA provides a representation

based on statistically independent variables (Déniz

et al., 2003).

The basic definition of ICA is given in the following. Given a set of observations of random variables $x_1(t), x_2(t), \ldots, x_n(t)$, where $t$ is the time or sample index, assume that they are generated as a linear mixture of independent components $s_1(t), s_2(t), \ldots, s_n(t)$ (Huang et al., 2005):

$$x = A\,(s_1(t), s_2(t), \ldots, s_n(t))^T = As \qquad (2)$$

where A is an unknown mixing matrix, $A \in \mathbb{R}^{n \times n}$ (Huang et al., 2005). The ICA model, Eq. (1), describes how the observed data are generated by a process of mixing the independent components s. ICs are latent variables, which means that they cannot be directly observed. Thus, the classic ICA problem consists in estimating A and s when only x is observed, provided that the observers, which collect the mixtures and correspond to the rows of A, are independent, so that A is invertible (Bizon et al., 2013), (Huang et al., 2005).

After estimating the matrix A properly, the problem stated by Eq. (1) can be rewritten as:

$$s = A^{-1}x = Wx, \qquad (3)$$

in such a way that a linear combination $s = Wx$ is the optimal estimation of the independent source signals s (Bizon et al., 2013).
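The algebra of Eqs. (1) to (3) can be illustrated with a minimal Python sketch. The two sources, the sample count and the mixing matrix below are hypothetical values chosen only for illustration, and the mixing matrix is taken as known, which is not the case in a real ICA problem, where both A and s must be estimated from x alone.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two hypothetical non-gaussian sources (uniform and Laplacian).
n_samples = 1000
s = np.vstack([
    rng.uniform(-1.0, 1.0, n_samples),
    rng.laplace(0.0, 1.0, n_samples),
])

# Hypothetical invertible 2 x 2 mixing matrix A.
A = np.array([[1.0, 0.5],
              [0.3, 1.0]])

x = A @ s                 # Eqs. (1)-(2): observed mixtures
W = np.linalg.inv(A)      # Eq. (3): unmixing matrix, valid only because A is known here
s_rec = W @ x             # sources recovered from the mixtures

print(np.allclose(s_rec, s))
```

An ICA algorithm such as FastICA replaces the explicit inverse by an estimate of W obtained from x only.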

Under the assumption of the statistical

independence of the components, and that they are

characterized by a non-gaussian distribution, the

basic ICA problem stated in Eqs. (1) and (2) can be

solved by maximizing the statistical independence of

the estimates s (Bizon et al., 2013).

In the process of finding the matrix W, some useful preprocessing techniques are used to facilitate the calculation (Fan et al., 2002). There are two quite standard preprocessing steps in ICA. The first one moves the data center to the origin by subtracting the data mean, as follows:

$$\tilde{x} = x - E\{x\} \qquad (4)$$

The second step consists in whitening the data, i.e., applying a transformation that yields uncorrelated components of unit variance,

$$z = V\tilde{x}, \qquad (5)$$

where V is the whitening matrix and z is the whitened data.
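The two preprocessing steps of Eqs. (4) and (5) can be sketched as follows. The text does not state how V is computed; the sketch assumes the common choice based on the eigendecomposition of the covariance matrix, and the function name `center_and_whiten` and the test data are hypothetical.

```python
import numpy as np

def center_and_whiten(x):
    """x has shape (n_variables, n_samples). Returns (z, V, mean)."""
    mean = x.mean(axis=1, keepdims=True)
    x_tilde = x - mean                                  # Eq. (4): centering
    cov = np.cov(x_tilde)                               # sample covariance, shape (n, n)
    eigvals, eigvecs = np.linalg.eigh(cov)              # cov is symmetric
    # Assumed whitening matrix V = E D^(-1/2) E^T; requires a full-rank covariance.
    V = eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T
    z = V @ x_tilde                                     # Eq. (5): whitening
    return z, V, mean

# Hypothetical correlated data: 3 variables, 500 samples.
rng = np.random.default_rng(1)
mixing = np.array([[2.0, 0.5, 0.0],
                   [0.3, 1.0, 0.4],
                   [0.0, 0.2, 1.5]])
x = mixing @ rng.laplace(size=(3, 500)) + 5.0

z, V, mean = center_and_whiten(x)
print(np.round(np.cov(z), 6))
```

After this step the sample covariance of z is the identity matrix, so the remaining ICA estimation only has to find a rotation of the whitened data.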


ICA applications to pattern recognition of rotated images require, as a training step, that the random variables be the training images. Letting $x_i$ be a vectorized image, we can construct a training image set $x_1, x_2, \ldots, x_n$ with n random variables, which are assumed to be linear combinations of m unknown independent components $s_1, s_2, \ldots, s_m$. These are arranged into the vectors $x = (x_1, x_2, \ldots, x_n)^T$ and $s = (s_1, s_2, \ldots, s_m)^T$. From this relationship, each image $x_i$ is represented as a linear combination of $s_1, s_2, \ldots, s_m$ with weighting coefficients $a_{i1}, a_{i2}, \ldots, a_{im}$, related to the matrix A. When ICA is applied to extract image features, the columns of $A_{train}$ are features, and the coefficients s signal the presence and the amplitude of the i-th feature in the observed data $x_{train}$ (Fan et al., 2002).

Furthermore, the mixing matrix $A_{train}$ can be considered as the features of all training images (Yuen and Lai, 2002). Accordingly, $x_{test}$ must be multiplied by the inverse of s to obtain the features $A_{test}$:

$$A_{test} = x_{test}\, s^{-1} \qquad (6)$$

Finally, this matrix contains the main feature

vectors of the image under test, which is the input to

the classifier, as Figure 1 illustrates.
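A minimal sketch of Eq. (6) is given next. Since the matrix of independent components is generally not square (m components by the number of pixels), the sketch assumes that the inverse in Eq. (6) is taken as the Moore-Penrose pseudo-inverse; this is an assumption, and the function name `project_features` and the array shapes are hypothetical.

```python
import numpy as np

def project_features(x_test, s):
    """x_test: (n_test, n_pixels) vectorized test images.
    s: (m, n_pixels) independent components estimated on the training set.
    Returns A_test with shape (n_test, m)."""
    # Assumption: s^{-1} in Eq. (6) is interpreted as the pseudo-inverse of s.
    return x_test @ np.linalg.pinv(s)

# Hypothetical check: images built from known coefficients are mapped back to them.
rng = np.random.default_rng(2)
s = rng.laplace(size=(3, 50))          # 3 components, 50 pixels
a_true = rng.normal(size=(4, 3))       # coefficients of 4 test images
x_test = a_true @ s

A_test = project_features(x_test, s)
print(np.allclose(A_test, a_true))
```

Each row of `A_test` is then the feature vector of one test image that is passed to the classifier.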

Several algorithms perform ICA, among them FastICA (Hyvärinen et al., 2001), JADE (Cardoso, 1989), ProDenICA (Hastie and Tibshirani, 2003), Infomax (Bell and Sejnowski, 1995) and KernelICA (Bach and Jordan, 2002). Here, we perform ICA with FastICA because it is simple and its program code is easy to modify and maintain.

Figure 1: Steps of the classification process.

2.2 Proposed Technique

The proposed technique consists in arranging the

vectorized images such that pixel intensities are

ordered (this does not modify the intensity

distribution and Probability Density Function of

image pixels under study). As our results reveal, it

improves the ICA estimation, thus providing better

representation of images that have undergone

rotations.

The ordering procedure is accomplished by multiplying the input vector x by a matrix, hereafter referred to as λ, which is unique for each sample image and is responsible for ordering the vector. Combining this procedure with Eq. (1), it can be written as

$$\lambda x = \lambda A s = x_{order} = B s_{order} \qquad (7)$$

Eq. (7) shows that the ICA model for the ordered input vector remains valid.

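The matrix proposed here is not a permutation matrix of the kind used in basic linear algebra to permute the rows or columns of a matrix; rather, it is an n × n matrix able to reorder the elements of an n-size vector, and it assumes the following form:

$$\lambda = \begin{bmatrix} 0 & \cdots & r_{1k} & \cdots & 0 \\ 0 & \cdots & r_{2k} & \cdots & 0 \\ \vdots & & \vdots & & \vdots \\ 0 & \cdots & r_{nk} & \cdots & 0 \end{bmatrix}_{n \times n} \qquad (8)$$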

There is only one non-zero column, whose

elements can be obtained from the following

pseudo-code:

x = INPUT; // waits for the input image vector

maxValue = MAX(x); // finds the maximum of input vector x

maxIndex = FIND(maxValue, x); // returns the index of the maximum

λ = ZEROS(SIZE(x), SIZE(x)); // initialization as zero-matrix

count = 1;

maxCounter = SIZE(x);

REPEAT

minValue = MIN(x); // finds the minimum of the remaining elements of x

minIndex = FIND(minValue, x); // returns the index of the minimum

λ(count, maxIndex) = minValue/maxValue; // fills the count-th row of the non-zero column

CLEAR x(minIndex); // eliminates the minIndex-th element

count = count + 1;

UNTIL count > maxCounter
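The pseudo-code above can be sketched in Python as follows. The function name `ordering_matrix` and the example vector are hypothetical, and the sketch assumes that the maximum of x is non-zero, since every entry of the non-zero column is a ratio to that maximum.

```python
import numpy as np

def ordering_matrix(x):
    """Build the n x n matrix lambda of Eq. (8) for the vector x.

    The single non-zero column sits at the index k of the maximum of x and
    holds the ascending-sorted values of x divided by that maximum, so that
    lambda @ x returns x sorted in ascending order."""
    x = np.asarray(x, dtype=float)
    max_index = int(np.argmax(x))
    max_value = x[max_index]
    if max_value == 0:
        raise ValueError("the maximum of x must be non-zero")
    lam = np.zeros((x.size, x.size))
    lam[:, max_index] = np.sort(x) / max_value   # r_ik = sorted(x)_i / max(x)
    return lam

# Hypothetical input vector.
x = np.array([3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0])
lam = ordering_matrix(x)
x_order = lam @ x
print(x_order)

# A rearranged copy of x yields a different lambda but the same ordered vector.
x_perm = x[::-1]
x_perm_order = ordering_matrix(x_perm) @ x_perm
```

Because the ordered vector does not depend on pixel positions, two inputs that are rearrangements of the same pixel values lead to the same `x_order`, which is the property exploited here to the extent that a rotation only rearranges pixel values.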

As results will reveal, the adoption of the

ordering preprocessing leads to improvement in

classification accuracy, which will be associated

later to the non-gaussianity of the data in the ICA

model.

2.3 Classification

Classification is the final stage of any image processing system, where each unknown pattern is assigned to a category. The degree of difficulty of a classification problem depends on the variability of the feature values of objects belonging to the same category relative to the differences between the feature values of objects belonging to different categories (Mercimek et al., 2005). In this paper, we use the k-nearest neighbor (k-NN) classifier for supervised pattern recognition, a classical technique proposed by (Cover, 1968) and used as a reference method to evaluate the performance of more sophisticated techniques (Coomans and Massart, 1981).

Our main purpose is to investigate the effect of

the ordering preprocessing on the ICA estimation.

Thus, the comparison among several classifiers is

out of the scope of this paper.
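For reference, a k-NN classifier over feature vectors can be sketched as below. The value of k, the Euclidean distance and the majority-vote rule are assumptions, since the text does not specify them, and the function name `knn_predict` and the toy data are hypothetical.

```python
import numpy as np
from collections import Counter

def knn_predict(train_features, train_labels, test_features, k=1):
    """Assign to each test vector the majority label among its k nearest
    training vectors (Euclidean distance)."""
    train_features = np.asarray(train_features, dtype=float)
    test_features = np.asarray(test_features, dtype=float)
    predictions = []
    for f in test_features:
        dists = np.linalg.norm(train_features - f, axis=1)
        nearest = np.argsort(dists)[:k]                 # indices of the k closest prototypes
        votes = Counter(train_labels[i] for i in nearest)
        predictions.append(votes.most_common(1)[0][0])
    return predictions

# Hypothetical 2-D feature vectors, one prototype per class.
train = [[0.0, 0.0], [10.0, 10.0]]
labels = ["a", "b"]
predicted = knn_predict(train, labels, [[1.0, 1.0], [9.0, 8.0]], k=1)
print(predicted)
```

In the setting of this paper the training features would be the rows of the mixing matrix obtained from the training images, and the test features those obtained from Eq. (6).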

3 DATASETS

The performance of the ordering procedure in an ICA-based classification system was evaluated in a very straightforward manner: the classifier accuracy obtained when the input vectors are ordered (to some extent) is compared with the accuracy obtained when they are not. This is done for different image sets, described in the following.

Figure 2: Examples of 1024 x 1024 images from Dataset A.

3.1 Datasets A and B (Small Database)

The first two datasets used in this experiment comprise 12 images of 1024 × 1024 pixels (dataset A) and 7 images of 512 × 512 pixels (dataset B), acquired from the database of the Ming Hsieh Department of Electrical Engineering of the University of Southern California.

Each image was rotated in 1° steps from 0° to 360°, thus forming 361 samples for every image. Those corresponding to 0° were used for training and the others were used for testing. Figure 2 shows some image samples of dataset A.

3.2 Dataset C (Low-resolution Images)

In order to evaluate the proposed method and extend the conclusions to industrial-like applications, thus broadening the range of interested readers, we performed tests on an additional dataset. In this experiment, tests were carried out on images acquired with the 3D sensor effector pmd E3D200, from ifm electronic ®, which is a low-resolution time-of-flight sensor of 50 × 64 pixels.

Another purpose of this experiment is to explore the real-time implementation of industrial image classification systems using ICA-based description. Dataset C contains pictures of three small packages, differing only in size, which were acquired after randomly rotating the packages on a conveyor belt. This was done in a poorly illuminated scenario, as can be seen from the poor quality of the images in Figure 3 below.

It is worth emphasizing that this experiment was performed on three image classes. The number of prototypes per class is 6, each one corresponding to one side of each box, so that the database available for training contains 18 images.

Figure 3: 50 x 64 pixels pictures of three packages with

dimensions 15×10.5×7.2 cm, 15×14×6 cm and

21.5×16.2×9.6 cm, respectively.

3.3 Dataset D (Large-Size Database)

To further evaluate the performance of the proposed method on large datasets, another experiment was carried out, this time with 77 images. To create this database, 58 additional texture images acquired from the database of the Ming Hsieh Department of Electrical Engineering of the University of Southern California were resized and added to datasets A and B.

Each image was rotated in 5° steps, from 0° to 360°, thus forming 73 samples for every image. Again, those corresponding to 0° were used for training, and the others for testing.

3.4 Dataset E (Brodatz Database)

Finally, we considered a texture database with very different background intensities. The Brodatz album available in (Safia and He, 2013) has 112 texture images, which were resized from 640 × 640 to 128 × 128 pixels.

Here again we rotated the images in 5° steps, from 0° to 360°, thus forming 73 samples for every image. Once more, those corresponding to 0° were used for training, and the others for testing.

Table 1 shows, for each dataset, information about the experiments. The classifier was trained and tested 50 times for each dataset.


Table 1: Parameters for every experiment done.

| Image set (pixels) | Number of coefficients | Training samples | Testing samples |
|---|---|---|---|
| Set A (1024×1024) | 12 | 12 | 4320 |
| Set B (512×512) | 7 | 7 | 2520 |
| Set C (50×64) | 18 | 18 | 150 |
| Set D (128×128) | 77 | 77 | 5544 |
| Set E (128×128) | 112 | 112 | 8064 |
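The training and testing counts of Table 1 for the rotated datasets follow directly from the rotation step, as the short sketch below checks. The helper name `rotation_split` is hypothetical; set C is left out because its test images come from random rotations on the conveyor belt rather than from a fixed angular step.

```python
def rotation_split(n_images, step_deg):
    """Count samples when each image is rotated from 0 to 360 degrees
    (both ends included) in steps of step_deg degrees."""
    angles = range(0, 360 + step_deg, step_deg)
    samples_per_image = len(angles)
    n_train = n_images                              # the 0-degree sample of each image
    n_test = n_images * (samples_per_image - 1)     # all remaining rotations
    return samples_per_image, n_train, n_test

for name, n_images, step in [("A", 12, 1), ("B", 7, 1), ("D", 77, 5), ("E", 112, 5)]:
    print(name, rotation_split(n_images, step))
```

For instance, set A yields 361 samples per image, of which 12 × 360 = 4320 are used for testing.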

4 RESULTS

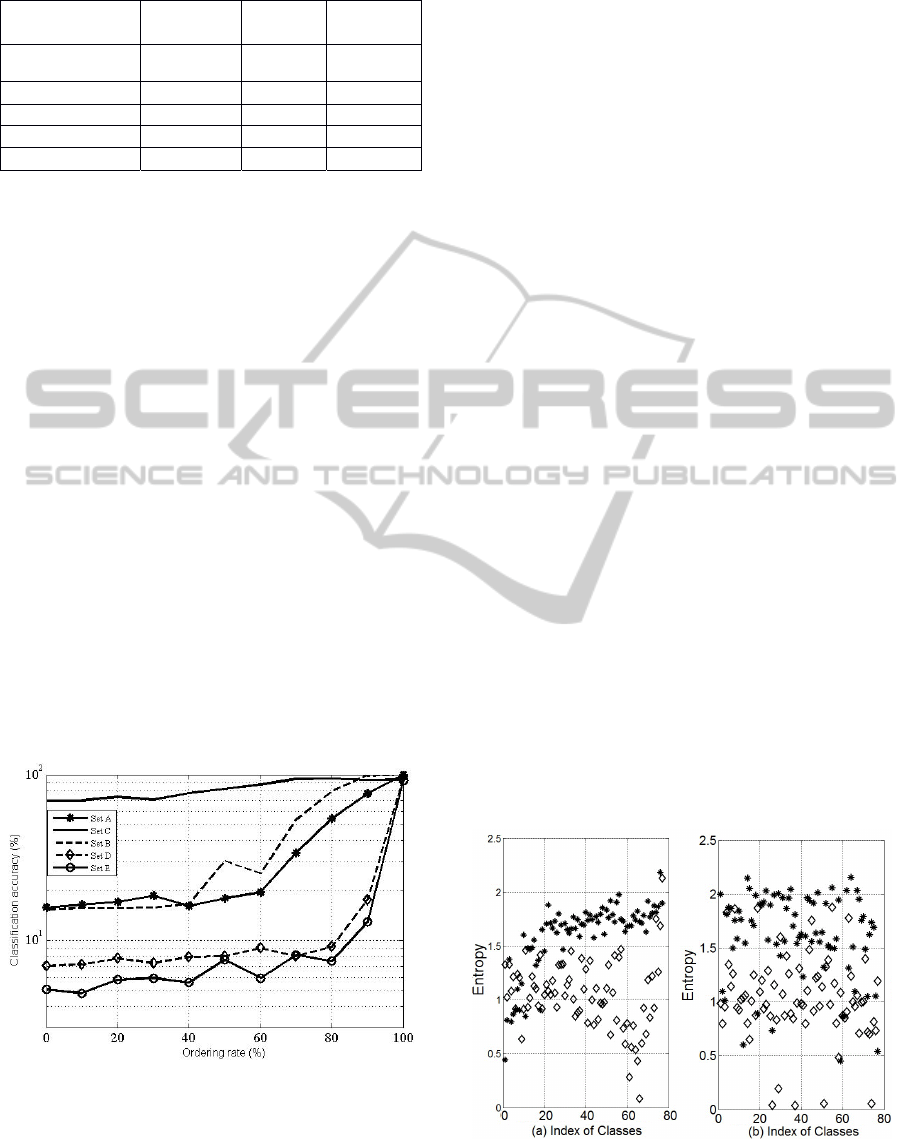

Figure 4 displays the mean recognition rates for the experiments using the proposed ordering method. The limits of the x-axis range from non-ordering (hence, the traditional ICA approach) to full ordering. The ordering rate on the x-axis indicates the amount of elements of a given input vector that undergo the ordering transformation λ.

This result is impressive because it shows that our approach shifts the performance of the ICA-based classification system from as low as 5% to nearly 100% after full ordering of the input vectors.

A less remarkable but non-negligible result was obtained with the low-resolution dataset C, whose recognition rate increased from 70.00% to about 93.33% after the ordering transformation.

Overall, a clear upward trend emerges from this analysis, i.e., ordering the images has the positive effect of increasing the classification accuracy.

Figure 4: Mean recognition rates obtained for

classification experiments with the various datasets.

We associate the above effect with the increase of non-gaussianity in the data, which leads to a better ICA representation. We support this by comparing the non-gaussianity of the independent components obtained when the ordering transformation is applied and when it is omitted.

Indeed, as explained in section 4.2 of (Hyvärinen et al., 2001), the estimation of the independent components of the ICA model relies on maximizing the non-gaussianity of a linear transformation of the observed data, x. If the data are presented in a way that increases non-gaussianity a priori, the estimation of the ICA model is favoured. That is our claim.

In order to provide support for this claiming, we

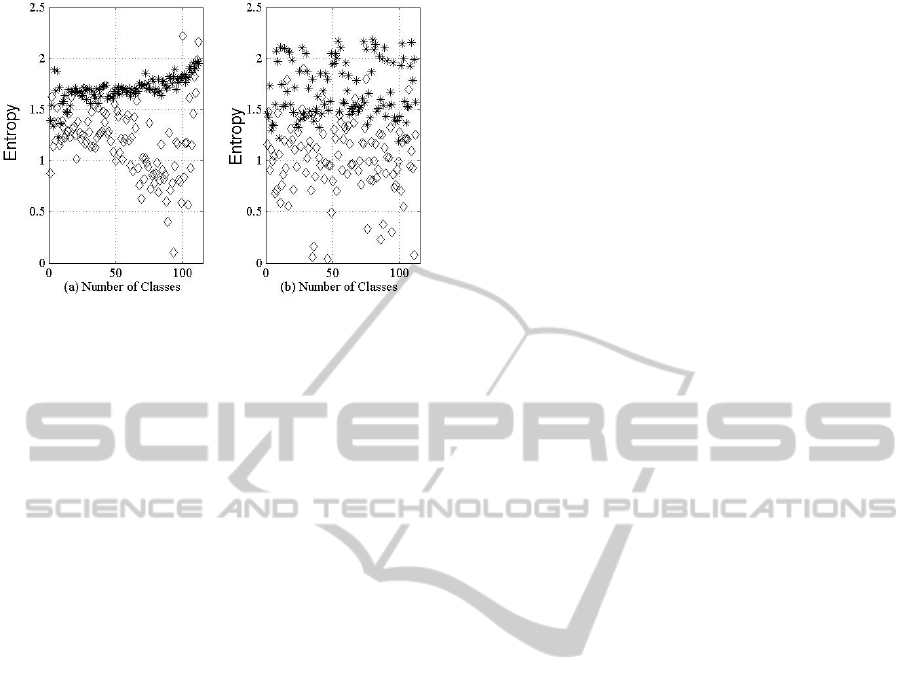

proceeded to measure the non-gaussianity for the

datasets D and E, only. Since the transformation λ

changes the way the input data is presented to the

ICA algorithm, thus modifying the ICs, one should

verify changes in the non-ordered case as compared

to the full-ordering scenario.

One should also compare the independent components provided by the ICA representation in both scenarios. We emphasize that the next results do not consider the partial-ordering scenario, as in Figure 4, but only null or full ordering.

The entropy is calculated over 150 rounds and the average values are reported. This is needed because of the random initialization of the mixing matrix A in the FastICA algorithm.

This procedure prevents the calculated non-gaussianity from depending on any particular initial condition. Figures 5 and 6 summarize the non-gaussianity measurements for datasets D and E, respectively.

Figures 5a and 6a show that the non-gaussianity of the whitened data increases after full ordering (to see this, note that the lower the entropy, the lower the gaussianity). This increased non-gaussianity also occurs in the calculated ICs, as Figures 5b and 6b show.
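The link between entropy and gaussianity can be illustrated with a small sketch. Among variables of equal variance, the gaussian has the largest differential entropy, so a lower entropy indicates a less gaussian variable. The estimator used in the paper is not specified; the histogram-based estimate, the excess kurtosis, the function names and the synthetic samples below are assumptions made only for illustration.

```python
import numpy as np

def histogram_entropy(values, bins=50):
    """Plug-in estimate of the differential entropy (in nats) from a histogram."""
    density, edges = np.histogram(values, bins=bins, density=True)
    widths = np.diff(edges)
    mask = density > 0                      # empty bins contribute nothing
    return float(-np.sum(density[mask] * np.log(density[mask]) * widths[mask]))

def excess_kurtosis(values):
    """Fourth standardized moment minus 3 (zero for a gaussian)."""
    v = np.asarray(values, dtype=float)
    v = (v - v.mean()) / v.std()
    return float(np.mean(v ** 4) - 3.0)

rng = np.random.default_rng(0)
n = 50_000
gaussian = rng.standard_normal(n)                         # unit variance
uniform = rng.uniform(-np.sqrt(3.0), np.sqrt(3.0), n)     # unit variance, non-gaussian

print(histogram_entropy(gaussian), histogram_entropy(uniform))
print(excess_kurtosis(gaussian), excess_kurtosis(uniform))
```

With both samples normalized to unit variance, the uniform sample shows the lower entropy and a clearly non-zero excess kurtosis, which is the sense in which Figures 5 and 6 are read.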

Figure 5: Entropy of the variables for set D: a) whitened z (asterisk) and $z_{order}$ (diamond); b) independent components s (asterisk) and $s_{order}$ (diamond).


Figure 6: Entropy of the variables for set E: a) whitened z (asterisk) and $z_{order}$ (diamond); b) independent components s (asterisk) and $s_{order}$ (diamond).

Altogether, Figures 5 and 6 reveal a clear separation between diamonds (ordered case) and asterisks (non-ordered case), much like a "frontier", which is even more evident for datasets A and B (not shown here for brevity).

4 CONCLUSIONS

In this paper, a preprocessing step for extracting rotation-invariant features using independent component analysis is proposed. Although ICA can be used directly for feature extraction, it often requires data preprocessing. Our approach may thus be regarded as a preprocessing step in which the input data undergo full or partial ordering. Experiments performed on five different image datasets showed how the ordering transformation improved the representation of the feature vectors that are the inputs to the classifier, i.e., the mixing matrix of the ICA model.

Tests were carried out on rotated images to evaluate the efficiency of the method. The increase in classification accuracy, from 5% to nearly 100% (in high-resolution images) and from 70.00% to 93.33% (in low-resolution images), suggests that the proposed technique is a useful input data preprocessing step. The entropy and kurtosis measures confirmed that the increased non-gaussianity of the estimated independent components improved the representation of the feature vectors provided by the ICA model.

Summing up, although ordering the pixels of an image does not affect its histogram, we showed that it helps make ICA-based feature extraction a good alternative for rotation-invariant image recognition. As future work, other approaches to rotation-invariant feature description will be studied and compared with the alternative discussed here.

ACKNOWLEDGEMENTS

The authors acknowledge CAPES for financial support and FUNCAP (PP1-0033- 00032.01.00/10). The authors also thank NUTEC for administrative facilities.

REFERENCES

Ali, A., Gilani, A. M., Memon, N. A., 2006. Affine

Normalized Invariant functionals using Independent

Component Analysis. Multitopic Conference.

INMIC ’06. IEEE, pp. 94-99, 23-24 December.

Bach, F. R., Jordan, M. I., 2002. Kernel Independent

Component Analysis, Journal of Machine Learning

Research, vol. 3, pp. 1-48.

Bell, A. J., Sejnowski, T. J., 1995. An Information-

Maximization Approach to Blind Separation and Blind

Deconvolution, Neural Computation, vol. 7, pp. 1129–

1159.

Bizon, K., Lombardi, S., Continillo, G., Mancaruso, E.,

Vaglieco, B. M., 2013. Analysis of Diesel Engine

Combustion using Imaging and Independent

Component Analysis. Proceedings of the Combustion

Institute, vol. 34, pp. 2921-2931.

Cardoso, J. F., 1989. Source Separation using Higher

Order Moments, ICASSP, in: Proceedings of the IEEE

International Conference on Acoustics, Speech and

Signal Processing, vol. 4, pp. 2109–2112.

Chattopadhyay, A. K., Mondal, S., Chattopadhyay, T.,

2013. Independent Component Analysis for the

Objective Classification of Globular Clusters of the

Galaxy NGC 5128, Computational Statistics & Data

Analysis, vol. 57, issue 1, pp. 17-32.

Cichy, R., Pantazis, D., Oliva, A., 2013, “Mapping Visual

Object Recognition in the Human Brain with

Combined MEG and fMRI”, Journal of Vision, vol.

13, n. 9, pp. 659.

Coomans, D., Massart, D. L., 1981. Alternative k-Nearest

Neighbor Rules in Supervised Pattern Recognition.

Part. 1. k-nearest neighbor classification using

alternative voting rules. Analytica Chimica Acta, vol.

136, pp. 15-27.

Cover, T. M., 1968, Estimation by the Nearest Neighbor

Rule, IEEE Transactions on Information Theory, vol.

14, issue 1, pp. 50-55.

Déniz, O., Castrillón, M., Hernández, M., 2003. Face

Recognition using Independent Component Analysis

and Support Vector Machines. Pattern Recognition

Letters, vol. 24, issue 13, pp. 2153-2157.

Fan, L., Long, F., Zhang, D., Guo, X., Wu, X., 2002.

Applications of independent component analysis to

image feature extraction, Second International


Conference on Image and Graphics, vol. 4875, pp.

471-476.

Hastie, T., Tibshirani, R., 2003. In: Becker, S., Obermayer,

K. (Eds.), Independent Component Analysis through

Product Density Estimation in Advances in Neural

Information Processing System, vol. 15, MIT Press,

Cambridge, MA, pp. 649–656.

Huang, Z., Cohen, F. S., 1996, “Affine-invariant B-Spline

moment for curve matching”, IEEE Trans. Image

Process., vol. 5, n. 10, pp. 1473-1490.

Huang, X., Wang, B., Zhang, L., 2005. A New Scheme for

Extraction of Affine Invariant Descriptor and Affine

Motion Estimation based on Independent Component

Analysis, Pattern Recognition Letters, vol. 26, issue 9,

pp. 1244-1255.

Hyvärinen, A., Karhunen, J., Oja, E., 2001. Independent

Components Analysis. John Wiley & Sons, Inc.,

Canada (Chapter 6–8).

Hyvärinen, A., Oja, E., 2000. ‘Independent Component

Analysis: Algorithms and Applications’, Neural

Networks, vol. 13, pp. 411–430.

Jutten, C., Herault, J., 1991. Blind Separation of Sources, Part I: An Adaptive Algorithm based on Neuromimetic Architecture, Signal Processing, vol. 24, pp. 1-10.

Khalil, M. I., Bayoumi, M. M., 2002, “Affine invariants

for object recognition using the wavelet transform”,

Pattern Recognition Letters, vol. 23, pp. 57-72.

Khorshidi, G. S., Douaud, G., Beckmann, C. F., Glasser,

M. F., Griffanti, L., Smith, S. M., 2014. Automatic

denoising of functional MRI data: Combining

independent component analysis and hierarchical

fusion of classifiers, NeuroImage, vol. 90, pp. 449-

468.

Lima, M. A. A., Cerqueira, A. S., Coury, D. V., Duque, C.

A., 2012. A Novel Method for Power Quality Multiple

Disturbance Decomposition based on Independent

Component Analysis, International Journal of

Electrical Power & Energy Systems, vol. 42, issue 1,

pp. 593-604.

Lin, T.-Y., Chiu, S.-H., 2013. Using Independent

Component Analysis and Network DEA to Improve

Bank Performance Evaluation, Economic Modelling,

vol. 32, pp. 608-616.

Masoum, S., Seifi, H., Ebrahimabadi, E. H., 2013.

Characterization of Volatile Components in

Calligonum Comosum by Coupling Gas

Chromatography-Mass Spectrometry and Mean Field

Approach Independent Component Analysis,

Analytical Methods, vol. 5, issue 18, pp. 4639-4647.

Mercimek, M., Gulez, K., Mumcu, T. V., 2005. Real

Object Recognition using Moment Invariants.

Sadhana, vol. 30, issue 6, pp. 765–775.

Mukundan, R., Ong, S.H., Lee, P.A., 2001. Image analysis

by Tchebichef moments, IEEE Trans. Image Process.

vol. 10, issue 9, pp. 1357-1364.

Oirrak, A. E., Daoudi, M., Aboutajdine, D., 2002. Affine

Invariant Descriptors using Fourier Series. Pattern

Recognition Letters. vol. 23, pp. 1109-1118.

Pan, H. et al, 2013, “Efficient and Accurate Face Detection

using Heterogeneous Feature Descriptors and Features

Selection”, Computer Vision and Image

Understanding, vol. 117, n. 1, pp. 12-28.

Park, B., Kim, D-S., Park, H-J., 2014. Graph Independent

Component Analysis Reveals Repertoires of Intrinsic

Network Components in the Human Brain. PLoS ONE

9(1): e82873. doi:10.1371/journal.pone.0082873.

Rojas, F., García, R. V., González, J., Velázquez, L.,

Becerra, R., Valenzuela, O., B. Román, S., 2013.

Identification of Saccadic Components in

Spinocerebellar Ataxia Applying an Independent

Component Analysis Algorithm, Neurocomputing, vol.

121, pp. 53-63.

Safia, A., He, D., 2013. New Brodatz-based Image Databases for Grayscale Color and Multiband Texture Analysis, ISRN Machine Vision, Article ID 876386, 1. Available at http://multibandtexture.recherche.usherbrooke.ca/original_brodatz.html.

Sanchetta, A. C. et al., 2013. Facies Recognition using a Smoothing Process through Fast Independent Component Analysis and Discrete Cosine Transform, Computers & Geosciences, vol. 57, pp. 175-182.

Sindhumol S., Kumar, A., Balakrishnan, K., 2013.

Spectral Clustering Independent Component Analysis

for Tissue Classification from Brain MRI, Biomedical

Signal Processing and Control, vol. 8, issue 6, pp.

667-674.

Tong, Y., Hocke, L.M., Nickerson, L.D., Licata, S.C.,

Lindsey, K.P., Frederick, B.D., 2013. Evaluating the

Effects of Systemic Low Frequency Oscillations

Measured in the Periphery on the Independent

Component Analysis Results of Resting State

Networks, NeuroImage, vol. 76, pp. 202–215.

Yuen, P. C., Lai, J. H., 2002. Face Representation using

Independent Component Analysis. Pattern

Recognition, vol. 35, pp. 1247-1257.

Zhao, A., Chen, J., 1997. Affine Curve Moment Invariants

for Shape Recognition. Pattern Recognition. vol. 30,

issue 6, pp. 895-901.
