KISS MIR: Keep It Semantic and Social Music Information Retrieval

Amna Dridi and Mouna Kacimi

Faculty of Computer Science, Free University of Bozen-Bolzano, Piazza Domenicani 3, Bolzano, Italy

Keywords:

Music Information Retrieval, Music-context, User-context, Personalization, Social Information.

Abstract:

While content-based approaches for music information retrieval (MIR) have been heavily investigated, user-centric approaches are still at an early stage. Existing user-centric approaches use either music-context or user-context to personalize the search. However, none of them lets users choose the context that suits their needs. In this paper we propose KISS MIR, a versatile approach for music information retrieval. It combines music-context and user-context to rank search results. The core contribution of this work is the investigation of different types of context derived from social networks. We distinguish semantic and social information and use them to build semantic and social profiles for music and users. The different contexts and profiles can be combined and personalized by the user. We have assessed the quality of our model using a real dataset from Last.fm. The results show that ranking search results with user-context is substantially better than ranking with music-context (P@5 of 0.478 versus 0.285). More importantly, the combination of semantic and social information is crucial for satisfying user needs.

1 INTRODUCTION

With the increasing volume of digital music available

on the world wide web, developing information re-

trieval (IR) techniques for music is challenging. The

main reason is the wide variety of ways music is pro-

duced, represented, and used (Smiraglia, 2001; Casey

et al., 2008). However, despite the high potential

of systems presented at ISMIR and similar venues,

the development of music IR (MIR) systems that fit

user music taste is still in its early stages. For instance, most existing MIR approaches are content-based and consider low-level features of music (audio signal). These methods are able to define music structure, to identify a piece from a noisy recording, or to compute similarities between music pieces. However, they cannot capture semantic information, which is essential to many users of music (Knees et al., 2013). Moreover, they do not take into account user preferences, which can greatly improve and facilitate access to music. In fact, several recent surveys on MIR (Kaminskas and Ricci, 2012; Schedl and Flexer, 2012; Weigl and Guastavino, 2011; Song et al., 2012) emphasize the limitations of content-based MIR systems and suggest new, user-centric MIR directions.

Existing user-centric MIR approaches aim at improving user access to music following two main strategies. The first one enriches music-context using annotations (Li and Ogihara, 2006; Saari et al., 2013; Sanden and Zhang, 2011), and the second one exploits user-context for personalized music retrieval (Hoachi et al., 2003; Boland and Murray-Smith, 2014). These approaches aim at satisfying user needs by imposing a specific type of context. However, user needs are not predictable and should not be bound to a specific setting. Thus, users should be able to interact with the music retrieval system and choose the context that suits their needs.

In this paper, we propose KISS MIR, a novel approach for music retrieval that enriches the user search experience by combining music-context and user-context. To this end, we exploit social networks as the main source of context information. We categorize the information provided in such networks into semantic and social information. Semantic information is reflected by the tagging actions of users, while social information represents user activities and relations in the network. The context of music and user can be semantic, social, or both. Based on these types of context, we propose a ranking model for music tracks that helps select the right context for each query and user need. The versatility of our approach provides flexible access to music. The main contributions of this paper are as follows:

Dridi, A. and Kacimi, M..

KISS MIR: Keep It Semantic and Social Music Information Retrieval.

In Proceedings of the 7th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2015) - Volume 1: KDIR, pages 433-439

ISBN: 978-989-758-158-8

Copyright © 2015 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

1. We propose to model context-information as se-

mantic or social to investigate their role in satisfy-

ing user needs.

2. We define user profile and music profile based on

semantic and social information. Semantic infor-

mation reflects music description and user inter-

ests while social information reflects music popu-

larity and user behaviour.

3. We propose a personalized ranking model that combines music-context and user-context, each of which can be semantic, social, or both. The model allows users to choose the most suitable setting for their needs.

4. We evaluate our model on a real-world dataset from Last.fm¹ and show that user-context combining both semantic and social information outperforms all other settings.

The remainder of this paper is organized as fol-

lows: Section 2 reviews the related work, Section 3

describes the framework of KISS MIR, Section 4 in-

troduces user and music profiles, Section 5 describes

our scoring model, Section 6 presents our experiment

setting and results, and Section 7 concludes the paper.

2 RELATED WORK

Existing user-centric MIR approaches can be divided

into two main classes. The first class of approaches

exploits music-context to improve user access to

music. Concretely, they rely on music annotation

with semantic labels. Some approaches annotate music with emotions, relying on emotion detection based on music content (Li and Ogihara, 2006; Huron, 2000; Kaminskas and Ricci, 2011; Braunhofer et al., 2013). Other approaches combine human-annotated tags with music content for emotion detection (Lee and Neal, 2007; Saari et al., 2013; Song et al., 2013; Lamere, 2008; Feng et al., 2003), multimodal music similarity (Zhang et al., 2009), artist descriptions (Pohle et al., 2007), characterisation of music pieces (Knees et al., 2007), or verifying the quality of music tag annotation via association analysis (Arjannikov et al., 2013) or multi-label classification (Sanden and Zhang, 2011).

The second class of approaches exploits user-context to build a user profile for personalized MIR. For instance, Hoachi et al. (2003) propose to build the user profile based on what the user likes and dislikes. Celma et al. (2005) use Friend Of A Friend (FOAF) documents to define the user profile. In another work, Herrara (2009) suggests that the user profile can be categorised into three domains: demographic, geographic, and psychographic. Additionally, Chen and Chen (2001) derive the user profile from the user's access history, and Boland and Murray-Smith (2014) capture the change of the user profile over time.

¹ www.lastfm.fr

In our work, we aim at enriching the user search experience by combining music-context and user-context in the retrieval process. The approaches most similar to ours are music recommender systems that help users filter and discover music according to their tastes (Bugaychenko and Dzuba, 2013; Celma et al., 2005; Chen and Chen, 2001; Chedrawy and Abidi, 2009). While these approaches provide music recommendations that match the user profile, we provide a music search system with personalized ranking that puts the user at the center of the retrieval process.

3 KISS MIR FRAMEWORK

KISS MIR consists in combining both music-context

and user-context in the music retrieval process. To

extract these contexts, we exploit social networks as

a prominent and rich source for information about

both music properties and user activities. The first step towards this goal is to understand (1) which kind of information we can find in such networks and (2) how we can use it to extract music and user contexts. To this end, we distinguish the following entities as the main components of the information provided by social networks:

1. Users: represent the participants in a social network.

2. Music Tracks: represent the content shared by users in a social network.

3. Descriptions: represent tags or annotations pro-

vided by users to describe music tracks. Tags can

also be exploited to indicate user preferences.

4. Reactions: represent user feedback reflected by

different actions (comment, like, dislike, favourite,

etc). Reactions capture user interests and the pop-

ularity of music tracks. In some cases, they also

categorize interests as negative or positive (like,

dislike, etc.)

5. Communities: represent sets of users who are interconnected. Users can be linked based on different criteria such as friendship, location, behaviour, or simply belonging to the same social network.

KDIR 2015 - 7th International Conference on Knowledge Discovery and Information Retrieval

We model social networks as a graph that represents the entities mentioned above. Figure 1 shows the graph model, which consists of four types of nodes: Users, Music Tracks, Descriptions, and Reactions. Users provide music tracks, describe them, and react to them. Communities can be implicit, and thus they are not represented in the graph.

Figure 1: Social Music Graph.
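As an illustration only, the graph model described above could be held in memory as three edge sets, one per user action (provide, describe, react). The class name `SocialMusicGraph`, its fields, and the example identifiers are hypothetical and not part of the original framework; this is a minimal sketch, not the authors' implementation.

```python
from dataclasses import dataclass, field


@dataclass
class SocialMusicGraph:
    """Toy in-memory graph with Users, Music Tracks, Descriptions, Reactions."""

    # (user, track): the user shared the track in the network
    provides: set = field(default_factory=set)
    # (user, track, tag): the user described the track with a tag
    describes: set = field(default_factory=set)
    # (user, track, kind): the user reacted to the track (e.g. "like")
    reacts: set = field(default_factory=set)

    def add_track(self, user, track):
        self.provides.add((user, track))

    def add_description(self, user, track, tag):
        self.describes.add((user, track, tag))

    def add_reaction(self, user, track, kind="click"):
        self.reacts.add((user, track, kind))

    def users(self):
        """All users that appear on at least one edge."""
        return (
            {u for u, _ in self.provides}
            | {u for u, _, _ in self.describes}
            | {u for u, _, _ in self.reacts}
        )


g = SocialMusicGraph()
g.add_description("alice", "track_1", "rock")
g.add_reaction("bob", "track_1", "like")
print(sorted(g.users()))  # ['alice', 'bob']
```

Communities are deliberately absent from this sketch, mirroring the remark that they can be implicit and are not represented in the graph.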

4 USER AND MUSIC PROFILES

The different entities of social networks provide two

types of information defined as follows:

Semantic Information. Semantic information re-

flects meanings, roles, and properties. This type

of information is provided by descriptions where

users tag music tracks with semantic labels. De-

scriptions about music include genre, singer, title,

etc. They also give labels to user interests such as

Rock and Pop.

Social Information. Social information reflects ac-

tivities in social networks. These activities are

represented by the links between entities in the

social graph shown in Figure 1. They include re-

acting and tagging actions of users towards music

tracks.

4.1 User Profile

Based on semantic and social information described

above, we define two types of user profiles: User Se-

mantic Profile and User Social Profile defined as fol-

lows:

4.1.1 User Semantic Profile

The user semantic profile consists of the set of descriptions (tags) used by a user $u$ to annotate music tracks of his choice. We denote this set by $T_u = \{t_1, t_2, \ldots, t_k\}$. In social networks, tags are a strong indicator of users' interests.

4.1.2 User Social Profile

The user social profile consists of the set of users that have a relationship with user $u$, namely the community of $u$. We denote this set by $C_u = \{u_1, u_2, \ldots, u_{n_1}\}$, where $u_i$ is a user linked to user $u$.

4.2 Music Profile

Similarly to user profile, we define two types of pro-

files for music tracks: Music Semantic Profile and

Music Social Profile defined as follows:

4.2.1 Music Semantic Profile

The music semantic profile consists of a set of features that represent a music track $m$. The set of features is predefined and has a fixed length $k$. Music track features include genre, singer, year, and a set of keywords representing its content, such as lyrics or title. We represent the semantic profile of music track $m$ as a vector of $k$ features $F_m = \{f_1, f_2, \ldots, f_k\}$. The values of these features are mainly provided by tags.

4.2.2 Music Social Profile

The music social profile consists of the set of users who reacted to the music track $m$. We denote this set by $U_m = \{u_1, u_2, \ldots, u_{n_2}\}$, where $u_i$ is a user who reacted to music track $m$. The number of users who reacted to music track $m$ indicates its popularity in the social network.
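The four profiles defined in this section can be derived from raw events in a single pass. The sketch below is illustrative: the function name `build_profiles` and the event formats are assumptions, community links are treated as undirected (the text does not say), and the music semantic profile is simplified to the set of tags attached to a track rather than a fixed-length feature vector.

```python
from collections import defaultdict


def build_profiles(tag_events, reaction_events, links):
    """Derive the four profiles from raw events.

    tag_events:      iterable of (user, track, tag)
    reaction_events: iterable of (user, track)
    links:           iterable of (user, other_user), assumed undirected
    """
    T = defaultdict(set)  # user semantic profile  T_u: tags used by u
    C = defaultdict(set)  # user social profile    C_u: community of u
    F = defaultdict(set)  # music semantic profile F_m: simplified to tags
    U = defaultdict(set)  # music social profile   U_m: users who reacted

    for user, track, tag in tag_events:
        T[user].add(tag)
        F[track].add(tag)
    for user, track in reaction_events:
        U[track].add(user)
    for a, b in links:
        C[a].add(b)
        C[b].add(a)
    return T, C, F, U


T, C, F, U = build_profiles(
    tag_events=[("alice", "t1", "rock"), ("bob", "t1", "grunge"),
                ("alice", "t2", "pop")],
    reaction_events=[("alice", "t1"), ("bob", "t1"), ("bob", "t2")],
    links=[("alice", "bob")],
)
print(len(U["t1"]))  # popularity of t1: 2
```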

5 SCORING MODEL

When users search for music, they typically do not have a clear idea of what they are looking for. Thus, keyword search is not a suitable scenario unless users have precise information about a given music track, such as its title or singer. To allow wider access to music, we choose a query-by-example paradigm where users can find music tracks similar to the ones they are interested in. Given a user $u$ and a query $Q = m$, where $m$ is a music track, we search for music tracks that are relevant to the query $Q$ and match the interests of user $u$. A set of music results $\{m_1, \ldots, m_{n_3}\}$ is returned to the user, where the score of each result $m_i$ is given as follows:

$$S(Q, u, m_i) = \lambda \, S_{\mathrm{music}}(m_i, Q) + (1 - \lambda) \, S_{\mathrm{user}}(m_i, u) \qquad (1)$$

where $S_{\mathrm{music}}(m_i, Q)$ denotes the music relevance score of $m_i$ to $Q$ and $S_{\mathrm{user}}(m_i, u)$ denotes the user relevance score of $m_i$ to $u$. The parameter $\lambda$ controls the amount of personalization ($0 \le \lambda \le 1$). Setting $\lambda = 1$ means that we aim at finding what matches the query music track, and setting $\lambda = 0$ means that we aim at finding what matches user interests. Values in between combine the two components to different degrees.
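The linear combination in Equation (1) can be sketched in a few lines. The function names `combined_score` and `rank`, and the toy scores, are hypothetical; the two component scores are assumed to be precomputed for each candidate track.

```python
def combined_score(s_music, s_user, lam):
    """Equation (1): lam weights music relevance, (1 - lam) user relevance."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return lam * s_music + (1.0 - lam) * s_user


def rank(candidates, lam):
    """Sort tracks by combined score, best first.

    candidates: dict mapping track -> (s_music, s_user)
    """
    return sorted(
        candidates,
        key=lambda m: combined_score(candidates[m][0], candidates[m][1], lam),
        reverse=True,
    )


# Toy scores: track "a" is close to the query, track "b" is popular
# in the user's community.
candidates = {"a": (0.8, 0.1), "b": (0.2, 0.6)}
print(rank(candidates, lam=1.0))  # music-context only: ['a', 'b']
print(rank(candidates, lam=0.0))  # user-context only:  ['b', 'a']
```

With these toy values the ranking flips between the two extremes of λ, which is the behaviour the parameter is meant to expose to the user.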

5.1 Music Relevance Score

The music relevance score of a music track $m$ given a query $Q$ indicates to which degree $m$ matches $Q$. Considering the query-by-example paradigm, we compute the music relevance score using a similarity measure between $m$ and $Q$:

$$S_{\mathrm{music}}(m, Q) = \mathrm{Similarity}(m, Q) \qquad (2)$$

The similarity measure can take different forms, such as cosine similarity, Jaccard similarity, or Euclidean distance. In this paper, we use Jaccard similarity to compute the similarity between two music tracks $m$ and $Q$:

$$\mathrm{Similarity}(m, Q) = \frac{|F_m \cap F_Q|}{|F_m \cup F_Q|} \qquad (3)$$

where $F_m$ and $F_Q$ represent the sets of features of music tracks $m$ and $Q$, respectively. These features are extracted from the semantic music profiles of $m$ and $Q$.
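Equation (3) translates directly into set operations. In the sketch below the feature encoding (strings such as `"genre:rock"`) is an assumption made for illustration, as is the convention of returning 0 when both profiles are empty.

```python
def jaccard_similarity(features_m, features_q):
    """Equation (3): |F_m ∩ F_Q| / |F_m ∪ F_Q|."""
    a, b = set(features_m), set(features_q)
    union = a | b
    if not union:
        return 0.0  # convention chosen here for two empty profiles
    return len(a & b) / len(union)


# Hypothetical semantic profiles: two tracks share genre and year
# but differ in singer.
f_m = {"genre:rock", "year:1991", "singer:A"}
f_q = {"genre:rock", "year:1991", "singer:B"}
print(jaccard_similarity(f_m, f_q))  # 2 shared / 4 distinct = 0.5
```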

5.2 User Relevance Score

The community of a user has a big influence on the music he listens to. This suggests that a music track is more likely to be liked by the user when it is popular in his community. Thus, we exploit the social profile of the user to compute the user relevance score of a music track $m$ in the community of $u$ as follows:

$$S_{\mathrm{user}}(m, u) = \frac{|U_m \cap C_u|}{|\mathit{Users}|} \qquad (4)$$

where $U_m$ is the set of users who reacted to music track $m$, $C_u$ is the set of users in the community of user $u$, and $|\mathit{Users}|$ is the total number of users in the network. It is important to mention that we focus, in this paper, only on positive reactions. So, the more reactions there are, the more popular the music track is.
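A minimal sketch of Equation (4), assuming the music social profile and the community are available as sets of user identifiers. The function name `user_relevance` and the example identifiers are hypothetical.

```python
def user_relevance(reacted_users, community, n_users):
    """Equation (4): |U_m ∩ C_u| / |Users|."""
    if n_users <= 0:
        raise ValueError("n_users must be positive")
    return len(set(reacted_users) & set(community)) / n_users


u_m = {"u1", "u2", "u3"}   # users who reacted to track m
c_u = {"u2", "u3", "u4"}   # community of user u
print(user_relevance(u_m, c_u, n_users=10))  # 2 / 10 = 0.2
```

Note that the score is normalized by the size of the whole network rather than by the size of the community, so passing the full user set as `community` reduces it to the global popularity of the track.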

In addition to the social profile of the user, we can enhance the user relevance score by taking into account his semantic profile. This means that among the users in the community of user $u$, we target only those who have interests similar to those of $u$. Recall that user interests are reflected by tags. In this case, we limit the community of user $u$ to users whose tagging actions are similar to those of $u$. We say that two users $u_i$ and $u_j$ have similar tagging behaviour if the size of the intersection of their tag sets $T_{u_i}$ and $T_{u_j}$ exceeds a certain threshold. The threshold setting depends on the social network and the amount of data it contains.
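The community restriction described above can be sketched as a filter over the community. The function name `restrict_community` and the threshold value are illustrative assumptions; "exceeds" is read here as a strict inequality.

```python
def restrict_community(user, community, tag_profiles, threshold):
    """Keep community members whose tag overlap with `user` exceeds threshold.

    tag_profiles: dict mapping user -> set of tags (the semantic profile T_u)
    """
    own_tags = tag_profiles.get(user, set())
    return {
        other
        for other in community
        if len(own_tags & tag_profiles.get(other, set())) > threshold
    }


tag_profiles = {
    "u": {"rock", "pop", "jazz"},
    "v": {"rock", "pop"},   # shares 2 tags with u
    "w": {"metal"},         # shares 0 tags with u
}
print(restrict_community("u", {"v", "w"}, tag_profiles, threshold=1))  # {'v'}
```

The restricted set can then be used as the community in the user relevance score, so that only like-minded users contribute to it.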

6 EVALUATION

6.1 Music Dataset

To evaluate our approach, we have used a dataset from Last.fm²,³. The dataset contains music tracks, users, and their activities. As there was not enough semantic information about music tracks, we have exploited Wikipedia⁴ to enrich the dataset. For each music track, we have used the title to access its Wikipedia page. From the infobox of the Wikipedia page, we have extracted information about the music track, including singer, producer, writer, year, label, and other features. Further, we have removed all tracks that do not have Wikipedia pages. Regarding user activities, the dataset contains descriptions corresponding to tags, and reactions corresponding to clicks, which were the only reactions available in the dataset. Table 1 gives statistics about the resulting dataset.

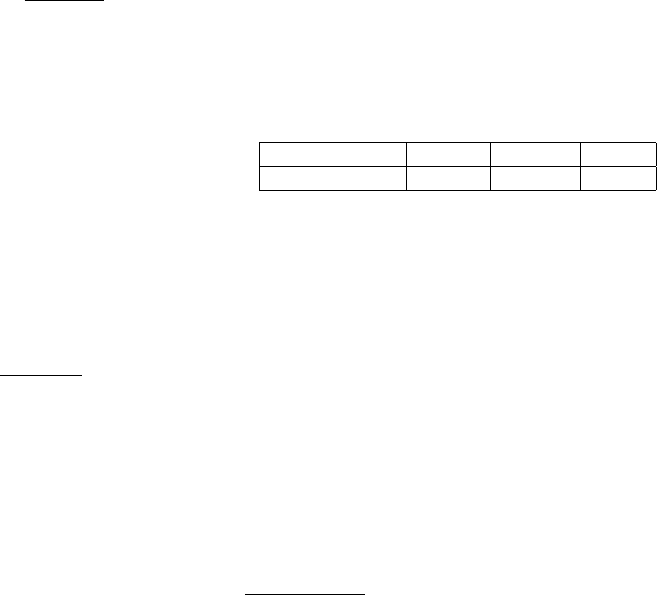

Table 1: Statistical characteristics of the Last.fm dataset.

# Music Tracks   # Users   # Clicks   # Tags
11523            3387      642490     68335

6.2 Evaluation Methodology

We have run our experiments using 100 queries. Recall that we use a query-by-example paradigm and thus queries correspond to music tracks. We have randomly selected the 100 queries from the top 250 most clicked music tracks. This choice is driven by the requirements of the automatic assessment of the results described below. As a further step, we proceeded with the selection of the query initiators. For each query, we have selected the users who clicked on the query music track and ranked them. The rank of each user was computed based on the number of clicks he has on that track and the number of clicks he has globally. The query initiator was then selected randomly among the top 20 users.

² www.lastfm.fr

³ http://www.dtic.upf.edu/~ocelma/MusicRecommendationDataset/lastfm-1K.html

⁴ www.wikipedia.org


After selecting all queries and their query initiators, we have used our model to rank the results of each query. We have tested different strategies of our model:

1. Music-context (Semantic): consists in returning

results that match only the semantic profile of the

query without considering the query initiator pro-

file. This is achieved by setting the parameter

λ = 1.

2. Music-context (Semantic + Social): consists in

returning results that match the semantic and the

social profile of the query without considering the

query initiator profile. This is achieved by setting

the community of the query initiator to all users

in the network. Thus the S

user

score would be in-

dependent from the query initiator reflecting only

the popularity of the music track in the whole net-

work.

3. User-context (Social): consists in returning results that match the social profile of the query initiator by setting λ = 0. In this experiment, the community of any user is the set of all users in the network, and thus the score is solely based on the popularity of the music track in the network. This setting is equivalent to Music-context (Social), where the result matches only the social profile of the query.

4. User-context (Semantic + Social): consists in

taking into account both the social and the se-

mantic profiles of the query initiator. In this set-

ting music tracks should match the interests of the

community and the query initiator. This is ob-

tained by setting λ = 0 and restricting the com-

munity of the query initiator only to users with

similar tagging behaviour.

5. Music-context + User-context (Semantic + So-

cial): consists in using all the elements of our ap-

proach. We set λ = 0.5 and we use both semantic

and social profiles for music tracks and users to

rank results.

6.3 Assessment and Evaluation Metrics

To avoid any subjectivity in the assessment of the results, we have exploited click information to indicate whether a user likes a music track or not. For each returned result, we check whether the user has clicked on it. If so, the result is considered relevant and given a value of 1; otherwise it is irrelevant and given a value of 0. To measure the effectiveness of our approach, we have used the precision P@k, which represents the fraction of retrieved music tracks that are relevant to the query considering only the top-k results. It is given by:

$$\mathrm{P@}k = \frac{|\text{Relevant Tracks} \cap \text{top-}k\ \text{Track Results}|}{k} \qquad (5)$$

We have also used the Mean Average Precision (MAP), which is a widely adopted standard measure in IR, given by:

$$\mathrm{MAP@}n = \frac{\sum_{i=1}^{N} \mathrm{AverageP@}n_i}{N} \qquad (6)$$

where $N$ is the total number of queries, $n$ is a given position, and $\mathrm{AverageP@}n_i$ is the average precision of query $i$ at position $n$.
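The two metrics can be sketched as follows. The function names are hypothetical, and the text does not spell out how the average precision of a single query is computed; the sketch assumes one common variant, which averages the precision at each relevant position within the top n and normalizes by the number of relevant results found there.

```python
def precision_at_k(ranked, clicked, k):
    """Equation (5): fraction of the top-k results that the user clicked."""
    return sum(1 for track in ranked[:k] if track in clicked) / k


def average_precision_at_n(ranked, clicked, n):
    """Average precision at n (assumed variant, see the lead-in)."""
    hits, total = 0, 0.0
    for position, track in enumerate(ranked[:n], start=1):
        if track in clicked:
            hits += 1
            total += hits / position  # precision at this relevant position
    return total / hits if hits else 0.0


def map_at_n(runs, n):
    """Equation (6): mean of the per-query average precision.

    runs: list of (ranked_results, clicked_tracks) pairs, one per query
    """
    return sum(average_precision_at_n(r, c, n) for r, c in runs) / len(runs)


ranked = ["a", "b", "c", "d", "e"]
clicked = {"a", "c"}  # binary relevance derived from clicks
print(precision_at_k(ranked, clicked, 5))  # 2 / 5 = 0.4
```

For this toy query the relevant results sit at positions 1 and 3, so the average precision at 5 is (1/1 + 2/3) / 2.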

6.4 Results and Discussion

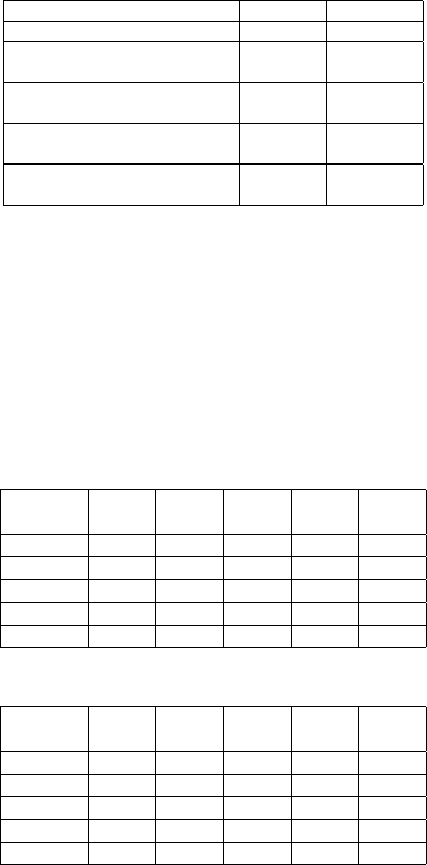

Tables 2 and 3 show the precision and MAP values for the different strategies of our model. The results show that user-context approaches perform best in terms of precision and MAP, in particular when the social and the semantic user profiles are both taken into account to find relevant music tracks. Compared to a Music-context approach based only on the semantic profile of the music track, P@5 increases from 0.285 to 0.478, which is a substantial improvement. Similarly, MAP@5 improves from 0.242 to 0.423. We note a decrease in precision and MAP for the top 10 results, which is due to the lower popularity of music tracks at lower ranks.

Table 2: Mean precision values for all queries.

Strategy                                            P@5     P@10
Music-context (Semantic)                            0.285   0.204
Music-context (Social) / User-context (Social)      0.45    0.309
Music-context (Semantic + Social)                   0.433   0.293
User-context (Semantic + Social)                    0.478   0.315
Music-context + User-context (Semantic + Social)    0.450   0.293

After User-context approaches come the Music-context combined with User-context approaches, which also show high precision and MAP values. Thus, whenever User-context approaches are adopted, we can increase the satisfaction of the user. Now, considering only Music-context approaches, we can see a notable difference between using only the semantic profile of the music track and enhancing it with its social profile. Matching music tracks based also on their social profile increases P@5 from 0.285 to 0.433, which is a substantial improvement. Similarly, MAP@5 improves from 0.242 to 0.405. The reason is that even though the social profile of the music track does not depend on the query initiator, it depends on other users in the network. This suggests that being in the same network is enough to influence the taste of any participant. So, this strategy is indirectly User-context, which explains the large improvement in the results.

Table 3: MAP values for all queries.

Strategy                                            MAP@5   MAP@10
Music-context (Semantic)                            0.242   0.147
Music-context (Social) / User-context (Social)      0.398   0.259
Music-context (Semantic + Social)                   0.405   0.254
User-context (Semantic + Social)                    0.423   0.268
Music-context + User-context (Semantic + Social)    0.421   0.258

Table 4: P@5 for all queries (rows: λ, columns: 1 − λ).

λ \ 1−λ   0.0     0.2     0.5     0.8     1.0
0.0       n/a     n/a     n/a     n/a     0.478
0.2       n/a     n/a     n/a     0.448   n/a
0.5       n/a     n/a     0.450   n/a     n/a
0.8       n/a     0.450   n/a     n/a     n/a
1.0       0.285   n/a     n/a     n/a     n/a

Table 5: MAP@5 for all queries (rows: λ, columns: 1 − λ).

λ \ 1−λ   0.0     0.2     0.5     0.8     1.0
0.0       n/a     n/a     n/a     n/a     0.423
0.2       n/a     n/a     n/a     0.419   n/a
0.5       n/a     n/a     0.421   n/a     n/a
0.8       n/a     0.421   n/a     n/a     n/a
1.0       0.242   n/a     n/a     n/a     n/a

To analyse the impact of the parameter λ on the performance of the model, we use different values ranging from 0 to 1 for the strategy that combines all types of profiles. The results are given in Tables 4 and 5. In line with the previous results, the best results are achieved when λ = 0, which corresponds to the User-context strategy. To summarise, the overall results of these experiments demonstrate that the user can be involved in the retrieval process in different ways, all of which greatly improve the satisfaction of the user compared to music-context approaches.

In these experiments, we have exploited clicks as relevance judgments, by analogy with the IR evaluation paradigm. However, in the case of music, a single click does not fully reveal relevance, since the user can stop the music after a few seconds. To overcome this problem, in our ongoing experiments, we are testing our approach with a graded relevance scale based on the number of clicks, considering that having clicked a track several times is a much stronger indication of its relevance.

7 CONCLUSIONS AND FUTURE WORK

We have presented a user-centric approach for mu-

sic retrieval which gives the opportunity to users to

get involved in the search process. To this end, we

exploited a variety of contexts. More specifically,

we have extracted semantic and social contexts for

music tracks and for users. Based on these differ-

ent contexts, we proposed a ranking model that can

use music-context, user-context, or both. Addition-

ally, it can set the context to semantic, social, or

both. We have investigated the different settings of

our model to understand what type of information is

more suitable for satisfying user needs. Our exper-

iments have shown that user-context is by far more

useful than music-context for improving the quality

of music search results. More importantly, both se-

mantic and social information should be taken into

account to represent user profile. As future work,

we aim at investigating different types of reactions

other than clicks. Comments are definitely a valu-

able source for user interests and can reveal a lot more

about what the user is looking for and how his taste

changes over time.

REFERENCES

Arjannikov, T., Sanden, C., and Zhang, J. (2013). Verify-

ing tag annotations through association analysis. In

Proceedings of International Society of Music Infor-

mation Retrieval (ISMIR), pages 195–200. ACM.

Boland, D. and Murray-Smith, R. (2014). Information-

theoretic measures of music listening behaviour. In

Proceedings of ISMIR, pages 561–566. ACM.

Braunhofer, M., Kaminskas, M., and Ricci, R. (2013).

Location-aware music recommendation. 2(1):31–44.

Bugaychenko, D. and Dzuba, A. (2013). Musical recom-

mendations and personalization in a social network.

In Proceedings of RecSys, pages 367–370. ACM.

Casey, M., Veltkamp, R., Gosto, M., Leman, M., Rhodes,

C., and Slaney, M. (2008). Content-based mir: Cur-

rent directions and future challenges. In Proceedings

of IEEE. IEEE.


Celma, O., Ramirez, M., and Herrara, P. (2005). Foafing the

music: A music recommendation system based on rss

feeds and user preferences. In Proceedings of ISMIR,

pages 464–467. ACM.

Chedrawy, Z. and Abidi, S. (2009). A web recommender system for recommending predicting and personalizing music playlists. In Proceedings of WISE, pages 335–342. Springer.

Chen, H. and Chen, A. L. P. (2001). A music recommen-

dation system based on music data grouping and user

interests. In Proceedings of CIKM, pages 231–238.

ACM.

Feng, Y., Zhuang, Y., and Yunhe, P. (2003). Music infor-

mation retrieval by detecting mood via computational

media aesthetics. In Proceedings of WI, pages 235–

241. ACM, IEEE.

Herrara, O. C. (2009). Music Recommendation and Discov-

ery in the Long Tail. PhD thesis.

Hoachi, K., Matsumoto, K., and Inoue, N. (2003). Per-

sonalization of user profiles for content-based music

retrieval based on relevance feedback. In Proceedings

of MULTIMEDIA, pages 110–119. ACM.

Huron, D. (2000). Perceptual and cognitive applications in

music information retrieval. In Proceedings of ISMIR.

ACM.

Kaminskas, M. and Ricci, F. (2011). Location-adapted mu-

sic recommendation using tags. In Proceedings of

UMAP.

Kaminskas, M. and Ricci, F. (2012). Contextual music in-

formation retrieval and recommendation: State of the

art and challenges.

Knees, P., Pohle, T., Schedl, M., and Widmer, G. (2007). A

music search engine built upon audio-based and web-

based similarity measures. In Proceedings of SIGIR,

pages 447–454. ACM.

Knees, P., Schedl, M., and Celma, O. (2013). Hybrid music

information retrieval. In Proceedings of ISMIR, pages

1–2. ACM.

Lamere, P. (2008). Social tagging and music information

retrieval. 37(2):101–114.

Lee, H. and Neal, D. (2007). Toward web 2.0 music in-

formation retrieval: Utilizing emotion-based, user as-

signed descriptors. 44:1–34.

Li, T. and Ogihara, M. (2006). Toward intelligent music

retrieval. In Proceedings of MULTIMEDIA, volume 8,

pages 564–574.

Pohle, T., Shen, J., Knees, P., Schedl, M., and Widmer, G.

(2007). Building an interactive next-generation artist

recommender. In CBMI. IEEE.

Saari, P., Eerola, T., Fazekas, G., Barthet, M., Lartillot, O.,

and Sandlen, M. (2013). The role of audio and tags

in music mood prediction. In Proceedings of ISMIR.

ACM.

Sanden, C. and Zhang, J. (2011). An empirical study of multi-label classifiers for music tag annotation. In Proceedings of ISMIR, pages 717–722. ACM.

Schedl, M. and Flexer, A. (2012). Putting the user in the

center of music information retrieval. In Proceedings

of ISMIR, pages 385–390. ACM.

Smiraglia, R. (2001). Musical works as information re-

trieval entities epistemological perspectives. In Pro-

ceedings of ISMIR, pages 85–91. ACM.

Weigl, D. and Guastavino, C. (2011). User studies in the music information retrieval literature. In Proceedings of ISMIR, pages 335–340. ACM.

Song, Y., Dixon, S., Pearce, M., and Halpern, A. (2013). Do online social tags predict perceived or induced emotional responses to music? In Proceedings of ISMIR, pages 89–94. ISMIR.

Song, Y., Dixon, S., and Pearce, M. (2012). A survey of music recommendation systems and future perspectives. In Proceedings of CMMR, pages 395–410.

Zhang, B., Shen, J., Xiang, Q., and Wang, Y. (2009). Icom-

positemap: a novel framework for music similarity. In

Proceedings of SIGIR, pages 403–410. ACM.
