Towards Common Information Sharing

Study of Integration Readiness Levels

Rauno Pirinen

Laurea University of Applied Sciences, Espoo, Finland

Keywords: Common Information Sharing, Integration, Integration Readiness Level, Maturity, Operational Validation.

Abstract: This study focuses on integration readiness level (IRL) metrics and their definition, criteria, references and

questionnaires for the operational and pre-operational validation of shared information services and systems.

The study attempts to answer the following research question: how can IRL metrics be understood and

realized in the domain of shared information services and systems? It aims to improve the

acceptance, operational validation, pre-order validation, risk assessment and development of sharing

mechanisms as well as the integration of information systems and services by public authorities across

national borders.

1 INTRODUCTION

This case study is based on the European Union’s

Common Information Sharing Environment (EU

CISE 2020) research project, R&D-related research

on work packages (n = 8) of the EU CISE 2020

research consortium and research agenda targets

related to the public authority in Finland.

The study examines information sharing

environments that foster cross-sectorial and cross-

border collaboration among public authorities, the

dissemination of the EU CISE 2020 initiative and

steps along the Maritime CISE roadmap.

EU CISE 2020 work entails the widest possible

experimental environments encompassing

innovative and collaborative services and processes

between European institutions and takes as

reference a broad spectrum of factors in the field of

European integrated services arising from the

European legal framework as well as collaborative

studies and related pilot projects.

In this study, knowledge management is

considered a discipline concerned with the analysis

and technical support of practices used in an

authority-related organization to identify, create,

represent, distribute and enable the adoption and

leveraging of real-world practices, which were used

in collaborative authority settings and, in particular,

public authority organizational processes. In this

sense, effective knowledge management is an

increasingly imperative shared source of

collaborative and rational advantages and a key to

success in public authority organizations, bolstering

the collective and shared expertise of their employees,

actors and partners.

Information sharing is related to the ontology of

information technology, data exchange capabilities,

communication protocols, technological artifacts and

digital infrastructures.

Although standardization is indeed an essential

element in sharing information, information systems

effectiveness requires going beyond the syntactic

nature of information technology and delving into

the human functions at the semantic, pragmatic,

critical realist and social levels of organizational

semiotics.

In this approach, the integration of information

services or systems is understood as a complex

process involving multiple overlapping and iterative

tasks that address co-creativity as well as a multi-

methodological approach that involves thinking,

building, improving and evaluating a successful

information system and its communication, which

fits the needs of the applied domain, information

sharing and implementation of integration readiness

viewpoints.

The EU CISE 2020 research domain prioritizes

improvements in the integration process of a

complex service or system. The term “external

validity”, in this study, refers to establishing the

expanded domain in which the study’s findings and

conclusions can be generalized.

Pirinen, R. Towards Common Information Sharing - Study of Integration Readiness Levels. In Proceedings of the 7th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2015) - Volume 3: KMIS, pages 355-364. ISBN: 978-989-758-158-8. Copyright © 2015 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

This study adopts the method of increasing

understanding through information systems research

and integration facilities, such as utility and

communication, integration readiness and networked

realization capability.

It makes the following contributions to the operational and pre-operational validation (POV) and utility of ISO standardization and interconnection: 1) improvement in metrics for information system and service integration; 2) advances in global procurement management and pre-order validation; 3) pre-operational validation in information system investigations; 4) progress in operational validation in information system implementation; 5) findings of methodological implications for the implementation of IRLs in the context of EU CISE 2020; 6) usefulness of information system sharing and interconnection; 7) expansion of large and networked information-intensive services that can extend shared solutions and routes of shared information utilization and common global information and information system sharing; and 8) educational advances in R&D-related functions in higher education institutions, which, in this case, can be shared across national borders.

The macro-level target of this research is to

examine how existing IRL metrics and their

definition, criteria, references and questionnaires are

useful and can be employed to realize and validate

integration and communication in information

systems sharing.

At the micro level, this study was performed on

shared information systems in the case of shared

maritime systems and focused on two IRL targets: 1)

realization, such as the usefulness, sharing and

dissemination of an information system as a

common digital service, product or solution

involving shared information across appropriate

borders of applied domains and 2) validation, that is,

pre-operational validation, pre-order validation for

procurements, internal validity and external validity,

which can, for example, be useful in the national and

global deployment and dissemination processes,

operational validation of information systems,

improving integration success, achieving common

ontological understanding and improving methods of

information systems integration and sharing.

The overall target of this research is to

increase trustworthiness such that related studies

make sense and are credible for EU CISE 2020

audiences.

The study design is based on a combination of a

thorough understanding of the theoretical

framework, studies in the related literature and

experimental knowledge of the collaborative

integration used to explain the research question as

well as learning processes and their meaning.

Internal validity in this analysis refers to the

establishment of causal relationships. Causal

relationships are interactions and relationships

among shared IRL measures and information

systems realizations from the perspective of

integration readiness, information sharing across

borders of various domains and the use of common

shared information systems. For example,

information is shared and education is collaborative

and disseminated across national borders, an aim

undertaken by maritime universities throughout the

European Union.

In this study, learning within the scope of R&D is described as an integrative way of learning in which an individual learns along with a workplace, school and R&D community, such as the EU CISE 2020 research consortium, as well as alongside an authority organization and across borders and disciplinary silos, as in a collective learning space that can be regional or individual-global in orientation.

The main doctrine of the study is that the research

dimensions include learning, and an authentic real-

world research process and methodology are used

for learning. Then, the objectives of learning by EU

CISE 2020 can be associated through various formal

and informal structures, such as R&D networks and

actors, especially in developing students and learners

to specialize in their areas of novel expertise related to

information sharing, where applicable

knowledge is produced and mobilized in the

collective R&D-related learning processes.

2 LITERATURE

The path-dependency of IRL development and key

knowledge aspects are referenced from the related

literature, for example, system engineering (Eisner,

2011), systems readiness levels (Sauser et al., 2006)

and the development of an integration readiness

level (IRL) (Sauser et al., 2010).

Following these works, this study focuses on how

IRL metrics can be understood and realized in the

context of the Common Information Sharing

Environment (EU CISE 2020) using generally

understood and related metrics and models for the

realization and reasoning of IRLs development.

2.1 Open System Interconnection

The first widely understood and well-known model

ISE 2015 - Special Session on Information Sharing Environments to Foster Cross-Sectorial and Cross-Border Collaboration between Public

Authorities

in IRLs development is open system interconnection

(OSI) (Zimmermann, 1980).

Sauser et al. (2006) described this development

path as follows: ‘it was necessary to develop an

index that could indicate how integration occurs’ (p.

6). This index ‘considered not only physical

properties of integration, such as interfaces or

standards, but also interaction, compatibility,

reliability, quality, performance and consistent

ontology when two pieces are being integrated’.

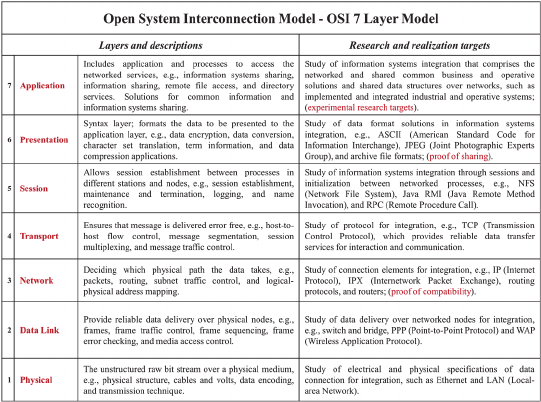

Figure 1 describes the compressed structure of the OSI model as the first approach to IRLs development. Sauser et al. (2006) selected the OSI model, its layers and targets (Figure 1) as the starting point of IRLs development. The OSI model has been widely referenced in computer networking to structure data transmitted on a network, and it allows for the integration of various technologies on the same network (cf. the networking theme in Beasley (2009) and the systems approach to computer networks in Peterson and Davie (2012)).

2.2 Technology Readiness Level

The technology readiness level (TRL) metric includes nine levels (Sauser et al., 2006). The TRL metric was developed to assess technology and research interventions and has been included in numerous National Aeronautics and Space Administration and United States Department of Defense efforts.

Much of the early work in this field involved defining the risks and costs associated with various TRLs. The reviewed literature indicates that TRLs mainly address the evaluation of the readiness and maturity of an individual technology. TRL metrics follow a given technology from basic principles through concept evaluation, validation and prototype demonstration to, finally, completion and successful operations.

While these characterizations are useful in technology development, in this study, they address only to an extent how this technology is integrated within complete information-intensive systems and applied services. We understand that, currently, many complex systems fail in the integration phase or need to be updated, for example, in integration, owing to the speed of technological development and new updates. We draw on Tan, Ramirez-Marquez and Sauser (2011) for an understanding of TRLs.

2.3 Integration Readiness Levels

The IRL metrics were introduced by the Systems

Development and Maturity Laboratory at the

Stevens Institute of Technology and developed to

assess the progress of information system integration

and communication in the engineering field. The

study aimed at realizing and validating IRL metrics

in the extended context of the ISO DIS 16290

standard development framework by the

International Organization for Standardization.

The IRL metrics have been defined as a

‘systematic measurement of the interfacing of

compatible interactions for various technologies and

the consistent comparison of the maturity between

integration points’ (Sauser et al., 2006) (p. 5). IRLs

were used to describe and understand the integration

maturity of a developing technology with another

technology or mature information systems.

Figure 1: Interpretations of OSI 7 layer model (Zimmermann, 1980; revised from Pirinen et al., 2014).

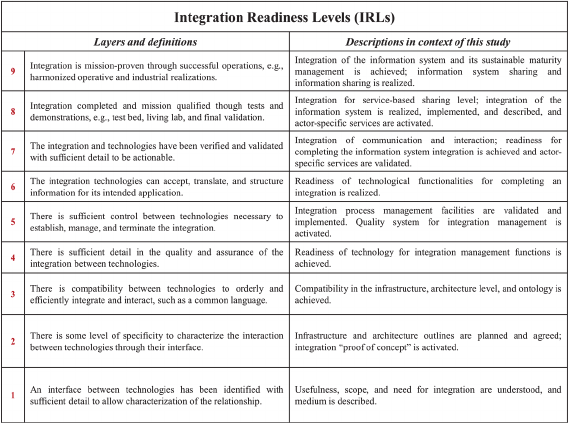

Figure 2: Integration readiness levels (Sauser et al., 2010; Pirinen et al., 2014).

IRLs contribute to TRLs by checking where the

technology is on an integration readiness scale and

offering direction to improve integration with other

technologies. In general, just as TRLs have been used

to assess risks associated with developing

technologies, IRLs were designed to assess the risk

and development needs of information systems

integration.

A reason underpinning the present IRLs research

is that the TRLs do not accurately capture the risk

involved in adopting a new technology and that

technology can have an architectural difference

related to integration readiness and system

integration. In this environment, because the

complexity of a system or information could

increase, and a practical situation often involves a

service-oriented network and shared systems, it is

reasonable to employ a reliable method and ontology

for integration readiness. This also allows other

readiness levels to be collectively combined for the

development of complex information-intensive

systems in information sharing and the integration of

systems as a common shared system.

Sauser et al. (2006) described the IRLs development

path dependency that is based on the OSI model as

follows: ‘to build a generic integration index

required first examining what each layer really

meant in the context of networking and then

extrapolating that to general integration terms’ (p.

6). With this description, as shown on the left-hand

side of Figure 2, IRLs were defined to describe the

increasing maturity of the integration between any

two technologies between 2006 and 2010 through

the development of an integration readiness level

(Sauser et al., 2010) and using a system maturity

assessment approach (Tan et al., 2011). On the right-

hand side of Figure 2, the IRL metrics are described

in the context of this continuum of study.

As shown in Figure 2, IRL layer 1 represents an

interface level: it is not possible to have integration

without defining a medium. In turn, selecting a

medium can affect the properties and performance of

a system. Layer 2 represents interaction, the ability

of two technologies to influence each other over a

given medium; this can be understood as an

integration proof of concept, such as facilitating

bandwidth, error correction and data flow control.

Layer 3 represents compatibility. If two integrating

technologies do not use the same interpretable data

constructs or a common language, then they cannot

exchange information. Layer 4 represents a data

integrity check. There is sufficient detail in the

quality and assurance of the integration between

technologies, which means that the data sent are

those received and there exists a checking

mechanism. In addition, the data could be changed if

part of its route is on an unsecured medium (cf.

realizations (Beasley, 2009) and understanding of

layers (Sauser et al., 2010)). In Figure 2, IRL layer 5

represents integration control: establishing,

maintaining and terminating integration, for

example, possibilities to establish integration with

other nodes for high availability or performance

pressures. Layer 6 represents the interpretation and

translation of data, specifying the information to be

exchanged and the information itself as well as the

ability to translate from a foreign data structure to a

used one. Layer 7 represents the verified and

validated integration of two technologies, such as

the integration achieving performance, throughput

and reliability requirements. Layers 8 and 9 describe

operational support and proven integration with a

system environment, corresponding to levels 8 and 9

of the TRLs (Sauser et al., 2010). At IRL level 8, a

system-level demonstration in the relevant

environment can be performed (the system is

laboratory-test proven). Level 9 denotes that the

integrated technologies are being successfully used

both in the system environment and operations (see

also Tan et al. (2011)).
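
The nine levels described above can be summarized in a small data structure. The following Python sketch is illustrative only: the member names are shorthand labels chosen here and are not terms defined by Sauser et al. (2010), and the rule that a level counts only when all lower levels are satisfied is an assumption of the sketch, not a statement from the reviewed literature.

```python
from enum import IntEnum
from typing import Iterable


class IRL(IntEnum):
    """Integration readiness levels as summarized in the text (Figure 2).

    Member names are hypothetical shorthand labels for this sketch.
    """
    INTERFACE = 1                   # a medium for integration is defined
    INTERACTION = 2                 # technologies can influence each other
    COMPATIBILITY = 3               # common language or data constructs
    DATA_INTEGRITY = 4              # data sent are data received, with checks
    INTEGRATION_CONTROL = 5         # establish, maintain, terminate integration
    INTERPRETATION_TRANSLATION = 6  # interpret and translate exchanged data
    VERIFIED_VALIDATED = 7          # integration verified and validated
    SYSTEM_DEMONSTRATION = 8        # demonstrated in the relevant environment
    PROVEN_IN_OPERATIONS = 9        # used successfully in operations


def achieved_irl(satisfied: Iterable[IRL]) -> int:
    """Return the highest level reached without skipping a lower level.

    Assumption of this sketch: levels are strictly cumulative, so a gap
    at a lower level caps the result. Returns 0 if level 1 is not met.
    """
    met = set(satisfied)
    level = 0
    for candidate in IRL:  # iterates in definition order, 1 to 9
        if candidate not in met:
            break
        level = int(candidate)
    return level


# Levels 1, 2 and 4 are satisfied, but level 3 is missing.
print(achieved_irl([IRL.INTERFACE, IRL.INTERACTION, IRL.DATA_INTEGRITY]))  # prints 2
```

The cumulative rule is one possible reading; an assessment that treats some aspects as scales instead of levels would need a different aggregation.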

2.4 Combined Readiness Levels

This study has thus far shown that technology readiness and integration readiness metrics are two basic elements of the thinking, building, improving and testing of information systems, networked or distributed integration and ontology. This view is furthered by combined system readiness level (SRL) metrics, which have been described as a function of the TRLs of technologies and the IRLs of integrations, as introduced by Sauser et al. (2006) and continued by Luna et al. (2013). SRL collects these metrics into a single metric defined on the basis of the amalgamation of other existing readiness levels, thus providing a method to chain different readiness level metrics. An aspect of SRL’s significance is that it gives credibility to the quantitative collection of readiness levels and opens possibilities to expand SRLs by incorporating other readiness-level and validity metrics, such as the manufacturing readiness level, software readiness level and information systems maturity as well as validity on an overall scale (see also Tan et al. (2011)).
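
To make the idea of SRL as a function of TRLs and IRLs concrete, the following Python sketch combines the two metrics numerically. The text above does not state a formula; the normalized matrix form used here follows one formulation discussed in the SRL literature and should be read as an assumption of this sketch. The function name, the convention that 0 marks "no planned integration" and the diagonal value of 9 are likewise illustrative choices.

```python
from typing import List, Tuple


def system_readiness(trl: List[int],
                     irl: List[List[int]]) -> Tuple[List[float], float]:
    """Combine TRLs and IRLs into per-technology and composite SRL values.

    trl[j]    : TRL (1 to 9) of technology j.
    irl[i][j] : IRL (1 to 9) between technologies i and j, 0 if no
                integration is planned; irl[i][i] is set to 9 by
                convention in this sketch.
    Both scales are normalized to the range 0..1 before combining.
    """
    n = len(trl)
    per_technology = []
    for i in range(n):
        # Only integrations that exist contribute to technology i.
        links = [j for j in range(n) if irl[i][j] > 0]
        if not links:
            raise ValueError(f"technology {i} has no integration entries")
        total = sum((irl[i][j] / 9) * (trl[j] / 9) for j in links)
        per_technology.append(total / len(links))
    composite = sum(per_technology) / n
    return per_technology, composite


# Two technologies: a mature one (TRL 9) and a developing one (TRL 6),
# joined by an integration at IRL 3 (hypothetical values).
srl, composite = system_readiness([9, 6], [[9, 3], [3, 9]])
print([round(value, 3) for value in srl], round(composite, 3))
```

In this hypothetical example, the low IRL between the two technologies pulls both per-technology values well below the normalized TRL of the mature technology, which illustrates why integration maturity is assessed separately from technology maturity.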

In the context of EU CISE 2020, it is noteworthy

that the reviewed literature on readiness metrics has

similarities to a combination of decision-making

items, a component of pre-operational or pre-order

validation and procurement thinking. The integration

viewpoints can also be related to a modular

implementation strategy as an approach that

addresses challenges related to the mobilization,

steering and organization of multiple stakeholders in

wide-scale R&D collaboration. Here, the focus is on

the challenges of realizing large-scale technological

and information-intensive systems, which are

understood not as standalone entities but as those

integrated with other information systems,

communication technologies and technical and non-

technical elements in the domain of national and

global information sharing and integrated

infrastructures. This also includes the fact that an

integrated system can be a shared system in a

network of shared information (cf. building

nationwide information infrastructures (Aanestad

and Jensen, 2011) and the case of building the

Internet (Hanseth and Lyytinen, 2010)).

2.5 Operational Validation

In this study, information systems validation is an approach that an individual or institution takes with respect to a specific validation and that depends on, for example, the rules, guidance, literature, regulation, standards, agreements, best practices and characteristics of the system being validated. The validation

processes are used to determine whether the

improved or developed service or product meets the

requirements of the activity and whether the service

or product satisfies its intended use and collectively

understood needs. The validation processes have

similarities with methodological validation in a

grounded approach (Corbin and Strauss, 2008) and

especially, triangulation (Campbell and Fiske,

1959).

In this study, there are certain similarities

between the activities performed in practical

validation and the type of documented information

produced for the validity of integrated information

systems. One way to obtain an understanding of

these practices in the analysed cases is to examine

the canonical documents and standards accumulated

in the practices of the actors in question and their

customer networks (Davison et al., 2004). Examples

of such documents include the following:

requirements specifications; field regulations;

validation plans; project plans; supplier audit

reports; functional specifications; design

specifications; task reports; risk assessments;

infrastructure qualifications; operational

qualifications; standard operating procedures;

performance qualification; security qualification;

and validation descriptions, reports and plans.

3 METHODOLOGY

First, we decided whether to continue with a case

analysis or cross-case analysis (Patton, 1990). The

first two pilot studies (Pirinen et al., 2014) were

conducted on integration projects in the context of

industrial solutions and operative systems:

Utilization of the Integration Readiness Level in the

Context of Industrial System Projects (Sivlén and

Pirinen, 2014); and Utilization of the Integration

Readiness Level in Operative Systems (Mantere and

Pirinen, 2014).

We began with a case analysis, which involved

writing a case study for each integrated unit. These

results are documented and reported and comprise a

research data continuum (cf. The Art of Case Study

Research (Stake, 1995) and the description of

multiple cases in Yin (2009)).

As a research continuum, this study employs a

complementary case analysis, which means

grouping together answers to various common

questions and analysing different perspectives on

central issues (Eisenhardt, 1989). In particular,

formal and open-ended interviews were used (Sauser

et al., 2010). Then, for the final cross-analysis, this

case study fits a cross case of each interview

question with a guided approach. Answers from

different interviews are grouped by topic as per

relevant data from the guide, which will not be

found in the exact same place in each note and open-

ended segment of the interviews (Robson, 2002).

The selection of interviews constitutes descriptive

analytics, as mentioned in Patton (1990) (p. 376).

In this study, a summary list of research

attributes was made to validate and describe the

methodological rigor in the performed case study

(Dubé and Paré, 2003). While the level of achieved

methodological rigor has been used in different

cases with respect to specific attributes, the overall

assessed rigor can be extended and improved (cf.

Davison et al. (2004)). The list of included

attributes was mainly extended from Dubé and Paré

(2003).

The main research attributes of this study are as follows: 1) title of the study: Towards Common Information Sharing: Studies of Integration Readiness Levels (IRLs); 2) research question: ‘How can IRL metrics be understood and realized in the domain of EU CISE 2020?’; 3) unit of analysis: an experience of information systems integration that is implemented, well documented and experienced; 4) importance of the study: contributes to research on IRLs and related development of the ISO/DIS 16290 standard series in EU CISE 2020; 5) methodological focus: continuum of case study analysis, including triangulation and final cross-analysis; 6) analysis form: mainly a qualitative analysis, saturation and triangulation; 7) research target: information service-system dissemination; 8) data collection methods: questions (n = 10) and interviewees (n = 6) (the research data were recorded, coded, reduced, archived and translated from Finnish to English); and 9) LimeSurvey questionnaires by ISDEFE, used to assess integration activities on a system maturity scale to evaluate a system (questionnaires and comparison of research findings were based on Sauser et al. (2010)).

4 RESEARCH FINDINGS

In this study, evaluation is understood as an

approach that an individual or institution takes with

respect to the specific verification of information-

intensive services or systems that depend on rules,

guidance, literature, regulation, standards,

agreements, best practices, trust management, risks,

confidence and system characteristics. In this study,

operational validation processes were related to

determining whether the improved or developed

service or product meets the requirements of the

shared operational activity and whether the service

or product satisfies its intended use and

collectively understood needs.

The study addresses the validation and utility of ISO standardization mainly related to the ISO DIS 16290 and interconnections as follows: 1) improvements in the metrics for information systems integration such as IRL metrics; 2) advances in global procurement management such as increased trust and confidence in agreements and descriptions; 3) pre-operational validation in information systems investigations such as improved common ontology; 4) progress in operational validation in information systems implementation; 5) findings of methodological implications for the implementation of IRLs as a description of the analysed categories; 6) usefulness to information systems sharing and interconnections in which integration is demanding; 7) expansion of large, networked information-intensive services that can extend shared solutions and routes of big data utilization as well as common global information sharing; and 8) educational advances and challenges in research-related learning in higher education functions, especially in the case of shared university functions across national borders in the European Union.

This study found that the current form of IRL

metrics is useful for integration purposes and

realizations on an overall scale. However, IRL

metrics (Sauser et al., 2010) were not understood as

a complete solution to integration maturity

determination but rather as a specific operational

validation path and a tool for communication between

all the project’s critical parties and for mutual

confidence and trust, such as in pre-order validation.

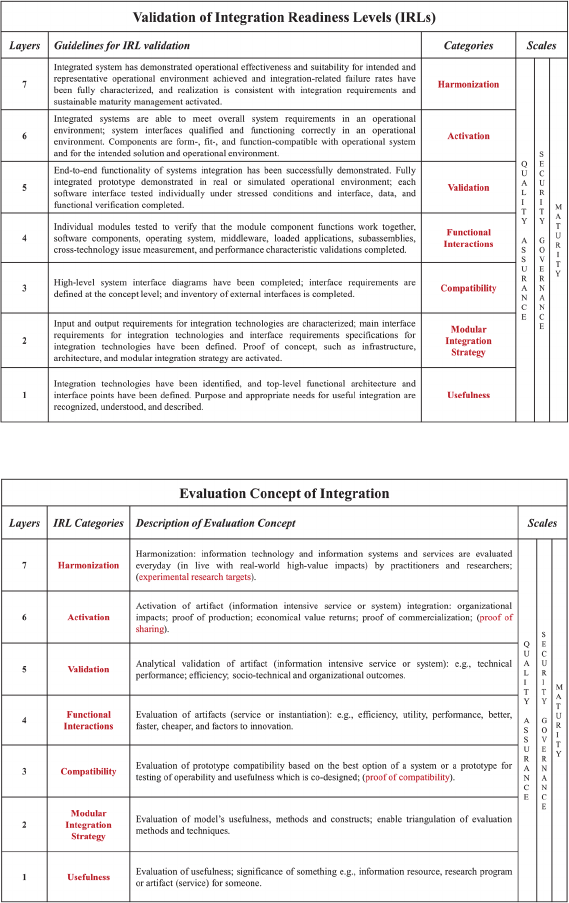

Figure 3: Validation of integration readiness levels (revised from Pirinen, 2014).

Figure 4: A proposal for evaluation of integration.

When IRL metrics were used at an acceptable

level, they contributed to the project’s goals in the

designated time schedule and significant strength in

the integration was achieved. The first reflected

research finding was that some criteria of reference

by Sauser et al. (2010) are more useful than others

and the most important criteria could be either

inserted at the beginning of the criteria list at each

level or marked in some way such that users pay

more attention to them. The second finding was that

integration quality, security governance and maturity

appear as scales rather than levels (see the described

scales in Figures 3 and 4).

This first IRLs validation guideline for information systems integration is described in Figure 3, and the evaluation concept is described in Figure 4. The evaluation of usefulness denotes the significance of, for example, information resources, research programs or artifacts as the service of an information system (see usefulness level 1 in Figures 3 and 4). Evaluation at level 1 (Figure 4) focuses on the systematic determination of merit, worth and significance.

The study revealed that, in the shared system

context, IRL metrics can provide a common

language and a method that improves the

organizational communication of scientists,

engineers, management and any other integration

stakeholders within documented systems

engineering guidance and overall confidence.

However, one difficulty is that the IRL criteria can

be interpreted in multiple ways: it would be easier if

expressions were more formal and more elaborate,

for example, the types of activities needed. On the

other hand, integrations included diversity and it was

found that descriptions should include more case-

sensitive data: there needs to be a place for criteria

inserted by users. In other words, the questionnaires

by Sauser et al. (2010) are appropriate but should be

left open-ended for resiliency and trust-related

aspects. Therefore, plan, purpose and usefulness are

placed in the first layer as category usefulness in

Figures 3 and 4.

Thus, IRL questionnaires should be

complemented with an expanded checklist that

would allow for the removal of subjectivity in many

of the maturity metrics. It was also found that each

IRL metric may have been differently interpreted by

the participants and some decision criteria may

belong to a different IRL scale, thereby altering its

criticality. The study revealed that some of the

presented criteria belonged to a test lab environment;

this can be improved by adding descriptions of them

to the questionnaire or creating a sheet for the test

lab to avoid conflicts when moving integration to

production. This indicates that the scale for the pre-

operational validation concept depends on the case,

development path and system architecture. Then,

the use of a modular strategy and the alignment of attributes

for operational validation were considered because

the speed and diversity of applied technological

development are high even on a three-year scale. A

modular integration strategy is described in level 2

(Figures 3 and 4).
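
The findings above suggest three practical properties for an IRL checklist: important criteria should be shown first, users should be able to add case-specific criteria, and criteria that belong only to a test lab should be kept apart from production assessment. The following Python sketch shows one way such a checklist could be represented. All class, field and function names, as well as the example criteria, are hypothetical and are not taken from the questionnaires of Sauser et al. (2010).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Criterion:
    """One checklist item attached to an IRL level (hypothetical model)."""
    text: str
    irl_level: int
    important: bool = False      # marked so that users notice it first
    user_defined: bool = False   # case-specific item added by a user
    test_lab_only: bool = False  # applies to the test lab, not production
    met: bool = False


def ordered_for_review(criteria: List[Criterion],
                       production: bool = True) -> List[Criterion]:
    """Order criteria by level, with important items first in each level.

    When production is True, items that belong only to the test lab are
    left out. The sort is stable, so the original order is kept among
    items of equal level and importance.
    """
    visible = [c for c in criteria
               if not (production and c.test_lab_only)]
    return sorted(visible, key=lambda c: (c.irl_level, not c.important))


checklist = [
    Criterion("Interaction verified over the chosen medium", 2),
    Criterion("Interface medium documented", 1),
    Criterion("Owner of each interface agreed", 1, important=True),
    Criterion("Lab network mirrors the target topology", 1,
              user_defined=True, test_lab_only=True),
]
for item in ordered_for_review(checklist):
    print(item.irl_level, item.text)
```

Keeping user-defined and test-lab criteria as flags on the same record, instead of in separate lists, is a design choice of this sketch; it lets one checklist serve both the test lab and the production assessment.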

In Figures 3 and 4, the compatibility category

includes high-level system interface diagrams that

have been completed in an integration project, where

interface requirements and an inventory of external

interfaces are defined at the concept level. The proof

of the functional interactions phase comprises the

testing of individual modules to verify that the

module component functions work together and

software components, the operating system,

middleware, loaded applications, subassemblies,

cross-technology issue measurement and

performance characteristic validations are

completed. Here, the evaluation of prototype

compatibility can be based on the best option of a

system or prototype to test operability and

usefulness as collectively designed. In Figures 3 and 4,

the final systems validation for IRLs between layers

five and seven and activation follow Sauser et al.

(2010) and the OSI model. This includes an

evaluation of artifacts, such as the service or

information system; an evaluation of efficiency,

utility, performance and better, faster, cheaper

factors and functions of innovation; analytical

validation of artifacts, such as the service or

information system (e.g. technical performance,

efficiency, simulation, formal verification, socio-

technical outcomes and organizational impacts); and

activation of artifacts such as service or information

systems and integration (e.g. proof of production,

value returns, proof of commercialization and real-

world and high-value impacts).

Finally, the harmonization category denotes that

operational effectiveness and suitability for the

operational environment, integration-related failure

rates and recovery from failure have been fully

characterized; the realization is consistent with

integration requirements; and sustainable maturity

functions have been activated for continuity

management. Information technology and systems

or services are evaluated on a daily basis with real-

world high-value impacts by practitioners and

researchers on harmonization and realization.

The maturity scale (Figures 3 and 4) comprises

the IRLs related to maturity, as described in Sauser

et al. (2010), and information systems’ continuous

management maturity, which is based on appropriate

requirements. The scale provides a model that

improves the continuity of information systems and

services. This viewpoint extends to the management

of solutions where the failure rate increases with

time. For example, this can be useful for system

recovery in the case of disruptions and interruptions

in production process-related systems.

In Figures 3 and 4, the quality assurance scale

describes procedures, processes and systems used to

guarantee and improve the quality of operations. In

this study, the quality assurance scale was used to

jointly define operation-enhancing and appropriate

procedures, methods and tools, and then to monitor

and develop operations in a systematic manner. In

this study, quality refers to the suitability of

procedures, processes and systems in relation to

strategic objectives such as integration strategy.

Quality assurance and related systems combine

knowledge-based structures with the body of

knowledge.

So far, prescriptive metrics such as IRLs have

been introduced and used in engineering

management to assess the integration progress and

success of engineering and related scientific

determinations.

IRL metrics, explored in this study, still face two

major challenges: human subjectivity and

confidence in data estimates. However, IRL metrics

are likely to be increasingly needed to

measure project and system integration and to

demonstrate the magnitude of achieved performance

and integration level while allowing for a successful

evaluation of integration and systems harmonization.
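
The subjectivity challenge noted above can be made visible with a small calculation. The following is a minimal sketch, not a procedure from this study: it assumes that several assessors rate the same integration on a nine-level IRL scale, and it reports a consensus level together with the spread between assessors. The scale bounds, the function name summarize_irl and the agreement tolerance are illustrative assumptions.

```python
from statistics import median_low

# Assumed scale bounds for illustration; not taken from this study.
IRL_MIN, IRL_MAX = 1, 9


def summarize_irl(ratings, tolerance=1):
    """Summarize several assessors' IRL ratings for one integration.

    Returns (consensus, spread, agreed), where consensus is the lower
    median (so it is always a level someone actually gave), spread is
    the gap between the highest and lowest rating, and agreed is True
    when the spread does not exceed the tolerance.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    for r in ratings:
        if not isinstance(r, int) or not IRL_MIN <= r <= IRL_MAX:
            raise ValueError(f"rating {r!r} is outside {IRL_MIN}..{IRL_MAX}")
    consensus = median_low(ratings)
    spread = max(ratings) - min(ratings)
    return consensus, spread, spread <= tolerance


if __name__ == "__main__":
    # Hypothetical ratings from four assessors of the same interface.
    print(summarize_irl([6, 7, 7, 5]))
```

A large spread does not say which assessor is right; it only flags integrations where the criteria or the evidence should be revisited before a level is recorded.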

5 DISCUSSION

The study has significant implications for further

discussion of common information sharing. The

results achieved so far do not necessarily address

sub-levels and utility levels, such as user interface or

security readiness, which are approached and

described here as scales. The success of integration

is highly dependent on users’ and actors’ experience

and understanding, e.g., the amount of work needed

for successful and sustainable integration, including

all necessary sub-solutions.

There are many reasons for future integration

progress and discussion: the number of systems,

interconnections and interface elements increases

over time; system complexity increases; and the

resulting integration becomes challenging to

maintain, e.g., because of the number of updates and

life cycles. During the evolution of information

systems, while each of the systems for digitalization

and integration may formally go through the

development process, e.g., IRL requirements, the

overall integration analysis, development and

corresponding requirements are clearly increasing

due to the following elements, which are ever more

present: 1) operational and managerial independence

of operations; 2) commercial value of data;

3) challenges of border and cultural aspects;

4) emergent strategies and behavior; 5) trust

building; and 6) evolutionary and development

path-dependency.
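
The observation that interconnections grow with the number of systems can be illustrated with a worst-case count. This is a minimal sketch under the assumption that every pair of systems may need its own point-to-point interface; architectures with shared gateways or adaptors would need fewer. The function name is hypothetical.

```python
def max_pairwise_interfaces(n_systems: int) -> int:
    """Upper bound on point-to-point interfaces among n systems.

    Assumes each unordered pair of systems may need one interface,
    which gives n * (n - 1) / 2.
    """
    if n_systems < 0:
        raise ValueError("number of systems cannot be negative")
    return n_systems * (n_systems - 1) // 2


if __name__ == "__main__":
    for n in (5, 10, 20):
        print(n, max_pairwise_interfaces(n))
```

Under this assumption, doubling the number of systems roughly quadruples the number of integrations that may each need their own readiness assessment.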

REFERENCES

Aanestad, M., Jensen, T. B., 2011. Building nation-wide

information infrastructures in healthcare through

modular implementation strategies. Journal of

Strategic Information Systems, 20, 161-176.

Beasley, J. S., 2009. Networking, Boston: Pearson

Education, 2nd edition.

Campbell, D. T., Fiske, D. W., 1959. Convergent and

discriminant validation by the multitrait-multimethod

matrix. Psychological Bulletin, 56, 81-105.

Corbin, J., Strauss, A., 2008. Basics of qualitative

research: Techniques and procedures for developing

grounded theory. Los Angeles: Sage Publications, 3rd

edition.

Davison, R. M., Martinsons, M. G., Kock, N., 2004.

Principles of canonical action research. Information

Systems Journal, 14, 65-86.

Dubé, L., Paré, G., 2003. Rigor in information systems

positivist case research: Current practices, trends, and

recommendations. MIS Quarterly, 27(4), 597-635.

Eisenhardt, K. M., 1989. Building theories from case

study research. Academy of Management Review,

14(4), 532-550.

Eisner, H., 2011. Systems engineering: Building

successful systems. San Rafael, California: Morgan &

Claypool.

Hanseth, O., Lyytinen, K., 2010. Design theory for

dynamic complexity in information infrastructures:

The case of building internet. Journal of Information

Technology, 25, 1-19.

Luna, S., Lopes, A., Tao, H., Zapata, F., Pineda, R., 2013.

Integration, verification, validation, test, and

evaluation (IVVT&E) framework for system of

systems (SoS). Procedia Computer Science, 20, 298-

305.

Mantere, E., Pirinen, R., 2014. Utilization of the

Integration Readiness Level in Operative Systems.

Proceedings of IEEE World Engineering Education

Forum (WEEF-2014), Dubai, United Arab Emirates,

726–735.

Patton, M., 1990. Qualitative evaluation and research

methods, London: Sage Publications, 2nd edition.

Peterson, L. L., Davie, B. S., 2012. Computer networks: A

systems approach, Burlington: Elsevier, 5th edition.

Pirinen, R., 2014. Studies of Integration Readiness Levels:

Case Shared Maritime Situational Awareness System.

Proceedings of the Joint Intelligence and Security

Informatics Conference (JISIC-2014). The Hague,

Netherlands, 212–215.

Pirinen, R., Sivlén, E., Mantere, E., 2014. Samples of

Externally Funded Research and Development

Projects in Higher Education: Case Integration

Readiness Levels. Proceedings of IEEE World

Engineering Education Forum (WEEF-2014), Dubai,

United Arab Emirates, 691–700.

Robson, C., 2002. Real world research, Oxford: Blackwell

Publishing, 2nd edition.

Sauser, B., Gove, R., Forbes, E., Ramirez-Marquez, J.,

2010. Integration maturity metrics: Development of an

integration readiness level. Information Knowledge

Systems Management, 9(1), 17-46.

Sauser, B., Verma, D., Ramirez-Marquez, J., Gove, R.,

2006. From TRL to SRL: The concept of systems

readiness levels. Conference on Systems Engineering

Research, Los Angeles.

Sivlén, E., Pirinen, R., 2014. Utilization of the Integration

Readiness Level in the Context of Industrial System

Projects. Proceedings of IEEE World Engineering

Education Forum (WEEF-2014), Dubai, United Arab

Emirates, 701–710.

Stake, R., 1995. The art of case study research, Thousand

Oaks: Sage Publications.

Tan, W., Ramirez-Marquez, J., Sauser, B., 2011. A

probabilistic approach to system maturity assessment.

Systems Engineering, 14(3), 279-293.

Yin, R. K., 2009. Case study research design and methods,

Thousand Oaks: Sage Publications, 4th edition.

Zimmermann, H., 1980. OSI reference model: The ISO

model of architecture for open systems

interconnection. IEEE Transactions on

Communications, 28(4), 425-432.

ISE 2015 - Special Session on Information Sharing Environments to Foster Cross-Sectorial and Cross-Border Collaboration between Public

Authorities
