Gait-based Recognition for Human Identification using

Fuzzy Local Binary Patterns

Amer G. Binsaadoon and El-Sayed M. El-Alfy

College of Computer Sciences and Engineering, King Fahd University of Petroleum and Minerals,

Dhahran 31261, Saudi Arabia

Keywords:

Biometric, Human Identification, Gait Recognition, Local Binary Pattern, Fuzzy Logic.

Abstract:

With security breaches on the rise, automated gait recognition has gained importance in video surveillance technology. In this paper, we propose a method for human identification at a distance based on the Fuzzy Local Binary Pattern (FLBP). After the Gait Energy Image (GEI) is generated as a spatiotemporal summary of a gait video sequence, a multi-region partitioning is applied and FLBP-based features are extracted for each region. We also evaluate the performance under the variation of several factors, including viewing angle, clothing and carrying conditions. The experiments showed that GEI-FLBP with partitioning markedly enhances identification accuracy; for example, under normal walking the average accuracy rises from 74.80% with the holistic GEI to 97.53% with 10 regions.

1 INTRODUCTION

Biometric technology has witnessed great advances in

the past. However, common modalities such as face

and fingerprint require controlled working conditions

such as direct physical contact, closeness to acquisition devices, predefined views, and inevitable subject cooperation. Relatively recently, gait recognition has been shown to be an attractive alternative or complementary behavioral biometric. Gait is believed to

have a unique pattern for each person in normal con-

ditions. Moreover, it has the ability to mitigate the

above mentioned requirements. It can have a feasible

application in visual surveillance identification. Subjects are identified at a distance (e.g. 10 m) based on the way they walk. Gait recognition does not require

the subject under study to be close to the acquisi-

tion device or standing at a predefined viewing an-

gle. In gait-based systems, subjects can be identified

from low-resolution or infra-red images under differ-

ent conditions such as wearing coats, carrying objects,

or walking on different surfaces. A recent review of current techniques of gait recognition and modelling is presented in (Lee et al., 2014).

Human identification approaches based on gait can be either model-based or model-free. However, many of the research attempts are model-free due to the high computational cost and limited performance of model-based methods (Zhang et al., 2010).

Different model-free methods have been proposed in

the literature using various methods for feature extraction. One of the excellent methods for texture representation is local binary pattern (LBP), which was first proposed in 1996 and later generalized by Ojala et al. (2002).

It has been extensively utilized in a variety of re-

search fields and has demonstrated notable perfor-

mance (Brahnam et al., 2014). LBP has been used in

face identification and expression recognition (Aho-

nen et al., 2006; Zhao and Pietikainen, 2007). A

few attempts have been reported in the literature that

utilize LBP for gait recognition. For example, Kel-

lokumpu et al. (2009) proposed a new gait recogni-

tion method based on using LBP from Three Orthog-

onal Planes (LBP-TOP) that spatiotemporally repre-

sent the human movements. Hu et al. (2013) em-

ployed LBP to encode the motion flow information.

In these two examples, only crisp LBP was used and

was shown to be an effective texture representation.

However, to cope with uncertainties that may result

from noisy images, Iakovidis et al. (2008) incorpo-

rated fuzzy logic with LBP and named it FLBP.

In this work, we investigate the performance of

applying fuzzy local binary patterns (FLBP) to ex-

tract more discriminative gait features from the Gait

Energy Image (GEI) (Han and Bhanu, 2006a). GEI

overcomes storage and computation burden of tem-

poral model-free approaches by representing the hu-

man walking sequence in a single image conserving

motion temporal properties. We also study the performance for different numbers of non-overlapping partitions of GEI.

Binsaadoon, A. and El-Alfy, E-S. Gait-based Recognition for Human Identification using Fuzzy Local Binary Patterns. DOI: 10.5220/0005693103140321. In Proceedings of the 8th International Conference on Agents and Artificial Intelligence (ICAART 2016) - Volume 2, pages 314-321. ISBN: 978-989-758-172-4. Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The remainder of this paper is organized as follows.

Section 2 briefly reviews related work. Section 3 ex-

plains the proposed approach. Subsequently, experi-

mental work on CASIA B gait dataset is discussed in

Section 4. Finally, Section 5 concludes the paper.

2 RELATED WORK

Niyogi and Adelson (1994) presented an early at-

tempt to model the image sequence of a person in

spatiotemporal space dimensions. A model was fit

on the extracted subject’s contour and the model pa-

rameters were used for feature extraction. Lee and

Grimson (2002) divided the original silhouette into

seven parts, and extracted shape features consisting

of ellipse fitting parameters of each region. Bhanu

and Han (2002) estimated the human motion model

parameters using a least-square fit to project the 3D

kinematic motion into 2D silhouettes. Then, the esti-

mated parameters were used to extract gait features.

On the other hand, model-free approaches (Ran

et al., 2007; Ho et al., 2009; Zhang et al., 2010; Han

and Bhanu, 2006a; Chen et al., 2010; Nizami et al.,

2010) used static and dynamic components instead of

fitting a model for the human motion. The static com-

ponent reflects the shape and size of the person’s body

whereas the dynamic component reflects the move-

ment dynamics. Examples of static features include

height, width, stride length, and silhouette bounding

box lengths. Frequency and phase of movement are

examples of dynamic features.

Kale et al. (2003) proposed another algorithm to

track the walker and extract his/her canonical pose.

They used optical flow to discover the walking angle and then warped the image to the new canonical pose projection. The height and leg dynamics features were used. This resulted in an encouraging recognition rate using the baseline algorithm of Sarkar et al. (2005).

Some other gait recognition approaches used the

period of gait cycles as gait features. Ran et al.

(2007) used two different methods to extract the pe-

riod: Maximal Principal Gait Angle (MPGA) and

the Fourier transform. They used the input and out-

put signals generated by Voltage Controlled Oscilla-

tor (VCO) to get the cycle period as the phase differ-

ence of the two signals. Ho et al. (2009) used both

static and dynamic features to determine the gait cy-

cle period. Static features were the motion vector his-

tograms and the dynamic features were the Fourier

descriptors. They used Principal Component Analy-

sis (PCA) and Multiple Discriminant Analysis to re-

duce the feature dimensionality. For the recognition

process, they used the nearest neighbor classifier.

Kale et al. (2002) used the width vector feature

analysis proposed in (Kale et al., 2004) to identify

humans through their gaits. Width vector is the dif-

ference between the left and right boundaries in the

binary silhouette representation space. As a classifier,

they used a Hidden Markov Model (HMM) for recognition. The main drawback of their approach is that it requires a large amount of training data (more than 5,000 samples), which is not practical in gait applications where the data is very limited. Moreover, HMM performance is sensitive to parameter initialization, such as the number of states. Also, the viewing angle affects the overall recognition performance.

Zhang et al. (2010) proposed a new gait fea-

ture representation and called it Active Energy Image

(AEI). AEI shows the actively moving regions. Suc-

cessive frames are subtracted from each other and all

differences are then summed and normalized. AEI re-

duces the effect of noise on the silhouette images. The

authors applied two-dimensional Locality Preserving

Projections (2D-LPP) to reduce dimensionality. They achieved a high recognition rate on the CASIA B dataset.
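The frame-differencing idea behind AEI can be sketched in a few lines of Python. This is a minimal illustration only, not the implementation of Zhang et al. (2010): the function name `active_energy_image`, the use of absolute differences, and the normalization by the number of frame pairs are assumptions made for this sketch.

```python
def active_energy_image(frames):
    """Sum absolute differences of successive binary frames and normalize.

    `frames` is a list of equally sized silhouettes (lists of rows of 0/1).
    Normalizing by the number of frame pairs is an assumption of this sketch.
    """
    if len(frames) < 2:
        raise ValueError("at least two frames are required")
    height, width = len(frames[0]), len(frames[0][0])
    acc = [[0] * width for _ in range(height)]
    for prev, cur in zip(frames, frames[1:]):
        for y in range(height):
            for x in range(width):
                acc[y][x] += abs(cur[y][x] - prev[y][x])
    pairs = len(frames) - 1
    return [[value / pairs for value in row] for row in acc]


# Toy 2x3 silhouettes (hypothetical data).
frames = [
    [[0, 1, 0], [1, 1, 0]],
    [[0, 1, 1], [1, 1, 0]],
    [[0, 1, 0], [0, 1, 0]],
    [[0, 1, 0], [0, 1, 1]],
]
aei = active_energy_image(frames)
```

Pixels that never change, such as the always-on centre column in the toy data, get a value of zero, while pixels that toggle between frames get larger values.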

Wang et al. (2002) combined static and dynamic

features to achieve high accuracy on the Soton gait

database. The two-dimensional silhouette was converted into a one-dimensional distance signal. For each silhouette, the distance from the origin to predefined points on the boundary of the silhouette was computed to represent the dynamic features. All distance signals were normalized using the magnitude and then subjected to eigen-based analysis for dimensionality reduction. Features like height and aspect ratios of

the silhouette were used as static features and com-

bined with the dynamic features to get the benefits of

both. For recognition, a nearest neighbor technique

was used.

Lee (2001) divided the binary silhouette of a walk-

ing human into seven elliptical-shaped regions. The

walking person was perpendicular to the image plane.

A view- and appearance-based approach was used to transform the person image into the image plane. Features were extracted from the seven ellipses in the form of parameters. However, the parameters were sensitive to noise and it was difficult to find the periodicity using these features. As an efficient solution, the mean and standard deviation of the features were computed to be used as the final summary features.

Han and Bhanu (2006a) proposed a new effective

method to summarize the silhouette sequence spa-

tiotemporally into a Gait Energy Image (GEI). Gait

cycle was extracted from the gait sequence of silhou-

ette and then all involved frames were summed and

normalized to get the GEI image. GEI describes how motion proceeds and which regions are more involved in motion; the intensity of each pixel reflects how frequently the silhouette occupies that pixel during the cycle. Several gait recognition approaches relied on features extracted from GEIs (Li et al., 2012; Huang et al., 2013;

Wang et al., 2014; Mansur et al., 2014). However,

they used reduced-dimensionality GEIs or applied the

feature extraction algorithm on the holistic GEI.

Chen et al. (2010) proposed a dimensionality re-

duction method called tensor-based Riemannian man-

ifold distance-approximating projection (TRIMAP).

A graph was constructed from the given data in a

way that preserves the geodesic distance between

data points. Then, the graph was projected into a

lower dimensional space by tensor-based optimiza-

tion methods. The authors used Gabor filter to ex-

tract features from GEI representation of gait image

sequences and applied their dimensionality reduction

on the extracted features.

Nizami et al. (2010) divided the whole gait se-

quence into subsets and derived their own summariza-

tion method called Moving Motion Silhouette Images

(MMSI) for each subset. Independent Component

Analysis (ICA) was used for dimensionality reduc-

tion purpose. Probabilistic Support Vector Machine

(SVM) was used to classify the independent compo-

nents. They evaluated the method on CASIA A and

SotonBig datasets.

Abdelkader (2002) tracked the walker in video

surveillance using bounding boxes. The frequency of

walking and stride length were then extracted through

these bounding boxes. To reduce the effect of the pose

of the walker, the height was included as a feature as

well. The recognition rate was 51% and enhanced to

65% using 2-dimensional and 4-dimensional feature

vectors respectively.

Lu and Zhang (2007) proposed a fusion strat-

egy to improve the classification performance in gait-

based human identification. Three features were used:

Fourier descriptor, wavelet descriptor, and pseudo-

Zernike moment. First, the silhouettes were extracted

and binarized. Then, the three types of features were

extracted from the binary silhouettes and ICA was

used for dimensionality reduction. The authors per-

formed the fusion on the decision level not the feature

level. The match scores for each feature in each view

were fused using the product of sum fusion strategy.

Genetic fuzzy SVM (GFSVM) was used as the clas-

sifier. The experiments were conducted on CASIA A

(20 subjects) and AUXT (50 subjects) datasets. Each

subject has 3 different views and 4 sequences in each

view. They achieved 95% recognition rate.

Figure 1: Gait recognition framework.

3 METHODOLOGY

In this section, we describe the proposed methodol-

ogy for gait recognition. Figure 1 shows an outline of

the proposed framework. Our approach is based on

the generation of GEI and applying FLBP to extract

effective features. However, unlike earlier GEI-based

approaches for gait recognition, which mainly utilize

the holistic GEI image, our approach applies FLBP

feature extraction in non-overlapped regions.

3.1 Motion Sequence Representation

Human silhouettes are extracted for various motion

frames by background subtraction and thresholding,

shadow elimination, morphological postprocessing

and normalization. GEI image is then calculated to

represent the motion sequence of a particular cycle in

a single image while preserving the temporal infor-

mation. The formula to calculate GEI is as follows

(Han and Bhanu, 2006b):

$$G(x,y) = \frac{1}{M} \sum_{t=1}^{M} B(x,y,t) \tag{1}$$

where M is the number of frames in a complete cycle of the silhouette sequence, x and y are the spatial coordinates, and B(x,y,t) is the binary silhouette of the t-th frame.
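Equation (1) is a pixel-wise average over the frames of one gait cycle. A minimal Python sketch is given below, assuming the silhouettes are already extracted, aligned and size-normalized; the function name `gait_energy_image` and the toy frames are hypothetical.

```python
def gait_energy_image(frames):
    """Pixel-wise mean of M binary silhouettes, following Eq. (1).

    `frames` is a list of M equally sized silhouettes (lists of rows of 0/1).
    """
    if not frames:
        raise ValueError("at least one silhouette frame is required")
    m = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    total = [[0] * width for _ in range(height)]
    for frame in frames:
        for y in range(height):
            for x in range(width):
                total[y][x] += frame[y][x]
    # Divide the accumulated counts by the number of frames M.
    return [[count / m for count in row] for row in total]


# Toy cycle of M = 4 silhouettes of size 2x3 (hypothetical data).
frames = [
    [[0, 1, 0], [1, 1, 0]],
    [[0, 1, 1], [1, 1, 0]],
    [[0, 1, 0], [0, 1, 0]],
    [[0, 1, 0], [0, 1, 1]],
]
gei = gait_energy_image(frames)
```

Each GEI value lies in [0, 1] and equals the fraction of frames in which the silhouette covers that pixel.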

3.2 Feature Extraction

3.2.1 Crisp Local Binary Patterns (LBP)

The crisp form of local binary patterns uses the prop-

erties of the neighborhood pixels to describe each

pixel. It is computationally simple, efficient, resistant

to gray level changes made by lighting variations. It

ICAART 2016 - 8th International Conference on Agents and Artificial Intelligence

316

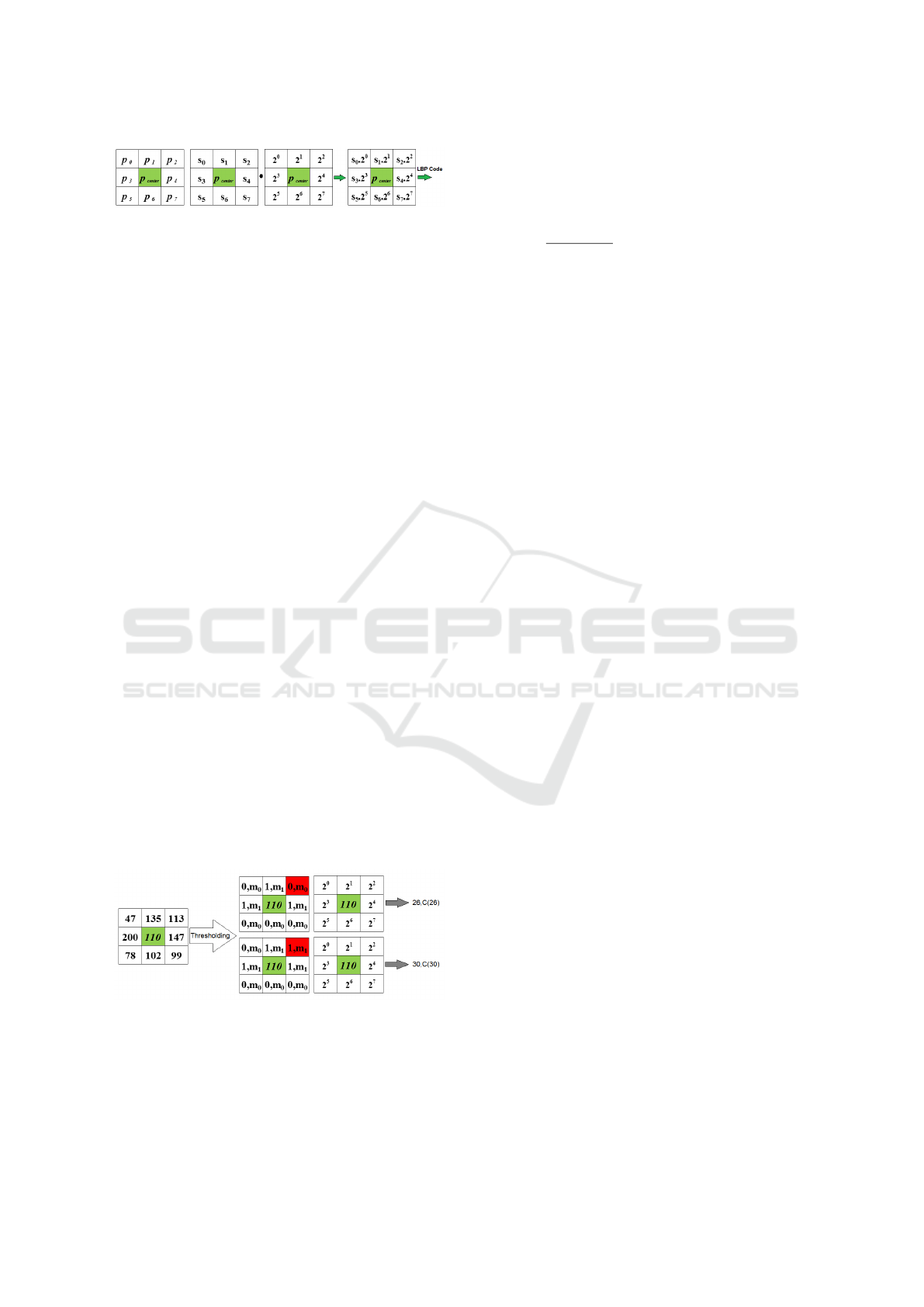

Figure 2: An example of LBP computation.

has the ability to capture fine texture details. The main

idea behind LBP is to extract the local micropatterns

in an image and to describe their distribution through

a histogram (in our case we used 256 bins). Each

pixel in the image p

center

is compared to its neighbor-

ing pixels p

i

and an LBP code is computed as follows

(Brahnam et al., 2014):

$$LBP_{center} = \sum_{i=0}^{N-1} s(p_i - p_{center})\, 2^i \tag{2}$$

where N is the number of neighboring pixels and s(x) is an indicator function such that s(x) = 1 if x ≥ 0 and s(x) = 0 otherwise. After passing the operator over

the whole image or block, a 256-bin histogram of the

binary patterns is constructed to be used as the feature

vector. Figure 2 shows an illustrative example of LBP

computation with N = 8.
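The LBP code of Equation (2) and the resulting 256-bin histogram can be sketched as follows. This is an illustrative Python sketch, not the authors' Matlab code: the neighbor ordering in `NEIGHBOURS` (which neighbor maps to which bit) and the choice to skip border pixels are assumptions, and the 3 × 3 patch is hypothetical.

```python
# (dy, dx) offsets of the N = 8 neighbours; bit i belongs to NEIGHBOURS[i].
# This clockwise ordering is an assumption of the sketch.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, y, x):
    """Crisp LBP code of pixel (y, x), following Eq. (2)."""
    center = image[y][x]
    code = 0
    for i, (dy, dx) in enumerate(NEIGHBOURS):
        if image[y + dy][x + dx] - center >= 0:  # s(p_i - p_center) = 1
            code += 1 << i                       # add 2^i
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for y in range(1, len(image) - 1):
        for x in range(1, len(image[0]) - 1):
            hist[lbp_code(image, y, x)] += 1
    return hist


# Hypothetical 3x3 gray-level patch with centre value 6.
patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
code = lbp_code(patch, 1, 1)
hist = lbp_histogram(patch)
```

With this ordering, the neighbors that are greater than or equal to the centre set bits 0, 4, 5, 6 and 7, so the patch maps to code 241 and contributes one count to that single bin.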

Despite the good characteristics of crisp LBP in

representing textures, it cannot handle all the machine

learning related problems. It uses hard thresholding

in computing its code and thus is more sensitive to

noise and has less discrimination power. Also, the

classifiers used directly affect the performance.

3.2.2 Fuzzy Local Binary Patterns (FLBP)

Fuzzy LBP (FLBP) (Iakovidis et al., 2008) incorpo-

rates fuzzy logic with LBP in order to alleviate the

effect of noise on LBP and increase its distinguishing capability. The difference between crisp LBP and Fuzzy LBP is that in FLBP each pixel can be characterized by more than one LBP code, which in turn contributes to more than one bin of the FLBP histogram.

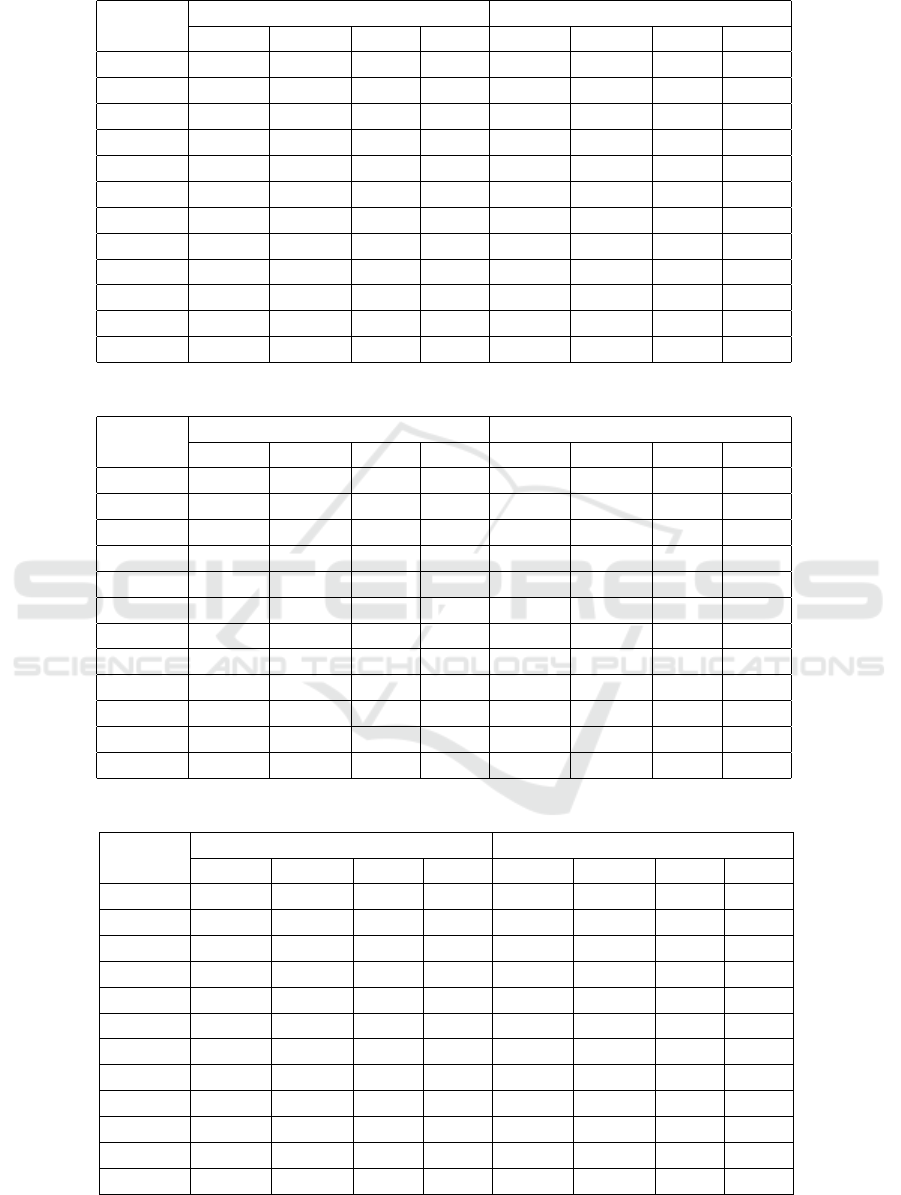

Figure 3: An example of FLBP computation.

An example of FLBP computation is shown in Figure 3. Two membership functions, $m_1()$ and $m_0()$, are computed, which indicate to what extent a neighboring pixel $p_i$ has a greater or smaller gray value than $p_{center}$, respectively. T is the threshold parameter that controls the degree of fuzziness. In our experiments, T is set to 5. The membership functions are calculated as follows:

$$m_0(i) = \begin{cases} 0 & p_i \geq p_{center} + T \\ \dfrac{T - p_i + p_{center}}{2T} & p_{center} - T < p_i < p_{center} + T \\ 1 & p_i \leq p_{center} - T \end{cases} \tag{3}$$

$$m_1(i) = 1 - m_0(i) \tag{4}$$

Unlike LBP, each 3 × 3 neighborhood can be characterized by more than one LBP code. The membership functions $m_1()$ and $m_0()$ are used to determine the contribution of each LBP code to a single bin of the FLBP histogram. The contribution of each LBP code is defined as follows:

$$C(LBP) = \prod_{i=0}^{7} m_{s_i}(i) \tag{5}$$

where $s_i \in \{0, 1\}$ is the i-th bit of the LBP code. The sum of all contributions of a single 3 × 3 neighborhood is always equal to unity as follows:

$$\sum_{LBP=0}^{255} C(LBP) = 1 \tag{6}$$

Crisp LBP histograms may have bins of zero

value. However, FLBP histograms have no zero-

valued bins and thus are more informative than the

crisp LBP.
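The fuzzy contributions of Equations (3) to (6) can be illustrated with a short Python sketch. It is a sketch under stated assumptions rather than the authors' implementation: the neighbor ordering in `NEIGHBOURS`, the skipping of border pixels, and the brute-force enumeration of all 256 codes are choices made here for clarity, and the 3 × 3 patch is hypothetical. The default threshold of 5 follows the value of T reported in the text.

```python
from itertools import product

# (dy, dx) offsets of the 8 neighbours; the ordering is an assumption.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
              (1, 1), (1, 0), (1, -1), (0, -1)]


def m0(p_i, p_center, t):
    """Membership of 'p_i is smaller than p_center', following Eq. (3)."""
    if p_i >= p_center + t:
        return 0.0
    if p_i <= p_center - t:
        return 1.0
    return (t - p_i + p_center) / (2.0 * t)


def flbp_contributions(image, y, x, t=5):
    """Map each LBP code with non-zero weight to its contribution, Eq. (5)."""
    center = image[y][x]
    memberships = []
    for dy, dx in NEIGHBOURS:
        zero = m0(image[y + dy][x + dx], center, t)
        memberships.append((zero, 1.0 - zero))  # (m_0(i), m_1(i)), Eq. (4)
    contributions = {}
    for bits in product((0, 1), repeat=8):      # all 256 candidate codes
        weight = 1.0
        for i, s in enumerate(bits):
            weight *= memberships[i][s]
        if weight > 0.0:
            code = sum(s << i for i, s in enumerate(bits))
            contributions[code] = weight
    return contributions


def flbp_histogram(image, t=5):
    """256-bin FLBP histogram accumulated over all interior pixels."""
    hist = [0.0] * 256
    for y in range(1, len(image) - 1):
        for x in range(1, len(image[0]) - 1):
            for code, weight in flbp_contributions(image, y, x, t).items():
                hist[code] += weight
    return hist


# Hypothetical 3x3 gray-level patch with centre value 6.
patch = [[6, 5, 2],
         [7, 6, 1],
         [9, 8, 7]]
contrib = flbp_contributions(patch, 1, 1)
hist = flbp_histogram(patch)
```

In this patch, seven neighbors fall inside the fuzzy interval and one lies exactly T below the centre, so the single neighborhood spreads its unit mass over 128 codes instead of one.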

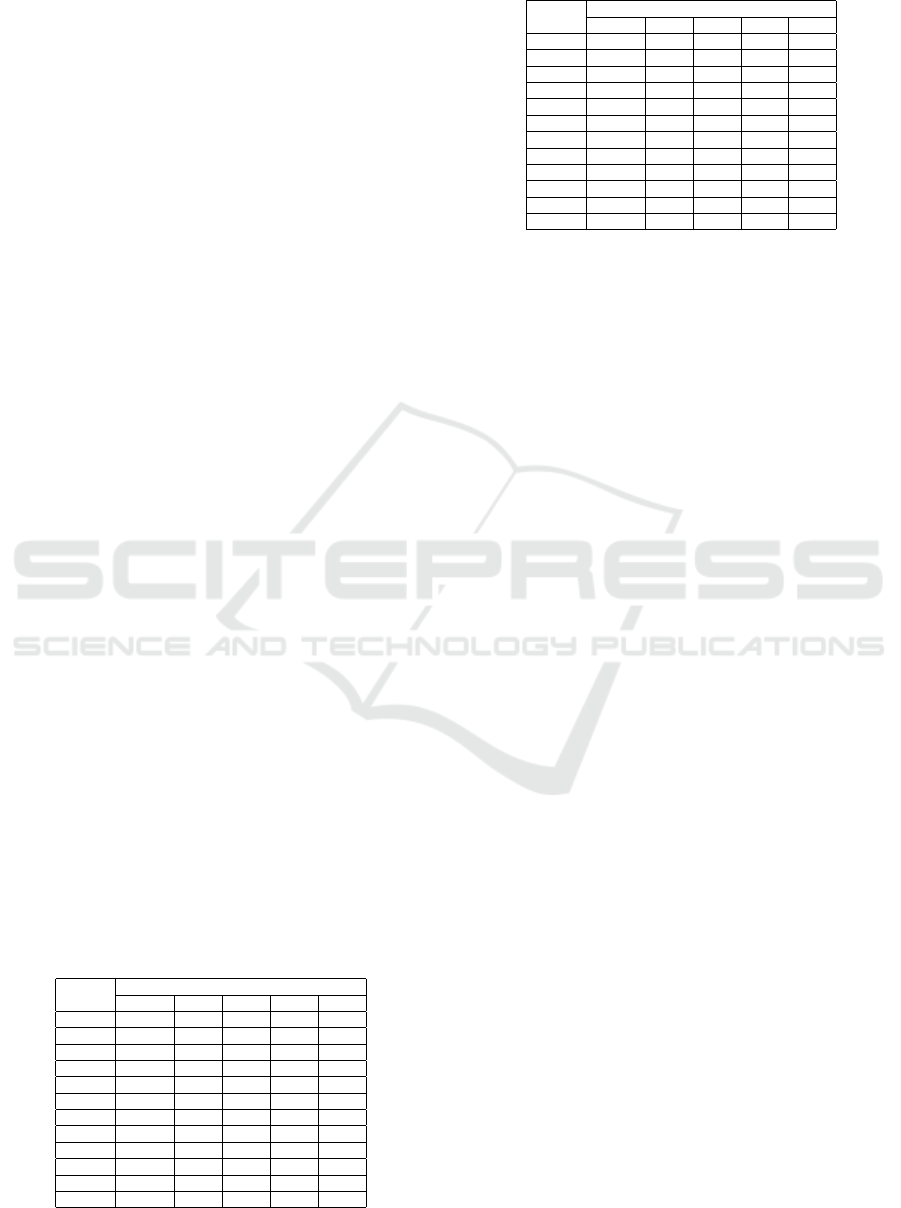

3.3 Partitioning

First, the GEI bounding box is automatically extracted as a preprocessing step, as illustrated on the left side of Figure 4. To enhance the performance of FLBP features,

we explored partitioning the GEI into different-sized

non-overlapping predefined regions. The partition-

ing has been conducted as a fraction of the subject’s

height and width and denoted by horizontal and ver-

tical lines. The underlying idea is to separate mov-

ing parts such as head, arms, legs, etc. After nor-

malization and alignment of GEI, we statically set

the boundaries between regions. For example, we set

the head part to include about 19% of the whole sub-

ject’s height. Figure 4 shows two examples of non-

overlapping partitioning into 7 and 5 regions. Differ-

ent partitioning scenarios are evaluated in our experi-

ments.
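A simplified view of the partitioning step is sketched below in Python. It only splits the GEI into horizontal bands defined as fractions of the subject's height, whereas the partitions described above also use vertical lines. Only the 19% head fraction comes from the text; the remaining fractions in `FRACTIONS`, the helper names, and the mean-intensity stand-in for the per-region FLBP histogram are hypothetical.

```python
def band_boundaries(height, fractions):
    """Turn height fractions (summing to 1) into row boundaries."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    bounds, cumulative = [0], 0.0
    for fraction in fractions:
        cumulative += fraction
        bounds.append(round(cumulative * height))
    bounds[-1] = height  # guard against rounding at the last boundary
    return bounds


def partition_rows(gei, fractions):
    """Split the GEI into non-overlapping horizontal bands."""
    bounds = band_boundaries(len(gei), fractions)
    return [gei[top:bottom] for top, bottom in zip(bounds, bounds[1:])]


def partitioned_features(gei, fractions, extract):
    """Concatenate the feature vectors extracted from every region."""
    features = []
    for region in partition_rows(gei, fractions):
        features.extend(extract(region))
    return features


def mean_intensity(region):
    """Stand-in extractor; an FLBP histogram would be used in practice."""
    pixels = [value for row in region for value in row]
    return [sum(pixels) / len(pixels)]


# Head = 19% of the height (from the text); other fractions are hypothetical.
FRACTIONS = [0.19, 0.31, 0.25, 0.25]
gei = [[float(y)] * 4 for y in range(100)]  # toy 100x4 "GEI"
regions = partition_rows(gei, FRACTIONS)
features = partitioned_features(gei, FRACTIONS, mean_intensity)
```

Passing the extractor as a parameter keeps the partitioning independent of the descriptor, so the same code could concatenate LBP or FLBP histograms per region.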

3.4 Gait Classification

In this stage, a support vector machine (SVM) clas-

sifier with a linear kernel is used for gait recognition

using the extracted feature vectors. There are several

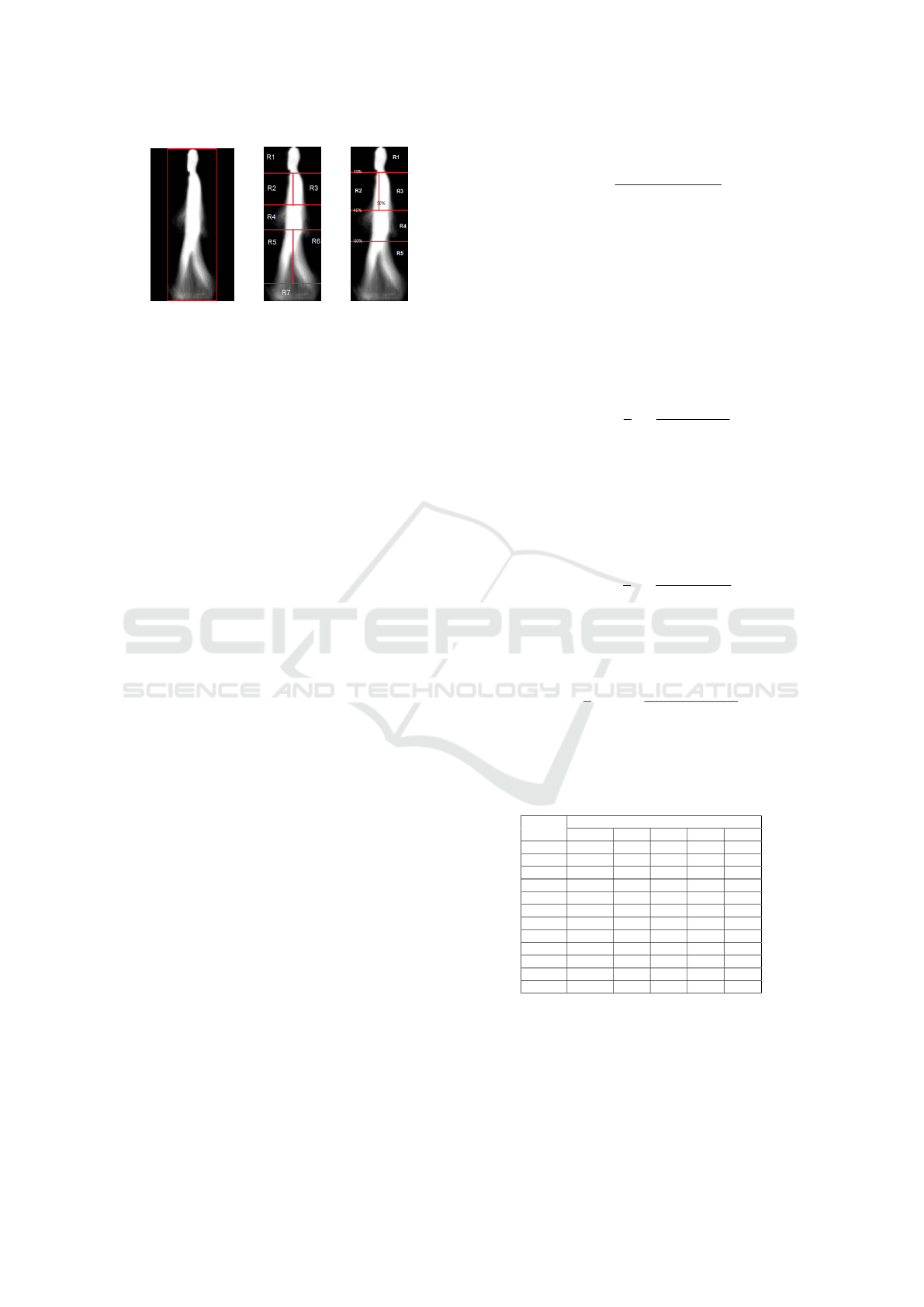

Table 1: Performance comparison of LBP and FLBP under Normal-Walking covariate without partitioning.

| Angle (°) | LBP PRE_avg | LBP REC_avg | LBP F_avg | LBP ACC | FLBP PRE_avg | FLBP REC_avg | FLBP F_avg | FLBP ACC |
|---|---|---|---|---|---|---|---|---|
| 0 | 54.00 | 56.00 | 52.00 | 56.90 | 75.00 | 74.00 | 72.00 | 74.14 |
| 18 | 68.00 | 66.00 | 64.00 | 66.81 | 79.00 | 78.00 | 76.00 | 78.45 |
| 36 | 60.00 | 60.00 | 57.00 | 60.35 | 69.00 | 67.00 | 65.00 | 67.24 |
| 54 | 56.00 | 56.00 | 53.00 | 56.90 | 74.00 | 74.00 | 71.00 | 74.14 |
| 72 | 70.00 | 68.00 | 67.00 | 68.54 | 74.00 | 75.00 | 73.00 | 75.43 |
| 90 | 72.00 | 73.00 | 70.00 | 73.28 | 79.00 | 78.00 | 76.00 | 78.02 |
| 108 | 68.00 | 69.00 | 66.00 | 68.97 | 75.00 | 76.00 | 73.00 | 76.29 |
| 126 | 62.00 | 62.00 | 60.00 | 62.50 | 77.00 | 76.00 | 74.00 | 75.86 |
| 144 | 62.00 | 61.00 | 58.00 | 61.21 | 76.00 | 75.00 | 73.00 | 75.00 |
| 162 | 67.00 | 69.00 | 65.00 | 68.97 | 80.00 | 78.00 | 76.00 | 77.59 |
| 180 | 54.00 | 57.00 | 52.00 | 57.33 | 73.00 | 71.00 | 70.00 | 70.69 |
| Avg. | 63.00 | 63.36 | 60.36 | 63.80 | 75.55 | 74.73 | 72.64 | 74.80 |

Table 2: Performance comparison of LBP and FLBP under Carrying-Bag covariate without partitioning.

| Angle (°) | LBP PRE_avg | LBP REC_avg | LBP F_avg | LBP ACC | FLBP PRE_avg | FLBP REC_avg | FLBP F_avg | FLBP ACC |
|---|---|---|---|---|---|---|---|---|
| 0 | 24.00 | 28.00 | 23.00 | 28.02 | 38.00 | 40.00 | 36.00 | 40.85 |
| 18 | 43.00 | 43.00 | 40.00 | 43.10 | 43.00 | 43.00 | 39.00 | 43.54 |
| 36 | 33.00 | 34.00 | 31.00 | 34.05 | 28.00 | 35.00 | 30.00 | 36.91 |
| 54 | 29.00 | 30.00 | 27.00 | 30.60 | 30.00 | 34.00 | 29.00 | 33.62 |
| 72 | 34.00 | 34.00 | 30.00 | 34.05 | 28.00 | 35.00 | 30.00 | 36.72 |
| 90 | 35.00 | 37.00 | 34.00 | 37.50 | 38.00 | 40.00 | 36.00 | 40.10 |
| 108 | 32.00 | 34.00 | 30.00 | 34.48 | 33.00 | 38.00 | 33.00 | 38.90 |
| 126 | 31.00 | 31.00 | 28.00 | 31.47 | 31.00 | 31.00 | 28.00 | 31.16 |
| 144 | 25.00 | 28.00 | 24.00 | 28.02 | 26.00 | 31.00 | 26.00 | 30.16 |
| 162 | 33.00 | 35.00 | 31.00 | 35.35 | 38.00 | 40.00 | 36.00 | 40.55 |
| 180 | 27.00 | 29.00 | 25.00 | 29.74 | 33.00 | 38.00 | 33.00 | 37.35 |
| Avg. | 31.45 | 33.00 | 29.36 | 33.31 | 33.27 | 36.82 | 32.36 | 37.26 |

Table 3: Performance comparison of LBP and FLBP under Wearing-Coat covariate without partitioning.

| Angle (°) | LBP PRE_avg | LBP REC_avg | LBP F_avg | LBP ACC | FLBP PRE_avg | FLBP REC_avg | FLBP F_avg | FLBP ACC |
|---|---|---|---|---|---|---|---|---|
| 0 | 7.00 | 9.00 | 7.00 | 9.91 | 8.00 | 11.00 | 8.00 | 11.33 |
| 18 | 7.00 | 9.00 | 7.00 | 9.91 | 14.00 | 16.00 | 13.00 | 16.50 |
| 36 | 13.00 | 15.00 | 13.00 | 15.95 | 16.00 | 18.00 | 14.00 | 17.62 |
| 54 | 16.00 | 18.00 | 15.00 | 18.10 | 17.00 | 20.00 | 17.00 | 20.64 |
| 72 | 11.00 | 16.00 | 12.00 | 16.38 | 17.00 | 20.00 | 17.00 | 20.36 |
| 90 | 12.00 | 15.00 | 12.00 | 15.09 | 18.00 | 21.00 | 17.00 | 21.07 |
| 108 | 10.00 | 13.00 | 10.00 | 13.79 | 14.00 | 16.00 | 13.00 | 16.50 |
| 126 | 14.00 | 17.00 | 14.00 | 17.24 | 16.00 | 18.00 | 14.00 | 18.52 |
| 144 | 9.00 | 10.00 | 8.00 | 10.78 | 10.00 | 14.00 | 10.00 | 14.81 |
| 162 | 7.00 | 10.00 | 6.00 | 10.78 | 11.00 | 13.00 | 11.00 | 13.91 |
| 180 | 8.00 | 11.00 | 8.00 | 11.21 | 11.00 | 13.00 | 11.00 | 13.91 |
| Avg. | 10.36 | 13.00 | 10.18 | 13.56 | 13.82 | 16.36 | 13.18 | 16.83 |

Figure 4: Bounding box and two examples of non-

overlapping partitioning of GEI with 7 and 5 regions.

implementations of SVM. In our study, we built our

model using LibSVM which implements one-against-

one for multi-class classification. If k is the number

of subjects under investigation, then k(k −1)/2 binary

classifiers are constructed. Each classifier is trained

on data belonging to two classes. Then a max-wins voting scheme is used to decide the predicted class. If there is a tie (more than one class has the identical maximum vote), the one with the smaller index is chosen. We could also use one-versus-all, but based on the comparisons conducted in (Hsu and Lin, 2002), the one-versus-one training time was shorter with high accuracy.
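The one-against-one decision rule described above can be sketched independently of any SVM library. In the Python sketch below, `pairwise_decide` is a hypothetical stand-in for a trained binary classifier, and the outcomes in `OUTCOMES` are invented toy values; the sketch shows only the vote counting and the smaller-index tie-break, not LibSVM itself.

```python
from itertools import combinations


def max_wins_vote(num_classes, pairwise_decide):
    """Max-wins voting over all k(k-1)/2 class pairs.

    `pairwise_decide(a, b)` must return the winning class, a or b.
    Ties are broken in favour of the smaller class index.
    """
    votes = [0] * num_classes
    pairs = list(combinations(range(num_classes), 2))
    for a, b in pairs:
        winner = pairwise_decide(a, b)
        if winner not in (a, b):
            raise ValueError("classifier must pick one of its two classes")
        votes[winner] += 1
    # list.index returns the first (smallest) index holding the maximum.
    predicted = votes.index(max(votes))
    return predicted, votes, len(pairs)


# Hypothetical pairwise outcomes for k = 4 subjects and one probe sample.
OUTCOMES = {(0, 1): 0, (0, 2): 2, (0, 3): 0,
            (1, 2): 1, (1, 3): 1, (2, 3): 2}

predicted, votes, num_classifiers = max_wins_vote(
    4, lambda a, b: OUTCOMES[(a, b)])
```

In this toy case, classes 0, 1 and 2 each collect two votes from the six pairwise classifiers, and the tie is resolved in favour of class 0.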

4 EVALUATION

4.1 Dataset

The proposed approach is evaluated on CASIA B, which is a large multiview gait database maintained by the Institute of Automation, Chinese Academy of Sciences (Yu et al., 2006). It includes sequence samples of 124 subjects (93 males and 31 females). Gait sequences for each subject were captured from 11 different views in an indoor environment with a simple background. Each subject was asked to walk 10 times along a straight line on concrete ground (6 normal walking, 2 wearing a coat, 2 carrying a bag). During each walk, 11 cameras captured the subject. Consequently, each subject has 110 video sequences and the database contains 110 × 124 = 13,640 video sequences in total.

4.2 Performance Measures

We used four measures to evaluate and compare the performance of gait recognition based on LBP and FLBP: accuracy, precision, recall and F-measure. The accuracy is calculated as follows:

$$ACC = \frac{\sum_{i=1}^{S} TP_i}{\sum_{i=1}^{S} (TP_i + FN_i)} \tag{7}$$

where S is the number of classes (i.e. subjects), $TP_i$ is the number of samples that are correctly predicted to be of class i, and $FN_i$ is the number of samples of class i that are incorrectly predicted to be of other classes. The precision measures the relevancy of results. In other words, it is the fraction of retrieved instances that are relevant. A high value of precision indicates a low false positive rate and shows that the classifier and features are more accurate. We used the average precision for all subjects, which is defined as follows:

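$$PRE_{avg} = \frac{1}{S} \sum_{i=1}^{S} \frac{TP_i}{TP_i + FP_i} \tag{8}$$

where $FP_i$ is the number of samples of other classes that are incorrectly predicted to be of class i.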

The recall measures how many relevant instances are correctly retrieved. A high value of recall indicates a low false negative rate and shows that the classifier is returning the majority of the positive instances. We used the average recall for all subjects, which is defined as follows:

$$REC_{avg} = \frac{1}{S} \sum_{i=1}^{S} \frac{TP_i}{TP_i + FN_i} \tag{9}$$

The F-measure is the harmonic mean of precision

and recall for each class. Then we used the average

F-measure as given by:

$$F_{avg} = \frac{1}{S} \sum_{i=1}^{S} 2 \cdot \frac{PRE_i \times REC_i}{PRE_i + REC_i} \tag{10}$$

where $PRE_i$ and $REC_i$ are the precision and recall for class i, respectively.
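Equations (7) to (10) can all be derived from a confusion matrix. The Python sketch below illustrates this on a hypothetical three-class example; the function name `evaluate`, the matrix values, and the convention of returning 0 when a denominator is zero are assumptions of this sketch and are not specified in the text.

```python
def evaluate(confusion):
    """Compute ACC, PRE_avg, REC_avg and F_avg from a confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    s = len(confusion)
    tp = [confusion[i][i] for i in range(s)]
    fn = [sum(confusion[i]) - tp[i] for i in range(s)]
    fp = [sum(confusion[j][i] for j in range(s)) - tp[i] for i in range(s)]

    def ratio(numerator, denominator):
        # Assumption: an undefined ratio (0/0) is reported as 0.
        return numerator / denominator if denominator else 0.0

    pre = [ratio(tp[i], tp[i] + fp[i]) for i in range(s)]
    rec = [ratio(tp[i], tp[i] + fn[i]) for i in range(s)]
    f = [ratio(2 * pre[i] * rec[i], pre[i] + rec[i]) for i in range(s)]
    return {
        "ACC": ratio(sum(tp), sum(tp) + sum(fn)),  # Eq. (7)
        "PRE_avg": sum(pre) / s,                   # Eq. (8)
        "REC_avg": sum(rec) / s,                   # Eq. (9)
        "F_avg": sum(f) / s,                       # Eq. (10)
    }


# Hypothetical confusion matrix for S = 3 subjects with 2 probes each.
confusion = [[2, 0, 0],
             [1, 1, 0],
             [0, 0, 2]]
metrics = evaluate(confusion)
```

Because every sample belongs to exactly one class, the denominator of Equation (7) equals the total number of probe samples, so ACC here is 5/6.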

Table 4: Comparison of recognition rates under Normal-Walking with different non-overlapping partitioning.

| Angle (°) | Holistic | 5 regions | 7 regions | 8 regions | 10 regions |
|---|---|---|---|---|---|
| 0 | 74.14 | 96.55 | 97.41 | 98.71 | 98.71 |
| 18 | 78.45 | 96.98 | 97.85 | 98.71 | 98.28 |
| 36 | 67.24 | 92.67 | 94.83 | 96.12 | 95.26 |
| 54 | 74.14 | 95.69 | 95.69 | 97.41 | 98.28 |
| 72 | 75.43 | 94.4 | 94.83 | 96.55 | 97.41 |
| 90 | 78.02 | 92.67 | 94.4 | 95.26 | 95.69 |
| 108 | 76.29 | 96.55 | 96.12 | 97.85 | 97.85 |
| 126 | 75.86 | 95.26 | 96.55 | 96.98 | 96.55 |
| 144 | 75 | 96.55 | 96.12 | 96.55 | 97.41 |
| 162 | 77.59 | 96.55 | 98.28 | 97.41 | 98.28 |
| 180 | 70.69 | 96.12 | 98.28 | 99.14 | 99.14 |
| Avg. | 74.80 | 95.45 | 96.40 | 97.34 | 97.53 |

4.3 Results and Discussion

The performance of our proposed approach is evaluated under different environmental conditions using a Matlab implementation. The experimental setup is similar to the one adopted by the authors of the CASIA B database (Yu et al., 2006). The gallery set, consisting of the normal-walking sequences of all subjects, is always used to train the SVM model. Three sets under different covariates are used as the probe sets: normal walking, carrying a bag, and wearing a coat.

First, the proposed approach was applied on the GEI without any partitioning, and FLBP was compared with LBP. All comparisons were conducted in terms of recognition rate (accuracy), precision, recall and F-measure. As shown in Tables 1, 2, and 3, FLBP-based features achieve higher average accuracy for each covariate: 74.80% vs. 63.80% for normal walking, 37.26% vs. 33.31% for carrying a bag, and 16.83% vs. 13.56% for wearing a coat.

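To evaluate the effect of partitioning on the recognition rate, a group of experiments is designed. The results are shown in Tables 4, 5, and 6 for three different scenarios. These results demonstrate that using the holistic image yields lower average performance in all three scenarios. Moreover, more partitions generally lead to enhanced results: with 10 regions, the average accuracy reaches 97.53%, 49.06%, and 37.05% for normal walking, carrying a bag, and wearing a coat, compared with 74.80%, 37.26%, and 16.83% for the holistic GEI. However, a larger number of partitions does not guarantee the best performance in all cases. For example, 8 regions slightly outperform 10 regions on average under the wearing-coat covariate (37.42% vs. 37.05%), and under the carrying-bag covariate the holistic GEI scores highest at 72° and 108°.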

5 CONCLUSIONS

In this paper, a fuzzy version of LBP was investigated and applied for gait recognition. The GEI images of the CASIA B dataset were partitioned into non-overlapping regions. Then, we investigated the FLBP operator to extract more local discriminative gait features. The experimental results showed that the proposed framework outperforms LBP. Moreover, the results demonstrated that using partitioning can enhance the performance to promising levels. Future work can include the investigation of FLBP on other gait datasets and representations.

Table 5: Comparison of recognition rates under Carrying-Bag with different non-overlapping partitioning.

| Angle (°) | Holistic | 5 regions | 7 regions | 8 regions | 10 regions |
|---|---|---|---|---|---|
| 0 | 40.85 | 62.5 | 69.4 | 72.85 | 73.71 |
| 18 | 43.54 | 48.71 | 52.16 | 60.78 | 58.19 |
| 36 | 36.91 | 44.4 | 49.14 | 48.71 | 50.43 |
| 54 | 33.62 | 33.19 | 35.35 | 43.54 | 40.95 |
| 72 | 36.72 | 31.9 | 34.91 | 35.35 | 35.78 |
| 90 | 40.1 | 36.21 | 42.24 | 34.05 | 35.35 |
| 108 | 38.9 | 32.76 | 38.79 | 25.86 | 30.17 |
| 126 | 31.16 | 35.35 | 36.64 | 36.64 | 37.08 |
| 144 | 30.16 | 40.52 | 48.71 | 41.38 | 45.26 |
| 162 | 40.55 | 59.91 | 63.36 | 65.09 | 63.79 |
| 180 | 37.35 | 62.07 | 66.81 | 65.95 | 68.97 |
| Avg. | 37.26 | 44.32 | 48.86 | 48.2 | 49.06 |

Table 6: Comparison of recognition rates under Wearing-Coat with different non-overlapping partitioning.

| Angle (°) | Holistic | 5 regions | 7 regions | 8 regions | 10 regions |
|---|---|---|---|---|---|
| 0 | 11.33 | 19.83 | 23.71 | 34.91 | 33.62 |
| 18 | 16.5 | 27.16 | 31.47 | 35.78 | 34.48 |
| 36 | 17.62 | 22.41 | 26.72 | 36.64 | 33.19 |
| 54 | 20.64 | 25.86 | 31.04 | 40.52 | 43 |
| 72 | 20.36 | 28.45 | 31.9 | 34.91 | 34 |
| 90 | 21.07 | 28.88 | 33.62 | 41.38 | 41.81 |
| 108 | 16.5 | 29.31 | 37.93 | 36.21 | 38.36 |
| 126 | 18.52 | 22.41 | 27.16 | 37.5 | 35.35 |
| 144 | 14.81 | 25.43 | 27.16 | 37.07 | 36.64 |
| 162 | 13.91 | 33.62 | 34.48 | 37.5 | 37.93 |
| 180 | 13.9 | 31.04 | 38.79 | 39.22 | 39.22 |
| Avg. | 16.83 | 26.76 | 31.27 | 37.42 | 37.05 |

ACKNOWLEDGEMENT

The authors would like to thank King Fahd University of Petroleum and Minerals (KFUPM), and Hadhramout Establishment for Human Development for their support during this work.

REFERENCES

Abdelkader, C. B. (2002). Stride and cadence as a biometric

in automatic person identification and verification. In

Proc. 5th IEEE International Conf. on Automatic Face

and Gesture Recognition.

Ahonen, T., Hadid, A., and Pietikainen, M. (2006). Face

description with local binary patterns: Application to

face recognition. IEEE Transactions on Pattern Anal-

ysis and Machine Intelligence, 28(12):2037–2041.

Bhanu, B. and Han, J. (2002). Individual recognition by

kinematic-based gait analysis. In Proceedings of 16th

International Conference on Pattern Recognition.

Brahnam, S., Jain, L. C., Nanni, L., and Lumini, A. (2014).

Local Binary Patterns - New Variants and Applica-

tions. Springer-Verlag Berlin Heidelberg 2014.

Chen, C., Zhang, J., and Fleischer, R. (2010). Distance ap-

proximating dimension reduction of riemannian man-

ifolds. IEEE Transactions on Systems, Man, and Cy-

bernetics, Part B: Cybernetics, 40(1):208–217.

Han, J. and Bhanu, B. (2006a). Individual recognition us-

ing gait energy image. IEEE Transactions on Pattern

Analysis and Machine Intelligence, 28(2):316–322.

Han, J. and Bhanu, B. (2006b). Individual recognition using

gait energy image. IEEE Trans. Pattern Analysis and

Machine Intelligence, 28(2):316–322.

Ho, M.-F., Chen, K.-Z., and Huang, C.-L. (2009). Gait anal-

ysis for human walking paths and identities recogni-

tion. In IEEE International Conference on Multimedia

and Expo (ICME).

Hsu, C.-W. and Lin, C.-J. (2002). A comparison of methods

for multiclass support vector machines. IEEE Trans.

Neural Networks, 13(2):415–425.

Hu, M., Wang, Y., Zhang, Z., Zhang, D., and Little, J.

(2013). Incremental learning for video-based gait

recognition with lbp flow. IEEE Transactions on Cy-

bernetics, 43(1):77–89.

Huang, D.-Y., Lin, T.-W., Hu, W.-C., and Cheng, C.-H.

(2013). Gait recognition based on gabor wavelets and

modified gait energy image for human identification.

Journal of Electronic Imaging, 22(4).

Iakovidis, D., Keramidas, E., and Maroulis, D. (2008).

Fuzzy local binary patterns for ultrasound texture

characterization. In Campilho, A. and Kamel, M., edi-

tors, Image Analysis and Recognition, volume 5112 of

Lecture Notes in Computer Science, pages 750–759.

Springer Berlin Heidelberg.

Kale, A., Chowdhury, A., and Chellappa, R. (2003). To-

wards a view invariant gait recognition algorithm. In

Proceedings of IEEE Conference on Advanced Video

and Signal Based Surveillance.

Kale, A., Rajagopalan, A., Cuntoor, N., and Kruger, V.

(2002). Gait-based recognition of humans using con-

tinuous hmms. In Proc. 5th IEEE International Conf.

on Automatic Face and Gesture Recognition.

Kale, A., Sundaresan, A., Rajagopalan, A., Cuntoor, N.,

Roy-Chowdhury, A., Kruger, V., and Chellappa, R.

(2004). Identification of humans using gait. IEEE

Transactions on Image Processing, 13(9):1163–1173.

Kellokumpu, V., Zhao, G., Li, S., and Pietikäinen, M.

(2009). Dynamic texture based gait recognition.

In Advances in Biometrics, volume 5558 of Lecture

Notes in Computer Science. Springer Berlin Heidel-

berg.

Lee, L. (2001). Gait dynamics for recognition and classifi-

cation. In Proceedings of the 5th IEEE International

Conference on Automatic Face and Gesture Recogni-

tion (AFGR).

Lee, L. and Grimson, W. (2002). Gait analysis for recogni-

tion and classification. In Proceedings of Fifth IEEE

International Conference on Automatic Face and Ges-

ture Recognition, pages 148–155.

Lee, T. K. M., Belkhatir, M., and Sanei, S. (2014). A com-

prehensive review of past and present vision-based

techniques for gait recognition. Multimedia Tools and

Applications, 72(3):2833–2869.

Li, C.-R., Li, J.-P., Yang, X.-C., and Liang, Z.-W. (2012).

Gait recognition using the magnitude and phase of

quaternion wavelet transform. In International Con-

ference on Wavelet Active Media Technology and In-

formation Processing (ICWAMTIP).

Lu, J. and Zhang, E. (2007). Gait recognition for human identification based on ICA and fuzzy SVM through multiple views fusion. Pattern Recognition Letters, 28(16):2401–2411.

Mansur, A., Makihara, Y., Muramatsu, D., and Yagi, Y.

(2014). Cross-view gait recognition using view-

dependent discriminative analysis. In IEEE Interna-

tional Joint Conference on Biometrics (IJCB).

Niyogi, S. and Adelson, E. (1994). Analyzing and rec-

ognizing walking figures in xyt. In Proceedings of

IEEE Computer Society Conference on Computer Vi-

sion and Pattern Recognition, pages 469–474.

Nizami, I. F., Hong, S., Lee, H., Lee, B., and Kim, E.

(2010). Automatic gait recognition based on proba-

bilistic approach. International Journal of Imaging

Systems and Technology, 20(4):400–408.

Ojala, T., Pietikainen, M., and Maenpaa, T. (2002). Mul-

tiresolution gray-scale and rotation invariant texture

classification with local binary patterns. IEEE Trans-

actions on Pattern Analysis and Machine Intelligence,

24(7):971–987.

Ran, Y., Weiss, I., Zheng, Q., and Davis, L. (2007). Pedes-

trian detection via periodic motion analysis. Interna-

tional Journal of Computer Vision, 71(2).

Sarkar, S., Phillips, P., Liu, Z., Vega, I., Grother, P., and

Bowyer, K. (2005). The humanid gait challenge prob-

lem: data sets, performance, and analysis. IEEE

Transactions on Pattern Analysis and Machine Intel-

ligence, 27(2):162–177.

Wang, K., Xing, X., Yan, T., and Lv, Z. (2014). Couple

metric learning based on separable criteria with its ap-

plication in cross-view gait recognition. In Biometric

Recognition, volume 8833 of Springer Lecture Notes

in Computer Science, pages 347–356.

Wang, L., Hu, W., and Tan, T. (2002). A new attempt to

gait-based human identification. In Proc. 16th Inter-

national Conference on Pattern Recognition.

Yu, S., Tan, D., and Tan, T. (2006). A framework for eval-

uating the effect of view angle, clothing and carrying

condition on gait recognition. In Proc. 18th Interna-

tional Conf. on Pattern Recognition, volume 4, pages

441–444.

Zhang, E., Zhao, Y., and Xiong, W. (2010). Active energy

image plus 2dlpp for gait recognition. Signal Process-

ing, 90(7):2295 – 2302.

Zhao, G. and Pietikainen, M. (2007). Dynamic tex-

ture recognition using local binary patterns with an

application to facial expressions. IEEE Transac-

tions on Pattern Analysis and Machine Intelligence,

29(6):915–928.
