Risk-aware Planning in BDI Agents

Ronan Killough, Kim Bauters, Kevin McAreavey, Weiru Liu and Jun Hong

Queen’s University Belfast, Belfast, U.K.

Keywords:

Online Planning, BDI Agent, Risk Awareness, Decision Making.

Abstract:

The ability of an autonomous agent to select rational actions is vital in enabling it to achieve its goals. To do

so effectively in a high-stakes setting, the agent must be capable of considering the risk and potential reward

of both immediate and future actions. In this paper we provide a novel method for calculating risk alongside

utility in online planning algorithms. We integrate such a risk-aware planner with a BDI agent, allowing us

to build agents that can set their risk aversion levels dynamically based on their changing beliefs about the

environment. To guide the design of a risk-aware agent we propose a number of principles which such an

agent should adhere to and show how our proposed framework satisfies these principles. Finally, we evaluate

our approach and demonstrate that a dynamically risk-averse agent is capable of achieving a higher success

rate than an agent that ignores risk, while obtaining a higher utility than an agent with a static risk attitude.

1 INTRODUCTION

The Belief-Desire-Intention (BDI) model (Bratman,

1987) is a framework for designing rational agents in

which an agent is defined by its set of beliefs (what

it knows about the world), desires (what changes it

wants to bring about), and intentions (desires it has

committed to bring about) (Rao and Georgeff, 1991).

Many implementations based on the BDI model have

been proposed in the literature, including AgentSpeak (Rao, 1996), Conceptual Agent Notation

(CAN) (Sardina et al., 2006) and 2APL (Dastani,

2008). However, these implementations use pre-

defined plans instead of lookahead planning to de-

scribe the execution of intentions and they ignore

the uncertainty/risk involved with undertaking ac-

tions. In real-world scenarios, actions are often un-

certain (e.g. a door may not open as expected) or risky

(e.g. jumping the queue may get you to the checkout sooner, or

land you at the back of the line). Risk can be interpreted

in many different ways. Here, however, it is

defined as the possibility of obtaining a utility/reward

lower than the expected utility due to an undesired

outcome of taking an action.

Recently, a number of papers have considered in-

tegrating first principles planners (FPPs) into BDI im-

plementations (Sardina et al., 2006; Chen et al., 2014;

Bauters et al., 2014). This allows an agent to gen-

erate custom plans when an unexpected situation is

encountered.

However, none of these contributions use the po-

tential of a planning algorithm to assess risk. By ig-

noring risk, the capabilities of such agents are limited

in high-stakes scenarios where pursuing high utility

often entails high potential costs. Instead, we want to

give an agent the ability to balance the trade-off be-

tween maximising expected utility (increasing utility)

and minimising potential costs (lowering risk).

Different agents may also have different risk aver-

sion attitudes. The approach proposed in this paper

allows an agent to assess risk alongside utility, and to

adapt to changed situations with varying levels of risk.

The contributions of this paper are threefold. First,

we propose a novel approach to calculate the risk of

an action in an online fashion by modifying existing

state-of-the-art online FPPs. Second, we define how

an agent can interact with a planner and obtain a set of

actions with associated utility and risk assessments.

Third, we define a way to assess the expected effect

of a risk reduction, and a way to induce a level of risk

aversion based on the agent’s current beliefs.

The following principles guide and motivate our

work in specifying how a rational agent should react:

Principle 1. A rational agent will only consider an

action with a lower utility (resp. higher risk) when this

also involves a lower risk (resp. higher reward).

Principle 2. An action with a lower utility will only

be adopted when it sufficiently reduces the risk ac-

cording to the desired level of risk aversion.

Principle 3. The level of risk aversion increases as

the number of resources decreases.


Killough, R., Bauters, K., McAreavey, K., Liu, W. and Hong, J.

Risk-aware Planning in BDI Agents.

DOI: 10.5220/0005703103220329

In Proceedings of the 8th International Conference on Agents and Artificial Intelligence (ICAART 2016) - Volume 2, pages 322-329

ISBN: 978-989-758-172-4

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

We later show that our work satisfies these princi-

ples.

We also consider the following running example.

A number of robots are trying to reach a destination

(e.g. nuclear reactor) in succession to avoid a disas-

ter (e.g. a meltdown). The behaviour of the agents,

for simplicity, is fully determined by the underlying

risk-aware FPP. Each robot is aware of the success or

failure of the others. To reach the destination, a robot

has to cross a number of bridges. Some bridges are

narrower, implying a higher risk. In this scenario the

agents clearly need to balance risk and utility: narrower

bridges should only be taken when doing so leads to

a higher utility, and more risk-averse behaviour is re-

quired to improve the chance of mission success.

The remainder of the paper is organised as fol-

lows. Preliminaries are given in Section 2. In Sec-

tion 3, we propose a modified version of a UCT-

based online planner capable of assessing risk along-

side utility. Section 4 outlines how such a planner

can be integrated with a BDI agent to produce a set

of utility-ranked actions. The applicability of our ap-

proach is validated in Section 5 and related work and

conclusions are discussed in Section 6.

2 PRELIMINARIES

We start with some necessary preliminaries on the

CAN language, Markov Decision Processes (MDPs),

and Monte-Carlo algorithms for online planning.

BDI Agents. In CAN (Sardina et al., 2006), an agent configuration, or agent, C is a tuple C = ⟨B, Π, Λ, Γ, H⟩. The belief base B is a set of atoms describing the agent’s current beliefs. The plan library Π consists of a set of pre-defined plans of the form e : ψ ← P with e an event-goal, ψ the context, or preconditions, and P the plan body. The plan body may consist of operations to add/delete beliefs +b, −b, trigger events !e, test for conditions ?φ, or execute primitive actions a. Primitive actions are defined in the action description library Λ. The intention base Γ consists of a set of (partially) executed plans P. Finally, H is the sequence of primitive actions executed by the agent so far. A basic configuration ⟨B, H, P⟩ is also often used in notation.

The operational semantics of CAN are described in terms of configurations C and transitions C → C′. A derivation rule describes in which cases the agent can transition to a new configuration. Such a rule consists of a (possibly empty) set of premises $p_i$, and a single transition relation c as conclusion:

$$\frac{p_1 \quad p_2 \quad \ldots \quad p_n}{c}\ l$$

We refer the reader to (Sardina et al., 2006) for an overview of the full semantics.

Online Planning in MDPs. An MDP consists of a set of states S and actions A, a transition function T, and a reward function R. The transition function is defined as T : S × A × S → [0,1], i.e. given a state s and an action a, we transition to a new state s′ with probability T(s,a,s′). Rewards are defined as R : S × A × S → ℝ with R(s,a,s′) the reward for taking action a in state s and arriving in state s′. A discount factor γ prioritises immediate rewards. Our aim is to find the best action at each state. Such a mapping between states and actions is referred to as a policy. Since the objective is to maximise the overall expected reward, an optimal policy will consistently select actions which will maximise both immediate and potential future rewards. We can define a non-stationary policy¹ as $\pi_t : S \to A$ with t as the current time step. The quantitative value of a policy π with an initial state $s_0$ is given by:

$$V(\pi(s_0)) = E\left(\sum_{t=0}^{h-1} \gamma^{t} \cdot R(s_t, \pi_t(s_t), s_{t+1})\right)$$

Here, $\pi_t(s_t)$ produces an action according to policy $\pi_t$, and h is the planning horizon. The optimal policy $\pi^*$ maximises $V(\pi^*(s_0))$.
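As an illustration of the value expression above, the following is a minimal Python sketch that estimates V by averaging discounted returns over sampled trajectories. The function names and the representation of a trajectory as a list of rewards are assumptions made for this sketch; they are not part of the original formalism.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**t * r_t over one sampled trajectory (t = 0 .. h-1)."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


def estimate_policy_value(trajectories, gamma):
    """Monte Carlo estimate of V: the mean discounted return over trajectories.

    Each trajectory is assumed to be the list of rewards obtained by following
    the same policy from the same initial state up to the horizon.
    """
    returns = [discounted_return(rewards, gamma) for rewards in trajectories]
    return sum(returns) / len(returns)


# Two hypothetical trajectories of horizon 2, discount factor 0.5.
value = estimate_policy_value([[10, 0], [0, 10]], gamma=0.5)
```

With a discount factor below 1, the trajectory that obtains its reward earlier contributes more to the estimate, which is the sense in which γ prioritises immediate rewards.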

Finding optimal policies in this way becomes infeasible

as the size of the problem grows. In recent years

focus has therefore shifted to approximate methods

such as Monte Carlo Tree Search (MCTS). Planners

which employ such methods are often referred to as

online or agent-centric planners because they do not

produce a policy, but simply return the single next

best action to execute. These methods use sampling

to quickly build up the most promising part of the

search tree and allow a “good enough” action to be

returned at any time. In each cycle, (1) a leaf node is

selected, (2) that node is expanded with a new child

node, (3) a simulation of a random playout from this

new child to a terminal state (or the horizon) is per-

formed, and (4) the rewards from this simulation are

backpropagated towards the root node to guide fu-

ture searches. Improvements to the selection phase,

e.g. using UCT (Kocsis and Szepesvári, 2006), have

led to very competitive online planning algorithms.

A comprehensive overview of MCTS approaches can

be found in (Browne et al., 2012).
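The selection phase described above can be sketched with the UCB1 rule on which UCT is based. This is a minimal sketch, not the planner used in the paper: the dictionary layout of a child node and the exploration constant `c` are assumptions chosen for illustration.

```python
import math


def ucb1_score(total_reward, visits, parent_visits, c=math.sqrt(2)):
    """UCB1 value of a child: mean reward plus an exploration bonus."""
    if visits == 0:
        return math.inf  # unvisited children are always tried first
    mean = total_reward / visits
    return mean + c * math.sqrt(math.log(parent_visits) / visits)


def select_child(children, c=math.sqrt(2)):
    """Pick the child with the highest UCB1 score.

    `children` is a list of dicts with keys 'total_reward' and 'visits'
    (a hypothetical node layout used only for this sketch).
    """
    parent_visits = sum(child["visits"] for child in children)
    return max(
        children,
        key=lambda ch: ucb1_score(ch["total_reward"], ch["visits"], parent_visits, c),
    )


children = [
    {"name": "a", "total_reward": 100.0, "visits": 50},
    {"name": "b", "total_reward": 1.0, "visits": 1},
]
chosen = select_child(children)
```

In this example the rarely visited child is chosen despite its lower mean reward, because its exploration bonus is larger; setting `c` to 0 reduces the rule to pure exploitation.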

3 ASSESSING RISK ONLINE

In this section, we discuss how an online planner

can be modified to assess risk alongside utility. We

1

The behaviour of a nonstationary (resp. stationary) pol-

icy is dependent (resp. independent) on the current timestep.

Risk-aware Planning in BDI Agents

323

start with the popular UCT algorithm (Kocsis and

Szepesv

´

ari, 2006), which combines MCTS with a

Multi-Bandit selection procedure to balance explo-

ration and exploitation (Auer et al., 2002). We mod-

ify UCT so that it provides a set of utility-ranked

actions, each with an associated risk assessment, in-

stead of a single “best” action. UCT constructs a bi-

ased layered search tree where higher utility nodes are

explored more thoroughly while also biasing explo-

ration toward rarely visited branches (Keller and Ey-

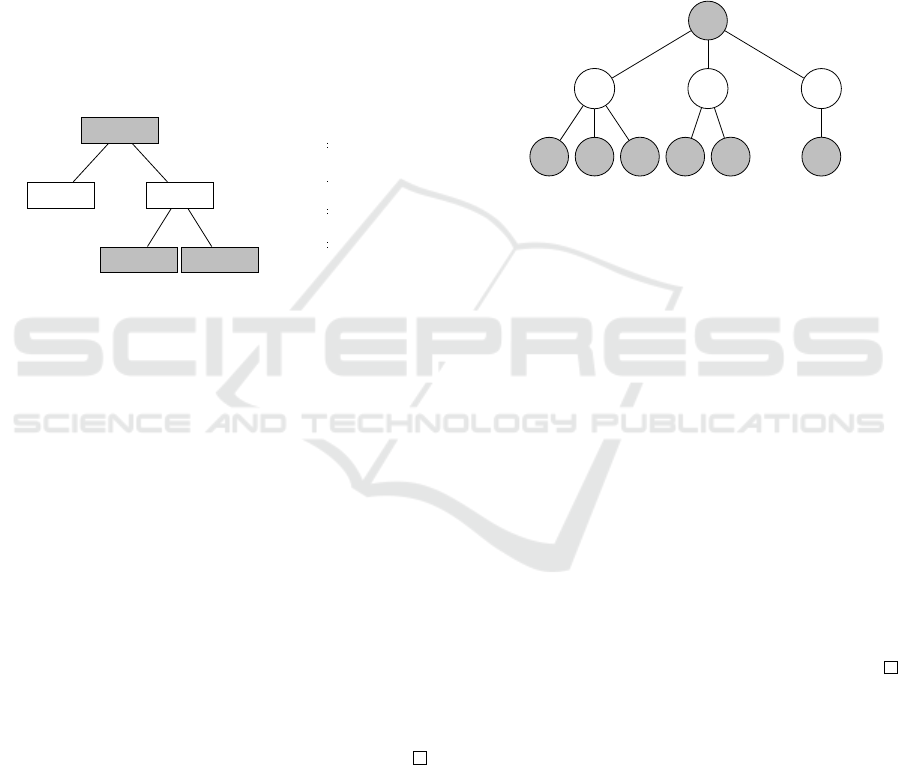

erich, 2012). As shown in Figure 1, layers in the tree

alternate between decision nodes and chance nodes

(resp. states and actions). The children of a deci-

sion node reflect the actions available at this state.

The children of a chance node reflect the states ob-

tained as a stochastic outcome of applying the action.

Decision

Chance Chance

Decision Decision

new state

stochastic outcome

action

agent decision

current state

Figure 1: Chance and decision nodes.

3.1 Establishing a Risk Metric

There are a number of ways to produce a measure of

risk from knowledge of rewards and probabilities of

occurrence (Johansen and Rausand, 2014). The most

appropriate metric for this framework is variance. We

therefore treat the immediate risk of taking an action

in a given state as the probability-weighted variance

of rewards from that action’s outcomes.

Definition 1. The immediate risk of taking action a in state s, denoted IR(s,a), is defined as:

$$IR(s,a) = \sum_{s' \in S} T(s,a,s') \cdot \left(R(s,a,s') - E(s,a)\right)^2$$

where $E(s,a) = \sum_{s' \in S} T(s,a,s')\, R(s,a,s')$ is the expected utility of taking action a in state s. IR(s,a) is simply the probability-weighted variance of the outcome rewards of an action a.

To assess the risk of each immediate action, we

compute the variance of this chance node, where the

expected utility functions as the mean. However, a

number of issues prevent us from doing so directly.

One problem is that the classical way of computing

variance as shown above is an offline method which

assumes all samples are known. Crucially, an online

planning algorithm has to be able to return a solution

that is “good enough” at any moment. Therefore, this

approach for calculating variance is unsuitable. In-

stead, we must rely on approximations of variance

that work in an online setting such as the method pro-

posed in (Welford, 1962). Another problem is that we

must treat decision and chance nodes differently. In-

deed, a chance node imposes a risk due to the stochas-

tic outcome. In a decision node, an agent has a choice

of which action to take. As a result, the risk in a de-

cision node should adhere to rational decision con-

straints where it can be viewed as e.g. the risk of the

least risky action available.
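To make the online requirement concrete, the following minimal Python sketch maintains a running mean and variance in the style of Welford's method, updating both each time a single reward is sampled. The class and attribute names are assumptions for this sketch, and the population form of the variance (dividing by the sample count) is a choice made here, not something the text specifies.

```python
class RunningVariance:
    """Running mean/variance that is updated one sample at a time."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, reward):
        """Fold one sampled reward into the estimate and return the variance."""
        self.count += 1
        delta = reward - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (reward - self.mean)
        return self.variance

    @property
    def variance(self):
        """Population variance of the samples seen so far (0 if none)."""
        return self.m2 / self.count if self.count else 0.0


estimator = RunningVariance()
for sampled_reward in [15.0, 8.0, -10.0, 15.0]:
    estimator.update(sampled_reward)
```

An estimate is available after every update, so the planner can be interrupted at any point without having to store or revisit earlier samples.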

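Figure 2. [Search tree rooted at decision node $s_0$ with three actions. Action $a_0$ leads to $s_1$ (probability 0.5, reward 15), $s_2$ (probability 0.4, reward 8) or $s_3$ (probability 0.1, reward −10); action $a_1$ leads to $s_4$ (probability 0.7, reward 10) or $s_5$ (probability 0.3, reward 5); action $a_2$ leads to $s_6$ (probability 1, reward 2).]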

We now formalise these ideas. For simplicity, we

first consider a full search tree to explain how we

compute risk, and how the notion of risk exposure is

used to associate a level of risk with decision nodes.

The search tree is depicted in Figure 2, with alternat-

ing decision and chance nodes, probabilities of transi-

tions on the edges, and rewards below the leaf nodes.

Example 1. Clearly, from Figure 2, we can see that taking action $a_2$ does not involve risk. Indeed, we are guaranteed of the outcome, so there is no chance of obtaining any other utility than the expected one. Similarly, $IR(s_0,a_2) = 0$ based on 100 samples. This is not the case for actions $a_0$ and $a_1$. For action $a_0$ it is easy to verify that the expected utility is $E(s_0,a_0) = 0.5 \cdot 15 + 0.4 \cdot 8 + 0.1 \cdot (-10) = 9.7$. However, we find that $IR(s_0,a_0) = 54.0$ based on 100 samples. For $a_1$ we have $E(s_0,a_1) = 8.5$ and $IR(s_0,a_1) = 5.3$. Intuitively, $a_0$ is indeed the riskiest action since we have the potential for a wider range of rewards.
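The figures in Example 1 can be checked against Definition 1 directly. The sketch below is a minimal Python illustration that computes the exact expected utility and immediate risk from the outcome probabilities and rewards of Figure 2, rather than from samples; the function names and the list-of-pairs encoding are assumptions for this sketch.

```python
def expected_utility(outcomes):
    """E(s,a): probability-weighted mean of the outcome rewards."""
    return sum(p * r for p, r in outcomes)


def immediate_risk(outcomes):
    """IR(s,a): probability-weighted variance of the outcome rewards."""
    mean = expected_utility(outcomes)
    return sum(p * (r - mean) ** 2 for p, r in outcomes)


# (probability, reward) pairs for each action available in s0 (Figure 2).
FIGURE_2 = {
    "a0": [(0.5, 15), (0.4, 8), (0.1, -10)],
    "a1": [(0.7, 10), (0.3, 5)],
    "a2": [(1.0, 2)],
}

risks = {action: immediate_risk(outs) for action, outs in FIGURE_2.items()}
```

The exact values are 54.01 for $a_0$, 5.25 for $a_1$ and 0 for $a_2$, which agree with the sampled estimates of 54.0 and 5.3 reported in the example up to rounding.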

While it is possible to simply use immediate vari-

ance as the risk indicator for an action, this would

tend toward very short-sighted behaviour with regard

to risk. Consider the example of the choice between

joining the army and becoming an accountant. Both

immediate actions (apply for, and accept, the job)

have the same, very low, risks. The level of risk one

would be exposed to having taken these initial actions

is, however, very different. In order to make informed

decisions, our risk metric must consider both imme-

diate risk and the risk of future actions.

We only calculate the risk measure for chance

nodes, which have stochastic outcomes, as only these


nodes can convey an unexpected outcome. Risk as we

define it does not apply to decision nodes since gen-

erally there is a choice of which action to take. To

consider the risk of future actions however, we intro-

duce the notion of risk exposure to quantify the risk

of being in a state with a choice of actions.

Definition 2. Let $A_s \subseteq A$ be the set of available actions in state s. We have:

$$IRE(s) = \min_{a \in A_s} IR(s,a)$$

with IRE(s) as the immediate risk exposure of s.

We define it as such since this is the minimum

amount of risk which the agent must take. Note that

this minimum risk action is not guaranteed to be the

action the agent will take in this state if it is encoun-

tered. However, when weighing cumulative expected

utility against cumulative risk it is more useful to have

a risk measure indicating the risk the agent must take

by following a certain path, rather than some distribu-

tion of the available risks at each step.

Definition 3. Let $A_s \subseteq A$ be the set of available actions in state s and $\gamma^{t}$ be a discount factor for time t. Then the cumulative minimum risk up to horizon h of taking action a in state s, denoted CMR, is defined as:

$$CRE(s,h) = \min_{a \in A_s} CMR(s,a,h) \tag{1}$$

$$CMR(s,a,h) = \sum_{t=1}^{h-1} \gamma^{t-1} \left( \sum_{s' \in S} T(s,a,s') \cdot CRE(s',h-1) \right) \tag{2}$$

where CRE(s,h) is the cumulative risk exposure up to horizon h of state s such that $\min(\emptyset) = 0$.

The CRE is identical to IRE(s′) except that it considers the cumulative minimum risk of an action a instead of the immediate risk. CMR is used as our risk metric when considering actions. Note that it is based on the probability adjusted risk exposure of relevant states s′ ∈ S (i.e. s′ ∈ S such that T(s,a,s′) > 0).

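Figure 3. [Subtree rooted at action $a_1$: outcome $s_4$ (probability 0.7, reward 10) offers actions $a_3$ (IR = 200), $a_4$ (IR = 70) and $a_5$ (IR = 10); outcome $s_5$ (probability 0.3, reward 5) offers action $a_6$ (IR = 40).]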

Example 2. We now extend Figure 2 to show outcomes and subsequent available actions from action $a_1$. From $s_4$ we can take actions $a_3$, $a_4$ or $a_5$ and from state $s_5$ we have a single action choice $a_6$. The subtree with $a_1$ as its root is shown in Figure 3. For simplicity, a set of terminal state outcomes is implied for each leaf action $a_3, \ldots, a_6$. We know the immediate risk (IR) of $a_1$ is 5.3 and the IR of $a_3$, $a_4$, $a_5$ and $a_6$ is resp. 200, 70, 10 and 40. In order to determine the CMR of taking $a_1$, we must first calculate the risk exposure of its child decision nodes $s_4$ and $s_5$. This is given by $CRE(n) = \min_{c \in C_n} IR(n,c)$ where n is a decision node and each $c \in C_n$ a child chance node, so that $CRE(s_4) = 10$ and $CRE(s_5) = 40$. These risk exposure values will then be modulated by the decision node’s likelihood of occurrence. We thus assess the CMR of $a_1$ as $IR(s_0,a_1) + CRE(s_4) \cdot 0.7 + CRE(s_5) \cdot 0.3 = 5.3 + 7 + 12 = 24.3$.
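The calculation in Example 2 can be sketched in a few lines of Python. This is a one-step illustration that follows the expression written out in the example (the immediate risk of the action plus the probability-weighted risk exposure of its successor states, without discounting); it is not a general implementation of Definition 3, and the function names are assumptions for this sketch.

```python
def risk_exposure(action_risks):
    """Risk exposure of a decision node: the lowest risk among its actions.

    Following Definition 3, the minimum over an empty set is taken to be 0.
    """
    return min(action_risks, default=0.0)


def cumulative_min_risk(immediate, successors):
    """Immediate risk plus probability-weighted exposure of successor states.

    `successors` is a list of (probability, [risks of the actions available
    in that successor state]) pairs.
    """
    return immediate + sum(p * risk_exposure(risks) for p, risks in successors)


# Action a1 from Figure 3: s4 offers a3, a4, a5; s5 offers only a6.
cmr_a1 = cumulative_min_risk(5.3, [(0.7, [200, 70, 10]), (0.3, [40])])
```

With the weights 0.7 and 0.3 applied to the exposures 10 and 40, the terms are 5.3, 7 and 12.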

3.2 Approximate Algorithms

In calculating CMR, we have thus far assumed that the

tree is fully known. However, in order to harness the

speed and tractability afforded by agent-centric plan-

ning algorithms, we provide a method of calculating

our proposed risk metric in an online fashion. This is

carried out alongside the usual accumulation of ex-

pected reward. When not all outcome rewards are

known and only a single value is sampled at a time, an

algorithm is required which is capable of maintaining

a running variance estimate based on the rewards of

the nodes which have been sampled so far.

During the backpropagation step, the reward asso-

ciated with the most recently sampled decision node

and its parent (chance node) is used to update the vari-

ance estimate of the chance node. This value is then

compared to the current variance estimates of all sib-

ling nodes and, if a sibling exists with lower variance,

then the variance of that sibling will be backpropa-

gated to the parent (decision) node instead.

An issue which arises when calculating variance

online is that accuracy is severely reduced when sam-

ple counts are very low. This is exacerbated when

we backpropagate the lowest variance node, since this

will often be a node which has not been sampled and

thus has a variance of zero. To tackle this problem,

we further modify the UCT procedure to include mul-

tiple “variance” rollouts. This provides a preliminary

estimate of the variance of a newly generated chance

node with an accuracy corresponding to the number of

rollouts performed, denoted as ρ. Unlike traditional

rollouts carried out in UCT, which simulate a play-

out of the domain until a terminal state is reached,

variance rollouts repeatedly sample the outcomes of

a single leaf decision node to build up a preliminary

estimate of immediate variance for this node.

We can now obtain a reasonably accurate variance

estimate for each action by the time it is initially sampled.

When the sibling nodes are compared during

backpropagation, nodes with no samples are ignored.

Nodes with at least one sample can now be guaranteed

to already have a reasonably accurate variance esti-

mate. Both the variance rollout and backpropagation

steps of the algorithm are shown resp. in Algorithm 1

and Algorithm 2. The selection, expansion and roll-

out procedures are omitted for brevity as they remain

largely unchanged from a plain UCT implementation.
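The sibling comparison described above can be illustrated in isolation. The following minimal Python sketch returns the risk value that would be passed up to the parent decision node: the lowest risk among the sampled node and those siblings that have been visited at least once. The function name and the `(visits, risk)` pair encoding are assumptions for this sketch and do not reproduce the full backpropagation procedure.

```python
def risk_to_backpropagate(sampled_risk, siblings):
    """Choose the risk value to pass up to the parent decision node.

    `siblings` is an iterable of (visits, risk) pairs for the other chance
    nodes under the same decision node. Siblings with no samples are ignored,
    since their variance of zero only reflects a lack of information.
    """
    visited = [risk for visits, risk in siblings if visits > 0]
    return min([sampled_risk] + visited)


# The sampled node has risk 40; one unvisited sibling reports risk 0.
passed_up = risk_to_backpropagate(40.0, [(0, 0.0), (3, 10.0), (2, 70.0)])
```

Here the unvisited sibling does not pull the result down to zero, and the value passed up is that of the least risky visited sibling.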

Algorithm 1: Variance rollout.

function VARROLLOUT(n)
    if visits(n) = 0 then
        n ← parent(n)
        visits(n) ← visits(n) + 1
        for i ← 0, ρ do
            outcome ← SimulateAction(n)
            rwd ← GetRwd(action(n), outcome)
            risk(n) ← UpdateRisk(n, rwd)
        risk(n) ← risk(n)/ρ
        M(n) ← 0
        mean(n) ← 0
        n ← SimulateAction(n)
    return n

Both algorithms assume that the parameter (n) is a

decision node containing a state. In Algorithm 1, the

UpdateRisk(·) function implements an online vari-

ance calculation (Welford, 1962), taking a new reward

and producing a running variance estimate, which is

then saved in risk(n).

Algorithm 2: Backpropagation.

1: function BACKPROPAGATION(n)
2:     while n ≠ nil do
3:         cReward ← 0
4:         cRisk ← 0
5:         visits(n) ← visits(n) + 1
6:         outcome ← state(n)
7:         n ← parent(n)
8:         visits(n) ← visits(n) + 1
9:         rwd ← GetRwd(action(n), outcome)
10:        risk(n) ← UpdateRisk(n, rwd)
11:        cReward ← cReward + rwd · γ^depth
12:        cRisk ← cRisk + risk(n) · γ^depth
13:        lwstRisk ← lwstRSib.risk / lwstRSib.visits
14:        if cRisk > lwstRisk then
15:            cRisk ← lwstRisk
16:        UpdateReward(n, cReward)
17:        n ← parent(n)

The backpropagation algorithm shown in Algorithm 2 steps back through the constructed tree until the root node is reached (parent(n) = nil). Lines 11 and 12 show the reward and risk being accumulated and discounted by $\gamma^{depth}$. Lines 13 to 15 state that if a sibling with a lower risk exists (lwstRSib), then the value to be backpropagated (cRisk) should accumulate the risk level of that sibling, instead of the risk level of the node sampled. This has the effect of building CMR estimates for each of the root node’s children, which correspond to the agent’s available actions. The function UpdateReward(·) adds the accumulated reward from this backpropagation to the node n’s total accumulated reward.

4 A RISK-AWARE BDI AGENT

Agents with the ability to perform lookahead plan-

ning can respond to an unforeseen event by generat-

ing custom plans for these events. In this section, we

improve on this idea by describing how an agent can

decide between actions given both utility and risk as-

sessments from an online planner. We consider sce-

narios in which we may encounter dilemmas between

risk and utility and must therefore be able to make

risk-aware decisions. To do this, the agent must have

a choice of actions from which it can select based on

some decision strategy. Such a strategy must be ca-

pable of appropriately balancing the utility and risk

associated with an action. The latter part of this sec-

tion addresses how a BDI agent can adjust its level of

risk aversion based on beliefs about its environment.

4.1 Integrating an Online Planner

Returning multiple actions from an online planner re-

quires a trivial modification to existing algorithms.

This is because online planners rank actions by util-

ity and simply return the action with highest utility.

Instead of returning a single action, we return a set

of assessed actions A, where each α ∈ A is a tuple

⟨a,u,r⟩ with a as a primitive action, u the cumulative

utility approximation and r the CMR approximation

for that action, as produced by the planner. We de-

note the cumulative utility and CMR of an assessed

action α as resp. u(α) and r(α), and the primitive ac-

tion as a(α). An action with a lower utility is not

guaranteed to also have a lower risk. It is therefore

possible to have an assessed action with both a lower

utility and a higher risk than that of alternative ac-

tions. Such actions generally pose no benefit to the

agent and are referred to as irrational.

Definition 4. Let A be a set of assessed actions. The set of rational assessed actions $A_R$ in A is defined as:

$$A_R = \{\langle a,u,r\rangle \in A \mid \nexists \langle a',u',r'\rangle \in A,\ u \le u' \wedge r \ge r'\}.$$

The set of irrational assessed actions in A, denoted $A_I$, is defined as $A_I = A \setminus A_R$.
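The split into rational and irrational assessed actions can be sketched as a dominance filter. The minimal Python sketch below treats an action as irrational when some other action has strictly higher utility and strictly lower risk, which is the reading used in the surrounding prose and in the proof of Proposition 1; the type and function names are assumptions for this sketch, and the sample values are those of the running example.

```python
from typing import NamedTuple


class Assessed(NamedTuple):
    """An assessed action: primitive action, utility and CMR estimate."""
    action: str
    utility: float
    risk: float


def dominates(b, a):
    """True if b has strictly higher utility and strictly lower risk than a."""
    return b.utility > a.utility and b.risk < a.risk


def rational_actions(assessed):
    """Keep the assessed actions that no other assessed action dominates."""
    return [a for a in assessed if not any(dominates(b, a) for b in assessed)]


ASSESSED = [
    Assessed("a0", 12.5, 4218.75),
    Assessed("a1", 20.0, 9600.0),
    Assessed("a2", -25.0, 5625.0),
]
rational = [a.action for a in rational_actions(ASSESSED)]
```

Here $a_2$ is filtered out because $a_0$ offers both a higher utility and a lower risk, while $a_0$ and $a_1$ remain because each is better than the other on one of the two criteria.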

Consider the running example. A robot is one decision away from its goal of reaching the reactor (also see Figure 4). We have the assessed actions $\alpha_0 = \langle a_0, 12.5, 4218.75\rangle$, $\alpha_1 = \langle a_1, 20, 9600\rangle$, and $\alpha_2 = \langle a_2, -25, 5625\rangle$. We can see that $A_R = \{\alpha_0, \alpha_1\}$. The expected utility of $a_0$ is $0.75 \cdot 50 + 0.25 \cdot (-100) = 12.5$, and its IR value is 4218.75. These same calculations give $a_1$ a utility of 20 and an IR value of 9600. The agent must decide whether to take a riskier action for a possibility of higher gain ($a_1$), or take the safer option ($a_0$) and gain a lower reward if successful. This decision must be based upon the agent’s determined level of risk aversion, which in turn is based on its beliefs about its current situation.

Figure 4: A simple risk/utility trade-off. [From the current state, $a_0$ yields +50 with probability 0.75 or −100 with probability 0.25; $a_1$ yields 100 with probability 0.6 or −100 with probability 0.4; $a_2$ yields +50 with probability 0.5 or −100 with probability 0.5. Outcomes lead to a success state or a fail state.]

4.2 Risk-aware Decision Making

We now discuss a decision rule capable of balancing

the risk and utility of actions produced by the plan-

ner in order to decide between rational assessed ac-

tions. The BDI agent has no control over how the

FPP produces its results, but chooses from the actions

returned. The agent’s influence is therefore purely in

the post-planning phase. This rule requires a defined

risk aversion level R in order to produce a single next

best action from a set of assessed actions.

Unlike in the BDI agent framework, “goals” in MDPs are expressed implicitly in the rewards assigned to action outcomes. Therefore, in order to have the planner be goal-oriented, we must assign some relatively high reward to the goal state(s) and relatively low rewards to any fail state(s)². By consistently selecting the best action in terms of utility, as in plain UCT, the agent should accumulate a higher total reward over a sequence of actions if it is successful in reaching the goal state. However, when considering scenarios where there are trade-offs between risk and utility, we can observe that taking lower risk actions increases the likelihood of success while obtaining a lower overall reward if successful.

In order to appropriately balance utility and risk given the information from the planner, we treat utility and risk as resp. the mean and variance. The expression $u(\alpha) \pm R\sqrt{r(\alpha)}$ gives the interval in which we can reasonably expect to find the expected utility given a risk aversion level R. Since we are risk-averse, we only consider the lower bound of each interval, and select the action with the maximum lower bound to minimise our potential worst-case. For actions with higher risk values, raising R will widen the interval more significantly than for actions with lower risk. Thus, a risk aversion level can be defined in terms of R that will ensure our model satisfies Principle 2.

² For our purposes, goal and fail states must also be terminal states.

Definition 5. Let A be a set of assessed actions and $R \in [0,+\infty]$ be a risk aversion degree. Then the optimal assessed action in A, denoted $\alpha^*$, is defined as:

$$\alpha^* = \arg\max_{\alpha \in A} \left( u(\alpha) - R\sqrt{r(\alpha)} \right).$$

This formula will select a “best” action $\alpha^*$ from the set of assessed actions according to a risk aversion setting R. R = 0 corresponds to absolute risk tolerance, as the lower bound of the interval will be equivalent to the utility. Therefore at R = 0, maximising the lower bound will give us the action with the highest utility and also, if only considering rational actions, the highest risk. As R is increased, when considering only $A_R$, the lower interval bound of high utility actions will begin to fall below that of lower utility actions, due to their lower associated risk values. This means as we raise R, we will increasingly accept lower utility (u(α)) actions provided they have a proportional reduction in associated risk (r(α)).
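The decision rule of Definition 5 can be sketched as follows. This minimal Python illustration scores each assessed action by the lower bound of its interval, utility minus R times the square root of risk, and returns the action with the highest score; the function names and the tuple encoding are assumptions for this sketch, and the values are those of the running example.

```python
import math


def lower_bound(utility, risk, aversion):
    """Lower bound of the interval u - R * sqrt(r) for one assessed action."""
    return utility - aversion * math.sqrt(risk)


def select_action(assessed, aversion):
    """Return the primitive action whose lower bound is highest.

    `assessed` is a list of (action, utility, risk) tuples.
    """
    best = max(assessed, key=lambda t: lower_bound(t[1], t[2], aversion))
    return best[0]


ASSESSED = [("a0", 12.5, 4218.75), ("a1", 20.0, 9600.0), ("a2", -25.0, 5625.0)]

risk_tolerant_choice = select_action(ASSESSED, aversion=0.0)
risk_averse_choice = select_action(ASSESSED, aversion=0.5)
```

At R = 0 the rule picks the highest-utility action $a_1$. For these values the lower bounds of $a_0$ and $a_1$ cross at roughly R = 0.23, so at R = 0.5 the safer action $a_0$ is selected instead.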

Proposition 1. Let A be a set of assessed actions, given Definition 5, then $\alpha^* \in A_R$.

Proof. Since $\forall \alpha_I \in A_I, \exists \alpha \in A$ such that $u(\alpha) > u(\alpha_I) \wedge r(\alpha) < r(\alpha_I)$, at R = 0, the rule defined in Definition 5 will consistently select the action with the highest u(α). This eliminates the possibility of an action from $A_I$ being selected since otherwise $u(\alpha) > u(\alpha_I)$ would not hold. For any given R where R > 0, the lower bound $u(\alpha_I) - R\sqrt{r(\alpha_I)}$ of the interval will never be greater than other actions’ lower bounds since otherwise $r(\alpha) < r(\alpha_I)$ would not hold.

At R = ∞, the lowest risk action would always be

chosen, regardless of any loss of utility. We define

maxR as the value at which, for a given domain, the

probability of successfully reaching the goal is high-

est. Values above this will be more risk-averse, but

will not necessarily produce goal-oriented behaviour

in the agent. Since goals in MDPs are implicitly encoded

in the reward values of actions, a completely

risk-averse agent will not be sufficiently utility-driven

to ensure it progresses towards its goal.

As R is increased, the agent should take paths towards a goal which have higher probabilities of success. Of course, these paths may not exist in a given domain. It is perfectly possible that the path providing the highest payoff is also the path with the lowest risk. In such domains, awareness of the risk provides no benefit. We argue however that this risk vs. reward dilemma is common, as committing fewer resources to achieving a goal tends to entail a higher risk of failure.

$p_i$:
+!robot_failed : robots_left(3) ← risk(a); −robots_left(3); +robots_left(2).
+!robot_failed : robots_left(2) ← risk(b); −robots_left(2); +robots_left(1).

Figure 5: Plan sets which alter the agent’s R value when an event is triggered, where for $p_1$: a = −3.5, b = −1 and for $p_2$: a = −2.5, b = −2.

As outlined in Principle 3, an agent’s risk aversion

level R should be dynamic with respect to its situation.

To achieve this, we utilise the agent’s plan library Π

and instantiate a set of pre-defined plans which alter

the R value when a set of preconditions is met.

We then define a CAN derivation rule describing this

behaviour. The plan’s preconditions represent states

considered to signal a change in the agent’s risk state.

This enables the agent to adopt an appropriate risk

aversion level based on its current situation.

We now extend the standard agent configuration

to include our risk aversion value R, producing a tuple

C = ⟨B, Π, Λ, Γ, H, R⟩. We illustrate this idea in

the context of the running scenario by considering

three robots simultaneously trying to reach the goal.

The number of robots left is treated as an indicator of

the risk aversion level remaining robots should adopt.

This assumes awareness of the failure/success status

of the other agents. Given three robots operating simultaneously,

the chances of reaching the goal successfully

with any given strategy are increased. Each

robot should therefore be more risk tolerant (lower R).

If a robot fails, however, the remaining robots should

become more risk-averse (higher R), to ensure a simi-

lar chance of success as before. The plans outlined in

Figure 5 increase an agent’s risk aversion level when

another agent has failed.

We now define a CAN derivation rule describing

the semantics of the risk() plan shown in Figure 5.

$$\frac{k \in \mathbb{R} \qquad R' = R + k}{\langle B, H, risk(+k), R\rangle \longrightarrow \langle B, H, nil, R'\rangle}\ \mathit{risk}$$

The event robot_failed indicates to surviving agents that another robot has failed. Depending on the number of robots left (comprising the plan’s context), the risk level is adjusted accordingly and the beliefs about the number of robots remaining are updated.
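The effect of the risk rule and of a robot_failed plan can be sketched in Python as follows. This is only an illustration of the update R′ = R + k and of the belief change: the `Config` class, the tuple encoding of beliefs, the initial R and the adjustment values are all hypothetical and are not the values used in Figure 5 or in the evaluation.

```python
from dataclasses import dataclass, field


@dataclass
class Config:
    """A pared-down agent configuration: beliefs, history and R."""
    beliefs: set = field(default_factory=set)
    history: list = field(default_factory=list)
    risk_aversion: float = 0.0


def risk(config, k):
    """The risk rule: replace R by R' = R + k."""
    config.risk_aversion += k
    return config


def on_robot_failed(config, adjustments):
    """Handle a robot_failed event using the first applicable plan.

    `adjustments` maps a robots_left count (the plan context) to the
    hypothetical value k passed to risk().
    """
    for left, k in adjustments.items():
        belief = ("robots_left", left)
        if belief in config.beliefs:  # plan context holds
            risk(config, k)
            config.beliefs.remove(belief)
            config.beliefs.add(("robots_left", left - 1))
            break
    return config


cfg = Config(beliefs={("robots_left", 3)}, risk_aversion=1.0)
on_robot_failed(cfg, {3: 0.5, 2: 1.0})
```

After the event, the configuration holds the belief that two robots are left and R has moved from 1.0 to 1.5; a second robot_failed event would apply the adjustment associated with two remaining robots.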

Figure 6: Different levels of risk aversion for a single agent.

5 EVALUATION

We first demonstrate that raising an agent’s risk aver-

sion level will indeed cause it to take less risky actions

and, by extension, have a greater probability of suc-

cessfully reaching its goal. We illustrate this using the

running example. We assign rewards of 100 and -100

for reaching the goal and falling into the pits, respectively. Negative

rewards (costs) are assigned to actions based on

how much time they will take to carry out, with higher

costs for actions reflecting longer paths.

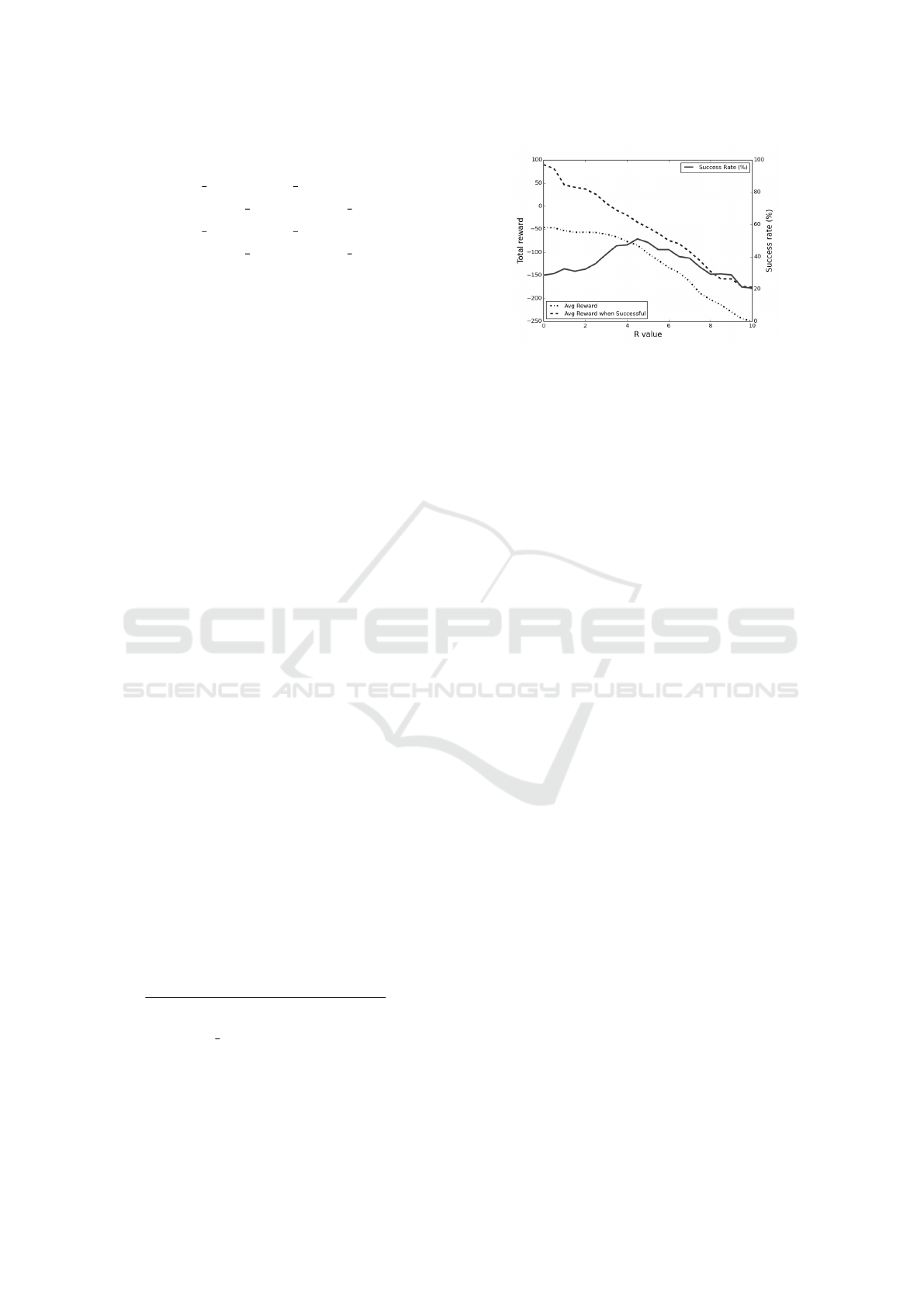

The graph in Figure 6 shows the effects of in-

creasing R values on the scenario outcome. For each

setting of R, the scenario is run through to comple-

tion (either succeeding or failing) 1000 times. As

can be seen, the probability of success initially increases

(at the cost of diminishing overall reward) as

R is increased, showing that increasing risk awareness

causes the agent to select less risky paths over those

with greater expected utility. However, probability of

success only increases up to a point. Further bias to-

ward risk aversion causes the agent to be overly cau-

tious and not progress toward the goal, leading to both

diminishing total reward and likelihood of success.
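The three regimes described above (risk-seeking, cautious, and overly cautious) can be reproduced with a toy selection rule. The sketch below is not the planner evaluated in this paper: it assumes a simple linear penalty, score = expected utility - R * risk, and the option names and numbers are hypothetical.

```python
# Each option maps to (expected utility, risk estimate); values are hypothetical.
OPTIONS = {
    "short_path": (60.0, 30.0),   # high reward, passes close to the pits
    "long_path": (40.0, 5.0),     # lower reward, much safer
    "stay_put": (-10.0, 0.0),     # no progress, no risk
}


def choose(options: dict, R: float) -> str:
    """Pick the option maximising expected utility minus R times risk.

    The linear penalty is an assumption made for illustration only.
    """
    return max(options, key=lambda name: options[name][0] - R * options[name][1])


if __name__ == "__main__":
    for R in (0.0, 1.0, 20.0):
        print(R, choose(OPTIONS, R))
```

With these numbers, the agent takes the short path at R = 0, switches to the long path at R = 1, and stops making progress at R = 20, mirroring the rise and subsequent fall in success probability as R grows.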

To observe the benefits of situation-dependent risk

awareness, we run the scenario with three agents, one

of which must reach the destination for the mission to

succeed. For comparison, we first run the scenario

with various static R values. For these agents, the

risk aversion level remains the same regardless of the

number of robots remaining. We then consider two

sets of dynamic R values with R changing depend-

ing on the number of robots remaining. Their risk

aversion level is therefore reactive to changes in the

environment. The results are shown in Figure 7. The

dynamic values correspond to plans $p_1$ and $p_2$, respectively,

as per Figure 5. The dynamic R values show an

increased likelihood of success with only a slightly re-

duced total reward compared to a low, static risk aver-

sion level such as R = 1.

ICAART 2016 - 8th International Conference on Agents and Artificial Intelligence


Figure 7: Static/dynamic R values in agent collaboration.

6 CONCLUSIONS

The MARAB algorithm (Galichet et al., 2014) is

a risk-aware multi-armed bandit algorithm. It pro-

vides an alternative to the UCB1 selection formula

and treats the conditional value at risk as the mea-

sure of branch quality. This approach can deal with

risk, but does not offer any decision making aspect.

Other approaches, such as (Liu and Koenig, 2008),

solve MDPs in a risk sensitive manner, while tak-

ing resource levels into consideration. This is done

by making use of non-linear utility functions

known as “one-switch” utility functions. However,

this approach computes offline policies, and thus

cannot readily be integrated with the highly dynamic

framework of BDI.

In this paper we presented a novel approach to cal-

culate risk alongside utility in popular MCTS algo-

rithms. We showed how such an online planner can be

integrated with a BDI agent. This integration allows

an autonomous agent to reason about its risk tolerance

level based on its current beliefs (e.g. the availability

of resources) and dynamically adjust it. It also allows

such an agent to react to unforeseen events, an

approach impossible in BDI agents that only use pre-

defined plans. Furthermore, our proposed framework

agrees with the principles as outlined in Section 1, en-

abling an agent to act appropriately in high-stakes en-

vironments. Experimental results underpin our theo-

retical contributions and show that taking risk dynam-

ically into account can lead to higher success rates

with only minimal reductions in utility compared to

agents with static risk aversion levels.

ACKNOWLEDGEMENTS

We would like to thank Carles Sierra, Lluís Godo,

and Jianbing Ma for their inspiring discussions and

comments. This work is partially funded by EPSRC

PACES project (Ref: EP/J012149/1).

REFERENCES

Auer, P., Cesa-Bianchi, N., and Fischer, P. (2002). Finite-

time analysis of the multiarmed bandit problem. ML,

47(2-3):235–256.

Bauters, K., Liu, W., Hong, J., Sierra, C., and Godo, L.

(2014). CAN(PLAN)+: Extending the operational se-

mantics of the BDI architecture to deal with uncertain

information. In Proc. of UAI’14, pages 52–61.

Bratman, M. (1987). Intention, Plans, and Practical Rea-

son. Harvard University Press.

Browne, C. B., Powley, E., Whitehouse, D., Lucas, S. M.,

Cowling, P. I., Rohlfshagen, P., Tavener, S., Perez, D.,

Samothrakis, S., and Colton, S. (2012). A survey of

Monte Carlo tree search methods. IEEE Transactions

on comp. intell. and AI in games, 4(1):1–43.

Chen, Y., Bauters, K., Liu, W., Hong, J., McAreavey,

K., Sierra, C., and Godo, L. (2014). AgentSpeak+:

AgentSpeak with probabilistic planning. In Proc. of

CIMA’14, pages 15–20.

Dastani, M. (2008). 2APL: a practical agent programming

language. JAAMAS, 16(3).

Galichet, N., Sebag, M., and Teytaud, O. (2014). Explo-

ration vs exploitation vs safety: Risk-averse multi-

armed bandits. JMLR: Workshop and Conference Pro-

ceedings, 29:245–260.

Johansen, I. L. and Rausand, M. (2014). Foundations and

choice of risk metrics. Safety science, 62:386–399.

Keller, T. and Eyerich, P. (2012). PROST: Probabilistic

planning based on UCT. In Proc. of ICAPS.

Kocsis, L. and Szepesvári, C. (2006). Bandit based Monte-

Carlo planning. In Proc. of ECML’06, pages 282–293.

Liu, Y. and Koenig, S. (2008). An exact algorithm for

solving MDPs under risk-sensitive planning objec-

tives with one-switch utility functions. In Proc. of AA-

MAS’08, pages 453–460.

Rao, A. S. (1996). AgentSpeak (L): BDI agents speak out

in a logical computable language. In Agents Breaking

Away, pages 42–55. Springer.

Rao, A. S. and Georgeff, M. P. (1991). Modeling rational

agents within a BDI-architecture. KR, 91:473–484.

Sardina, S., de Silva, L., and Padgham, L. (2006). Hierar-

chical planning in BDI agent programming languages:

A formal approach. In Proc. of AAMAS’06, pages

1001–1008.

Welford, B. (1962). Note on a method for calculating cor-

rected sums of squares and products. Technometrics,

4(3):419–420.
