A Control Cycle for the Automatic Assisted Positioning of Auscultation Sensors

Julio Cesar Bellido, Giuseppe De Pietro and Giovanna Sannino

Institute of High Performance Computing and Networking, CNR, Via Pietro Castellino 111, Naples, Italy

Keywords:

Automatic Assisted Sensor Positioning, Real-time Signal Processing, Auscultation, Positioning Logic,

Phonocardiography Sensor Positioning.

Abstract:

The correct positioning of wearable biomedical sensors is crucial in the use of any automatic measurement system. Inaccurate positioning can compromise the quality of the acquired vital waveforms, such as phonocardiograms, and can render ineffective any healthcare application that uses wearable sensors. To solve this issue, this paper proposes an innovative control cycle to assist patients during the positioning of an auscultation sensor, a digital stethoscope, for a healthcare monitoring application. The control cycle runs on a user-friendly app and, through the use of a smartphone camera, suggests the auscultation sites by overlaying them on the active camera view. In this way, the patient receives real-time feedback to ensure the correct positioning of the sensor.

1 INTRODUCTION

The increasing availability of wearable technological

resources, computationally competitive and at a mod-

erate cost, makes the realization of mobile computer-

aided analysis and diagnosis systems more and more

possible.

Biomedical sensors, integrated both in commer-

cial and prototypal monitoring solutions, are com-

monly used in a lot of telemedicine applications

in heterogeneous application domains (Patel et al.,

2012). The growth of technology in integrated cir-

cuits manufacturing has brought huge innovations in

embedded and portable sensors and has opened new

interest in emerging application areas like ubiqui-

tous computing and pervasive monitoring (Wickra-

masinghe, 2013). Examples can be found in sev-

eral scientific papers, such as (Black et al., 2004) and

(Hayes et al., 2007).

Due to their specific characteristics, healthcare

systems, for continuous and non-invasive monitoring

of vital parameters and biomedical signals, have con-

solidated their effectiveness through the use of wear-

able sensors and through their integration with Health

Information Systems (HIS). Because these sensors can also acquire data during daily activities, they are highly flexible in the realization of clinical applications for various patient profiles, according to the individuals' different characteristics and lifestyles.

In general, during the use of wearable sensors,

such as the auscultation sensors which we are going

to discuss, their accurate positioning is an indispens-

able operating condition to ensure a high fidelity of

the acquired signal and, consequently, to guarantee

a high-quality analysis. Accordingly, any automatic

measuring system that makes use of wearable sensors

must take into account this consideration as an impor-

tant requirement. In fact, the quality of a biomedical

measurement depends both on the sensor sensitivity

and also, principally, on how the patient applies the

sensor.

In the case of a self-monitoring system, it becomes crucial to consider a control cycle that helps the patient during the measurements by suggesting the correct positions of the auscultation sites. Hence, this research study focuses on the design of an intelligent control cycle to assist the patient before starting the measurements and during the signal acquisitions.

This control cycle, embedded in a mobile real-

time auscultation system, helps the patient during

his/her self-auscultation session at his/her own home

without any support from physicians. He/she follows

the visual hints presented by the application to start

and to guide the health monitoring session. If there

is any active interaction with a remote physician, the

control cycle can help to set the proper conditions for a tele-auscultation service and respects the commitment of promoting patient autonomy in the safeguarding of the right to health.

Bellido, J., Pietro, G. and Sannino, G.
A Control Cycle for the Automatic Assisted Positioning of Auscultation Sensors.
In Proceedings of the International Conference on Information and Communication Technologies for Ageing Well and e-Health (ICT4AWE 2016), pages 53-60
ISBN: 978-989-758-180-9
Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

With these intentions, in order to promote

widespread checks for a large catchment area, we

have chosen to implement the control cycle on mo-

bile devices, like smartphones or PDAs, taking advan-

tage, as we are going to describe, of their hardware,

in terms of interaction and calculation resources.

In summary, the paper investigates the theoretical

and practical conditions, and proposes a control cy-

cle for the assisted positioning of wearable sensors,

in order to help the self-monitoring of vital functions

in any auscultation system.

In the rest of the paper, we introduce the background in section 2 and the state of the art, with its related works, in section 3. In section 4 we present the proposed control cycle, with details of the resources and the design choices made. Hints about its usability and considerations relating to improvements and performance evaluation are discussed in section 5, which also presents new ideas for future developments and perspectives. Finally, section 6 concludes the paper.

2 BACKGROUND

The positioning of sensors, like ECG electrodes or

auscultation sensors for heart or lung sounds monitor-

ing, is commonly supported by the medical staff who

assist the patient during the examination and guaran-

tee the accuracy of the monitoring session.

For a healthcare service remote from the presence

of clinical staff who can oversee the examination, for

example in a remote auscultation solution where the

patient is alone at his/her own home, it becomes nec-

essary to consider a supporting control cycle for the

sensor positioning that ensures the quality and the ac-

curacy of the acquired signals.

The problems related to a positioning logic are due to the fact that the position of the sensor at the time of the examination cannot be determined without a reference system with respect to which that position can be evaluated. Therefore, it is useful to employ an external system that observes the changes and tracks the position of the stethoscope over time. This observation sensor could be an image sensor embedded, for example, in the digital camera of a smartphone.

The idea is to suggest to the patient the ausculta-

tion sites by displaying to him/her, overlapping with

the current active camera view, a map of the Regions

Of Interest (ROIs) dynamically calculated with com-

puter vision algorithms. The control cycle will be

able to recognize the body shape of the patient with a

shape matching algorithm using both chest and back

anatomical models, and a shape transformation in or-

der to redraw the ROIs from the model.

The following section explores the computer vi-

sion concepts used in heterogeneous environments

that we have selected to solve the presented prob-

lem. We have inspected several possible solutions to

choose the most appropriate approach.

3 STATE OF THE ART

Over the last few years, thanks to the theoretical con-

solidation of digital signal processing techniques and

their implementations, there has been a growing in-

terest in emerging trends that include the interpreta-

tion of optical and radar images for earth monitoring,

character recognition in natural language (Pathak and

Singh, 2014), medical image processing for automatic

diagnosis, and motion tracking for surveillance sys-

tems. However, all these applications solve their tasks

by adopting domain-independent algorithms.

In (Thijs et al., 2007) a technique for positioning sensors for acquiring vital parameters of a subject is presented. The method uses a Doppler radar device to acquire a reference body signal representing the movement of an object within the subject's body; the position of the Doppler radar acquisition device is associated in a defined way with the position of the sensor, and the acquired reference body signal is stored by means of a data storage device.

In applications in which object recognition is re-

quired, the most common methods use edge-based

shape detection methods, or adopt a probabilistic ap-

proach to realize an adaptive shape selection.

(Yang et al., 2010) introduce a multi-scale shape

context descriptor to model human body shapes and

measure their similarity in different scales with re-

spect to a predefined human body prototype.

(Hu et al., 2006) present a color-based method for

torso detection in presence of different clothing and

cluttered backgrounds. It extracts the torso by using

a color probability model based on the analysis of the

dominant colors.

Another very interesting paper is (Kumpituck

et al., 2009) in which the authors present a stereo-

based vision system that is used to determine the

stethoscope location on the human body in a car-

diac auscultation tele-diagnostic system. The recon-

struction of the points in the three-dimensional space

has been achieved by using a knowledge of the camera calibration, image rectification, epipolar geometry, and disparity computation.

ICT4AWE 2016 - 2nd International Conference on Information and Communication Technologies for Ageing Well and e-Health

When there is a stream of moving images, the tar-

get tracking becomes more challenging. In this field,

the computing time plays a decisive role in the de-

tection process. To ensure the real-time property, it

is necessary to have fast algorithms that can return

results on time in order to monitor the environment

without any interruptions or delays.

In a visual surveillance service it may be useful

to detect, track and recognize, in real-time, moving

vehicles and pedestrians. Moving cameras capture a

changing background that complicates the foreground

detection. For this purpose, the paper (Li et al., 2012)

is very helpful. It presents a human shape descriptor

to detect and track human movements from moving

cameras.

In many systems, different open source projects

are supporting the programmer in developing the re-

quired solutions. For example, the Open Source Com-

puter Vision (OpenCV) library contains a set of com-

puter vision functions for digital image processing,

including edge detection algorithms, shape transfor-

mations, and face detection. A brief introduction to

the OpenCV can be found in (Culjak et al., 2012).

The OpenCV API offers the possibility of manipu-

lating the camera in real-time, to obtain a real-time vi-

sual feedback in order to monitor events and actions,

also on mobile platforms. Many scientific papers and

books are focusing on the development of systems for

both academic and commercial use by using this li-

brary. Additionally, our proposed supporting control

cycle uses OpenCV, which has helped us to solve the

shape recognition problem with its camera API. The

implementation uses the OpenCV4Android SDK.

4 THE PROPOSED CONTROL CYCLE

The proposed control cycle uses the hardware re-

sources of smartphones and tablets to support the pro-

cessing steps. The control cycle suggests the cor-

rect location of the auscultation sites through a visual

feedback system by using only the rear camera of the

mobile device, in the case of the back auscultation, or

only the front camera, in the case of the chest auscul-

tation.

The application draws the map of the ROIs over-

lapping with the current camera view with an adap-

tive control cycle that uses an edge detection tech-

nique and extracts the back/chest edge of the patient

by shaping a reference anatomical model on the de-

tected human back/chest.

To select the real human body edge, the control

cycle implements a similarity model based on corre-

lation measures with respect to the reference model.

Possible problems due to the noise introduced by the mobile device's camera sensor and by the discontinuity of the background have been taken into account and addressed through digital filters designed to reduce the spurious contributions that can interfere with the body edge detection.

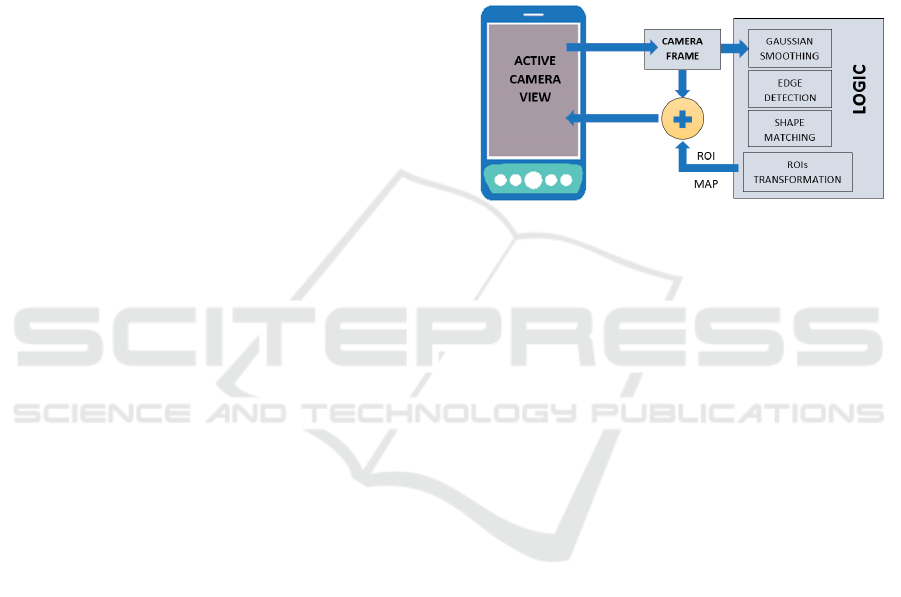

Figure 1 depicts a high-level schema illustrating how the proposed control cycle draws the ROIs on the active camera view.

Figure 1: High-level schema for drawing ROIs.

The processing chain uses the following steps, il-

lustrated in detail in the next sub-sections of the paper.

In summary, the proposed control cycle:

1. captures the raw camera frames and filters each

frame with a Gaussian smoothing filter;

2. uses an edge detector to extract the multiple edges

from the filtered frames;

3. chooses, from the various edges, the one corresponding to the real human body edge by applying a shape matching algorithm; and

4. draws the ROIs by adapting the information from the reference chest/back models to the detected human body edge.

The 4-step control cycle runs in an asynchronous

task and notifies its results to the main task for the

drawing of the ROIs. When the asynchronous task is

completed, the main task updates the display with the

information about the position of the calculated ROIs.
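The hand-off between the asynchronous task and the main task can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the Android implementation: `control_cycle` is a hypothetical placeholder for the four steps, the frame is a plain dictionary, and a `queue.Queue` stands in for the notification mechanism.

```python
import queue
import threading


def control_cycle(frame):
    """Hypothetical stand-in for the four processing steps.

    Returns a list of ROI centres; here a single made-up point derived
    from the frame size, only to have something to hand over.
    """
    return [(frame["width"] // 2, frame["height"] // 3)]


def worker(frame, results):
    # Runs off the main task and notifies it by posting the result.
    results.put(control_cycle(frame))


results = queue.Queue()
frame = {"width": 640, "height": 480}  # assumed frame description

thread = threading.Thread(target=worker, args=(frame, results))
thread.start()

# The main task picks up the ROI positions once they are available.
# A real user interface would poll or use a callback instead of blocking.
rois = results.get(timeout=5)
thread.join()
```

The design point is only that the heavy processing never runs on the task that updates the display; the main task receives finished ROI positions and redraws.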

From the implementation point of view, the proposed control cycle is able to work in real time, with a low computational cost, and is runnable on mobile devices with limited resources. The computationally more complex functions are written in native code, C++, and invoked on the Android platform through the Java Native Interface (JNI). For this purpose, the OpenCV library is useful in that it makes available a large set of ready-to-use computer vision functions written in C++.


4.1 The Gaussian Smoothing filter

Starting from the raw frame acquired by the mobile

device camera, the grey-scale converted frame is pro-

cessed with a Gaussian Smoothing filter in order to

reduce the background noise that is included in the

acquired image.

Moreover, due to the geometrical discontinuities

of objects behind the foreground subject, there may

be, in the output of the edge detector, multiple edges

belonging to the background of the image. These spu-

rious responses can introduce false positive and false

negative detections and for this reason, the design of

the filter must be realized with care to avoid, or at least

to reduce, these problems.

In fact, since no constraints are imposed on the usability of the application of which the control cycle is part, it is necessary to take into account the possibility of having a different background in each situation. This can add, at the output of the detector, unpredictable spurious edges that make the identification of the body edge, among all the detected edges, more complicated.

In the absence of a generally valid noise model, background noise removal becomes very difficult in practice due to the unpredictable variability of the background. The most suitable solution, rather than suppressing this spurious noise, is to preserve the useful information in the foreground, namely the chest/back area of the subject on which the control cycle has to map the regions of auscultation, the ROIs.

The filter performs an average of the pixels in the

image on a number of points fixed by the kernel size

parameter. The standard deviation of the filter defines

the shape of the impulse response and the weights of

the filtering mask.

In general, a digital image a[m, n] described in a

2D discrete space is derived from an analogue image

a(x,y) in a 2D continuous space through a sampling

process that is frequently referred to as digitization.

The 2D continuous image a(x,y) is divided into N

rows and M columns. The intersection of a row and

a column is termed a pixel. The value assigned to

the integer coordinates [m, n] with $m = 0, 1, 2, \ldots, M-1$ and $n = 0, 1, 2, \ldots, N-1$ is a[m, n].

Applying the Gaussian Smoothing filter, each out-

put pixel value is set to a weighted average of the

neighbouring pixels. The focal pixel receives the

heaviest weight (having the highest Gaussian value)

and neighbouring pixels receive smaller weights as

their distance from the original pixel increases. This

results in a blur that preserves boundaries and edges

better than other, more uniform blurring filters.

The equation of the Gaussian Smoothing filter in two dimensions is:

$$G(x, y) = \frac{1}{2\pi\sigma^{2}} \, e^{-\frac{x^{2} + y^{2}}{2\sigma^{2}}} \qquad (1)$$

where x is the distance from the origin in the

horizontal axis, y is the distance from the origin in

the vertical axis, and σ is the standard deviation of

the Gaussian distribution.

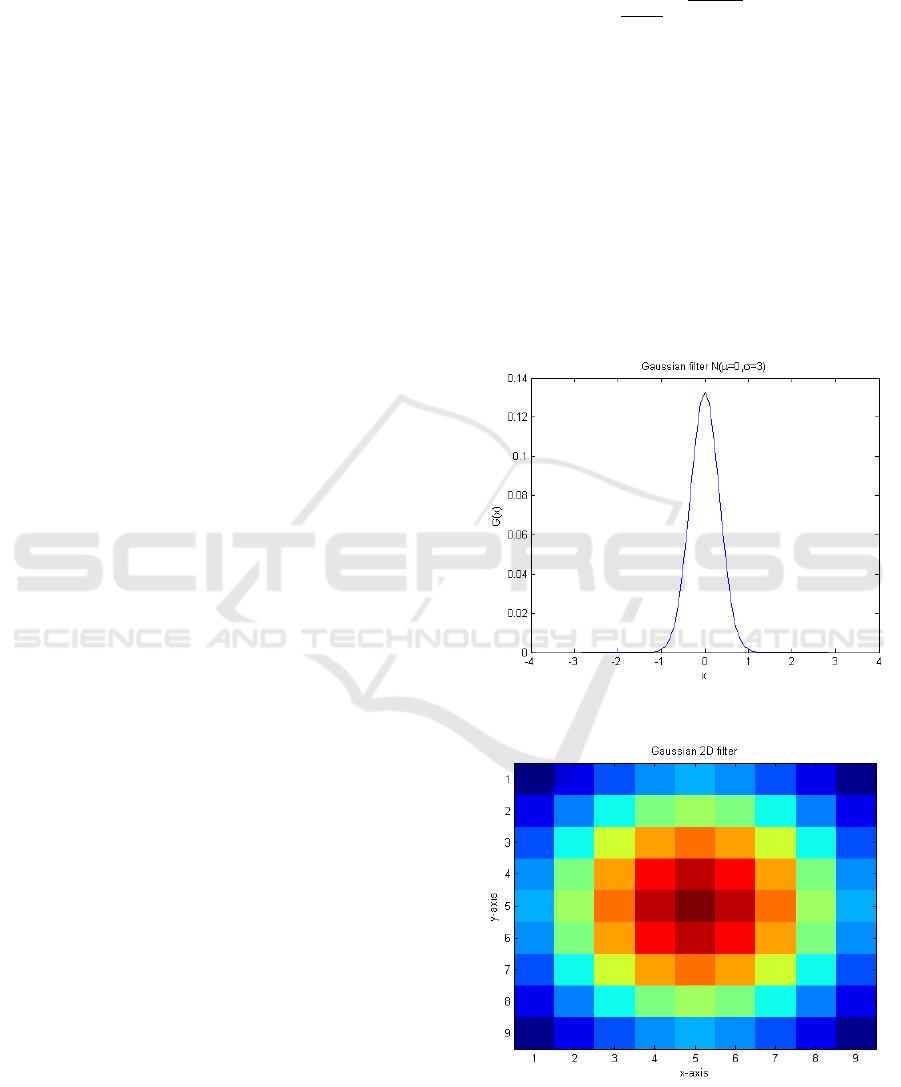

Our default filter parameters are a kernel size (K) of 9x9 pixels and a standard deviation (σ) of 3 pixels in both the x-axis and y-axis directions. In any case, the parameters are adjustable from the application interface.
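As an illustration of equation (1) with the default parameters, the filtering mask can be sampled as in the following sketch. It is written in plain Python rather than with the OpenCV routines used by the application, and the final normalization (weights summing to 1, so that the overall brightness is preserved) is an assumption of this sketch; the function name `gaussian_kernel` is hypothetical.

```python
import math


def gaussian_kernel(size=9, sigma=3.0):
    """Sample equation (1) on a size x size grid centred on the origin.

    The weights are then normalized to sum to 1 (an assumption of this
    sketch, so that filtering does not change the mean intensity).
    """
    if size % 2 == 0:
        raise ValueError("kernel size must be odd")
    half = size // 2
    two_sigma_sq = 2.0 * sigma * sigma
    kernel = [
        [
            math.exp(-(x * x + y * y) / two_sigma_sq) / (math.pi * two_sigma_sq)
            for x in range(-half, half + 1)
        ]
        for y in range(-half, half + 1)
    ]
    total = sum(sum(row) for row in kernel)
    return [[value / total for value in row] for row in kernel]


kernel = gaussian_kernel()  # 9x9 mask with sigma = 3
```

The central weight `kernel[4][4]` is the largest, and the weights decrease with the distance from the centre, as described for the focal pixel and its neighbours.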

Figures 2 and 3 show respectively the impulse re-

sponse of the Gaussian filter in the x-axis direction

and in the x-y image plane.

Figure 2: Normal distribution with µ = 0 and σ=3.

Figure 3: 2D Gaussian filter.


4.2 The Edge Detector

After the Gaussian filter, the smoothed frame is passed as input to the edge detector to extract the edges.

The detector uses the multi-stage Canny algorithm (Canny, 1986), well known in the literature as an optimal detector (Moon et al., 2002) due to its low error rate, good edge localization and minimal response.

The process of the Canny edge detection algorithm includes the following steps:

- Application of a Gaussian filter to smooth the im-

age in order to remove the noise;

- Finding the intensity gradient of the image;

- Application of a non-maxima suppression to get

rid of spurious responses to the edge detection;

- Application of a double threshold to determine the

potential edges;

- Application of a hysteresis threshold to suppress

the weak edges not connected to the strong edges.
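The last two steps of the list can be sketched as follows. This is a simplified illustration in plain Python, not the OpenCV implementation: it assumes that a gradient magnitude image is already available (non-maxima suppression is skipped), and the function name `hysteresis` and the sample values are hypothetical.

```python
from collections import deque


def hysteresis(magnitude, low, high):
    """Keep strong pixels (>= high) and weak pixels (>= low) that are
    8-connected, directly or through other weak pixels, to a strong one."""
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    pending = deque()

    # Double threshold: strong pixels are accepted immediately.
    for r in range(rows):
        for c in range(cols):
            if magnitude[r][c] >= high:
                edges[r][c] = True
                pending.append((r, c))

    # Hysteresis: grow the edges through neighbouring weak pixels.
    while pending:
        r, c = pending.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc]
                        and magnitude[nr][nc] >= low):
                    edges[nr][nc] = True
                    pending.append((nr, nc))
    return edges


magnitude = [
    [0, 0, 0, 0, 0],
    [90, 40, 40, 0, 0],
    [0, 0, 0, 0, 40],
    [0, 0, 0, 0, 0],
]
edges = hysteresis(magnitude, low=30, high=80)
```

In this example the two weak pixels chained to the strong pixel in the second row are kept, while the isolated weak pixel in the third row is suppressed.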

However, OpenCV implements the Canny algorithm without the Gaussian filter. The Gaussian Smoothing filter introduced in subsection 4.1 therefore covers the first step of the Canny implementation.

The C++ procedure receives as input the first and

second thresholds for the hysteresis, the aperture size

for the Sobel operator (Matthews, 2002) and a flag to

calculate the image gradient magnitude as an L1 or

L2 norm.

We calculate the gradient magnitude as an L2 norm, without any approximations, with two Sobel convolution kernels of 3x3 pixels and two hysteresis thresholds set in the application preferences.
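The difference between the two norms can be made concrete with a small sketch. It applies the two standard 3x3 Sobel masks at a single pixel of a toy image, in plain Python; the helper names `sobel_at` and `magnitude` and the sample image are assumptions of this illustration, and the masks are applied as a correlation (no kernel flip), which only affects the sign of the responses.

```python
import math

# Standard 3x3 Sobel masks for the horizontal and vertical derivatives.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_at(image, r, c):
    """Return (gx, gy) at an interior pixel (r, c) of a grey-scale image."""
    gx = sum(SOBEL_X[i][j] * image[r - 1 + i][c - 1 + j]
             for i in range(3) for j in range(3))
    gy = sum(SOBEL_Y[i][j] * image[r - 1 + i][c - 1 + j]
             for i in range(3) for j in range(3))
    return gx, gy


def magnitude(gx, gy, l2=True):
    """L2 norm sqrt(gx^2 + gy^2), or the cheaper L1 norm |gx| + |gy|."""
    return math.hypot(gx, gy) if l2 else abs(gx) + abs(gy)


# Toy image with a diagonal step edge.
image = [
    [0, 0, 0, 10],
    [0, 0, 10, 10],
    [0, 10, 10, 10],
    [10, 10, 10, 10],
]
gx, gy = sobel_at(image, 1, 1)
l2_value = magnitude(gx, gy, l2=True)
l1_value = magnitude(gx, gy, l2=False)
```

On a diagonal edge such as this one the L1 norm overestimates the L2 magnitude by a factor of about 1.41, which is why the same hysteresis thresholds do not behave identically under the two settings.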

At the output of the edge detector, there is a grey-scale image with multiple edges and, among these, the real body edge. The extraction of this single edge is performed by the shape matching algorithm.

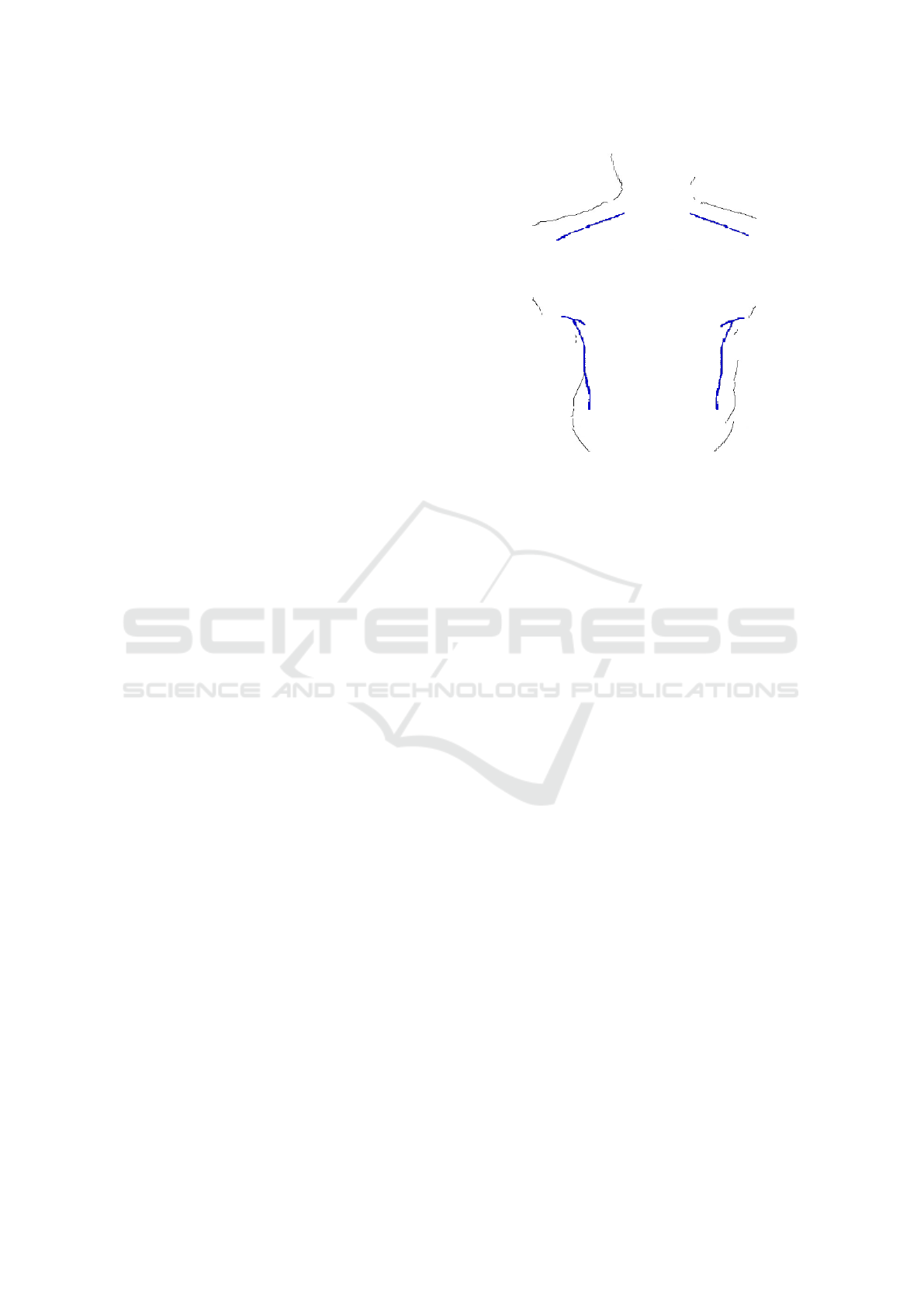

4.3 The Shape Matching Algorithm

At the output of the edge detector we can observe an

image in which all the false positives are external to

the body region and scattered in the background. It

follows that the extraction of the edge corresponding

to the human body shape can be performed by se-

lecting, among all the edges present, the one that ful-

fils a condition of similarity with respect to a model

taken as a reference and placed in the foreground. The

matching works from the inside of the foreground to-

wards the outside of the background.

Figure 4: An example of an image obtained from the edge detector: the static reference anatomical model is visible in blue.

Several template-based matching techniques to find similarities among shapes have been explored in the literature. (Mahalakshmi et al., 2012) gives an outline of the general classifications of template or image matching approaches, which are the Template or Area-based approaches and the Feature-based approaches.

Nevertheless, in the current implementation of our

control cycle, the similarity is estimated with a mini-

mum distance criterion: among all edges, we choose

the one for which the pixel-to-pixel distance is the

minimum from a reference shape, in blue in figure 4.
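A minimum distance criterion of this kind can be sketched as follows. The exact distance measure is not detailed here, so this illustration assumes the mean distance from each edge pixel to its nearest reference pixel; the function names and the sample point lists are hypothetical.

```python
import math


def mean_distance(edge, reference):
    """Average distance from each edge pixel to its nearest reference pixel
    (an assumed measure; other pixel-to-pixel distances are possible)."""
    return sum(min(math.dist(p, q) for q in reference) for p in edge) / len(edge)


def select_body_edge(edges, reference):
    """Return the candidate edge closest to the reference shape."""
    return min(edges, key=lambda edge: mean_distance(edge, reference))


# Each edge is a list of (x, y) pixel coordinates.
reference = [(x, 10) for x in range(5)]   # reference shape
near = [(x, 11) for x in range(5)]        # candidate close to the model
far = [(x, 30) for x in range(5)]         # background edge

best = select_body_edge([far, near], reference)
```

Here `best` is the `near` candidate, whose mean distance from the reference is one pixel. The brute-force nearest-pixel search is quadratic in the number of points; it is acceptable for a sketch, while a distance transform of the reference shape would be the usual way to speed it up.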

At the output of the shape-matching algorithm, we have the unique edge that represents the chest/back shape, within which the auscultation sites are drawn.

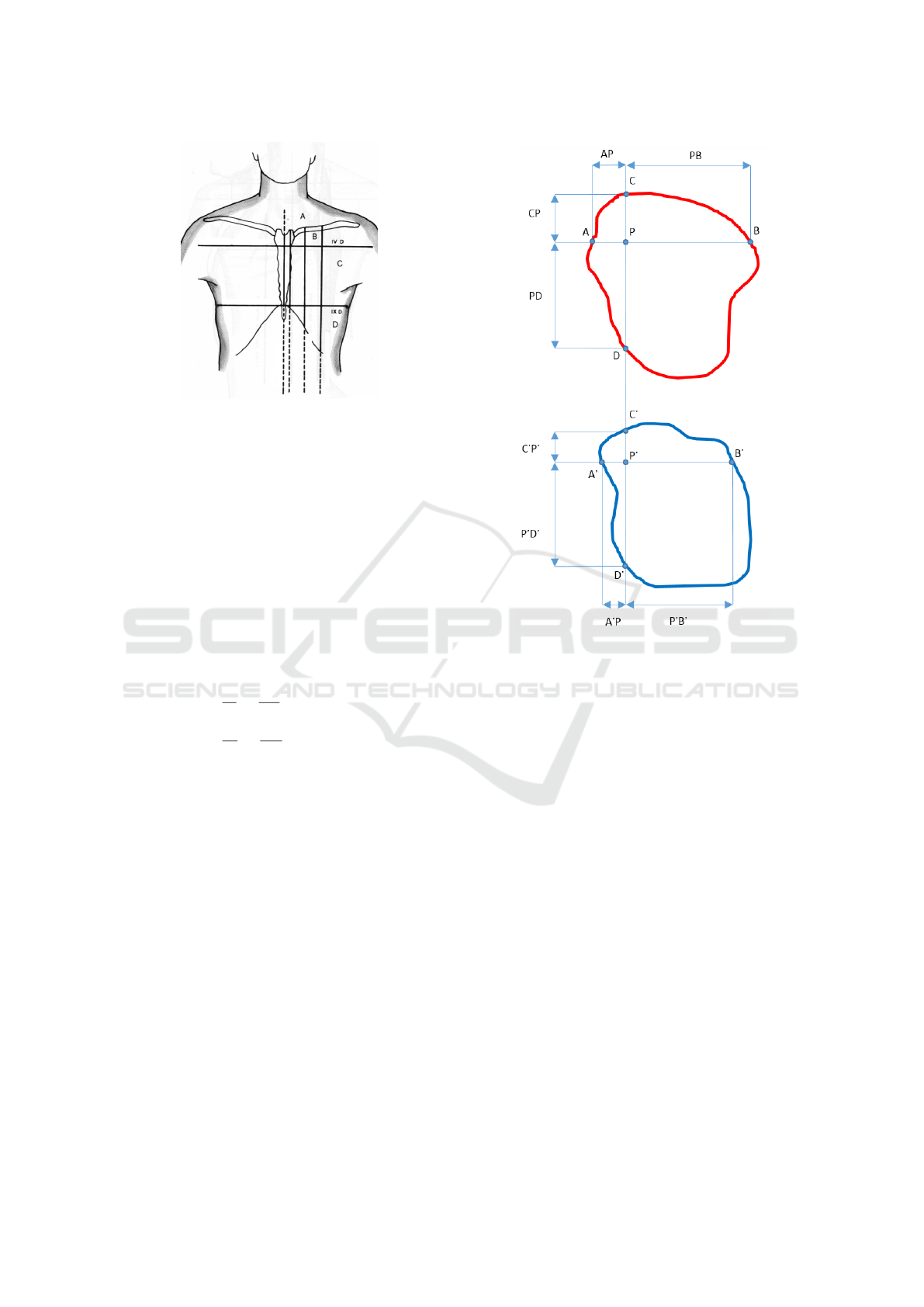

4.4 Drawing the ROIs

The positions where the ROIs are drawn are dynam-

ically calculated on the basis of geometric informa-

tion acquired from a reference anatomical model, a

static information source, and adapted to the specific

body shape of the patient, that is a dynamic informa-

tion source.

An example of a static anatomical chest table is

shown in figure 5.

We determine the dynamic map of ROIs associated with the dynamic edge of the human body by

transforming the ROIs found in the static map of the

model. The control cycle inspects the static model

and looks for the ROIs. Then, it returns the central

point of each ROI and applies a coordinate transfor-

mation to map the ROI coordinates from the model

reference system to the human body edge detected.


Figure 5: Reference body shape with ROIs.

The ideal transformation is a warping that calcu-

lates the new position of each pixel of the body edge

for each pixel of the reference shape model before

transforming the ROI coordinates. Due to its high

computing time, we prefer to adopt an approximation

by using two linear transformations working in cas-

cade.

Therefore, the coordinate transformation, math-

ematically defined by equations (2) and (3), moves

each point from the reference model to the human

body edge by redrawing each ROI coordinate as

shown in figure 6 that illustrates the geometry of the

transformation.

$$\frac{AP}{PB} = \frac{A'P'}{P'B'} \qquad (2)$$

$$\frac{CP}{PD} = \frac{C'P'}{P'D'} \qquad (3)$$

The new coordinates of point P, the center of the ROI, are calculated as follows: first, the x-coordinate of the point is calculated with equation (2), and then the y-coordinate is calculated with equation (3) using the x-coordinate found through equation (2). The new coordinates of point P' are the result of geometric translations in the x-y plane that rearrange the points of the ROIs by preserving the proportions between the two shapes.
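The two proportions can be turned into explicit coordinates as in the sketch below. It assumes, since the geometry is only given in the figure, that A and B are the points where the horizontal line through P meets the reference shape, that C and D are the corresponding points on the vertical line, and that the primed points are their counterparts on the detected edge. All function and parameter names are hypothetical.

```python
def map_coordinate(p, a, b, a_new, b_new):
    """Place p' between a_new and b_new so that AP/PB = A'P'/P'B'."""
    t = (p - a) / (b - a)          # relative position of P along AB
    return a_new + t * (b_new - a_new)


def map_roi_center(p, model_ab, model_cd, edge_ab, edge_cd_at):
    """Map an ROI centre from the reference model to the detected edge.

    model_ab / edge_ab: x-coordinates of (A, B) and (A', B').
    model_cd: y-coordinates of (C, D).
    edge_cd_at: callable giving the y-coordinates of (C', D') on the
                detected edge at a given x (assumed to be available).
    """
    # Proportion along the horizontal direction gives the new x.
    x_new = map_coordinate(p[0], model_ab[0], model_ab[1],
                           edge_ab[0], edge_ab[1])
    # Proportion along the vertical direction, evaluated at the new x.
    c_new, d_new = edge_cd_at(x_new)
    y_new = map_coordinate(p[1], model_cd[0], model_cd[1], c_new, d_new)
    return (x_new, y_new)


p_new = map_roi_center(
    p=(25.0, 50.0),
    model_ab=(0.0, 100.0),
    model_cd=(0.0, 200.0),
    edge_ab=(10.0, 210.0),
    edge_cd_at=lambda x: (20.0, 420.0),  # toy edge: same bounds for any x
)
```

With these toy values P lies a quarter of the way along both AB and CD, so P' lies a quarter of the way along A'B' and C'D', at (60, 120).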

5 CONSIDERATIONS AND FUTURE WORKS

This section gives a brief description of the proposed control cycle from the patient's point of view during a self-auscultation session of his/her chest, and introduces new ideas for future developments.

Figure 6: Point transformation from model to edge.

Once the application is running, the patient positions himself/herself in front of the smartphone camera and can see his/her image in the active view on the mobile device display. He/she can choose to activate the assisted sensor positioning service and, after a few fractions of a second (the exact waiting time depends on the computing power of the smartphone used), he/she sees the suggestions about the ROIs overlaid on the active view.

As we have already described, the control cycle runs in an asynchronous task which notifies the main task with the positions of the ROIs after a variable time. We recommend that the user does not move during this time.

The proposed control cycle is based on shape matching with a static shape and a dynamic adjustment of the ROIs through two linear transformations. A fully dynamic control cycle, which does not require any constraint on the position of the user with respect to the static shape model, would replace the static matching block with a dynamic one based on face detection. With dynamic shape positioning, the model will be shifted along the vertical axis in the plane of the camera view according to the shoulder level estimated from the face detection results. With this new control cycle, the model dynamically fits its position to the current position of


the user.

Possible improvements of the presented control cycle could involve the filtering and the shape matching algorithms. One possible enhancement is the adoption of spatially-variant filters to manipulate the selective properties of the smoothing filter for noise reduction. In this case, the coefficients of the filtering mask depend on the location of the pixels in the input image. A theoretical formulation that addresses this issue using anisotropic diffusion is described in (Perona and Malik, 1990).

Finally, to improve the selectivity of the shape matching algorithm, we are considering designing specific static models that take into account the gender and the different body types of the patients.

As regards the performance evaluation of the supporting control cycle, we are evaluating two different criteria. First, we are measuring the accuracy of the positioning by checking the quality of the acquired waveforms in various tests. In relation to this aspect, we are inspecting the regularity of characteristic parameters that can be calculated from the acquired waveform, the phonocardiogram, such as the average frequency and the information content of its power spectrum. Secondly, we are considering the usability of our supporting control cycle in terms of the time saved for each acquisition.
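The two waveform parameters mentioned above can be illustrated with a short sketch. The precise definitions are not given here, so this example assumes that the average frequency is the power-weighted mean frequency and that the information content is the Shannon entropy of the normalized power spectrum; the function names and the toy spectra are hypothetical.

```python
import math


def mean_frequency(freqs, power):
    """Power-weighted mean frequency of a spectrum (assumed definition)."""
    total = sum(power)
    return sum(f * p for f, p in zip(freqs, power)) / total


def spectral_entropy(power):
    """Shannon entropy, in bits, of the normalized power spectrum
    (assumed definition of the information content)."""
    total = sum(power)
    probabilities = [p / total for p in power if p > 0]
    return -sum(q * math.log2(q) for q in probabilities)


freqs = [25.0, 50.0, 75.0, 100.0]   # frequency bins in Hz (toy values)
flat = [1.0, 1.0, 1.0, 1.0]         # power spread evenly over the bins
peak = [0.0, 4.0, 0.0, 0.0]         # power concentrated in one bin

flat_mean, flat_entropy = mean_frequency(freqs, flat), spectral_entropy(flat)
peak_mean, peak_entropy = mean_frequency(freqs, peak), spectral_entropy(peak)
```

A spectrum concentrated in a single bin has zero entropy, while a flat spectrum over four bins reaches the maximum of 2 bits; comparing such values across repeated acquisitions is one way to inspect the regularity of the recorded signal.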

The usability test, together with the accuracy test on the captured waveform, will give us important feedback on how well our innovative control cycle solves the sensor positioning issue in an automatic way.

6 CONCLUSIONS

Telemedicine, as a support for improving a patient's quality of life, makes use of new solutions for non-invasive and continuous monitoring with wearable sensors.

The characteristics of these sensors help us to monitor the health of the patient by collecting more data during the day and the night. Behaviours and habits during the illness can thus be tracked and used to build a more accurate clinical profile for the patient. Additionally, the real-time acquisition of clinical data helps to build new Electronic Health Records of patients and makes it possible to realize automatic analysis and diagnosis systems to promptly assist them.

As a support for the monitoring, this paper has introduced a new control cycle to meet the operational requirements of wearable sensors in an auscultation system. The control cycle assists the patient in selecting the right position of the auscultation sites on his/her own chest or back before starting the signal acquisition.

With the use of a mobile device, such as a smartphone or a PDA, and its camera, the presented control cycle maps the Regions Of Interest, corresponding to the positions of the auscultation sites, on the active camera view. These visual hints are shown in real time on the smartphone display, so the patient knows where he/she needs to place the stethoscope. This is the initial condition to start a session to monitor the heart sounds, with the front camera in self-monitoring mode, or to monitor the lung sounds, with the back camera.

From the perspective of a medical tele-consulting service, the positioning logic will be part of the prerequisites of a real-time tele-auscultation application (Bellido et al., 2015).

The solution will extend the control cycle of its intelligent layer with the smart camera interaction proposed here, in order to give positioning feedback that helps the patients.

Accordingly, at his/her own home, the patient will be able to enjoy easier healthcare, in ways and at times that meet his/her own requirements, in autonomy and independence.

REFERENCES

Bellido, J. C., De Pietro, G., and Sannino, G. (2015). A

prototype of a real-time solution on mobile devices for

heart tele-auscultation. In Proceedings of the 8th ACM

International Conference on PErvasive Technologies

Related to Assistive Environments, page 30. ACM.

Black, J., Segmuller, W., Cohen, N., Leiba, B., Misra, A.,

Ebling, M., and Stern, E. (2004). Pervasive computing

in health care: Smart spaces and enterprise informa-

tion systems. In MobiSys 2004 Workshop on Context

Awareness.

Canny, J. (1986). A computational approach to edge detec-

tion. Pattern Analysis and Machine Intelligence, IEEE

Transactions on, (6):679–698.

Culjak, I., Abram, D., Pribanic, T., Dzapo, H., and Cifrek,

M. (2012). A brief introduction to opencv. In MIPRO,

2012 Proceedings of the 35th International Conven-

tion, pages 1725–1730. IEEE.

Hayes, T. L., Pavel, M., Larimer, N., Tsay, I. A., Nutt, J.,

and Adami, A. G. (2007). Distributed healthcare: Si-

multaneous assessment of multiple individuals. IEEE

Pervasive Computing, (1):36–43.

Hu, Z., Lin, X., and Yan, H. (2006). Torso detection in static

images. In Signal Processing, 2006 8th International

Conference on, volume 3. IEEE.

Kumpituck, S., Kongprawechnon, W., Kondo, T.,

Nilkhamhang, I., Tungpimolrut, K., and Nishihara, A.

(2009). Stereo based vision system for cardiac auscul-


tation tele-diagnostic system. In ICCAS-SICE, 2009,

pages 4015–4019. IEEE.

Li, C., Xu, Y., Shi, X., and Wu, S. (2012). Human

body detection and tracking from moving cameras.

In Biomedical Engineering and Informatics (BMEI),

2012 5th International Conference on, pages 278–

281.

Mahalakshmi, T., Muthaiah, R., Swaminathan, P., and

Nadu, T. (2012). Review article: an overview of tem-

plate matching technique in image processing. Re-

search Journal of Applied Sciences, Engineering and

Technology, 4(24):5469–5473.

Matthews, J. (2002). An introduction to edge detection: The

sobel edge detector.

Moon, H., Chellappa, R., and Rosenfeld, A. (2002). Op-

timal edge-based shape detection. Image Processing,

IEEE Transactions on, 11(11):1209–1227.

Patel, S., Park, H., Bonato, P., Chan, L., Rodgers, M., et al.

(2012). A review of wearable sensors and systems

with application in rehabilitation. J Neuroeng Rehabil,

9(12):1–17.

Pathak, M. and Singh, S. (2014). Implications and emerging

trends in digital image processing. International jour-

nal of computer science and information technologies,

5.

Perona, P. and Malik, J. (1990). Scale-space and edge de-

tection using anisotropic diffusion. Pattern Analy-

sis and Machine Intelligence, IEEE Transactions on,

12(7):629–639.

Thijs, J. A. J., Such, O., and Muehlsteff, J. (2007). System

and method of positioning a sensor for acquiring a vi-

tal parameter of a subject. US Patent App. 12/376,319.

Wickramasinghe, N. (2013). Pervasive computing and

healthcare. In Pervasive Health Knowledge Manage-

ment, pages 7–13. Springer.

Yang, F., Lu, Y., and Li, B. (2010). Human body detection

using multi-scale shape contexts. In Bioinformatics

and Biomedical Engineering (iCBBE), 2010 4th In-

ternational Conference on, pages 1–4.
