Customized Teaching Scenarios for Smartphones

in University Lecture Settings

Experiences with Several Teaching Scenarios using the MobileQuiz2

Daniel Schön¹, Melanie Klinger², Stephan Kopf¹ and Wolfgang Effelsberg¹

¹ Department of Computer Science IV, University of Mannheim, A5 6, Mannheim, Germany

² Educational Development, University of Mannheim, Castle, Mannheim, Germany

Keywords:

Audience Response System, ARS Evaluation, Peer Instruction, Lecture Feedback, Mobile Devices.

Abstract:

Many teachers use Audience Response Systems (ARS) in lectures to re-activate their listeners and to gain insight into students’ knowledge of the current lecture contents. Plenty of such applications have been developed in recent years. They support a wide variety of teaching scenarios on the students’ smartphones, including quizzes, lecture feedback and dynamic message boards. We developed a novel application based on an abstract model to enable this variety of customizable teaching scenarios within one application. When we presented the application to a first group of lecturers, the responses were positive, and several new teaching scenarios were created and used. This paper presents first experiences with a variety of customizable teaching scenarios, the special opportunities and challenges, and the opinions of lecturers and students, which we collected in a survey at the end of the semester.

1 INTRODUCTION

By now, Audience Response Systems (ARS) are commonly used to increase interactivity, activate the audience and get realistic feedback on the students’ knowledge. Besides specifically constructed hardware clickers, the first implementations were written for PDAs and handheld computers. With today’s availability of smartphones among students, the usage of ARSs within classroom settings has become very popular. The technology has also matured to the point that a simple ARS can be programmed by a computer science student within a few months. Additionally, the demand for response systems has grown significantly in recent years due to the perceived didactic benefits. Most of these systems offer comparable features, especially the opportunity to create test questions or self-assessment scenarios. Our own system, the MobileQuiz, was developed in 2012 and directly integrated into the university’s learning management system. It used the students’ smartphones as clicker devices and was adopted by many lecturers, mostly for knowledge quizzes (Schön et al., 2012a).

With the increasing popularity, additional require-

ments were brought to our attention. Besides sim-

ple feedback and self-assessment scenarios, our lec-

turers asked for customized learning environments

with more complexity, adaptivity and increased stu-

dent interactivity. Requests ranged from guessing

questions with multiple correct answers, possibilities

for text input, message boards for in-class discussions

up to game-theory and decision-making experiments

for live demonstrations. So, every scenario differed

slightly from already existing scenarios or other lec-

turers’ ideas.

Therefore, we enhanced our system by designing a generic model to depict various in-class teaching scenarios on handheld devices. The model led to the development of a prototype application that merges common features and scenarios of other tools within one system: the MobileQuiz2 (Schön et al., 2015). Small sets of predefined elements were defined to describe diverse scenarios, including message boards, knowledge quizzes, guessing games and other customized scenarios. By now, several lecturers have used the system in their lectures and created multiple new teaching scenarios. This new freedom in designing individual teaching scenarios without the usual technical boundaries gave us interesting insights and led to new, different challenges for the lecturer.

A first evaluation with 6 lecturers and 27 students showed that students and lecturers appreciate the diversification in teaching scenarios. But while students see the most important aspect in having a stable system, lecturers are more concerned with executing a high-quality scenario.

Schön, D., Klinger, M., Kopf, S. and Effelsberg, W.

Customized Teaching Scenarios for Smartphones in University Lecture Settings - Experiences with Several Teaching Scenarios using the MobileQuiz2.

In Proceedings of the 8th International Conference on Computer Supported Education (CSEDU 2016) - Volume 1, pages 441-448

ISBN: 978-989-758-179-3

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This paper is structured as follows: Section 2 dis-

cusses related work and the didactic impact of ARS.

In Section 3, we give a short introduction of the

generic model and explain how the prototype works

with it. We describe a selection of different teaching

scenarios used by our lecturers in Section 4. Section

5 shows the results of our evaluation during the Fall

semester 2015. Section 6 discusses the benefits, diffi-

culties and limitations of our approach, and Section 7

concludes the paper.

2 RELATED WORK

As recommended by Beatty and Gerace (Beatty and

Gerace, 2009) we distinguish between educational

psychology aspects and technological aspects in the

following discussion about ARS.

2.1 Educational Psychology

Teaching aims at supporting students to understand

and learn the course contents. Unfortunately, com-

mon teaching styles focus on the presentation of

knowledge, especially in large lectures. Research has

shown that only the active usage of information leads

to effective learning (Biggs, 2003). Therefore, acti-

vating elements and methods are essential for the suc-

cess of a course. When trying to implement activat-

ing elements, lecturers are confronted with a range

of hindering factors, especially time constraints and

group size. Using eLearning tools is one solution to

these challenges: technology can offer possibilities

that save time and are well usable in large classes.

ARS are one common way of bringing interactivity

and variation to the classroom. Students can be asked

anonymously for their opinion on course contents or

course design. Lecturers can test the students’ un-

derstanding and immediately use the results for fur-

ther explanations or discussions in the classroom. Re-

search has shown many positive effects of ARS within

the last years of which only a few shall be mentioned

here: ARS can increase students’ motivation (Kopf

et al., 2005; Uhari et al., 2003) and decrease the indi-

vidually experienced boredom (Tremblay, 2010). Stu-

dents value individual feedback about their standing

in the class (Trees and Jackson, 2007). Students

also believe in the improvement of their learning suc-

cess by the usage of ARS (Uhari et al., 2003). Chen

et al. (Chen et al., 2010) and Reay et al. (Reay et al.,

2008) found out that the learning success is objec-

tively higher in courses using ARS.

Ideally, lecturers can choose and apply methods according to their teaching goals (a “form follows function” approach) and should not adapt their teaching to the functionality of a system or, in the worst case, use the tool for the sake of the tool.

2.2 Audience Response Systems

Early systems like Classtalk (Dufresne et al., 1996)

wanted to improve student activity by transferring

three to four tasks per course to students’ devices that

were common at that time: calculators, organizers, or

PCs. Some years later the system ConcertStudeo al-

ready consisted of an electronic blackboard and hand-

held devices (Dawabi et al., 2003). With Concert-

Studeo a lecturer could create exercises and interac-

tions like multiple-choice quizzes, queries, or brain-

storming.

Scheele et al. developed the Wireless Interac-

tive Learning Mannheim (WIL/MA) system to sup-

port interactive lectures (Scheele et al., 2005), using

a server and a client software part, that runs on hand-

held mobile devices. By using a specifically set up

Wi-Fi network, the components of the system com-

municated and offered the user a variety of functions

like multiple-choice quizzes, a chat, a feedback func-

tion and a call-in module. WIL/MA offered the pos-

sibility to extend the functions with little program-

ming effort. To use the system, students were re-

quired to have a JAVA compatible handheld device

and to install specific client software, which made a

university-wide extension of the tool rather compli-

cated. Experts claim that the purchase of specific

hardware devices leads to diverse challenges, like ad-

ditional overhead for, e. g., buying the devices, se-

curing them against theft, time for handing out and

collecting the devices, updating and maintaining as

well as replacement of broken devices, and finally

the instruction of teachers and students about the us-

age (Murphy et al., 2010; Kay and LeSage, 2009).

Hence, the integration of students’ private mobile devices (tablets, smartphones etc.) and ARSs became more popular. Nowadays, mobile devices and especially smartphones are extremely widespread tools with broad technical functionality. They can visualize multimedia content (Schön et al., 2012a) or use learning materials like lecture recordings or e-books (Vinaja, 2014). With the development of our first ARS, the MobileQuiz, we were able to fulfill three basic requirements (Schön et al., 2012b): (1) No additional software needs to be installed on the mobile devices. (2) Almost all modern mobile devices are supported so that no extra hardware has to be purchased. (3) The system is integrated into the learning management system of our university. We were able to develop a tool that requires only a web browser, which is nowadays integrated in every handheld device or notebook. Access to the quiz tool was provided by a QR code or a tiny URL. This became a common approach at that time and was also used by other ARS like PINGO (Kundisch et al., 2012). Another common aspect of ARSs was the limited ability for customization and adaptation.

Web-based systems have been proposed like

BrainGame (Teel et al., 2012), BeA (Llamas-Nistal

et al., 2012), AuResS (Jagar et al., 2012) or TUL

(Jackowska-Strumillo et al., 2013) to enhance the

flexibility and expandability of the systems. User interfaces were improved to allow lecturers to add new questions on the fly, to create a collection of questions, or to check answers immediately. Some systems collect data and export it for later analysis, let students update their votes, or support user authentication for authenticated polls. As a technical innovation, some systems use cloud technology for better scalability in the case of large groups of students.

Several commercial ARS are available that include gamification aspects (Brophy, 2015). Kahoot¹ is an online ARS that also supports quizzes or surveys and aggregates all responses immediately. Students may also slip into the role of the teacher and create their own quizzes. ClassDojo² is another ARS focusing on gamification that supports a rewarding system and communication between students, teachers, and parents.

The main drawback of all these systems is the fact that only predefined scenarios can be used. Every extension requires access to the source code, programming effort and programming skills. Therefore, we improved our system to function as a modular construction tool that allows lecturers to realize a huge variety of imaginable teaching scenarios without changing any lines of code of the MobileQuiz2.

3 MODEL

We developed a model based on the assumptions that the process of creating a learning unit is divided into the five phases listed in Table 1 and that every teaching scenario can be constructed out of a few basic elements (Schön et al., 2015).

¹ https://getkahoot.com/

² http://www.classdojo.com

Table 1: The five phases of a learning scenario.

| Phase | Description |
| --- | --- |
| Blueprint | Definition of teaching scenario. |
| Quiz creation | Creation of an actual quiz with specific questions of a scenario. |
| Game round | Performance of a classroom activity. |
| Result | Presentation and discussion of the result. |
| Learning analytics | Analysis of students’ behavior and scenario success. |

The beginning blueprint phase consists of stating the learning goals of the course unit and defining the concept of the teaching scenario. According to the

learning goals, this could be a personal knowledge

feedback, an in-class message board, a live experi-

ment in game theory, or audience feedback on the cur-

rent talking speed of the lecturer. The lecturer has to

define the elements and their appearance on the stu-

dents’ phones, the interactions between the partici-

pants and the compilation of the charts he or she wants

to present and/or discuss in the classroom. This phase

needs a fair amount of structural and didactic input,

because small details have a large influence on suc-

cessful or unsuccessful scenarios.

After defining the scenario’s blueprint, lecturers can take a predefined scenario and build a concrete instance of it, called a quiz. If the scenario is, e. g., a classroom response scenario for knowledge assessment, the concrete questions and possible answers are entered here. These quizzes are then used to perform game rounds with the actual students. In phase four, the students’ input is displayed in the form of result charts. Depending on the type of input data, the results are displayed as text, colored bar charts or summarized pie charts. The last phase is mostly for analyzing the learning behavior and performing didactic research with metadata that is logged in the background.

The elements of our model take their inspiration from a classical collection of family games. Such a game usually consists of different objects like tokens in different colors, cards with text, or resource coins in different values. Objects also exist in a learning scenario and could, e. g., consist of questions with answers. Comparable to a board game, these objects also have attributes, like the text and correctness of a multiple choice option. Besides the tokens and the board, a game has rules. They describe the logic behind the game and the way the players interact with the game elements and with each other. Such rules are also required for the execution of an ARS. They can be described as a set of checks and actions. Checks are conditions under which the rule gets activated, while actions describe what has to be performed. This ranges from very simple rules, like a button click triggering a player’s counter to increase by one, to very complex ones that are activated when the sum of an attribute over all players exceeds a given value.
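The idea of rules as checks and actions can be illustrated with a minimal Python sketch. It is not taken from the MobileQuiz2 implementation (which is written in PHP); the names `Rule`, `increase`, `total_exceeds`, `click_rule` and `limit_rule` are hypothetical and chosen only for this illustration. It assumes that player attributes are simple numeric values.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# All names below are hypothetical; they do not mirror the MobileQuiz2 code base.
Players = Dict[str, Dict[str, float]]      # player id -> attribute name -> value
Check = Callable[[Players, str], bool]     # condition evaluated for the acting player
Action = Callable[[Players, str], None]    # state change performed for the acting player


@dataclass
class Rule:
    """A rule performs all of its actions once every check is satisfied."""
    checks: List[Check]
    actions: List[Action]

    def apply(self, players: Players, actor: str) -> bool:
        if all(check(players, actor) for check in self.checks):
            for action in self.actions:
                action(players, actor)
            return True
        return False


def increase(attribute: str) -> Action:
    """Action: raise one attribute of the acting player by one."""
    def action(players: Players, actor: str) -> None:
        players[actor][attribute] = players[actor].get(attribute, 0) + 1
    return action


def total_exceeds(attribute: str, limit: float) -> Check:
    """Check: the sum of one attribute over all players is above a limit."""
    def check(players: Players, actor: str) -> bool:
        return sum(p.get(attribute, 0) for p in players.values()) > limit
    return check


# Simple rule: a button click (no checks) increases the player's counter by one.
click_rule = Rule(checks=[], actions=[increase("counter")])
# Complex rule: fires only once the counters of all players sum to more than 2.
limit_rule = Rule(checks=[total_exceeds("counter", 2)], actions=[increase("bonus")])
```

In this sketch a rule without checks always fires, which corresponds to the simple button-click case, while the second rule stays inactive until the aggregated attribute passes the threshold.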

We have implemented a prototype to analyze the described model in real lecture environments. The MobileQuiz2 is written in PHP and uses the ZEND2³ framework and current web technologies like AJAX, HTML5, CSS3 and the common web frameworks jQuery⁴, jQuery Mobile⁵ and jqPlot⁶. Students open the quizzes with their mobile browsers by scanning a QR code or entering a URL directly.

4 EXAMPLES OF USE

Due to the high flexibility and adaptability of the

model, the lecturers of the University of Mannheim

used the MobileQuiz2 for a variety of different sce-

narios.

Single and Multiple Choice Questions: The most

popular scenarios use plain single and multiple choice

questions, similar to many other ARS. They are

mostly used to analyze the students’ current under-

standing of the course contents. The benefits of this

scenario are that the students are forced to reflect on their

knowledge, and the lecturer gets an overview of the

students’ knowledge gaps.

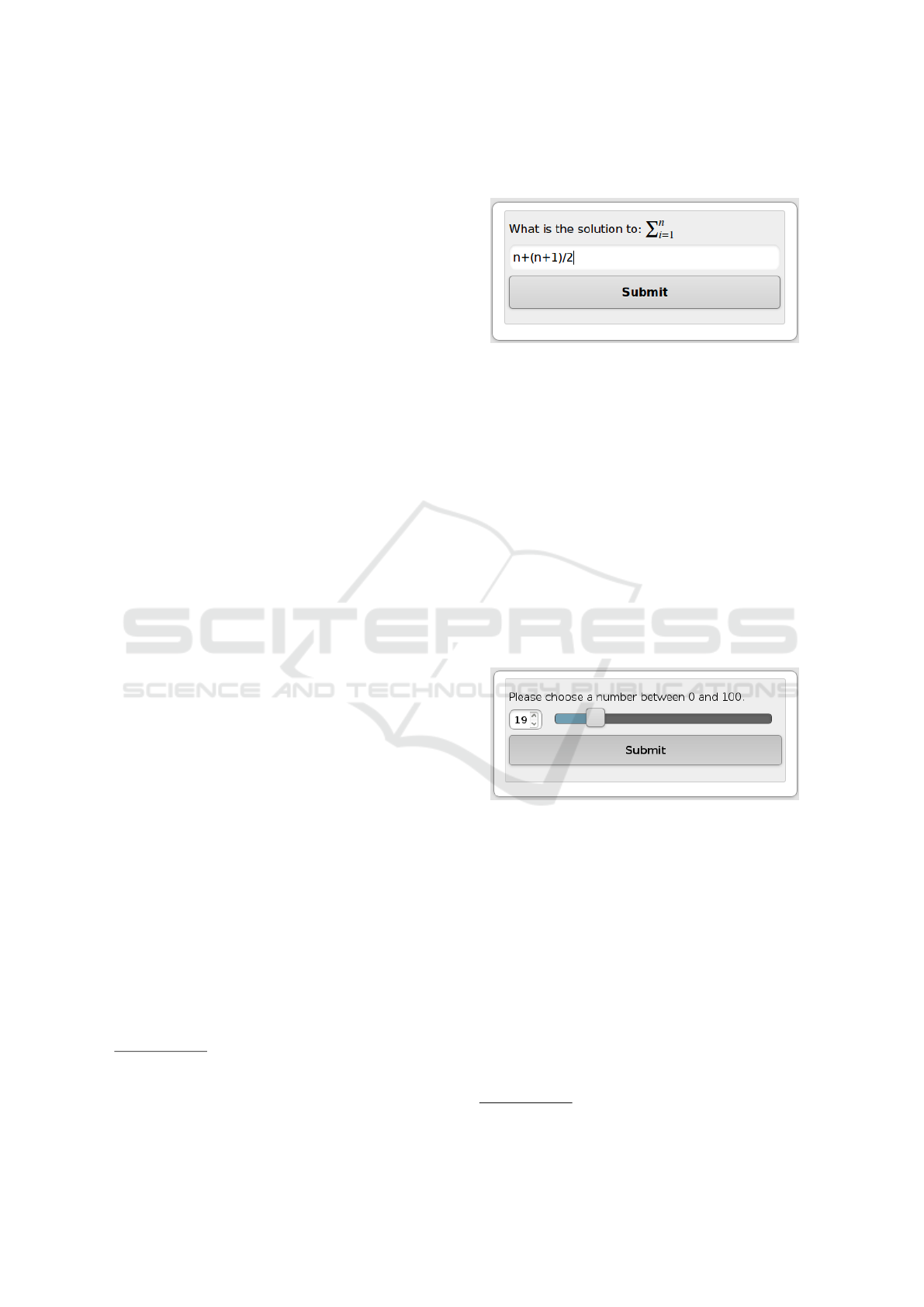

³ http://framework.zend.com/

⁴ http://jquery.com/

⁵ http://jquerymobile.com/

⁶ http://www.jqplot.com/

Question Phases: This scenario enables the lecturer to switch through different phases which contain different types of learning objects. The scenario was used several times in slightly different variations. In the most complex variation, a computer science lecturer explained a mathematical problem and let the students practice the solution. During the first phase, the students got a fairly easy example, entered the result into the given field and submitted it, as can be seen in Figure 1. The results were automatically compared against the right answer, and the students got direct feedback on their individual answers. When they were wrong, they got another try. Thus, they were able to practice by themselves, while the lecturer got an overview of how many students already understood the topic. After a discussion about the correct result and the right way of computation, a more complex problem was explained and the second phase was started with a more difficult exercise. In that way, students were guided through four phases with steadily growing difficulty.
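One phase of such a scenario can be sketched in a few lines of Python. This is an illustration under assumptions, not the actual implementation: the class `Phase`, its fields and the numeric tolerance are hypothetical, and students are identified by an anonymous token. The sketch shows the automatic comparison, the repeated attempts and the overview for the lecturer.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Phase:
    """One exercise of a question-phases scenario (hypothetical structure)."""
    prompt: str
    solution: float
    tolerance: float = 1e-9                       # assumed margin for numeric answers
    solved: Dict[str, bool] = field(default_factory=dict)

    def submit(self, student: str, answer: float) -> bool:
        """Compare an answer with the solution and return direct feedback.

        A student may submit again after a wrong answer; once solved,
        the student stays marked as solved.
        """
        correct = abs(answer - self.solution) <= self.tolerance
        self.solved[student] = self.solved.get(student, False) or correct
        return correct

    def solved_share(self) -> float:
        """Share of participating students who have solved the exercise."""
        if not self.solved:
            return 0.0
        return sum(self.solved.values()) / len(self.solved)
```

A lecturer view could poll `solved_share()` to decide when to discuss the result and open the next, more difficult phase.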

Figure 1: Screenshot of a question phases scenario.

Guess Two Thirds of the Average: In addition to fully repeatable scenarios, it is also possible to realize live experiments whose outcome depends on the current audience. A professor in business economics uses a game theory example called guess two thirds of the average. Here, students have to guess a number between zero and one hundred. After everybody has submitted a guess, as can be seen in Figure 2, the average of all answers is calculated, and the student who is closest to two thirds of the calculated average wins the game. The average and winning number are calculated for the current setting as soon as the lecturer closes the quiz round, and the winning student gets a corresponding message on the screen. The “correct” result therefore changes for every iteration of this scenario.
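The evaluation step of this game can be written down directly. The following Python sketch assumes that guesses are stored per anonymous student token and that ties are broken in favor of the earliest submission; both are assumptions made for illustration, and the function name `two_thirds_winner` is hypothetical.

```python
from typing import Dict, Tuple


def two_thirds_winner(guesses: Dict[str, float]) -> Tuple[float, float, str]:
    """Return the average, the target (two thirds of it) and the winning student.

    `guesses` maps a student token to a guess between 0 and 100.
    On a tie, the student who appears first in `guesses` wins (assumption).
    """
    if not guesses:
        raise ValueError("no guesses submitted")
    average = sum(guesses.values()) / len(guesses)
    target = 2.0 * average / 3.0
    winner = min(guesses, key=lambda student: abs(guesses[student] - target))
    return average, target, winner
```

For the guesses 30, 60 and 90, the average is 60 and the target is 40, so the student who guessed 30 wins. Because the target depends on the submitted values, the same quiz yields a different winning number in every round.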

Figure 2: Screenshot of a guessing scenario.

Message Board: A special kind of scenario for comments is the Message Board. The students only see a short textual description, a text input field and a submit button. They are able to enter a comment into the text input field, which is cleared again as soon as the comment is sent to the server by clicking the submit button. The comments are put on a message board similar to Twitter (a microblogging service, http://twitter.com), where the latest post is displayed at the top. One of our lecturers used it during live programming in a large exercise with about eighty students, who were asked to write feedback and questions during his programming. After the first ten minutes, the students had written about 30 comments, which were discussed immediately. Unfortunately, after half of the lecture time, some students got bored and started fooling around by writing nonsense and inappropriate messages. Therefore, the MobileQuiz2 was improved; the lecturer is now able to hide inappropriate comments, highlight noteworthy ones, and ban students from the current round when they misbehave.
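The moderation functions described above can be sketched as a small data structure. The Python example below is an assumption-laden illustration, not the MobileQuiz2 code: `Comment`, `MessageBoard` and their methods are hypothetical names, and the `author` field is assumed to be an anonymous per-round token rather than a real identity.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Comment:
    author: str              # anonymous per-round token (assumption)
    text: str
    hidden: bool = False
    highlighted: bool = False


@dataclass
class MessageBoard:
    comments: List[Comment] = field(default_factory=list)
    banned: Set[str] = field(default_factory=set)

    def post(self, author: str, text: str) -> bool:
        """Store a comment unless the author is banned or the text is empty."""
        if author in self.banned or not text.strip():
            return False
        self.comments.append(Comment(author, text.strip()))
        return True

    def hide(self, index: int) -> None:
        self.comments[index].hidden = True

    def highlight(self, index: int) -> None:
        self.comments[index].highlighted = True

    def ban(self, author: str) -> None:
        """Exclude an author from posting for the rest of the round."""
        self.banned.add(author)

    def visible(self) -> List[Comment]:
        """Comments shown on the board, latest post first."""
        return [c for c in reversed(self.comments) if not c.hidden]
```

Hiding keeps the comment in storage, so it remains available for later analysis, while a ban only affects new posts of the current round.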

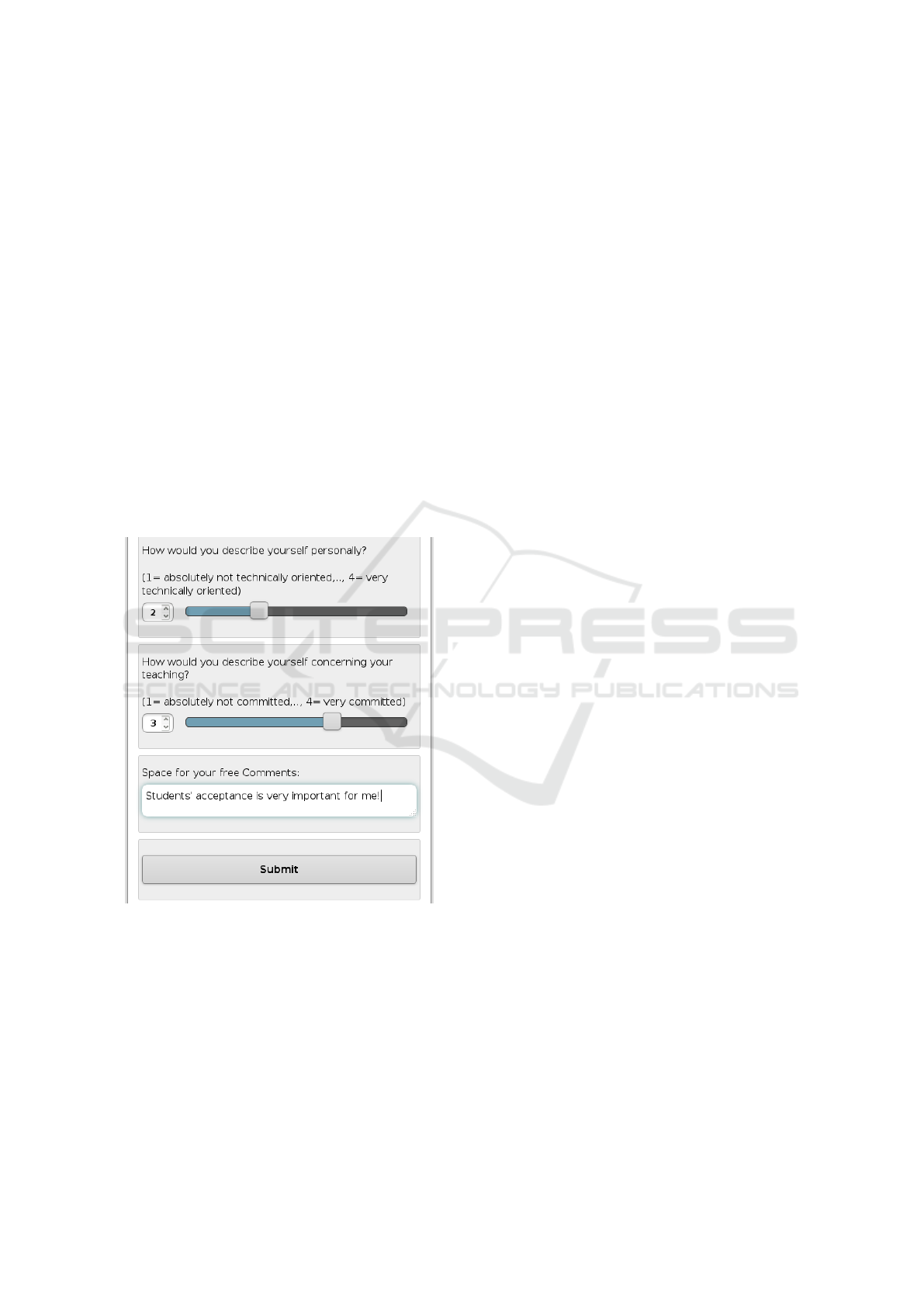

Survey: The MobileQuiz2 can also be used to collect information in a survey scenario. This scenario uses a Likert scale question to ask for opinions about a specific topic. The range of the scale can be changed by the lecturer and thus adjusted for the current question, e. g., from 1 to 4 or 0 to 10. The scenario also has a multiple-choice question to pick several topics out of a list and an open text question for free text answers, as can be seen in Figure 3. Additionally, a description field can be used to describe the current question. This description appears as a read-only label on the students’ devices. The answers can be displayed as in the other scenarios. This scenario was used to collect the data for the evaluations below.

Figure 3: Screenshot of a survey scenario.

Likert Scale Evaluation: Slider elements can be

used to create a Likert scale evaluation scenario. In

this way, the agreement of the audience on specific

statements can be collected (as known from social sci-

ences). These statements can be a starting point for

discussions and further explanations. Additionally,

evaluations on the course design can be conducted.

A lecturer of a weekend seminar on Economics edu-

cation in Spring semester 2015 used such a scenario

to let the students evaluate each other’s presentations.

The scenario consisted of several discussion points

which were chosen by the lecturer. These discussion

points contained a textual question and a slider from

one to five. The students saw all discussion points at

once and were able to express their opinions continu-

ously during the seminar. The results were aggregated

and presented directly.
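How slider values might be aggregated per discussion point can be sketched as follows. The function `aggregate_likert` and its output format are hypothetical; the sketch assumes that each list holds the latest slider value of every student and that values outside the scale are ignored.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List


def aggregate_likert(votes: Dict[str, List[int]], low: int = 1, high: int = 5) -> Dict[str, dict]:
    """Summarize slider values per discussion point.

    Returns, for each point, the number of valid votes, their mean and
    a histogram with one bucket per scale step from `low` to `high`.
    """
    summary = {}
    for point, values in votes.items():
        valid = [v for v in values if low <= v <= high]
        counts = Counter(valid)
        summary[point] = {
            "n": len(valid),
            "mean": mean(valid) if valid else None,
            "histogram": [counts.get(step, 0) for step in range(low, high + 1)],
        }
    return summary
```

The histogram corresponds to the kind of bar chart a lecturer could present directly, while the mean gives a single value per discussion point.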

Lecture Feedback: Similar to the Likert scale scenario, the feedback scenario uses sliders for capturing the students’ opinion about the current lecture. The scenario is designed to give the lecturer live feedback about his or her talking speed and the comprehension of the course content and to enable free comments by the students. The scenario is used without an extra submit button, as the feedback is updated continuously.

5 EVALUATION

In addition to continuous communication with our lecturers about challenges and needs for improvement of the new MobileQuiz2, we conducted an evaluation at the end of the Fall semester 2015. We used the survey scenario (Figure 3) and created one questionnaire for students and one for lecturers. Although we introduced more than a dozen lecturers to the MobileQuiz2, only a few actually used it regularly during the ongoing semester. Most lecturers wanted to wait for the next semester, using the vacation period to get into the system and prepare their scenarios. Therefore, we created three questionnaires in total and surveyed the hesitating lecturers, the performing lecturers and the students separately. We conducted the survey with all lecturers who had already received an introduction to the new application (users and hesitaters).

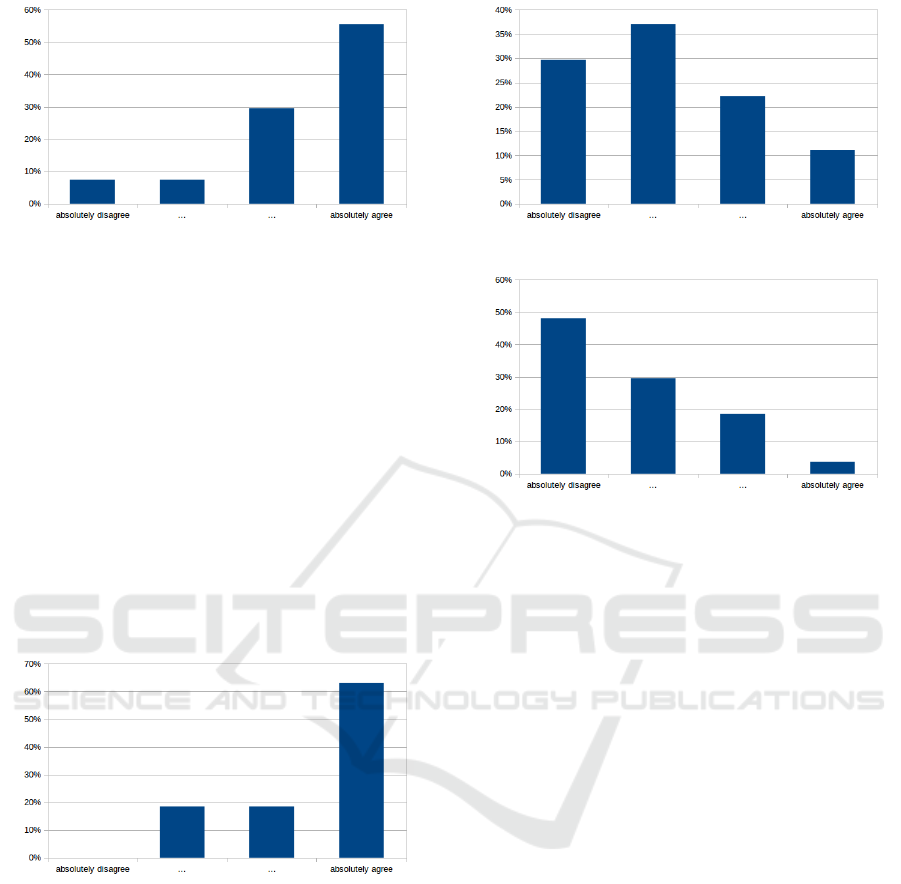

5.1 Students

The short survey was conducted in a computer science exercise where 27 of about 35 students participated. The students were confronted with seven statements and rated their agreement on a four-point Likert scale from absolutely disagree to absolutely agree. The results showed that students mostly enjoyed the new quizzes. When asked about the improvement in understanding the course contents, the majority of the students stated that the quizzes support the understanding: more than 50% of the students agreed with the statement. But at least 30% of the students did not think that their understanding was supported. We also asked if the system distracts them more than it helps the understanding. More than 80% stated that it does not distract them more than it helps.

Figure 4: The new variation makes the course more interesting.

We asked if the variations in teaching scenarios which were possible with the new system increased the course’s attractiveness. The course lecturer performed at least five different scenarios during this semester. Figure 4 shows that more than 80% of the answers stated that these variations made the course more interesting.

When asked if anonymous participation is important, 80% of the students agreed. Less than 20% slightly disagreed, as shown in Figure 5. It is notable that the share of absolute agreement is very high at about 60%.

Figure 5: Importance of an anonymous quiz participation.

Figure 6 shows the results when the students were asked if they would be willing to install software to participate in the quizzes. The answers were not as clear-cut as for the previous statements, but about 60% of the students would not be willing to install additional software. Only 10% stated that they absolutely agree to installing additional software on their phone.

We also asked if they wanted to be graded with the help of these quizzes. Figure 7 shows that almost 80% disagree with that and almost 50% even absolutely disagree. Finally, we gave space for free textual comments. The students especially noted that the stability of the system has a high priority for them.

Figure 6: Acceptance of installing a separate software.

Figure 7: Acceptance of being graded by the system.

5.2 Lecturers

We asked six lecturers from different disciplines to give us qualitative feedback. Two of them had an informatics background, the others came from didactics, mathematics and economics. They stated that they are very committed to their teaching (with one saying he or she is only slightly committed).

Not every lecturer had used the quiz in the past

semester, even if every one of them showed high in-

terest in the system and took part in a personal in-

troduction. When asked for the reasons, the answers

were mainly that they had time constraints during this

phase of the semester, and that the current course con-

cept does not include the quizzes yet. But they are

looking forward to the next semester, where they have

the semester break to prepare the next courses.

When asked how the students could benefit from

the customized scenarios, the answers were similar to

the benefits of regular ARSs. They mentioned the stu-

dents’ reflection of their own learning process and the

lecturer’s insight into the students’ knowledge base.

All lecturers said that the application can be used

without further support after an introduction, but the

greater challenges are getting all the students to par-

ticipate, the creation of suitable and wise quiz ques-

tions, and the design of good teaching scenarios.

Considering recommendations for improvements,

the lecturers want a better usability and overall stabil-

ity of the system to support larger user groups. They

also would like to have more predefined blueprints of

teaching scenarios.

6 DISCUSSION

The results in Section 5 give an impression about stu-

dents’ and lecturers’ priorities. Despite appreciating

the new variations in teaching scenarios, both groups

have concerns. Students like the current quizzes and

think that they do support their learning process, but

they do not want to be graded with these quizzes, and

they want to participate anonymously. These findings

support the initial didactic focus of the tool: students

should be able to apply knowledge in an active way

within their learning process, and lecturers should

thereby be able to identify gaps in understanding. A

non-anonymous or even graded usage would lead to

pressure to perform well already during the learning

process. Consequently, only smart students would

give answers and therefore make a realistic analysis

of the students’ understanding impossible. Weaker

students would hold off and thus would not benefit

from the potentials of the tool to identify strengths

and weaknesses in the learning process. Nevertheless,

there could be additional reasons why students refuse to be graded by the ARS: maybe they fear the system’s susceptibility to manipulation, or they prefer assessment formats where they can demonstrate their knowledge in more than, e. g., one multiple choice answer (and entering a longer free text answer on a mobile device is very uncomfortable). Further research needs to be done to identify the concrete reasons. The surveyed lecturers enjoy the new application and think it is easy to use after an introduction. Nonetheless, only a few lecturers already use the system and have found the time to create suitable content and to integrate it into their current courses. Many of the more complex scenarios were created and used by one lecturer. Others mostly stick with already known questioning scenarios.

One conclusion could be that the system is still

too complex to easily be used by lecturers on their

own, even after an introduction. The improvement

to an even more intuitive usability and the offer of a

permanent didactic support could help to overcome

these challenges. Didactic support is also crucial to

solve the problems of getting students to participate,

of creating suitable and wise quiz questions and of

designing other good teaching scenarios. Concerning

the latter, one advantage of the system lies in the possibility to access teaching scenarios that were created by other lecturers. Thereby, teachers can get inspiration for their own courses. As expected, all lecturers that answered the survey described themselves as committed to teaching. A conclusion could be that these teachers are more open to innovations in teaching, and more experimental in using new tools. To spread the usage of innovations in teaching more widely, another challenge would be to convince less committed lecturers. To achieve this, convincing arguments and a user-friendly interface are essential.

7 CONCLUSIONS

The evaluation showed that our five-phase model is

able to depict customized teaching scenarios in a suit-

able way. Our application is able to perform a variety

of different moderate to complex scenarios in lectures

with up to seventy students. But when performing

very complex scenarios with a large number of rules

and more than one hundred students, we observed se-

rious performance issues. One reason probably is the

old desktop PC where the application server runs, but

the described model also encourages a high number

of database entries.

One could think that the students’ acceptance of ARS tools is a short-term effect that vanishes as soon as ARSs become ordinary and therefore boring. In contrast, with the novel MobileQuiz2, lecturers have so many possibilities to vary the use of the tool that a ‘system fatigue’ seems hardly possible. But the possibility to create customized teaching scenarios implies that lecturers have fundamental knowledge in the field of instructional design. Furthermore, it requires a reasonable amount of creativity and audacity, which is reflected in the lecturers’ request for more predefined scenario blueprints to choose from. So, although our system offers various opportunities to improve the students’ learning, using it in a didactically reasonable way confronts the lecturers with noticeable challenges. We conclude that offering didactic support and qualification is essential for a successful application.

We have now trained about twenty lecturers in the usage of the MobileQuiz2, and many of them want to use the system in the next Spring semester. Therefore, we focus on further polishing our prototype for smooth operation and will add some minor functionalities and connections to our older quiz applications. With the increasing number of active users, we want to evaluate the effects of different scenarios on the students more thoroughly and analyze the key success factors of ARS and their impact.

REFERENCES

Beatty, I. D. and Gerace, W. J. (2009). Technology-

enhanced formative assessment: A research-based

pedagogy for teaching science with classroom re-

sponse technology. Journal of Science Education and

Technology, 18:146–162.

Biggs, J. (2003). Teaching for Quality Learning at Uni-

versity. Maidenhead: The Society for Research into

Higher Education & Open University Press.

Brophy, K. (2015). Gamification and mobile teaching and

learning. In Handbook of Mobile Teaching and Learn-

ing, pages 91–105.

Chen, J. C., Whittinghill, D. C., and Kadlowec, J. A. (2010).

Classes that click: Fast, rich feedback to enhance stu-

dent learning and satisfaction. Journal of Engineering

Education, pages 159–168.

Dawabi, P., Dietz, L., Fernandez, A., and Wessner, M.

(2003). ConcertStudeo: Using PDAs to support

face-to-face learning. In Wasson, B., Baggetun, R.,

Hoppe, U., and Ludvigsen, S., editors, International

Conference on Computer Support for Collaborative

Learning 2003 - Community Events, pages 235–237,

Bergen, Norway.

Dufresne, R. J., Gerace, W. J., Leonard, W. J., Mestre, J. P.,

and Wenk, L. (1996). Classtalk: A classroom commu-

nication system for active learning. Journal of Com-

puting in Higher Education, 7:3–47.

Jackowska-Strumillo, L., Nowakowski, J., Strumillo, P.,

and Tomczak, P. (2013). Interactive question based

learning methodology and clickers: Fundamentals of

computer science course case study. In Human System

Interaction (HSI), 2013 The 6th International Confer-

ence on, pages 439–442.

Jagar, M., Petrovic, J., and Pale, P. (2012). Auress: The

audience response system. In ELMAR, 2012 Proceed-

ings, pages 171–174.

Kay, R. H. and LeSage, A. (2009). Examining the benefits

and challenges of using audience response systems:

A review of the literature. Comput. Educ., 53(3):819–

827.

Kopf, S., Scheele, N., Winschel, L., and Effelsberg, W.

(2005). Improving activity and motivation of students

with innovative teaching and learning technologies. In

Methods and Technologies for Learning, pages 551–

556.

Kundisch, D., Herrmann, P., Whittaker, M., Beutner, M.,

Fels, G., Magenheim, J., Sievers, M., and Zoyke, A.

(2012). Designing a web-based application to support

peer instruction for very large groups. In Proceed-

ings of the International Conference on Information

Systems, pages 1–12, Orlando, USA. AIS Electronic

Library.

Llamas-Nistal, M., Caeiro-Rodriguez, M., and Gonzalez-

Tato, J. (2012). Web-based audience response system

using the educational platform called bea. In Com-

puters in Education (SIIE), 2012 International Sym-

posium on, pages 1–6.

Murphy, T., Fletcher, K., and Haston, A. (2010). Supporting

clickers on campus and the faculty who use them. In

Proceedings of the 38th Annual ACM SIGUCCS Fall

Conference: Navigation and Discovery, SIGUCCS

’10, pages 79–84, New York, NY, USA. ACM.

Reay, N. W., Li, P., and Bao, L. (2008). Testing a new vot-

ing machine question methodology. American Journal

of Physics, 76:171–178.

Scheele, N., Wessels, A., Effelsberg, W., Hofer, M., and

Fries, S. (2005). Experiences with interactive lec-

tures: Considerations from the perspective of educa-

tional psychology and computer science. In Computer

Support for Collaborative Learning: Learning 2005:

The Next 10 Years!, pages 547–556. International So-

ciety of the Learning Sciences.

Schön, D., Klinger, M., Kopf, S., and Effelsberg, W. (2015).

A model for customized in-class learning scenarios

- an approach to enhance audience response systems

with customized logic and interactivity. In Proceed-

ings of the 7th International Conference on Computer

Supported Education, pages 108–118.

Schön, D., Kopf, S., and Effelsberg, W. (2012a). A

Lightweight Mobile Quiz Application with Support

for Multimedia Content. In 2012 International Con-

ference on E-Learning and E-Technologies in Educa-

tion (ICEEE), pages 134–139. IEEE.

Schön, D., Kopf, S., Schulz, S., and Effelsberg, W.

(2012b). Integrating a Lightweight Mobile Quiz on

Mobile Devices into the Existing University Infras-

tructure. In World Conference on Educational Mul-

timedia, Hypermedia and Telecommunications (ED-

MEDIA) 2012, Denver, Colorado, USA. AACE.

Teel, S., Schweitzer, D., and Fulton, S. (2012). Braingame:

A web-based student response system. J. Comput. Sci.

Coll., 28(2):40–47.

Trees, A. R. and Jackson, M. H. (2007). The learning

environment in clicker classrooms: student processes

of learning and involvement in large university-level

courses using student response systems. Learning,

Media and Technology, 32:21–40.

Tremblay, E. (2010). Educating the Mobile Generation us-

ing personal cell phones as audience response systems

in post-secondary science teaching. J. of Computers in

Mathematics and Science Teaching, 29:217–227.

Uhari, M., Renko, M., and Soini, H. (2003). Experiences

of using an interactive audience response system in

lectures. BMC Medical Education, 3:12.

Vinaja, R. (2014). The use of lecture videos, ebooks, and

clickers in computer courses. J. Comput. Sci. Coll.,

30(2):23–32.

CSEDU 2016 - 8th International Conference on Computer Supported Education
