Total Information Transmission between Autonomous Agents

Bernat Corominas-Murtra

Section for Science of Complex Systems, Medical University of Vienna, Spitalgasse 23, 1090 Vienna, Austria

Keywords:

Information Theory, Meaningful Communication, Autonomous Agents, Emergence of Communication.

Abstract:

This note explores how the current framework of information theory can be used to quantify the amount of semantic content sent in a given communicative exchange. Questions of meaning have remained outside the mainstream of information theory since its foundation. However, in spite of the enormous success of the theory, recent advances in the study of the emergence of shared codes in communities of autonomous agents have revealed that the issue of meaningful transmission cannot be easily avoided and needs a general framework. This is due to the absence of a designer/engineer and the presence of functional/semantic pressures within the process of shaping new codes or languages. To overcome this issue, we demonstrate that the classical Shannon framework can be expanded to accommodate a minimal explicit incorporation of meaning within the communicative exchange.

1 INTRODUCTION

The emergence of communication has been a hot topic of research in recent years (Hurford, 1989; Nowak, 1999; Cangelosi, 2002; Komarova, 2004; Niyogi, 2006; Steels, 2003). In particular, the emergence of shared, non-designed codes between autonomous agents driven by selective pressures has been a source of interesting results (Nowak, 1999; Steels, 2001). In most of these studies, codes emerge among agents from the need to communicate things about the world they are immersed in. These agents are autonomous; therefore, no designer or engineer is explicitly behind the communicative exchanges ensuring the correct transmission and interpretation of the message. The role of code designer is taken by evolution and its associated selective pressures. These selective pressures apply at different levels: the standard information-theoretic level (the physical coding and transmission of the events of the world shared by the autonomous agents) and the semantic/functional level. By this semantic/functional level we refer to the content of the message, which, in turn, can be split into two parts: 1) the relevance of the event to be transmitted, a crucial issue in a selective framework, and 2) the proper referentiation of such an event during a communicative exchange (Corominas-Murtra, 2013); see figure 1. Both dimensions of the communicative phenomenon will drive the functional response of the agent and its potential success through the selective process. It is worth observing that these semantic/functional issues are totally absent in standard information theory (Hopfield, 1994).


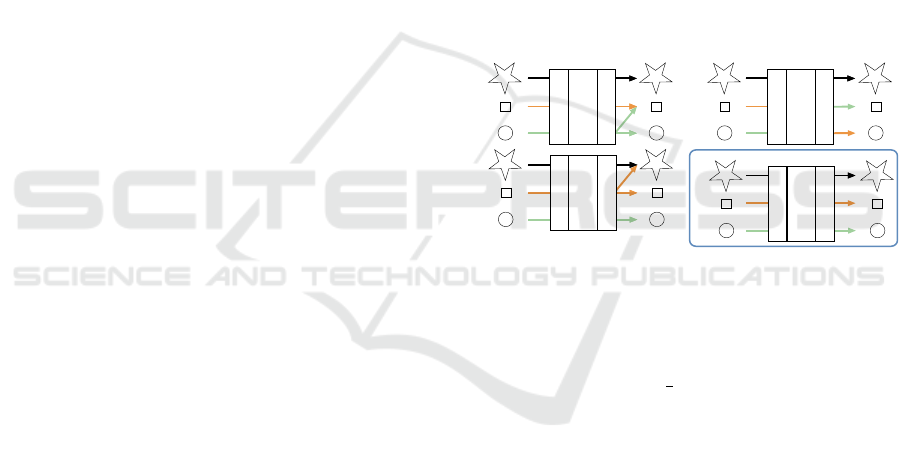

Figure 1: The two problems of Shannon's information concerning the simplest meaning transmission: its blindness to i) the internal hierarchy of relevance of events and ii) referentiality conservation. We have a communication system consisting of three objects $\Omega = \{x_1, x_2, x_3\}$ appearing, for the sake of simplicity, with equal probabilities, namely $p(x_1) = p(x_2) = p(x_3) = \frac{1}{3}$. The appearance of an object is coded in some way by the coder agent A, passes through the channel $\Lambda$ and is decoded by the decoder agent B, which gives a referential value to the signal received. Standard mutual information does not distinguish between a) and b). However, if we assume that the information contained in $x_1$ is larger than that of the others, the same communication mistake involving this object should be penalised more heavily in the overall information transfer. In c) and d) we depict the referentiality problem. Mutual information is blind to referentiality mistakes. Only d) represents a perfect communication schema where all semantic aspects are respected. In this paper we derive the information-theoretic functional that accounts for the internal hierarchy of relevance of events. By accounting for the semantic value or weight of each event to be coded and sent through the channel, we derive the proper functional able to distinguish between a) and b).

In this article we present a minimal incorporation of the semantics of elements into a given information-theoretic functional, quantifying the number of statistical bits properly transmitted plus their average semantic content in a given communicative exchange between two autonomous agents. This provides a solution to problem 1) pointed out above concerning meaning/functional issues in standard information theory: the relevance of the event to be transmitted (see figure 1). In this new framework information becomes a two-dimensional entity: on the one hand, one has the classical Shannon information and, on the other hand, one has the semantic information carried by those statistical bits. The sum of both is the total information transmitted, the amount of bits which will be taken into account by the selective pressures, presented in equation (11). Point 2), concerning the conservation of the referential value, stems from the conception of the dual nature of the communicative sign, a primitive kind of Saussurean duality (Saussure, 1916; Hopfield, 1994; Corominas-Murtra, 2013) which considers the pairing of signal and reference as the fundamental unit to be conserved in a communicative exchange. The incorporation of such duality into a consistent information-theoretic framework has already been addressed in (Corominas-Murtra, 2013). Crucially, that approach did not take into account any meaning quantification, which is the target of this work.

Corominas-Murtra, B. Total Information Transmission between Autonomous Agents. In Proceedings of the 1st International Conference on Complex Information Systems (COMPLEXIS 2016), pages 21-25. ISBN: 978-989-758-181-6. Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved.

The inclusion of meaning can be performed through any justified quantification of the elements of the world. In selective scenarios, meaning/functional quantification may be understood as emerging from a kind of language game, in Wittgenstein's sense (Wittgenstein, 1953; Kripke, 1982; Steels, 2001). This game would combine the interaction between the environment and the autonomous agents with the interaction among the autonomous agents themselves. As a first approach, one can take the meaning quantification arising from this language game to be tied to the relevance of the functional response to the events to be coded and transmitted. In general, and following in Wittgenstein's footsteps, no absolute measure of meaning is assumed to be achievable, and such a quantification, if possible, must be the outcome of an agreement/consensus among the agents under the conditions imposed by evolutionary pressures. We point out that, in formal systems, standard approaches developed to quantify the amount of information of abstract objects beyond the statistical framework can provide a solid formal background for meaning quantification. Paradigmatic examples are the approach to semantic information proposed by Yehoshua Bar-Hillel and Rudolf Carnap over logical systems (Carnap, 1953) and the theory of Algorithmic Complexity, which quantifies the amount of information required to describe a formal object (Kolmogorov, 1965; Chaitin, 1966; Li, 1997; Cover, 2001). To better grasp the intuition behind this paper, let us pause on an example from Bar-Hillel and Carnap's semantic information theory (Carnap, 1953). Roughly speaking, the main idea underlying their approach stems from the following observation: let us imagine that we have a world made of two variables $p$, $q$ and that the 'state' of the world is given by the formulas:

$$p, \quad q, \quad p \vee q, \quad p \wedge q.$$

Now assume that the sender transmits events from the world as long as the formulas are satisfied, i.e., their truth values at a given point in time are 1. Undoubtedly, $p \wedge q$ will contain much more information about the state of the world than $p \vee q$. This happens because the conditions under which $p \wedge q$ is satisfied are much more restrictive than the ones that satisfy $p \vee q$. This is classic work from (Carnap, 1953). Now imagine that we have a receiver which decodes the message of the sender after a presumably noisy channel. How much of the semantic information has been transmitted? In other words, even if $p \vee q$ and $p \wedge q$ have the same probability of appearing (and therefore the same weight in the computation of the statistical information), is there a way to take into account that $p \wedge q$ contains more information in terms of message content? As pointed out above, if the sender/receiver system is autonomous, being able to perform such a distinction can make a difference in terms of, for example, survival chances in selective scenarios.
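As a rough illustration of the intuition above, the following Python sketch enumerates the four truth assignments of $p$ and $q$ and scores each formula by the negative logarithm of the fraction of assignments that satisfy it, which is one common reading of Bar-Hillel and Carnap's measure. The uniform weighting of assignments, the base-2 logarithm and all names (`STATES`, `FORMULAS`, `semantic_information`) are assumptions made for this sketch and do not appear in the original text.

```python
from itertools import product
from math import log2

# All truth assignments ("state descriptions") for the two variables p, q.
STATES = list(product([False, True], repeat=2))

# The four formulas from the text, as predicates over a state (p, q).
FORMULAS = {
    "p": lambda p, q: p,
    "q": lambda p, q: q,
    "p or q": lambda p, q: p or q,
    "p and q": lambda p, q: p and q,
}


def logical_probability(formula):
    """Fraction of states satisfying the formula (uniform measure: an assumption)."""
    return sum(1 for state in STATES if formula(*state)) / len(STATES)


def semantic_information(formula):
    """-log2 of the logical probability: fewer satisfying states, more content."""
    return -log2(logical_probability(formula))


# Candidate values for the meaning function Q of Section 2.1 (illustrative only).
Q = {name: semantic_information(f) for name, f in FORMULAS.items()}

for name, bits in Q.items():
    print(f"{name:8s} semantic information = {bits:.3f} bits")
```

Under these assumptions the conjunction scores highest and the disjunction lowest, matching the ordering argued for in the text; any other justified quantification could be substituted for `Q`.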

Nevertheless, it is worth emphasising that the choice of the semantic framework is incidental, and it remains deliberately open. A final word of caution is required: the presented results are tied to the range of applicability of standard information theory, and therefore concern closed systems and, as such, extremely simple abstractions of real scenarios. Nonetheless, they demonstrate that, in such controlled cases, the transmission of meaning can be treated from a consistent, information-theoretic viewpoint.

2 TOTAL INFORMATION TRANSMISSION

2.1 Quantification of Meaning

In general, we have a finite set of objects $\Omega = \{x_1, \ldots, x_N\}$ and a function $Q$,

$$Q : \Omega \longrightarrow \mathbb{R}^{+}, \tag{1}$$

such that $Q(x_k)$ is the information content of $x_k$. We will have in mind the following schema: two autonomous agents A and B are immersed in a shared world whose events/objects are members of the set $\Omega$. Agents exchange information about $\Omega$. Objects in $\Omega$ appear following a given random variable $X_\Omega \sim p$. Every object $x_k \in \Omega$ will thus appear with probability $p(x_k)$. The entropy of such an ensemble of objects gives the minimal amount of information needed to describe the statistical behaviour of $X_\Omega$, namely:

$$h(X_\Omega) = -\sum_{x_k \in \Omega} p(x_k) \log p(x_k).$$

As is standard in information theory, $X_\Omega$ is an information source sending $h(X_\Omega)$ bits to the information channel (we follow the communication schema provided in figure 1).

2.2 Total Information of an Ensemble of Objects

How much semantic information is sent, on average, if we consider $X_\Omega$ as an information source? To answer this question, we first define the vector $\varphi$, whose elements $\varphi(x_k)$ are defined as:

$$\varphi(x_k) \equiv -\frac{p(x_k)\log p(x_k)}{h(X_\Omega)}. \tag{2}$$

Then, the amount of semantic information sent by $X_\Omega$ as an information source is:

$$\langle Q \rangle_\varphi = \sum_{x_k \in \Omega} \varphi(x_k)\,Q(x_k), \tag{3}$$

namely, the average semantic information per bit of the information source defined by $X_\Omega$. In other words: the amount of semantic bits carried by statistical bits, or how much meaning can be sent, on average, given the ensemble of objects $\Omega$ sampled using $X_\Omega$.

The total information we get, on average, from the ensemble of objects $\Omega$, to be named $H(\Omega, X_\Omega)$, will thus be:

$$H(\Omega, X_\Omega) = h(X_\Omega) + \langle Q \rangle_\varphi, \tag{4}$$

i.e., the statistical information per event plus the average amount of semantic information carried by such statistical information. Notice that the derivation we provided for the total information differs from the one given by Gell-Mann and Lloyd and by Ay et al. (Gell-Mann, 1996; Ay, 2010). Although inspired by Gell-Mann and Lloyd's definition, the definition provided here fits better in a broad information-theoretic frame where transmission is taken into account. To have a consistent framework accounting for transmission, it is necessary to weight the semantic content by the informative contribution of a given signal/object to the overall information content. As we shall see in the following lines, this is crucial to get consistent results when, for example, the channel is totally noisy and all information is destroyed. The framework presented here will be able to cope with the natural assumption that, in these cases, both the semantic and the statistical information transmitted must be zero. The ontological discussion between these two approaches, although interesting from the epistemological and formal viewpoint, exceeds the scope of this paper.

Now we proceed with our construction by defining

$$q(x_k) \equiv \frac{Q(x_k)}{h(X_\Omega)},$$

from which, since the elements of $\varphi$ sum to one, we can rewrite $H(\Omega, X_\Omega)$ as:

$$H(\Omega, X_\Omega) \equiv h(X_\Omega) \sum_{x_k \in \Omega} \varphi(x_k)\,(1 + q(x_k)). \tag{5}$$

We observe that another approach would be to consider $H(\Omega, X_\Omega)$ a two-dimensional vector $H(\Omega, X_\Omega) \equiv \langle h(X_\Omega), \langle Q \rangle_\varphi \rangle$, thereby highlighting the two-dimensional character of the approach. We take the definition provided in equation (4) for the sake of simplicity.
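The quantities of this subsection can be computed in a few lines. The sketch below evaluates $h(X_\Omega)$, the weights $\varphi$, the average $\langle Q \rangle_\varphi$ and the total information of equation (4), and checks numerically that the rewriting in equation (5) gives the same value. Base-2 logarithms, the uniform three-object source and the values in `Q` are assumptions chosen for illustration, as are all function names.

```python
from math import log2


def entropy(p):
    """Shannon entropy h(X) in bits; zero-probability objects contribute nothing."""
    return -sum(pk * log2(pk) for pk in p if pk > 0)


def phi(p):
    """Equation (2): share of the source entropy contributed by each object.

    Assumes a non-degenerate source, i.e. entropy(p) > 0.
    """
    h = entropy(p)
    return [-pk * log2(pk) / h if pk > 0 else 0.0 for pk in p]


def total_information(p, Q):
    """Return (h, <Q>_phi, H) following equations (3) and (4)."""
    h = entropy(p)
    avg_Q = sum(f * Qk for f, Qk in zip(phi(p), Q))
    return h, avg_Q, h + avg_Q


# Hypothetical source: three equiprobable objects, the first one more relevant.
p = [1 / 3, 1 / 3, 1 / 3]
Q = [2.0, 1.0, 1.0]

h, avg_Q, H = total_information(p, Q)

# Equation (5): same quantity written with q(x_k) = Q(x_k) / h.
H_eq5 = h * sum(f * (1 + Qk / h) for f, Qk in zip(phi(p), Q))

print(f"h = {h:.4f}, <Q>_phi = {avg_Q:.4f}, H = {H:.4f}, H via (5) = {H_eq5:.4f}")
```

Because the weights $\varphi$ depend only on $p$, changing `Q` alters the semantic term while leaving the statistical term untouched.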

2.3 Transmission of Semantic and Statistical Information

Formally, we have an ensemble of objects $\Omega$ whose behaviour is described by $X_\Omega$. This is the information source. Agents A and B, sharing the world made up of the objects of $\Omega$, transmit messages about it to each other. Information provided by the source $X_\Omega$ is coded in some way by agent A, and agent B assigns the received message to a given object $x_i \in \Omega$, this assignment process being described by the random variable $X'_\Omega$. We say that $X'_\Omega$ is the reconstruction of $X_\Omega$ made by agent B. The communication channel between agents A and B is described by the matrix $\Lambda$:

$$\Lambda(x_k, x_j) \equiv p(X'_\Omega = x_j \mid X_\Omega = x_k).$$

For simplicity, we will simply write $p(x_j|x_k)$. Likewise, we will refer to the joint probability $p(X_\Omega = x_k, X'_\Omega = x_j)$ simply as $p(x_k, x_j)$ and to the conditional probability $p(X_\Omega = x_j \mid X'_\Omega = x_k)$ simply as $p'(x_j|x_k)$. We finally note that $X'_\Omega$ follows the probability distribution $p'$ defined as:

$$p'(x_k) = \sum_{x_i \in \Omega} p(x_k|x_i)\,p(x_i).$$

Having all the ingredients properly defined, we first put Shannon information in a form suitable to work with, namely:

$$I(X_\Omega : X'_\Omega) = \sum_{x_k, x_\ell \in \Omega} p(x_k, x_\ell) \log \frac{p(x_k, x_\ell)}{p(x_k)\,p'(x_\ell)} = \sum_{x_k \in \Omega} p(x_k)\, D(p(X'_\Omega|x_k)\,\|\,p'),$$

where

$$D(p(X'_\Omega|x_k)\,\|\,p') \equiv \sum_{x_\ell \in \Omega} p(x_\ell|x_k) \log \frac{p(x_\ell|x_k)}{p'(x_\ell)}$$

is the Kullback-Leibler (KL) divergence between the distributions $p(X'_\Omega|x_k)$ and $p'$ (Cover, 2001). The KL divergence $D(p(X'_\Omega|x_k)\,\|\,p')$ can be interpreted as the information gain agent B has from observing $x_k$, in statistical terms. The KL divergence is non-negative and, in this particular problem, is bounded as follows. The upper bound holds because $p(x_k, x_i)/p'(x_i) = p'(x_k|x_i) \leq 1$, so the sum in the middle expression is non-positive:

$$0 \leq D(p(X'_\Omega|x_k)\,\|\,p') = \sum_{x_i \in \Omega} p(x_i|x_k) \log \frac{p(x_k, x_i)}{p'(x_i)} - \log p(x_k) \leq -\log p(x_k). \tag{6}$$
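A quick numerical check can make the decomposition and the bound in equation (6) concrete. The sketch below computes the mutual information both from the joint distribution and as the $p$-weighted sum of per-object KL divergences, then verifies $0 \leq D \leq -\log p(x_k)$ for every object. The source `p`, the channel matrix `channel` (rows indexed by the sent object, columns by the reconstructed one) and the base-2 logarithm are hypothetical choices for this example.

```python
from math import log2


def output_distribution(p, channel):
    """p'(x_j) = sum_k p(x_j | x_k) p(x_k), with channel[k][j] = p(x_j | x_k)."""
    n = len(p)
    return [sum(p[k] * channel[k][j] for k in range(n)) for j in range(n)]


def kl_divergence(row, p_out):
    """D(p(X'|x_k) || p') in bits for one row of the channel matrix."""
    return sum(r * log2(r / o) for r, o in zip(row, p_out) if r > 0)


def mutual_information_direct(p, channel):
    """Double-sum form of I(X : X') over the joint distribution."""
    p_out = output_distribution(p, channel)
    total = 0.0
    for k, row in enumerate(channel):
        for j, cond in enumerate(row):
            joint = p[k] * cond
            if joint > 0:
                total += joint * log2(joint / (p[k] * p_out[j]))
    return total


# Hypothetical source and a symmetric noisy channel (each row sums to 1).
p = [0.5, 0.3, 0.2]
channel = [
    [0.8, 0.1, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
]

p_out = output_distribution(p, channel)
divergences = [kl_divergence(row, p_out) for row in channel]

I_direct = mutual_information_direct(p, channel)
I_from_kl = sum(pk * d for pk, d in zip(p, divergences))

for pk, d in zip(p, divergences):
    print(f"p = {pk:.1f}: D = {d:.4f} bits, upper bound = {-log2(pk):.4f} bits")
print(f"I (joint form) = {I_direct:.6f}, I (KL form) = {I_from_kl:.6f}")
```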

In this framework, $p(x_k)\,D(p(X'_\Omega|x_k)\,\|\,p')$ is the average contribution of $x_k$ to the mutual information. Thus, the ratio $\varphi'(x_k)$, defined as:

$$\varphi'(x_k) \equiv p(x_k)\,\frac{D(p(X'_\Omega|x_k)\,\|\,p')}{h(X_\Omega)}, \tag{7}$$

describes the average fraction of bits from the source $X_\Omega$ coded by agent A due to $x_k$ that are properly transmitted. By defining the vectors $\varphi \equiv (\varphi(x_1), \ldots, \varphi(x_N))$ and $\varphi' \equiv (\varphi'(x_1), \ldots, \varphi'(x_N))$, one can completely describe the effect of the channel $\Lambda$ as a transformation of the vector $\varphi$:

$$\varphi \to \varphi'. \tag{8}$$

Understanding the effect of the channel as the transformation in equation (8) gives a compact and intuitive way to express the effect of the channel on all the information-theoretic functionals that we will derive. From equations (6) and (7) we observe that, for any $x_k \in \Omega$,

$$0 \leq \varphi'(x_k) \leq \varphi(x_k). \tag{9}$$

Notice that, in general, $\varphi'$ is not a probability distribution. Now, we observe that we can rewrite the usual mutual information between $X_\Omega$ and $X'_\Omega$, $I(X_\Omega : X'_\Omega)$, in terms of $\varphi$ and $\varphi'$:

$$I(X_\Omega : X'_\Omega) = h(X_\Omega) \sum_{x_k \in \Omega} \varphi'(x_k),$$

and consistently, the standard noise term $h(X_\Omega|X'_\Omega)$ (Cover, 2001) can be expressed as:

$$h(X_\Omega|X'_\Omega) = h(X_\Omega) \sum_{x_k \in \Omega} (\varphi(x_k) - \varphi'(x_k)).$$

The transmitted semantic information, to be referred to as $Q_\Omega(\Lambda)$, can now be easily derived in terms of $\varphi$ and $\varphi'$:

$$Q_\Omega(\Lambda) = \sum_{x_k \in \Omega} \varphi'(x_k)\,Q(x_k). \tag{10}$$

Equation (10) quantifies the average semantic content of the information received by agent B after being coded in some way by agent A and sent through the channel $\Lambda$. Consistently with what we have done above for the standard mutual information, the semantic noise or loss of semantic information, $\eta(X_\Omega|X'_\Omega)$, can be expressed as:

$$\eta(X_\Omega|X'_\Omega) = \sum_{x_k \in \Omega} (\varphi(x_k) - \varphi'(x_k))\,Q(x_k).$$
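The transformation $\varphi \to \varphi'$ and the resulting semantic quantities can be sketched numerically as follows. The code computes $\varphi'$ from equation (7), the transmitted semantic information of equation (10) and both noise terms, under an assumed source, channel and meaning vector `Q`. All names and numerical values are hypothetical, and logarithms are taken in base 2.

```python
from math import log2


def entropy(p):
    """Shannon entropy h(X) in bits."""
    return -sum(pk * log2(pk) for pk in p if pk > 0)


def phi(p):
    """Equation (2): entropy share of each object (requires entropy(p) > 0)."""
    h = entropy(p)
    return [-pk * log2(pk) / h if pk > 0 else 0.0 for pk in p]


def phi_prime(p, channel):
    """Equation (7): p(x_k) * D(p(X'|x_k) || p') / h(X)."""
    h = entropy(p)
    n = len(p)
    p_out = [sum(p[k] * channel[k][j] for k in range(n)) for j in range(n)]
    result = []
    for pk, row in zip(p, channel):
        d = sum(r * log2(r / o) for r, o in zip(row, p_out) if r > 0)
        result.append(pk * d / h)
    return result


# Hypothetical source, meaning values and channel (channel[k][j] = p(x_j | x_k)).
p = [0.5, 0.3, 0.2]
Q = [3.0, 1.0, 0.5]
channel = [
    [0.8, 0.1, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
]

h = entropy(p)
f, fp = phi(p), phi_prime(p, channel)

I = h * sum(fp)                                        # mutual information
noise = h * sum(a - b for a, b in zip(f, fp))          # h(X | X')
Q_transmitted = sum(b * Qk for b, Qk in zip(fp, Q))    # equation (10)
eta = sum((a - b) * Qk for a, b, Qk in zip(f, fp, Q))  # semantic noise
avg_Q = sum(a * Qk for a, Qk in zip(f, Q))             # equation (3)

print(f"I = {I:.4f}, h(X|X') = {noise:.4f}")
print(f"Q_Omega(Lambda) = {Q_transmitted:.4f}, eta = {eta:.4f}, <Q>_phi = {avg_Q:.4f}")
```

By construction the transmitted and lost parts add up to the source values, both for the statistical term ($I + h(X_\Omega|X'_\Omega) = h(X_\Omega)$) and for the semantic one ($Q_\Omega(\Lambda) + \eta = \langle Q \rangle_\varphi$).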

2.4 Transmission

We are now in a position to compute the total information transmission from agent A to agent B. This involves the mutual information between $X_\Omega$ and its reconstruction made by agent B, $X'_\Omega$, plus the semantic content that can be properly conveyed. Accordingly, the total information transmission, $I_T(X_\Omega, X'_\Omega, \Omega)$, is defined as:

$$I_T(X_\Omega, X'_\Omega, \Omega) = I(X_\Omega : X'_\Omega) + Q_\Omega(\Lambda), \tag{11}$$

which can be rewritten, as we did with equation (4), as:

$$I_T(X_\Omega, X'_\Omega, \Omega) = h(X_\Omega) \sum_{x_k \in \Omega} \varphi'(x_k)\,(1 + q(x_k)).$$

We observe that the above expression is identical to the one we derived in equation (5) describing the total information of an ensemble of objects, but with the change $\varphi \to \varphi'$. Finally, if one wants to highlight the role of the source and the different noise contributions, mimicking the standard formulation of information theory, $I_T(X_\Omega, X'_\Omega, \Omega)$ can be rewritten as:

$$I_T(X_\Omega, X'_\Omega, \Omega) = H(\Omega, X_\Omega) - h(X_\Omega|X'_\Omega) - \eta(X_\Omega|X'_\Omega).$$
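To see how equation (11) separates situations that mutual information cannot tell apart, consider the following sketch. It compares two hypothetical channels over three equiprobable objects: one scrambles the two less relevant objects, the other scrambles the most relevant object with another one. These channels are illustrative assumptions and are not claimed to be the ones drawn in figure 1; the values in `Q` and the base-2 logarithm are likewise assumed.

```python
from math import log2


def entropy(p):
    """Shannon entropy h(X) in bits."""
    return -sum(pk * log2(pk) for pk in p if pk > 0)


def phi(p):
    """Equation (2): entropy share of each object (requires entropy(p) > 0)."""
    h = entropy(p)
    return [-pk * log2(pk) / h if pk > 0 else 0.0 for pk in p]


def phi_prime(p, channel):
    """Equation (7), with channel[k][j] = p(x_j | x_k)."""
    h = entropy(p)
    n = len(p)
    p_out = [sum(p[k] * channel[k][j] for k in range(n)) for j in range(n)]
    return [
        pk * sum(r * log2(r / o) for r, o in zip(row, p_out) if r > 0) / h
        for pk, row in zip(p, channel)
    ]


def total_transmission(p, Q, channel):
    """Return (I, Q_Omega(Lambda), I_T) following equations (10) and (11)."""
    h = entropy(p)
    fp = phi_prime(p, channel)
    I = h * sum(fp)
    Q_trans = sum(b * Qk for b, Qk in zip(fp, Q))
    return I, Q_trans, I + Q_trans


p = [1 / 3, 1 / 3, 1 / 3]
Q = [2.0, 1.0, 1.0]  # hypothetical: x_1 carries more meaning

# Channel A: x_1 is always received correctly, x_2 and x_3 are confused.
channel_a = [[1.0, 0.0, 0.0], [0.0, 0.5, 0.5], [0.0, 0.5, 0.5]]
# Channel B: x_3 is always received correctly, x_1 and x_2 are confused.
channel_b = [[0.5, 0.5, 0.0], [0.5, 0.5, 0.0], [0.0, 0.0, 1.0]]

I_a, Q_a, IT_a = total_transmission(p, Q, channel_a)
I_b, Q_b, IT_b = total_transmission(p, Q, channel_b)

# Source/noise form: I_T = H - h(X|X') - eta, checked here for channel A.
h = entropy(p)
f, fp_a = phi(p), phi_prime(p, channel_a)
H = h + sum(a * Qk for a, Qk in zip(f, Q))
stat_noise = h * sum(a - b for a, b in zip(f, fp_a))
sem_noise = sum((a - b) * Qk for a, b, Qk in zip(f, fp_a, Q))
IT_a_decomposed = H - stat_noise - sem_noise

print(f"channel A: I = {I_a:.4f}, I_T = {IT_a:.4f}")
print(f"channel B: I = {I_b:.4f}, I_T = {IT_b:.4f}")
```

With these assumed inputs both channels yield the same mutual information, while $I_T$ is larger for the channel that preserves the more relevant object.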

2.5 Properties

We finally point out some of the properties of $I_T$ as defined in equation (11). We first consider a totally noisy channel $\Lambda$, for which $(\forall x_k, x_j \in \Omega)(\Lambda(x_k, x_j) = 1/N)$. In this case every conditional distribution $p(X'_\Omega|x_k)$ coincides with $p'$, so every KL divergence vanishes, leading to

$$(\forall x_k \in \Omega)\; \varphi'(x_k) = 0,$$

which results, consistently, in

$$I_T(X_\Omega, X'_\Omega, \Omega) = 0.$$

On the contrary, if the channel is noiseless, $\Lambda$ is an $N \times N$ permutation matrix, namely, an $N \times N$ matrix which has exactly one entry equal to 1 in each row and each column and 0's elsewhere. It is straightforward to check that this leads to $\varphi' = \varphi$, so that $I(X_\Omega : X'_\Omega) = h(X_\Omega)$ and $Q_\Omega(\Lambda) = \langle Q \rangle_\varphi$, and, accordingly:

$$I_T(X_\Omega, X'_\Omega, \Omega) = H(\Omega, X_\Omega).$$

We observe that there are $N!$ permutation matrices, so there are $N!$ different configurations that lead to the above result. Finally, using equation (9) we can properly bound $I_T$:

$$0 \leq I_T(X_\Omega, X'_\Omega, \Omega) \leq H(\Omega, X_\Omega).$$

The above chain of inequalities ensures that $I_T$ behaves well with respect to the intuitive constraints one has to assume for an information measure: 1) a totally noisy channel implies that no information is transmitted and 2) no information is created in the process of sending and processing.
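The two limiting cases and the bound on $I_T$ can be checked numerically. The sketch below evaluates $I_T$ for a totally noisy channel and for every permutation matrix over a small hypothetical source, and compares the results with $0$ and $H(\Omega, X_\Omega)$ respectively. The source `p`, the meaning values `Q` and the base-2 logarithm are assumptions made for illustration.

```python
from itertools import permutations
from math import log2


def entropy(p):
    """Shannon entropy h(X) in bits."""
    return -sum(pk * log2(pk) for pk in p if pk > 0)


def phi(p):
    """Equation (2): entropy share of each object (requires entropy(p) > 0)."""
    h = entropy(p)
    return [-pk * log2(pk) / h if pk > 0 else 0.0 for pk in p]


def phi_prime(p, channel):
    """Equation (7), with channel[k][j] = p(x_j | x_k)."""
    h = entropy(p)
    n = len(p)
    p_out = [sum(p[k] * channel[k][j] for k in range(n)) for j in range(n)]
    return [
        pk * sum(r * log2(r / o) for r, o in zip(row, p_out) if r > 0) / h
        for pk, row in zip(p, channel)
    ]


def total_transmission(p, Q, channel):
    """Equation (11): I_T = I + Q_Omega(Lambda)."""
    h = entropy(p)
    fp = phi_prime(p, channel)
    return h * sum(fp) + sum(b * Qk for b, Qk in zip(fp, Q))


# Hypothetical source and meaning values.
p = [0.5, 0.3, 0.2]
Q = [3.0, 1.0, 0.5]
n = len(p)

# Total information of the ensemble, equation (4).
H = entropy(p) + sum(a * Qk for a, Qk in zip(phi(p), Q))

# Totally noisy channel: every entry equals 1/N.
noisy = [[1 / n] * n for _ in range(n)]
IT_noisy = total_transmission(p, Q, noisy)

# Noiseless channels: one permutation matrix per ordering of the objects.
perm_channels = [
    [[1.0 if j == perm[k] else 0.0 for j in range(n)] for k in range(n)]
    for perm in permutations(range(n))
]
IT_perms = [total_transmission(p, Q, c) for c in perm_channels]

print(f"H = {H:.4f}, I_T (noisy) = {IT_noisy:.2e}")
print(f"{len(IT_perms)} permutation channels, I_T range: "
      f"{min(IT_perms):.4f} to {max(IT_perms):.4f}")
```

Note that relabelling permutations also reach the upper bound here, which reflects the point made in the introduction that this functional does not address referentiality conservation.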

3 DISCUSSION

We presented an information-theoretic framework to evaluate the communication between two autonomous agents which includes its semantic relevance. Specifically, we derived, within Shannon's paradigm, the amount of total information transmitted: the standard mutual information plus a term accounting for the amount of semantic bits carried by each statistical bit. The main result of the paper, provided in equation (11), shows that it is possible to evaluate the transmission of message content using the standard framework. Crucially, the specific quantification of the content of the message is left out of the theory and remains deliberately open, which makes the derived results fully general. Beyond the generality of the result, it paves the way towards a rigorous information-theoretic exploration of code emergence in scenarios where autonomous agents develop and evolve under evolutionary constraints. This is the case, among others, of artificial intelligence studies based on autonomous robots or simplified biological problems concerning the resilience and emergence of shared codes. Further work should explore how to match the results obtained here, which account for meaning transmission without assuming referentiality conservation, with the results provided in (Corominas-Murtra, 2013), where the problem of referentiality conservation is addressed without meaning quantification.

ACKNOWLEDGMENTS

This work was supported by the Austrian Science Fund (FWF), project KPP23378FW. The author wants to thank Jordi Fortuny and Rudolf Hanel for helpful discussions on the manuscript.

REFERENCES

Ay, N., Müller, M. & Szkola, A. (2010) Effective complexity and its relation to logical depth. IEEE Trans. on Inform. Theory 56(9), 4593-4607.

Cangelosi, A. & Parisi, D. (Eds.) (2000) Simulating the Evolution of Language. (Springer: London).

Bar-Hillel, Y. & Carnap, R. (1953) Semantic Information. The British Jour. for the Phil. of Sci. 4(14), 147-157.

Chaitin, G.-J. (1966) On the length of programs for computing finite binary sequences. J. ACM 13, 547-569.

Corominas-Murtra, B., Fortuny, J. & Solé, R. (2014) Towards a mathematical theory of meaningful communication. Nature Scientific Reports 4, 4587.

Cover, T. M. & Thomas, J.-A. (2001) Elements of Information Theory. (John Wiley and Sons: New York).

Gell-Mann, M. & Lloyd, S. (1996) Information measures, effective complexity, and total information. Complexity 2(1), 44-52.

Hopfield, J. (1994) Physics, computation, and why biology looks so different. J. Theor. Biol. 171, 53.

Hurford, J. (1989) Biological evolution of the Saussurean sign as a component of the language acquisition device. Lingua 77, 187.

Kolmogorov, A. (1965) Logical basis for information theory and probability theory. Problems Inform. Transmission 1, 1-7.

Komarova, N. L. & Niyogi, P. (2004) Optimizing the mutual intelligibility of linguistic agents in a shared world. Art. Int. 154, 1.

Kripke, S. (1982) Wittgenstein on Rules and Private Language. (Blackwell: Oxford).

Li, M. & Vitányi, P. (1997) An Introduction to Kolmogorov Complexity and its Applications. (Springer: New York).

Niyogi, P. (2006) The Computational Nature of Language Learning and Evolution. (MIT Press: Cambridge, Mass).

Nowak, M. A. & Krakauer, D. (1999) The Evolution of Language. Proc. Nat. Acad. Sci. USA 96, 8028.

Saussure, F. (1916) Cours de Linguistique Générale. (Bibliothèque scientifique Payot: Paris, France).

Solomonoff, R. (1964) A Formal Theory of Inductive Inference. Inform. and Control 7(1), 1-22.

Steels, L. (2001) Language games for autonomous robots. IEEE Int. Syst. 16, 16.

Steels, L. (2003) Evolving grounded communication for robots. Trends Cogn. Sci. 7, 308.

Wittgenstein, L. (1953) Philosophical Investigations. In Anscombe, G. E. M. & Rhees, R. (Eds.) (Blackwell: Oxford).
