Evaluating Body Tracking Interaction in Floor Projection Displays with an Elderly Population

Afonso Gonçalves¹ and Mónica Cameirão¹,²

¹ Madeira Interactive Technologies Institute, Funchal, Portugal

² Universidade da Madeira, Funchal, Portugal

Keywords: Large Display Interface, Floor Projection, Elderly, Natural User Interface, Kinect.

Abstract: The recent development of affordable full-body tracking sensors has made this technology accessible to millions of users and creates the opportunity to develop new natural user interfaces. In this paper we focus on developing two natural user interfaces that could easily be used by an elderly population to interact with a floor projection display. One interface uses the position of the feet to control a cursor and the distance between the feet to activate interaction. In the second interface, the cursor is controlled by ray casting the forearm onto the projection, and interaction is activated by hand pose. The interfaces were tested by 19 elderly participants in a point-and-click and a drag-and-drop task using a between-subjects experimental design. The usability and perceived workload of each interface were assessed, as well as performance indicators. Results show a clear preference by the participants for the feet-controlled interface and marginally better performance for this method.

1 INTRODUCTION

Developed countries’ populations are becoming increasingly older, with estimates that one third of European citizens will be over 65 years old by 2060 (European Commission, Economic and Financial Affairs, 2012). With older age, visual perception is commonly impaired (Fozard, 1990) and the effects of sedentary lifestyles become more prominent. A computer system that could alleviate such problems through the use of large displays and motion tracking interfaces could prove advantageous. More concretely, applications targeting engagement and physical fitness would provide extensive health benefits to older adults (World Health Organization, 2010).

Meanwhile, the release of low-cost body tracking sensors for gaming consoles has brought gesture detection into millions of homes. Examples include the Kinect V1, of which more than 24 million units were sold by Feb. 2014 (Microsoft News Center, 2013), and the Kinect V2, with 3.9 million units bundled and sold along with Xbox One consoles by Jan. 2014 (“Microsoft’s Q2,” 2014, p. 2). Broad access to this technology opens the way for more natural ways of interacting with computing systems. Natural user interfaces (NUI), where users act and feel like naturals, aim at reflecting user skills and taking full advantage of their capacities to fit the demands of their task and context from the moment they start interacting (Wigdor and Wixon, 2011). In addition to their unique interface capabilities, body tracking sensors also provide exciting possibilities for the automatic monitoring of health-related problems through kinematic data analysis. Examples include automated systems for assessing fitness indicators in the elderly (Chen et al., 2014; Gonçalves et al., 2015), automatic exercise rehabilitation guidance (Da Gama et al., 2012), and the diagnosis and monitoring of Parkinson’s disease (Spasojević et al., 2015).

The coupling of body tracking depth sensors, such as the Kinect, with projectors enables systems not only to track the user’s movements relative to the sensor but also to map the projection surfaces. In a well-calibrated system, where the transformation between the sensor and the projector is known, this allows for immersive augmented reality experiences, such as augmenting a whole room with interactive projections (Jones et al., 2014).
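To make the role of calibration concrete, the following minimal Python sketch (not taken from the paper) shows how a 3D point measured by the depth sensor could be mapped to a projector pixel once the sensor-to-projector transformation is known. It assumes an ideal pinhole projector with no lens distortion; `ProjectorCalibration` and `sensor_to_projector_pixel` are hypothetical names, and the calibration numbers in the usage lines are illustrative only.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ProjectorCalibration:
    """Hypothetical calibration result (values would come from a calibration procedure)."""
    rotation: Sequence[Sequence[float]]  # 3x3 rotation, sensor frame -> projector frame
    translation: Vec3                    # translation in metres, sensor -> projector frame
    fx: float  # focal lengths in pixels
    fy: float
    cx: float  # principal point in pixels
    cy: float


def sensor_to_projector_pixel(p: Vec3, cal: ProjectorCalibration) -> Tuple[float, float]:
    """Map a 3D point in sensor coordinates to a projector pixel (ideal pinhole model)."""
    # Rigid transform into the projector frame: p_proj = R * p + t
    x, y, z = (
        sum(cal.rotation[i][j] * p[j] for j in range(3)) + cal.translation[i]
        for i in range(3)
    )
    if z <= 0:
        raise ValueError("point lies behind the projector")
    # Perspective division followed by the intrinsic parameters.
    return cal.fx * x / z + cal.cx, cal.fy * y / z + cal.cy


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    cal = ProjectorCalibration(identity, (0.0, 0.0, 0.0), 1000.0, 1000.0, 640.0, 400.0)
    print(sensor_to_projector_pixel((0.1, -0.05, 2.0), cal))  # approx. (690.0, 375.0)
```

Inverting this mapping (pixel to floor point) is what lets a system place virtual elements at known physical positions on the floor; a real set-up would also need distortion parameters.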

In this paper we combine floor projection mapping with whole-body tracking to provide two body-gesture NUI modalities for controlling a cursor. One modality is based on the position of the feet over the display, while the other uses forearm orientation (pointing). We assessed the interfaces with an elderly population sample using abstractions of two common interaction tasks, point-and-click and drag-and-drop, and compared them in terms of usability, perceived workload and performance. This work is an initial and important step in the development of a mobile autonomous robotic system designed to help the elderly keep an active lifestyle through adaptable exergames. The platform, equipped with a micro projector and a depth sensor, will be able to identify users and provide custom exergames through live projection mapping, or spatial augmented reality. The results of this experiment will not only help improve the gesture interface of such a platform but also contribute to exergame interaction design.

Gonçalves, A. and Cameirão, M.

Evaluating Body Tracking Interaction in Floor Projection Displays with an Elderly Population.

DOI: 10.5220/0005938900240032

In Proceedings of the 3rd International Conference on Physiological Computing Systems (PhyCS 2016), pages 24-32

ISBN: 978-989-758-197-7

Copyright © 2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

While gesture-based interaction is not a requirement for a NUI, it is an obvious candidate for developing such an interface.

Several in-air gesture interfaces have been proposed for pan-and-zoom navigation control. In (Nancel et al., 2011), the authors investigated the impact of three interaction variables on task completion time and navigation overshoots when interacting with a wall-sized display. The variables were: unimanual vs. bimanual input, linear vs. circular movements, and the number of spatial dimensions for gesture guidance (in zooming). Panning was controlled by ray casting the dominant hand onto the screen and activated by a device click. Results showed that performance was significantly better when participants controlled the system bimanually (zooming with the non-dominant hand), with linear control and 1D guidance (a mouse scroll wheel for zooming).

A NUI for controlling virtual globes is introduced in (Boulos et al., 2011). The system uses a Kinect sensor to provide pan, zoom, rotation and Street View navigation commands to Google Earth. It presents an interesting possibility for a NUI, as its in-air gestures follow the same logic as common multi-touch gestures. Hand poses (open/closed) are used to activate commands, while the relative position of the hands is used to control the virtual globe. For Street View control, it uses gestures that mimic human walking: swinging the arms moves the point of view forward, while twisting the shoulders rotates it.

The use of metaphors that relate computer controls to other familiar controls is not uncommon. In (Francese et al., 2012), two different approaches for interfacing with Bing Maps were tested for their usability, presence and immersion. Using a Wiimote, the authors built a navigation interface inspired by a motorcycle metaphor: a handlebar-like motion controlled turning and right-hand tilting acted as the throttle. In addition to the metaphor, altitude over the map was controlled by left-hand tilting. The alternative approach used the Kinect to provide control and feedback inspired by a bird metaphor. Raising the arms asymmetrically enables turning, raising or lowering both arms equally from a neutral position controls altitude, and moving the hands forward makes the user advance; the controls are enhanced by feedback in the form of a bird/airplane avatar. Descriptive statistics showed high levels of usability and presence for both systems, with higher values for the latter. In (Roupé et al., 2014), the authors investigated the use of torso angle to control an avatar in a virtual reality city, and how this control method affected users’ understanding of size proportions in the virtual world. The system uses forward/backward leaning and shoulder turning to move and turn in the respective direction. It was tested with participants chosen for their knowledge of urban planning and building design, and compared to the common first-person-shooter mouse/keyboard interface. The results show that navigation with the system was perceived as both easier and less demanding than with the mouse/keyboard, and that it gave a better understanding of proportions in the modelled world.

Beyond navigation interfaces, gesture NUIs have been studied in the context of controlling computerized medical systems. This is particularly important in the operating room, where doctors must maintain a sterile field while interacting with medical computers. In (Tan et al., 2013), the authors present a Kinect-based system for touchless control of radiology imaging. It replaces mouse/keyboard commands with hand tracking controls, where the right hand controls the cursor and the left hand is used for clicking; the system is activated by standing in front of the Kinect and waving. When the system was rated qualitatively by radiologists, 69% considered that it would be useful in interventional radiology, and the majority found the tasks easy to moderately difficult to accomplish. Similarly, in (Bigdelou et al., 2012) the authors introduced a solution for interacting with these systems using inertial sensors instead. Here, gesture detection was activated with a physical switch or voice commands.

Several exploratory studies have sought to identify the gestures that naïve users would naturally perform. In (Fikkert et al., 2009), the authors found, by running an experiment in a Wizard of Oz set-up, that participants asked to perform tasks on a large display would adopt the point-and-click mouse metaphor. In (Vatavu, 2012), participants were asked to propose gestures for common TV functions. The gesture agreement was assessed for each command and a set of guidelines was proposed. Contrary to what was shown in (Nancel et al., 2011) for pan-and-zoom gestures, one-handed gesturing was preferred here. Hand posture naturally emerged as a way of communicating intention for gesture interaction.

When designing a NUI that supports in-air gestures, one must be aware of the “live mic” issue: because the system is always listening, it can, if not mitigated, produce false positive errors (Wigdor and Wixon, 2011). Effective ways of countering the “live mic” problem are to reserve specific actions for interaction or to provide a clutching mechanism that disengages gesture interpretation. The review by Golod et al. (Golod et al., 2013) suggests using a gesture phrase, a sequence of gestures that defines one command, where the first phase is activation. The activation serves as the segmentation cue that separates casual gestures from command gestures. Example guidelines include the definition of activation zones and dwell-based interactions. In (Lee et al., 2013), using a Wizard of Oz design, the authors tried to identify gestures for pan, zoom, rotate and tilt control. More importantly, in doing so they identified a natural clutching gesture for direct analogue input: a subtle change from an open hand to a semi-open one. Similarly, the system proposed in (Bragdon et al., 2011) used the palm facing the screen to activate cursor control. In (Hopmann et al., 2011), the authors proposed two activation techniques: holding a remote trigger, and activation through gaze estimation. These two techniques, plus a control condition (a trigger gesture of showing the palms to the screen), were tested for their hedonic and pragmatic qualities. Results showed that both the trigger gesture and the remote trigger scored neutral on the hedonic and pragmatic scales, whereas gaze activation scored high on both scales, achieving a “desired” rating.

Although much less common than vertical displays, interactive floors and floor-projected interfaces possess unique features. In (Krogh et al., 2004), the authors describe an interactive floor prototype, controlled by body movement and mobile phones, that was set up in a large public library hall. This arrangement enabled them to take advantage not only of the open space, filled by the large projected interface, but also of its public function of promoting social interaction. Such interfaces have also been proposed as an alternative to interactive tabletops (Augsten et al., 2010), with the advantage of being less spatially restrictive than the latter. In that study, the authors also explored the preferred methods of activating buttons on these floors, settling on foot tapping as their final design choice.

Even though the literature on NUI is extensive, our review shows that most research has used exploratory or pilot designs and could be advanced by validation studies. Furthermore, while most studies target the general population, their samples are usually not representative of the elderly and thus ignore this group’s specific impairments and needs. To address their visual perception impairments and support their need for physical activity and engagement, we focused our research on large interactive floors. To better understand how this population can interact with such an interface, we posed the following question:

• When designing a NUI to be used by an elderly population with floor projection displays, which interaction is best?

We narrowed this down by limiting the answers to two types of interface control: arm ray casting, commonly studied for vertical displays, and a touchscreen-like control, where the user activates interaction by stepping on the virtual elements. Considering the goals of an interface, we chose three elements to be rated: usability, workload, and performance. While one method provides a clear mapping at the expense of increased physical activity (stepping), the other frees the user from such movements but requires them to mentally project their arm onto the floor. Therefore, we hypothesized that the two proposed NUIs would differ in each of the three evaluation elements. To test this hypothesis, the two modes of interface control were developed and tested on an elderly population sample in two types of tasks, and evaluated in terms of usability, perceived workload and performance. We expected ray casting to provide better results, as it is more widely used for interaction with large displays and requires little physical effort from the user.

3 METHODS

3.1 Modes of Interacting

Two modes of interacting with the computer were developed, based on the kinematic information provided by a Kinect V2 sensor and a display projected on the ground. In the first, henceforth named “feet”, the cursor position is controlled by the average position of both feet on the floor plane, and activation of the virtual element under the cursor is performed by placing the feet less than 20 cm apart. In the second mode of interaction, named “arm”, the forearm position and orientation are treated as a vector (from elbow to wrist) that is ray cast onto the floor plane; the intersection controls the position of the cursor (as schematized in Figure 1), while activation is done by closing the hand. Owing to the low reliability of the Kinect V2 sensor in detecting the closed-hand pose, during the experiment this automatic detection was replaced by visual detection performed by the researcher, as in a Wizard of Oz experiment.

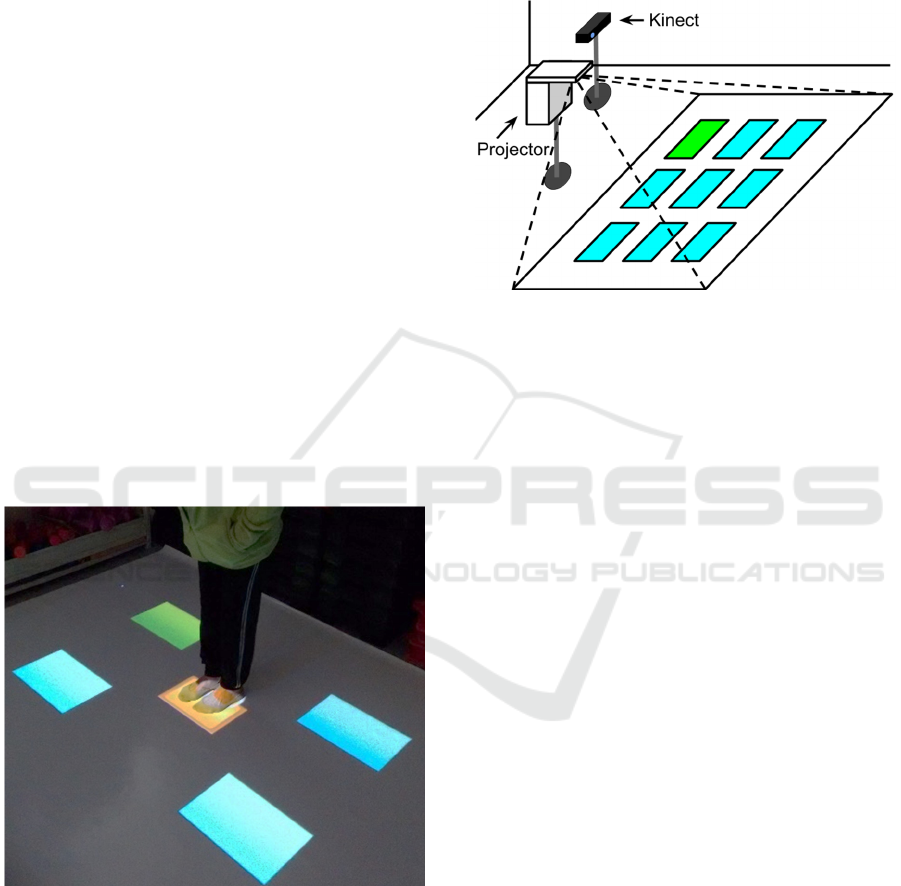

Figure 1: Controlling the cursor position through forearm ray casting.
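The two cursor mappings can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the authors’ implementation: joints are 3D points in metres in one common frame, the floor is a known plane dot(n, p) + d = 0 (how it is obtained is left open), the feet distance is taken as the 3D distance between the two foot joints, and the forearm ray starts at the elbow and points through the wrist. Only the 20 cm threshold comes from the text; all function names are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

FEET_ACTIVATION_DISTANCE_M = 0.20  # "feet less than 20 cm apart" (from the text)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def add_scaled(p: Vec3, v: Vec3, s: float) -> Vec3:
    """Return p + s * v."""
    return (p[0] + s * v[0], p[1] + s * v[1], p[2] + s * v[2])


def project_onto_plane(p: Vec3, normal: Vec3, d: float) -> Vec3:
    """Orthogonal projection of p onto the plane dot(normal, x) + d = 0."""
    k = (dot(normal, p) + d) / dot(normal, normal)
    return add_scaled(p, normal, -k)


def feet_cursor(left: Vec3, right: Vec3, normal: Vec3, d: float) -> Tuple[Vec3, bool]:
    """'feet' mode: cursor = mean foot position on the floor; active when feet are close."""
    mid = ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2, (left[2] + right[2]) / 2)
    active = math.dist(left, right) < FEET_ACTIVATION_DISTANCE_M
    return project_onto_plane(mid, normal, d), active


def arm_cursor(elbow: Vec3, wrist: Vec3, normal: Vec3, d: float) -> Optional[Vec3]:
    """'arm' mode: intersect the elbow->wrist ray with the floor (None if it misses)."""
    direction = (wrist[0] - elbow[0], wrist[1] - elbow[1], wrist[2] - elbow[2])
    denom = dot(normal, direction)
    if abs(denom) < 1e-9:
        return None  # forearm parallel to the floor: no intersection
    t = -(dot(normal, elbow) + d) / denom
    if t < 0:
        return None  # forearm points away from the floor
    return add_scaled(elbow, direction, t)
```

With a floor at y = 0 (normal (0, 1, 0), d = 0), an elbow at height 1.0 m and a wrist 0.2 m lower and 0.2 m further forward place the cursor 1.0 m ahead of the elbow. Note that small forearm angle errors are amplified with distance, which is one plausible reason pointing is harder to control than stepping.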

3.2 Experimental Tasks’ Description

The interfaces were tested in two different tasks to give broader insight into the kinds of computer interaction our two systems would affect: one task mimics the traditional point-and-click and the other the common drag-and-drop.

In both tasks the participant controls a circular cursor (ø 17 cm) with a 1-second activation duration, meaning that the activation gesture (feet together or hand closed) must be sustained for 1 second for the cursor to interact with the virtual element it is positioned on. This activation progress is represented on the cursor itself, which changes colour in a circular sweep proportional to how long the gesture has been held.
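A dwell-based activation of this kind can be sketched as a small timer fed, every frame, with the current time, whether the activation pose is held, and the element under the cursor. This Python sketch is an illustration under assumptions: the `DwellActivator` class is hypothetical, its progress value in [0, 1] is what would drive the circular colour fill, the timer restarts whenever the element under the cursor changes (the reset rule stated for both tasks below), and an activation fires only once per hold until the pose is released or the cursor moves onto a different element.

```python
from dataclasses import dataclass, field
from typing import Hashable, Optional, Tuple

ACTIVATION_DURATION_S = 1.0  # 1-second activation duration (from the text)


@dataclass
class DwellActivator:
    """Hypothetical dwell timer: the pose must be held on one element for `duration` s."""
    duration: float = ACTIVATION_DURATION_S
    _start: Optional[float] = field(default=None, init=False)
    _element: Optional[Hashable] = field(default=None, init=False)
    _fired: bool = field(default=False, init=False)

    def update(self, now: float, pose_held: bool,
               element: Optional[Hashable]) -> Tuple[float, bool]:
        """Feed one frame; return (progress in [0, 1], whether activation fired now).

        `element` identifies what is under the cursor (None = background).
        """
        if not pose_held:
            # Releasing the pose cancels any partial activation.
            self._start, self._element, self._fired = None, None, False
            return 0.0, False
        if self._start is None or element != self._element:
            # Pose just began, or the cursor crossed into/out of an element: restart.
            self._start, self._element, self._fired = now, element, False
        progress = min((now - self._start) / self.duration, 1.0)
        if progress >= 1.0 and not self._fired:
            self._fired = True  # fire once per hold on this element
            return progress, True
        return progress, False
```

The same timer serves both interfaces; only the `pose_held` input differs (feet closer than 20 cm, or a closed hand as judged by the researcher).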

3.2.1 Point-and-Click Task

In the point-and-click task, a set of 9 rectangles (40 cm x 25 cm) is projected on the floor in a 3-by-3 configuration, separated by 12 cm laterally and 8 cm vertically, as shown in Figure 2. Of the 9 rectangles, 8 are distractors (blue) and one is the target (green). Every time the target is selected, it trades places with a distractor chosen from a random sequence (the same random sequence was used for all participants). The purpose of the task is to activate the target repeatedly while avoiding activating the distractors. Performance in this task is recorded as a list of events and their time tags, the possible events being: target click (correct click), background click (neutral click), and distractor click (incorrect click). In this task, maintaining the activation pose while moving the cursor from inside a rectangle to outside, or vice versa, resets the activation timer.

Live feedback is given by drawing different coloured frames around the rectangles. An orange frame is drawn around the rectangle over which the cursor is located. Upon activation, the frame changes colour to red if the rectangle was a distractor or to green if it was the target. This frame remains until the cursor is moved off the rectangle.

Figure 2: Point-and-click task being performed with the “feet” interface.
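The point-and-click logic described above can be sketched as follows. This Python sketch is an illustration, not the experimental software: the grid is centred on an arbitrary origin in centimetres, the target starts in the middle cell, and the fixed random sequence is modelled with a seeded `random.Random` (the seed value is hypothetical). Because all distractors look identical, swapping the target with a distractor amounts to reassigning which cell is the target.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple

RECT_W, RECT_H = 40.0, 25.0  # rectangle size in cm (from the text)
GAP_X, GAP_Y = 12.0, 8.0     # lateral / vertical separation in cm (from the text)


@dataclass(frozen=True)
class Rect:
    cx: float  # centre in cm (origin placement is an assumption)
    cy: float

    def contains(self, x: float, y: float) -> bool:
        return abs(x - self.cx) <= RECT_W / 2 and abs(y - self.cy) <= RECT_H / 2


def grid_3x3() -> List[Rect]:
    """Centres of the 3x3 layout, row by row; index 4 is the middle cell."""
    step_x, step_y = RECT_W + GAP_X, RECT_H + GAP_Y
    return [Rect(c * step_x, r * step_y) for r in (-1, 0, 1) for c in (-1, 0, 1)]


class PointAndClickTask:
    def __init__(self, seed: int = 0) -> None:
        self.rects = grid_3x3()
        self.target = 4                 # hypothetical start: middle cell
        self.rng = random.Random(seed)  # fixed seed -> same sequence for everyone
        self.events: List[Tuple[float, str]] = []

    def hit(self, x: float, y: float) -> Optional[int]:
        return next((i for i, r in enumerate(self.rects) if r.contains(x, y)), None)

    def click(self, t: float, x: float, y: float) -> str:
        """Classify one completed activation at cursor (x, y) and log it with time t."""
        i = self.hit(x, y)
        if i is None:
            kind = "neutral"    # background click
        elif i == self.target:
            kind = "correct"    # target click; the target swaps with a random distractor
            self.target = self.rng.choice([j for j in range(len(self.rects)) if j != i])
        else:
            kind = "incorrect"  # distractor click
        self.events.append((t, kind))
        return kind
```

`click` would be called whenever a dwell activation completes; the logged `(time, kind)` pairs correspond to the event list from which the performance counts in Table 1 are derived.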

3.2.2 Drag-and-Drop Task

In the drag-and-drop task, 4 rectangles (40 cm x 25 cm) are projected on the ground, spaced 70 cm horizontally and 40 cm vertically; 3 of them are blue distractors and one is the target (green). In the centre, a movable yellow rectangle (30 cm x 19 cm) is initially shown, as presented in Figure 3. The participant can “grab” the yellow rectangle by activating it; once it has been “grabbed”, it can be dropped by activating again (joining the feet or closing the hand, depending on the mode of interaction). The purpose of the task is to repeatedly “grab” the yellow rectangle and “drop” it onto the target. Every time this is done successfully, the yellow rectangle is reset to the centre and the target changes places with one of the distractors in a random sequence (the sequence was kept constant across all participants). Performance is recorded as a list of events and their time tags; the possible events for this task are: grab yellow (correct grab), attempt to grab anything else (neutral grab), drop yellow on target (correct drop), drop yellow on background (neutral drop), and drop yellow on a distractor (incorrect drop).

As before, maintaining the activation pose while moving the cursor from inside a rectangle to outside, or vice versa, resets the activation timer. Likewise, a set of coloured frames gives live feedback to the users. An orange frame highlights any rectangle under the cursor. Once the yellow object is activated, its frame changes to green, indicating that it is being dragged by the cursor. Dropping it on a distractor creates a red frame around the distractor, whereas dropping it on the target shows a green frame around the target.

Figure 3: Drag-and-drop task being performed with the “feet” interface.
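The grab/drop bookkeeping can be sketched as a two-state machine (idle or holding). In this hypothetical Python sketch, which is not the authors’ code, `activate` is assumed to be called each time a dwell activation completes, drops are resolved at the cursor position, the yellow rectangle stays where it was dropped after a neutral or incorrect drop (the text does not say), and the coordinates of the four drop rectangles are left to the caller rather than derived from the spacing given above.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

YELLOW_W, YELLOW_H = 30.0, 19.0  # movable rectangle size in cm (from the text)


@dataclass
class Rect:
    cx: float  # centre in cm
    cy: float
    w: float
    h: float

    def contains(self, x: float, y: float) -> bool:
        return abs(x - self.cx) <= self.w / 2 and abs(y - self.cy) <= self.h / 2


class DragAndDropTask:
    """Hypothetical two-state (idle / holding) model of the drag-and-drop task."""

    def __init__(self, slots: List[Rect], seed: int = 0) -> None:
        self.slots = slots                # drop rectangles: 1 target + distractors
        self.target = 0                   # hypothetical initial target index
        self.yellow = Rect(0.0, 0.0, YELLOW_W, YELLOW_H)  # starts at the centre
        self.holding = False
        self.rng = random.Random(seed)    # fixed seed -> same sequence for everyone
        self.events: List[Tuple[float, str]] = []

    def move_cursor(self, x: float, y: float) -> None:
        if self.holding:  # a grabbed object follows the cursor
            self.yellow.cx, self.yellow.cy = x, y

    def activate(self, t: float, x: float, y: float) -> str:
        """Handle one completed activation at cursor (x, y); log and return the event."""
        if not self.holding:
            if self.yellow.contains(x, y):
                self.holding, kind = True, "correct_grab"
            else:
                kind = "neutral_grab"
        else:
            self.holding = False
            self.yellow.cx, self.yellow.cy = x, y  # drop where the cursor is
            hit = next((i for i, s in enumerate(self.slots) if s.contains(x, y)), None)
            if hit is None:
                kind = "neutral_drop"
            elif hit == self.target:
                kind = "correct_drop"
                self.yellow.cx, self.yellow.cy = 0.0, 0.0  # reset to the centre
                self.target = self.rng.choice(
                    [i for i in range(len(self.slots)) if i != self.target])
            else:
                kind = "incorrect_drop"
        self.events.append((t, kind))
        return kind
```

Keeping the state explicit makes the “accidental drop” case easy to see: any activation while holding ends the drag, so a spurious activation over the background is logged as a neutral drop.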

3.3 Technical Setup

The hardware was set up in a dimly lit room, and a white plastic canvas was placed on the floor to enhance the reflectivity of the projection. A Hitachi CP-AW100N projector was positioned vertically, facing the floor. This arrangement enabled high contrast of the projected virtual elements and a projection area larger than our tasks needed (150 cm x 90 cm). A Microsoft Kinect V2 was placed horizontally next to the projector, facing the projection area (Figure 4).

Figure 4: Experimental setup diagram.

3.4 Sample

The target population of the study was community-dwelling elderly people. A self-selected sample of this population was recruited at Funchal’s Santo António civic centre with the following inclusion criteria:

1. Being more than 60 years old;

2. Not presenting cognitive impairments (assessed by the Mini Mental State Examination Test (Folstein et al., 1975));

3. Not presenting low physical functioning (assessed by the Composite Physical Function scale (Rikli, 1998)).

The experiment took place over 2 days at the facilities of the civic centre’s municipal gymnasium for the elderly. Nineteen participants (3 males and 16 females; age M = 70.2, SD = 5.3) volunteered and provided written informed consent. The participants were randomly allocated to the conditions, with 10 assigned to the “feet” and 9 to the “arm” interaction condition.

3.5 Experimental Protocol

The experiment followed a between-subjects design. The participants were asked to answer questionnaires on identification, demographic information and level of computer experience. They were evaluated with the Composite Physical Function Scale and the Mini Mental State Examination Test. During each individual participant trial, the point-and-click task was explained and demonstrated with the interface of the participant’s experimental condition. This was followed by a training period and then by a 2-minute session in which performance metrics were recorded. Lastly, the participant was asked to fill in the System Usability Scale (SUS) (Brooke, 1996) and NASA-TLX (TLX) (Hart and Staveland, 1988) questionnaires. Afterwards, the same procedure was followed for the drag-and-drop task.
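The questionnaires yield the 0 to 100 scores analysed in Section 3.6. The Python sketch below shows the standard SUS scoring rule (ten items rated 1 to 5; odd-numbered items contribute the rating minus 1, even-numbered items 5 minus the rating; the sum is multiplied by 2.5). The paper does not state whether the raw (unweighted) or the weighted NASA-TLX was used, so `tlx_index` assumes the raw variant by default and computes the weighted one only when pairwise-comparison weights summing to 15 are supplied; both function names are hypothetical.

```python
from typing import Mapping, Optional, Sequence

TLX_SCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def sus_score(responses: Sequence[int]) -> float:
    """System Usability Scale score (0-100) from ten item ratings on a 1-5 scale."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly 10 ratings between 1 and 5")
    # Items 1, 3, 5, 7, 9 (even 0-based index) are positively worded: rating - 1.
    # Items 2, 4, 6, 8, 10 (odd 0-based index) are negatively worded: 5 - rating.
    total = sum(r - 1 if i % 2 == 0 else 5 - r for i, r in enumerate(responses))
    return total * 2.5


def tlx_index(ratings: Mapping[str, float],
              weights: Optional[Mapping[str, int]] = None) -> float:
    """NASA-TLX workload (0-100): raw mean by default, weighted if weights are given."""
    if set(ratings) != set(TLX_SCALES):
        raise ValueError(f"expected ratings for {TLX_SCALES}")
    if weights is None:
        return sum(ratings[s] for s in TLX_SCALES) / len(TLX_SCALES)  # raw TLX
    if not set(weights) <= set(TLX_SCALES) or sum(weights.values()) != 15:
        raise ValueError("weights must come from the 15 pairwise comparisons")
    return sum(ratings[s] * weights.get(s, 0) for s in TLX_SCALES) / 15
```

For example, answering 3 to every SUS item gives 50.0, and a SUS of 68 is the commonly used threshold for “good” usability referred to in the results.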

3.6 Analysis

For each participant, the data consisted of the SUS score and TLX index (both measured from 0 to 100) and the task-related performance, as described in sub-sections 3.2.1 and 3.2.2. Normality of the distributions of the performance measurements was assessed using the Kolmogorov-Smirnov test. The variables that were normally distributed are marked in Table 1 and Table 2. For pairs of measurements (between conditions) that met the assumption of normality, independent-samples t-tests were used; when Levene’s test showed significant differences in the pair’s variances, equal variances were not assumed. All other pairs were tested with the Mann-Whitney U test. Differences in the SUS and TLX scores (ordinal variables) between conditions were also tested with the Mann-Whitney U test. All statistical tests were two-tailed at α = .05, using IBM SPSS Statistics 22.
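The decision rule and the non-parametric test can be sketched in dependency-free Python. This is an illustration, not a replacement for SPSS: `choose_test` only encodes the rule above (the normality flags and the Levene p-value are assumed to come from elsewhere), and `mann_whitney` computes U from average ranks together with the usual large-sample normal approximation with a tie correction and no continuity correction, returning a two-tailed p and the effect size r = Z/√N. Whether SPSS applied exactly these corrections, or whether the reported r values were computed this way, is an assumption; SPSS may also report an exact p for small samples.

```python
import math
from typing import Dict, List, Sequence


def choose_test(normal_a: bool, normal_b: bool, levene_p: float, alpha: float = 0.05) -> str:
    """Encode the test-selection rule; normality flags come from a K-S test."""
    if normal_a and normal_b:
        # Equal variances are not assumed when Levene's test is significant.
        return "welch_t" if levene_p < alpha else "student_t"
    return "mann_whitney_u"


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney(a: Sequence[float], b: Sequence[float]) -> Dict[str, float]:
    """Mann-Whitney U with a tie-corrected normal approximation (no continuity correction)."""
    n1, n2 = len(a), len(b)
    n = n1 + n2
    pooled = list(a) + list(b)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)  # smaller U, so Z and r come out non-positive
    counts: Dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values()) / (n * (n - 1))
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term))
    if sigma == 0:
        raise ValueError("all observations are tied; Z is undefined")
    z = (u - n1 * n2 / 2) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed p from the standard normal
    return {"U": u, "Z": z, "p": p, "r": z / math.sqrt(n)}
```

Because U is taken as the smaller of the two possible values, Z and r are never positive here, matching the sign of the effect sizes reported in Section 4.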

4 RESULTS

4.1 Point-and-Click Task

For the “feet” condition in the point-and-click task, the descriptive statistics are presented in Table 1, where we can observe very low values of incorrect clicks and a high median SUS score (SUS scores above 68 are considered good). The descriptive statistics for the “arm” condition are also presented in Table 1. Higher values of neutral and incorrect clicks are visible compared to the “feet” condition. Likewise, there is a decrease in the median SUS usability score and an increase in the TLX workload index.

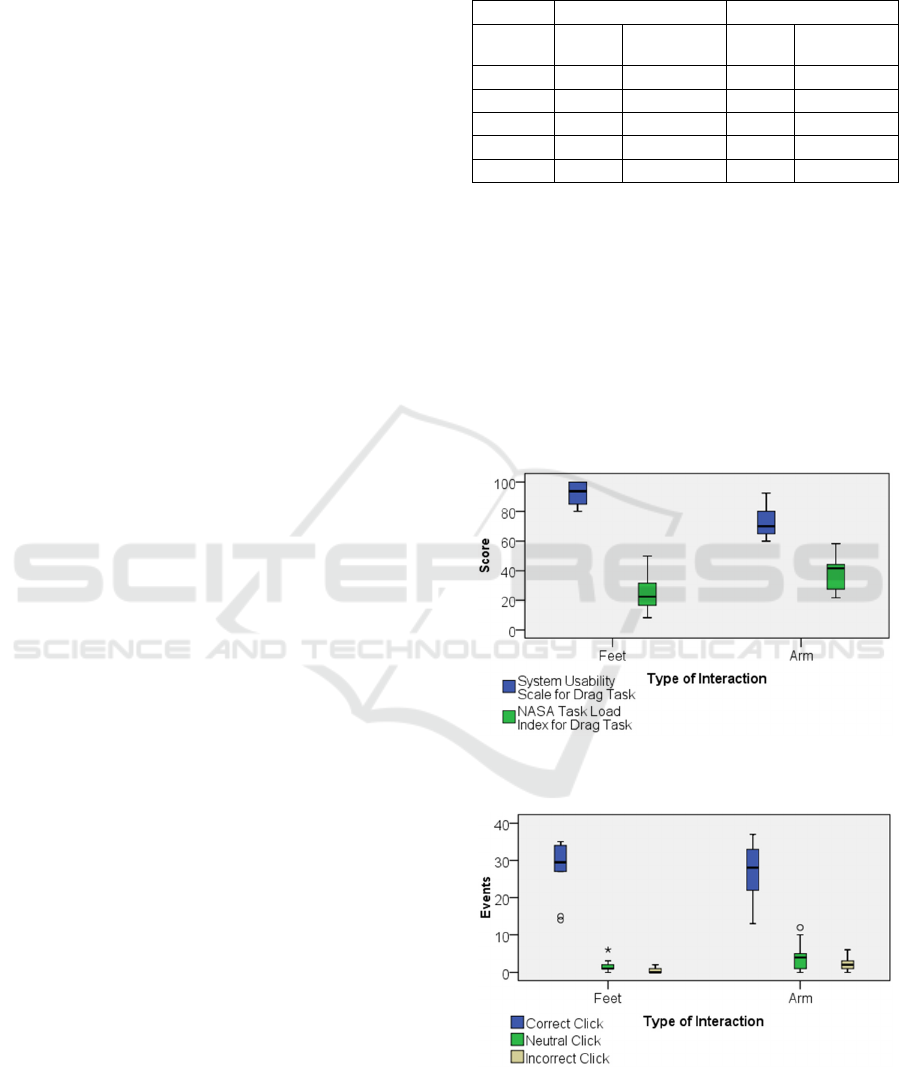

Results revealed significantly higher System Usability Scale scores for the participants interfacing with their feet compared to the participants interfacing with their dominant arm, U = 18.5, p < .05, with effect size r = -.4997. The Task Load Index scores were not significantly different between the interfaces, U = 24.5, p > .05 (Figure 5). The numbers of correct and neutral clicks were not significantly different between the interfaces, U = 40.5 and U = 29.0, p > .05, respectively. However, there were fewer incorrect clicks for the participants interfacing with their feet than for the participants interfacing with the arm, U = 15.0, p < .05, r = -.5863 (Figure 6, where circles represent outliers and stars extreme outliers).

Table 1: Descriptive statistics of the measurements for the point-and-click task.

| Variable | “Feet” median | “Feet” IQR | “Arm” median | “Arm” IQR |
|---|---|---|---|---|
| SUS | 91.25 | 21.25 | 72.50 | 25.00 |
| TLX | 23.75 | 27.71 | 40.83 | 18.33 |
| Correct | 29.50 | 10 | 28.00ⁿ | 15 |
| Neutral | 1.00 | 2 | 4.00ⁿ | 7 |
| Incorrect | 0.00 | 1 | 2.00ⁿ | 3 |

ⁿ Normally distributed. IQR: interquartile range.

Figure 5: System Usability Scale and NASA Task Load Index scores for the point-and-click task.

Figure 6: Participants’ performance on the point-and-click task.
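As a rough consistency check, and assuming the reported effect sizes follow the common convention r = Z/√N (the paper does not state how r was computed), the Z values behind the point-and-click results can be recovered with N = 19 and compared with the untied normal approximation for n₁ = 10 and n₂ = 9:

$$
Z = r\sqrt{N}:\qquad -0.4997\sqrt{19} \approx -2.18\ \text{(SUS)},\qquad -0.5863\sqrt{19} \approx -2.56\ \text{(incorrect clicks)}
$$

$$
\mu_U = \frac{n_1 n_2}{2} = 45,\qquad \sigma_U = \sqrt{\frac{n_1 n_2 (N+1)}{12}} = \sqrt{150} \approx 12.25,\qquad \frac{18.5 - 45}{12.25} \approx -2.16,\qquad \frac{15 - 45}{12.25} \approx -2.45
$$

The implied |Z| values are slightly larger than the untied ones, which is what a tie correction (it shrinks σ_U) would produce; this is compatible with, but does not confirm, the assumed convention.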


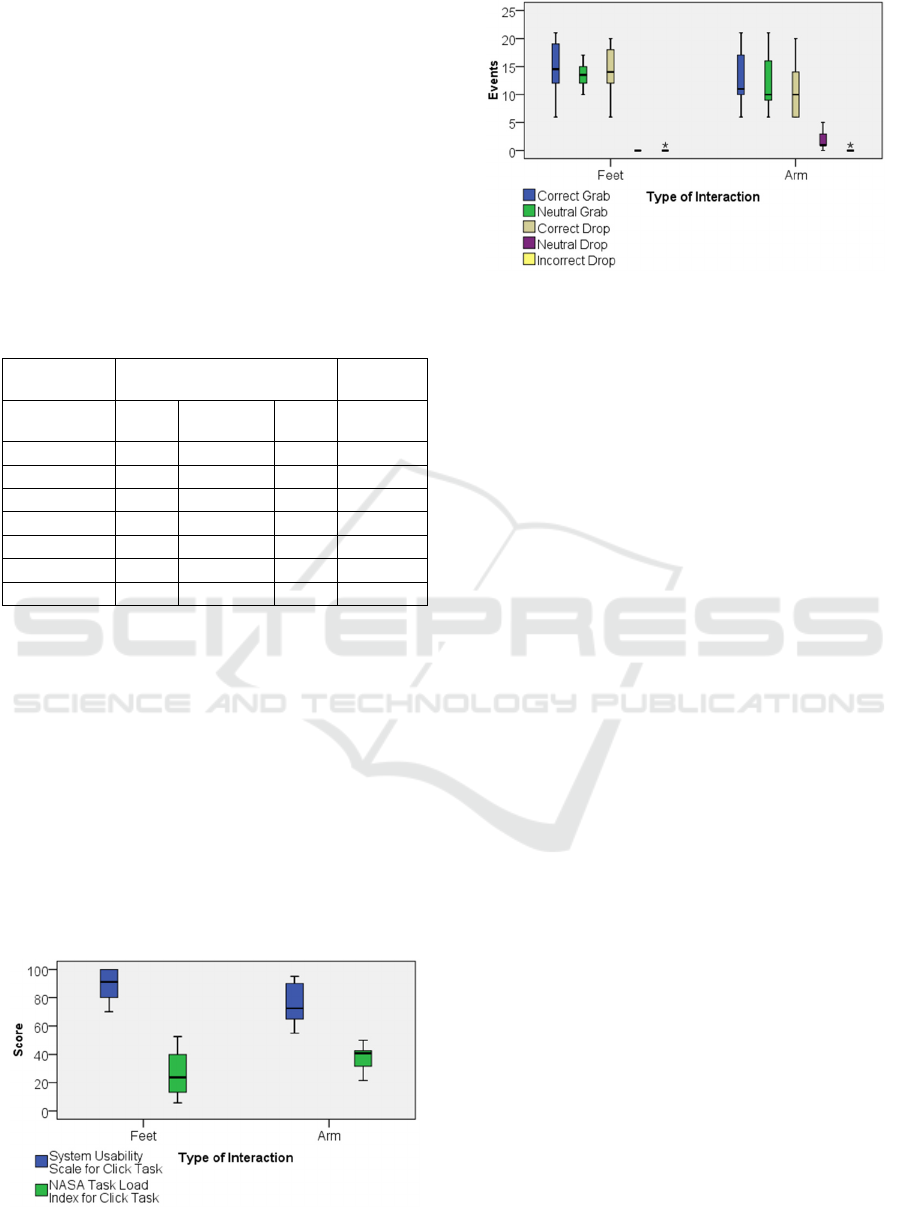

4.2 Drag-and-Drop Task

The descriptive statistics for the “feet” condition in the drag-and-drop task are presented in Table 2, where we can observe low values of incorrect drops and no neutral (accidental) drops. Usability values are very high and workload is moderately low. For the “arm” condition of the drag-and-drop task, Table 2 shows a marginally good SUS usability score, barely over 68. The TLX workload is at moderate levels, and neutral (accidental) drops are present.

Table 2: Descriptive statistics of the measurements for the drag-and-drop task.

| Variable | “Feet” median | “Feet” IQR | “Arm” median | “Arm” IQR |
|---|---|---|---|---|
| SUS | 93.75 | 16.25 | 70.00 | 21.25 |
| TLX | 22.50 | 16.46 | 41.67 | 22.50 |
| Correct Grab | 14.50ⁿ | 8 | 11.00ⁿ | 9 |
| Neutral Grab | 13.50ⁿ | 4 | 10.00ⁿ | 9 |
| Correct Drop | 14.00ⁿ | 7 | 10.00ⁿ | 10 |
| Neutral Drop | 0.00 | 0 | 1.00ⁿ | 3 |
| Incorrect Drop | 0.00 | 0 | 0.00 | 0 |

ⁿ Normally distributed. IQR: interquartile range.

The results again indicated a significantly higher System Usability Scale score and a lower Task Load Index score for the “feet” interaction condition, U = 9 and U = 17, p < .05, with effect sizes r = -.6777 and r = -.5247, respectively (Figure 7). There were no significant differences for the normally distributed data on correct grabs, neutral grabs, and correct drops, t(17) = .565, t(17) = .863 and t(17) = 1.336, p > .05, respectively. Neutral drops were significantly more frequent in the “arm” interaction condition, U = 10, p < .05, r = -.7595, and there were no significant differences in the number of incorrect drops, U = 44.5, p > .05 (Figure 8).

Figure 7: System Usability Scale and NASA Task Load Index scores for the drag-and-drop task.

Figure 8: Participants’ performance on the drag-and-drop task.

5 DISCUSSION

For both the point-and-click and drag-and-drop tasks, the interaction method had a significant impact on system usability, with the “feet” method preferred in both cases. The “feet” modality achieved high levels of usability (median scores over 90), while the “arm” modality scored around 71, very close to the standard lower limit for good usability, 68. Regarding perceived workload, no significant differences were found between the conditions for the point-and-click task, whereas for drag-and-drop the “feet” interface was significantly less demanding for the participants. In both tasks, median workload indexes were around 23 for the “feet” and around 41 for the “arm”. Although interfaces similar to our “arm” method have been the focus of previous research (Bragdon et al., 2011; Nancel et al., 2011) and shown to be a method that participants naturally display (Fikkert et al., 2009; Lee et al., 2013; Vatavu, 2012), in our experiment we found sufficient evidence that an alternative way of interacting with projected floor elements is preferred by elderly people. This preference for the “feet” interface might be linked to the simpler cursor control mapping it provides, which is known to lower cognitive load (Hondori et al., 2015; Roupé et al., 2014). Finally, in terms of performance, very low numbers of neutral and incorrect clicks were observed in the point-and-click task (although incorrect clicks were significantly more frequent with the “arm”), together with a comparable number of correct clicks. Similar results were found in the drag-and-drop task, with low numbers of neutral and incorrect drops for both methods and similar values of correct grabs, neutral grabs and correct drops. Still, the “feet” interface was again better, with significantly fewer neutral drops than the “arm” interface. Despite these differences, the remaining performance indicators were not significantly different. Therefore, caution is advised in interpreting these results as proof of a clear performance advantage for either interface.

6 CONCLUSIONS

Given the increasing number of elderly people in developed countries and the specific needs of this population, we sought insight into the desirability of different modes of controlling interaction with interactive floors, a medium that, being easily scaled, can mitigate the visual perception deficits associated with old age and can promote physical activity. Thus, in this work, two methods of interacting with virtual elements projected on the floor were developed and tested for differences in their usability, perceived workload and performance ratings with an elderly population. The interfaces consisted of either controlling the cursor through the direct mapping of feet position onto the projection surface or, alternatively, mapping the cursor position to the participant’s forearm ray cast onto the surface. These interfaces were tested on two different tasks, one mimicking a point-and-click interaction, the other a drag-and-drop. Although the NUI research field is extensive, there is a lack of studies that address floor-projected interfaces, and studies with the elderly are even rarer. This study offers insight into the preferred modes of interaction for this elderly population. Contrary to our initial expectation, the results showed that of the two proposed methods the “feet” interface was superior in all the domains measured. This method was perceived as more usable in both tasks tested and as less demanding in terms of workload, at least for the drag-and-drop task. In terms of performance, a marginal advantage was also shown for the “feet” method. These insights will help in the development of systems aiming to provide full-body NUIs for floor projection displays, such as mobile robots.

ACKNOWLEDGMENT

The authors thank Funchal’s Santo António municipal gymnasium for their cooperation, Teresa Paulino for the development of the experimental tasks, and Fábio Pereira for his help during the data collection process.

This work was supported by the Fundação para a Ciência e Tecnologia through the AHA project (CMUPERI/HCI/0046/2013) and LARSyS – UID/EEA/50009/2013.

REFERENCES

Augsten, T., Kaefer, K., Meusel, R., Fetzer, C., Kanitz, D.,

Stoff, T., Becker, T., Holz, C., Baudisch, P., 2010.

Multitoe: High-precision Interaction with Back-

projected Floors Based on High-resolution Multi-touch

Input, in: Proceedings of the 23Nd Annual ACM

Symposium on User Interface Software and

Technology, UIST ’10. ACM, New York, NY, USA, pp.

209–218. doi:10.1145/1866029.1866064

Bigdelou, A., Schwarz, L., Navab, N., 2012. An Adaptive Solution for Intra-operative Gesture-based Human-machine Interaction, in: Proceedings of the 2012 ACM International Conference on Intelligent User Interfaces, IUI ’12. ACM, New York, NY, USA, pp. 75–84. doi:10.1145/2166966.2166981

Boulos, M.N.K., Blanchard, B.J., Walker, C., Montero, J., Tripathy, A., Gutierrez-Osuna, R., 2011. Web GIS in practice X: a Microsoft Kinect natural user interface for Google Earth navigation. Int. J. Health Geogr. 10, 45. doi:10.1186/1476-072X-10-45

Bragdon, A., DeLine, R., Hinckley, K., Morris, M.R., 2011. Code Space: Touch + Air Gesture Hybrid Interactions for Supporting Developer Meetings, in: Proceedings of the ACM International Conference on Interactive Tabletops and Surfaces, ITS ’11. ACM, New York, NY, USA, pp. 212–221. doi:10.1145/2076354.2076393

Brooke, J., 1996. SUS: A “quick and dirty” usability scale, in: Usability Evaluation in Industry, pp. 189–194.

Chen, C., Liu, K., Jafari, R., Kehtarnavaz, N., 2014. Home-based Senior Fitness Test measurement system using collaborative inertial and depth sensors, in: 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 4135–4138. doi:10.1109/EMBC.2014.6944534

Da Gama, A., Chaves, T., Figueiredo, L., Teichrieb, V., 2012. Guidance and Movement Correction Based on Therapeutics Movements for Motor Rehabilitation Support Systems, in: 2012 14th Symposium on Virtual and Augmented Reality (SVR), pp. 191–200. doi:10.1109/SVR.2012.15

European Commission, Economic and Financial Affairs, 2012. The 2012 Ageing Report.

Fikkert, W., Vet, P. van der, Veer, G. van der, Nijholt, A., 2009. Gestures for Large Display Control, in: Kopp, S., Wachsmuth, I. (Eds.), Gesture in Embodied Communication and Human-Computer Interaction, Lecture Notes in Computer Science. Springer Berlin Heidelberg, pp. 245–256.


Folstein, M.F., Folstein, S.E., McHugh, P.R., 1975. “Mini-mental state”: A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198. doi:10.1016/0022-3956(75)90026-6

Fozard, J., 1990. Vision and hearing in aging, in: Handbook of the Psychology of Aging. Academic Press, pp. 143–156.

Francese, R., Passero, I., Tortora, G., 2012. Wiimote and Kinect: Gestural User Interfaces Add a Natural Third Dimension to HCI, in: Proceedings of the International Working Conference on Advanced Visual Interfaces, AVI ’12. ACM, New York, NY, USA, pp. 116–123. doi:10.1145/2254556.2254580

Golod, I., Heidrich, F., Möllering, C., Ziefle, M., 2013. Design Principles of Hand Gesture Interfaces for Microinteractions, in: Proceedings of the 6th International Conference on Designing Pleasurable Products and Interfaces, DPPI ’13. ACM, New York, NY, USA, pp. 11–20. doi:10.1145/2513506.2513508

Gonçalves, A., Gouveia, É., Cameirão, M., Bermúdez i Badia, S., 2015. Automating Senior Fitness Testing through Gesture Detection with Depth Sensors. Presented at the IET International Conference on Technologies for Active and Assisted Living (TechAAL), Surrey, United Kingdom.

Hart, S.G., Staveland, L.E., 1988. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research, in: Hancock, P.A., Meshkati, N. (Eds.), Human Mental Workload, Advances in Psychology. North-Holland, pp. 139–183.

Hopmann, M., Salamin, P., Chauvin, N., Vexo, F., Thalmann, D., 2011. Natural Activation for Gesture Recognition Systems, in: CHI ’11 Extended Abstracts on Human Factors in Computing Systems, CHI EA ’11. ACM, New York, NY, USA, pp. 173–183. doi:10.1145/1979742.1979642

Jones, B., Sodhi, R., Murdock, M., Mehra, R., Benko, H., Wilson, A., Ofek, E., MacIntyre, B., Raghuvanshi, N., Shapira, L., 2014. RoomAlive: Magical Experiences Enabled by Scalable, Adaptive Projector-camera Units, in: Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, UIST ’14. ACM, New York, NY, USA, pp. 637–644. doi:10.1145/2642918.2647383

Krogh, P., Ludvigsen, M., Lykke-Olesen, A., 2004. “Help Me Pull That Cursor”: A Collaborative Interactive Floor Enhancing Community Interaction. Australas. J. Inf. Syst. 11. doi:10.3127/ajis.v11i2.126

Lee, S.-S., Chae, J., Kim, H., Lim, Y., Lee, K., 2013. Towards More Natural Digital Content Manipulation via User Freehand Gestural Interaction in a Living Room, in: Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing, UbiComp ’13. ACM, New York, NY, USA, pp. 617–626. doi:10.1145/2493432.2493480

Microsoft News Center, 2013. Xbox Execs Talk Momentum and the Future of TV. News Cent.

Microsoft’s Q2: record $24.52 billion revenue and 3.9 million Xbox One sales [WWW Document], 2014. The Verge. URL http://www.theverge.com/2014/1/23/5338162/microsoft-q2-2014-financial-earnings (accessed 1.19.16).

Mousavi Hondori, H., Khademi, M., Dodakian, L., McKenzie, A., Lopes, C.V., Cramer, S.C., 2015. Choice of Human-Computer Interaction Mode in Stroke Rehabilitation. Neurorehabil. Neural Repair. doi:10.1177/1545968315593805

Nancel, M., Wagner, J., Pietriga, E., Chapuis, O., Mackay, W., 2011. Mid-air Pan-and-zoom on Wall-sized Displays, in: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’11. ACM, New York, NY, USA, pp. 177–186. doi:10.1145/1978942.1978969

Rikli, R.E., Jones, C.J., 1998. The Reliability and Validity of a 6-Minute Walk Test as a Measure of Physical Endurance in Older Adults. J. Aging Phys. Act. 6, 363–375.

Roupé, M., Bosch-Sijtsema, P., Johansson, M., 2014. Interactive navigation interface for Virtual Reality using the human body. Comput. Environ. Urban Syst. 43, 42–50. doi:10.1016/j.compenvurbsys.2013.10.003

Spasojević, S., Santos-Victor, J., Ilić, T., Milanović, S., Potkonjak, V., Rodić, A., 2015. A Vision-Based System for Movement Analysis in Medical Applications: The Example of Parkinson Disease, in: Computer Vision Systems, Lecture Notes in Computer Science. Springer International Publishing, pp. 424–434.

Tan, J.H., Chao, C., Zawaideh, M., Roberts, A.C., Kinney, T.B., 2013. Informatics in Radiology: Developing a Touchless User Interface for Intraoperative Image Control during Interventional Radiology Procedures. RadioGraphics 33, E61–E70. doi:10.1148/rg.332125101

Vatavu, R.-D., 2012. User-defined Gestures for Free-hand TV Control, in: Proceedings of the 10th European Conference on Interactive TV and Video, EuroITV ’12. ACM, New York, NY, USA, pp. 45–48. doi:10.1145/2325616.2325626

Wigdor, D., Wixon, D., 2011. Brave NUI World: Designing Natural User Interfaces for Touch and Gesture. Elsevier.

World Health Organization, 2010. Global recommendations on physical activity for health.

PhyCS 2016 - 3rd International Conference on Physiological Computing Systems
