A Novel Method for Unsupervised and Supervised Conversational

Message Thread Detection

Giacomo Domeniconi

1

, Konstantinos Semertzidis

2

, Vanessa Lopez

3

, Elizabeth M. Daly

3

,

Spyros Kotoulas

3

and Gianluca Moro

1

1

Department of Computer Science and Engineering (DISI), University of Bologna, Bologna, Italy

2

Department of Computer Science and Engineering, University of Ioannina, Ioannina, Greece

3

IBM Research - Ireland Damastown Industrial Estate Mulhuddart, Dublin 15, Ireland

Keywords:

Clustering Algorithms, Conversation Threads, Topic Detection.

Abstract:

Efficiently detecting conversation threads from a pool of messages, such as social network chats, emails,

comments to posts, news etc., is relevant for various applications, including Web Marketing, Information

Retrieval and Digital Forensics. Existing approaches focus on text similarity using keywords as features that

are strongly dependent on the dataset. Therefore, dealing with new corpora requires further costly analyses

conducted by experts to find out new relevant features. This paper introduces a novel method to detect threads

from any type of conversational texts overcoming the issue of previously determining specific features for

each dataset. To automatically determine the relevant features of messages we map each message into a three

dimensional representation based on its semantic content, the social interactions in terms of sender/recipients

and its timestamp; then clustering is used to detect conversation threads. In addition, we propose a supervised

approach to detect conversation threads that builds a classification model which combines the above extracted

features for predicting whether a pair of messages belongs to the same thread or not. Our model harnesses the

distance measure of a message to a cluster representing a thread to capture the probability that a message is

part of that same thread. We present our experimental results on seven datasets, pertaining to different types

of messages, and demonstrate the effectiveness of our method in the detection of conversation threads, clearly

outperforming the state of the art and yielding an improvement of up to a 19%.

1 INTRODUCTION

In recent years, online texting has become a part of

most people’s everyday lives. The use of email, web

chats, online conversations and social groups has be-

come widespread. It is a fast, economical and effi-

cient way of sharing information and it also provides

users the ability to discuss different topics with dif-

ferent people. Understanding the context of digital

conversations supports a wide range of applications

such as marketing, social network extraction, expert

finding, the improvement of email management, rank-

ing content and others (Jurczyk and Agichtein, 2007;

Coussement and den Poel, 2008; Glass and Colbaugh,

2010; F. M. Khan and Pottenger, 2002).

The increased use of online fora leads to people

being overwhelmed by information. For example, this

can happen when a user has hundreds of new unread

messages in a chat or one needs to track and organise

posts in forums or social groups. To instantly have

a clear view of different discussions, which requires

expensive and tedious efforts for a person, we need to

automatically organise this data stream into threads.

There has been considerable effort in extracting

topics from document sets, mainly through a variety

of techniques derived from Probabilistic Latent Se-

mantic Indexing (pLSI) (Hofmann, 1999) and Latent

Dirichlet Allocation (LDA) (Blei et al., 2003). How-

ever, the problem of detecting threads from conversa-

tional messages differs from document topic extrac-

tion for several aspects (Shen et al., 2006; Huang

et al., 2012; F. M. Khan and Pottenger, 2002; Adams

and Martell, 2008; Yeh, 2006): (i) conversational

messages are generally much shorter than usual doc-

uments making the task of topic detection much more

difficult (ii) thread detection strongly depends on so-

cial interactions between the users involved in a mes-

sage exchange, (iii) as well the time of the discussion.

Domeniconi, G., Semertzidis, K., Lopez, V., Daly, E., Kotoulas, S. and Moro, G.

A Novel Method for Unsupervised and Supervised Conversational Message Thread Detection.

DOI: 10.5220/0006001100430054

In Proceedings of the 5th International Conference on Data Management Technologies and Applications (DATA 2016), pages 43-54

ISBN: 978-989-758-193-9

Copyright

c

2016 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

43

Other studies deal with thread tree reconstruction

where given a known thread they seek to construct

the conversation tree. In particular, in (X. Wang and

Chen, 2008) the authors use headers extracted from

emails to create the parent/child relationships of a

thread, and in (Aumayr et al., 2011) the authors pro-

pose an algorithm which uses reply behaviours in fo-

rum threads in order to output the threads’ structure.

This paper addresses the efficient detection of con-

versation threads from pools of online messages - for

example from social groups, pages chats, email mes-

sages etc. - namely the detection of sets of messages

related with respect to contents, time and involved

users. The thread detection problem is different from

thread tree reconstruction, since the latter requires

that the conversation threads are known. In contrast

the former, seeks to find the messages which form a

conversation thread. In other words, thread detection

is the essential initial step of thread tree reconstruc-

tion.

We consider a three dimensional representation

(Zhao and Mitra, 2007) which consists of text con-

tent, temporal information, and social relations. In

Figure 1, we depict the three dimensional representa-

tion which illustrates 3 threads with different colours

and shapes, that yields to total of 14 messages. The

green circles and red squares threads have the same

social and content dimensions but not time. While

the blue diamonds thread consists of different topics

and users, but it occurs in the same time frame of the

green circles one. The use of the three dimensional

representation leads to emphasis of thread separation.

We propose several measures to exploit the mes-

sages features, based on this three dimensional rep-

resentation. Then, the generated features are embed-

ded into a metric distance in density and hierarchi-

cal clustering algorithms (Ester et al., 1996; Bouguet-

taya et al., 2015) which cluster messages in threads.

In order to enhance our approach to efficiently detect

threads in any type of dataset, we build a classification

model from a set of messages previously organised in

threads. The classifier exploits the same features used

in the clustering phase and it returns the probability

that a pair of messages belong to the same thread. In

other words, a binary supervised model is trained with

instances, each referring to a pair of messages. Each

instance uses the same features described previously,

and a label describing whether the two messages be-

long to the same thread or not. This model provides

a probability of being in the same thread for a pair

of messages, we propose to use this probability as

a similarity distance in clustering methods to detect

the threads. We observe that the classifiers output can

help the clustering process to achieve higher accuracy

Time

Social

Content

Figure 1: Three dimensional representation of threads mes-

sages.

by identifying the threads correctly. We have exten-

sively evaluated our approach with real world datasets

including emails and social group chats. Our experi-

mental results show that our method can identify the

large majority of the threads in several type of dataset,

such as web conversation including emails, chats and

posts.

In summary, this paper presents the following con-

tributions:

• a three dimensional message representation based

on textual semantic content, social interactions

and time to generate features for each message;

• clustering algorithms to detect threads, on top of

the features generated from the three dimensional

representation;

• combination of the generated features to build a

classifier that identifies the membership probabil-

ity of pair of messages to the same thread and this

probability is used as a distance function for the

clustering methods to detect threads;

• the combined classification technique with clus-

tering algorithms provides a higher accuracy than

using clustering alone..

The rest of this paper is structured as follows. In

Section 2, we present related work, while in Section

3, we formally define the thread detection problem.

In Section 4, we introduce our model and our algo-

rithms for thread detection. Section 5 presents the ex-

perimental results on real datasets and Section 6 con-

cludes the paper.

2 RELATED WORK

As discussed in the previous section, thread detec-

tion has received a lot of attention, including content

and metadata based approaches. Metadata based ap-

proaches refers to header fields that are contained in

DATA 2016 - 5th International Conference on Data Management Technologies and Applications

44

emails or forum posts (e.g. send-to, reply-to). Con-

tent based approaches focus on text analysis on sub-

ject and content text. In this paper, we differentiate

from the existing works by generalizing the problem

of detecting threads in different types of datasets, not

only in email sets like the most of related work (Wu

and Oard, 2005; Yeh, 2006; X. Wang and Chen, 2008;

Erera and Carmel, 2008; Joshi et al., 2011). The au-

thors of (Wu and Oard, 2005) focus on detecting con-

versation threads from emails using only the subject.

They cluster all messages with the same subject and at

least one participant in common. Here, we also han-

dle cases where messages belong to the same thread

but have different subject. Similarly, in (X. Wang and

Chen, 2008) the authors detect threads in emails us-

ing the extracted header information. They first try to

detect the parent/child relationships using Zawinski

algorithm

1

and then they use a topic-based heuris-

tic to merge or decompose threads to conversations.

Another approach for detecting threads in emails is

proposed in (Erera and Carmel, 2008), where clus-

tering into threads exploits a similarity function that

considers all relevant email attributes, such as sub-

ject, participants, text content and date of creation.

Quotations are taken into account in (Yeh, 2006)

where combined with several heuristics such as sub-

ject, sender/recipient relationships among email and

time, and as a result can construct email threads with

high precision. Emails relationships are also consid-

ered in (Joshi et al., 2011) where the authors use a

segmentation and detection of duplicate emails and

they group them together based on reply and forward-

ing relationships.

The work most closely related to ours is that of

(Dehghani et al., 2013), that studies the conversation

tree reconstruction, by first detecting the threads from

a set of emails. Specifically, they map the thread de-

tection problem to a graph clustering task. They cre-

ate a semantic network of a set of emails where the

nodes denote emails and the weighted edges repre-

sent co-thread relationships between emails. Then,

they use a clustering method to extract the conversa-

tion threads. However, their approach is focus only

on email datasets and their results are strongly bound

with the used features, since when they do not take

into account all features they have a high reduction

in their accuracy. In contrast here, we consider gen-

eral datasets and by using our classification model we

are able to detect threads even when there are missing

features. Although, it is not clear which graph clus-

tering algorithm is used and how it detects the clus-

ters. We conduct an extensive comparison between

our approach and the study of (Dehghani et al., 2013)

1

https://www.jwz.org/doc/threading.html

in Section 5.

Another line of research addresses mining threads

from online chats (F. M. Khan and Pottenger, 2002;

Adams and Martell, 2008; Huang et al., 2012; Shen

et al., 2006). Specifically, the study of (F. M. Khan

and Pottenger, 2002) focuses on identifying threads

of conversation by using pattern recognition tech-

niques in multi-topic and multi-person chat-rooms. In

(Adams and Martell, 2008) they focus on conversa-

tion topic thread detection and extraction in a chat

session. They use an augmented t f .id f to compute

weights between messages’ texts as a distance met-

ric exploiting the use of Princeton WordNet

2

ontol-

ogy, since related messages may not include iden-

tical terms, they may in fact include terms that are

in the same semantic category. In combination with

the computed distance between messages they use the

creation time in order to group messages with high

similarity in a short time interval. In (Shen et al.,

2006), they propose three variations of a single-pass

clustering algorithm for exploiting the temporal infor-

mation in the streams. They also use an algorithm

based on linguistic features in order to exploit the dis-

course structure information. A single-pass clustering

algorithm is also used in (Huang et al., 2012) which

employs the contextual correlation between short text

streams. Similar to (Adams and Martell, 2008), they

use the concept of correlative degree, which describes

the probability of the contextual correlation between

two messages, and the concept of neighboring co-

occurrence, which shows the number features co-

existing in both messages.

Finally, there also exists a line of research on re-

constructing the discussion tree structure of a thread

conversation. In (Wang et al., 2011), a probabilis-

tic model in conditional random fields framework is

used to predict the replying structure for online forum

discussions. The study in (Aumayr et al., 2011) em-

ploys conversation threads to improve forum retrieval.

Specifically, they use a classification model based on

decision trees and given a variety of features, includ-

ing creation time, name of authors, quoted text con-

tent and thread length, which allows them to recover

the reply structures in forum threads in an accurate

and efficient way. The aforementioned works achieve

really high performance (more than 90% of accuracy)

in the conversation tree reconstruction, while the state

of the art in threads detection obtains lower perfor-

mance, about 80% for emails data and 60% for chats

and short messages data. To this end, in this study we

focus on improving thread detection performance.

2

http://wordnet.princeton.edu/

A Novel Method for Unsupervised and Supervised Conversational Message Thread Detection

45

3 METHOD DESCRIPTION

In this section, we outline a generic algorithm for de-

tecting messages which belong to the same thread

from a set of messages M , such as emails, social

group posts and chats. As an intermediate step, the al-

gorithm addresses the problem of computing the sim-

ilarity measure between pairs of messages. We pro-

pose a suite of features and two methods to combine

them (one unsupervised and one supervised) to com-

pute the similarity measure between two messages.

We also present clustering algorithms which detect

threads based on this similarity measure in Section

3.3.

3.1 Data Model

We consider a set of messages M = {m

1

,m

2

,...} that

refers to online texts such as emails, social group

chats or forums. Each message is characterized by

the following properties: (1) textual data (content

and subject in case of emails), (2) creation time, and

(3) the users involved (authors or sender/recipients

in case of emails). We represent each message as

a three-dimensional model (Zhao and Mitra, 2007;

Zhao et al., 2007) to capture all these components.

Thus, a message m ∈ M can be denoted as a triplet

m = <c

m

,U

m

,t

m

>, where c

m

refers to text content,

U

m

= {u

1

,u

2

,...} refers to the set of users that are in-

volved in m, and t

m

refers to the creation time. Some

dimensions can be missing, for instance chat, groups

and forum messages provide only the author informa-

tion, without any recipients.

A conversation thread is defined as a set of mes-

sages exchanged on the same topic among the same

group of users during a time interval, more formally,

the set of messages M is partitioned in a set of conver-

sations C . Each message m ∈ M belongs to one and

only one conversation c ∈ C . The goal of the thread

reconstruction task is to automatically detect the con-

versations within a pool of messages. To this aim,

we propose a clustering-based method that relies on a

similarity measure between a pair of messages, called

SIM(m

i

,m

j

). In the following sections, we define dif-

ferent proposed approaches to calculate the similarity

measure. In the rest of the paper, we will use the nota-

tion Ω = {ω

1

,ω

2

,...} to refer the predicted extracted

conversations.

3.2 Messages Features

Social text messages, like emails or posts, can be sum-

marized by three main components: text content, tem-

poral information, and social relations (Zhao and Mi-

tra, 2007). Each of the three main components can

be analyzed under different points of view to com-

pute the distance between a pair of messages, which

involves the creation of several features. The func-

tion SIM(m

i

,m

j

) relies on these features and returns

a similarity value for each pair of messages (m

i

, m

j

),

which is used by the clustering algorithm that returns

the finding threads. We now present the extracted fea-

tures used to measure the similarity between two mes-

sages.

The content component relies on the semantics

of the messages. There are two main sources: the

messages text and the subject, if present (e.g., so-

cial network posts do not have this information). The

first considered feature is the similarity of the mes-

sages text content. We make use of the common

Bag of Words (BoW ) representation, that describes

a textual message m by means of a vector W (m) =

{w

1

,w

2

,...}, where each entry indicates the presence

or absence of a word w

i

. Single words occurring in

the message text are extracted, discarding punctua-

tion. A stopwords list is used to filter-out all the words

that are not informative enough. The standard Porter

stemming algorithm (Porter, 1980) is used to group

words with a common stems. To estimate the im-

portance to each word, there exist several different

weighting schemes (Domeniconi et al., 2016), here

we make use of the commonly used tf.idf scheme

(Salton and Buckley, 1988).

Using BoW representation, the similarity between

two vectors m

i

,m

j

can be measured by means of the

commonly used cosine similarity (Singhal, 2001):

f

C

T

(m

i

,m

j

) =

W (m

i

) · W (m

j

)

kW (m

i

)kkW (m

j

)k

Since by definition the BoW vectors have only posi-

tive values, the f

C

T

(m

i

,m

j

) takes values between zero

and one, being zero if the two vectors do not share

any word, and one if the two vectors are identical.

In scenarios where the subject is available, the same

process is carried out, computing the similarity cosine

f

C

S

(m

i

,m

j

) of words contained in the messages sub-

ject.

The cosine similarity allows a lexical comparison

between two messages but does not consider the se-

mantic similarity between two messages. There are

two main shortcomings of this measure: the lack of

focus on keywords, or semantic concepts expressed

by messages, and the lack of recognition of lexico-

graphically different words but with similar meaning

(i.e. synonyms), although this is partially computed

through the stemming. In order to also handle this

aspect, we extend the text similarity by measuring

the correlation between entities, keywords and con-

DATA 2016 - 5th International Conference on Data Management Technologies and Applications

46

cepts extracted using AlchemyAPI

3

. AlchemyAPI is

a web service that analyzes the unstructured content,

exposing the semantic richness in the data. Among

the various information retrieved by AlchemyAPI, we

take into consideration the extracted topic keywords,

involved entities (e.g. people, companies, organiza-

tions, cities and other types of entities) and concepts

which are the abstractions of the text (for example,

”My favorite brands are BMW and Porsche = ”Au-

tomotive industry”). These three information are ex-

tracted by Alchemy API with a confidence value rang-

ing from 0 to 1. We create three vectors, one for

each component of the Alchemy API results for key-

words, entities and concepts for each message and us-

ing the related confidence extracted by AlchemyAPI

as weight. Again we compute the cosine similarity of

these vectors, creating three novel features:

• f

C

K

(m

i

,m

j

): computes the cosine similarity of the

keywords of m

i

and m

j

. This enables us to quan-

tify the similarity of the message content based

purely on keywords rather than the message as a

whole.

• f

C

E

(m

i

,m

j

): computes the cosine similarity of the

entities that appear in m

i

and m

j

focusing on the

entities shared by the two messages.

• f

C

C

(m

i

,m

j

): computes the cosine similarity of the

concepts in m

i

and m

j

, allowing the comparison of

the two messages on a higher level of abstraction:

from words to the expressed concepts.

The second component is related to the social sim-

ilarity. For each message m, we create a vector of in-

volved users U(m) = {u

1

,u

2

,...} defined as the union

of the sender and the recipients of m (note that the re-

cipients information is generally not provided in so-

cial network posts). We exploit the social relatedness

of two messages through two different features:

• The similarity of the users involved in the two

messages f

S

U

(m

i

,m

j

), defined as the Jaccard sim-

ilarity between U(m

i

) and U(m

j

):

f

S

U

(m

i

,m

j

) =

|U(m

i

) ∩ U(m

j

)|

|U(m

i

) ∪ U(m

j

)|

• The neighborhood Jaccard similarity f

S

N

(m

i

,m

j

)

of the involved users. The neighborhood set N (u)

of an user u is defined as the set of users that have

received at least one message from u. We also

include each user u in its neighborhood N (u) set.

The neighborhood similarity of two messages m

i

and m

j

is defined as follows:

f

S

N

(m

i

,m

j

) =

1

|U(m

i

)||U(m

j

)|

∑

u

i

∈U(m

i

)

u

j

∈U(m

j

)

|N (u

i

) ∩ N (u

j

)|

|N (u

i

) ∪ N (u

j

)|

3

http://www.alchemyapi.com/

m

1

m

2

Subject: request for

presentation

Content: Hi all, I want to

remember you to change the

presentation of Friday including

some slides on data related to

the contract with Acme.

Users: u

1

→ [u

2

, u

3

]

Date: September 15, 2015

W

m

1

: {want rememb chang

present Fridai includ slide data

relat contract Acme}

K

m

1

: {Hi, Acme, slides, Friday,

presentation, data, contract}

C

m

1

: {}

E

m

1

: {Acme}

Subject: presentation changes

Content: Yes sir, I added a

slide on the Acme contract at

the end of the presentation.

Users: u

2

→ [u

1

]

Date: September 16, 2015

W

m

2

: {sir ad slide Acme contract

present}

K

m

2

: {Acme, contract, sir, slide,

end, presentation}

C

m

2

: {}

E

m

2

: {Acme}

Content component f

C

T

=0.492 f

C

S

=0.5 f

C

K

=0.463 f

C

C

=0 f

C

K

=1

Social component f

S

U

=0.667 f

S

N

=0.667

Time Component f

T

=0.585

Figure 2: Example of features calculation for a pair of

messages. Message components: Subject, Content, Users

(sender → recipients) and creation date. W (m

i

) refers to

the bag of words of a message obtained after the tokeniza-

tion, stopwords removal and stemming. The vectors of key-

words (K (m

i

)), concepts (C (m

i

)) and entities (E (m

i

)) ex-

tracted from AlchemyAPI are shown. In the bottom the val-

ues for each proposed feature are also shown. For simplic-

ity, we assume binary weight for components.

Finally, the last component relies on the time of

two messages. We define the time similarity as the

logarithm of the inverse of the distance between the

two messages, expressed in days, as follows:

f

T

(m

i

,m

j

) = log

2

(1 +

1

1 + |t

m

i

−t

m

j

|

)

We use the inverse normalization of the distance in

order to give a value between zero and one, where

zero correspond to a high temporal distance and one

refers to messages with low distance.

As a practical example, Figure 2 shows two mes-

sages, with the related properties, and the values of

the features generated from them.

3.3 Clustering

In this section, we present the clustering

methods used to detect the threads. Based

on the set of aforementioned features F =

{ f

C

T

, f

C

S

, f

C

K

, f

C

E

, f

C

C

, f

S

U

, f

S

N

, f

T

}, we define

a distance measure that quantifies the similarity

between two messages:

SIM(m

i

,m

j

) = Π

f ∈F

(1 + f (m

i

,m

j

)) (1)

We compute a N × N matrix with the similari-

ties between each pair of messages (m

i

,m

j

) and we

use density based and hierarchical clustering algo-

rithms, being the two most common distance-based

approaches.

A Novel Method for Unsupervised and Supervised Conversational Message Thread Detection

47

3.3.1 Density-based Clustering

We use the DBSCAN (Ester et al., 1996) density-

based clustering algorithm in order to cluster mes-

sages to threads because given a set of points in

some space, DBSCAN groups points that are closely

packed together (with many nearby neighbors). DB-

SCAN requires two run time parameters, the mini-

mum number min of points per cluster, and a thresh-

old θ that defines the neighborhood distance between

points in a cluster. The algorithm starts by selecting

an arbitrary point, which has not been visited, and by

retrieving its θ-neighborhood it creates a cluster if the

number of points in that neighborhood is equals to or

greater than min. In situations where the point resides

in a dense part of an existing cluster, its θ-neighbor

points are retrieved and are added to the cluster. This

process stops when the densely-connected cluster is

completely found. Then, the algorithm processes new

unvisited points in order to discover any further clus-

ters.

In our study, we use messages as points and we

use weighted edges that connect each message to the

other messages. An edge (m

i

,m

j

) between two mes-

sages m

i

and m

j

is weighted with the similarity mea-

sure SIM(m

i

,m

j

). When DBSCAN tries to retrieve

the θ-neighborhood of a message m, it gets all mes-

sages that are adjacent to m with a weight in their edge

greater or equal to θ. Greater weight on an edge in-

dicates that the connected messages are more similar,

and thus they are closer to each other.

3.3.2 Hierarchical Clustering

This approach uses the Agglomerative hierarchical

clustering method (Bouguettaya et al., 2015) where

each observation starts in its own cluster, and pairs

of clusters are merged as one moves up the hierar-

chy. Running the agglomerative method requires the

choice of an appropriate linkage criteria, which is

used to determine the distance between sets of obser-

vations as a function of pairwise distances between

clusters that should be merged or not. In our study

we examined, in preliminary experiments, three of the

most commonly used linkage criteria, namely the sin-

gle, complete and average linkage (Manning et al.,

2008). We observed that average linkage clustering

leads to the best results. The average linkage cluster-

ing of two clusters of messages Ω

y

and Ω

z

is defined

as follows:

avgLinkCl(Ω

y

,Ω

z

) =

1

|Ω

y

||Ω

z

|

∑

ω

i

∈Ω

y

ω

j

∈Ω

z

SIM(ω

i

,ω

j

)

The agglomerative clustering method is an itera-

tive process that merges the two clusters with high-

est average linkage score. After each merge of the

clusters, the algorithm starts by recomputing the new

average linkage scores between all clusters. This pro-

cess runs until a cluster pair exists with a similarity

greater than a given threshold.

3.4 Classification

The clustering algorithms described above rely on the

similarity measure SIM, that combines with a sim-

ple multiplication several features, to obtain a single

final score. This similarity measure in eq. 1 gives

the same weight, namely importance, to each feature.

This avoids the requirement to tune the parameters re-

lated to each feature, but could provide an excessively

rough evaluation and thus bad performance. A differ-

ent possible approach, is to combine the sub compo-

nents of similarity measure SIM as features into a bi-

nary supervised model, in which each instance refers

to a pair of messages, the features are the same de-

scribed in the Section 3.2 and the label is one if the

messages belonging to the same thread and zero oth-

erwise. At runtime, this classifier is used to predict

the probability that two messages belong to the same

thread, using this probability as the distance between

the pairs of messages into the same clustering algo-

rithms. The benefit of such approach is that it au-

tomatically finds the appropriate features to use for

each dataset and it leads to a more complete view of

the importance of each feature. Although it is shown

in (Aumayr et al., 2011) that decision trees are faster

and more accurate in classifying text data, we experi-

mented with a variety of classifiers.

The classification requires a labeled dataset to

train a supervised model. The proposed classifier re-

lies on data in which each instance represents a pair of

messages. Given a set of training messages M

Tr

with

known conversation subdivision, we create the train-

ing set coupling each training message m ∈ M

Tr

with

n

s

messages of M

Tr

that belong to the same thread

of m and n

d

messages belonging to different threads.

We label each training instance with one if the cor-

responding pair of messages belong to same thread

and zero otherwise. Each of these coupled messages

are picked randomly. Theoretically we could create

(|M

Tr

| · |M

Tr

− 1|)/2 instances, coupling each mes-

sage with the whole training set. In preliminary tests

using Random Forest as the classification model, we

notice that coupling each training message with a few

dozen same and different messages can attain higher

performances. All the experiments are conducted us-

ing n

s

= n

d

= 20, i.e. each message is coupled with

at maximum 20 messages of the same conversation

DATA 2016 - 5th International Conference on Data Management Technologies and Applications

48

Table 1: Characteristics of datasets.

Dataset Messages type #messages #threads #users Peculiarities

BC3 Emails 261 40 159 Threads contain emails with different subject

Apache Emails from mailing list 2945 334 113 Threads always contain emails with same subject

Redhat Emails from mailing list 12981 802 931 Threads always contain emails with same subject

WhoWorld Posts from Facebook page 2464 132 1853 Subject and recipients not available

HealthyChoice Posts from Facebook page 1115 132 601 Subject and recipients not available

Healthcare Advice Posts from Facebook group 3436 468 801 Subject and recipients not available

Ireland S. Android Posts from Facebook group 4831 408 354 Subject and recipients not available

and 20 of different ones. In the rest of the paper we

refer to the proposed clustering algorithm based on a

supervised model, as SVC.

As it will be shown in the Section 4.3, the Ag-

glomerative hierarchical clustering achieves better re-

sults with respect to the DBSCAN, thus, we use this

clustering algorithm in the SVC approach.

4 EVALUATION

In this section, we compare the accuracy of the clus-

tering methods described in Section 3 in terms of de-

tecting the actual threads.

4.1 Datasets

For evaluating our approach we consider the follow-

ing seven real datasets:

• The BC3 dataset (Ulrich et al., 2008), which is

a special preparation of a portion W3C corpus

(Soboroff et al., 2006) that consists of 40 conver-

sation threads. Each thread has been annotated by

three different annotators, such as extractive sum-

maries, abstractive summaries with linked sen-

tences, and sentences labeled with speech acts,

meta sentences and subjectivity.

• The Apache dataset which is a subset of Apache

Tomcat public mailing list

4

and it contains the dis-

cussions from August 2011 to March 2012.

• The Redhat dataset which is a subset of Fedora

Redhat Project public mailing list

5

and it con-

tains the discussions that took place in the first six

months of 2009.

• Two Facebook pages datasets, namely Healthy

Choice

6

and World Health Organizations

7

,

crawled using the Facebook API

8

. They consist

of real posts and relative replies between June

4

http://tomcat.apache.org/mail/dev

5

http://www.redhat.com/archives/fedora-devel-list

6

https://www.facebook.com/healthychoice

7

https://www.facebook.com/WHO

8

https://developers.facebook.com/docs/graph-api

and August 2015. We considered only the text

content of the posts (discarding links, pictures,

videos, etc.) and only those written in English

(AlchemyAPI is used to detect the language).

• Two Facebook public groups datasets, namely

Healthcare Advice

9

and Ireland Support An-

droid

10

, also crawled using the Facebook API.

They consist of conversations between June and

August 2015. Also for this dataset we considered

only the text content of the posts written in en-

glish.

We use the first three datasets that consist of

emails in order to compare our approach with exist-

ing related work (Dehghani et al., 2013; Erera and

Carmel, 2008; Wu and Oard, 2005) on conversa-

tion thread reconstruction in email messages. To our

knowledge, there are no publicly available datasets of

social network posts with a gold standard of conversa-

tion subdivision. We use the four Facebook datasets

to evaluate our method in a real social network do-

main.

The considered datasets have different peculiar-

ities, in order to evaluate our proposed method un-

der several perspectives. BC3 is a quite small dataset

(only 40 threads) of emails, but with the peculiarity of

being manually curated. In this dataset is possible to

have emails with different subjects in the same con-

versation. However, in Apache and Redhat the mes-

sages in the same thread, have also the same subject.

With regards to Facebook datasets, we decided

to use both pages and groups. Facebook pages are

completely open for all users to read and comment in

a conversation. In contrast, only the members of a

group are able to view and comment a group post and

this leads to a peculiarity of different social interac-

tion nets. Furthermore, each message - post - in these

datasets has available only the text content, the sender

and the time, without information related to subject

and recipients. Thus, we do not take into account the

similarities that use the recipients or subject. Table

1 provides a summary of the characteristics of each

dataset.

9

https://www.facebook.com/groups/533592236741787

10

https://www.facebook.com/groups/848992498510493

A Novel Method for Unsupervised and Supervised Conversational Message Thread Detection

49

In the experiments requiring a labeled set to train

a supervised model, the datasets are evaluated with 5-

fold cross-validation, subdividing each of those in 5

thread folds.

4.2 Evaluation Metrics

The precision, recall and F

1

-measure (Manning et al.,

2008) are used to evaluate the effectiveness of the

conversation threads detection. Here, we explain

these metrics in the context of the conversation detec-

tion problem. We evaluate each pair of messages in

the test set. A true positive (TP) decision correctly as-

signs two similar messages to the same conversation.

Similarly, a true negative (TN) assigns two dissimilar

messages to different threads. A false positive (FP)

case would be when the two messages do not belong

to the same thread but are labelled as co-threads in the

extracted conversations. Finally, false negative (FN)

case is when the two messages belong to the same

thread but are not co-threads in the extracted conver-

sations. Precision (p) and recall (r) are defined as fol-

lows:

p =

T P

T P + FP

r =

T P

T P + FN

The F

1

-measure is defined by combining the pre-

cision and recall together, as follows:

F

1

=

2 · p · r

p + r

We also use the purity metric to evaluate the clus-

tering. The dominant conversation, i.e. the conver-

sation with the highest number of messages inside a

cluster, is selected from each extracted thread clus-

ter. Then, purity is measured by counting the number

of correctly assigned messages considering the domi-

nant conversation as cluster label and finally dividing

by the number of total messages. We formally define

purity as

purity(Ω,C ) =

1

|M |

∑

k

max

j

|ω

k

∈ c

j

|

where Ω = {ω

1

,ω

2

,...,ω

k

} is the set of extracted con-

versations and C = {c

1

,c

2

,...,c

j

} is the set of real

conversations.

To better understand the purity metric, we refer to

the example of thread detection depicted in Figure 3.

For each cluster, the dominant conversation and the

number of related messages are: ω

1

: c

1

,4, ω

2

: c

2

,4,

ω

3

: c

3

,3. The total number of messages is |M | = 17.

Thus, the purity value is calculated as purity = (4 +

4 + 3)/17 = 0.647.

c

1

c

1

c

1

c

1

c

2

ω

1

c

2

c

3

c

3

c

2

c

2

c

1

c

3

c

1

c

1

c

3

c

3

c

2

ω

2

ω

3

Figure 3: Conversation extraction example. Each ω

k

refers

to an extracted thread and each c

j

corresponds to the real

conversation of the message.

A final measure of the effectiveness of the cluster-

ing method, is the simple comparison between the the

number of detected threads (|Ω|) against the number

of real conversations (|C |).

4.3 Results

In Table 2 and 3, we report the results obtained in the

seven datasets. All the results in these tables are ob-

tained using the Weka (Hall et al., 2009) implemen-

tation of Random Forest algorithm, using a 2 ×2 cost

matrix with a weight of 100 for the instances labeled

with one. The reported results are related to the best

tuning of the threshold parameter of the clustering

approaches, both for DBSCAN and Agglomerative.

Further analysis on the parameters of our method will

be discussed in the next section.

Table 2 shows the results on the email datasets, on

which we can compare our results (SVC) with other

existing approaches, such as the studies of Wu and

Oard (Wu and Oard, 2005), Erera and Carmel (Erera

and Carmel, 2008) and the lastest one of Dehghani et

al (Dehghani et al., 2013). The first two approaches

(Wu and Oard, 2005; Erera and Carmel, 2008) are

unsupervised, as the two clustering baselines, while

the approach in (Dehghani et al., 2013) is supervised,

like our proposed SVC; both this supervised methods

are evaluated with the same 5-fold cross-validation,

described above. All of the existing approaches use

the information related to the subject of the emails,

we show in the top part of the table a comparison

using also the subject as feature in our proposed ap-

proach. We want point out that in Apache and Redhat

dataset, the use of the subject could make the clus-

terization effortless, since all messages of a thread

have same subject. It is notable how our supervised

approach obtains really high results, reaching almost

perfect predictions and always outperforming the ex-

isting approaches, particularly in Redhat and Apache

dataset. In our view, the middle of Table 2 is of partic-

ular interest, where we do not considered the subject

information. The results, especially in Redhat and

Apache, have a little drop, remaining anyhow at high

levels, higher than all existing approaches that take

into consideration the subject. Including the subject

DATA 2016 - 5th International Conference on Data Management Technologies and Applications

50

Table 2: Conversation detection results on email datasets. (Wu and Oard, 2005; Erera and Carmel, 2008), DBSCAN and

Agglom. are unsupervised methods, while (Dehghani et al., 2013) and SVC are supervised. The top part of the table shows

the results obtained by methods using subject information, the middle part shows those achieved without such feature, finally

the bottom part shows the results obtained with SVC method considering only a single dimension. With + and – we indicate

respectively the use or not of the specified feature (s: subject feature, a: the three Alchemy features). For clustering and SVC

approach we report results with best threshold tuning.

BC3 Apache Redhat

Methods Precision Recall F

1

Precision Recall F

1

Precision Recall F

1

Wu and Oard (Wu and Oard, 2005) 0.601 0.625 0.613 0.406 0.459 0.430 0.498 0.526 0.512

Erera and Carmel (Erera and Carmel, 2008) 0.891 0.903 0.897 0.771 0.705 0.736 0.808 0.832 0.82

Dehghani et al. (Dehghani et al., 2013) 0.992 0.972 0.982 0.854 0.824 0.839 0.880 0.890 0.885

DBSCAN (+s) 0.871 0.737 0.798 0.359 0.555 0.436 0.666 0.302 0.416

Agglom. (+s) 1.000 0.954 0.976 0.358 0.918 0.515 0.792 0.873 0.83

SVC (+s) 1.000 0.986 0.993 0.998 1.000 0.999 0.995 0.984 0.989

DBSCAN (–s) 0.696 0.615 0.653 0.569 0.312 0.403 0.072 0.098 0.083

Agglom. (–s) 1.000 0.954 0.976 0.548 0.355 0.431 0.374 0.427 0.399

SVC (–s) 1.000 0.952 0.975 0.916 0.972 0.943 0.966 0.914 0.939

SVC (–s –a) 0.967 0.979 0.973 0.892 0.994 0.940 0.815 0.699 0.753

SVC (content) 1.000 0.919 0.958 0.954 0.974 0.964 0.988 0.984 0.986

SVC (content –s) 0.964 0.902 0.932 0.604 0.706 0.651 0.899 0.872 0.885

SVC (content –s –a) 1.000 0.828 0.905 0.539 0.565 0.552 0.68 0.558 0.613

SVC (social) 0.939 0.717 0.813 0.345 0.361 0.353 0.360 0.045 0.08

SVC (time) 0.971 0.897 0.933 0.656 0.938 0.772 0.376 0.795 0.511

Table 3: Conversation detection results on Facebook post datasets (subject and recipient information are not available). The

top part of the table shows the results obtained considering all the dimensions, the bottom part shows the results obtained

with SVC method considering only a single dimension. For clustering and our approach we report results with best threshold

tuning.

Healty Choice World Health Org. Healthcare Advice Ireland S. Android

Methods Precision Recall F

1

Precision Recall F

1

Precision Recall F

1

Precision Recall F

1

DBSCAN 0.027 0.058 0.037 0.159 0.043 0.067 0.206 0.051 0.082 0.201 0.002 0.004

Agglom. 0.228 0.351 0.276 0.154 0.399 0.223 0.429 0.498 0.461 0.143 0.141 0.142

SVC 0.670 0.712 0.690 0.552 0.714 0.623 0.809 0.721 0.763 0.685 0.655 0.67

SVC (–a) 0.656 0.713 0.683 0.543 0.742 0.627 0.802 0.733 0.766 0.708 0.714 0.711

SVC (content) 0.308 0.032 0.058 0.406 0.120 0.185 0.443 0.148 0.222 0.127 0.042 0.063

SVC (content –a) 0.286 0.025 0.046 0.376 0.11 0.171 0.414 0.127 0.195 0.105 0.033 0.050

SVC (social) 0 0 0 0 0 0 0.548 0.188 0.280 0.155 0.234 0.186

SVC (time) 0.689 0.670 0.679 0.531 0.750 0.622 0.638 0.769 0.697 0.667 0.703 0.685

or not, the use of a supervised model to evaluate the

similarity between two messages, brings a great im-

provement to the clustering performances, compared

to the use of a simple combination of each feature as

described in Section 3.3. In the middle part of Table

2 is also shown the effectiveness of our SVC predic-

tor without the three features related to AlchemyAPI

information; these features lead to an improvement of

results especially in Redhat, which is the largest and

more challenging dataset.

The aforementioned considerations, are valid also

for the experiments on social network posts. To the

best of our knowledge, there is not any related work

on such type of datasets. In Table 3, we report the re-

sults of our approach on the four Facebook datasets.

These data do not provide the subject and recipients

information of messages, thus the reported results are

obtained without the features related to the subject

and neighborhood similarities, namely f

C

S

(m

i

,m

j

)

and f

S

N

(m

i

,m

j

). We notice that the pure unsupervised

clustering methods, particularly DBSCAN, achieve

𝐹

𝑇

𝐹

𝐶

𝑆

𝐹

𝑆

𝑁

different

𝐹

𝑇

different same

𝐹

𝐶

𝑆

𝐹

𝐶

𝑇

samedifferent

same

𝐹

𝑇

𝐹

𝑆

𝑁

𝐹

𝑆

𝑁

same different same

𝐹

𝐶

𝑇

differentsame

<= 0.10

> 0.10

<= 0.73

> 0.73

<= 0.02

> 0.02

<= 0.04

> 0.04

<= 0.49

> 0.49

<= 0.04

> 0.04

> 0.23

<= 0.23

<= 0.73

> 0.73

<= 0.06

> 0.06

<= 0.004

> 0.004

Figure 4: Decision trees created for BC3 dataset.

low precision and recall. This is due to the real dif-

ficulties of these post’s data: single posts are gener-

ally short with little semantic information. For ex-

ample suppose we have two simultaneous conversa-

tions t1: ”How is the battery of your new phone?”

- ”good!” and t2: ”how was the movie yesterday?”

- ”awesome!”. By using only the semantic informa-

tion of the content, it is not possible to associate the

A Novel Method for Unsupervised and Supervised Conversational Message Thread Detection

51

replies to the right question, thus the time and the so-

cial components become crucial. Although there is a

large amount of literature to handle grammatical er-

rors or misspelling, in our study we have not taken

into account these issues. Despite these difficulties,

our method guided by a supervised model achieves

quite good results in such data, with an improve-

ment almost always greater than 100% with respect

the pure unsupervised clustering. Results in Table 3

show the difficulties also for AlchemyAPI to extract

valuable information from short text posts. In fact, re-

sults using the AlchemyAPI related features does not

lead to better results.

The results achieved by the SVC method for each

dimension are reported at the bottom of the Tables 2

and 3, in particular those regarding the content dimen-

sion have been produced with all features, excepts the

subject and the Alchemy related features. In Table 2

is notable that considering the content dimension to-

gether with the subject feature leads, as expected, to

the highest accuracy. By excluding the subject fea-

ture, SVC produces quite good results with each di-

mension, however they are lower than those obtained

by the complete method; this shows that the three

dimensional representation leads to better clusterisa-

tion.

Table 3 shows the differentiation in the results re-

lated to the Facebook datasets. In particular, the so-

cial dimension performs poorly if used alone, in fact

the author of a message is known, whereas not the re-

ceiver user; also the text content dimension behaves

badly if considered alone. In these datasets, the time

appears to be the most important feature to discrim-

inate the conversations, however the results achieved

only with this dimension are worse than those of the

SVC complete method.

From these results, achieved using each dimension

separately from the others, we deduce that SVC is ro-

bust to different types of data. Moreover the use of a

supervised algorithm allows both to detect the impor-

tance of the three dimensions and to achieve a method

that can deal with different datasets without requiring

ad-hoc tuning or interventions.

The learning algorithm we used, Random Forest,

builds models in form of sets of decision trees, which

predicts the relationship between an input features

vector by walking across branches to a leaf node. An

example of such a decision tree is reported in Figure

4: to predict the relation between a pair of messages,

the classifier start from the root and follow branches

of the tree according to the computed features. The

numbers in the edges are the threshold of feature val-

ues which classifier checks in order to choose which

branch of the tree should pick. We shown that the time

similarity is the most significant feature. In particular,

when we include the subject as a feature, we notice

that the second significant features are the text cosine

similarity and the Jaccard neighborhood. However,

when we exclude the subject, involved users Jaccard

similarity takes the place of text cosine similarity.

Table 4: Results varying the supervised model used to com-

pute the distance between two email.

Model Purity Precision Recall F

1

|Ω|

BC3 (|C | = 40)

LibSVM 0.980 0.962 0.984 0.973 40

Logistic 1.000 1.000 0.965 0.982 45

J48 0.961 0.915 0.993 0.952 39

RF 1.000 1.000 0.961 0.980 45

RF:100 1.000 1.000 0.952 0.975 46

Apache (|C| = 334)

LibSVM 0.785 0.584 0.583 0.584 500

Logistic 0.883 0.904 0.883 0.893 275

J48 0.851 0.865 0.931 0.897 281

RF 0.862 0.885 0.979 0.930 255

RF:100 0.920 0.916 0.972 0.943 286

Redhat (|C | = 802)

LibSVM 0.575 0.473 0.674 0.556 450

Logistic 0.709 0.619 0.697 0.656 572

J48 0.672 0.614 0.516 0.561 1330

RF 0.89 0.888 0.900 0.894 762

RF:100 0.954 0.966 0.914 0.939 818

Facebook page: Healty Choice |C| = 132)

LibSVM 0.766 0.657 0.694 0.675 187

Logistic 0.788 0.676 0.724 0.699 211

J48 0.777 0.621 0.710 0.662 219

RF 0.771 0.682 0.656 0.668 218

RF:100 0.787 0.670 0.712 0.690 214

Facebook page: World Health Organization (n

c

= 132)

LibSVM 0.628 0.444 0.805 0.573 118

Logistic 0.755 0.566 0.702 0.627 198

J48 0.774 0.603 0.615 0.609 260

RF 0.731 0.536 0.718 0.614 186

RF:100 0.747 0.552 0.714 0.623 222

Facebook group: Healthcare Advice (|C | = 468)

LibSVM 0.692 0.502 0.768 0.607 383

Logistic 0.840 0.699 0.761 0.729 548

J48 0.775 0.610 0.671 0.639 573

RF 0.766 0.596 0.773 0.673 467

RF:100 0.909 0.809 0.721 0.763 714

Facebook page: Ireland Support Android (|C| = 408)

LibSVM 0.655 0.460 0.744 0.568 356

Logistic 0.814 0.654 0.723 0.687 573

J48 0.758 0.590 0.636 0.612 658

RF 0.786 0.646 0.641 0.644 627

RF:100 0.821 0.685 0.655 0.670 663

4.3.1 Parameter Tuning

Parameter tuning in machine learning techniques is

often a bottleneck and a crucial task in order to obtain

good results. In addition, for practical applications,

it is essential that methods are not overly sensitive to

DATA 2016 - 5th International Conference on Data Management Technologies and Applications

52

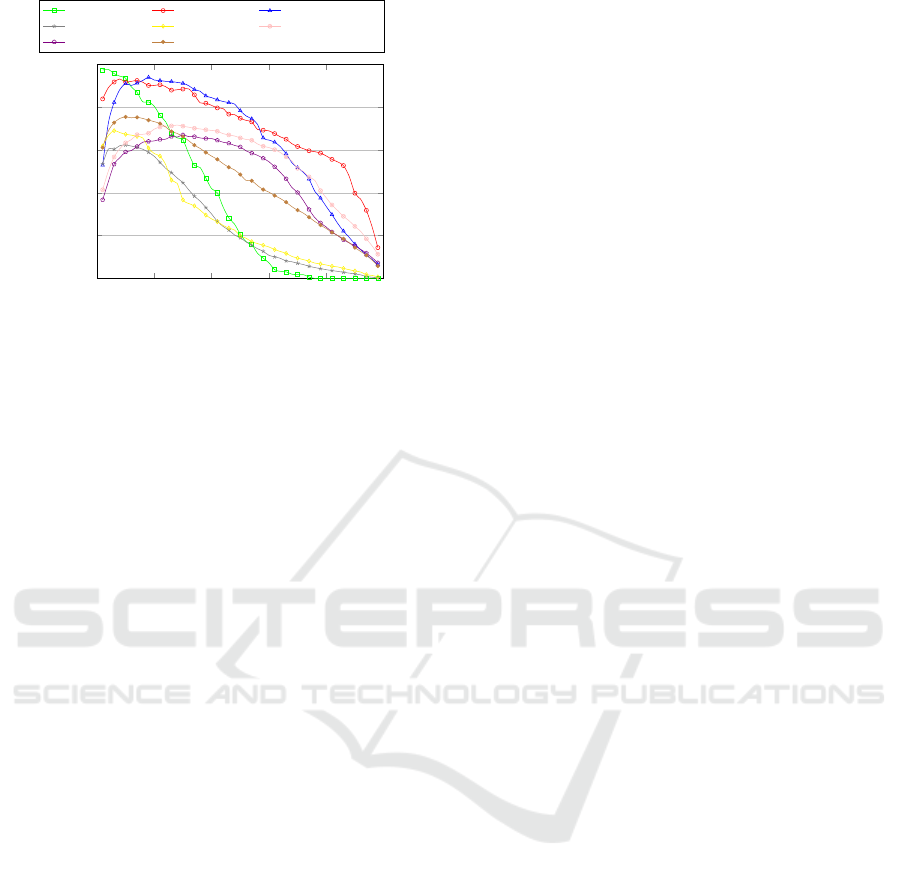

0 0.2 0.4

0.6

0.8 1

0

0.2

0.4

0.6

0.8

1

threshold

F

1

BC3 Apache Redhat

WHO Healthy Choice Healthcare Device

Ireland Android

Average

Figure 5: F

1

measure for varying number of threshold.

parameter values. Our proposed method requires the

setting of few parameters. In this section, we show

the effect of changing different parameter settings. A

first investigation of our SVC regards the supervised

algorithm used to define the similarity score between

a pair of messages. We conducted a series of experi-

ments on the benchmark datasets varying the model.

Namely, we used decision trees (Random Forest and

J48), SVM (LibSVM) and Logistic Regression. For

all the algorithms we used the Weka implementation

using the default parameter values. Considering the

intrinsic lack of balance of the problem (i.e. each

message has a plenty of pairs with messages that be-

long to different threads and just few in the same one)

we also experimented with a cost-sensitive version of

Random Forest, setting a ratio of 100 for instances

with messages belonging to the same thread. Table

4 shows the results, it is notable that the cost sensi-

tive Random Forest always outperforms the standard

Random Forest. Logistic regression and cost sensi-

tive Random Forest achieve better results, with a little

predominance of the latter.

The main parameter of our proposed method re-

gards the threshold value used in the clustering algo-

rithms. We experimented with the use of a supervised

model in the DBSCAN clustering algorithm, but we

noticed the results were not good. This is not surpris-

ing if we consider how DBSCAN works: it groups

messages in a cluster iteratively adding the neighbors

of the messages belonging to the cluster itself. This

leads to the erroneous merge of two different conver-

sations, if just one pair of messages is misclassified

as similar, bringing a sharp decline to the clustering

precision. The previous issue, however, does not af-

fect the agglomerative clustering, because of the use

of average link of two messages inside two clusters,

to decide whether to merge them or not. In this ap-

proach the choice of the threshold parameter is cru-

cial, namely the stop merge criterion. Figure 5 shows

the F

1

trend varying the agglomerative threshold, us-

ing the weighted Random Forest as the supervised

model. Is notable that all the trends have only one

peak that corresponds to a global maximum, thus with

a simple gradient descent is possible to find the best

threshold value. Furthermore, our method is generally

highly effective for threshold values ranging from 0.1

to 0.3, as shown in Figure 5. This is also confirmed

by the average trend, that has a peak with a threshold

equal to 0.1.

4.4 Towards Subject-based Supervised

Models

In this section, we discuss the possibility of creating

an - incomplete - training set from which to create the

supervised model of SVC, with the peculiarity that

is not a labeled set known a priori. This proposed

method is particularly suitable for email datasets. The

main assumption is that a conversation of emails can

be formed by emails with the same subject and also

by emails with different ones. It is quite common, but

not always true, that emails with the same subject re-

fer to the same conversation (Wu and Oard, 2005).

This intuition can provide preliminary conversation

detection of a message pool in an unsupervised way.

Our proposal is to create the training set using this

simple clusterization as labels for distinguishing mes-

sages belonging to the same or different threads (i.e.

if the two messages have same subject). We can train

the classifier with this labeled set using this model

inside the clustering method as described in section

3.4. Since the subject is a known feature of an email

dataset, this approach guarantees the use of a super-

vised classifier even if no labeled dataset is given.

From the benchmark datasets we considered, only

BC3 provides emails with different subject in the

same threads. To test this approach, there is no need

of a crossfold validation: the whole dataset is initially

labeled based only on the subject of emails, a classi-

fier is trained with this data and then used to clusterize

the starting dataset. The results are extremely promis-

ing: we obtain a purity and a precision equal to 1, a

recall equal to 0.986 and the resulting F

1

measure is

equal to 0.993, higher than the state of art.

5 CONCLUSIONS

In this paper, we focus on the problem of detecting

threads from a pool of messages that correspond to

social network chats, mailing list, email boxes, chats,

A Novel Method for Unsupervised and Supervised Conversational Message Thread Detection

53

forums etc. We address the problem by using a three-

dimensional representation for each message, which

involves textual semantic content, social interactions

and creation time. Then, we propose a suite of fea-

tures based on the three dimensional representation

to compute the similarity measure between messages,

which is used in a clustering algorithms to detect the

threads. We also propose the use of a supervised

model which combines these features using the prob-

ability to be in the same thread estimated by the model

as a distance measure between two messages. We

show that the use of a classifier leads to higher ac-

curacy in thread detection, outperforming all earlier

approaches.

For future work, an interesting variation of the

problem to consider is the conversation tree recon-

struction, where we have to detect the reply structure

of the conversations inside a thread.

REFERENCES

Adams, P. H. and Martell, C. H. (2008). Topic detection and

extraction in chat. In ICSC 2008, pages 581–588.

Aumayr, E., Chan, J., and Hayes, C. (2011). Reconstruction

of threaded conversations in online discussion forums.

In Weblogs and Social Media.

Blei, D. M., Ng, A. Y., and Jordan, M. I. (2003). Latent

dirichlet allocation. the Journal of machine Learning

research, 3:993–1022.

Bouguettaya, A., Yu, Q., Liu, X., Zhou, X., and Song, A.

(2015). Efficient agglomerative hierarchical cluster-

ing. Expert Syst. Appl., 42(5):2785–2797.

Coussement, K. and den Poel, D. V. (2008). Improving

customer complaint management by automatic email

classification using linguistic style features as predic-

tors. Decision Support Systems, 44(4):870–882.

Dehghani, M., Shakery, A., Asadpour, M., and

Koushkestani, A. (2013). A learning approach

for email conversation thread reconstruction. J.

Information Science, 39(6):846–863.

Domeniconi, G., Moro, G., Pasolini, R., and Sartori, C.

(2016). A comparison of term weighting schemes for

text classification and sentiment analysis with a super-

vised variant of tf.idf. In Data Management Technolo-

gies and Applications (DATA 2015), Revised Selected

Papers, volume 553, pages 39–58. Springer.

Erera, S. and Carmel, D. (2008). Conversation detection

in email systems. In ECIR, Glasgow, UK, March 30-

April 3, 2008., pages 498–505.

Ester, M., Kriegel, H., Sander, J., and Xu, X. (1996). A

density-based algorithm for discovering clusters in

large spatial databases with noise. In (KDD-96), Port-

land, Oregon, USA, pages 226–231.

F. M. Khan, T. A. Fisher, L. S. T. W. and Pottenger, W. M.

(2002). Mining chatroom conversations for social and

semantic interactions. In Technical Report LU-CSE-

02-011, Lehigh University.

Glass, K. and Colbaugh, R. (2010). Toward emerging topic

detection for business intelligence: Predictive analysis

of meme’ dynamics. CoRR, abs/1012.5994.

Hall, M. A., Frank, E., Holmes, G., Pfahringer, B., Reute-

mann, P., and Witten, I. H. (2009). The WEKA data

mining software: an update. SIGKDD Explorations,

11(1):10–18.

Hofmann, T. (1999). Probabilistic latent semantic indexing.

In ACM SIGIR, pages 50–57. ACM.

Huang, J., Zhou, B., Wu, Q., Wang, X., and Jia, Y. (2012).

Contextual correlation based thread detection in short

text message streams. J. Intell. Inf. Syst., 38(2):449–

464.

Joshi, S., Contractor, D., Ng, K., Deshpande, P. M., and

Hampp, T. (2011). Auto-grouping emails for faster

e-discovery. PVLDB, 4(12):1284–1294.

Jurczyk, P. and Agichtein, E. (2007). Discovering author-

ities in question answer communities by using link

analysis. In CIKM, Lisbon, Portugal, November 6-10,

2007, pages 919–922.

Manning, C. D., Raghavan, P., Sch

¨

utze, H., et al. (2008).

Introduction to information retrieval, volume 1. Cam-

bridge university press Cambridge.

Porter, M. F. (1980). An algorithm for suffix stripping. Pro-

gram, 14(3):130–137.

Salton, G. and Buckley, C. (1988). Term-weighting ap-

proaches in automatic text retrieval. Information pro-

cessing & management, 24(5):513–523.

Shen, D., Yang, Q., Sun, J., and Chen, Z. (2006). Thread

detection in dynamic text message streams. In SIGIR,

Washington, USA, August 6-11, 2006, pages 35–42.

Singhal, A. (2001). Modern information retrieval: A brief

overview. IEEE Data Eng. Bull., 24(4):35–43.

Soboroff, I., de Vries, A. P., and Craswell, N. (2006).

Overview of the TREC 2006 enterprise track. In

TREC, Gaithersburg, Maryland, USA, November 14-

17, 2006.

Ulrich, J., Murray, G., and Carenini, G. (2008). A publicly

available annotated corpus for supervised email sum-

marization. In AAAI08 EMAIL Workshop.

Wang, H., Wang, C., Zhai, C., and Han, J. (2011). Learn-

ing online discussion structures by conditional ran-

dom fields. In In SIGIR 2011, Beijing, China, July

25-29, 2011, pages 435–444.

Wu, Y. and Oard, D. W. (2005). Indexing emails and email

threads for retrieval. In SIGIR, pages 665–666.

X. Wang, M. Xu, N. Z. and Chen, N. (2008). Email conver-

sations reconstruction based on messages threading

for multi-person. In (ETTANDGRS ’08), volume 1,

pages 676–680.

Yeh, J. (2006). Email thread reassembly using similarity

matching. In CEAS, July 27-28, 2006, Mountain View,

California, USA.

Zhao, Q. and Mitra, P. (2007). Event detection and visu-

alization for social text streams. In ICWSM, Boulder,

Colorado, USA, March 26-28, 2007.

Zhao, Q., Mitra, P., and Chen, B. (2007). Temporal and

information flow based event detection from social

text streams. In AAAI, July 22-26, 2007, Vancouver,

British Columbia, Canada, pages 1501–1506.

DATA 2016 - 5th International Conference on Data Management Technologies and Applications

54