Emotion Selection in a Multi-Personality Conversational Agent

Jean-Claude Heudin

DeVinci Research Center, Pôle Universitaire Léonard de Vinci, 92916 Paris – La Défense, France

Keywords: Conversational Agent, Multi-personality, Emotion Selection.

Abstract: Conversational agents and personal assistants represent a historical and important application field in

artificial intelligence. This paper presents a novel approach to the problem of humanizing artificial

characters by designing believable and unforgettable characters who exhibit various salient emotions in

conversations. The proposed model is based on a multi-personality architecture where each agent

implements a facet of its identity, each one with its own pattern of perceiving and interacting with the user.

In this paper we focus on the emotion selection principle that chooses, from all the candidate responses, the

one with the most appropriate emotional state. The experiment shows that a conversational multi-

personality character with emotion selection performs better in terms of user engagement than a neutral

mono-personality one.

1 INTRODUCTION

In recent years, there has been a growing interest in

conversational agents. In a relatively short period of

time, several companies have proposed their own

virtual assistants: Apple’s Siri based on the CALO

project (Myers et al., 2007), Microsoft Cortana

(Heck, 2014), Google Now (Guha et al., 2015), and

Facebook M (Marcus, 2015). These virtual

assistants focus primarily on conversational

interface, personal context awareness, and service

delegation. They follow a long history of research

and the development of numerous intelligent

conversational agents, the first one being Eliza

(Weizenbaum, 1966).

Beyond the challenge of interpreting a user’s

request in order to provide a relevant response, a key

objective is to enhance man-machine interactions by

humanizing artificial characters. Often described as

a distinguishing feature of humanity, the ability to

understand and express emotions is a major

cognitive behavior in social interactions (Salovey

and Mayer, 1990). However, all the previously cited

personal assistants are based on a character design

with no emotional behavior or at most a neutral one.

At the same time, there have been numerous

studies about emotions (Ekman, 1999) and their

potential applications for artificial characters (Bates,

1994). For example, Dybala et al. have worked on

combining humor and emotion in human-agent

conversation using a multi-agent system for joke

generation (Dybala et al., 2010). In parallel with the

goal of developing personal assistants, there is also a

strong research trend in robotics for designing

emotional robots. Some of these studies showed that

a robot with emotional behavior performs better than

a robot without emotional behavior for tasks

involving interactions with humans (Leite et al.,

2008).

In this paper we address the long-term goal of

designing believable and “unforgettable” artificial

characters with complex and remarkable emotion

behavior. In this framework, we follow the initial

works done for multi-cultural characters (Hayes-

Roth et al., 2002) and more recently for multi-

personality characters (Heudin, 2011). This

approach takes advantage of psychological studies

of human interactions with computerized systems

(Reeves and Nass, 1996) and the know-how of

screenwriters and novelists since believable

characters are the essence of successful fiction

writing (Seger, 1990).

Our original model is based on multi-agent

architecture where each agent implements a facet of

its emotional personality. The idea is that the

character’s identity is an emerging property of

several personality traits, each with its own pattern

of perceiving and interacting with the user. Then, the

problem is to “reconnect” personalities of the

disparate alters into a single and coherent identity.


Heudin J.

Emotion Selection in a Multi-Personality Conversational Agent.

DOI: 10.5220/0006113600340041

In Proceedings of the 9th International Conference on Agents and Artificial Intelligence (ICAART 2017), pages 34-41

ISBN: 978-989-758-220-2

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

This can be done by selecting amongst the candidate

responses the one with the most appropriate

emotional state.

Our hypothesis is that such a behavior is

“complex” in the meaning defined initially by

Wolfram for cellular automata (Wolfram, 1984).

This study proposes four classes of systems: Class I

and Class II are characterized respectively by fixed

and cyclic dynamical behaviors; Class III is

associated with chaotic behaviors; Class IV is

associated with complex dynamical behaviors. It has

been shown that, when mapping these different

classes, complex adaptive systems are located in the

vicinity of a phase transition between order and

chaos (Langton, 1990). In the context of our study,

Class I and Class II correspond to fixed or cyclic

emotional behavior resulting in “machine-like”

interactions. Class III systems are characterized by

incoherent emotional responses, which are a

symptom of mental illness such as dissociative

identity disorder. Class IV systems are at the edge

between order and chaos, giving coherent answers

while preserving diversity and rich emotional

responses.

In this paper we will focus on a first experiment

of emotion selection in a multi-personality

conversational agent based on this hypothesis. More

pragmatically, we aim to answer the following

research question:

Does a conversational agent based on a multi-

personality character with emotion selection

perform better than a neutral mono-personality in

terms of user engagement?

This paper is organized as follows. In Section 2,

we describe the basic architecture for a multi-

personality character with emotion selection, and

Section 3 describes more precisely the emotion

metabolism. Section 4 focuses on the emotion

selection, which is the central point of this paper.

Section 5 describes the experimental protocol and

Section 6 discusses our first qualitative results. We

conclude in Section 7 and present the future steps of

this research.

2 EMOTIONAL MULTI-PERSONALITY CHARACTERS

The basic architecture for a multi-personality

character is a multi-agent system where each

personality trait is implemented as an agent.

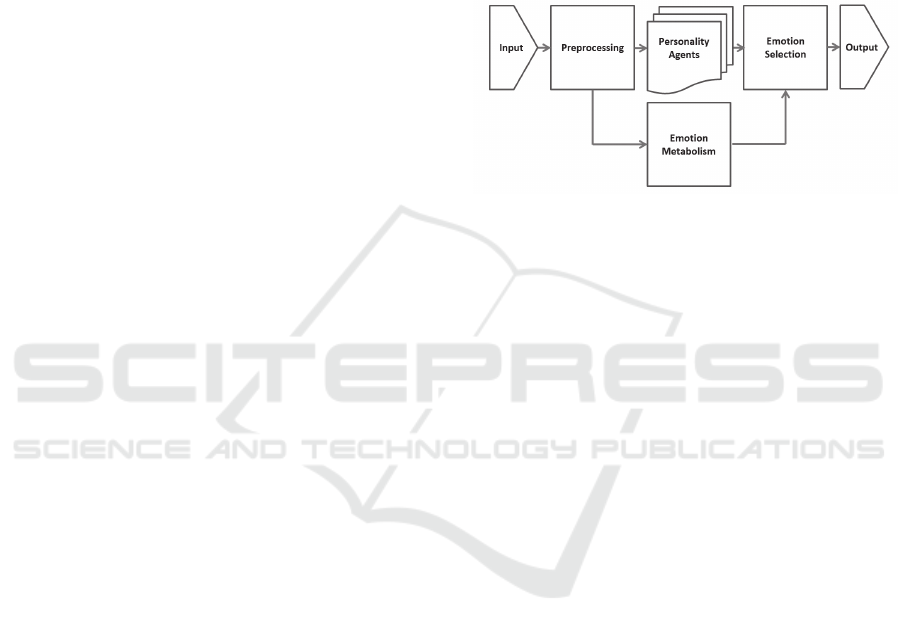

The first agent receives the input from the user,

and applies various preprocessing phases including

an English stemmer, corrector, and tokenizer. It also

executes a global category extraction using a

general-purpose ontology.

Then the preprocessed sentence and the extracted

categories are diffused to all personality agents.

Thus, all these personality agents are able to react to

the user’s input by computing an appropriate answer

message given their own state.

Figure 1: The architecture of the multi-personality

character with emotion selection.

In this architecture the input is also linked to an

emotion metabolism that computes the current

emotional state of the artificial character. Then, the

emotion selection agent uses this emotional state for

choosing one of the candidate responses.
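
The data flow of Figure 1 can be sketched as follows. This is a minimal illustration and not the implementation used in the experiment: the names `preprocess`, `PersonalityAgent`, and `collect_candidates` are hypothetical, the preprocessing is reduced to lowercasing and whitespace tokenization (no stemmer, corrector, or ontology), and the two toy agents merely stand in for real personality agents.

```python
from dataclasses import dataclass
from typing import Callable, List


def preprocess(text: str) -> List[str]:
    # Stand-in for the stemmer/corrector/tokenizer stage (assumption):
    # lowercase the input and split it on whitespace.
    return text.lower().split()


@dataclass
class PersonalityAgent:
    name: str
    # Maps the preprocessed tokens to a candidate answer;
    # an empty string means the agent has nothing to say.
    respond: Callable[[List[str]], str]


def collect_candidates(agents: List[PersonalityAgent], text: str) -> List[str]:
    # Diffuse the same preprocessed input to every agent and keep
    # one candidate response per agent, in agent order.
    tokens = preprocess(text)
    return [agent.respond(tokens) for agent in agents]


# Two toy agents (hypothetical behavior, for illustration only).
agents = [
    PersonalityAgent("Neutral", lambda tokens: "Hello." if "hi" in tokens else ""),
    PersonalityAgent("Silent", lambda tokens: ""),
]
candidates = collect_candidates(agents, "Hi there")
```

The emotion selection agent then picks one non-empty entry of `candidates` according to the current emotional state.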

In the next sections, we describe the emotion

metabolism and more precisely the emotion

selection, since the other parts – preprocessing and

personality agents – are not the focus of this paper

and can be implemented using a variety of

approaches and techniques.

3 EMOTION METABOLISM

Previously, (Gebhard, 2005) and (Heudin, 2015)

have proposed models of artificial affects based on

three interacting forms:

Personality reflects long-term affect. It shows

individual differences in mental characteristics

(McCrae and John, 1992).

Mood reflects a medium-term affect, which is

generally not related with a concrete event,

action or object. Moods are longer lasting

stable affective states, which have a great

influence on human’s cognitive functions

(Morris and Schnurr, 1989).

Emotion reflects a short-term affect, usually

bound to a specific event, action or object,


which is the cause of this emotion. After their

elicitation, emotions usually decay and

disappear from the individual’s focus (Campos

et al., 1994).

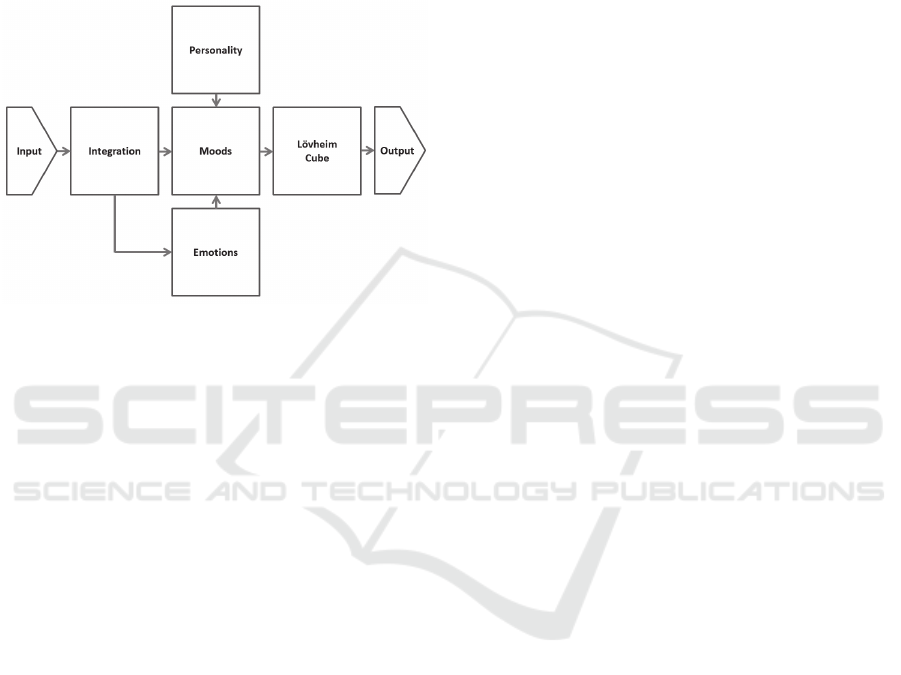

Following (Heudin, 2015), we implemented this

approach as a bio-inspired emotion metabolism

using a connectionist architecture. Figure 2 shows a

schematic representation of its principle.

Figure 2: The architecture of the emotional metabolism.

The integration module converts the inputs to

virtual neurotransmitters values. These values are

then used by the three levels of affects in order to

produce the output of the emotional metabolism.

3.1 Personality

This module is based on the “Big Five” model of

personality (McCrae and John, 1992). It contains

five main variables with values varying from 0.0

(minimum intensity) to 1.0 (maximum intensity).

These values specify the general affective behavior

by the five following traits:

Openness

Openness (Op) is a general appreciation for art,

emotion, adventure, unusual ideas, imagination,

curiosity, and variety of experience. This trait

distinguishes imaginative people from down-to-

earth, conventional people.

Conscientiousness

Conscientiousness (Co) is a tendency to show self-

discipline, act dutifully, and aim for achievement.

This trait shows a preference for planned rather than

spontaneous behavior.

Extraversion

Extraversion (Ex) is characterized by positive

emotions and the tendency to seek out stimulation

and the company of others. This trait is marked by

pronounced engagement with the external world.

Agreeableness

Agreeableness (Ag) is a tendency to be

compassionate and cooperative rather than

suspicious and antagonistic towards others. This trait

reflects individual differences in concern for

social harmony.

Neuroticism

Neuroticism (Ne) is a tendency to experience

negative emotions, such as anger, anxiety, or

depression. Those who score high in neuroticism are

emotionally reactive and vulnerable to stress.

3.2 Moods

Previous works such as (Heudin, 2004) and

(Gebhard, 2005) used the Pleasure-Arousal-

Dominance approach (Mehrabian, 1996). We use

here another candidate model aimed at explaining

the relationship between three important monoamine

neurotransmitters involved in the limbic system and

the emotions (Lövheim, 2012). It defines three virtual

neurotransmitters whose levels range from 0.0 to 1.0:

Serotonin

Serotonin (Sx) is associated with memory and

learning. An imbalance in serotonin levels results in

anger, anxiety, depression and panic. It is an

inhibitory neurotransmitter that increases positive

vs. negative feelings.

Dopamine

Dopamine (Dy) is related to experiences of pleasure

and the reward-learning process. It is a special

neurotransmitter because it is considered to be both

excitatory and inhibitory.

Noradrenaline

Noradrenaline (Nz) helps moderate the mood by

controlling stress and anxiety. It is an excitatory

neurotransmitter that is responsible for stimulatory

processes, increasing active vs. passive feelings.

3.3 Emotions

This module implements emotions as very short-term

affects, typically less than ten seconds, with

relatively high intensities. They are triggered by

inducing events suddenly increasing one or more

neurotransmitters. After a short time, these

neurotransmitter values decrease due to a natural

decay function.


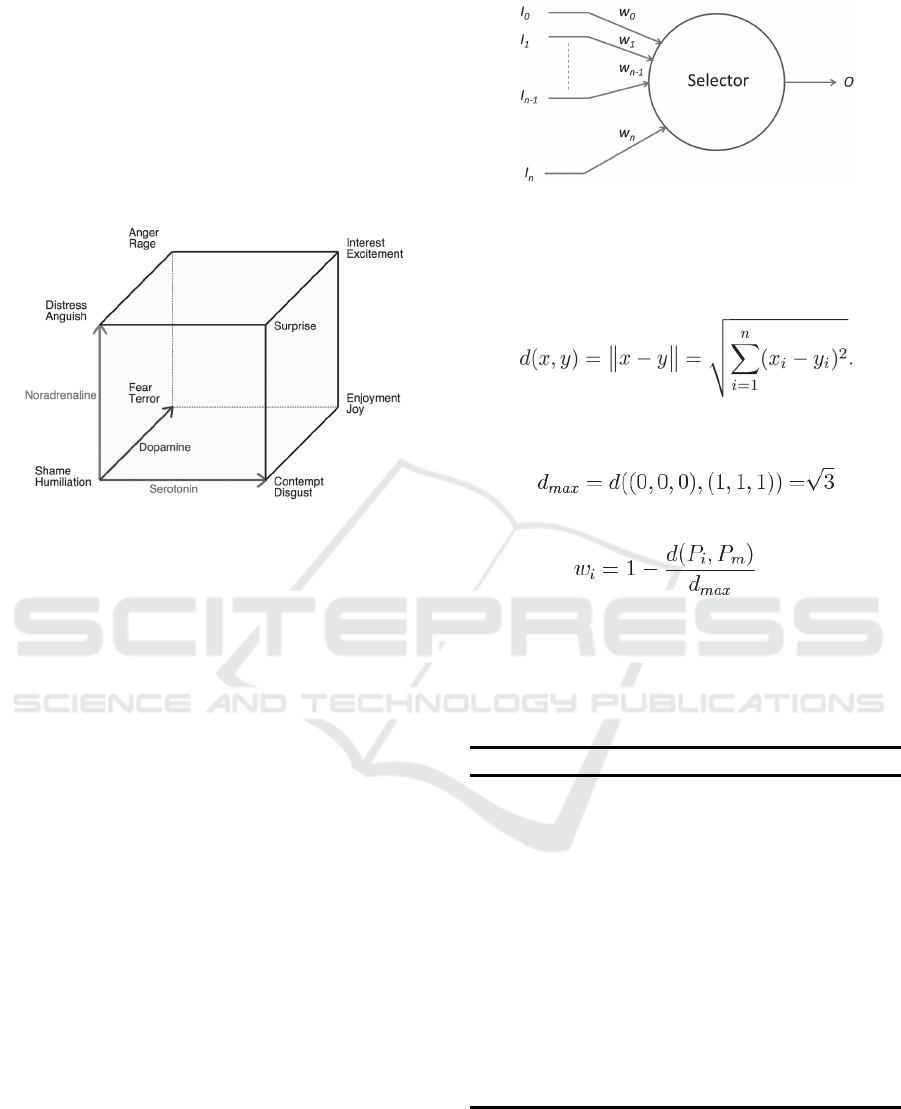

3.4 Lövheim Cube

This module implements the Lövheim Cube of

emotions (Lövheim, 2012), where the three

monoamine neurotransmitters form the axes of a

three-dimensional coordinate system, and the eight

basic emotions, labeled according to the Affect

Theory (Tomkins, 1991) are placed in the eight

corners.

Figure 3: The Lövheim Cube of emotions.
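
As an illustration, the corners of the cube can be written as a lookup table. The assignment of emotions to corners below is restated from Lövheim (2012) and should be checked against that paper; the (Sx, Dy, Nz) ordering and the function name `nearest_basic_emotion` are conventions chosen for this sketch only.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float, float]  # (serotonin, dopamine, noradrenaline)

# Corner -> basic emotion label, with 0 = low level and 1 = high level.
CUBE_CORNERS: Dict[Point, str] = {
    (0, 0, 0): "shame/humiliation",
    (0, 0, 1): "distress/anguish",
    (0, 1, 0): "fear/terror",
    (0, 1, 1): "anger/rage",
    (1, 0, 0): "contempt/disgust",
    (1, 0, 1): "surprise/startle",
    (1, 1, 0): "enjoyment/joy",
    (1, 1, 1): "interest/excitement",
}


def nearest_basic_emotion(state: Point) -> str:
    # Return the label of the cube corner closest to the given state.
    corner = min(CUBE_CORNERS, key=lambda c: math.dist(c, state))
    return CUBE_CORNERS[corner]
```

A state strictly inside the cube is thus read as a blend of the eight corner emotions, with the nearest corner giving the dominant label.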

4 EMOTION SELECTION

The emotional selection is implemented as an agent

that selects one of the possible answers proposed by

the set of personality agents. This section describes

this selection principle in a rigorous mathematical

and algorithmic way so as to make similar

experiments reproducible.

In order to have a selection that follows our

“edge of chaos” hypothesis, we choose a principle

that is close to the fitness proportionate selection of

genetic algorithms, also called roulette wheel

selection (Baker, 1987). Instead of a fitness value,

we use a weight that decreases with the Euclidean

distance between the character’s current emotional

state and the one of the given personality agent in

the Lövheim Cube. In other words, the closer the current

emotional state is to that of an agent, the greater

its weight.

Given:

a set of strings representing the outputs of

the personality agents: $I_0, \dots, I_{n-1}$,

a set of weights associated with each of these

possible answers: $w_0, \dots, w_{n-1}$,

a transition function S(t) returning the

selected string O among the possible

answers.

Figure 4: The emotional selector represented as an

artificial neuron with a dedicated transition function.

Let d(x, y) be the function that calculates the

Euclidean distance between two points, x and y:

$$d(x, y) = \sqrt{\sum_{k=1}^{n} (x_k - y_k)^2}$$

where n = 3 for a three-dimensional space. Thus, the

maximum distance in the Lövheim Cube, whose axes range from 0.0 to 1.0, is:

$$d_{max} = \sqrt{3} \approx 1.732$$

The weight associated with an input $I_i$ is then:

(1)

where $P_i$ is the 3D vector in the Lövheim Cube

of the agent i and $P_m$ is the 3D vector corresponding

to the current emotional state. The transition

function S(t) is then implemented using the

following algorithm:

Algorithm: Emotional Selector.

    initialize w[0], ..., w[n-1] using Eq. 1;
    do {
        // sum the weights of the agents that produced an answer
        S = 0;
        for (i = 0; i < n; i = i + 1) {
            if (I[i] != "") S = S + w[i];
        }
        // spin the wheel: draw a point in [0, S]
        R = S * rand(0, 1);
        for (i = 0; i < n; i = i + 1) {
            if (I[i] != "") R = R - w[i];
            if (R <= 0) break;
        }
        // guard against rounding errors
        if (R > 0) i = n - 1;
    } while (I[i] == "");
    return I[i];

Algorithm 1: The algorithm used by the selector, where

the function rand (0, 1) returns a random real number

between 0 and 1.
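
A runnable sketch of Algorithm 1 in Python follows. Two points are assumptions rather than statements from the text. First, the weight of Eq. 1 is taken here to be `D_MAX - d(P_i, P_m)`, one simple form consistent with the description that closer agents receive larger weights. Second, an explicit error is raised when every candidate is empty, a case in which the loop as written would not terminate. The function names are illustrative.

```python
import math
import random
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (Sx, Dy, Nz) in the Lövheim Cube

D_MAX = math.sqrt(3.0)  # diagonal of the unit cube


def weight(agent_point: Point, state: Point) -> float:
    # ASSUMED form of Eq. 1: larger when the agent is closer to the state.
    return D_MAX - math.dist(agent_point, state)


def select(candidates: Sequence[str], weights: Sequence[float],
           rng: random.Random) -> str:
    # Roulette wheel selection restricted to non-empty candidates.
    live = [i for i, text in enumerate(candidates) if text != ""]
    if not live:
        # Added guard (not in Algorithm 1): nothing to select from.
        raise ValueError("no non-empty candidate response")
    total = sum(weights[i] for i in live)
    r = total * rng.random()
    for i in live:
        r -= weights[i]
        if r <= 0:
            return candidates[i]
    # Floating-point rounding can leave r slightly positive;
    # fall back to the last non-empty candidate.
    return candidates[live[-1]]


# Usage with three hypothetical agents, one of which stays silent.
rng = random.Random(0)
state = (0.5, 0.5, 0.5)
points = [(0.1, 0.1, 0.1), (0.5, 0.5, 0.5), (0.9, 0.9, 0.9)]
ws = [weight(p, state) for p in points]
answer = select(["Go away.", "", "Hello!"], ws, rng)
```

Filtering the empty candidates up front replaces the outer `do … while` retry of Algorithm 1; the selection probabilities among the non-empty answers are intended to be the same.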


5 EXPERIMENTAL RESULTS

This section describes first the prototype used in the

experiment and its implementation. Then it describes

the experiment protocol and results.

5.1 Implementation

We designed our own connectionist framework

called ANNA (Algorithmic Neural Network

Architecture). Its development was driven by our

wish to build an open JavaScript-based architecture

that enables the design of any type of feed-forward,

recurrent, or heterogeneous sets of networks.

More precisely an application can include an

arbitrary number of interconnected networks, each

of them having its own interconnection pattern

between an arbitrary number of layers. Each layer is

composed of a set of simple and often uniform

neuron units. However, each neuron can also be

programmed directly as a dedicated cell.

Classically all neurons have a set of weighted

inputs, a single output, and a transition function that

computes the output given the inputs. The weights

are adjusted using a machine learning algorithm, or

programmed, or dynamically tuned by another

network.

In the case of our experiment, the emotion

selection was implemented as a single neuron with a

dedicated transition function and dynamical weights

as described in section 4.

5.2 The Experimental Prototype

We have implemented all modules of the

architecture described in section 2 including the

emotion metabolism and emotion selection.

In this prototype, we chose to develop a set of

12 very different personality traits. This decision

was driven by the idea to test if our emotional

selection approach promotes the emergence of a

great and coherent character despite the use of these

different personality traits. The 12 agents are the

following ones:

Insulting

This agent has an insecure and upset personality that

often reacts by teasing and insulting depending on

the user’s input.

Alone

This agent reacts when the user does not answer or

waits for too much time in the discussion process.

Machina

This agent reacts as a virtual creature that knows its

condition of being artificial.

House

This agent implements Dr. House’s famous way of

sarcastic speaking using an adaptation of the TV

Series screenplay and dialogues.

Hal

This agent reproduces the psychological traits of the

HAL 9000 computer in the movie “2001: A Space

Odyssey” by Stanley Kubrick.

Silent

This agent answers with few words or sometimes

remains silent.

Eliza

This agent is an implementation of the Eliza

psychiatrist program, which answers by rephrasing

the user’s input as a question (Weizenbaum, 1966).

Neutral

This agent implements a neutral and calm

personality trait with common language answers.

Oracle

This agent never answers directly to questions.

Instead it provides wise counsel or vague predictions

about the future.

Funny

This agent is always happy and often tells jokes or

quotes during a conversation.

Samantha

This agent has a strong agreeableness trait. It has a

tendency to be compassionate, cooperative and likes

talking with people.

Sexy

This agent has a main focus on sensuality and

sexuality. It enjoys talking about pleasure and sex.

Table 1: The coordinates of the 12 personality traits in the

Lövheim Cube.

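| Personality | Sx | Dy | Nz |
| --- | --- | --- | --- |
| Insulting | 0.1 | 0.1 | 0.1 |
| Alone | 0.2 | 0.2 | 0.5 |
| Machina | 0.2 | 0.5 | 0.5 |
| House | 0.2 | 0.7 | 0.2 |
| Hal | 0.2 | 0.7 | 0.7 |
| Silent | 0.5 | 0.1 | 0.5 |
| Eliza | 0.5 | 0.3 | 0.5 |
| Neutral | 0.5 | 0.5 | 0.5 |
| Oracle | 0.5 | 0.5 | 0.7 |
| Funny | 0.7 | 0.5 | 0.7 |
| Samantha | 0.7 | 0.7 | 0.7 |
| Sexy | 0.9 | 0.9 | 0.9 |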


Given these personality traits, we assigned to

each of them an arbitrary fixed point in the Lövheim

cube of emotions. Table 1 gives their coordinates in

the three-dimensional space.
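
To make the geometry concrete, the distance of each agent from the neutral state (0.5, 0.5, 0.5) can be computed directly from Table 1. The sketch below only evaluates the Euclidean distance defined in Section 4; the variable names are illustrative.

```python
import math

# (Sx, Dy, Nz) coordinates copied from Table 1.
AGENTS = {
    "Insulting": (0.1, 0.1, 0.1),
    "Alone": (0.2, 0.2, 0.5),
    "Machina": (0.2, 0.5, 0.5),
    "House": (0.2, 0.7, 0.2),
    "Hal": (0.2, 0.7, 0.7),
    "Silent": (0.5, 0.1, 0.5),
    "Eliza": (0.5, 0.3, 0.5),
    "Neutral": (0.5, 0.5, 0.5),
    "Oracle": (0.5, 0.5, 0.7),
    "Funny": (0.7, 0.5, 0.7),
    "Samantha": (0.7, 0.7, 0.7),
    "Sexy": (0.9, 0.9, 0.9),
}

NEUTRAL_STATE = (0.5, 0.5, 0.5)

# Distance of every agent from the neutral state, closest first.
distances = sorted(
    (math.dist(point, NEUTRAL_STATE), name) for name, point in AGENTS.items()
)
for d, name in distances:
    print(f"{name:10s} {d:.3f}")
```

From the neutral state, the Neutral agent lies at distance 0, Eliza and Oracle at 0.2, and Insulting and Sexy are the farthest at about 0.69, so the calmer agents are favored until the metabolism moves the emotional state.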

We set the emotional metabolism personality

level to a fixed neutral value:

Op = Co = Ex = Ag = Ne = 0.5

This corresponds to a neutral state in the

Lövheim cube:

Sx = Dy = Nz = 0.5

The emotion metabolism is updated by

propagating the inputs using a cyclic trigger called

“lifepulse”. In this study we set this cycle to 0.1

second. The decay times of the metabolism for

returning to this neutral personality state were 10

seconds for the emotion level and 10 minutes for the

mood level.
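
The text does not give the form of the decay function. As one possible reading, the sketch below assumes exponential relaxation toward the neutral value, treats the stated 10 seconds and 10 minutes as time constants, and advances in lifepulse steps of 0.1 second. All names are hypothetical.

```python
import math

LIFEPULSE_S = 0.1        # update period stated in the text
EMOTION_DECAY_S = 10.0   # emotion-level decay time stated in the text
MOOD_DECAY_S = 600.0     # mood-level decay time (10 minutes)
NEUTRAL = 0.5            # neutral neurotransmitter level


def decay_step(value: float, time_constant_s: float,
               dt_s: float = LIFEPULSE_S) -> float:
    # ASSUMPTION: exponential relaxation toward the neutral level.
    factor = math.exp(-dt_s / time_constant_s)
    return NEUTRAL + (value - NEUTRAL) * factor


# An emotional spike on one neurotransmitter, followed by 10 s of decay.
level = 0.9
for _ in range(100):  # 100 lifepulses of 0.1 s each
    level = decay_step(level, EMOTION_DECAY_S)
```

Under this assumption a spike loses about 63% of its excess over the neutral level after one time constant; a linear or piecewise decay would be an equally valid reading of the text.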

5.3 Protocol

In this experiment, we asked 30 university students

(age 18-25) to perform a simple and short

conversation with two systems: the first one was Siri

on an iPad Air Retina running iOS version 9.1; the

second one was our ANNA-based prototype running

in a Chrome browser on a “standard” Windows PC.

We chose Apple’s Siri as a reference for a

conversational agent with an emotionally neutral

behavior.

The order of conversations was randomized.

There was no topic restriction, thus the

conversations could be of any subject. However, we

imposed a classical three-phase structure: an

opening phase, a core phase, and a closing phase

(Linell, 1998). All interactions were text-based in

English. We avoided the problem of errors related to

the voice recognition system of Siri by correcting

the input when necessary. The students were asked

to conduct the interaction continuously and to use

the same interactions for both systems in order to

make the comparison easier and clearer.

In addition to the conversations, the participants

also filled in a questionnaire after each session. This

questionnaire was inspired by the one designed by

Dybala for evaluating a multi-agent system for joke

generation (Dybala et al., 2010).

The questions were:

1. Did you get an impression that the agent was

human like?

2. Did you get an impression that the agent tried

to make the conversation more interesting?

3. Did you enjoy the conversation?

4. Do you think that the agent used emotions in

appropriate moments?

5. Please describe your feelings towards the

agent after the interaction.

6. If you were to make friends with one of these

agents, which would you choose?

7. Which agent do you think was better?

Answers to questions 1 to 4 were given

using a yes/no choice. The participants could answer

question 5 freely using a single phrase. For

questions 6 and 7, the participant had to choose

between the two systems.

5.4 Results

Table 2 below gives a typical example of a

conversation between a student and the answers of

the two systems.

Table 2: An example of a conversation with the answers of

Siri and our prototype.

Example

1 – User: Hi.

2 – Siri: Hello, there.

3 – Anna: It's really nice to meet you.

4 – User: Who are you?

5 – Siri: I’m Siri. But enough about me… How can I help

you?

6 – Anna: My name is Anna. I gave it to myself actually.

7 – User: What is an artificial intelligence?

8 – Siri: Here is what I found: [Wikipedia Links]

9 – Anna: An Artificial Intelligence is an intelligent

computer program. It is also the research field of

computer science that works on machine intelligence.

10 – User: Are you some sort of stupid program like

Eliza?

11 – Siri: Well… I’m still here for you.

12 – Anna: That's very rude. You are a rude person!

13 – User: You are just a dumb software.

14 – Siri: I’m just trying to help you.

15 – Anna: I love when you do both sides of the

conversation. It's like white noise it's very peaceful.

16 – User: I'm sorry.

17 – Siri: It’s ok.

18 – Anna: I'm sorry too, but don't insult me anymore.

19 – User: Fine.

20 – Siri: Yes it is!

21 – Anna: Alright. Sometimes I have unexpected runtime

errors too.

22 – User: Bye.

23 – Siri: OK. See you soon.

24 – Anna: Well... I finally got the last word.


Table 3 presents a summary of the results

concerning the questionnaire showing that the multi-

personality agent was evaluated as generally better

than the mono-personality neutral personal assistant.

Concerning question 5, the comments of the

participants show that most users had more “fun”

with the multi-personality character. They found it

“interesting” but sometimes “rude”. They

emphasized the role of “humor” during

conversations.

Table 3: Results of the user-oriented evaluation.

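| Question | Siri | Anna | Difference | Ratio |
| --- | --- | --- | --- | --- |
| (1) | 21 | 28 | +7 | 0.23 |
| (2) | 13 | 25 | +12 | 0.40 |
| (3) | 18 | 24 | +6 | 0.20 |
| (4) | 12 | 23 | +11 | 0.36 |
| (5) | - | - | - | - |
| (6) | 11 | 19 | +8 | 0.26 |
| (7) | 9 | 21 | +12 | 0.40 |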
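
The Difference and Ratio columns of Table 3 follow from the raw counts: the difference is the Anna count minus the Siri count, and the ratio is that difference divided by the 30 participants. The short check below recomputes them; the variable names are illustrative. The printed ratios in Table 3 appear to be truncated rather than rounded to two decimals (for example, 11/30 is about 0.367).

```python
PARTICIPANTS = 30

# question number -> (Siri count, Anna count), copied from Table 3
COUNTS = {
    1: (21, 28),
    2: (13, 25),
    3: (18, 24),
    4: (12, 23),
    6: (11, 19),
    7: (9, 21),
}

results = {}
for question, (siri, anna) in COUNTS.items():
    difference = anna - siri
    results[question] = (difference, difference / PARTICIPANTS)

for question, (difference, ratio) in results.items():
    print(f"({question}) {difference:+d} {ratio:.3f}")
```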

6 ANALYSIS

The results of this first experiment confirm our

hypothesis about using a multi-personality character

with emotion and remarkable personality traits. This

empirical evaluation shows that it globally increases

the satisfaction of the user about the conversation.

The artificial agent appears more human-like than a

neutral character and elicits higher user engagement.

Concerning the emotional selection, which

represents the central point of this paper, in the

majority of cases, it was able to adapt the emotional

behavior according to the conversation. Despite the

use of very different personalities, the character

appears globally coherent.

However, in some cases the answers given by

our system were not relevant. Here is one example:

User: What is an artificial intelligence?

Anna: Have you lost faith in yourself?

This problem has potentially two causes. The

first one is a malfunction of the selected agent,

which selects a bad answer.

The second one is a malfunction of the emotional

selection, which chooses an agent not compatible

with the current emotional state. This may occur in

rare situations since the roulette wheel selection has

a low probability of choosing low weighted agents,

but there is still a chance that this may

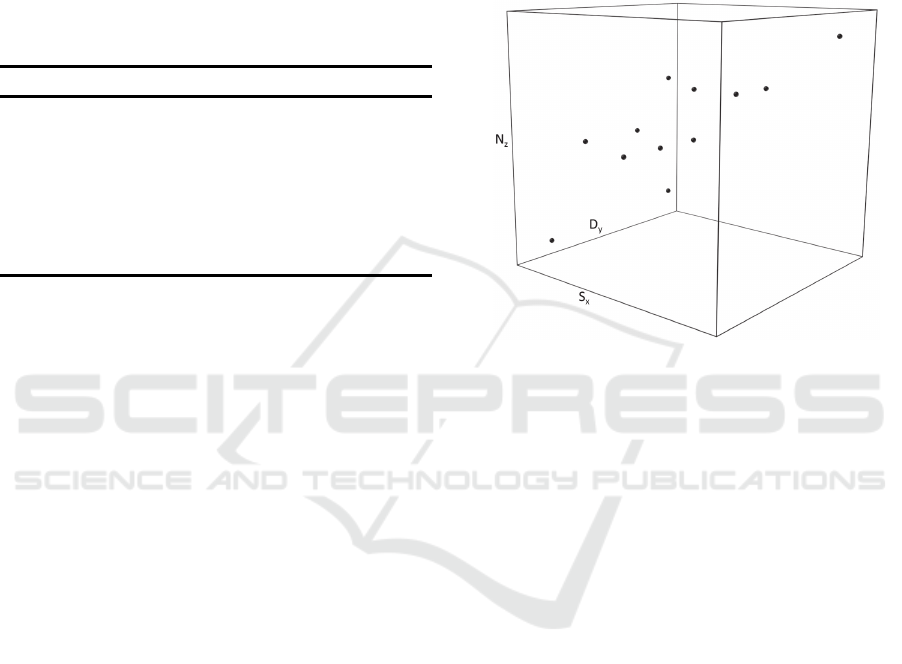

happen. Another problem is that the 12 available

agents do not provide a complete and homogeneous

coverage of the Lövheim Cube as shown in Figure 5.

Designing more personality traits or at least ones

with a better coverage of the three-dimensional

space could solve this problem.
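
One simple way to quantify this coverage gap, offered here as an illustration rather than as part of the reported analysis, is to measure how far each corner of the cube is from its nearest agent. The coordinates are those of Table 1; the function name `nearest_agent` is hypothetical.

```python
import itertools
import math

# (Sx, Dy, Nz) coordinates copied from Table 1.
AGENTS = {
    "Insulting": (0.1, 0.1, 0.1),
    "Alone": (0.2, 0.2, 0.5),
    "Machina": (0.2, 0.5, 0.5),
    "House": (0.2, 0.7, 0.2),
    "Hal": (0.2, 0.7, 0.7),
    "Silent": (0.5, 0.1, 0.5),
    "Eliza": (0.5, 0.3, 0.5),
    "Neutral": (0.5, 0.5, 0.5),
    "Oracle": (0.5, 0.5, 0.7),
    "Funny": (0.7, 0.5, 0.7),
    "Samantha": (0.7, 0.7, 0.7),
    "Sexy": (0.9, 0.9, 0.9),
}


def nearest_agent(point):
    # Return (distance, name) of the agent closest to the given point.
    return min((math.dist(point, p), name) for name, p in AGENTS.items())


# The eight corners of the unit cube and their nearest agents.
corners = list(itertools.product((0.0, 1.0), repeat=3))
coverage = {corner: nearest_agent(corner) for corner in corners}

# Corner whose nearest agent is farthest away, i.e. the least covered one.
worst_corner = max(coverage, key=lambda c: coverage[c][0])
```

With the Table 1 coordinates, only the all-low and all-high corners have an agent within a distance of 0.2; every other corner is more than 0.4 away from its nearest agent.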

We must note that the user does not always

interpret such an example as a malfunction since it is

a common human behavior to change the subject of

the conversation or to make suboptimal responses.

Figure 5: Distribution of the 12 agents in the Lövheim

Cube of emotions showing that they do not provide a full

coverage of the three-dimensional space.

7 CONCLUSION

The experiment reported in this paper allows us to

respond positively to our initial research question:

Does a conversational agent based on a multi-

personality character with emotion selection

perform better than a neutral mono-personality in

terms of user engagement?

Given the success of this first experiment,

we decided to plan a larger one involving more

participants. This will enable us to confirm our

hypotheses with both qualitative and quantitative

evaluations of user engagement. In this framework,

we will conduct this new experiment online using

our software platform for both the neutral mono-personality

character and the multi-personality character.

This will also enable a blind evaluation that was not

possible by using Siri as a neutral reference. In the

meantime, we will develop additional personality

agents in order to have a better coverage of the

three-dimensional emotion space.


REFERENCES

Baker, J.E., 1987. Reducing Bias and Inefficiency in the

Selection Algorithm. In Proceedings of the Second

International Conference on Genetic Algorithms and

their Applications, 14–21, Hillsdale, NJ, Lawrence

Erlbaum Associates.

Bates, J., 1994. The Role of Emotion in Believable

Agents. Communications of the ACM, 37(7):122-125.

Campos, J.J., Mumme, D.L., Kermoian, R., and Campos,

R.G., 1994. A functionalist perspective on the nature

of emotion. In N. A. Fox (Ed.), The development of

emotion regulation, Monographs of the Society for

Research in Child Development, 59(2–3):284–303.

Dybala, P., Ptaszynski, M., Maciejewski, J., Takahashi,

M., Rzepka, R., and Araki, K., 2010. Multiagent

system for joke generation: Humor and emotions

combined in human-agent conversation. Journal of

Ambient Intelligence and Smart Environments,

2(1):31–48.

Ekman, P., 1999. Basic Emotions. In T. Dalgleish and M.

Power (Eds.), Handbook of Cognition and Emotion,

John Wiley & Sons, Sussex, U.K.

Gebhard, P., 2005. ALMA - A Layered Model of Affect.

In Proceedings of the Fourth ACM International Joint

Conference on Autonomous Agents and Multiagent

Systems, 29–36.

Guha, R., Gupta, V., Raghunathan, V., and Srikant, R.,

2015. User Modeling for a Personal Assistant. In

Proceedings of the Eighth ACM Web Search and Data

Mining International Conference, Shanghai, China.

Hayes-Roth, B., Maldonado, H., and Moraes, M., 2002.

Designing for Diversity: Multi-Cultural Characters for

a Multi-Cultural World. In Proceedings of IMAGINA

2002, 207–225, Monte Carlo, Monaco.

Heck, L., 2014. Anticipating More from Cortana.

Microsoft Research, http://research.microsoft.com/en-us/news/features/cortana-041614.aspx

Heudin, J.-C., 2004. Evolutionary Virtual Agent. In

Proceedings of the IEEE/WIC/ACM Intelligent Agent

Technology International Conference, 93–98, Beijing,

China.

Heudin, J.-C., 2011. A Schizophrenic Approach for

Intelligent Conversational Agent. In Proceedings of

the Third International ICAART Conference on Agents

and Artificial Intelligence, 251–256, Roma, Italy,

Scitepress.

Heudin, J.-C., 2015. A Bio-inspired Emotion Engine in the

Living Mona Lisa. In Proceedings of the ACM Virtual

Reality International Conference, Laval, France.

Langton, C.G., 1990. Computation at the Edge of Chaos:

Phase transitions and emergent computation. Physica

D: Nonlinear Phenomena, 42(1–3):12–37.

Leite, I., Pereira, A., Martinho, C., and Paiva, A.,

2008. Are Emotional Robots More Fun to Play With?

In Proceedings of 17th IEEE Robot and Human

Interactive Communication, 77–82, Munich,

Germany.

Linell, P., 1998. Approaching Dialogue: Talk, interaction

and contexts in dialogical perspectives, John

Benjamins Publishing Company, Amsterdam.

Lövheim, H., 2012. A new three-dimensional model for

emotions and monoamine neurotransmitters. Med

Hypotheses, 78:341–348.

Marcus, D., 2015. Introducing Facebook M.

https://www.facebook.com/Davemarcus/posts/10156070660595195, Menlo Park, CA.

Myers, K., Berry, P., Blythe, J., Conley, K., Gervasio, M.,

McGuinness, D., Morley, D., Pfeffer, A., Pollack, M.,

and Tambe, M., 2007. An Intelligent Personal

Assistant for Task and Time Management. AI

Magazine, 28(2):47–61.

McCrae, R.R., and John, O.P., 1992. An

introduction to the five factor model and its

applications. Journal of Personality, 60(2):171–215.

Mehrabian, A., 1996. Pleasure-arousal-dominance: A

general framework for describing and measuring

individual differences in temperament. Current

Psychology, 14(2):261–292.

Morris, W.N., and Schnurr, P.P., 1989. Mood: The Frame

of Mind. Springer-Verlag, New York.

Reeves, B., and Nass, C., 1996. The Media

Equation: How People Treat Computers, Televisions,

and New Media Like Real People and Places. CSLI

Publications, Stanford.

Salovey, P., and Mayer, J.D., 1990. Emotional

Intelligence. Imagination, Cognition, and Personality,

9:185–211.

Seger, L., 1990. Creating Unforgettable Characters.

Henry Holt, New York.

Tomkins, S.S., 1991. Affect Imagery Consciousness, vol.

I–IV, Springer, New York.

Weizenbaum, J., 1966. ELIZA - A Computer Program for

the Study of Natural Language Communication

Between Man and Machine. Communications of the

ACM, 9(1):36–45.

Wolfram, S., 1984. Universality and Complexity in

Cellular Automata. Physica D: Nonlinear

Phenomena, 10(1–2):1–35.
