Extending Cognitive Skill Classification of Common Verbs in the

Domain of Computer Science for Algorithms Knowledge Units

Fatema Nafa, Javed I. Khan and Salem Othman

Department of Computer Science, Kent State University, Kent, Ohio, U.S.A.

Keywords: Higher Order Thinking Skills, Knowledge Representation, Bloom Taxonomy, Learning Objectives.

Abstract: To give instructors adaptive guidance in designing an effective curriculum and its associated learning objectives, an automatic system needs a solid picture of the prerequisite cognitive skills that students have before they start a new knowledge unit, so that those skills can be strengthened and students can steadily acquire new ones. Obtaining the learning objectives of knowledge units based on cognitive skills is a tedious and time-consuming task. This paper presents subtasks of an automatic meta-learning recommended model that extracts learning objectives from knowledge units, which are teaching materials. Knowing the cognitive skills helps mentors close the knowledge gaps between learning materials and their aims. The model applies Natural Language Processing (NLP) techniques to identify relevant knowledge units and their verbs, which support the extraction of the learning objectives, and classifies the verbs by cognitive skill level. This work focuses on the computer science knowledge domain. We report results that evaluate and validate the model on three textbooks. The performance analysis shows the value of automatically extracting and classifying the verbs in knowledge units based on cognitive skills.

1 INTRODUCTION

Bloom’s Taxonomy is an important tool that is used

as a guideline for educators to develop teaching

materials, organize learning goals, and create

assessments to match a learning activity with the

learning objective (Thompson,2008) and

(Lister,2000). Benjamin Bloom proposed Bloom’s

taxonomy; this divides learning objectives into three

domains: cognitive, psychomotor, and affective

(Bloom,1956). The cognitive domain is related to the

knowledge and mental skills of a learner. It is the

most widely used domain and comprises six levels, ordered from simple to complex mental processing.

Bloom’s taxonomy was revised by Anderson (2001); the main change was the renaming and reordering of the levels, while the number of levels stayed the same. The revised cognitive domain’s levels from simplest to most complex are:

1) remembering, 2) understanding, 3) applying, 4)

analyzing, 5) evaluating, and 6) creating.

Bloom’s Taxonomy is a cognitive skills

classification that has been applied for different

educational purposes in many fields of study. In the

area of computer science, Bloom’s taxonomy was

used in course design, teaching methodology,

preparing materials, and measuring student responses

to learning (Doran,1995) and (Burgess,2005). The

ACM Computer Science Curriculum specifies

learning objectives based on the revised Bloom

Taxonomy (Cassel, 2008) and (Gluga, 2013).

There is a strong need to describe computer science

knowledge units regarding learning goals and the

level of mastery.

Building on already acquired skills helps to overcome a lack of understanding and to close the knowledge gaps between learning objectives and learning materials, and it supports effective teaching and learning. The instructor should also have a clear understanding of the learning objectives and learning materials in order to teach students how to master the new concepts in each knowledge unit, and the students' mental skill levels should increase as they progress from one knowledge unit to the next.

Generally, the Bloom taxonomic relationships are

extracted manually. Until now, extracting and

describing learning objectives were derived using the

verbs that connect concepts. In 1956, Bloom

suggested a classification of verbs, which are now

widely used as a “clue” to determine the relationship

Nafa, F., Khan, J. and Othman, S.

Extending Cognitive Skill Classification of Common Verbs in the Domain of Computer Science for Algorithms Knowledge Units.

DOI: 10.5220/0006376501730183

In Proceedings of the 9th International Conference on Computer Supported Education (CSEDU 2017) - Volume 1, pages 173-183

ISBN: 978-989-758-239-4

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


between concepts. In 2001, the verb list was extended and revised (Anderson, 2001). Bloom’s measurable verbs are indicative of cognitive skills (Nevid, 2013), but not all verbs are included in Bloom’s verb list. Unfortunately, for a fast-growing knowledge domain such as computer science, these lists do not contain many of the verbs used in the field. This raises the question of how to determine the Bloom relationship implied by verbs that are not on Bloom’s verb list.

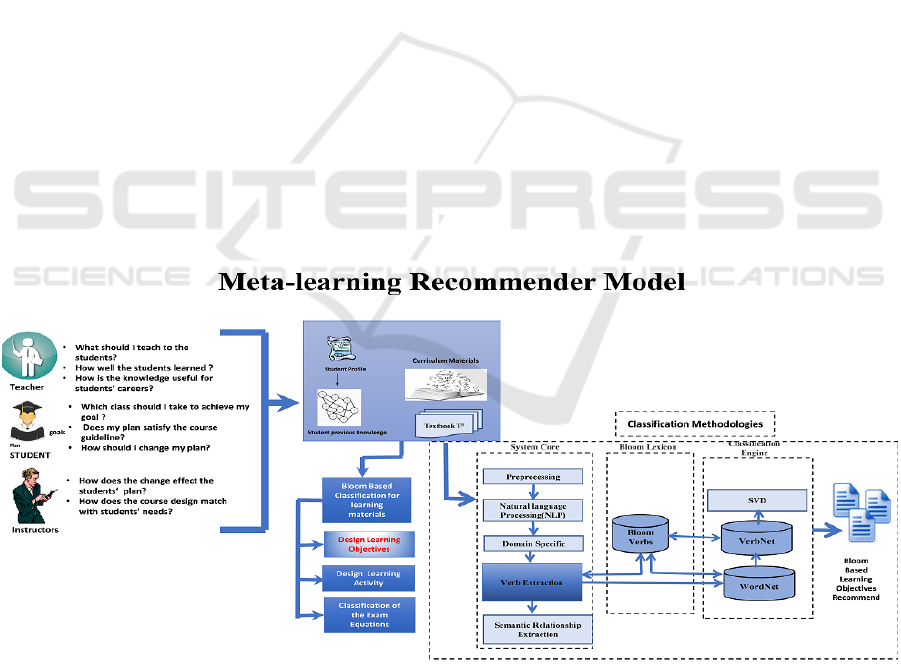

This paper presents the foundation of a meta-learning recommended model that extends the cognitive skill relations to those new knowledge domain verbs; Figure 1 illustrates the model. A subtask of the model classifies the verbs in the learning objectives into cognitive skill levels. For this task, not all verbs are equally important; we are primarily interested in domain-specific verbs, here those of computer science. The classification of a domain-specific verb is defined as a relationship between the concepts that are used in sentences with the given verb.

We investigate three techniques to extend the current classification of the listed verbs, with Bloom’s verb list as the baseline. The first method uses WordNet (WordNet, 2010) to look up verb synonyms. The second uses VerbNet to extend the classification further, based on the classes and class members of the verbs in the VerbNet database. Finally, verbs that are found neither through WordNet synonyms nor through VerbNet classes are classified with a third method, Singular Value Decomposition (SVD). The three methodologies are explained in Section 4.

The rest of the paper is organized as follows: the

literature review is presented in Section 2; the

problem definition is discussed in Section 3; Section

4 describes an overview of the meta-learning

recommended model and the classification

methodologies; Section 5 shows the experiment setup

and an evaluation of the methods, and Section 6

presents the conclusion, discussion, and the direction

for future work.

2 LITERATURE REVIEW

In the area of linguistics, verbs are central to the

syntactic structure and semantics of a sentence. Some

computational resources and classifications have

been developed for verbs. These resources can be

classified into these three types:

Syntactic Resources: Examples of these are multiple

dictionaries (Grishman et al., 1994) and ANLT

(Boguraev et al., 1987), which are manually

developed. An entry here will have verb forms and

subcategorization information.

Figure 1: Meta-Learning Recommender Model.

Syntactic Semantic Resources: Here verbs are grouped by properties such as shared meaning components and morpho-syntactic behaviour, as in Levin’s 1993 verb classification. VerbNet has since expanded this classification with new verbs and classes (Kipper-Schuler, 2005).

According to Palmer (2000), WordNet lacks generalization, and its level of sense distinction is too fine-grained for a computational lexicon.

CSEDU 2017 - 9th International Conference on Computer Supported Education


Semantic Resources: Examples of these include

FrameNet (Baker et al., 1998) and WordNet (Miller,

1995). FrameNet groups words according to

conceptual structures and their patterns of

combinations. The second example, WordNet, groups

words into synsets (synonym sets) and records

semantic relations between synsets. There is little

syntactic information present in these resources.

In the cognitive domain, there has been previous work on Bloom’s Taxonomy in the field of computer science for various purposes, such as managing course design (Machanick, May), measuring the cognitive difficulty levels of computer science materials (Lister, 2003), and structuring assessments (Oliver, 2004). Bloom’s Taxonomy has also been used as an alternative to grading on a curve (Hearst, 1992). Additionally, from the perspective of information mining, there has been interesting research on extracting relations among concepts. Such relations can be synonymy, hypernymy, association, and so on (Hearst, 1992; Ritter, 2009), and they have been used successfully in different domains and applications (Fürst, 2009).

Another line of related work is graphical representation, where a graph represents the relationships gathered from the extracted data. There has been research on graphical text representations such as concept graphs (Rajaraman, 2003) and ontologies (Navigli, 2003). The authors proposed Concept Graph Learning to present relations among concepts from prerequisite relations among courses.

Even though there is an extensive literature on verb classification, none of the presented techniques was developed to classify verbs by Bloom’s Taxonomy level. Benjamin Bloom and his colleagues provided verbs to help identify which action verbs align with each Bloom level when describing learning objectives (Starr, 2008). However, this list is only a subset and does not include all verbs. Computer science needs its own domain verbs to keep the description of learning objectives measurable and clear.

3 PROBLEM DEFINITION

In this section, we introduce some terms used in this

paper and define the problem.

Concept (C): The most important words in a text that describe a particular domain.

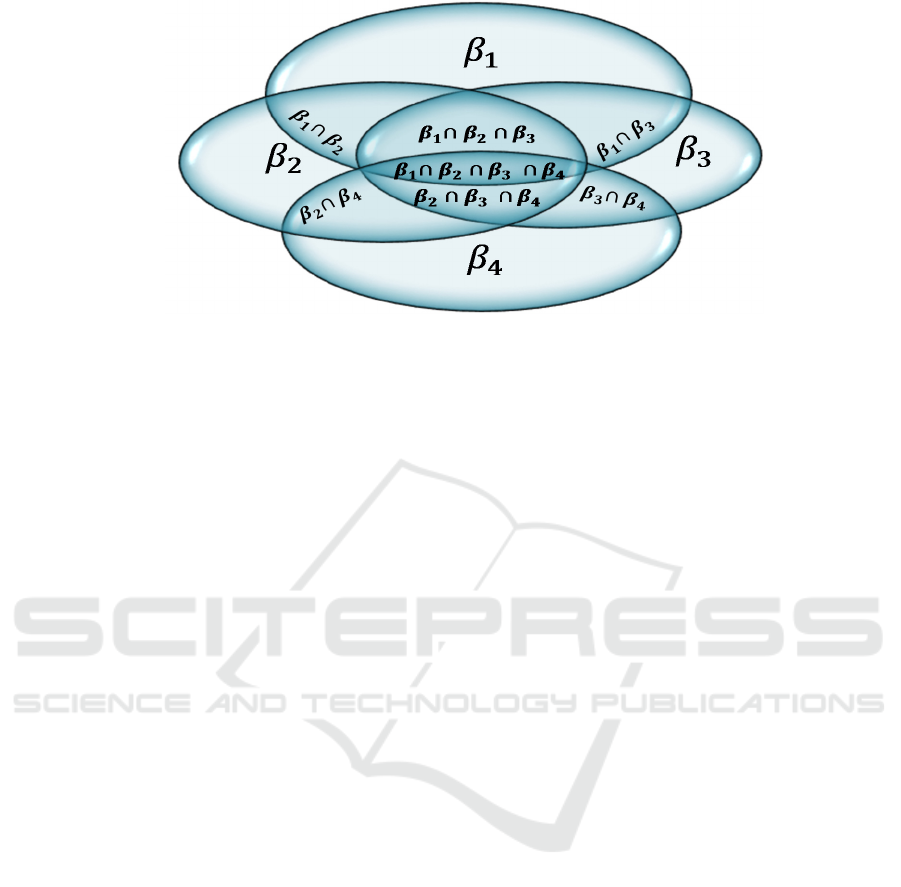

Cognitive domain verb (βi): According to Bloom’s theory, a learnable concept can be learned at multiple cognitive skill levels. Which prerequisite concept is needed to learn the target concept at a specific cognitive level depends on the verb connecting them. Thus, each verb can carry multiple cognitive skill level labels βi, where βi ∈ {β1, β2, β3, β4}.

Cognitive graph (Gc): A directed graph Gc = (C, CL), where the nodes represent concepts (c) and the edges represent cognitive levels (CL).

Computer-Science based Cognitive Domain (CSCD): A modification of the Bloom Taxonomy tool that is more useful to computer science learners than the existing generic ones (Nafa and Khan, 2015).

Semantic domain knowledge graph (Gk): A representation of the domain knowledge content in a text of the field. Each text has a set of domain concepts (C), and the sentences in the text describe the relationships between pairs of concepts. We label the nodes by their domain terminology, and we label the edges (E) by the principal verb connecting two concepts in a sentence.

Problem Definition: Given 1) a semantic domain knowledge graph Gk = (C, E), where the nodes represent concepts (C) and the edges (E) represent knowledge domain verbs (Vi), and 2) a subset of cognitive domain verbs Vi ⊂ βi, find a mapping function £: Vi → βi that maps domain knowledge verbs to their cognitive levels βi, so that each edge verb v ∈ V is assigned a particular relation type £(v) ∈ βi.

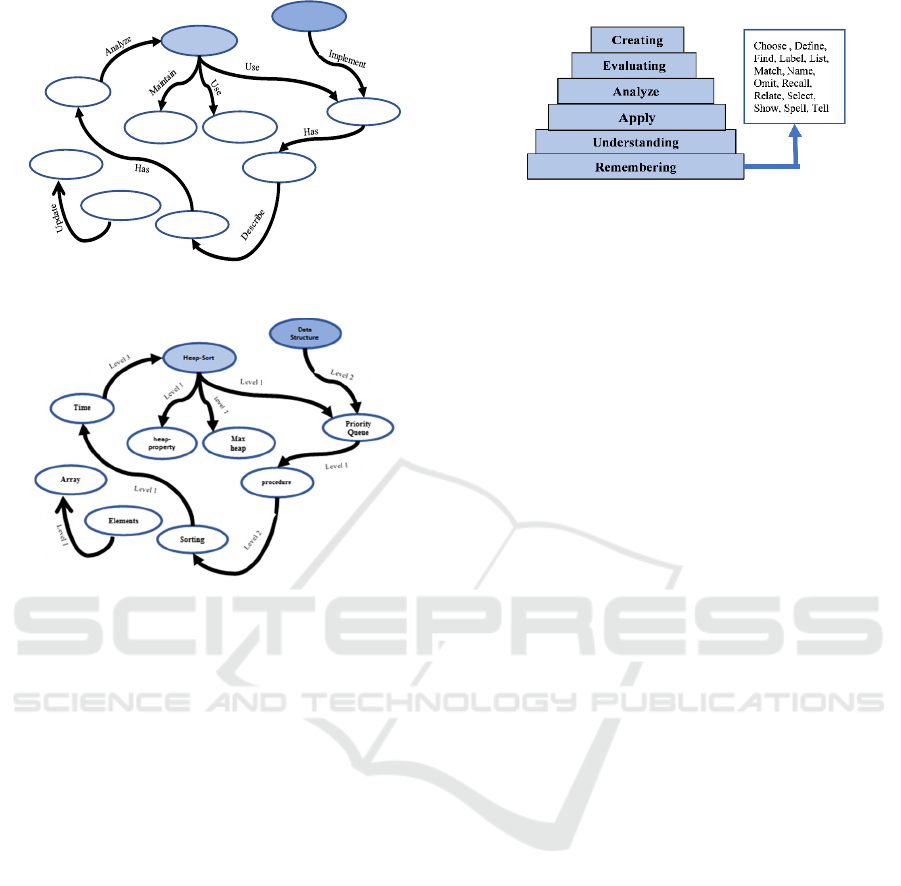

For example, suppose a knowledge unit is represented as a semantic graph Gk with ten nodes, the concepts C = {Heap-Sort, heap-property, time, priority-Queue, max-heap, procedure, sorting, array, Data Structure, elements}, and edges E = {Analyse, Describe, Has, Implement, Maintain, Update}. We need to map each domain knowledge verb Vi to its βi levels, which are used to describe the learning objectives required for mastering this knowledge unit at different cognitive levels. Figure 2.a shows the given semantic graph, and Figure 2.b illustrates the cognitive level required to master each group of concepts in the knowledge unit.
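The mapping in this example can be sketched in a few lines of Python. This is only an illustration of the problem statement: the edge triples, the level labels attached to the known verbs, and the helper name `map_verbs` are assumptions made for the sketch and are not taken from the figures.

```python
# Each edge of the semantic graph is (source concept, verb, target concept).
# The triples below are illustrative; the real edges come from the parsed text.
edges = [
    ("Heap-Sort", "Analyse", "time"),
    ("max-heap", "Has", "heap-property"),
    ("priority-Queue", "Implement", "max-heap"),
    ("procedure", "Maintain", "heap-property"),
]

# Hypothetical level labels for verbs that are assumed to be already known.
known_levels = {"analyse": ["β4"], "implement": ["β3"]}


def map_verbs(graph_edges, verb_levels):
    """Attach the known cognitive levels (or None) to every edge verb."""
    labelled = []
    for source, verb, target in graph_edges:
        labelled.append((source, verb, target, verb_levels.get(verb.lower())))
    return labelled


labelled = map_verbs(edges, known_levels)

# Verbs without a label are the ones the three methodologies have to classify.
unlabelled_verbs = sorted({verb for _, verb, _, levels in labelled if levels is None})
print(unlabelled_verbs)
```

Keeping the labels as lists reflects the definition above, in which one verb may carry more than one cognitive level.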


Figure 2.a: The example of our problem.

Figure 2.b: The example of our problem.

4 META-LEARNING RECOMMENDED MODEL (PHASE 2)

This subtask of the model extracts learning objectives based on cognitive skills; the level of the verb is used to describe the obtained learning objectives. Bloom’s Taxonomy provides a ready-made structure and a list of action verbs, which are vital to writing learning objectives. All the verbs are action verbs, since learning objectives are concerned with what students can do after mastering a specific knowledge unit. As an example, the action verbs used to assess the remembering level are listed in Figure 3.

Figure 3: Example of Bloom Action Verb List

Remembering Level.

To run the linguistic analysis of the knowledge units in the textbook, we use the Stanford CoreNLP library. This step was already performed in the first phase of our model, but more features are added here to analyze the verbs in the knowledge units. We analyzed the sentence structures produced for the verbs. The most common correction needed to obtain accurate results concerned incorrect POS tags; we also noticed stemming errors, and sometimes a verb is mistagged as a noun. These incorrect POS tags, which cause incorrect parse structures, were corrected manually. We also removed all auxiliary verbs by checking each verb against a list of auxiliary verbs and their derivatives. To classify the remaining verbs, we use the three proposed methodologies, WordNet, VerbNet, and Singular Value Decomposition (SVD), which are explained in detail in the following subsections.

4.1 WordNet (WN) Methodology

WordNet is a lexical database of English developed at Princeton University (WordNet, 2010). It groups words (nouns, verbs, adjectives, and adverbs) into sets of synonyms called synsets, which help identify the meaning of the words, and it interlinks the synsets through a variety of relations. The relations available in WordNet are as follows:

Nouns (Synonym ~ antonym, Hypernym ~ hyponym, Coordinate, and Holonym ~ meronym);

Verbs (Synonym ~ antonym, Hypernym ~ troponym, Entailment, and Coordinate);

Adjectives/Adverbs, in addition to the above relations (Related nouns, Verb participles, and Derivational information).

In this research, we are interested only in the synonym relation between verbs. The synonymy relation is at the base of the structure of WordNet. WordNet-like taxonomies behave in some ways as a dictionary and in others as an ontology. To avoid confusion, we use WordNet in this research as


a dictionary for verb synonym relations. Around 3,600 verb senses are included in WordNet.

As the first method for finding the level of domain-specific verbs in Bloom’s Taxonomy, we mapped all domain-specific verbs to their verb synonyms in the WordNet database. WordNet has certain restrictions: it does not cover domain-specific words, nor does it include the forms of irregular verbs. Because of this limited coverage, the method classifies some of the verbs but not the others.

Figure 4 presents the algorithm used in the WordNet methodology. The input to the algorithm consists of Bloom’s verb list and a domain-specific verb list. The algorithm starts by reading the domain-specific verb list and comparing the verbs in both lists, which separates the verbs that are in Bloom’s list from those that are not. It then processes the list of unknown verbs by looking up each verb’s synonyms in the WordNet database; when a synonym appears in Bloom’s list, the verb is added to the known verbs with the corresponding Bloom level. The algorithm returns a new verb list with Bloom’s classification and another list of verbs whose synonyms yield no Bloom level; the latter is saved in a text file as verbs unknown in Bloom’s taxonomy. WordNet suffers from gaps in its verb coverage, so some of the verbs will not be found in the WordNet database. Finally, the verbs whose classification is still undetermined are passed to a second classification step, the VerbNet methodology, which is explained in detail in the next section.

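    # WordNet step, written as Python with NLTK. Assumed file formats:
    # 'Verbs.txt' holds one verb per line; 'Bloom-Verb.txt' holds "verb level" per line.
    from nltk.corpus import wordnet as wn

    def load_bloom_verbs(path='Bloom-Verb.txt'):
        """Map each Bloom verb to the list of levels it is listed under."""
        bloom_verb_dic = {}
        with open(path) as bloom_file:
            for line in bloom_file:
                parts = line.split()
                if len(parts) >= 2:
                    bloom_verb_dic.setdefault(parts[0].lower(), []).append(parts[1])
        return bloom_verb_dic

    def wordnet_synonym(verb):
        """Return the WordNet verb synonyms of `verb`, excluding the verb itself."""
        wn_verb = verb.replace(' ', '_')
        syns = []
        for synset in wn.synsets(wn_verb, pos=wn.VERB):
            for lemma in synset.lemmas():
                name = lemma.name().replace('_', ' ')
                if name != verb and name not in syns:
                    syns.append(name)
        return syns

    def get_most_frequent(levels):
        """Return the level that occurs most often in `levels`."""
        return max(set(levels), key=levels.count)

    def give_bloom_level(cs_verb_synonyms, bloom_verb_dic):
        """Return the most frequent Bloom level among the synonyms, or None."""
        levels = []
        for synonym in cs_verb_synonyms:
            levels.extend(bloom_verb_dic.get(synonym, []))
        return get_most_frequent(levels) if levels else None

    bloom_verb_dic = load_bloom_verbs()
    known, unknown = {}, []
    with open('Verbs.txt') as verb_file:            # reading the domain verb list
        for line in verb_file:
            verb = line.strip().lower()
            if not verb:
                continue
            if verb in bloom_verb_dic:              # verb is already in Bloom's list
                known[verb] = get_most_frequent(bloom_verb_dic[verb])
                continue
            level = give_bloom_level(wordnet_synonym(verb), bloom_verb_dic)
            if level is None:
                unknown.append(verb)                # left for the VerbNet step
            else:
                known[verb] = level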

Figure 4: WordNet Algorithm.

4.2 VerbNet (VN) Methodology

VerbNet is a large online repository for the classification of English verbs. It includes syntactic and semantic information for classes of English verbs derived from Levin’s classification, as explained in the related work in Section 2, and it is an updated and more detailed version of that original classification. The VerbNet classification considers two main properties: the lexical meaning of a verb and the kinds of argument alternations that can be observed in sentences with the verb. The classification is verb-sense based and covers 5,200 verb senses. It is partially hierarchical, with 237 top-level classes and only three more levels of subdivision (Kipper-Schuler, 2005).

The VerbNet database contains information about the correspondence between the categories of verbs and lexical entries in other resources. Each verb class in VerbNet includes a set of members, thematic roles for the predicate-argument structure of these members, selectional restrictions on the arguments, and frames consisting of a syntactic description and semantic predicates with a temporal function. New subclasses are added to the original Levin classes to achieve syntactic and semantic coherence among members.


VerbNet includes over 5,000 verb senses. It is a rich verb classification database that is easy to access programmatically. However, it is less helpful for processing texts in specific domains, where verb senses only partly overlap with those in general language use. It has been used in NLP applications such as semantic role labeling (Swier and Stevenson, 2004) and word sense disambiguation (Dang, 2004).

Figure 5 shows the algorithm used for the VerbNet methodology. Its input is the list of verbs left unclassified by the previous methodology, WordNet. For each verb that is unknown in Bloom’s list, the algorithm retrieves the verb’s class from the VerbNet database; if the class matches a verb in Bloom’s list, the verb is added to the known verbs with the corresponding level. If the class yields nothing for the verb, the algorithm uses the members of the class: it checks whether any member verb is in Bloom’s list and, if so, returns that verb’s level.

If the verb is matched neither through a class nor through class members in the VerbNet database, it is saved in a text file as a verb unknown in Bloom’s taxonomy. VerbNet also has gaps in its verb coverage, so some of the verbs will not be found in the VerbNet database. Finally, the verbs whose classification is still not found are passed to a third classification step, the Singular Value Decomposition (SVD) method, which is explained in detail in the next section.

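    # VerbNet step, written as Python with NLTK. Assumed file formats:
    # 'Verbs.txt' holds the verbs left unclassified by WordNet, one per line;
    # 'Bloom-Verb.txt' holds "verb level" per line.
    from nltk.corpus import verbnet

    def load_bloom_verbs(path='Bloom-Verb.txt'):
        """Map each Bloom verb to the list of levels it is listed under."""
        bloom_verb_dic = {}
        with open(path) as bloom_file:
            for line in bloom_file:
                parts = line.split()
                if len(parts) >= 2:
                    bloom_verb_dic.setdefault(parts[0].lower(), []).append(parts[1])
        return bloom_verb_dic

    def get_most_frequent(levels):
        """Return the level that occurs most often in `levels`."""
        return max(set(levels), key=levels.count)

    def verbnet_candidates(verb):
        """Return (class names, class members) that VerbNet lists for `verb`."""
        class_names, members = [], []
        for class_id in verbnet.classids(verb):       # e.g. 'put-9.1'
            class_names.append(class_id.split('-')[0])
            members.extend(verbnet.lemmas(class_id))  # verbs in the same class
        return class_names, [m for m in members if m != verb]

    def give_bloom_level(candidates, bloom_verb_dic):
        """Return the most frequent Bloom level among the candidates, or None."""
        levels = []
        for candidate in candidates:
            levels.extend(bloom_verb_dic.get(candidate, []))
        return get_most_frequent(levels) if levels else None

    bloom_verb_dic = load_bloom_verbs()
    known, unknown = {}, []
    with open('Verbs.txt') as verb_file:              # reading the verb list
        for line in verb_file:
            verb = line.strip().lower()
            if not verb:
                continue
            class_names, members = verbnet_candidates(verb)
            # First try the verb class, then fall back to the class members.
            level = (give_bloom_level(class_names, bloom_verb_dic)
                     or give_bloom_level(members, bloom_verb_dic))
            if level is None:
                unknown.append(verb)                  # left for the SVD step
            else:
                known[verb] = level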

Figure 5: VerbNet Algorithm.

4.3 Singular Value Decomposition (SVD) Methodology

In this part, verbs are classified based on Latent Semantic Analysis (LSA). LSA is a theory and method for extracting and representing the usage meaning of domain concepts by statistical computations (Landauer et al., 1998). The process is divided into two tasks: calculating the SVD, which splits the matrix A into three matrices, and finding the verb level in Bloom’s Taxonomy. Applying SVD to the matrix A breaks down each dimension of the matrix using equation 1. The details of this methodology were published in (Nafa, 2015).

$$A = U \Sigma V^{T} \qquad (1)$$
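The text defers the details of this step to (Nafa, 2015), so the following is only a minimal sketch of how an SVD-based classification could look. It assumes a verb-by-context count matrix and a nearest-neighbour rule in the reduced space; the matrix values, the level names, and the function `classify_by_svd` are hypothetical and are not taken from the paper.

```python
import numpy as np


def classify_by_svd(A, verbs, known_levels, k=2):
    """Label unknown verbs with the level of their closest known verb.

    A is a (verbs x contexts) count matrix; closeness is cosine similarity
    between verb vectors in the rank-k space obtained from A = U S V^T.
    """
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    reduced = U[:, :k] * s[:k]                      # verb vectors in k dimensions
    norms = np.linalg.norm(reduced, axis=1, keepdims=True)
    unit = reduced / np.where(norms == 0, 1.0, norms)

    known_idx = [i for i, verb in enumerate(verbs) if verb in known_levels]
    result = {}
    for i, verb in enumerate(verbs):
        if verb in known_levels:
            continue
        similarities = unit[known_idx] @ unit[i]    # cosine to each known verb
        best = known_idx[int(np.argmax(similarities))]
        result[verb] = known_levels[verbs[best]]
    return result


# Toy data: rows are verbs, columns are hypothetical context concepts.
verbs = ["describe", "explain", "implement", "build"]
A = np.array([
    [2.0, 1.0, 0.0, 0.0],
    [1.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 3.0, 1.0],
    [0.0, 0.0, 1.0, 3.0],
])
known_levels = {"describe": "understanding", "implement": "applying"}
predicted = classify_by_svd(A, verbs, known_levels)
print(predicted)
```

In this toy matrix the two verb groups share no contexts, so each unknown verb ends up next to the known verb of its own group. On real data the choice of k and of the similarity rule would need tuning.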

4.4 Example to Explain the Methodologies

Let the given knowledge unit include ten high-level concepts C = {Heap-Sort, heap-property, time, priority-Queue, max-heap, procedure, sorting, array, Data Structure, elements}. Finding the cognitive level of each verb makes it possible to describe the learning objectives required for mastering this knowledge unit at different cognitive levels.

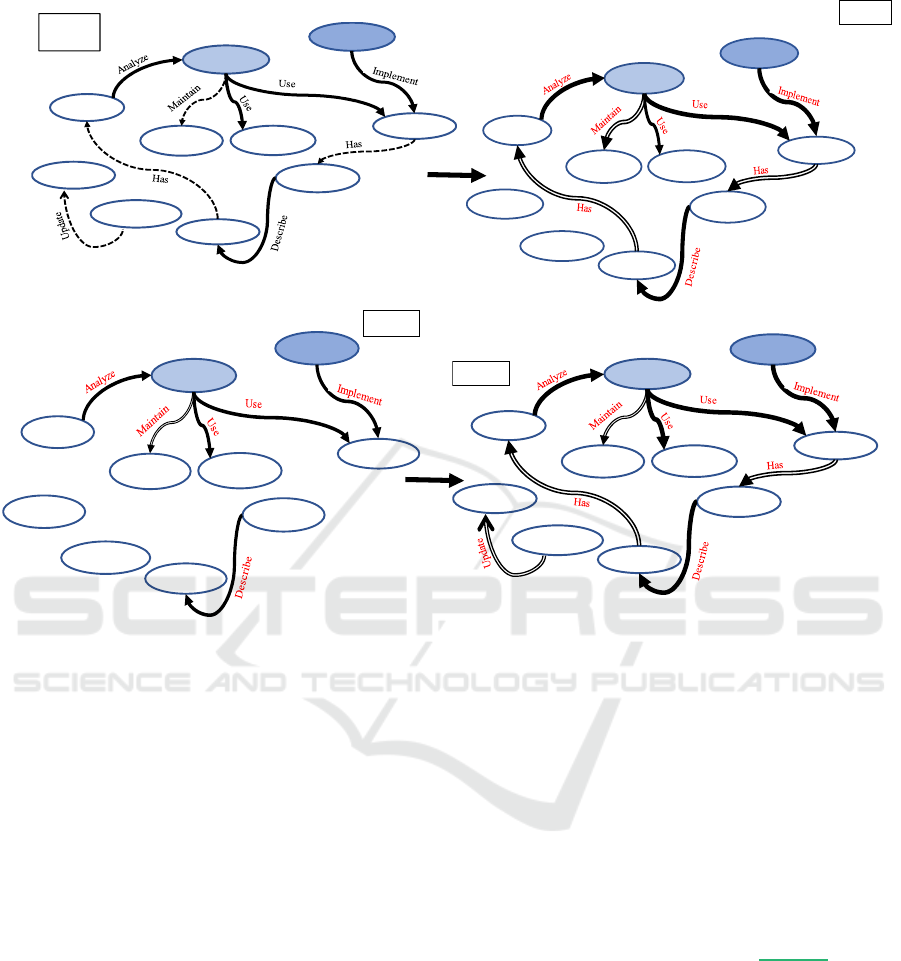

Figure 6 shows an example of a text graph in which the concepts extracted as learning objectives are used to describe the knowledge unit with instances of the high-level concepts.

In Figure 6.A, the cognitive level is known from Bloom’s original list for only four verbs, which appear as black lines in the graph. In Figure 6.B, the first methodology, WordNet, classifies one more verb into its cognitive level; this verb appears with a double line in the graph. In Figure 6.C, the second methodology, VerbNet, classifies two more verbs into their cognitive levels; these verbs appear with a double line. In Figure 6.D, the third methodology, SVD, classifies the rest of the verbs into their cognitive levels; these verbs also appear with a double line.


Figure 6: Example of verb Classification Methodologies.

After all verbs have been classified into their cognitive levels and the high-level concepts have become candidate learning objectives for this knowledge unit, the teacher asks which cognitive level the students need in order to master the knowledge unit. Based on that, we query the graph and answer the question with a subgraph that describes the learning objectives for the knowledge unit.

The task of a learning objective extractor is to automatically identify the set of high-level concepts in the textbook that best describes a knowledge unit. Figure 6 illustrates three different levels of learning objectives for mastering one knowledge unit, the Heapsort algorithm. As Figure 6 shows, to understand the Heapsort algorithm, students must first learn the concepts in subgraph A, which belong to the lowest skill levels, remembering and understanding, because most of these concepts are common but essential for understanding the knowledge unit.
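The graph query described above can be sketched as a filter over labelled edges. This is a minimal illustration under stated assumptions: the edge list, the level assigned to each verb, and the function `query_by_level` are hypothetical and do not reproduce the content of Figure 6.

```python
# Each labelled edge is (source concept, verb, target concept, cognitive level).
# Both the triples and the levels are made up for illustration.
labelled_edges = [
    ("Heap-Sort", "Describe", "sorting", "understanding"),
    ("array", "Has", "elements", "remembering"),
    ("priority-Queue", "Implement", "max-heap", "applying"),
    ("Heap-Sort", "Analyse", "time", "analyzing"),
]


def query_by_level(edges, wanted_levels):
    """Return the concepts and edges of the subgraph at the requested levels."""
    sub_edges = [edge for edge in edges if edge[3] in wanted_levels]
    concepts = sorted({c for source, _, target, _ in sub_edges for c in (source, target)})
    return concepts, sub_edges


# A teacher asking for the lowest levels gets the prerequisite concepts back.
concepts, sub_edges = query_by_level(labelled_edges, {"remembering", "understanding"})
print(concepts)
```

The returned concepts are the candidate learning objectives for the requested levels, and the returned edges supply the verbs that describe them.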

5 EXPERIMENT RESULT AND EVALUATION

5.1 Experiment Result

For this paper, we test the methodologies on three high-quality textbooks that are used as course materials in computer science classes. This gives three text corpora: “Introduction to Algorithms,” “Data Structures and Algorithms,” and “Algorithms.” These textbooks are also used at other universities. The experimental results and evaluation show that the proposed task of our model is effective in classifying verbs by cognitive level of learning. Table 1 shows the statistical information for the three textbooks.


Table 1: Physical Characteristics of the Textbooks.

In this paper, our focus is only to classify the

extracted computer science action verbs based on

CSCD levels. As a subtask of the meta-learning

model, the verbs are used to describe the learning

objectives.

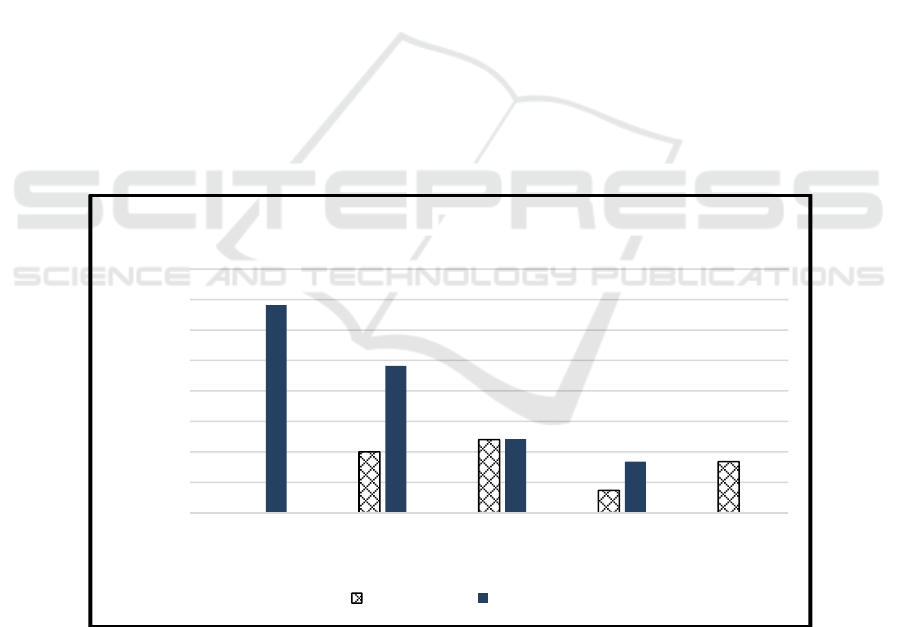

Figure 8 illustrates the percentages of the verbs found in the textbook, 341 verbs in total. In the first scan against the original Bloom list, 100 verbs were found in Bloom levels and 241 were classified as unknown in Bloom’s list. Of these 241, the WordNet synonym methodology classified 120, leaving 121 unknown verbs. VerbNet then assigned Bloom levels to 37 of the 121, leaving 84 unknown verbs. Finally, the remaining 84 verbs were classified as Bloom’s verbs using the SVD methodology.
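The cascade of counts reported above can be checked with a short calculation. The counts are the ones given in the text; the variable names and the rounding to one decimal place are choices made for this sketch.

```python
total_verbs = 341

# (method, number of verbs classified at that stage), in the order applied
stages = [("Bloom list", 100), ("WordNet", 120), ("VerbNet", 37), ("SVD", 84)]

remaining = total_verbs
report = []
for method, classified in stages:
    remaining -= classified                      # verbs still unknown afterwards
    share = round(100.0 * classified / total_verbs, 1)
    report.append((method, classified, remaining, share))

for method, classified, still_unknown, share in report:
    print(f"{method}: {classified} verbs ({share}% of all), {still_unknown} still unknown")
```

Each stage only sees the verbs left over by the previous one, which is why the unknown counts fall from 241 to 121 to 84 and finally to zero.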

As Figure 9 shows in more detail, roughly equal percentages of the verbs fall in Bloom level 2, Bloom level 3, and Bloom level 4, while the proportion of verbs in Bloom level 1 is the highest. This suggests that the textbook mainly addresses the low Bloom cognitive levels, which matches its undergraduate audience, so the learning objectives for this book will be prerequisites for advanced algorithms courses. At the same time, the higher Bloom cognitive levels are represented about equally in the textbook.

5.2 Evaluation Measures

As an evaluation step, we take human judgment as the gold standard, as is usual for linguistic analysis. We used a statistical measure to estimate the agreement among the human classifications of the verbs as well as the agreement between the automatic verb classification and the “gold standard.” There are different measures of agreement; we applied Cronbach’s alpha, a measure used in inter-rater agreement studies.

Cronbach’s alpha (α) is one of the most common measures of internal consistency and is calculated using equation 2. Cronbach’s alpha is normally a value between 0 and 1; the closer the value is to 1, the higher the reliability. Acceptable values of alpha range from 0.70 to 0.95 (Mohsen and Reg, 2011). Cronbach’s alpha for our data was 0.70.

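$$\alpha = \frac{N}{N-1}\left(1 - \frac{\sum_{i=1}^{N} \sigma_{Y_i}^{2}}{\sigma_{X}^{2}}\right) \qquad (2)$$

where N is the number of items, $\sigma_{Y_i}^{2}$ refers to the variance associated with item i, and $\sigma_{X}^{2}$ refers to the variance associated with the observed total scores.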
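A minimal sketch of the alpha calculation, assuming the ratings are arranged as one row per rated verb and one column per item (here, per rater). The rating values are invented for illustration and are not the study's data.

```python
import numpy as np


def cronbach_alpha(scores):
    """Cronbach's alpha for a (cases x items) table of scores."""
    scores = np.asarray(scores, dtype=float)
    n_items = scores.shape[1]
    item_variances = scores.var(axis=0, ddof=1)       # variance of each item
    total_variance = scores.sum(axis=1).var(ddof=1)   # variance of the total scores
    return (n_items / (n_items - 1)) * (1.0 - item_variances.sum() / total_variance)


# Three raters who assign identical levels to four verbs: perfect consistency.
consistent = [[1, 1, 1], [2, 2, 2], [3, 3, 3], [4, 4, 4]]
alpha_consistent = cronbach_alpha(consistent)

# Raters who sometimes disagree give a lower alpha.
mixed = [[1, 2, 1], [2, 2, 3], [3, 4, 3], [4, 3, 4]]
alpha_mixed = cronbach_alpha(mixed)
print(alpha_consistent, alpha_mixed)
```

The sample variance (`ddof=1`) is used for both the item variances and the total-score variance, so the ratio in the formula is consistent.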

The results indicate that human judges share intuitions about the analysis. The verbs classified by the methodologies were given to native English speakers, who are Master’s students in English, and they were asked whether they agreed or disagreed with the automatic classification of each verb. Apart from this cognitive validation of our analysis, the majority agreed that the verb classification could be used as a baseline classification for describing learning objectives in computer science.

6 CONCLUSIONS AND DISCUSSION

In this paper, we described and discussed the use of Bloom’s Taxonomy in the field of computer science. We presented automatic methodologies for classifying verbs according to CSCD levels. The methodologies are a subtask of our previous work (Nafa, 2015) and (Nafa, 2016). Classifying verbs based on CSCD levels is a novel and challenging problem.

The classification methodologies make use of the cognitive domain in computer science. Not all the verbs found in the corpus are equally important in the process of extracting the learning objectives; the most informative are the action verbs. These verbs are classified automatically by the proposed methodologies, with the short verb list suggested by Bloom as a baseline. The methodologies are also able to recover verbs that are relatively infrequent or specialized and thus unlikely to be captured manually by an expert.

In the analysis of the three textbooks, we started with the first textbook, “Introduction to Algorithms,” and used it as a knowledge base for the other textbooks, covering four levels of CSCD. We also examined the intersection between the verbs in the four levels; one verb can have more than one level, depending on its semantic meaning and the semantic function

| | Book1 | Book2 | Book3 |
| --- | --- | --- | --- |
| Number of knowledge units | 120 | 60 | 30 |
| Number of extracted relationships | 8500 | 8200 | 3000 |
| Number of concepts | 1060 | 1020 | 950 |
| Number of verbs | 341 | 480 | 300 |


Figure 7: Verbs Intersection between Cognitive Classes.

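that the verb is used for. The relationship between the sets of verbs at the four levels can be written as:

$$|\beta_1 \cup \beta_2 \cup \beta_3 \cup \beta_4| = \sum_{i} |\beta_i| - \sum_{i<j} |\beta_i \cap \beta_j| + \sum_{i<j<k} |\beta_i \cap \beta_j \cap \beta_k| - |\beta_1 \cap \beta_2 \cap \beta_3 \cap \beta_4|$$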

Figure 7 shows the verbs intersection between

cognitive classes.
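The overlap between the four verb sets can be checked with the inclusion-exclusion principle. The sketch below uses small invented verb sets, since the actual members of each CSCD level are not listed in the text; the function name is also an assumption.

```python
from itertools import combinations


def union_size_by_inclusion_exclusion(sets):
    """Size of the union, computed from the sizes of all intersections."""
    total = 0
    for r in range(1, len(sets) + 1):
        sign = 1 if r % 2 == 1 else -1            # add odd-sized groups, subtract even
        for group in combinations(sets, r):
            total += sign * len(set.intersection(*group))
    return total


# Hypothetical verb sets for the four levels; some verbs sit in several levels.
beta1 = {"define", "list", "describe"}
beta2 = {"describe", "explain"}
beta3 = {"implement", "use", "explain"}
beta4 = {"analyse", "describe"}
levels = [beta1, beta2, beta3, beta4]

distinct_verbs = union_size_by_inclusion_exclusion(levels)
print(distinct_verbs, len(beta1 | beta2 | beta3 | beta4))
```

Comparing the result with the size of the direct union is a quick sanity check that verbs shared between levels are counted only once.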

The results show that the classification of verbs overlaps between CSCD levels; one verb can be in more than one level, depending on its function as a cognitive verb. This adds a different flavor to describing the learning objectives. Based on our analytical results, we conclude that CSCD levels can be used to decide which verbs to use at which level so as to match the learner’s skills, which helps in writing the learning objectives.

Through our experiments, we identified several promising lines for future research. First, we plan to present our model as a complete online tool that includes a feedback section for experts, based on their background in the learning materials. Second, we plan to carry out larger-scale experiments to generalize the model across different domains such as physics and math.

REFERENCES

Anderson, L. W., Krathwohl, D. R., & Bloom, B. S. (2001).

A taxonomy for learning, teaching, and assessing: A

revision of Bloom's taxonomy of educational

objectives. Allyn & Bacon.

Baker, R. L., Mwamachi, D. M., Audho, J. O., Aduda, E.

O., & Thorpe, W. (1998). The resistance of Galla and

Small East African goats in the sub-humid tropics to

gastrointestinal nematode infections and the peri-

parturient rise in fecal egg counts. Veterinary

Parasitology, 79(1), 53-64.

Bloom, B. S. (1956). Taxonomy of educational objectives:

The classification of educational goals.

Boguraev, B., Carter, D., & Briscoe, T. (1987, April). A

multi-purpose interface to an on-line dictionary. In

Proceedings of the third conference on European

chapter of the Association for Computational

Linguistics (pp. 63-69). Association for Computational

Linguistics.

Burgess, G. A. (2005). Introduction to programming:

blooming in America. Journal of Computing Sciences

in Colleges, 21(1), 19-28.

Cassel, L., Clements, A., Davies, G., Guzdial, M.,

McCauley, R., McGettrick, A., ... & Weide, B. W.

(2008). Computer science curriculum 2008: An interim

revision of CS 2001.

Doran, M. V., & Langan, D. D. (1995, March). A

cognitive-based approach to introductory computer

science courses: lesson learned. In ACM SIGCSE

Bulletin (Vol. 27, No. 1, pp. 218-222). ACM.

Fürst, F., & Trichet, F. (2005, October). Axiom-based

ontology matching. In Proceedings of the 3rd

international conference on Knowledge capture (pp.

195-196). ACM.

Gluga, R., Kay, J., Lister, R., Charleston, M., Harland, J.,

& Teague, D. (2013, January). A conceptual model for

reflecting on expected learning vs. demonstrated

student performance. In Proceedings of the Fifteenth

Australasian Computing Education Conference-

Volume 136 (pp. 77-86). Australian Computer Society,

Inc.

Grishman, R., Macleod, C., & Meyers, A. (1994, August).

COMLEX syntax: Building a computational lexicon. In

Proceedings of the 15th conference on Computational

Linguistics-Volume 1 (pp. 268-272). Association for

Computational Linguistics.

Hearst, M. A. (1992, August). Automatic acquisition of

hyponyms from large text corpora. In Proceedings of

the 14th conference on Computational Linguistics-

Volume 2 (pp. 539-545). Association for

Computational Linguistics.

Lister, R. (2000, December). On blooming first year

programming, and its blooming assessment. In

Proceedings of the Australasian conference on

Computing education (pp. 158-162). ACM.

Lister, R., & Leaney, J. (2003). Introductory programming,

criterion-referencing, and bloom. ACM SIGCSE

Bulletin, 35(1), 143-147.

Machanick, P. (2000, May). The experience of applying

Bloom’s Taxonomy in three courses. In Proc. Southern

African Computer Lecturers’ Association Conference

(pp. 135-144).

Nafa, F., & Khan, J. I. (2015). Conceptualize the domain
knowledge space in the light of cognitive skills. In
Proceedings of the 7th International Conference on
Computer Supported Education.

Navigli, R., Velardi, P., & Gangemi, A. (2003). Ontology

learning and its application to automated terminology

translation. IEEE Intelligent systems, 18(1), 22-31.

Nevid, J. S., & McClelland, N. (2013). Using action verbs

as learning outcomes: applying Bloom’s taxonomy in

measuring instructional objectives in introductory

psychology. Journal of Education and Training Studies,

1(2), 19-24.

Oliver, D., Dobele, T., Greber, M., & Roberts, T. (2004,

January). This course has a Bloom Rating of 3.9. In

Proceedings of the Sixth Australasian Conference on

Computing Education-Volume 30 (pp. 227-231).

Australian Computer Society, Inc.

Princeton University "About WordNet." WordNet.

Princeton University. 2010.

http://wordnet.princeton.edu.

Rajaraman, K., & Tan, A. H. (2003, November). Mining

semantic networks for knowledge discovery. In Data

Mining, 2003. ICDM 2003. Third IEEE International

Conference on (pp. 633-636).

Ritter, A., Soderland, S., & Etzioni, O. (2009). What is
this, anyway: Automatic hypernym discovery. In AAAI
Spring Symposium: Learning by Reading and Learning
to Read (pp. 88-93).

Schuler, K. K. (2005). VerbNet: A broad-coverage,

comprehensive verb lexicon.

Starr, C. W., Manaris, B., & Stalvey, R. H. (2008). Bloom's
taxonomy revisited: specifying assessable learning
objectives in computer science. ACM SIGCSE
Bulletin, 40(1), 261-265.

Thompson, E., Luxton-Reilly, A., Whalley, J. L., Hu, M.,

& Robbins, P. (2008, January). Bloom's taxonomy for

CS assessment. In Proceedings of the tenth conference

on Australasian computing education-Volume 78 (pp.

155-161). Australian Computer Society, Inc.

Tavakol, M., & Dennick, R. (2011). Making sense of
Cronbach's alpha. International Journal of Medical
Education, 2, 53-55.

Landauer, T. K., Foltz, P. W., & Laham, D. (1998). An

introduction to latent semantic analysis. Discourse

processes, 25(2-3), 259-284.

Figure 8: Verb Classification Methodologies for a textbook (Introduction to Algorithms).
[Bar chart. x-axis: Verb Classification Methodologies (CS-Verbs, Bloom (Baseline), WordNet, VerbNet, SVD); y-axis: Percentage of Verbs (0 to 400); series: Bloom Verbs, CS Verbs; data labels: 100, 120, 37, 84, 341, 241, 121, 84, 0.]

Figure 9: All verbs classified based on Bloom levels for the textbook (Introduction to Algorithms).
[Bar chart. x-axis: Bloom Levels (BloomLevel, BloomLevel2, BloomLevel3, BloomLevel4); y-axis: Percentage of Verbs (logarithmic scale, 1 to 1000); series: All Verbs; data labels: 147, 57, 50, 55.]
