Home Furniture Detection by Geometric Characterization by

Autonomous Service Robots

Oscar Alonso-Ramirez

1

, Antonio Marin-Hernandez

1

, Homero V. Rios-Figueroa

1

and Michel Devy

2

1

Artificial Intelligence Research Center, Universidad Veracruzana, Sebasti

´

an Camacho No. 5, Xalapa, Ver., 91000, Mexico

2

CNRS, LAAS, Universit

´

e de Toulouse, 7 avenue du Colonel Roche, F-31077 Toulouse Cedex, France

Keywords:

Service Robots, Furniture Detection.

Abstract:

Service robots are nowadays more and more common on diverse environments. In order to provide useful

services, robots must not only identify different objects but also understand their use and be able to extract

characteristics that make useful an object. In this work, a framework is presented for recognize home furniture

by analyzing geometrical features over point clouds. A fast and efficient method for horizontal and vertical

planes detection is presented, based on the histograms of 3D points acquired from a Kinect like sensor onboard

the robot. Horizontal planes are recovered according to height distribution on 2D histograms, while vertical

planes with a similar approach over a projection on the floor (3D histograms). Characteristics of points belong-

ing to a given plane are extracted in order to match with planes from furniture pieces in a database. Proposed

approach has been proved and validated in home like environments with a mobile robotic platform.

1 INTRODUCTION

When someone thinks of a fully functional service

robot, it is very common to imagine a robot perform-

ing different tasks the same way humans do. Al-

though many advances has been achieved from di-

verse groups around the world, e.g. in the fields of

mobile robot localization, path planning and human-

robot interaction; cognitive representation of environ-

ments is still a challenge. For a robot to understand

that a chair has a surface to sit down, surface that it is

closely linked with the property of being a chair, but

that in some cases could be used to place objects, it is

an abstraction very difficult to do for a robot.

Many works for detecting objects do not consider

characteristics of high level that allow to understand

the use or the properties of an object. Dealing with

such a problem, in this paper it is proposed to ana-

lyze furniture pieces by extracting some of the prin-

cipal characteristics of them, we focus particularly on

pieces of furniture that can be moved or relocated by

humans or the same robot while doing his tasks, e.g.

cleaning beside or under a couch or bed. Furniture

fixed to the environment as wardrobes or cabinets that

do not move, are out of the scope of this work.

Along this work it is proposed to model and iden-

tify objects by its geometrical properties, i.e. horizon-

tal or vertical planes, supports (legs), etc. By model-

ing individually each part of the furniture the robot

could infer in the future, that a flat surface like the

main plane of a table has the same characteristics as

the horizontal plane of the chest of drawers and then

suppose that is possible to pose objects on it.

This paper is organized as follow, on next sec-

tion (2) some of the most important related works are

described. Section 3 refers to planes characteristics

extractions for furniture model representation and on

section 4 those characteristics are used to identify ob-

jects in a data base. On section 5 are shown some ex-

periments and finally on section 6 are given the con-

clusions and future work.

2 RELATED WORK

In the recent years, a lot of works for detection and

recognition of objects have been developed, com-

monly working with 3D information, which gives

to the robots more information but also increase the

processing required. In order to keep this process-

ing from becoming untreatable, new techniques have

been created.

There are different ways to represent the 3D data

of the objects, choosing the better representation will

depend on the application. In (Nießner et al., 2013), a

508

Alonso-Ramirez, O., Marin-Hernandez, A., Rios-Figueroa, H. and Devy, M.

Home Furniture Detection by Geometric Characterization by Autonomous Service Robots.

DOI: 10.5220/0006478405080513

In Proceedings of the 14th International Conference on Informatics in Control, Automation and Robotics (ICINCO 2017) - Volume 2, pages 508-513

ISBN: Not Available

Copyright © 2017 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

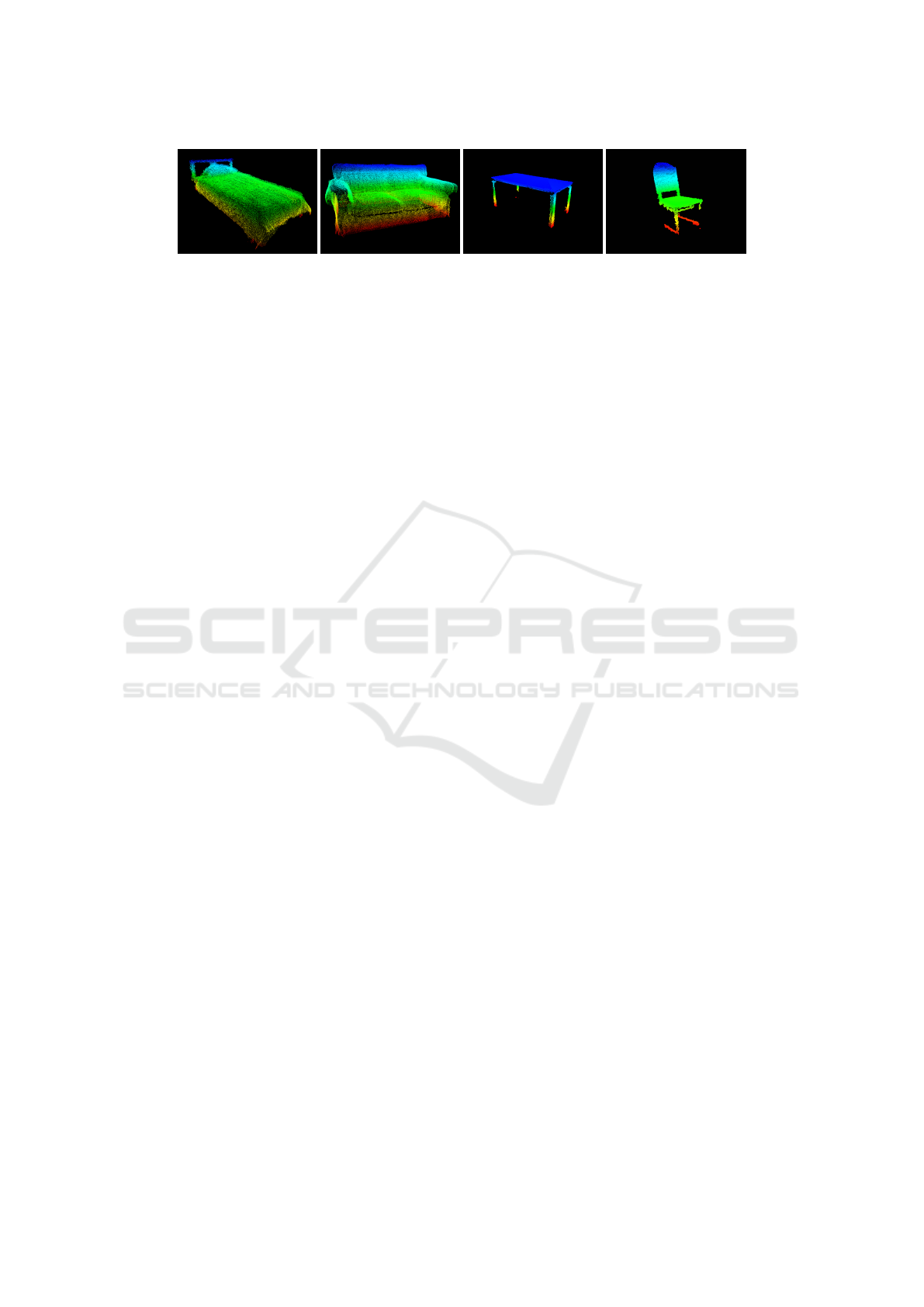

(a) (b) (c) (d)

Figure 1: Examples of point cloud models: (a) bed, (b) couch, (c) table and (d) a chair.

simple spatial hashing scheme is presented for large

and fine scale reconstruction. This helps for more

efficient object reconstruction and real time updates.

In (Wu et al., 2015) is proposed to represent the 3D

shape of objects as a probability distribution of bi-

nary variables on a voxel grid using a Convolutional

Deep belief Network. They are able to recognize and

reconstruct objects based on their own dataset.

In (Wahl et al., 2003) is introduced a four-

dimensional feature invariant to translation and rota-

tion that captures the intrinsic geometrical relations

between pairs of oriented surface points. In (Drost

et al., 2010) is proposed an off-line global model de-

scription based on oriented point pair features. For

the recognition phase they match the object and obtain

its pose based on a voting scheme. This method, re-

ported in (Salas-Moreno et al., 2013) for an object ori-

ented SLAM technique, has shown good results when

the objects occupy most of the field of view, but fails

when the objects are distant or partially occluded.

Most of these techniques are able to capture small

details on the objects and in general they work better

for small objects, when the pieces of furniture of a

home are analyzed, it is necessary to focus on bigger

features like planes.

As stated in (Swadzba and Wachsmuth, 2014), it

is reasonable to represent a 3D scene as a collection

of planes since a typical indoor environment mostly

consist of planar surfaces. In (Trevor et al., 2012)

they also highlighting the importance of the planar

surfaces as landmarks.

In (G

¨

unther et al., 2013) the furniture is repre-

sented as a set of planar structures that “have a cer-

tain size, orientation, height above ground and spatial

relation to each other”. A faster alternative to plane

segmentation was presented in (Holz et al., 2012), us-

ing integral images and taking advantage of the struc-

tured point cloud from RGB-D cameras. In (Alonso-

Ramirez et al., 2015) was presented an approach to a

fast horizontal planes detection also based on struc-

tured point clouds.

In (G

¨

unther et al., 2017) is presented a classifica-

tion technique based on semantic models from furni-

ture objects based on their planar surface.

Taking advantage of statistical properties of the

3D world and the contextual relationships between

the objects in the world, (Lin et al., 2013) present a

technique for object detection and scene understand-

ing. Based on (Carreira and Sminchisescu, 2012) to

find object regions and later classify them. By us-

ing a conditional random field (CRF) model they inte-

grate appearance, geometry, object relations with the

environment and with other objects and they avoid

some of the problems with feature-based approaches

like pose variation, object occlusion or illumination

changes.

These works highlight how plane detection com-

bined with semantic information can provide a good

representation of the environments for the robots. The

proposed approach characterize the planes of the fur-

niture and extract some characteristic information to

classify them, information that could be used in the

future for semantics.

3 OBTAINING AND

CHARACTERIZING THE PCD

MODELS FOR THE

FURNITURE

To be able to identify the furniture, the robot requires

to previously model each one of the pieces of furni-

ture in the environment. At this stage of the research,

a specific model for each piece of furniture has been

created and not a general model, however this could

be incorporated later.

To obtain the models, there are two ways; to get

the model from the internet or to construct the model

ourselves. Our approach requires for the model to be

a point cloud. It is possible to extract one from the

online models (depending on its format) but is very

likely some extracted points correspond from surfaces

not visible for the robot, for example the lower side of

a tables plane. In order to have a model similar to the

point clouds the robot will see, we decided to con-

struct the models ourselves from several point clouds

from different views of the furniture.

Home Furniture Detection by Geometric Characterization by Autonomous Service Robots

509

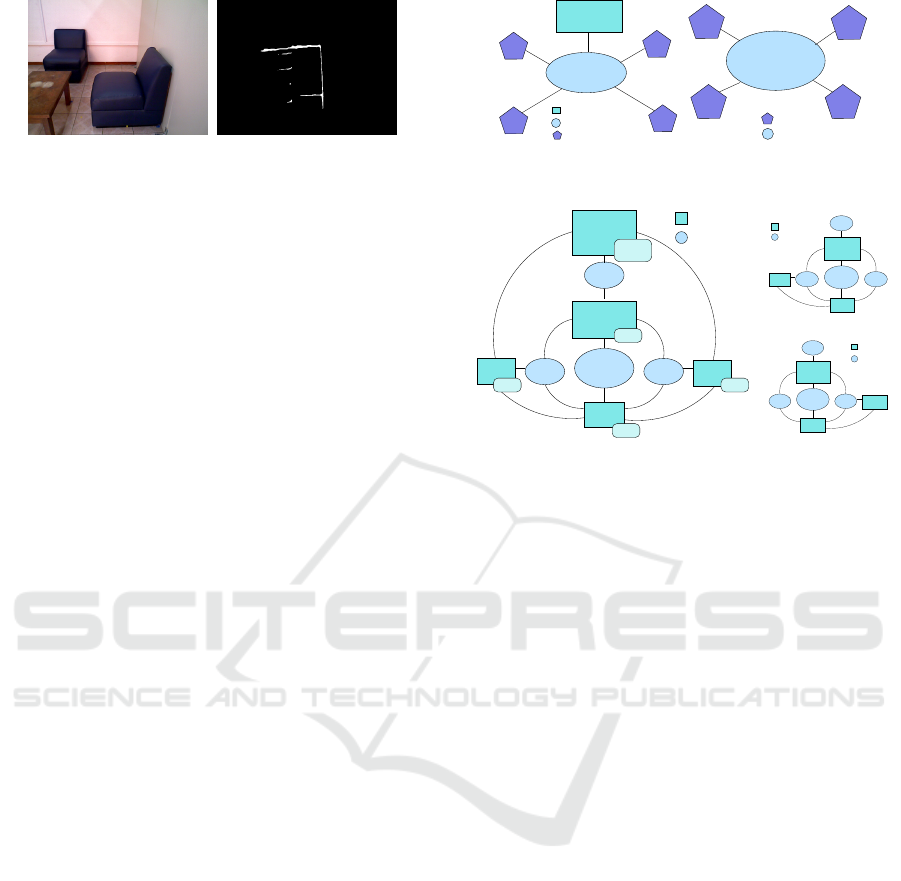

(a) (b)

Figure 2: In (a) an indoor scene and in (b) its height his-

togram.

3.1 Creating a PCD Model

We took several images around each piece of furni-

ture. Since the robot was localized in the environ-

ment, all those images were in the same reference

frame. Small errors in alignment are corrected with an

ICP algorithm. Then, the different views were merged

and down-sampled to obtain the point cloud model of

the furniture. The figure 1 shows some examples of

the PCD models obtained, the point cloud color rep-

resents the z value of each point.

In order to characterize the obtained models, it is

necessary to extract the planes from the PCD. Most

of the methods for plane extraction use computa-

tionally expensive variations of RANSAC-like algo-

rithms. However, a quick analysis of the distribution

of the points can be enough.

Using an RGB-D camera mounted on a PTU in the

robot, point clouds can be extracted and they can be

transformed from the camera reference frame to the

robot’s base frame or to the world reference frame.

Under these circumstances, detecting horizontal and

vertical planes on the point clouds can be simplified

by analyzing the concentration of the points, avoiding

a complex mathematical approach.

3.2 Characterizing the horizontal

planes

To extract the horizontal planes, the approach pre-

sented in (Alonso-Ramirez et al., 2015) was followed.

The first step is to construct a height histogram.

Since the point cloud is transformed to the robot’s

base coordinate frame, a horizontal plane will con-

centrate a considerable amount of points at the same

hight. In order to extract the planes from the his-

togram we need to search for the higher peaks.

The figure 2 shows an example of a height his-

togram, the figure 2(a) shows the RGB data of the

point cloud that is being analyzed and the figure 2(b)

shows the height histogram. It can be observed how

there are two outstanding peaks on the histogram,

which correspond to the height of the table and the

(a) (b)

Figure 3: Examples of height histograms for (a) a chest of

drawers and (b) a couch.

height of the seats of the chairs.

To find the outstanding peaks, first the curve of

the histogram is reduced with the DouglasPeucker al-

gorithm (a curve simplification algorithm) to reduce

the noise in the signal by eliminating small variations

without increasing the bin size. Then a search is per-

formed to find the larger peaks in the curve. All the

points within those peaks are retrieved and projected

to a floor plane. Then, a clustering algorithm is per-

formed on the projection in order to separate different

planes that can exist at the same high for example the

seats of different chairs.

The Figure 3, shows the characteristic curves for

some models of pieces of furniture. However this in-

formation is not enough for the robot to classify them

since the histograms can change significantly during

the execution due to noise and partial views. In or-

der to characterize each plane detected the following

characteristics are extracted.

center The 3D point center of the plane points

orientation Defining an horizontal or vertical plane

height The average height of the points.

height deviation The standard deviation of the

point’s height.

area Area of the plane projected

PCA eigenvectors and eigenvalues Eigenvectors

and eigenvalues resulting of a principal compo-

nent analysis (PCA) to the plane points

This information allow to perform a better analy-

sis of the planes, which leads to a better classification.

The horizontal planes of the furniture have different

height, area, and some adjust better to a mathematical

plane than others, for example the points from a table

have a smaller height deviation than the points on the

horizontal plane of a bed.

However, more information needs to be extracted

from the point clouds apart from the horizontal

planes, to complete the furniture models. In a differ-

ent way as the presented on (Alonso-Ramirez et al.,

2015), along this work the color or normal histograms

are not used.

ICINCO 2017 - 14th International Conference on Informatics in Control, Automation and Robotics

510

(a) (b)

Figure 4: In (a) indoor scene and (b) its vertical projection.

3.3 Characterizing the Vertical Planes

For detecting the vertical planes, the same approach

for the horizontal planes can be extended. A two di-

mensions histogram will be constructed, projecting

all 3D points to a floor plane and then counting the

number of points that lie at the same coordinates on

the projection. All the points on a vertical plane pro-

jected to the floor plane will produce a line on the

projection. Therefore retrieving all the points in those

lines will extract the vertical planes.

In order to make the lines in the projection more

remarkable, a smoothing filter has been applied and

then morphological operators of dilation and erosion.

Figure 4 shows an example of a vertical projection

from a particular scene where it can be seen the walls

project the bigger lines, smaller lines for the side, the

front and the back of the couches and small dots for

the table legs.

Since the lines in the projection are not always

straight and their width can be variable, the Hough

algorithm did not provide good results. To extract the

lines a clustering algorithm is performed, the clusters

obtained are approximated to polygons for reducing

its number of points. A convex hull is calculated to

determinate, based on its size, if the cluster is one sin-

gle line or it has two o more lines together, for ex-

ample two walls joining in a corner or a piece of fur-

niture placed against the wall. If that is the case, the

lines are separated on the joints. The points belonging

to the planes are retrieved the same way as they were

retrieved in the horizontal planes.

The vertical planes or regions detected will be

considered as secondary characteristics to the hori-

zontal regions. They will bring new information and

together with the horizontal planes will complete the

model of the furniture. The same characteristics cal-

culated for the horizontal planes are calculated for the

verticals. In this case, based on the PCA eigenvectors

and eigenvalues it can be make a subdivision to the

vertical planes and identify the legs of some furniture

like tables and chairs.

Seat

Vertical

Backrest

Leg

Leg

Leg

Leg

Horizontal

Leg

(a)

table

leg

leg

leg

leg

Horizontal

Leg

(b)

Figure 5: Graph for (a) a chair and (b) a table.

armrest armrest

seat

backrest

top

front

side

rear

Vertical

Horizontal

side

backrest

front

rear

side

rear

side

(a)

Vertical

Horizontal

armrest armrest

seat

backrest

top

front

side

(b)

Vertical

Horizontal

armrest armrest

seat

backrest

top

front

side

(c)

Figure 6: In (a) a complete couch graph and in (b) the left

and in (c) the right visual sub-graphs.

3.4 Furniture Characterization

Once the models were created and their planes were

separated and characterized, a graph is constructed.

Each plane will be a node and the arcs will represent

adjacency between two planes. The principal node

will be the horizontal plane of the furniture or the

biggest horizontal plane in cases where there are more

than one. In Figure 5 are shown some examples of the

graphs of the furniture’s model. The node’s shape rep-

resent it’s type, the ellipses nodes correspond to hori-

zontal planes, the rectangles to vertical planes and the

pentagons are the furniture supports (legs).

These graphs contain all the planes of each piece

of furniture. However, when we analyze an image

from a scene the robot is unable to see all the planes

since they can be on opposite sides. In the figure

6(a) is shown the graph for the couch. In the lower

right corner of each node there is a list of the opposite

planes, for example, if the robot sees the rear plane,

depending on the angle maybe he can see the seat, but

he can not see the backrest or the front plane.

Based on these viewing restrictions sub-graphs are

created, each one showing the visible planes from the

piece of furniture from different point of views. In

the figures 6(b) and 6(c) there are sub-graphs from

the couch viewed from the front left side and from the

front right side respectively.

Home Furniture Detection by Geometric Characterization by Autonomous Service Robots

511

4 SCENE ANALYSIS

Once a furniture’s models database has been con-

structed and the robot is wandering in the environ-

ment, it is required to identify the furniture present in

the scene. Once the robot takes a point cloud to an-

alyze, the first step is to eliminate the floor by filter-

ing all the points corresponding to a height smaller to

10cm. And then, as described in the previous section,

the horizontal and vertical planes are extracted.

The characteristics of the horizontal planes are

compared with the main horizontal planes of the mod-

els. The probability of a plane to belong to each type

of furniture is based on the equation (1).

p

plane

(x|h,σ,a) =

1

2

ph +

1

4

pσ +

1

4

pa (1)

where h, σ and a represent the heigh, the height de-

viation and the area of the plane. The probability is

the weighted sum of the similarity of the attributes to

the model’s as described in the equations (2), (3) and

(4). To classify the plane, all the categories with the

probability higher than certain threshold are chosen.

ph = 1 − |h

m

− h

x

|/h

range

(2)

pσ = 1 − |σ

m

− σ

x

|/σ

m

(3)

pa = 1 − |a

m

− a

x

|/a

m

(4)

Once the horizontal plane has been classified as

belonging to a furniture, a search is performed and the

planes which are close to it are combined on a graph.

This graph from the scene will be compared with the

sub-graphs of the probable models.

First, it is necessary to match the nodes from the

scene graph to the model’s graph. The node for the

main horizontal plane is automatically matched, a

search of configurations is performed to match the

rest of the nodes based on its characteristics and its

position with respect to the main plane.

Once all the nodes from the scene graph are

matched to the nodes of the model graph, their simi-

larity is calculated in a similar way as the probability

for the horizontal planes. Then, a weighted sum of the

similarities of all the nodes, is performed. The weight

for each pair of nodes will be the percentage of node

from the total area of the model sub-graph.

p

ob ject

=

N

∑

i

w

i

p

plane

(x) (5)

5 RESULTS IN FURNITURE

CLASSIFICATION

The figure 7 shows some of the results obtained. The

images on the left side are the RGB of the point cloud

analyzed, the images in the center show the planes ob-

tained and the images on the right show the resulting

graphs and their classification.

The figure 7(a) shows a scene where two pieces

of furniture can be found. It can be observed that

two horizontal planes were detected. For the plane

labelled as “H00” (which correspond to a coffee ta-

ble) there were two plane probabilities higher than the

threshold of 0.6, the higher probability for the coffee

table and a smaller one for the couch. The results can

be observed on the table 1. In this case, no adjacent

planes were found, so the constructed graph will con-

sist only on the main node. Once it is compared to the

graphs for the coffee table and the couch, it is con-

firmed a higher probability for the former and then it

is labelled.

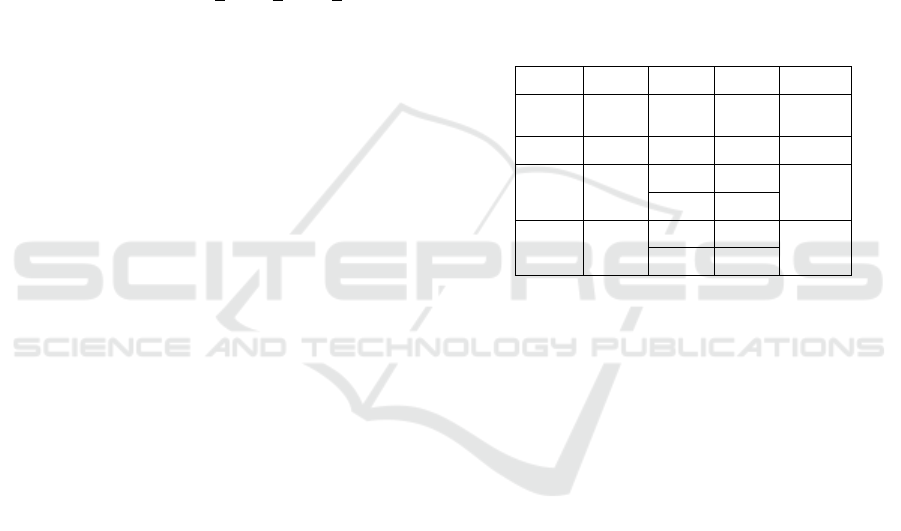

Table 1: Example for graph classification.

Main Node Node

Prob

Adjacent

Nodes

Node

Prob

Graph

Probability

H00

Coffee Ta-

ble

none – 0.5517

0.8238

H00

Couch none – 0.1344

0.6903

H01

Couch V01 backrest 0.6241

0.9375 0.7703

V02 front

0.7960

H01

Bed V01 no match 0.5895

0.7651 –

V02 front

0.6496

For the second plane “H01”, corresponding to a

couch, there were also two plane probabilities higher

than the threshold, and there were two adjacent planes

found. The results can be observed in table 1 were the

higher object probability correspond to the couch. In

figure 7(b) and 7(c) other results for different types of

furniture can be found.

6 CONCLUSION

Along this work, an approach for home furniture de-

tection, has been presented. The proposed approach

is based on the characterization of geometric entities

of the diverse pieces of furniture. As described, the

main characteristic for these furniture pieces is its hor-

izontal planes but also vertical planes and legs are ex-

tracted. A neighbourhood graph is then constructed in

order to identify the correct piece of furniture present

in the scene. The approach has been validated and

proven their effectiveness on a home like environment

with a robot moving in it. In future work, the charac-

terization are going to be used to let the robot to infer

about similar properties of geometric entities, e.g. the

horizontal planes.

ICINCO 2017 - 14th International Conference on Informatics in Control, Automation and Robotics

512

Coffe Table

H00

Wall

V03

V00

H01

V01

V02

Couch

Horizontal

Vertical

Leg

(a)

V02

Vertical

Horizontal

V00

H00

V01

V03

Drawer

(b)

Table

Chair

V03

V00

V01

V02

H01

H00

Leg

Horizontal

Vertical

Wall Wall

(c)

Figure 7: Results for the furniture detection.

REFERENCES

Alonso-Ramirez, O., Aguas-Garcia, Y., Marin-Hernandez,

A., Rios-Figueroa, H. V., and Devy, M. (2015). An

efficient alternative approach for home furniture de-

tection and localization by an autonomous mobile

robot. In 2015 IEEE International Autumn Meeting on

Power, Electronics and Computing (ROPEC), pages

1–6.

Carreira, J. and Sminchisescu, C. (2012). Cpmc: Auto-

matic object segmentation using constrained paramet-

ric min-cuts. IEEE Transactions on Pattern Analysis

and Machine Intelligence, 34(7):1312–1328.

Drost, B., Ulrich, M., Navab, N., and Ilic, S. (2010). Model

globally, match locally: Efficient and robust 3d object

recognition. In IEEE CVPR’10, pages 998–1005.

G

¨

unther, M., Wiemann, T., Albrecht, S., and Hertzberg, J.

(2013). Building semantic object maps from sparse

and noisy 3d data. In 2013 IEEE/RSJ International

Conference on Intelligent Robots and Systems, pages

2228–2233.

G

¨

unther, M., Wiemann, T., Albrecht, S., and Hertzberg, J.

(2017). Model-based furniture recognition for build-

ing semantic object maps. Artificial Intelligence,

247:336 – 351. Special Issue on {AI} and Robotics.

Holz, D., Holzer, S., Rusu, R. B., and Behnke, S. (2012).

Real-Time Plane Segmentation Using RGB-D Cam-

eras, pages 306–317. Springer Berlin Heidelberg,

Berlin, Heidelberg.

Lin, D., Fidler, S., and Urtasun, R. (2013). Holistic scene

understanding for 3d object detection with rgbd cam-

eras. In IEEE ICCV’13, pages 1417–1424.

Nießner, M., Zollh

¨

ofer, M., Izadi, S., and Stamminger,

M. (2013). Real-time 3d reconstruction at scale us-

ing voxel hashing. ACM Trans. Graph., 32(6):169:1–

169:11.

Salas-Moreno, R. F., Newcombe, R. A., Strasdat, H., Kelly,

P. H. J., and Davison, A. J. (2013). Slam++: Simulta-

neous localisation and mapping at the level of objects.

In IEEE CVPR’13, pages 1352–1359.

Swadzba, A. and Wachsmuth, S. (2014). A detailed anal-

ysis of a new 3d spatial feature vector for indoor

scene classification. Robotics and Autonomous Sys-

tems, 62(5):646 – 662. Special Issue Semantic Per-

ception, Mapping and Exploration.

Trevor, A. J. B., Rogers, J. G., and Christensen, H. I. (2012).

Planar surface slam with 3d and 2d sensors. In IEEE

ICRA’12, pages 3041–3048.

Wahl, E., Hillenbrand, U., and Hirzinger, G. (2003). Surflet-

pair-relation histograms: a statistical 3d-shape repre-

sentation for rapid classification. In Fourth Interna-

tional Conference on 3-D Digital Imaging and Mod-

eling, 2003. Proceedings., pages 474–481.

Wu, Z., Song, S., Khosla, A., Yu, F., Zhang, L., Tang, X.,

and Xiao, J. (2015). 3d shapenets: A deep representa-

tion for volumetric shapes. In IEEE CVPR’15, pages

1912–1920.

Home Furniture Detection by Geometric Characterization by Autonomous Service Robots

513