Evaluating Accuracy and Usability of Microsoft Kinect Sensors and Wearable Sensor for Tele Knee Rehabilitation after Knee Operation

MReza Naeemabadi¹,*, Birthe Dinesen¹, Ole Kæseler Andersen², Samira Najafi³ and John Hansen⁴,⁵

¹ Laboratory of Welfare Technologies - Telehealth and Telerehabilitation, Integrative Neuroscience Research Group, SMI®, Department of Health Science and Technology, Faculty of Medicine, Aalborg University, Denmark

² Integrative Neuroscience Research Group, SMI®, Department of Health Science and Technology, Faculty of Medicine, Aalborg University, Denmark

³ Clinical Engineering Department, Vice-Chancellor in Treatment Affairs, Mashhad University of Medical Science, Iran

⁴ Laboratory for Cardio-Technology, Medical Informatics Group, Department of Health Science and Technology, Faculty of Medicine, Aalborg University, Denmark

⁵ Laboratory of Welfare Technologies - Telehealth and Telerehabilitation, SMI®, Department of Health Science and Technology, Faculty of Medicine, Aalborg University, Denmark

Keywords: Microsoft Kinect, IMU, Accelerometer, Inertial Measurement Units, Knee Angle, Knee Rehabilitation, Wearable Sensors.

Abstract: Microsoft Kinect sensors and wearable sensors are considered low-cost, portable alternatives to advanced marker-based motion capture systems for tracking human physical activity. These sensors are widely used in several clinical applications. Many studies have evaluated the accuracy, reliability, and usability of Microsoft Kinect sensors for tracking static body postures, gait, and other daily activities. This study aimed to assess and compare the accuracy and usability of both generations of Microsoft Kinect sensors and of wearable sensors for tracking daily knee rehabilitation exercises. To this end, several common knee rehabilitation exercises were performed, and the knee angle was estimated as the outcome. The results indicated that only the second-generation Microsoft Kinect sensor and the wearable sensors had acceptable accuracy: the average root mean square errors for accelerometers, inertial measurement units, and Microsoft Kinect v2 were 2.09°, 3.11°, and 4.93°, respectively. Neither generation of Microsoft Kinect sensor was able to track joint positions while the subject was lying in a bed. This limitation may call into question the usability of Microsoft Kinect sensors for knee rehabilitation applications.

1 INTRODUCTION

Telerehabilitation after knee surgery is recognized as one of the well-known types of telemedicine, and this service is now widely provided around the world. Previous studies reported that low-bandwidth audio/video communication improved remotely delivered rehabilitation programs after total knee replacement (Tousignant et al., 2009; Russell et al., 2011; Tousignant et al., 2011).

Motion capture systems are used alongside subjective rehabilitation tests to evaluate improvements in patients' physical performance. Although marker-based motion capture systems are highly precise and regarded as the gold standard, they have several limitations. First, they are considerably expensive. Moreover, data acquisition is relatively complex, because several markers must be attached to the subject's body and the system must be calibrated. Finally, marker-based motion capture systems are not portable and require a large space.

Consequently, marker-based motion capture systems are not a suitable candidate for tracking human activity during daily rehabilitation sessions.

Wearable sensors and Microsoft Kinect sensors are the most common systems employed as low-cost, portable, and less complex alternatives for human motion tracking.

Several studies have used Microsoft Kinect sensors as marker-less physical activity trackers in physical rehabilitation programs (Pedraza-Hueso et al., 2015; Vernadakis et al., 2014; Lange et al., 2012; Pavão et al., 2014). Wearable sensors have also been employed as human motion capture systems for tracking patients' performance during telerehabilitation programs (Piqueras et al., 2013; Tseng et al., 2009).

Naeemabadi, M., Dinesen, B., Andersen, O., Najafi, S. and Hansen, J.

Evaluating Accuracy and Usability of Microsoft Kinect Sensors and Wearable Sensor for Tele Knee Rehabilitation after Knee Operation.

DOI: 10.5220/0006578201280135

In Proceedings of the 11th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2018) - Volume 1: BIODEVICES, pages 128-135

ISBN: 978-989-758-277-6

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In 2010, Microsoft introduced an RGB-Depth camera as part of the Microsoft Xbox 360, called Microsoft Kinect Xbox 360 (or Kinect v1) (Schröder et al., 2011). Using the depth images and an embedded skeleton-tracking algorithm, Microsoft Kinect SDK v1.8 provides the 3D positions of 20 joints for each detected body (Microsoft, 2013).

The second generation of Microsoft Kinect was released together with the Microsoft Xbox One and is known as Microsoft Kinect One (or Kinect v2). Several improvements were made to the sensor in Kinect v2 (Sell and O'Connor, 2014). Microsoft Kinect SDK 2.0 was developed for Kinect v2 and enables developers to record the estimated positions of 25 joints for each detected skeleton (Microsoft, 2014).

Consequently, several studies have used gold-standard marker-based motion capture systems to assess the accuracy and reliability of Microsoft Kinect sensors for static postures (Xu and McGorry, 2015; Darby et al., 2016; Clark et al., 2012), gait (Mentiplay et al., 2015; Auvinet et al., 2017; Eltoukhy et al., 2017), body sway (Yeung et al., 2014), and joint angles in specific physical activities (Anton et al., 2016; Woolford, 2015; Huber et al., 2015).

Wearable sensors such as accelerometers, gyroscopes, and inertial measurement units (IMUs) are also used to track physical activity. Bao and Intille (2004) used five biaxial accelerometers to classify 20 activities with 84% accuracy, while Karantonis et al. (2006) classified 12 physical tasks using a triaxial accelerometer with 90.8% accuracy. Joint and limb orientation can be estimated using accelerometer and gyroscope sensors (Hyde et al., 2008; Roetenberg et al., 2013), but one rotational degree of freedom remains ambiguous in 3D space. The accuracy of human body orientation estimation has been improved by using IMU sensors (Ahmed and Tahir, 2017; Lin and Kulić, 2012).

In this paper, the accuracy and usability of both generations of Microsoft Kinect sensors (Kinect v1 and Kinect v2), IMUs, and accelerometers were investigated for seven common knee rehabilitation exercises, using a gold-standard marker-based motion capture system as the reference. The main aim of this study was to evaluate Microsoft Kinect sensors and wearable sensors for knee rehabilitation applications.

2 METHODS

2.1 Data Collection

In this study, seven recording series were performed to assess the accuracy and usability of the above-mentioned human activity tracking systems simultaneously while the subject performed common knee rehabilitation exercises. Eight Qualisys Oqus 300/310 cameras were pointed at the area of interest. Recordings were made with Qualisys Track Manager 2.14 (build 3180) at a sampling frequency of 256 Hz. Pelvis markers were placed on the anterior superior and posterior superior iliac spines. The knee joint was defined by the femur medial epicondyle and femur lateral epicondyle markers, while the fibula apex of the lateral malleolus and tibia apex of the medial malleolus landmarks were used to define the ankle joint. In addition, the TH1-4, SK1-4, FCC, FM1, and FM5 landmarks were used to track lower-limb movement (van Sint Jan, 2007).

The kinematics and kinetics of the lower extremities were computed from the Qualisys recordings using Visual 3D v6 software (C-Motion, 2017).

A Kinect v1 and a Kinect v2 were also used to record joint positions. Two custom applications were developed with Microsoft Kinect SDK version 1.8 and version 2.0 to capture and store the estimated skeletons and the corresponding joint positions. A server application was also developed to establish a common time base between the applications using the Transmission Control Protocol/Internet Protocol (TCP/IP) over the local network. The Kinect sampling frequencies were set to 30 Hz, the highest available rate.
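The paper does not state how the server established the common time base. One standard approach, shown here purely as an illustrative assumption, is Cristian's algorithm: a client notes its local send time, the server replies with its own clock reading, and the client assumes that reading was taken halfway through the round trip. The function names below are hypothetical.

```python
def estimate_clock_offset(t_send: float, t_server: float, t_recv: float) -> float:
    """Estimate (server clock - client clock) with Cristian's algorithm.

    t_send   : client clock when the request was sent (s)
    t_server : server clock value contained in the reply (s)
    t_recv   : client clock when the reply arrived (s)
    Assumes the network delay is the same in both directions.
    """
    if t_recv < t_send:
        raise ValueError("reply received before the request was sent")
    midpoint = (t_send + t_recv) / 2.0
    return t_server - midpoint


def to_server_time(local_timestamps, offset):
    """Map client-side sample timestamps onto the server's time base."""
    return [t + offset for t in local_timestamps]


# A 0.1 s round trip with the server reading 15.05 s gives an offset of about 5 s.
offset = estimate_clock_offset(10.0, 15.05, 10.1)
kinect_times = to_server_time([0.0, 1 / 30, 2 / 30], offset)
```

The error of this estimate is bounded by half the round-trip time, which on a local network is typically small compared with the 33 ms frame period of a 30 Hz Kinect stream.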

Linear acceleration, angular velocity, and magnetic field were acquired using the accelerometer, gyroscope, and magnetometer embedded in the Shimmer3 platform (Shimmer Sensing, 2016). Shimmer wearable sensors were fixed on the thigh, shin, and foot, and the data, including timestamps, were streamed to the computer via Bluetooth in real time. The sampling frequency of the sensors was set to 128 Hz. The acceleration, angular velocity, and magnetic field ranges were set to ±2 g, ±500 dps, and ±1.3 Ga, respectively.


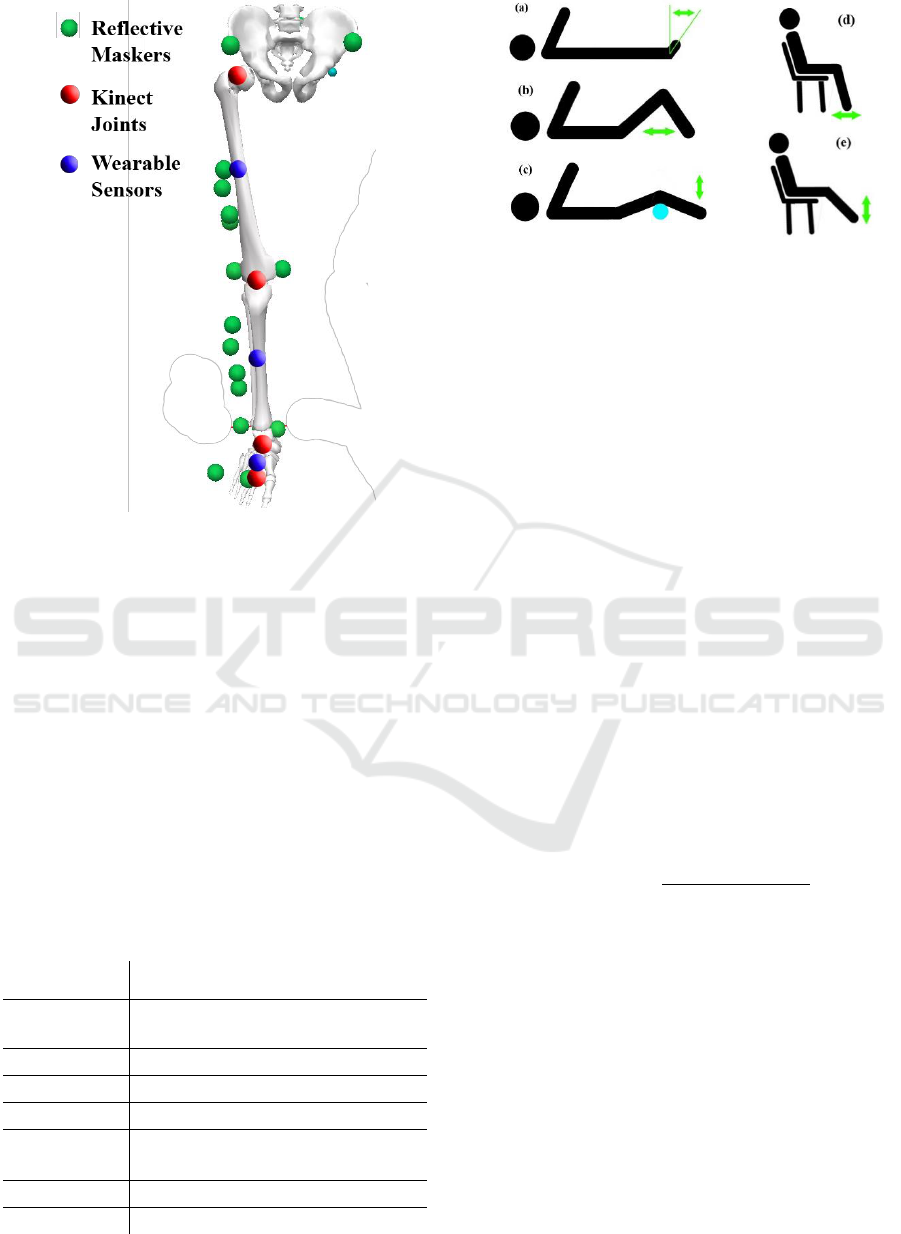

Figure 1: Positions of reflective markers, Microsoft Kinect virtual landmarks, and placement of wearable sensors. Green circles show the positions of reflective markers, red circles show the positions of estimated joints, and wearable sensors were fixed at the blue circles.

2.2 Procedure

Seven common knee rehabilitation exercises were performed during the recordings. These exercises mainly involve knee flexion and extension. Table 1 describes each exercise, and Figure 2 illustrates them.

In the first three exercises both Kinect sensors

were mounted on the ceilings while for the rest of

exercises Kinect sensors were place on the table with

1m height. The Z-plane of Kinect sensors was

Table 1: Exercise details.

Posture

Physical Activity

Exercise 1

Laying

Ankle Dorsi/Plantar

flexion

Exercise 2

Laying

Knee flexion/extension

Exercise 3

Laying

Knee flexion/extension

Exercise 4

Sitting

Biking

Exercise 5

Sitting/

Standing

Sit to Stand

Exercise 6

Sitting

Leg bend and stretch

Exercise 7

Sitting

Knee flexion/extension

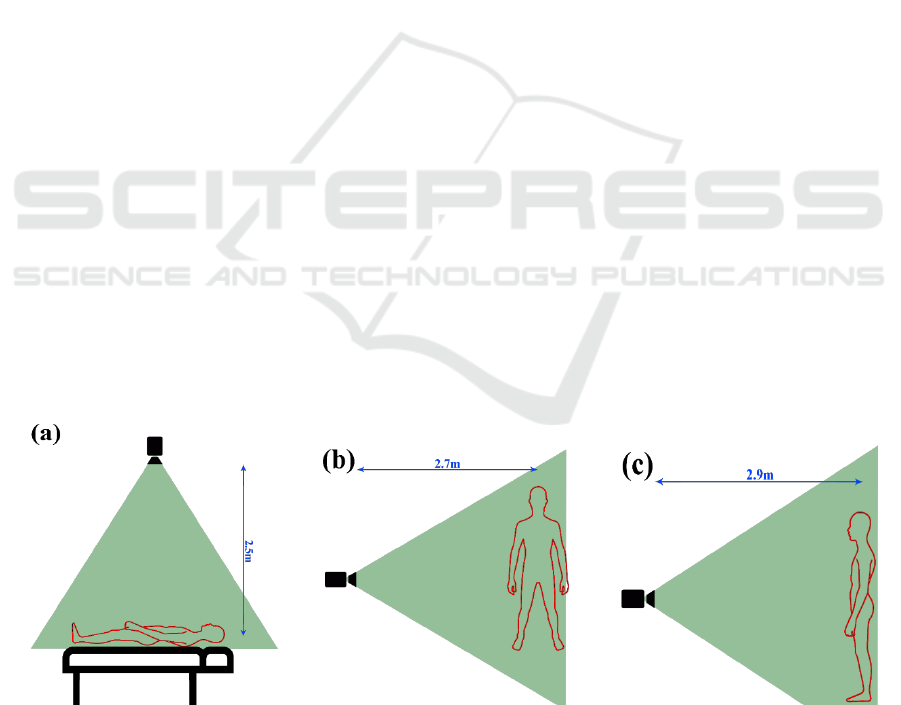

Figure 2: Visual explanation of exercises including posture

and movement. The green arrow shows direction of

movement. (a) exercise 1, (b) exercise 2, (c) exercise 3, (d)

exercise 6, (e) exercise 7.

perpendicular to coronal plane in all exercises except

exercise four and five (which in these two exercises

the Z-plane of Kinect sensors was perpendicular to

median plane). Figure 3 shows position and

alignment of Kinect sensors corresponding to the

subject’s body.

2.3 Data Analysis

The recorded data were low-pass filtered with a 10 Hz cut-off using a 20th-order IIR Butterworth filter. The data were synchronized in two steps. First, the data were aligned using the recorded timestamps. Second, small delays due to data transfer and processing latency were removed using dynamic time warping.
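The filtering and two-step synchronization described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: zero-phase filtering (`sosfiltfilt`), linear interpolation onto a common time base, and an absolute-difference DTW cost are all choices made here, and the function names are hypothetical. Second-order sections are used because a 20th-order Butterworth filter is numerically fragile in transfer-function form.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def lowpass(signal, fs, cutoff=10.0, order=20):
    """10 Hz low-pass Butterworth filter (order 20, as in the text).

    Zero-phase filtering is an assumption; the text does not say whether
    the filter was applied forward only or forward-backward.
    """
    sos = butter(order, cutoff, btype="low", fs=fs, output="sos")
    return sosfiltfilt(sos, signal)


def resample_to(t_src, x_src, t_target):
    """Step 1: put a stream on a common time base by linear interpolation."""
    return np.interp(t_target, t_src, x_src)


def dtw_path(x, y):
    """Step 2: classic dynamic time warping between 1-D sequences x and y.

    Returns (total_cost, path), where path lists aligned (i, j) index pairs.
    """
    n, m = len(x), len(y)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(x[i - 1] - y[j - 1])
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    # Backtrack from the last cell to recover the warping path
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = (cost[i - 1, j - 1], cost[i - 1, j], cost[i, j - 1])
        k = int(np.argmin(steps))
        if k == 0:
            i, j = i - 1, j - 1
        elif k == 1:
            i -= 1
        else:
            j -= 1
    return cost[n, m], path[::-1]
```

Note that the DTW loop is O(n·m); for long recordings a windowed variant (for example a Sakoe-Chiba band) would normally be used, since only small residual delays need to be removed.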

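In the Kinect skeleton data, the knee joint was defined by virtual thigh, shin, and foot bones built from the positions of the hip, knee, ankle, and foot joints. Equation 1 gives the knee angle (θ_knee) calculated from the virtual bones:

$$\theta_{knee} = \arccos\left(\frac{\vec{v}_{thigh}\cdot\vec{v}_{shin}}{\lVert\vec{v}_{thigh}\rVert\,\lVert\vec{v}_{shin}\rVert}\right) \tag{1}$$

where $\vec{v}_{thigh} = P_{knee} - P_{hip}$ and $\vec{v}_{shin} = P_{ankle} - P_{knee}$ are the virtual thigh and shin bones, and $P$ denotes a 3D joint position reported by the Kinect SDK.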
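The angle between the virtual thigh and shin bones can be sketched as follows. This assumes bone vectors that point from the proximal to the distal joint, so a straight leg gives 0°; it is not necessarily the exact form the authors used, and the function name is hypothetical. The same-side hip, knee, and ankle joints from the Kinect skeleton would be passed in.

```python
import numpy as np


def knee_angle_from_joints(hip, knee, ankle):
    """Knee flexion angle (degrees) from 3-D joint positions.

    The thigh vector runs hip -> knee and the shin vector knee -> ankle, so a
    fully extended leg gives 0 degrees. Accepts single frames (shape (3,))
    or whole recordings (shape (n_frames, 3)).
    """
    hip, knee, ankle = (np.asarray(p, dtype=float) for p in (hip, knee, ankle))
    thigh = knee - hip
    shin = ankle - knee
    cos_theta = np.sum(thigh * shin, axis=-1) / (
        np.linalg.norm(thigh, axis=-1) * np.linalg.norm(shin, axis=-1)
    )
    # Clipping guards against rounding pushing the cosine just outside [-1, 1]
    return np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0)))
```

Working on arrays of frames lets one call process an entire 30 Hz Kinect recording at once.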

Similarly, the knee angle was calculated from the accelerometer data using the acceleration vectors of the sensors on the thigh, shin, and foot.
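The paper does not give the accelerometer formula. One common inclination-based approach is sketched below as an assumption: in slow, quasi-static movement each accelerometer mainly senses gravity, so each segment's tilt in the sagittal plane can be read from two acceleration components, and the knee angle is the difference between the shin and thigh tilts. The axis convention (sensor x-axis along the segment, z-axis parallel to the knee flexion axis) and the function names are hypothetical, and the foot sensor is not used here.

```python
import numpy as np


def segment_inclination(acc):
    """Sagittal-plane inclination (degrees) of a segment from a static
    accelerometer reading.

    Assumes the sensor x-axis lies along the segment and the z-axis is
    parallel to the knee flexion axis, so gravity lies in the x-y plane.
    """
    acc = np.asarray(acc, dtype=float)
    return np.degrees(np.arctan2(acc[..., 1], acc[..., 0]))


def knee_angle_from_acc(acc_thigh, acc_shin):
    """Knee angle (degrees) as the difference in shin and thigh inclination.

    Only valid while the limb moves slowly enough for gravity to dominate
    the reading. The sign depends on how the sensors are mounted.
    """
    diff = segment_inclination(acc_shin) - segment_inclination(acc_thigh)
    # Wrap the difference into [-180, 180) degrees
    return (diff + 180.0) % 360.0 - 180.0
```

Because fast movements add linear acceleration on top of gravity, this estimate degrades during vigorous exercise, which is one reason to fuse gyroscope data in an AHRS filter.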

In the gold-standard recordings, the knee angle was estimated in Visual 3D from the modelled thigh and shank segments.

The orientation of each IMU sensor was calculated with an attitude and heading reference system (AHRS) algorithm that fuses the triaxial acceleration, triaxial angular velocity, and triaxial magnetic field recordings (Kalkbrenner et al., 2014; Madgwick et al., 2011).
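Madgwick et al. (2011) describe both a MARG form of their gradient-descent filter (with magnetometer, as fused in this study) and a simpler IMU form. As an illustrative sketch only, one update step of the IMU form is shown below; it omits the magnetometer term, so heading drift is not corrected. The quaternion layout [w, x, y, z], the gain `beta = 0.1`, and the function names are assumptions, not the study's implementation.

```python
import numpy as np


def quat_mult(p, q):
    """Hamilton product p ⊗ q for quaternions stored as [w, x, y, z]."""
    w1, x1, y1, z1 = p
    w2, x2, y2, z2 = q
    return np.array([
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    ])


def madgwick_imu_update(q, gyro, acc, dt, beta=0.1):
    """One step of Madgwick's gradient-descent orientation filter (IMU form).

    q    : current orientation quaternion [w, x, y, z]
    gyro : angular velocity in rad/s, sensor frame
    acc  : accelerometer reading; only its direction is used
    """
    q = np.asarray(q, dtype=float)
    # Orientation rate from the gyroscope: 0.5 * q ⊗ [0, ω]
    q_dot = 0.5 * quat_mult(q, np.concatenate(([0.0], gyro)))

    a_norm = np.linalg.norm(acc)
    if a_norm > 0:
        ax, ay, az = np.asarray(acc, dtype=float) / a_norm
        w, x, y, z = q
        # Objective: predicted gravity direction minus measured direction
        f = np.array([
            2 * (x * z - w * y) - ax,
            2 * (w * x + y * z) - ay,
            2 * (0.5 - x * x - y * y) - az,
        ])
        jac = np.array([
            [-2 * y, 2 * z, -2 * w, 2 * x],
            [2 * x, 2 * w, 2 * z, 2 * y],
            [0.0, -4 * x, -4 * y, 0.0],
        ])
        step = jac.T @ f
        step_norm = np.linalg.norm(step)
        if step_norm > 0:
            # Correct the gyro rate with a normalized gradient step
            q_dot = q_dot - beta * step / step_norm

    q = q + q_dot * dt
    return q / np.linalg.norm(q)
```

With the Shimmer sampling rate of 128 Hz, `dt` would be 1/128 s, and the thigh and shin sensors would each be updated independently.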

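BIODEVICES 2018 - 11th International Conference on Biomedical Electronics and Devices

Consequently, the orientation of each sensor was represented by a quaternion. Using the shin and thigh quaternions, the knee angle was calculated based on Equation 2:

$$q_{knee} = q_{thigh}^{*} \otimes q_{shin}, \qquad \theta_{knee} = 2\arccos\left(\lvert w_{knee} \rvert\right) \tag{2}$$

where $q^{*}$ is the quaternion conjugate, $\otimes$ is the quaternion product, and $w_{knee}$ is the scalar part of $q_{knee}$.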
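A minimal sketch of a knee angle computed from thigh and shin quaternions, assuming the angle is taken as the rotation angle of the relative quaternion q_thigh* ⊗ q_shin; this is not necessarily the exact form used in the study. Both quaternions must be expressed in the same earth frame (for example, AHRS output), stored as [w, x, y, z]; the function names are hypothetical.

```python
import numpy as np


def quat_conj(q):
    """Conjugate of a quaternion [w, x, y, z]."""
    w, x, y, z = q
    return np.array([w, -x, -y, -z])


def quat_mult(p, q):
    """Hamilton product p ⊗ q for quaternions stored as [w, x, y, z]."""
    w1, x1, y1, z1 = p
    w2, x2, y2, z2 = q
    return np.array([
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
    ])


def knee_angle_from_quats(q_thigh, q_shin):
    """Angle (degrees) of the rotation taking the thigh frame to the shin frame.

    This is the total relative rotation, so it also contains any
    abduction/adduction or axial rotation, not flexion alone.
    """
    q_rel = quat_mult(quat_conj(np.asarray(q_thigh, float)), np.asarray(q_shin, float))
    q_rel = q_rel / np.linalg.norm(q_rel)
    # abs() selects the shorter of the two equivalent rotations (q and -q)
    return np.degrees(2.0 * np.arccos(np.clip(abs(q_rel[0]), 0.0, 1.0)))
```

If the sensors are not mounted in identical orientations on their segments, a static calibration pose (for example, a straight leg) would normally be used to remove the constant mounting offset before computing the angle.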

2.4 Statistical Analyses

The range of motion (ROM) of each recording was calculated from the estimated knee angle. The root-mean-square error (RMSE) and the Pearson correlation coefficient (R) were calculated. The coefficient of variation (CV) between each system and the gold standard was also calculated as the ratio of the error to the mean of the gold standard. The bias (B) and 95% limits of agreement (LOA) were obtained by Bland-Altman analysis.
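The agreement measures listed above can be computed from two synchronized knee-angle series as sketched below. The CV is taken here as RMSE divided by the gold-standard mean (in percent), which is one reading of "ratio of error to mean"; the exact definition used in the study is not given. Table 2 reports LOA as a single number per exercise, and it is not stated whether that is the interval width or a half-width, so this sketch returns both bounds. The function name is hypothetical.

```python
import numpy as np


def agreement_stats(estimate, reference):
    """Agreement between an estimated knee-angle series and the gold standard.

    Both inputs are in degrees and already synchronized sample by sample.
    CV is computed as RMSE / mean(reference) * 100 (an assumption).
    """
    est = np.asarray(estimate, dtype=float)
    ref = np.asarray(reference, dtype=float)
    diff = est - ref
    rmse = np.sqrt(np.mean(diff ** 2))
    r = np.corrcoef(est, ref)[0, 1]              # Pearson correlation
    bias = np.mean(diff)                          # Bland-Altman bias
    sd = np.std(diff, ddof=1)
    loa = (bias - 1.96 * sd, bias + 1.96 * sd)    # 95% limits of agreement
    return {
        "rom": (est.min(), est.max()),
        "rmse": rmse,
        "r": r,
        "cv_percent": 100.0 * rmse / np.mean(ref),
        "bias": bias,
        "loa": loa,
    }
```

Note that with these definitions the RMSE can never be smaller than the absolute bias, since RMSE² equals bias² plus the (population) variance of the differences.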

3 RESULTS

The results showed that the Microsoft Kinect SDKs (SDK 1.8 and SDK 2.0) were not able to detect the body skeleton or estimate joint positions in the exercises in which the subject lay in a bed (exercises 1-3), even though the Kinect sensors were placed perpendicular to the subject's coronal plane.

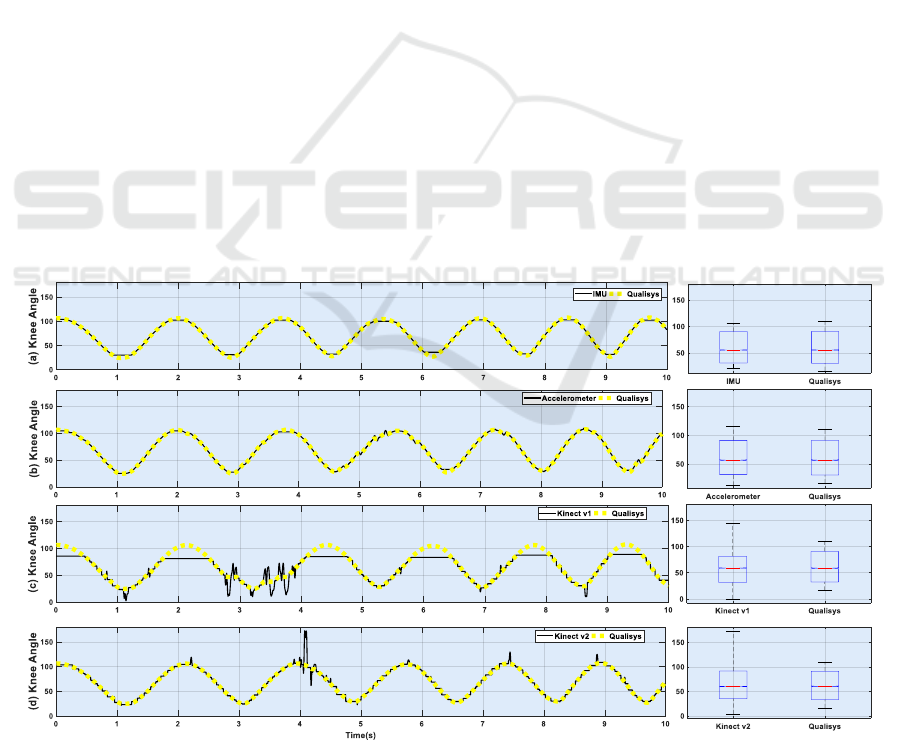

The results indicated that the knee angle estimated with the accelerometers had the lowest RMSE. The mean RMSE was 2.085° for the accelerometer recordings and 3.107° for the IMU recordings, while the mean values for Kinect v1 and Kinect v2 were 13.408° and 4.930°, respectively.
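As a worked check, the mean RMSE values can be recomputed from the per-exercise RMSEs in Table 2. The Kinect means cover exercises 4-7 only, because no skeleton was detected in exercises 1-3. The recomputed values agree with the reported means to within about 0.001°; the accelerometer mean comes out at about 2.086° rather than 2.085°, presumably because the tabulated values are themselves rounded.

```python
# Per-exercise RMSE values (degrees) copied from Table 2; '-' entries omitted.
rmse = {
    "accelerometer": [0.46, 3.44, 0.92, 1.00, 1.66, 2.78, 4.34],
    "IMU": [0.39, 2.73, 0.92, 3.78, 6.74, 3.34, 3.85],
    "Kinect v1": [10.21, 12.52, 17.61, 13.29],  # exercises 4-7 only
    "Kinect v2": [5.31, 3.57, 6.61, 4.23],      # exercises 4-7 only
}

# Unweighted mean over the exercises each system could track
mean_rmse = {name: sum(values) / len(values) for name, values in rmse.items()}
for name, m in mean_rmse.items():
    print(f"{name}: {m:.3f} deg")
```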

The knee angle estimated with the IMU sensors had a lower RMSE than that provided by Kinect v2 in all exercises except exercise 5 (sit to stand).

According to Table 2, the estimated knee angle was highly correlated with the actual knee angle for all systems (R ≥ 0.796).

Moreover, the results show that the accelerometers estimated the lower bound of the range of motion more accurately than the IMUs, whereas the Kinect sensors showed larger differences from the actual knee angle during the biking exercise (see Figure 4).

The Bland-Altman estimates indicated that the angles estimated with the accelerometers and IMUs agreed more closely with the gold-standard values than those estimated with the Microsoft Kinect sensors.

4 DISCUSSION

This study evaluated the accuracy and usability of wearable sensors (accelerometers and IMUs) and Microsoft Kinect sensors (Microsoft Kinect v1 and Microsoft Kinect v2) as daily tracking systems for knee rehabilitation applications. To this end, the most common knee rehabilitation exercises were performed, with an emphasis on tracking the knee angle and range of motion of the knee of interest.

A Qualisys marker-based motion capture system was used as the gold standard for tracking lower-limb activity, and C-Motion Visual 3D was employed to compute joint angles.

Microsoft Kinect v1 had the lowest accuracy in all exercises (RMSE above 10° in every exercise it tracked), while the other recording systems showed comparable accuracy. The trials showed that the wearable sensors and Microsoft Kinect v2 performed acceptably for tracking the knee angle, including during more intense and complex physical activities such as biking.

Figure 3: Position and alignment of the Kinect sensors relative to the subject's body. (a) For exercises 1-3, the subject lay in a bed and the Kinect sensors were mounted on the ceiling, facing the floor. (b) For exercises 4 and 5, the Kinect sensors were placed on a table 1 m high and the subject performed the exercises side-on to the Kinect sensors. (c) For exercises 6 and 7, the Kinect sensors were placed on a table 1 m high and the subject exercised facing the Kinect sensors.


Eltoukhy et al. (2017) used a Vicon marker-based motion capture system to evaluate Microsoft Kinect v2 and showed that Kinect v2 measurements of knee ROM had high consistency and agreement with the gold standard, with an absolute knee-angle error of 2.14°, whereas in this study the average RMSE for the knee angle was 4.93°. Bonnechère et al. (2014) evaluated the validity of Microsoft Kinect v1 and reported poor to no agreement between the gold standard and Kinect v1 measurements of knee flexion. The Bland-Altman calculations for Kinect v1 in this study likewise show lower agreement with the gold standard than for the other systems. Wiedemann et al. (2015) used Kinect v2 to estimate the knee angle in 16 different static postures; the median difference for the left knee was 0.26°, with upper and lower bounds of 16.47° and -11.84°.

Madgwick et al. (2011) and Diaz et al. (2015) used a Vicon motion capture system to evaluate AHRS algorithms developed for IMU sensors. The results of this study are comparable with their findings.

In summary, both the wearable sensors and Microsoft Kinect v2 performed acceptably for tracking knee movements during knee rehabilitation exercises. However, Microsoft Kinect v2 has three notable limitations that may call into question its usability for tracking daily knee rehabilitation exercises. First, the Kinect v2 skeleton algorithm was not able to detect the body skeleton and estimate the corresponding joint positions while the subject was lying in a bed; the first three exercises in this study were performed in that position. Second, subjects must stand in front of the Kinect v2 sensor at an optimal distance (2-3.5 m) with the body visible to the cameras, which may cause problems when the subject exercises behind a chair or when other objects occlude the body. Finally, estimating joint positions requires considerable computational resources, whereas joint angles may be estimated from wearable sensors without any external computer.

5 CONCLUSIONS

The accuracy and usability of wearable sensors and Microsoft Kinect sensors as low-cost, portable alternative human body tracking systems for knee rehabilitation applications were evaluated. The findings indicated that wearable sensors and Microsoft Kinect v2 have acceptable accuracy. However, the usability of Microsoft Kinect v2 may be limited because it is unable to track physical activity in particular circumstances, specifically while the user is lying on the floor or in a bed.

Figure 4: Knee angle estimated using the gold standard (dotted yellow line) and (a) IMUs, (b) accelerometers, (c) Microsoft Kinect v1, and (d) Microsoft Kinect v2.


Table 2: Summary of results on the knee angle estimated using Qualisys, accelerometer sensors, IMU sensors, Microsoft Kinect v1, and Microsoft Kinect v2 for each exercise. ROM denotes range of motion and RMSE root mean square error; LOA, B, and CV denote 95% limits of agreement, bias, and coefficient of variation, respectively; R denotes the Pearson correlation coefficient. A dash (-) marks exercises in which the Kinect SDK did not detect a skeleton.

| Metric | System | Exe 1 | Exe 2 | Exe 3 | Exe 4 | Exe 5 | Exe 6 | Exe 7 |
|---|---|---|---|---|---|---|---|---|
| ROM (°) | Qualisys | [20,25] | [22,102] | [2,95] | [16,110] | [21,85] | [22,105] | [19,116] |
| | Acc | [20,24] | [7,98] | [0,93] | [12,115] | [11,89] | [16,101] | [20,114] |
| | IMU | [18,24] | [11,101] | [3,89] | [21,105] | [33,86] | [31,102] | [23,201] |
| | Kinect v1 | - | - | - | [0,144] | [1,81] | [3,72] | [1,85] |
| | Kinect v2 | - | - | - | [3,172] | [4,106] | [10,88] | [2,99] |
| RMSE (°) | Acc | 0.46 | 3.44 | 0.92 | 1.00 | 1.66 | 2.78 | 4.34 |
| | IMU | 0.39 | 2.73 | 0.92 | 3.78 | 6.74 | 3.34 | 3.85 |
| | Kinect v1 | - | - | - | 10.21 | 12.52 | 17.61 | 13.29 |
| | Kinect v2 | - | - | - | 5.31 | 3.57 | 6.61 | 4.23 |
| LOA (°) | Acc | 3.86 | 22.07 | 78.28 | 5.8 | 13.41 | 8.46 | 26.21 |
| | IMU | 3.17 | 26.51 | 61.36 | 35.81 | 49.04 | 24.37 | 26.55 |
| | Kinect v1 | - | - | - | 25.25 | 33.23 | 42.63 | 26.47 |
| | Kinect v2 | - | - | - | 38.66 | 23.63 | 14.40 | 13.84 |
| B (°) | Acc | -0.45 | -4.64 | 14.34 | 0.09 | -1.72 | -1.29 | 6.34 |
| | IMU | -0.46 | -2.88 | 11.96 | 7.81 | 13.90 | 3.77 | 4.67 |
| | Kinect v1 | - | - | - | -4.86 | -10.50 | -11.45 | -8.53 |
| | Kinect v2 | - | - | - | 7.35 | -0.76 | -3.70 | -1.78 |
| CV | Acc | 9 | 22 | 280 | 5 | 12 | 6 | 21 |
| | IMU | 7 | 27 | 210 | 31 | 43 | 18 | 21 |
| | Kinect v1 | - | - | - | 22 | 33 | 35 | 24 |
| | Kinect v2 | - | - | - | 32 | 22 | 11 | 12 |
| R | Acc | 0.894 | 0.987 | 0.999 | 1 | 0.998 | 0.994 | 0.991 |
| | IMU | 0.920 | 0.992 | 0.999 | 0.996 | 0.983 | 0.993 | 0.994 |
| | Kinect v1 | - | - | - | 0.964 | 0.908 | 0.796 | 0.949 |
| | Kinect v2 | - | - | - | 0.988 | 0.99 | 0.973 | 0.993 |

ACKNOWLEDGMENT

This study is supported by the Aage and Johanne Louis-Hansen Foundation, Aalborg University, and the partners. For further information, see http://www.labwellfaretech.com/fp/kneeortho/?lang=en

REFERENCES

Ahmed, H. & Tahir, M., 2017. Improving the Accuracy of Human Body Orientation Estimation with Wearable IMU Sensors. IEEE Transactions on Instrumentation and Measurement, 66(3), pp.1–8. Available at: http://ieeexplore.ieee.org/document/7811233/.

Anton, D. et al., 2016. Validation of a Kinect-based telerehabilitation system with total hip replacement patients. Journal of Telemedicine and Telecare, 22(3), pp.192–197.

Auvinet, E. et al., 2017. Validity and sensitivity of the longitudinal asymmetry index to detect gait asymmetry using Microsoft Kinect data. Gait and Posture, 51, pp.162–168. Available at: http://dx.doi.org/10.1016/j.gaitpost.2016.08.022.

Bao, L. & Intille, S.S., 2004. Activity Recognition from User-Annotated Acceleration Data. Pervasive Computing, pp.1–17.

Bonnechère, B. et al., 2014. Validity and reliability of the Kinect within functional assessment activities: Comparison with standard stereophotogrammetry. Gait and Posture, 39(1), pp.593–598.

C-Motion, 2017. Visual 3D. Available at: http://www2.c-motion.com/products/visual3d/ [Accessed July 26, 2017].

Clark, R. A. et al., 2012. Validity of the Microsoft Kinect for assessment of postural control. Gait and Posture, 36(3), pp.372–377. Available at: http://dx.doi.org/10.1016/j.gaitpost.2012.03.033 [Accessed February 5, 2016].

Darby, J. et al., 2016. An evaluation of 3D head pose estimation using the Microsoft Kinect v2. Gait & Posture, 48, pp.83–88.

Diaz, E. M. et al., 2015. Evaluation of AHRS algorithms for inertial personal localization in industrial environments. In Proceedings of the IEEE International Conference on Industrial Technology. pp. 3412–3417.

Eltoukhy, M. et al., 2017. Improved kinect-based spatiotemporal and kinematic treadmill gait assessment. Gait and Posture, 51, pp.77–83. Available at: http://dx.doi.org/10.1016/j.gaitpost.2016.10.001.

Huber, M. E. et al., 2015. Validity and reliability of Kinect skeleton for measuring shoulder joint angles: A feasibility study. Physiotherapy (United Kingdom), 101(4), pp.389–393. Available at: http://dx.doi.org/10.1016/j.physio.2015.02.002.

Hyde, R. A. et al., 2008. Estimation of upper-limb orientation based on accelerometer and gyroscope measurements. IEEE Transactions on Biomedical Engineering, 55(2), pp.746–754.

Kalkbrenner, C. et al., 2014. Motion Capturing with Inertial Measurement Units and Kinect - Tracking of Limb Movement using Optical and Orientation Information. Proceedings of the International Conference on Biomedical Electronics and Devices, pp.120–126. Available at: http://www.scitepress.org/DigitalLibrary/Link.aspx?doi=10.5220/0004787601200126.

Karantonis, D. M. et al., 2006. Implementation of a real-time human movement classifier using a triaxial accelerometer for ambulatory monitoring. IEEE Transactions on Information Technology in Biomedicine, 10(1), pp.156–167.

Lange, B. et al., 2012. Interactive Game-Based Rehabilitation Using the Microsoft Kinect. IEEE Virtual Reality Conference 2012 Proceedings, pp.170–171. Available at: http://www.scopus.com/inward/record.url?eid=2-s2.0-84860745387&partnerID=tZOtx3y1 [Accessed February 8, 2016].

Lin, J. F. S. & Kulić, D., 2012. Human pose recovery using wireless inertial measurement units. Physiological Measurement, 33(12), pp.2099–115. Available at: http://www.ncbi.nlm.nih.gov/pubmed/23174667.

Madgwick, S. O. H., Harrison, A. J. L. & Vaidyanathan, R., 2011. Estimation of IMU and MARG orientation using a gradient descent algorithm. In IEEE International Conference on Rehabilitation Robotics.

Mentiplay, B. F. et al., 2015. Gait assessment using the Microsoft Xbox One Kinect: Concurrent validity and inter-day reliability of spatiotemporal and kinematic variables. Journal of Biomechanics, 48(10), pp.2166–2170. Available at: http://dx.doi.org/10.1016/j.jbiomech.2015.05.021 [Accessed June 2, 2015].

Microsoft, 2014. Kinect for Windows SDK 2.0. Available at: https://www.microsoft.com/en-us/download/details.aspx?id=44561 [Accessed January 31, 2017].

Microsoft, 2013. Kinect for Windows SDK v1.8. Available at: https://www.microsoft.com/en-us/download/details.aspx?id=40278 [Accessed January 31, 2017].

Pavão, S.L. et al., 2014. [Impact of a virtual reality-based intervention on motor performance and balance of a child with cerebral palsy: a case study]. Revista paulista de pediatria: orgão oficial da Sociedade de Pediatria de São Paulo, 32(4), pp.389–94. Available at: http://www.sciencedirect.com/science/article/pii/S0103058214000173 [Accessed February 10, 2016].

Pedraza-Hueso, M. et al., 2015. Rehabilitation Using Kinect-based Games and Virtual Reality. Procedia Computer Science, 75(Vare), pp.161–168. Available at: http://www.sciencedirect.com/science/article/pii/S1877050915036947 [Accessed December 30, 2015].

Piqueras, M. et al., 2013. Effectiveness of an interactive virtual telerehabilitation system in patients after total knee arthoplasty: a randomized controlled trial. Journal of Rehabilitation Medicine, 45(4), pp.392–6. Available at: http://www.mendeley.com/catalog/effectiveness-interactive-virtual-telerehabilitation-system-patients-after-total-knee-arthoplasty-ra/.

Roetenberg, D., Luinge, H. & Slycke, P., 2013. Xsens MVN: Full 6DOF Human Motion Tracking Using Miniature Inertial Sensors. Technical report, pp.1–7. Available at: http://en.souvr.com/product/pdf/MVN_white_paper.pdf.

Russell, T. G. et al., 2011. Internet-Based Outpatient Telerehabilitation for Patients Following Total Knee Arthroplasty. Journal of Bone & Joint Surgery, American Volume, 93–A(2), pp.113–120. Available at: http://ezproxy.lib.ucalgary.ca/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=s3h&AN=57567660&site=ehost-live.

Schröder, Y. et al., 2011. Multiple kinect studies. Computer Graphics, 2(4), p.6.

Sell, J. & O'Connor, P., 2014. The xbox one system on a chip and kinect sensor. IEEE Micro, 34(2), pp.44–53.

Shimmer Sensing, 2016. Shimmer3 Wireless Sensor Platform. Available at: http://www.shimmersensing.com/products/shimmer3-development-kit [Accessed November 7, 2017].

van Sint Jan, S., 2007. Color Atlas of Skeletal Landmark Definitions, Elsevier Health Sciences.

Tousignant, M. et al., 2009. In home telerehabilitation for older adults after discharge from an acute hospital or rehabilitation unit: A proof-of-concept study and costs estimation. Disability and Rehabilitation: Assistive Technology, 1(4), pp.209–216. Available at: http://www.ncbi.nlm.nih.gov/pubmed/19260168 [Accessed February 24, 2016].

Tousignant, M. et al., 2011. Patients' satisfaction of healthcare services and perception with in-home telerehabilitation and physiotherapists' satisfaction toward technology for post-knee arthroplasty: an embedded study in a randomized trial. Telemedicine Journal and e-Health: the official journal of the American Telemedicine Association, 17(5), pp.376–82. Available at: http://www.ncbi.nlm.nih.gov/pubmed/21492030 [Accessed January 10, 2016].

Tseng, Y. C. et al., 2009. A wireless human motion capturing system for home rehabilitation. In Proceedings - IEEE International Conference on Mobile Data Management. pp. 359–360.

Vernadakis, N. et al., 2014. The effect of Xbox Kinect intervention on balance ability for previously injured young competitive male athletes: A preliminary study. Physical Therapy in Sport: official journal of the Association of Chartered Physiotherapists in Sports Medicine, 15(3), pp.148–155. Available at: http://www.sciencedirect.com/science/article/pii/S1466853X13000709 [Accessed August 24, 2015].

Wiedemann, L. G. et al., 2015. Performance evaluation of joint angles obtained by the kinect V2. Technologies for Active and Assisted Living (TechAAL), IET International Conference on, pp.1–6. Available at: http://ieeexplore.ieee.org/xpl/articleDetails.jsp?tp=&arnumber=7389248&url=http%3A%2F%2Fieeexplore.ieee.org%2Fxpls%2Fabs_all.jsp%3Farnumber%3D7389248.

Woolford, K., 2015. Defining Accuracy in the Use of Kinect V2 for Exercise Monitoring. Proceedings of the 2nd International Workshop on Movement and Computing, pp.112–119. Available at: http://doi.acm.org/10.1145/2790994.2791002; http://dl.acm.org/ft_gateway.cfm?id=2791002&type=pdf.

Xu, X. & McGorry, R. W., 2015. The validity of the first and second generation Microsoft Kinect™ for identifying joint center locations during static postures. Applied Ergonomics, 49, pp.47–54. Available at: http://www.sciencedirect.com/science/article/pii/S0003687015000149 [Accessed October 16, 2015].

Yeung, L. F. et al., 2014. Evaluation of the Microsoft Kinect as a clinical assessment tool of body sway. Gait & Posture, 40(4), pp.532–538. Available at: http://dx.doi.org/10.1016/j.gaitpost.2014.06.012.
