Towards a Digital Personal Trainer for Health Clubs

Sport Exercise Recognition Using Personalized Models and Deep Learning

Sebastian Baumbach (1,2), Arun Bhatt (2), Sheraz Ahmed (1) and Andreas Dengel (1,2)

(1) German Research Center for Artificial Intelligence, Kaiserslautern (DFKI), Germany

(2) University of Kaiserslautern, Germany

Keywords:

Human Activity Recognition, Sport Activities, Machine Learning, Deep Learning, LSTM.

Abstract:

Human activity recognition has emerged as an active research area in recent years. With the advancement of mobile and wearable devices, various sensors have become ubiquitous and widely available, gathering data on a broad spectrum of people’s daily life activities. Research studies have thoroughly assessed lifestyle activities and increasingly concentrate on a variety of sport exercises. In this paper, we examine nine sport and fitness exercises commonly performed with sport equipment in gyms, such as abdominal exercise and lat pull. We collected sensor data from 23 participants for these activities using smartphones and smartwatches. Traditional machine learning and deep learning algorithms were applied in these experiments in order to assess their performance on our dataset. Linear SVM and Naive Bayes with Gaussian kernel perform best among the traditional methods with an accuracy of 80 %, whereas deep learning models outperform these machine learning techniques with an accuracy of 92 %.

1 INTRODUCTION

It is commonly known that sport activities and regular exercise are key to preserving people’s physical and mental health. In 2010, the British Association of Sport and Exercise Sciences published a consensus statement pointing out the correlation between a lack of regular physical activity and an increased risk of cardiovascular disease or type 2 diabetes (O’Donovan et al., 2010). Consequently, people regardless of their age seek to take part in exercise programs or join gyms to improve their fitness and strengthen their muscles. Regular exercise is also recommended because such training lowers blood pressure, improves glucose metabolism, and reduces cardiovascular disease risk (O’Donovan et al., 2010).

However, many athletes struggle to find the motivation to practice consistently over a long period of time. According to the study by Roberts, this is one of people’s main reasons for hiring a personal trainer: the wish to have someone motivating them (Roberts, 1996).

Issues arise from a practical perspective. Hiring a personal trainer is expensive, especially when exercising with a professional trainer, regardless of whether the trainer is hired privately or provided by a gym. Professional health clubs usually offer personal trainers as a supplementary service. Even in this case, however, athletes are not constantly guided and supervised during their exercises in a way that is really beneficial. Personal trainers remain a large cost factor, and thus a personal trainer cannot normally be assigned to each athlete for the entire training session.

These problems can be avoided with a system that functions as a personal trainer and accompanies each athlete during training. A digital personal trainer that is able to supervise athletes in their training has great potential to support both professional and amateur athletes. A system integrated into sport equipment can guide exercisers through their training, which not only helps to motivate people. It can also supervise athletes performing sport activities, which increases the safety and efficiency of their training.

While many research studies have focused on movement activities (e.g., walking or jogging (Parkka et al., 2006)) or daily life actions (e.g., vacuum cleaning or brushing teeth (Kao et al., 2009)), little work has evaluated sport activities beyond endurance. Consequently, the problem being examined in this paper is

how to perform activity recognition with sport equip-

ment of modern gyms. Therefore, the focus lies on

common devices (such as chest press) which are well

Baumbach, S., Bhatt, A., Ahmed, S. and Dengel, A.

Towards a Digital Personal Trainer for Health Clubs - Sport Exercise Recognition Using Personalized Models and Deep Learning.

DOI: 10.5220/0006590504380445

In Proceedings of the 10th International Conference on Agents and Artificial Intelligence (ICAART 2018) - Volume 2, pages 438-445

ISBN: 978-989-758-275-2

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

known among athletes and commonly used by many people in health clubs.

To the best of the authors’ knowledge, no sport equipment is currently available that automatically senses its users in gyms. This work therefore utilizes the athlete’s smartphone and smartwatch, which are widely available nowadays. Recent studies showed that it is possible to integrate such a human activity recognition system into wearable devices (Ravi et al., 2005; Shoaib et al., 2013). Sensor data of 23 participants were collected while they performed nine common exercises with sport equipment in gyms. We evaluated common state-of-the-art machine learning algorithms as well as recent deep learning models to assess their classification accuracy. In order to enable and support further research, we made our dataset publicly available.

In particular, this paper makes the following contributions:

• A novel and publicly available dataset containing smartphone and smartwatch sensor data of 23 male and female participants for nine common pieces of gym equipment. For each participant, we collected two sets of each exercise with ten to fifteen repetitions per set. The dataset can be downloaded from http://www.dfki.uni-kl.de/~baumbach/digital personal trainer.

• A detailed comparison of traditional machine learning algorithms and state-of-the-art deep learning techniques, namely LSTM. Experiments showed that decision tree, linear SVM and Naive Bayes with Gaussian kernel performed best among the traditional algorithms with an accuracy of 80 %. However, the deep learning model outperformed these machine learning models with an accuracy of 92 %.

• Our results showed a significant increase of 26 percentage points in the performance of all machine learning algorithms when personalized models were used.

The rest of this paper is organized as follows. Section 2 summarizes and assesses the state of the art in sport activity recognition for both machine learning algorithms and deep learning techniques. Section 3 presents the utilized activity recognition process, including the preprocessing steps on the data as well as the applied classification algorithms. Section 4 describes the experimental setup in which data from 23 participants were collected in a gym, and Section 5 describes the resulting dataset. Sections 6 and 7 present our findings, where deep learning outperformed traditional machine learning algorithms by twelve percentage points. Finally, the results are summarized and discussed in Section 8.

2 RELATED WORK

Human activity recognition is a vast area. Research has studied different kinds of activities, ranging from basic (such as walking, running, sleeping, or climbing stairs) to complex (including eating, vacuum cleaning, or swimming) activities. In particular, sport activities (e.g., basketball (Perše et al., 2009)) and health monitoring systems (such as sleep tracking (de Zambotti et al., 2015) and patient care (Chen et al., 2014)) have recently gained attention in the research community. Promising results for deep learning have also stimulated further research in the field of human activity recognition. In studies conducted so far, deep neural networks outperformed traditional machine learning approaches.

2.1 Recognition of Sports Activities

Although research in the field of human activity recognition traces back to the 1990s (Polana and Nelson, 1994) and has assessed many fitness and sport exercises, little work on gym equipment has been published so far. Numerous studies focused on the domains of “ambulation” (such as walking or jogging), daily life (such as reading or stretching), or upper body activities (e.g., chewing or speaking) (Lara and Labrador, 2013). Interested readers are referred to the extensive survey on human activity recognition published by Lara and Labrador (Lara and Labrador, 2013).

Prior work focused on placing multiple acceleration sensors on several parts of the participant’s body (Parkka et al., 2006; Subramanya et al., 2012). These setups were capable of identifying a wide range of activities, such as running, walking, or climbing stairs. However, they require users to wear multiple proprietary sensors distributed across their body. To overcome these limitations, other studies conducted experiments in which only a single accelerometer measures the activities (Lee, 2009; Long et al., 2009). With the constantly growing availability of mobile and wearable devices over recent years, “ubiquitous sensing” (Lara and Labrador, 2013) has come into focus. Consequently, several investigations assessed the use of these widely available mobile devices for human activity recognition (HAR) (Ravi et al., 2005; Lester et al., 2006).

Tapia et al. (Tapia et al., 2007) presented a real-time

algorithm for automatic recognition of physical activ-

ities and partly their intensities. They utilized five tri-

axial accelerometers and a heart rate monitor to dif-

ferentiate 30 physical gymnasium activities from 21

participants. For recognizing activity types with their

intensity, the authors obtained a recognition accuracy

of 94.6 % using subject-dependent and 56.3 % using

subject-independent training.

Velloso et al. (Velloso et al., 2013) dealt with the qualitative activity recognition of weight lifting exercises. Their goal was to recognize correct and incorrect execution and to provide feedback to the user. With 10-fold cross validation, their approach achieved a precision of 98.03 %; with leave-one-subject-out cross validation, it achieved 78.2 %.

2.2 Machine Learning vs. Deep Learning

Although deep learning is by no means a new technology, recent progress in GPU-based data processing has opened new possibilities for applying it to a wide variety of problems. This section provides an overview of recent research results and classification accuracy in deep activity recognition.

Yang et al. (Yang et al., 2015) proposed a convolutional neural network (CNN) with 17 layers and rectified linear units (ReLU) as activation function. Alsheikh et al. (Alsheikh et al., 2016) applied the deep learning paradigm to triaxial accelerometers and presented a hybrid approach of deep belief networks (DBN) and hidden Markov models (called DL-HMM) for sequential activity recognition. The authors showed that deep models outperform shallow ones, that more layers enhance the recognition accuracy, and that overcomplete representations are advantageous. Ordóñez and Roggen (Ordóñez and Roggen, 2016) proposed an 8-layer deep architecture based on the combination of convolutional and long short-term memory (LSTM) recurrent layers, called DeepConvLSTM. Once trained in a fully supervised manner, DeepConvLSTM works directly on raw data with only minimal pre-processing required. Wang (Wang, 2016) proposed a continuous autoencoder (CAE) as a novel stochastic neural network as well as a new fast stochastic gradient descent (FSGD) algorithm to update the gradients of the CAE. The FSGD is capable of achieving a 0.3 % error rate after just 180 epochs of training. Wang then applies time and frequency domain feature extraction (TFFE) to extract feature vectors, followed by PCA, to end up with a 42-dimensional feature vector. This feature vector is then fed into a DBN composed of stacked CAEs. The DBN consists of 6 layers (2 CAEs and a BP layer) and is trained in a semi-supervised manner. Ronaoo and Cho (Ronaoo and Cho, 2015) proposed to utilize CNNs to classify activities. Their experiments showed that increasing the number of convolutional layers increased performance, but the complexity of the derived features decreased with every additional layer. Zeng et al. (Zeng et al., 2014) proposed a method based on CNNs, which can capture local dependency and scale invariance of a signal. They use a 6-layer deep CNN (input - convolution - max-pooling - fully connected - fully connected - softmax).

3 METHODOLOGY OF THE SPORT ACTIVITY RECOGNITION PROCESS

The data sensed in our experiment consist of rotation, magnetic field and acceleration of different body parts. The accelerometer sensor data from phone and watch need to be preprocessed before they can be classified by machine learning approaches. Furthermore, sensor data is noisy, and passing the raw data to the learning algorithms negatively affects the accuracy of the recognition.

3.1 Preprocessing

The orientation of the devices affects the accelerometer sensor data (Thiemjarus, 2010). To standardize the sensor data with respect to the underlying coordinate system, a rotation matrix was calculated using gyroscope and magnetometer sensor data. This rotation matrix was then used to transform the acceleration values from the device coordinate system to the fixed world coordinate system.

The sensor data from phone and watch contain noise and outliers. The reasons are the sensors’ inaccuracy and noise in the sensors’ signals as well as unexpected behavior of the users during the exercise. Removing these noise elements from the sensor data has been shown to produce better recognition results for human activities (Wang et al., 2011). A median filter of order three was applied to the sensor data to remove impulse noise (Thiemjarus, 2010).
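The two preprocessing steps described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names `to_world_frame` and `median_filter3` are hypothetical, the rotation matrix is assumed to be already available for each sample, and leaving the first and last sample unfiltered is an assumption of this sketch.

```python
from statistics import median

def to_world_frame(rotation, accel):
    """Rotate one device-frame acceleration sample (x, y, z) into the
    world frame using a 3x3 rotation matrix given as nested lists."""
    return [sum(rotation[i][j] * accel[j] for j in range(3)) for i in range(3)]

def median_filter3(signal):
    """Median filter of order three: each interior sample is replaced by
    the median of itself and its two neighbours (edges are kept as-is,
    which is an assumption of this sketch)."""
    if len(signal) < 3:
        return list(signal)
    filtered = list(signal)
    for i in range(1, len(signal) - 1):
        filtered[i] = median(signal[i - 1:i + 2])
    return filtered

# Hypothetical example: device rotated 90 degrees about the z axis.
rot_z90 = [[0.0, -1.0, 0.0],
           [1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0]]
world = to_world_frame(rot_z90, [1.0, 0.0, 9.81])

# A single spike (impulse noise) is suppressed by the filter.
clean = median_filter3([0.1, 0.2, 8.0, 0.3, 0.2])
```

In the toy signal, the isolated value 8.0 is replaced by a neighbouring value, which is the behaviour that makes a short median filter suitable for impulse noise.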

3.2 Feature Extraction

Data was collected with a sampling rate of 30 Hz for all sensors. The data is segmented using a sliding window approach without inter-window gaps, and a specific set of features is extracted from each segment. Four different window sizes were used: 1.5, 2, 2.5 and 3 seconds.
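As an illustration of the segmentation step, the sketch below converts the four window durations into sample counts at 30 Hz and cuts a recording into windows. The helper names are hypothetical, and using contiguous, non-overlapping windows by default is an assumption; the text only states that there are no gaps between windows.

```python
SAMPLE_RATE_HZ = 30  # sampling rate stated in the text

def window_length(seconds, rate=SAMPLE_RATE_HZ):
    """Number of samples in a window of the given duration."""
    return int(round(seconds * rate))

def sliding_windows(samples, seconds, rate=SAMPLE_RATE_HZ, step=None):
    """Split a sequence of samples into fixed-size windows.

    With step=None the windows are contiguous and non-overlapping
    (an assumption; a smaller step would produce overlapping windows).
    A trailing partial window is dropped.
    """
    size = window_length(seconds, rate)
    step = size if step is None else step
    return [samples[start:start + size]
            for start in range(0, len(samples) - size + 1, step)]

# Window lengths in samples for the four durations used in the text.
lengths = {w: window_length(w) for w in (1.5, 2.0, 2.5, 3.0)}

# Ten seconds of dummy data split into 2-second windows.
windows = sliding_windows(list(range(300)), 2.0)
```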

A feature vector was calculated on each sensor data segment in two domains, namely the time and the frequency domain. Mean, minimum, maximum, range, standard deviation, and root mean square were calculated as time domain features. To calculate features in the frequency domain, we first transformed the signal to the frequency domain using the Fast Fourier Transform (Cooley et al., 1969). The dominant and the second dominant frequencies were extracted from the transformed signal.

For each feature, four values are calculated: one for each axis $A_x$, $A_y$, $A_z$, and a fourth magnitude component calculated as $\sqrt{x^2 + y^2 + z^2}$. These features were extracted for each sensor and for each device. Thus, we used a feature vector of 192 values to define the feature space of exercises (2 device types × 4 components × 8 features × 3 sensors). Each window of the sliding-window segmentation corresponds to one feature vector, which describes one repetition of the exercise. For deep learning, data from the segmented windows were passed as input without any feature extraction.
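The feature layout described in this section can be sketched in a few lines. This is an illustrative reconstruction under stated assumptions, not the authors' code: the function names are hypothetical, a direct discrete Fourier transform is used in place of an FFT routine so that the example needs only the standard library (it yields the same spectrum), the DC component is ignored when picking dominant frequencies, and the population standard deviation is used.

```python
import cmath
import math
from statistics import mean, pstdev

def dominant_frequencies(signal, rate):
    """Return the two frequencies (Hz) with the largest DFT magnitude,
    ignoring the DC component (an assumption of this sketch)."""
    n = len(signal)
    magnitudes = []
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        magnitudes.append((abs(coeff), k * rate / n))
    magnitudes.sort(reverse=True)
    return magnitudes[0][1], magnitudes[1][1]

def component_features(signal, rate=30):
    """Eight features for one signal component: six time-domain
    and two frequency-domain values."""
    lo, hi = min(signal), max(signal)
    rms = math.sqrt(sum(x * x for x in signal) / len(signal))
    f1, f2 = dominant_frequencies(signal, rate)
    return [mean(signal), lo, hi, hi - lo, pstdev(signal), rms, f1, f2]

def sensor_features(xs, ys, zs, rate=30):
    """Features for the x, y, z axes plus the magnitude component."""
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(xs, ys, zs)]
    features = []
    for component in (xs, ys, zs, mags):
        features.extend(component_features(component, rate))
    return features  # 4 components x 8 features = 32 values

# Synthetic 2-second window at 30 Hz (made-up signals for illustration).
t = [i / 30 for i in range(60)]
xs = [math.sin(2 * math.pi * 3 * ti) for ti in t]        # 3 Hz motion
ys = [0.5 * math.cos(2 * math.pi * 1 * ti) for ti in t]  # 1 Hz motion
zs = [9.81] * 60                                         # constant offset
vec = sensor_features(xs, ys, zs)

# 2 devices x 3 sensors x 32 values per sensor gives the full vector size.
full_vector_size = 2 * 3 * len(vec)
```

Concatenating the 32 values of every sensor on both devices reproduces the 192-dimensional vector stated above.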

4 EXPERIMENTAL SETUP

The conducted experiments focused on collecting activity data from sport equipment at the Unifit gym located at the Technical University Kaiserslautern. The data was used to evaluate the performance of the system with two kinds of models: an impersonal (user-independent) model and a hybrid personalized model.

4.1 Devices

We used a Samsung Galaxy phone along with a Samsung Gear Live smartwatch to collect sensor data from participants. A standalone Android application was developed for the wearable and a mobile Android application was developed for the phone. The data was collected at a constant frequency of 30 Hz. The smartphone was placed in a waist band and attached to the participant’s waist, aligned to the right side. The smartwatch was worn on the left hand. This arrangement provides data with information about hand movements and lower body movements. Sensors in the devices record different aspects of the movement, such as acceleration, magnetic field, rate of turn and orientation of the sensor frame with respect to the earth.

4.2 Participants

The data was collected from 23 participants: 20 male and three female. The dataset consists of data from participants with novice, intermediate and expert levels of exercise experience. Each exercise was performed in two to three sets with 10 to 15 repetitions per set. Table 1 shows the demographics of the participants.

Table 1: Demographics of participants in the experiment.

| Attribute | Novice | Intermediate | Expert |
|---|---|---|---|
| Age (years) | 23-28 | 22-29 | 26-30 |
| Height (cm) | 168-184 | 166-199 | 166-175 |
| Weight (kg) | 67-83 | 62-95 | 65-69 |

4.3 Activities

The most common gym exercises, as identified by the fitness trainers working in the Unifit gym, were chosen for this research work. Table 2 shows the performed exercises, the number of participants for each exercise and the total number of sets. These exercises involve movements of different combinations of body parts and were performed with the sport equipment located in the Unifit gym.

Table 2: Experiment participation.

| Exercise | Participants | Total sets |
|---|---|---|
| Abdominal Exercise | 19 | 38 |
| Back Extension | 18 | 38 |
| Chest Press | 19 | 38 |
| Fly | 20 | 44 |
| Lat Pull | 16 | 30 |
| Overhead Press | 19 | 41 |
| Pull Down | 13 | 25 |
| Rear Delt | 18 | 38 |
| Rotary Torso | 17 | 36 |

5 DATASET

The conducted experiments resulted in a dataset containing sensor readings of 23 participants for nine common gym exercises. The total recording of 211.57 minutes contains 328 exercise sets, each with 10 to 15 repetitions. The data was collected in the form of CSV files, which contain the values along the x, y and z axes for each sensor along with the timestamps. For each activity, the data was recorded in six CSV files: one per sensor, with three sensors for each of the two devices. Each CSV file contains additional information about the participant such as height, weight, age and gender. This personal information is useful for building hybrid personalized models. The CSV file name follows the format ’RandomID ExeciseName DateTime Device Sensor’ and gives information about the device and sensor type.

6 EVALUATION

To evaluate the classification performance of different machine learning algorithms on our dataset, three different evaluation approaches were used: participant separation and k-fold cross validation for impersonal models, as well as hybrid personalized models. The classification performance was evaluated for four of the most common traditional machine learning algorithms: k-nearest neighbor with k=2 and k=5, support vector machine with linear and polynomial kernels, Naive Bayes with Gaussian and Bernoulli probabilities, and decision tree.
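Since the results in this section are reported as f-measure scores alongside confusion matrices, the following sketch shows how per-class precision, recall and F1 can be derived from a confusion matrix. It is an illustration under assumptions: the matrix orientation (rows hold the true class, columns the predicted class) and the use of an unweighted macro average are choices made here, not details confirmed by the text. The numbers are made up.

```python
def per_class_scores(confusion):
    """Precision, recall and F1 per class from a square confusion matrix.

    Assumes confusion[i][j] counts samples of true class i predicted as
    class j. The F1 value is the same if the matrix is transposed.
    """
    n = len(confusion)
    scores = []
    for c in range(n):
        tp = confusion[c][c]
        actual = sum(confusion[c])                          # row sum
        predicted = sum(confusion[r][c] for r in range(n))  # column sum
        recall = tp / actual if actual else 0.0
        precision = tp / predicted if predicted else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores.append((precision, recall, f1))
    return scores

def macro_f1(confusion):
    """Unweighted mean of the per-class F1 scores."""
    scores = per_class_scores(confusion)
    return sum(f1 for _, _, f1 in scores) / len(scores)

# Toy three-class matrix (made-up numbers, not taken from the dataset).
toy = [[8, 2, 0],
       [1, 7, 2],
       [0, 1, 9]]
scores = per_class_scores(toy)
overall = macro_f1(toy)
```

Because F1 is symmetric in precision and recall, the f-measure does not depend on which axis of the matrix holds the true labels; only the precision and recall labels swap.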

6.1 Participant Separation

For the evaluation of the impersonal model, data of 19 participants were used for training and data of four participants were used as test data. Table 3 shows the classification results as f-measure scores for the different machine learning algorithms. Linear SVM, Naive Bayes with Gaussian probability and decision tree performed best with window sizes of 2.0 and 2.5 seconds. The maximum recognition score of 80 % was achieved by the decision tree and Naive Bayes classifiers. Table 4 shows the confusion matrix for the decision tree with a window size of 2.5 seconds.

Table 3: F-measure (in %) for different window sizes.

| Models | W=1.5 s | W=2.0 s | W=2.5 s | W=3.0 s |
|---|---|---|---|---|
| KNN (K=2) | 61 | 62 | 63 | 63 |
| KNN (K=5) | 61 | 62 | 63 | 63 |
| Linear SVM | 77 | 77 | 79 | 76 |
| SVM Polynomial | 69 | 68 | 67 | 67 |
| Naive Bayes Gaussian | 77 | 79 | 80 | 80 |
| Naive Bayes Bernoulli | 33 | 34 | 36 | 37 |
| Decision tree | 75 | 80 | 78 | 80 |

Table 4: Decision tree with window size 2.5 seconds (Participant separation).

| Activity | Abdominal Exercise | Back Extension | Chest Press | Fly | Lat Pull | Overhead Press | Pull Down | Rear Delt | Rotary Torso | Recall |
|---|---|---|---|---|---|---|---|---|---|---|
| Abdominal Exercise | 10291 | 447 | 294 | 4 | 53 | 35 | 0 | 9 | 228 | 0.97 |
| Back Extension | 111 | 17404 | 22 | 40 | 194 | 17 | 1 | 15 | 905 | 0.95 |
| Chest Press | 98 | 73 | 10037 | 127 | 118 | 285 | 126 | 150 | 553 | 0.89 |
| Fly | 11 | 0 | 0 | 8630 | 0 | 223 | 0 | 68 | 2251 | 0.89 |
| Lat Pull | 3 | 1 | 528 | 51 | 2631 | 1255 | 959 | 31 | 240 | 0.31 |
| Overhead Press | 1 | 0 | 269 | 45 | 1162 | 8540 | 574 | 12 | 99 | 0.70 |
| Pull Down | 15 | 82 | 68 | 37 | 4146 | 1758 | 3831 | 12 | 166 | 0.67 |
| Rear Delt | 0 | 0 | 43 | 552 | 58 | 4 | 249 | 14206 | 0 | 0.98 |
| Rotary Torso | 110 | 345 | 0 | 196 | 8 | 1 | 14 | 67 | 16981 | 0.79 |
| Precision | 0.91 | 0.93 | 0.87 | 0.77 | 0.46 | 0.80 | 0.38 | 0.94 | 0.96 | |
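The participant separation used for the impersonal model can be illustrated with a short sketch. The essential property is that all windows of a held-out participant go to the test set, so no participant contributes to both sets. How the four test participants were selected is not stated in the text; the random choice below, the function name and the tuple layout are assumptions made for illustration.

```python
import random

def split_by_participant(samples, n_test=4, seed=0):
    """Hold out all windows of n_test randomly chosen participants.

    `samples` is a list of (participant_id, features, label) tuples.
    No participant appears in both the training and the test set.
    """
    participants = sorted({pid for pid, _, _ in samples})
    rng = random.Random(seed)
    test_ids = set(rng.sample(participants, n_test))
    train = [s for s in samples if s[0] not in test_ids]
    test = [s for s in samples if s[0] in test_ids]
    return train, test, test_ids

# Dummy data: 23 participants with three windows each.
data = [(pid, [0.0], "Fly") for pid in range(23) for _ in range(3)]
train, test, test_ids = split_by_participant(data)
```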

6.2 Cross Validation

To further evaluate the performance of the impersonal model, leave-one-out cross validation was applied. The number of folds was chosen according to the number of participants and the average number of sets per exercise (Baumbach and Dengel, 2017). As the dataset contains data from 23 participants and each exercise was performed in two sets on average, we used 46-fold cross validation. Table 5 shows the f-measure of the different machine learning algorithms for cross validation. As for participant separation, linear SVM and decision tree performed best, with a maximum f-measure score of 80 %. Table 6 shows the final confusion matrix for linear SVM as the average of the classification results from 26 iterations.

Table 5: F-measure (in %) for different window sizes for 46-fold cross validation.

| Models | W=1.5 s | W=2.0 s | W=2.5 s | W=3.0 s |
|---|---|---|---|---|
| KNN (K=2) | 60 | 68 | 60 | 68 |
| KNN (K=5) | 60 | 68 | 60 | 68 |
| Linear SVM | 80 | 80 | 79 | 79 |
| SVM Polynomial | 68 | 74 | 68 | 75 |
| Naive Bayes Gaussian | 75 | 72 | 74 | 74 |
| Naive Bayes Bernoulli | 35 | 30 | 35 | 32 |
| Decision tree | 78 | 79 | 77 | 79 |

Table 6: Linear SVM with window size 2.0 seconds (46-fold cross validation).

| Activity | Abdominal Exercise | Back Extension | Chest Press | Fly | Lat Pull | Overhead Press | Pull Down | Rear Delt | Rotary Torso | Recall |
|---|---|---|---|---|---|---|---|---|---|---|
| Abdominal Exercise | 727 | 17 | 17 | 5 | 1 | 2 | 1 | 0 | 34 | 0.94 |
| Back Extension | 3 | 835 | 2 | 25 | 4 | 5 | 10 | 1 | 8 | 0.94 |
| Chest Press | 6 | 2 | 701 | 7 | 6 | 41 | 8 | 3 | 15 | 0.83 |
| Fly | 11 | 18 | 18 | 554 | 34 | 12 | 0 | 129 | 60 | 0.64 |
| Lat Pull | 6 | 7 | 1 | 17 | 407 | 21 | 65 | 40 | 9 | 0.67 |
| Overhead Press | 3 | 4 | 42 | 11 | 22 | 574 | 39 | 0 | 7 | 0.84 |
| Pull Down | 1 | 0 | 34 | 12 | 112 | 25 | 307 | 0 | 6 | 0.70 |
| Rear Delt | 0 | 1 | 12 | 149 | 14 | 1 | 1 | 638 | 3 | 0.78 |
| Rotary Torso | 14 | 5 | 14 | 88 | 9 | 5 | 8 | 12 | 1489 | 0.91 |
| Precision | 0.9 | 0.94 | 0.89 | 0.66 | 0.71 | 0.82 | 0.62 | 0.78 | 0.91 | |
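One way to read the 46-fold setup is as one fold per participant and set (23 participants × 2 sets). The sketch below implements that reading; it is an interpretation, not a confirmed description of the authors' procedure, and the function name and tuple layout are hypothetical.

```python
def leave_one_set_out(samples):
    """Yield (train, test) pairs, one per (participant, set) group.

    `samples` is a list of (participant_id, set_id, features, label).
    """
    groups = sorted({(pid, sid) for pid, sid, _, _ in samples})
    for held_out in groups:
        train = [s for s in samples if (s[0], s[1]) != held_out]
        test = [s for s in samples if (s[0], s[1]) == held_out]
        yield train, test

# Dummy data: 23 participants x 2 sets x 5 windows each.
data = [(pid, sid, [0.0], "Lat Pull")
        for pid in range(23) for sid in range(2) for _ in range(5)]
folds = list(leave_one_set_out(data))
```

Note that under this reading the other set of the same participant remains in the training data, which differs from the participant separation in Section 6.1.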

6.3 Personalized Models

In the work of Baumbach and Dengel (Baumbach and

Dengel, 2017), the qualitative analysis of pushup ex-

ercise showed that personalized models improves the

classification accuracy. To assess the performance of

a personalized models, we utilize a hybrid personal

model with a two phase process. In the first phase,

the learning models (M) were trained on data from 22

ICAART 2018 - 10th International Conference on Agents and Artificial Intelligence

442

participants and tested for one test participant (T). In

the second phase, a subset of data of the test partic-

ipant is used to train the models again. The data of

the test participant (T), was divided into two sets T1

and T2. The learning models (M) were again trained

using T1 and these newly trained models were tested

on data set T2. Table 7 shows the result of normal

and personalized models for window size 2.0 and 2.5

seconds. Results shows a significant increase in the

performance of all machine learning algorithms when

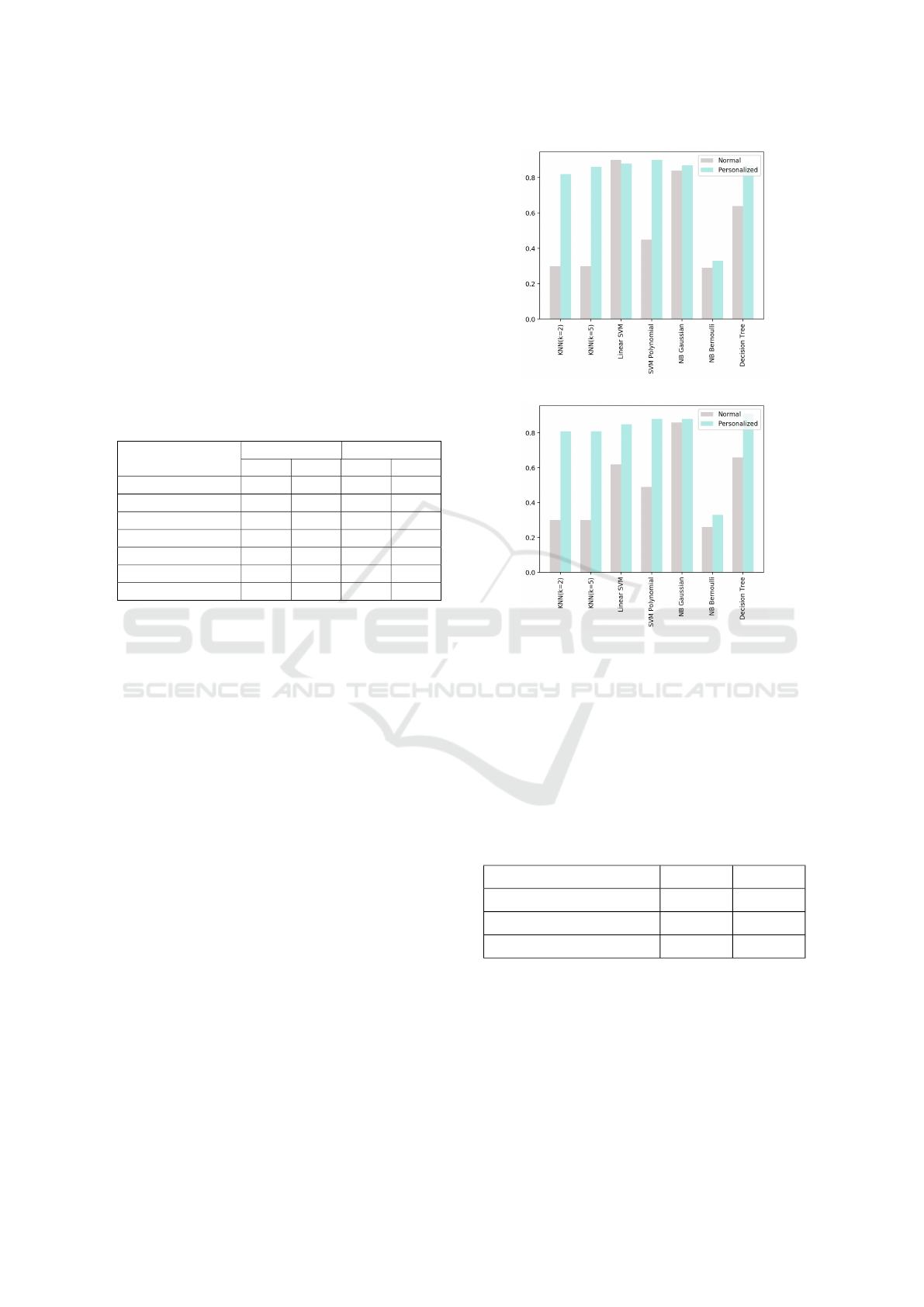

personalized models were used. Figure 1 shows the

comparison between normal and personalized model

in the form of bar charts.

Table 7: F-measure (in %) for different window sizes for the hybrid personalized model.

| Models | Normal, w=2.0 s | Normal, w=2.5 s | Personalized, w=2.0 s | Personalized, w=2.5 s |
|---|---|---|---|---|
| KNN (K=2) | 30 | 30 | 82 | 81 |
| KNN (K=5) | 30 | 30 | 86 | 81 |
| Linear SVM | 90 | 62 | 88 | 85 |
| SVM Polynomial | 45 | 49 | 90 | 88 |
| Naive Bayes Gaussian | 84 | 86 | 87 | 88 |
| Naive Bayes Bernoulli | 29 | 26 | 33 | 33 |
| Decision tree | 64 | 66 | 87 | 91 |
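The two-phase procedure for the hybrid personalized model can be sketched as below. Several points are assumptions made for illustration: a nearest-centroid classifier stands in for the learning models (M), retraining in the second phase is done on the other participants' data combined with T1 (the text only says the models were trained again using T1), and both models are scored on T2. The data is a made-up one-dimensional toy example.

```python
def centroid_fit(samples):
    """Train a nearest-centroid classifier (a stand-in for any model M).
    `samples` is a list of (features, label) pairs."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc]
            for label, acc in sums.items()}

def centroid_predict(model, features):
    """Return the label whose centroid is closest to `features`."""
    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: dist(model[label]))

def accuracy(model, samples):
    """Fraction of samples whose predicted label matches the true one."""
    hits = sum(centroid_predict(model, f) == y for f, y in samples)
    return hits / len(samples)

def personalization_experiment(others, t1, t2):
    """Phase 1: train on the other participants only.
    Phase 2: retrain with the test participant's subset T1 added.
    Both models are scored on the held-out subset T2."""
    normal = centroid_fit(others)
    personalized = centroid_fit(others + t1)
    return accuracy(normal, t2), accuracy(personalized, t2)

# Toy data: the test participant performs "Fly" differently from the others.
others = [([0.0], "Fly"), ([10.0], "Chest Press")]
t1 = [([6.0], "Fly")] * 3 + [([12.0], "Chest Press")] * 3
t2 = [([6.0], "Fly"), ([12.0], "Chest Press")]
normal_acc, personal_acc = personalization_experiment(others, t1, t2)
```

In the toy example the impersonal model misclassifies the test participant's atypical "Fly" windows, while the retrained model adapts to them, which mirrors the direction of the effect reported in Table 7.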

7 DER - DEEP EXERCISE RECOGNIZER

(Hammerla et al., 2016) showed a significant im-

provement of the classification accuracy for activity

recognition when deep learning algorithms were ap-

plied. This research work evaluated different deep

learning approaches such as Deep feed-forward net-

works, Convolutional networks and Recurrent net-

works using LSTM on three different datasets (Reiss

and Stricker, 2012; Chavarriaga et al., 2013; Bulling

et al., 2014). Neural networks with LSTM and CNN

outperformed in most of the cases. Our dataset con-

tains data in the form of time series, where the body

movement recorded at previous time stamps effects

the next time series value and thus, contributes to

the overall recognition accuracy. Using LSTM, the

network can exploit these temporal dependencies.

With these circumstances in mind, we developed a

deep neural network architecture using LSTM cells.

The deep neural network for our exercise recognition

(DER) consists of three hidden layers. Each hidden

layer consists of 150 LSTM cells. Dropout regulariza-

tion was used after each layer to prevent overfitting.

This deep neural architecture was again evaluated us-

ing participant separation, k-fold cross validation and

(a) w = 2.0 Seconds.

(b) w = 2.5 Seconds.

Figure 1: Comparison of Normal and Personalized Models.

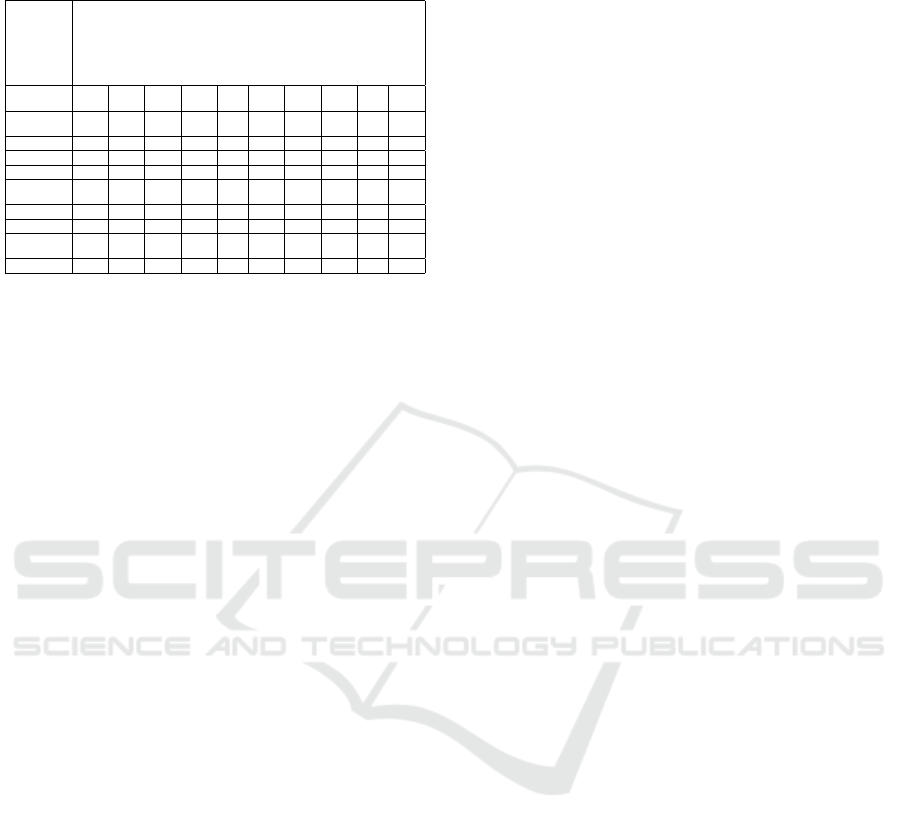

personalized models. Table 8 shows the result of the

classification for our proposed approach. The maxi-

mum score for f-measure achieved by traditional ma-

chine learning algorithm was 80% while the deep net-

work increases the classification performance by 12%

with maximum accuracy of 92%. Figure 9 shows the

confusion matrix for window size 2.5 Seconds.

Table 8: Results (in %) for the deep neural network for classification.

| Evaluation Method | W=2.0 s | W=2.5 s |
|---|---|---|
| Participant Separation | 91 | 92 |
| 46-Fold Cross Validation | 91 | 91 |
| Personalized Models | 81 | 82 |
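To give a sense of the size of the DER architecture (three hidden layers of 150 LSTM cells), the sketch below counts trainable parameters with the standard LSTM formula. The input layout is an assumption: 18 channels (2 devices × 3 sensors × 3 axes) and a dense softmax output layer over the nine exercises are not stated in the text, and the count uses one bias vector per gate, whereas some frameworks use two and therefore report slightly larger numbers.

```python
def lstm_layer_params(input_size, hidden_size):
    """Trainable weights of one LSTM layer: four gates, each with input
    weights, recurrent weights and one bias vector."""
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)

def der_param_count(n_channels, n_classes, hidden_size=150, n_layers=3):
    """Parameter count for stacked LSTM layers plus a dense softmax
    output layer (the output layer is an assumption of this sketch)."""
    total, input_size = 0, n_channels
    for _ in range(n_layers):
        total += lstm_layer_params(input_size, hidden_size)
        input_size = hidden_size  # deeper layers read the previous layer
    return total + hidden_size * n_classes + n_classes

# Assumed input: 2 devices x 3 sensors x 3 axes = 18 channels,
# 2.5 s windows at 30 Hz = 75 time steps, 9 exercise classes.
time_steps = int(2.5 * 30)
n_params = der_param_count(n_channels=18, n_classes=9)
```

Under these assumptions each raw input window has 75 time steps, and the network has roughly 0.46 million parameters, most of them in the second and third LSTM layers.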

Table 9: Deep Exercise Recognizer with LSTM (window size = 2.5 seconds).

| Activity | Abdominal Exercise | Back Extension | Chest Press | Fly | Lat Pull | Overhead Press | Pull Down | Rear Delt | Rotary Torso | Recall |
|---|---|---|---|---|---|---|---|---|---|---|
| Abdominal Exercise | 10935 | 45 | 85 | 700 | 4 | 70 | 312 | 439 | 0 | 0.91 |
| Back Extension | 293 | 12716 | 48 | 118 | 103 | 19 | 51 | 298 | 22 | 0.95 |
| Chest Press | 61 | 49 | 12121 | 14 | 9 | 41 | 2 | 128 | 2 | 0.96 |
| Fly | 135 | 43 | 0 | 11802 | 59 | 26 | 50 | 881 | 0 | 0.78 |
| Lat Pull | 0 | 46 | 5 | 116 | 7780 | 253 | 66 | 82 | 651 | 0.89 |
| Overhead Press | 7 | 9 | 178 | 13 | 56 | 10379 | 0 | 2 | 19 | 0.94 |
| Pull Down | 325 | 303 | 31 | 601 | 37 | 0 | 10893 | 220 | 0 | 0.96 |
| Rear Delt | 310 | 116 | 71 | 1683 | 21 | 40 | 26 | 24867 | 32 | 0.92 |
| Rotary Torso | 0 | 24 | 51 | 96 | 636 | 176 | 0 | 62 | 6416 | 0.90 |
| Precision | 0.87 | 0.93 | 0.98 | 0.91 | 0.86 | 0.97 | 0.88 | 0.92 | 0.86 | |

8 CONCLUSION & FUTURE WORK

In this paper, activity recognition for sport equipment in modern gyms was assessed by applying different machine learning algorithms and deep learning models. The results showed that learning approaches can recognize different exercise types such as pull down or chest press. Among the machine learning models, decision tree, linear SVM and Naive Bayes with Gaussian kernel perform best with a maximum accuracy of 80 percent. Furthermore, we proposed a deep neural network for our exercise recognition (DER) consisting of three hidden layers, each with 150 LSTM cells. DER outperformed traditional machine learning techniques with a maximum accuracy of 92 percent. Additionally, we made the dataset collected for our evaluation publicly available in order to support and encourage further research.

The main drawback is the confusion between exercises for the same body part, namely fly and rear delt as well as lat pull, overhead press, and pull down. Since mainly exercises for the same body part are affected, other sensors providing more information could help the recognition system differentiate between these exercises.

Most important is conducting larger experiments in order to perform a more robust evaluation. This includes experiments with not only more people, but also more women and different athletic levels (professional and non-professional participants). This work could be further extended by incorporating more sensors (e.g., a heart rate sensor) or by examining the effects of changes to the location of sensors on the exerciser’s body. In the same way, participant-specific attributes, such as height, weight, age, or gender, can be fed into the models in order to assess whether this kind of physical information per participant leads to a higher recognition accuracy.

REFERENCES

Alsheikh, M. A., Selim, A., Niyato, D., Doyle, L., Lin, S.,

and Tan, H.-P. (2016). Deep activity recognition mod-

els with triaxial accelerometers. In Workshops at the

Thirtieth AAAI Conference on Artificial Intelligence.

Bao, L. and Intille, S. S. (2004). Activity recognition from

user-annotated acceleration data. In Pervasive com-

puting, pages 1–17. Springer.

Baumbach, S. and Dengel, A. (2017). Measuring the perfor-

mance of push-ups - qualitative sport activity recogni-

tion. In Proceedings of the 9th International Confer-

ence on Agents and Artificial Intelligence, pages 374–

381. INSTICC, ScitePress.

Blair, S. N. (2009). Physical inactivity: the biggest public

health problem of the 21st century. British journal of

sports medicine, 43(1):1–2.

Bulling, A., Blanke, U., and Schiele, B. (2014). A tuto-

rial on human activity recognition using body-worn

inertial sensors. ACM Computing Surveys (CSUR),

46(3):33.

Chavarriaga, R., Sagha, H., Calatroni, A., Digumarti, S. T., Tröster, G., Millán, J. d. R., and Roggen, D. (2013). The opportunity challenge: A benchmark database for on-body sensor-based activity recognition. Pattern Recognition Letters, 34(15):2033–2042.

Chen, L. Y., Tee, B. C.-K., Chortos, A. L., Schwartz, G.,

Tse, V., Lipomi, D. J., Wong, H.-S. P., McConnell,

M. V., and Bao, Z. (2014). Continuous wireless pres-

sure monitoring and mapping with ultra-small passive

sensors for health monitoring and critical care. Nature

communications, 5.

Cooley, J. W., Lewis, P. A., and Welch, P. D. (1969).

The fast fourier transform and its applications. IEEE

Transactions on Education, 12(1):27–34.

de Zambotti, M., Baker, F. C., and Colrain, I. M.

(2015). Validation of sleep-tracking technology com-

pared with polysomnography in adolescents. Sleep,

38(9):1461–1468.

Foerster, F., Smeja, M., and Fahrenberg, J. (1999). Detec-

tion of posture and motion by accelerometry: a vali-

dation study in ambulatory monitoring. Computers in

Human Behavior, 15(5):571–583.

Garcia-Ceja, E. and Brena, R. (2015). Building personal-

ized activity recognition models with scarce labeled

data based on class similarities. In International Con-

ference on Ubiquitous Computing and Ambient Intel-

ligence, pages 265–276. Springer.

Hammerla, N. Y., Halloran, S., and Ploetz, T. (2016). Deep,

convolutional, and recurrent models for human ac-

tivity recognition using wearables. arXiv preprint

arXiv:1604.08880.

Kao, T.-P., Lin, C.-W., and Wang, J.-S. (2009). Develop-

ment of a portable activity detector for daily activ-

ity recognition. In Industrial Electronics, 2009. ISIE

2009. IEEE International Symposium on, pages 115–

120. IEEE.

Lara, O. D. and Labrador, M. A. (2013). A survey on human

activity recognition using wearable sensors. IEEE

Communications Surveys and Tutorials, 15(3):1192–

1209.

Lee, M. (2009). Physical activity recognition using a single

tri-axis accelerometer. In Proceedings of the world

congress on engineering and computer science, vol-

ume 1.

Lester, J., Choudhury, T., and Borriello, G. (2006). A prac-

tical approach to recognizing physical activities. In

Pervasive Computing, pages 1–16. Springer.

Long, X., Yin, B., and Aarts, R. M. (2009). Single-

accelerometer-based daily physical activity classifica-

tion. In Engineering in Medicine and Biology Society,

2009. EMBC 2009. Annual International Conference

of the IEEE, pages 6107–6110. IEEE.

O’Donovan, G., Blazevich, A. J., Boreham, C., Cooper,

A. R., Crank, H., Ekelund, U., Fox, K. R., Gately,

P., Giles-Corti, B., Gill, J. M., et al. (2010). The ABC

of physical activity for health: a consensus statement

from the British Association of Sport and Exercise Sciences.

Journal of Sports Sciences, 28(6):573–591.

Oniga, S. and Suto, J. (2016). Activity recognition in adap-

tive assistive systems using artificial neural networks.

Elektronika ir Elektrotechnika, 22(1):68–72.

Ordóñez, F. J. and Roggen, D. (2016). Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors, 16(1):115.

Parkka, J., Ermes, M., Korpipaa, P., Mantyjarvi, J., Peltola,

J., and Korhonen, I. (2006). Activity classification us-

ing realistic data from wearable sensors. IEEE Trans-

actions on information technology in biomedicine,

10(1):119–128.

Perše, M., Kristan, M., Kovačič, S., Vučkovič, G., and Perš, J. (2009). A trajectory-based analysis of coordinated team activity in a basketball game. Computer Vision and Image Understanding, 113(5):612–621.

Plötz, T., Hammerla, N. Y., and Olivier, P. L. (2011). Feature learning for activity recognition in ubiquitous computing. In Twenty-Second International Joint Conference on Artificial Intelligence.

Polana, R. and Nelson, R. (1994). Low level recognition of

human motion (or how to get your man without find-

ing his body parts). In Motion of Non-Rigid and Artic-

ulated Objects, 1994., Proceedings of the 1994 IEEE

Workshop on, pages 77–82. IEEE.

Ravi, N., Dandekar, N., Mysore, P., and Littman, M. L.

(2005). Activity recognition from accelerometer data.

In AAAI, volume 5, pages 1541–1546.

Reiss, A. and Stricker, D. (2012). Introducing a new bench-

marked dataset for activity monitoring. In Wearable

Computers (ISWC), 2012 16th International Sympo-

sium on, pages 108–109. IEEE.

Roberts, S. (1996). The business of personal training. Hu-

man Kinetics.

Ronao, C. A. and Cho, S.-B. (2015). Evaluation of deep

convolutional neural network architectures for human

activity recognition with smartphone sensors. In Proc.

of the KIISE Korea Computer Congress, pages 858–

860.

Sefen, B., Baumbach, S., Dengel, A., and Abdennadher, S.

(2016). Human activity recognition - using sensor data

of smartphones and smartwatches. In Proceedings of

the 8th International Conference on Agents and Artifi-

cial Intelligence. International Conference on Agents

and Artificial Intelligence (ICAART-2016), volume 2,

pages 488–493. SCITEPRESS.

Shoaib, M., Scholten, H., and Havinga, P. J. (2013). To-

wards physical activity recognition using smartphone

sensors. In Ubiquitous Intelligence and Computing,

2013 IEEE 10th International Conference on and 10th

International Conference on Autonomic and Trusted

Computing (UIC/ATC), pages 80–87. IEEE.

Subramanya, A., Raj, A., Bilmes, J. A., and Fox, D. (2012).

Recognizing activities and spatial context using wear-

able sensors. arXiv preprint arXiv:1206.6869.

Tapia, E. M., Intille, S. S., Haskell, W., Larson, K., Wright,

J., King, A., and Friedman, R. (2007). Real-time

recognition of physical activities and their intensities

using wireless accelerometers and a heart rate moni-

tor. In Wearable Computers, 2007 11th IEEE Interna-

tional Symposium on, pages 37–40. IEEE.

Thiemjarus, S. (2010). A device-orientation independent

method for activity recognition. In Body Sensor

Networks (BSN), 2010 International Conference on,

pages 19–23. IEEE.

Velloso, E., Bulling, A., Gellersen, H., Ugulino, W., and

Fuks, H. (2013). Qualitative activity recognition of

weight lifting exercises. In Proceedings of the 4th

Augmented Human International Conference, pages

116–123. ACM.

Wang, L. (2016). Recognition of human activities using

continuous autoencoders with wearable sensors. Sen-

sors, 16(2):189.

Wang, W.-z., Guo, Y.-w., Huang, B.-Y., Zhao, G.-r., Liu, B.-

q., and Wang, L. (2011). Analysis of filtering methods

for 3D acceleration signals in body sensor network. In

Bioelectronics and Bioinformatics (ISBB), 2011 Inter-

national Symposium on, pages 263–266. IEEE.

Weiss, G. M. and Lockhart, J. W. (2012). The impact of

personalization on smartphone-based activity recog-

nition. In AAAI Workshop on Activity Context Repre-

sentation: Techniques and Languages, pages 98–104.

Yang, J. (2009). Toward physical activity diary: motion

recognition using simple acceleration features with

mobile phones. In Proceedings of the 1st interna-

tional workshop on Interactive multimedia for con-

sumer electronics, pages 1–10. ACM.

Yang, J., Nguyen, M. N., San, P. P., Li, X. L., and Krish-

naswamy, S. (2015). Deep convolutional neural net-

works on multichannel time series for human activ-

ity recognition. In Twenty-Fourth International Joint

Conference on Artificial Intelligence.

Zeng, M., Nguyen, L. T., Yu, B., Mengshoel, O. J., Zhu,

J., Wu, P., and Zhang, J. (2014). Convolutional neu-

ral networks for human activity recognition using mo-

bile sensors. In Mobile Computing, Applications and

Services (MobiCASE), 2014 6th International Confer-

ence on, pages 197–205. IEEE.
