Wrinkles Individuality Preserving Aged Texture Generation using Multiple Expression Images

Pavel A. Savkin¹, Tsukasa Fukusato², Takuya Kato¹ and Shigeo Morishima¹

¹ Waseda University, Tokyo, Japan

² The University of Tokyo, Tokyo, Japan

Keywords:

Texture Synthesis, Facial Aging, Aged Wrinkles, Facial Individuality.

Abstract:

Aging of a human face is accompanied by visible changes such as sagging, spots, somberness, and wrinkles. Age progression techniques that estimate an aged facial image are required for long-term criminal and missing person investigations, as well as for 3DCG facial animation. This paper focuses on aged facial texture and introduces a novel age progression method based on medical knowledge, which preserves the individuality of the shapes and positions of aged wrinkles. The effectiveness of including expression wrinkles in aged facial image synthesis is confirmed through subjective evaluation.

1 INTRODUCTION

Facial aging is widely studied in the computer vision field. Age classification of input faces has been particularly well studied, and various methods have been proposed (Shu et al., 2016).

One of the well-known applications of facial age progression is criminal investigation. High-quality aged facial images would help camera-based authentication systems find such criminals or missing persons. Facial images can be aged manually by specialized artists with medical knowledge (age, 2011), but aging a facial image requires high-level skills, and creating photorealistic aged facial images of every criminal and missing person worldwide is impractical. Therefore, creating aged facial images without special skills has been widely researched. Since it is recognized in the facial authentication field that authentication accuracy improves when skin texture is also considered [9], these age progression methods can be improved by providing additional individuality features such as wrinkles, spots, luster, and somberness.

Aging features undergo two major types of visible

changes: surface skin changes such as spots, somber-

ness, and wrinkles (Farage et al., 2008), and facial

shape changes under sagging or gravity (Coleman and

Grover, 2006). Facial wrinkles are among the most

significant changes, especially in older people. Wrin-

kling is caused by internal factors (reduction in skin

elasticity due to repetitive movements of facial mus-

cles) and external factors (smoking and irradiation by

direct sunlight) (Farage et al., 2008)(Pi

´

erard et al.,

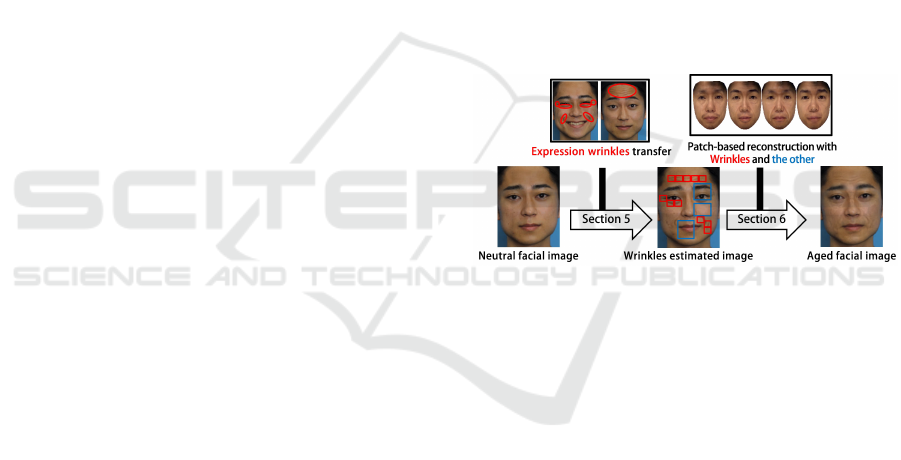

Figure 1: Workflow of our proposed method. First, by in-

spiring to the medical knowledge, expression wrinkles are

transferred to provide a guideline for the aged face synthe-

sis. Then, a patch-based synthesis approach is conducted

to generate a wrinkles shape- and position-preserving aged

facial image.

2003). Especially, internal factors of a single indi-

vidual are invariant with age. Therefore, it is safe to

say that wrinkling is one of the most important, im-

mutable, and predictable changes in aging.

This paper focuses on aged facial texture and proposes an age progression method based on the medical knowledge (Piérard et al., 2003) that aged wrinkles emerge from the wrinkles appearing in the expressions of younger faces (defined as expression wrinkles). To examine this assumption, we modify a texture synthesis method. Given multiple input facial images, one with a neutral expression (neutral facial image) and others with expression wrinkles (expression facial images), we transfer the expression wrinkles onto the neutral facial image. The transferred wrinkles serve as "guide wrinkles" that indicate where to synthesize aged wrinkles. Using the wrinkle-transferred image and a facial image database of the target age, we represent the age-likeness of wrinkles, spots, and somberness by a patch-based texture reconstruction method. To analyze the effectiveness, we create a new database with standardized lighting, head pose, and resolution that contains only Japanese subjects. Including the expression wrinkles in image synthesis improved the subjective evaluation results on this database, which suggests that the accuracy of other age progression methods can be improved as well.

Savkin, P., Fukusato, T., Kato, T. and Morishima, S.

Wrinkles Individuality Preserving Aged Texture Generation using Multiple Expression Images.

DOI: 10.5220/0006614405490557

In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2018) - Volume 4: VISAPP, pages 549-557

ISBN: 978-989-758-290-5

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

2.1 Linear Combination and Neural Networks

Among the several methods for generating aged facial images (Shu et al., 2016), most adopt linear combination models. Several methods based on active appearance models (AAMs) (Patterson et al., 2006)(Park et al., 2010)(Suo et al., 2010) have been proposed. Among other methods, Scherbaum et al. (Scherbaum et al., 2007) reconstructed an aged face in three dimensions. Kemelmacher et al. (Kemelmacher-Shlizerman et al., 2014) introduced an average face-based method that applies the features of average faces at different ages to the input facial image while accounting for skin color and the lighting environment. Shu et al. (Shu et al., 2015) and Yang et al. (Yang et al., 2016) proposed linear combination-based methods that preserve age-invariant individual features and learn age properties by Hidden Factor Analysis, respectively. Wang et al. (Wang et al., 2016) and Zhang et al. (Zhang et al., 2017) proposed methods based on neural networks, which showed better performance. These latest linear combination and neural network-based methods improve the cross-age face verification rate by considering age-invariant features or by age-evolution-based training. However, individuality can be further improved by considering individual skin features that are age-dependent, such as wrinkles, spots, luster, and somberness. Moreover, generating highly detailed aged facial images remains a common problem for both approaches.

2.2 Texture Synthesis

Maejima et al. (Maejima et al., 2014) proposed an age progression method based on texture synthesis. They synthesized a statistical wrinkle pattern model onto an input facial image and then applied a patch-based reconstruction method called Visio-lization (Mohammed et al., 2009) using a database of the target age. Finally, they synthesized the reconstructed image onto the input facial image, excluding the eyes, nose, and mouth areas to maintain their individuality. Whereas linear combination and neural network methods suffer from poorly defined edges in the generated textures, Maejima et al. employed a reconstruction approach that uses original textures from the database rather than combining them. In this method, also accounting for individuality in the skin texture would achieve better accuracy.

2.3 Our Method

In all of the above methods, the authentication rate and visual plausibility of aged facial images can be improved by considering aging-induced individuality in skin texture. Our main aim is to provide such new features for incorporation into the texture generation of these methods.

Based on medical knowledge and by allowing multiple input images, we assume that the shapes and positions of wrinkles are individually preserved during age progression. To examine this effect correctly in terms of visual plausibility, we consider two things. First, we modify the age progression method of Maejima et al. (Maejima et al., 2014) to obtain fine wrinkles in the aged face. Second, to improve visual plausibility, we construct a completely new database covering various ages, with standardized lighting and head pose and containing only Japanese subjects. Fig. 1 shows the workflow of our proposed method. The shapes and positions of the aged wrinkles are estimated from the neutral and expression facial images. While the image is reconstructed using the target-age database, local features (wrinkles) and global features (cheek luster, etc.) are represented simultaneously by the modified reconstruction method, which changes the reconstruction patch size for each facial area. Finally, the individuality of the eyes, nose, and mouth is retained by Maejima et al.'s approach. Our main contributions are as follows:

• Based on medical knowledge (Piérard et al., 2003), we synthesize an aged facial image containing aged wrinkles that are unique to the input person. To achieve this, we prepare a neutral facial image and multiple expression facial images and estimate the shapes and positions of future wrinkles from the expression facial images.

• We create a new aging database that is standardized for lighting, head pose, and resolution and contains only Japanese subjects. This database will be made publicly available.

VISAPP 2018 - International Conference on Computer Vision Theory and Applications


• We simultaneously synthesize aged wrinkles and other global aging textures by dividing the facial region into expression-wrinkle areas and other areas. While Maejima et al. assumed a constant patch size, we allow variable patch sizes for each facial area.

3 MEDICAL FACTS AND INPUT PREPARATION

Aging-induced changes in facial appearance have been widely researched in the medical field. Aging features have been classified into two types: surface skin changes such as spots, somberness, and wrinkles (Farage et al., 2008), and facial shape changes caused by sagging or gravity (Coleman and Grover, 2006). Consequently, the facial appearance of an individual changes greatly over time. Among the most significant features are facial wrinkles, which occur over the entire face. Because of their distinctive nature and wide distribution, wrinkles have been used for person verification (Batool et al., 2013). Piérard et al. (Piérard et al., 2003) reported that expression changes cause wrinkles: repeatedly contracting the facial muscles at the same positions destroys the rigid structure of the subcutaneous connective tissue. Therefore, expression wrinkles can be a powerful and effective cue for estimating the shapes and positions of future wrinkles.

In our study, we estimate the shapes and positions of aged wrinkles not only from a neutral facial image but also from multiple expression facial images containing expression wrinkles. The input facial images are assumed to be nearly frontal and unoccluded. In addition, as the shapes and positions of expression wrinkles are independent of expression categories, we prepare an arbitrary single expression facial image or multiple expression facial images. Since we focus on generating aged facial textures, the sagging effect is not considered.

4 AGING DATABASE CONSTRUCTION

We first explain the processing of our aging database. Patch-based reconstruction by Visio-lization (Mohammed et al., 2009) requires a target-age database representing wrinkles and other age-related features of skin textures. To prevent reconstruction failure, facial features such as the eyes, nose, and mouth should be normalized to the same positions throughout the aging database. Therefore, we normalize the shapes and positions of the facial parts in our database by Maejima et al.'s approach (Maejima et al., 2014). In addition, we normalize the colors of the aging database to those of the neutral facial image of the input person, as described by Kawai and Morishima (Kawai and Morishima, 2015). This step reduces the color differences between the database and the input.
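The color normalization step can be illustrated with a minimal sketch. Kawai and Morishima use a patch-based color transfer; the code below substitutes a much simpler global per-channel mean and standard deviation matching (an assumption, not their method), applied to a database face that has already been aligned to the same normalized shape as the input. The function name `match_color_stats` and its optional `mask` argument are hypothetical.

```python
import numpy as np


def match_color_stats(db_image, reference, mask=None):
    """Match the per-channel mean/std of db_image to those of reference.

    Simplified global stand-in for the patch-based color transfer cited in
    the text. Both images are H x W x 3 arrays on a 0-255 scale and are
    assumed to be aligned to the same normalized face, so one mask fits both.
    """
    src = np.asarray(db_image, dtype=float)
    ref = np.asarray(reference, dtype=float)
    if mask is None:
        mask = np.ones(src.shape[:2], dtype=bool)
    out = np.empty_like(src)
    for c in range(src.shape[2]):
        s_vals = src[..., c][mask]
        r_vals = ref[..., c][mask]
        scale = r_vals.std() / max(s_vals.std(), 1e-8)
        # move to zero mean, rescale the spread, then shift to the reference mean
        out[..., c] = (src[..., c] - s_vals.mean()) * scale + r_vals.mean()
    return np.clip(out, 0.0, 255.0)
```

Each database image would be passed as `db_image` with the input person's normalized neutral face as `reference`; a global transfer like this cannot correct local shading differences, which is one reason a patch-based transfer may be preferable.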

5 EXPRESSION WRINKLES TRANSFER

This section describes the process of estimating the

shapes and positions of aged wrinkles from facial im-

ages with neutral (Fig. 2(a)) and multiple expres-

sions (Fig. 2(b)). The flow proceeds in three steps:

expression normalization, expression wrinkles detec-

tion, and expression wrinkles transfer.

5.1 Expression Normalization

In Section 3, we noted that expression wrinkles in an expression facial image can effectively indicate where aged wrinkles will appear in an individual. Therefore, we propose a method that estimates the shapes and positions of aged wrinkles by transferring expression wrinkles to a neutral facial image. To do this properly, we establish the correspondence between the neutral and expression facial shapes. First, facial feature points are obtained from both images. The correspondence is then calculated by fitting a 2D facial template model to the neutral and expression facial images using Noh et al.'s method (Noh et al., 2000), which smoothly interpolates between the known facial feature points (RBF centers) by radial basis function (RBF) interpolation. Based on the fitted models, we reshape the expression facial images to the neutral facial shape by mesh deformation and generate expression-normalized facial images (Fig. 2(c)).
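The RBF interpolation in this step can be sketched as follows. This is a minimal illustration assuming a Gaussian kernel with a hand-picked width `sigma` and no affine term; Noh et al.'s exact kernel and formulation may differ. The hypothetical `fit_rbf_warp` solves for one weight vector per feature point so that the displacement field maps the source feature points exactly onto the target ones, and the returned function then moves any other template vertex smoothly.

```python
import numpy as np


def fit_rbf_warp(src_pts, dst_pts, sigma=50.0):
    """Fit a 2D RBF displacement field that maps src_pts onto dst_pts.

    src_pts, dst_pts: (n, 2) arrays of corresponding facial feature points
    (the RBF centers). Gaussian kernel; sigma in pixels is an assumed value.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    # kernel matrix between all pairs of centers
    d2 = ((src[:, None, :] - src[None, :, :]) ** 2).sum(axis=-1)
    K = np.exp(-d2 / (2.0 * sigma ** 2))
    # one weight row per center, one column per coordinate (x, y)
    weights = np.linalg.solve(K, dst - src)

    def warp(points):
        p = np.atleast_2d(np.asarray(points, dtype=float))
        d2p = ((p[:, None, :] - src[None, :, :]) ** 2).sum(axis=-1)
        return p + np.exp(-d2p / (2.0 * sigma ** 2)) @ weights

    return warp
```

For example, fitting with the expression-image feature points as `src_pts` and the neutral-image feature points as `dst_pts`, then calling `warp` on the template mesh vertices, gives a deformed mesh that could drive the reshaping of the expression image. Points far from every center receive almost no displacement with a Gaussian kernel.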

5.2 Detection of Expression Wrinkles

To transfer the normalized expression wrinkles to the

neutral facial image, we detect wrinkles by a simple

automatic approach. The expression normalized fa-

cial images are processed by adaptive binary thresh-

olding. Eight neighbors in a continuous area of the

binary image are then labeled with the same index,

and bounding boxes (blobs) are output for each la-

beled area. The number of significantly large areas

is reduced by the facial feature points and the num-

ber of significantly small areas is reduced by setting

a threshold number of pixels S (In this paper, we set

S = 1.5e +02 when a facial area is about 1000×1000

Wrinkles Individuality Preserving Aged Texture Generation using Multiple Expression Images

551

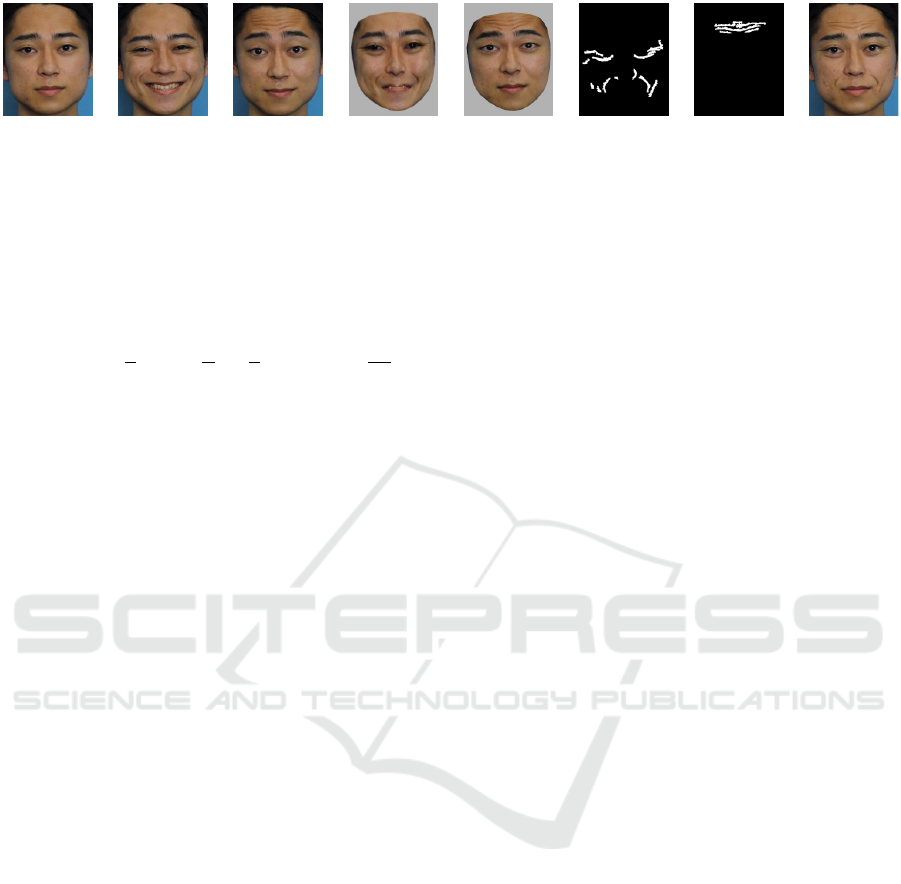

(a) (b) (c) (d) (e)

Figure 2: Input facial images and transfer of expression wrinkles. (a) and (b) are neutral and expression facial images with

expression wrinkles, respectively. (c) Expression facial images normalized to the neutral shape of facial images. (d) Wrinkles

detected images. (e) Wrinkles estimated image, which is generated by transferring the expression wrinkles from (c) to (a).

pixels). The wrinkles are detected by an evaluation

function that depends on the square closeness of the

blobs and the density of the pixels:

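$$\phi = \alpha\left\{1.0 - \left|\frac{4}{\pi}\left(\tan^{-1}\left(\frac{h}{w}\right) - \frac{\pi}{4}\right)\right|\right\} + (1 - \alpha)\frac{s}{wh} \qquad (1)$$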

where α is a constant weight coefficient (0 ≤ α ≤ 1), w and h are the width and height of the blob, respectively, and s is the number of pixels in the blob. The first term describes the diagonal angle of the blob measured from the horizontal line. As this angle approaches π/4, the blob more closely resembles a square and the first term in Eq. (1) increases. The second term describes the pixel density in the blob and is greater when the pixel density is higher. Experimentally, we set α = 0.5 and detect blobs with φ < 0.8 as wrinkles. To reduce the detection of blobs that are not wrinkles, we further validate the wrinkles by facial area: from experiments, the blobs are filtered by the aspect-ratio thresholds h/w > 1.5 around the eyes and w/h > 1.5 around the mouth. Fig. 2(d) shows the final detection results.
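The blob-based detection can be sketched with NumPy as follows. This is an illustrative sketch only: the local-mean form of adaptive thresholding, its `block` and `offset` values, and all function names are assumptions. `blob_score` implements Eq. (1), and `min_pixels` and `phi_max` follow the values quoted above (S = 150 is stated for a face of about 1000 × 1000 pixels). The feature-point-based removal of large regions and the per-area aspect-ratio checks are omitted.

```python
import math
from collections import deque

import numpy as np


def adaptive_threshold(gray, block=15, offset=5.0):
    """Mark pixels darker than their local mean minus offset (block must be odd)."""
    g = np.asarray(gray, dtype=float)
    r = block // 2
    padded = np.pad(g, r, mode="edge")
    # integral image with a leading row and column of zeros
    ii = np.pad(padded.cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    H, W = g.shape
    window_sum = (ii[block:block + H, block:block + W] - ii[:H, block:block + W]
                  - ii[block:block + H, :W] + ii[:H, :W])
    return g < window_sum / (block * block) - offset


def blob_score(w, h, s, alpha=0.5):
    """Eq. (1): alpha * squareness + (1 - alpha) * pixel density of a w x h blob."""
    squareness = 1.0 - abs((4.0 / math.pi) * (math.atan(h / w) - math.pi / 4.0))
    return alpha * squareness + (1.0 - alpha) * s / (w * h)


def label_blobs(mask):
    """8-connected labeling; returns (x0, y0, w, h, pixel_count) per region."""
    mask = np.asarray(mask, dtype=bool)
    H, W = mask.shape
    seen = np.zeros_like(mask)
    blobs = []
    for y in range(H):
        for x in range(W):
            if not mask[y, x] or seen[y, x]:
                continue
            seen[y, x] = True
            queue, ys, xs = deque([(y, x)]), [], []
            while queue:  # breadth-first flood fill over the 8 neighbours
                cy, cx = queue.popleft()
                ys.append(cy)
                xs.append(cx)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if 0 <= ny < H and 0 <= nx < W and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
            x0, y0 = min(xs), min(ys)
            blobs.append((x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1, len(xs)))
    return blobs


def detect_wrinkle_blobs(mask, min_pixels=150, alpha=0.5, phi_max=0.8):
    """Keep blobs with at least min_pixels pixels that are elongated or sparse (phi < phi_max)."""
    return [b for b in label_blobs(mask)
            if b[4] >= min_pixels and blob_score(b[2], b[3], b[4], alpha) < phi_max]
```

A thin 200-pixel line scores about 0.50 under Eq. (1) and is kept, whereas a filled square scores 1.0 and is rejected, which matches the intent that wrinkles are elongated, non-square blobs.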

5.3 Transfer of Expression Wrinkles

The detected wrinkles are transferred to the neutral facial image, producing the aged wrinkles estimation result. We apply a seamless blending method called Poisson image editing (Pérez et al., 2003). This method preserves the colors of the target image while transferring the luminance gradients of the source image, thereby generating a seamlessly synthesized image. The transfer requires a source image, a target image, and a mask image that determines the area to be synthesized. In our case, the source, target, and mask images are Fig. 2(c), Fig. 2(a), and Fig. 2(d), respectively, and the mask image is passed through a dilation filter beforehand. A result (wrinkles estimated image) is shown in Fig. 2(e). Wrinkle areas that already exist in the neutral facial image are excluded from the transfer; they are found by applying the same wrinkle detection to the neutral facial image.
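A minimal single-channel sketch of this transfer follows, assuming plain Jacobi iterations for the discrete Poisson equation (practical implementations usually use a sparse direct or multigrid solver) and a square 3 × 3 structuring element for the dilation. The names `dilate` and `poisson_blend` and the iteration count are hypothetical; for an RGB image the blend would be run once per channel, and the mask would be the dilated detection mask with wrinkles already present in the neutral image removed, e.g. `dilate(expr_mask) & ~neutral_mask`.

```python
import numpy as np


def dilate(mask, radius=1):
    """Binary dilation with a (2*radius+1) x (2*radius+1) square element."""
    m = np.asarray(mask, dtype=bool)
    H, W = m.shape
    padded = np.pad(m, radius)
    out = np.zeros_like(m)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out |= padded[radius + dy:radius + dy + H, radius + dx:radius + dx + W]
    return out


def poisson_blend(source, target, mask, iters=3000):
    """Seamless cloning of source into target inside mask (one channel).

    Solves lap(f) = lap(source) inside the mask with f = target outside it,
    using Jacobi iterations started from the target image.
    """
    src = np.asarray(source, dtype=float)
    f = np.asarray(target, dtype=float).copy()
    m = np.asarray(mask, dtype=bool).copy()
    # keep the image border fixed so every updated pixel has four neighbours
    m[0, :] = m[-1, :] = m[:, 0] = m[:, -1] = False
    # guidance term: 4 * g_p minus the sum of the four neighbours of g
    guide = np.zeros_like(src)
    guide[1:-1, 1:-1] = (4 * src[1:-1, 1:-1] - src[:-2, 1:-1] - src[2:, 1:-1]
                         - src[1:-1, :-2] - src[1:-1, 2:])
    for _ in range(iters):
        nb = np.zeros_like(f)
        nb[1:-1, 1:-1] = f[:-2, 1:-1] + f[2:, 1:-1] + f[1:-1, :-2] + f[1:-1, 2:]
        f = np.where(m, (nb + guide) / 4.0, f)
    return f
```

Because only gradients of the source enter the solve, a source that differs from the target by a constant offset leaves the target unchanged; this is the property that lets the wrinkle detail be pasted without carrying over the expression image's overall color.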

6 AGED FACE SYNTHESIS

6.1 Patch Sizes and Changes in Reconstructed Results

When an image is reconstructed using the aging database, the appearance of the reconstruction result depends on the patch size, as shown in Fig. 3. From Fig. 3(b) and (c), it can be seen that large-patch reconstruction better represents the features of the target age, such as wrinkles and somberness, whereas small-patch reconstruction better preserves the facial features of the input image. To retain the shapes and positions of the wrinkles in the wrinkles estimated image, we apply small-patch reconstruction to the wrinkle-transferred regions. For other regions, we apply large-patch reconstruction to represent the overall facial features of the target age.
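The region split described above can be sketched as follows, assuming a regular non-overlapping grid and the patch sizes reported in Section 7.1 (75 × 75 and 5 × 5 pixels on a 300 × 300 face). The function `assign_patches` is hypothetical: every small cell that touches the wrinkle mask becomes a small patch, and each large patch keeps the remaining pixels as its non-expression-wrinkle region.

```python
import numpy as np


def assign_patches(wrinkle_mask, large=75, small=5):
    """Split a normalized face into small wrinkle patches and large-patch regions.

    Returns (small_patches, large_regions): the top-left corners of small
    cells that touch the wrinkle mask, and for each large patch its corner
    plus a boolean mask of the pixels not covered by small patches.
    """
    m = np.asarray(wrinkle_mask, dtype=bool)
    H, W = m.shape
    covered = np.zeros_like(m)  # pixels handled by small patches
    small_patches = []
    for y in range(0, H - small + 1, small):
        for x in range(0, W - small + 1, small):
            if m[y:y + small, x:x + small].any():
                covered[y:y + small, x:x + small] = True
                small_patches.append((y, x))
    large_regions = []
    for y in range(0, H - large + 1, large):
        for x in range(0, W - large + 1, large):
            remaining = ~covered[y:y + large, x:x + large]
            if remaining.any():
                large_regions.append(((y, x), remaining))
    return small_patches, large_regions
```

Snapping the wrinkle mask to whole small cells keeps the two patch sizes from competing for the same pixel, so each pixel is reconstructed exactly once.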

6.2 Patch-based Reconstruction using the Aging Database

The wrinkles estimated image is subjected to patch-based reconstruction. First, the wrinkles estimated image is normalized to the average facial shape in the same way as described for the aging database construction in Section 4. The division into expression-wrinkle and non-expression-wrinkle areas is illustrated in Fig. 4. Areas containing expression wrinkles are determined by referencing the wrinkle detection images (Fig. 2(d)). If the neutral facial image contains any aged wrinkles, its wrinkle detection image is also used in the area selection. Unlike Maejima et al. (Maejima et al., 2014), we do not overlap patches, in order to better represent spots and somberness. We also disregard patch continuity, so that each patch is selected based only on the luminance similarity between the aging database and the target image.

The small patches are reconstructed by selecting

patches with the following evaluation function. Let

I be the normalized wrinkles estimated image and D

n

be the n-th facial image in the target age database. The

VISAPP 2018 - International Conference on Computer Vision Theory and Applications

552

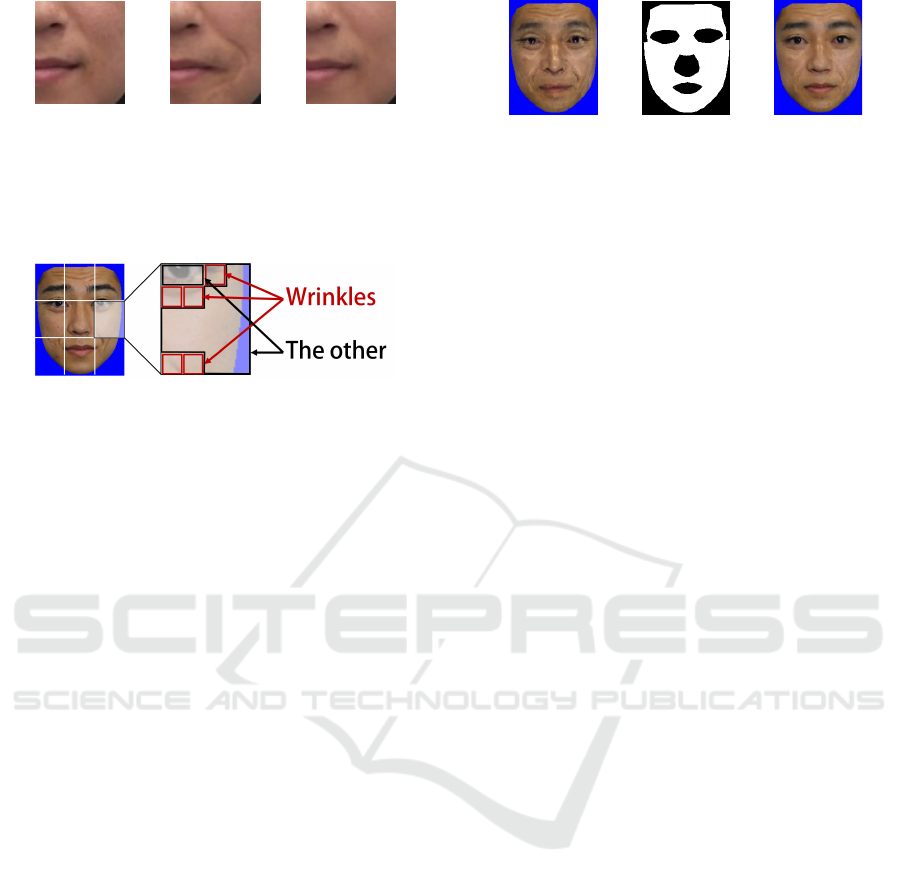

(a) (b) (c)

Figure 3: Effect of patch size on facial appearance. (a) In-

put image, (b) image reconstructed with large patches, and

(c) image reconstructed with small patches. The large and

small patches represent the databases features and the input

image features, respectively.

Figure 4: Assignment of expression wrinkles and other

regions. The existing area containing wrinkles is recon-

structed by small patches, and other areas are reconstructed

by a single large patch.

evaluation function selects the patch with the smallest

RGB Euclidean distance.

$$E_{\mathrm{wrinkle}}(n) = \sum_{(x,y)\in P} \left\| I_{\mathrm{patch}}(x,y) - D^{n}_{\mathrm{patch}}(x,y) \right\|^{2} \qquad (2)$$

where $I_{\mathrm{patch}}(x,y)$ and $D^{n}_{\mathrm{patch}}(x,y)$ are the RGB luminance vectors $(R,G,B)$ of pixel $(x,y)$ in a given patch, and $P$ denotes the entire area of the small patch. This evaluation function selects the patch that best matches the color of the corresponding patch of the input person. To estimate the shapes and positions of the expression wrinkles more accurately, we evaluate not only the corresponding patch but also neighboring patches that are concentrically shifted within a constant range. Non-expression-wrinkle patches are reconstructed by selecting the patches with the smallest energy, calculated by Eq. (3):

$$E(n) = \beta\, E_{\mathrm{RGB}}(n) + (1 - \beta)\, E_{\mathrm{HOG}}(n) \qquad (3)$$

where $\beta$ is a constant weight coefficient selected from $[0,1]$. $E_{\mathrm{RGB}}$ and $E_{\mathrm{HOG}}$ are respectively defined by

$$E_{\mathrm{RGB}}(n) = \sum_{(x,y)\in P^{*}} \left\| I_{\mathrm{patch}}(x,y) - D^{n}_{\mathrm{patch}}(x,y) \right\|^{2} \qquad (4)$$

$$E_{\mathrm{HOG}}(n) = \left\| \mathrm{HOG}\left(I|_{P^{*}}\right) - \mathrm{HOG}\left(D^{n}|_{P^{*}}\right) \right\|^{2} \qquad (5)$$

where $P^{*}$ denotes the entire non-expression-wrinkle region in the large patch. Eqs. (4) and (5) are expressed in terms of the RGB Euclidean distance and HOG features (Dalal and Triggs, 2005), respectively.

Figure 5: Patch-based reconstruction and the synthesized result. (a) Reconstructed result. (b) Mask area excluding the eyes, nose, and mouth. (c) Result of synthesizing (a) onto the normalized neutral facial image.

These equations incorporate the color of the input per-

son into the reconstruction. With HOG features, we

can select patches with spots, somberness and skin

luster, which would better represent the target age fea-

tures. Here, we set β = 0.5. The reconstructed result

is shown in Fig. 5(a).
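The two selection rules can be sketched as follows. This is a simplified illustration under several assumptions: images are float arrays already normalized to the average face shape; `best_small_patch` searches a square window of offsets up to `max_shift` pixels as a stand-in for the concentric shift (80 pixels in the experiments of Section 7.1); and `orientation_histogram` is a single magnitude-weighted histogram of unsigned gradient orientations rather than the full cell-and-block HOG descriptor of Dalal and Triggs. All function names are hypothetical.

```python
import numpy as np


def best_small_patch(I, database, y, x, size=5, max_shift=2):
    """Eq. (2): return (n, dy, dx) minimizing the summed squared RGB distance.

    Candidates are the patch at (y, x) in every database image D_n plus
    patches shifted by up to max_shift pixels in each direction.
    """
    I = np.asarray(I, dtype=float)
    target = I[y:y + size, x:x + size]
    H, W = I.shape[:2]
    best_energy, best = np.inf, None
    for n, D in enumerate(database):
        D = np.asarray(D, dtype=float)
        for dy in range(-max_shift, max_shift + 1):
            for dx in range(-max_shift, max_shift + 1):
                yy, xx = y + dy, x + dx
                if yy < 0 or xx < 0 or yy + size > H or xx + size > W:
                    continue  # shifted window falls outside the image
                energy = np.sum((target - D[yy:yy + size, xx:xx + size]) ** 2)
                if energy < best_energy:
                    best_energy, best = energy, (n, dy, dx)
    return best


def orientation_histogram(gray, region, bins=9):
    """Simplified HOG: magnitude-weighted histogram of unsigned gradient
    orientations over the pixels in region, L2-normalized."""
    gy, gx = np.gradient(np.asarray(gray, dtype=float))
    mag = np.hypot(gx, gy)[region]
    ang = np.mod(np.arctan2(gy, gx), np.pi)[region]
    hist, _ = np.histogram(ang, bins=bins, range=(0.0, np.pi), weights=mag)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist


def large_patch_energy(I_patch, D_patch, region, beta=0.5):
    """Eq. (3), with E_RGB from Eq. (4) and E_HOG from Eq. (5), restricted to P*."""
    I_patch = np.asarray(I_patch, dtype=float)
    D_patch = np.asarray(D_patch, dtype=float)
    e_rgb = np.sum(((I_patch - D_patch) ** 2)[region])
    hog_i = orientation_histogram(I_patch.mean(axis=2), region)
    hog_d = orientation_histogram(D_patch.mean(axis=2), region)
    e_hog = np.sum((hog_i - hog_d) ** 2)
    return beta * e_rgb + (1.0 - beta) * e_hog


def best_large_patch(I_patch, db_patches, region, beta=0.5):
    """Index n of the database patch with the smallest energy E(n)."""
    return int(np.argmin([large_patch_energy(I_patch, D, region, beta)
                          for D in db_patches]))
```

In this sketch the RGB term of Eq. (4) is summed over many pixels while the normalized histogram distance of Eq. (5) is bounded, so with β = 0.5 the RGB term dominates unless the two energies are rescaled; the text does not say whether such rescaling is used.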

6.3 Synthesizing the Reconstructed Result

The reconstructed result should not be taken as the final aged facial image for two reasons. First, the boundary lines between patches are unnatural. Second, the reconstructed result loses the individuality of the input person's eyes, nose, and mouth. Hence, we adopt Maejima et al.'s approach (Maejima et al., 2014) and synthesize the reconstructed result onto the neutral facial image; the details of this synthesis are given in Maejima et al.'s paper. The synthesized result is then reshaped to the neutral facial image with its background, generating the final result (Fig. 5(c)).

7 EXPERIMENT AND EVALUATION

7.1 Synthesized Results

Fig. 6 shows facial images of a male in his 20s projected to ages in his 50s to 70s by our method and by Maejima et al.'s method (Maejima et al., 2014). To compare the wrinkle positions in the results, we also present an image in which the regions containing expression wrinkles were marked by hand on the neutral facial image.

Figure 6: Results of a 21-30-year-old male aged by the proposed method and the [Maejima et al. 2014] method. (Image panels: neutral facial image; smile and surprise expression images; wrinkles estimated image; wrinkles marked image; results of the proposed method and [Maejima et al. 2014] for ages 51-60, 61-70, and 71-80.)

Fig. 7 shows facial images of a young male in his 20s projected to his 50s by our method and by Maejima et al.'s method (Maejima et al., 2014). For comparison, we also present actual photographs of the same man at the target age (the ground truth). The aged facial image generated by our method considers the expression wrinkles, which are confined to the right side of the face. As examples, we used smile and surprise expressions in Fig. 6 and smile expressions in Fig. 7. The resolution of the normalized facial image is 300 × 300 pixels. In Maejima et al.'s method, the patch size was 40 × 40 pixels with an overlap of 20 pixels. In our method, the large and small patch sizes were 75 × 75 pixels and 5 × 5 pixels, respectively, and the small patches were shifted over concentric regions extending to 80 pixels. In both methods, these parameters were determined empirically. The numbers of pictures in each age group and gender of the aging database are listed in Table 1.
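As a quick check of what these parameters imply, the sketch below counts patch positions on the 300 × 300 normalized face. It assumes a regular grid in which a patch of size p placed with overlap o advances by p − o pixels and must lie entirely inside the image; the exact grid layout of either implementation is not stated in the text, so the counts are illustrative. The helper `patches_per_axis` is hypothetical.

```python
def patches_per_axis(image_size, patch, overlap=0):
    """Number of fully contained patch positions along one axis."""
    stride = patch - overlap
    return (image_size - patch) // stride + 1


size = 300
maejima = patches_per_axis(size, 40, overlap=20)  # 40 x 40 patches, 20-pixel overlap
large = patches_per_axis(size, 75)                # 75 x 75 patches, no overlap
small = patches_per_axis(size, 5)                 # 5 x 5 patches, no overlap
print(maejima ** 2, large ** 2, small ** 2)       # 196 16 3600
```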

Comparing the results of the proposed method and Maejima et al.'s method (Maejima et al., 2014) with the wrinkle-marked image in Fig. 6, we observe that the proposed method better represents the shapes and positions of the wrinkles, for example, the nasolabial folds and the forehead wrinkles. Moreover, as demonstrated in Fig. 7, the nasolabial folds and the wrinkles around the eyes are closer to the ground truth in our method than in Maejima et al.'s method. Consistent with Piérard et al. (Piérard et al., 2003), our method preserves the individual characteristics of the aged wrinkles. These results also imply that spots and somberness in the non-expression-wrinkle area are better represented by our method than by Maejima et al.'s. We attribute this to avoiding patch overlap and continuity constraints, which would otherwise smooth the result and propagate patches lacking aging features through the reconstruction step.

Figure 7: Results of aging a 21-30-year-old male to 51-60 by our method and the [Maejima et al. 2014] method. (Image panels: neutral facial image, expression facial image, wrinkle facial image, ground truth, proposed, [Maejima et al. 2014].)

7.2 Subjective Evaluation

To validate our method in terms of wrinkles indi-

viduality, synthesis naturalness, and how much it re-

sembles the target age, we carried out the following

subjective evaluation. By internet searching, we first

selected neutral and expression facial images of 15

people aged in their 20s (11 males, 4 females), and

ground truth images of the same people at a later

age. In 2, 9 and 4 of the ground truth images, the

subjects were aged in their 50s, 60s, and 70s, re-

spectively. When the expression wrinkles of an input

person were too poorly resolved to detect, the wrin-

kles were manually selected in the mask image. The

15 internet subjects were also chosen because their

ground truth images exhibit noticeably aged features

such as wrinkles and old skin textures. The images

were presented to 32 study participants (21 males, 11

females). Specifically, an image created by our pro-

posed method and Maejima et al.’s (Maejima et al.,

2014) method were randomly placed at either side of

the ground truth, and referred to as facial image A and

facial image B, respectively. A neutral facial image of

the same target person at a younger age was also pre-

sented. The 32 participants reported their answers on

a questionnaire. To ensure that answers are focused

on the unique aging process of the target person, and

not the maturity of the person’s appearance, we pro-

vided the actual ages below the neutral facial image

and the ground truth. To emphasize that the target age

equals the ground truth age, we also wrote the age

below facial image A and facial image B. The partici-

pants evaluated the naturalness of facial images A and

B on a 5-point Likert scale. They were asked to esti-

mate the ages of images A and B in decade units (20s

to 70s). Also, they were asked which image they per-

ceived to best match the ground truth in terms of the

wrinkles individuality, the age-likeness of the aged

wrinkles, and the age-likeness of other skin textures

VISAPP 2018 - International Conference on Computer Vision Theory and Applications

554

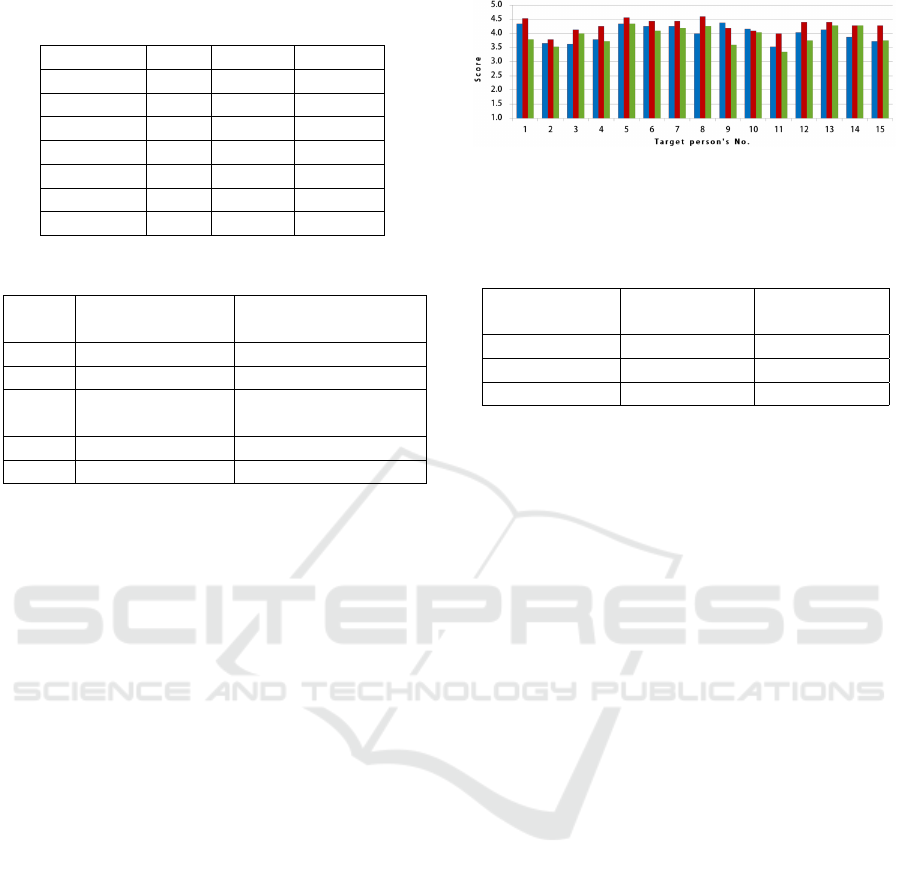

Table 1: Number of pictures stored for each age group and

gender.

Age range Male Female Subtotal

21-30 14 27 41

31-40 22 31 53

41-50 36 71 107

51-60 7 9 16

61-70 10 10 20

71-80 9 5 14

Total 98 153 251

Table 2: Evaluation items in the subjective evaluation.

Likert Synthesis Comparison between

scale naturalness the ground truth

1 Disagree A is closer

2 Slightly disagree A is slightly closer

3

Neither agree Neither A nor B

nor disagree is closer

4 Slightly agree B is slightly closer

5 Agree B is closer

on a 5-point Likert scale. The options for evaluating

naturalness are given in Table 2. Wrinkles individ-

uality refers to how accurately the shapes and posi-

tions of the wrinkles match those of the ground truth.

Age-likeness of the aged wrinkles (and skin textures)

refers to whether the appearances of the aged wrinkles

(and skin textures other than wrinkles) are consistent

with the processed image and the ground truth. Fig.

8 presents the average scores of the 32 participants

for each target person (labeled by their ID) assigned

to individuality and age-likeness of the aged wrinkles

and the age-likeness of other skin textures. Scores of

5, 3 and 1 mean that our method is decidedly closer to

the ground truth, no closer than, and decidedly further

from the ground truth, respectively, than Maejima’s

method. Table 3 gives the average scores and stan-

dard deviations (SD) of the 15 image sets evaluated

by the 32 participants. The aging error was computed

as the ground truth age minus the perceived age. Ta-

ble 3 also lists the average naturalness scores and their

standard deviations rated (SD) by the 32 participants.
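The score bookkeeping described above can be sketched as follows. Because images A and B were placed randomly, a comparison answer has to be remapped so that 5 always means the proposed method is closer to the ground truth; the remapping rule (6 minus the answer when the proposed result was shown as A), the use of the sample standard deviation, the function names, and all numeric values are assumptions for illustration, not details given in the text. The aging error follows the stated definition, ground truth age minus perceived age.

```python
from statistics import mean, stdev


def proposed_is_closer_score(answer, proposed_side):
    """Map a Table 2 comparison answer (5 = B is closer) so that 5 = proposed is closer."""
    return answer if proposed_side == "B" else 6 - answer


def summarize(values):
    """Average and sample standard deviation, reported as 'average ± SD'."""
    return mean(values), stdev(values)


# hypothetical answers: (Likert answer, side on which the proposed image was shown)
answers = [(5, "B"), (1, "A"), (4, "B"), (2, "A")]
scores = [proposed_is_closer_score(a, side) for a, side in answers]
avg, sd = summarize(scores)

# aging error = ground truth age - perceived age (hypothetical ages)
errors = [gt - perceived for gt, perceived in [(65, 50), (72, 60), (58, 50)]]
err_avg, err_sd = summarize(errors)
```

Under this remapping a score above 3.0 always favors the proposed method, regardless of which side it appeared on.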

As shown in Fig. 8, our method was rated higher than 3.0 in every item for every target person. The total average scores and their standard deviations were 4.00 ± 0.978 for wrinkle individuality, 4.29 ± 0.836 for age-likeness of the aged wrinkles, and 3.93 ± 0.975 for age-likeness of other skin textures. Although the scores vary somewhat, our method outperformed the previous method in all three evaluation items, confirming that it better represents the wrinkle individuality and surface skin appearance of the ground truth.

Figure 8: Evaluation scores of wrinkle individuality (blue

bars), age-likeness of the wrinkles (red bars), and age-

likeness of the skin textures (green bars).

Table 3: Statistics of synthesized image quality and aging error.

| Method | Aging error (average ± SD) | Synthesis naturalness (average ± SD) |
|---|---|---|
| Proposed | 13.4 ± 7.60 | 3.41 ± 1.22 |
| Maejima et al. | 25.4 ± 7.23 | 3.91 ± 1.06 |

To investigate how closely the appearance of the

entire skin texture approaches that of the ground truth,

we asked participants to assess the ages of our gener-

ated images. As the age of each image was provided,

and the experimental environment was designed so

that participants would focus solely on the aging pro-

cess of the targets, the validity of an aged facial image

could be effectively estimated by the above-defined

aging error.

Table 3 reveals that the aging error is lower in our

method than in the previous method, indicating that

the aged facial image generated by our method better

resembles the ground truth. As for the synthesis natu-

ralness, both methods scored above 3.0, although the

previous method was rated higher than ours. The nat-

uralness of our method may have been reduced by the

inconsistency of the wrinkle textures across the entire

face.

7.3 Limitations

Several problems remain unresolved in our method. Wrinkle detection might be improved by Batool and Chellappa's method (Batool and Chellappa, 2012), and facial sagging could be added by Kemelmacher et al.'s method (Kemelmacher-Shlizerman et al., 2014). Given the recent successes of image generation based on generative adversarial networks (Liao et al., 2017), applying such methods would help generate higher-quality and more accurate images. Still, our observation that wrinkle positions can be estimated from expression images would be an important asset for improving the accuracy of the appearance and positions of generated wrinkles.


8 CONCLUSION

The medical literature reports that aged wrinkles are the permanent impressions of expression wrinkles. Based on this knowledge, we proposed a method that captures the individuality of a person's aging-induced wrinkles. Subjective evaluations confirmed that preserving wrinkle individuality effectively improves visual plausibility. We aim to examine our ideas with image generation approaches based on generative adversarial networks (Liao et al., 2017) to further improve our results.

The proposed method is also widely applicable to 3DCG facial animation. In recent years, several methods have dealt with aged faces in the 3DCG field, such as high-fidelity 3D facial shape reconstruction (Cao et al., 2015) and the modeling and rendering of the optical properties of aged skin (Iglesias-Guitian et al., 2015). These methods focus on accurately reconstructing or rendering aged faces in real time. Our method can provide high-resolution aged facial textures that consider both facial and wrinkle individuality, which enables higher-quality 3DCG aged facial animation. We therefore aim to realize such a system by extending our method toward 3DCG facial animation in the future.

ACKNOWLEDGEMENT

This work was supported by JST ACCEL Grant Number JPMJAC1602, Japan.

REFERENCES

(2011). Age progression, forensic and medical artist. https://aurioleprince.wordpress.com/.

Batool, N. and Chellappa, R. (2012). Modeling and detection of wrinkles in aging human faces using marked point processes. In European Conference on Computer Vision, pages 178–188. Springer.

Batool, N., Taheri, S., and Chellappa, R. (2013). Assessment of facial wrinkles as a soft biometrics. In 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), pages 1–7. IEEE.

Cao, C., Bradley, D., Zhou, K., and Beeler, T. (2015). Real-time high-fidelity facial performance capture. ACM Transactions on Graphics (TOG), 34(4):46.

Coleman, S. R. and Grover, R. (2006). The anatomy of the aging face: volume loss and changes in 3-dimensional topography. Aesthetic Surgery Journal, 26(1 Supplement):S4–S9.

Dalal, N. and Triggs, B. (2005). Histograms of oriented gradients for human detection. In IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2005, volume 1, pages 886–893. IEEE.

Farage, M., Miller, K., Elsner, P., and Maibach, H. (2008). Intrinsic and extrinsic factors in skin ageing: a review. International Journal of Cosmetic Science, 30(2):87–95.

Iglesias-Guitian, J. A., Aliaga, C., Jarabo, A., and Gutierrez, D. (2015). A biophysically-based model of the optical properties of skin aging. Computer Graphics Forum, 34(2):45–55.

Kawai, M. and Morishima, S. (2015). Focusing patch: Automatic photorealistic deblurring for facial images by patch-based color transfer. In Proceedings of International Conference on Multimedia Modeling, pages 155–166. Springer.

Kemelmacher-Shlizerman, I., Suwajanakorn, S., and Seitz, S. M. (2014). Illumination-aware age progression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3334–3341.

Liao, J., Yao, Y., Yuan, L., Hua, G., and Kang, S. B. (2017). Visual attribute transfer through deep image analogy. arXiv preprint arXiv:1705.01088v2.

Maejima, A., Mizokawa, A., Kuwahara, D., and Morishima, S. (2014). Facial aging simulation by patch-based texture synthesis with statistical wrinkle aging pattern model. In Mathematical Progress in Expressive Image Synthesis I, pages 161–170. Springer.

Mohammed, U., Prince, S. J., and Kautz, J. (2009). Visio-lization: generating novel facial images. In ACM Transactions on Graphics (TOG), volume 28, page 57. ACM.

Noh, J.-y., Fidaleo, D., and Neumann, U. (2000). Animated deformations with radial basis functions. In Proceedings of the ACM symposium on Virtual reality software and technology, pages 166–174. ACM.

Park, U., Tong, Y., and Jain, A. K. (2010). Age-invariant face recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(5):947–954.

Patterson, E., Ricanek, K., Albert, M., and Boone, E. (2006). Automatic representation of adult aging in facial images. In Proc. IASTED International Conference on Visualization, Imaging, and Image Processing, pages 171–176.

Pérez, P., Gangnet, M., and Blake, A. (2003). Poisson image editing. In ACM Transactions on Graphics (TOG), volume 22, pages 313–318. ACM.

Piérard, G. E., Uhoda, I., and Piérard-Franchimont, C. (2003). From skin microrelief to wrinkles. an area ripe for investigation. Journal of Cosmetic Dermatology, 2(1):21–28.

Scherbaum, K., Sunkel, M., Seidel, H.-P., and Blanz, V. (2007). Prediction of individual non-linear aging trajectories of faces. In Computer Graphics Forum, volume 26, pages 285–294. Wiley Online Library.

Shu, X., Tang, J., Lai, H., Liu, L., and Yan, S. (2015). Personalized age progression with aging dictionary. In Proceedings of the IEEE International Conference on Computer Vision, pages 3970–3978.

Shu, X., Xie, G.-S., Li, Z., and Tang, J. (2016). Age progression: current technologies and applications. Neurocomputing, 208:249–261.

VISAPP 2018 - International Conference on Computer Vision Theory and Applications

Suo, J., Zhu, S.-C., Shan, S., and Chen, X. (2010). A compositional and dynamic model for face aging. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(3):385–401.

Wang, W., Cui, Z., Yan, Y., Feng, J., Yan, S., Shu, X., and Sebe, N. (2016). Recurrent face aging. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2378–2386.

Yang, H., Huang, D., Wang, Y., Wang, H., and Tang, Y. (2016). Face aging effect simulation using hidden factor analysis joint sparse representation. IEEE Transactions on Image Processing, 25(6):2493–2507.

Zhang, Z., Song, Y., and Qi, H. (2017). Age progression/regression by conditional adversarial autoencoder. arXiv preprint arXiv:1702.08423.
