Data-driven Enhancement of SVBRDF Reflectance Data

Heinz Christian Steinhausen, Dennis den Brok, Sebastian Merzbach, Michael Weinmann and Reinhard Klein

Institute of Computer Science II, University of Bonn, Bonn, Germany

Keywords: Appearance, Spatially Varying Reflectance, Reflectance Modeling, Digitization.

Abstract: Analytical SVBRDF representations are widely used to represent spatially varying material appearance depending on view and light configurations. State-of-the-art industry-grade SVBRDF acquisition devices allow acquisition within several minutes. However, for many materials whose surface reflectance exhibits complex effects of light exchange such as inter-reflections, self-occlusions or local subsurface scattering, SVBRDFs cannot accurately capture material appearance. We therefore propose a method to transform SVBRDF acquisition devices into full BTF acquisition devices. To this end, we use data-driven linear models obtained from a database of BTFs captured with a traditional BTF acquisition device in order to reconstruct high-resolution BTFs from the SVBRDF acquisition devices' sparse measurements. We deal with the high degree of sparsity using Tikhonov regularization. In our evaluation, we validate our approach on several materials and show that BTF-like material appearance can be generated from SVBRDF measurements in the range of several minutes.

1 INTRODUCTION

The accurate digitization of material appearance has

been a challenging task for decades. Reproducing

fine details of surface reflectance is crucial for a re-

alistic depiction of virtual objects and is of great im-

portance for many applications in entertainment, vi-

sual prototyping or product advertisement. Among

the widely used reflectance models are analytical

spatially varying bidirectional reflectance distribu-

tion functions (SVBRDFs) that model the surface re-

flectance behavior per surface point depending on the

view and light configurations. While the acquisition

of a sparse set of images taken under different view-

light configurations can be achieved within several

minutes, the dense information is obtained by means

of fitting analytical models to the reflectance samples

captured per surface point. For instance, in the re-

cent work by Nielsen et al. (Nielsen et al., 2015), a

Tikhonov regularized reconstruction is used to recon-

struct isotropic BRDFs from a sparse set of measure-

ments.

However, many materials such as leathers or fab-

rics show a more complex reflectance behavior in-

cluding fine effects of light exchange such as inter-

reflections, self-occlusions or local subsurface scat-

tering. These effects are not captured by energy-

conserving analytical BRDFs. Capturing these ef-

fects requires the use of more complex reflectance

representations such as bidirectional texture functions

(BTFs) (Dana et al., 1997). These are parameter-

ized on an approximate surface geometry and store

spatially varying reflectance behavior depending on

the view and light conditions in a data-driven man-

ner within non-energy-conserving apparent BRDFs

(aBRDFs) (Wong et al., 1997) per surface point.

While a dense sampling of the view and light con-

ditions is required to accurately capture these effects,

the acquisition becomes prohibitively costly with time

requirements in the order of several hours (Schwartz

et al., 2014). To reduce the acquisition effort to only

a few hours, den Brok et al. (den Brok et al., 2014)

introduced a patch-wise sparse BTF reconstruction

based on linear models obtained from a given BTF

database.

In this paper, we extend the Tikhonov regularized

sparse reconstruction framework for isotropic BRDFs

proposed by Nielsen et al. (Nielsen et al., 2015) to-

wards a more general reflectance reconstruction in-

cluding the aforementioned complex effects of light

transport. Similar to the work of den Brok et al. (den

Brok et al., 2014), we consider a prior in the form

of a given BTF database to derive basis vectors that

are used to represent materials in terms of a linear

combination of these vectors. However, in contrast

to the approach of den Brok et al. (den Brok et al.,

Steinhausen, H., Brok, D., Merzbach, S., Weinmann, M. and Klein, R.

Data-driven Enhancement of SVBRDF Reflectance Data.

DOI: 10.5220/0006628602730280

In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2018) - Volume 1: GRAPP, pages

273-280

ISBN: 978-989-758-287-5

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


2014) we do not perform a patch-wise reconstruction

of aBRDFs, but use the more memory-efficient point-

wise reconstruction of aBRDFs in combination with

the more robust Tikhonov regularized reconstruction

framework. This allows, in contrast to the approach

presented by Nielsen et al. (Nielsen et al., 2015), cap-

turing complex effects of light exchange within the

reflectance model.

In summary, the key contributions of our work are:

• the practical acquisition of BTFs using SVBRDF

acquisition devices and

• a Tikhonov-regularized sparse BTF reconstruc-

tion framework based on data-driven linear mod-

els.

2 RELATED WORK

While detailed surveys on appearance acquisition and

modeling are provided in the literature (Müller et al.,

2004; Filip and Haindl, 2009; Haindl and Filip, 2013;

Weinmann and Klein, 2015), we focus on the acquisi-

tion and modeling of spatially varying reflectance un-

der varying viewing and illumination conditions and

only briefly review the corresponding developments.

Reflectance Modeling and Acquisition: In this

context, Spatially Varying Bidirectional Reflectance

Distribution Functions (SVBRDFs) (Nicodemus

et al., 1977) and Bidirectional Texture Functions

(BTFs) (Dana et al., 1997) have been widely applied

in the literature. While SVBRDFs are parameterized

over the surface of a material in terms of storing

independent BRDFs at different locations, BTFs

represent the reflectance behavior w.r.t. a surface that

does not necessarily coincide with the exact surface.

This is advantageous when considering materials

such as fabrics or leather where the exact surface

cannot accurately be reconstructed as their fine

structures fall below the resolution of the acquisition

device. As BTFs furthermore only rely on appar-

ent BRDFs (Wong et al., 1997) where there is no

requirement of energy conservation for the BRDFs

stored on the surface, BTFs are capable of captur-

ing mesoscopic effects of light exchange such as

inter-reflections, self-occlusions or self-shadowing.

During the acquisition process, images need to be

taken from different views and under different illu-

mination conditions which mostly has been realized

based on simple camera-light source setups (Lensch

et al., 2001), gonioreflectometers (Dana et al., 1997;

Matusik et al., 2002; Marschner et al., 2005; Hol-

royd et al., 2010; Filip et al., 2013), arrays of cameras

and light sources (Furukawa et al., 2002; Weyrich

et al., 2006; Schwartz et al., 2011; Ruiters et al., 2012;

Nöll et al., 2013; den Brok et al., 2014), combinations

of extended light sources and cameras (Aittala et al.,

2013) or mobile devices (Aittala et al., 2015).

Sparse Reflectance Acquisition: Instead of relying

on a dense sampling of the view-light configurations

that can be realized with the respective acquisition se-

tups, several techniques focus on faster and less costly

reflectance acquisition from only a suitable subset of

these configurations. The non-captured information

is interpolated based on certain models.

Such a sparse acquisition has e.g. been followed

by Matusik et al. (Matusik et al., 2003a), where a re-

flectance model based on linear combinations from

a set of densely sampled BRDF measurements has

been proposed. Similarly, another technique of Ma-

tusik et al. (Matusik et al., 2003b) relies on a BRDF

database that is used to compute a wavelet basis which

allows the representation of isotropic materials from

only about 5% of the original data.

Ruiters et al. (Ruiters et al., 2012) presented a

compact data-driven BRDF representation where a

set of separable functions is fitted to irregular angular

measurements. The exploitation of spatial coherence

of the reflectance function over the surface makes this

technique even capable of reconstructing the appear-

ance of specular materials from sparse measurements.

However, the technique is tailored to isotropic materi-

als and cannot be used for too coarse samplings where

analytical models are better suited.

Aiming at the compression of BTFs, Koudelka

et al. (Koudelka et al., 2003) used linear models

computed from apparent BRDFs per material. More

recent work by den Brok et al. (den Brok et al.,

2014) demonstrated that a sparse BTF acquisition can

be obtained for several materials from only about

6% of the view-light configurations taken into ac-

count by conventional setups. Alternatively, the

sparse acquisition of anisotropic SVBRDFs based on

manifold-bootstrapping has been proposed by Dong

et al. (Dong et al., 2010). Analytical BRDFs are fit-

ted to the data acquired for certain surface positions

and used for the construction of a manifold. Unfortu-

nately, the dimensionality of the manifold of per-texel

reflectance functions would be significantly higher for

BTFs.

Further work focused on sparse reflectance acqui-

sition based on logarithmic mappings of BRDFs of a

database. Nielsen et al. (Nielsen et al., 2015) focus on

BRDF reconstruction from an optimized set of view-

light-configurations by using a linear BRDF subspace

based on a logarithmic mapping of the MERL BRDF

GRAPP 2018 - International Conference on Computer Graphics Theory and Applications


database. This approach has been improved by using

priors (Xu et al., 2016) and by its application per point

on the material (Yu et al., 2016).

Zhou et al. (Zhou et al., 2016) approach sparse

SVBRDF acquisition by modeling reflectance in

terms of a convex combination over a set of certain

target-specific basis materials and sparse blend pri-

ors. Further sparse acquisition approaches include

the technique by Vávra and Filip (Vávra and Filip,

2016), that uses a database of isotropic slices, trained

from anisotropic BRDFs, to complement sparse mea-

surements of anisotropic BRDFs and the use of a

dictionary-based SVBRDF representation (Hui and

Sankaranarayanan, 2015). Only involving a mobile

device, Aittala et al. (Aittala et al., 2015) perform

SVBRDF reconstruction based on pairs of flash and

non-flash images. Their approach, however, heavily

relies on the stationarity of the texture of the considered
materials, and the extremely sparse sampling results
in inaccurate material depictions. Steinhausen

et al. (Steinhausen et al., 2014), as well as Aittala et

al. (Aittala et al., 2016), propose the use of guided

texture synthesis on sparse reflectance data, the latter

relying on deep convolutional neural network statis-

tics.

In this work, we focus on the enhancement of

the reflectance representation resulting from a com-

mercially available state-of-the-art SVBRDF acqui-

sition device by replacing the local fitting of ana-

lytical BRDF functions by a Tikhonov-regularized

sparse reconstruction using data-driven linear models.
This allows us to consider non-local effects such as

inter-reflections, self-occlusions and local subsurface

scattering within the reflectance representation and,

hence, can be seen as an approach for sparse BTF ac-

quisition.

3 METHOD

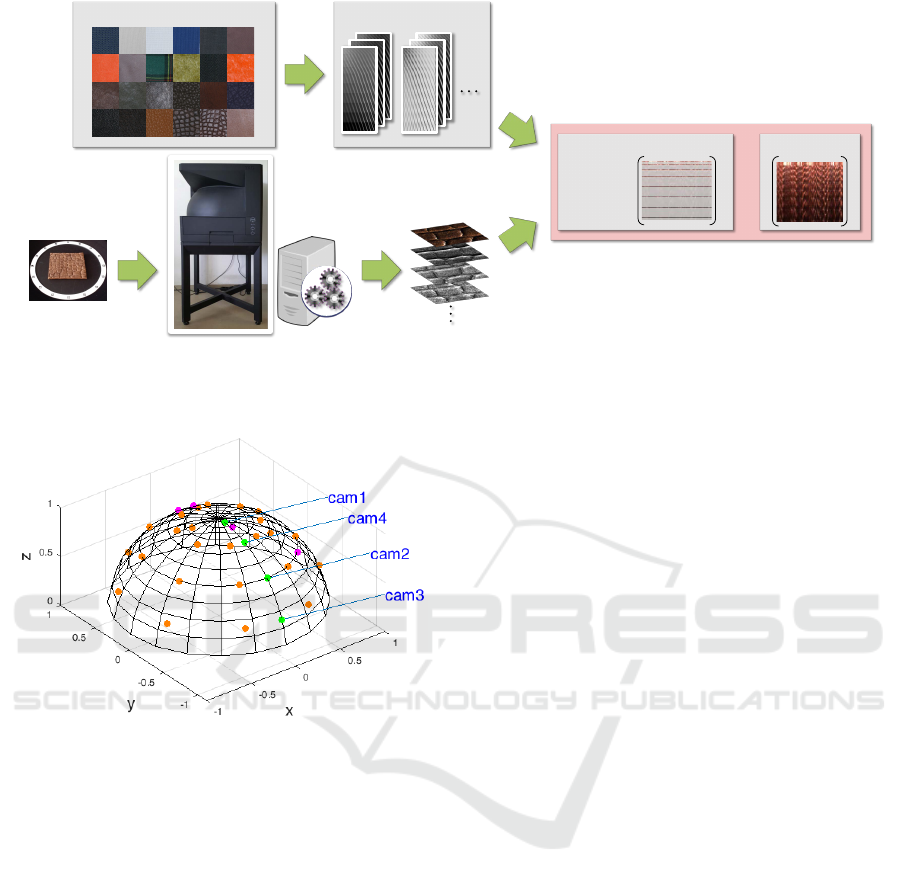

Our approach for the digitization of material appear-

ance relies on taking a sparse set of images depicting

a material sample under different view/light configu-

rations with a state-of-the-art industry-grade acquisi-

tion device, and the subsequent Tikhonov-regularized

BTF reconstruction from these sparse measurements

using data-driven linear models (see Figure 1). Fur-

ther details on the individual steps are provided in the

following subsections.

3.1 Acquisition Setup

In this work, we used the recently released industry-

grade acquisition device TAC7 (X-Rite, 2016) (see

Figure 1) for the digitization of materials. This de-

vice is equipped with four monochromatic cameras

mounted on a hemispherical gantry at zenith angles

of 5.0°, 22.5°, 45.0° and 67.5° that observe the material
sample, which is placed on a turntable in the center

of the gantry. Furthermore, 30 LED light sources are

mounted on the gantry and an additional movable lin-

ear light source is included for the acquisition of spec-

ular reflectance of the sample. In addition, a projector

is integrated in the setup so that the surface geometry

can be acquired based on structured light techniques.

While four colored LEDs in combination with spec-

tral filter wheels allow the acquisition of spectral re-

flectance behavior, we do not exploit this feature in

the scope of this paper.

The database D of densely sampled material BTFs

from which the linear models are derived is captured

with a traditional BTF acquisition device (Schwartz

et al., 2013). This device is equipped with a turntable,

which allows a dense azimuthal sampling, and eleven

cameras (resolution of 2048 × 2048 pixels) that are

mounted along an arc on a hemispherical gantry with
a diameter of about 2 m, covering zenith angles between
0° and 75° with an angular increment of 7.5°.

Furthermore, the gantry is equipped with 198 uni-

formly distributed light sources.

3.2 Data Acquisition

To gather reflectance samples for each surface point

with the TAC7, photos of the considered material

sample are taken under different turntable rotations

and illuminations for different exposure times. In-

volved parameters such as the rotation angles of the

turntable, the use of the linear light source or the num-

ber of exposure steps used for high dynamic range

(HDR) imaging can be specified by the user depend-

ing on the complexity of the surface geometry and

the reflectance behavior of the material to be ac-

quired. For instance, smaller rotation angles of the

turntable can be used for complex surface geome-

try or anisotropic reflectance behavior. Furthermore,

the linear light source can be moved in small angu-

lar increments to densely sample specular highlights

of highly specular materials. After the generation of

HDR images for different configurations of turntable

and illumination, these are projected onto the surface
geometry of the material sample. This allows us to

gather reflectance samples per surface point for dif-

ferent configurations of view and illumination.
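The HDR step mentioned above can be illustrated with a generic exposure-bracketing merge. This is only a sketch under stated assumptions: the sensor response is taken to be linear, pixel values are normalized to [0, 1], and the thresholds `low` and `high` as well as the function name `merge_hdr` are hypothetical. It is not a description of what the TAC7 software does internally.

```python
import numpy as np

def merge_hdr(images, exposure_times, low=0.02, high=0.98):
    """Merge an exposure series into one radiance map (linear sensor assumed).

    images: array of shape (n_exposures, H, W) with values in [0, 1].
    exposure_times: one exposure time per image, in arbitrary but equal units.
    """
    images = np.asarray(images, dtype=float)
    times = np.asarray(exposure_times, dtype=float)[:, None, None]
    # Only trust pixels that are neither underexposed nor saturated.
    weights = ((images > low) & (images < high)).astype(float)
    radiance = images / times                  # per-exposure radiance estimate
    total = weights.sum(axis=0)
    merged = (weights * radiance).sum(axis=0) / np.maximum(total, 1.0)
    # Pixels without any trusted sample fall back to the shortest exposure.
    shortest = radiance[np.argmin(exposure_times)]
    return np.where(total > 0, merged, shortest)
```

Each per-exposure estimate divides the pixel value by its exposure time, so well-exposed samples of the same pixel agree and can simply be averaged.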

For our experiments, we took images using all four cameras, the 24 monochrome LEDs for luminance and 4 colored LEDs for RGB image data. The sampling is depicted in Figure 2. The turntable was rotated between 0° and 180° in steps of 45° or 30°. Measurement times were in the range of 30–40 minutes, while data processing for our chosen patch size of 256 × 256 texels took up to 90 minutes (without SVBRDF fitting).

Figure 1: Overview of the proposed pipeline: Reflectance samples for a sparse set of view-light configurations are acquired using the X-Rite TAC7 device (bottom row). They serve as input to the proposed Tikhonov-regularized sparse BTF reconstruction using data-driven linear models. Diagram labels: BTF/aBRDF database $D$, data-driven linear model $U$, sparse measurement $\tilde{B}$, sparse recovery $(SU)^{-1}$, reconstructed BTF $B$.

Figure 2: Visualization of the captured view-light configuration. Orange: 24 monochrome LEDs, magenta: 4 LEDs with color filters, green: camera positions.

3.3 BTF Reconstruction from Sparse Samples

Instead of fitting an analytical Ward BRDF (Ward,

1992) to the sparse reflectance samples per surface

point as performed by the TAC7 software (X-Rite,

2016), we fit the sparsely measured data $\tilde{B}$ to a

densely sampled data-driven linear model U, obtained

from a database D of material BTFs.

For the purpose of fitting the sparse TAC7 measurements to the linear model, we represent the set of $n_s$ sparsely measured samples $\tilde{B}$ in terms of a matrix product

$$\tilde{B} = SB, \tag{1}$$

where $S$ denotes a binary sparse measurement matrix with $S \in \{0, 1\}^{n_s \times n_{lv}}$ and $SS^t = \mathbf{1}$ (the identity matrix), which selects the TAC7 view-light configurations from the densely sampled measurement $B$ to be reconstructed, where $n_s$ and $n_{lv}$ denote the number of view-light configurations of the TAC7 device and the DOME II device, respectively. To deal with the fact that the TAC7's camera

and light source positions relative to the material sam-

ple do not coincide with those in the DOME II device,

we perform a simple nearest neighbor search on the

normalized positions of lights and cameras, mapping

each point in the TAC7’s coordinate space to the clos-

est light or camera in the database coordinate space.

This is encoded in the measurement matrix S. Note

that even for the TAC7 on its own, there are different

samplings for color and monochromatic images.
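The nearest-neighbor construction of $S$ can be sketched as follows. This is an illustrative sketch, not the authors' code: the function names, the view-major ordering of the dense view-light configurations, and the array layout are assumptions made for this example.

```python
import numpy as np

def nearest_direction(query, reference):
    """Index of the reference direction closest to each query direction."""
    q = query / np.linalg.norm(query, axis=1, keepdims=True)
    r = reference / np.linalg.norm(reference, axis=1, keepdims=True)
    # For unit vectors, the largest dot product means the smallest distance.
    return np.argmax(q @ r.T, axis=1)

def measurement_matrix(sparse_lights, sparse_views, dense_lights, dense_views):
    """Binary selection matrix S of shape (n_s, n_lv).

    sparse_lights / sparse_views: one 3D position per sparse configuration.
    dense_lights / dense_views: the unique positions of the dense device.
    Dense configurations are assumed to be indexed view-major
    (column = view_index * n_lights + light_index).
    """
    li = nearest_direction(sparse_lights, dense_lights)
    vi = nearest_direction(sparse_views, dense_views)
    cols = vi * len(dense_lights) + li
    S = np.zeros((len(cols), len(dense_lights) * len(dense_views)))
    S[np.arange(len(cols)), cols] = 1.0
    return S
```

Note that $SS^t$ equals the identity only if no two sparse configurations are mapped to the same dense configuration, which is worth checking for a given pair of samplings.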

Assuming the existence of a suitable linear model

U that represents B well, i.e. B ≈ UV for suitable pa-

rameters V, we determine parameters from the sparse

measurement SB via

$$V = \operatorname*{argmin}_{\tilde{V}} \; \|SU\tilde{V} - SB\|_F^2 + \|R\tilde{V}\|_F^2. \tag{2}$$

The Tikhonov regularization term $\|R\tilde{V}\|_F^2$ is included

to penalize implausible solutions. Similar to the work

on BRDF recovery from a sparse sampling by Nielsen

et al. (Nielsen et al., 2015), we use $R = \lambda\mathbf{1}$, where $\lambda$

determines the weight of the regularization term. This

results in a penalization of large deviations from the

distribution of basis coefficients in the training data.

However, in contrast to the approach of Nielsen et

al. (Nielsen et al., 2015) that is designed for isotropic

BRDFs, we focus on the recovery of spatially varying

reflectance characteristics in terms of BTFs that in-

clude non-local effects of light exchange. Moreover,


Nielsen et al.’s method deals with multi-channel im-

ages by considering a single BRDF’s color channels

as completely different BRDFs in both the training

and the testing step. This does not apply to our sce-

nario, where we have to consider monochromatic and

color samples from different sample points simulta-

neously.
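For reference, the minimizer of Eq. (2) has a closed form, which is the expression used for the reconstruction in Eq. (5). A short derivation, using only the symbols defined above and assuming real-valued matrices:

```latex
\begin{aligned}
E(\tilde{V}) &= \|SU\tilde{V} - SB\|_F^2 + \|R\tilde{V}\|_F^2, \\
\tfrac{1}{2}\,\nabla_{\tilde{V}} E &= (SU)^t\,(SU\tilde{V} - SB) + R^t R\,\tilde{V} = 0, \\
\Rightarrow\quad V &= \left((SU)^t (SU) + R^t R\right)^{-1} (SU)^t (SB),
\qquad R^t R = \lambda^2 \mathbf{1} \ \text{ for } \ R = \lambda\mathbf{1}.
\end{aligned}
```

For $\lambda > 0$ the matrix $(SU)^t(SU) + \lambda^2\mathbf{1}$ is positive definite, so the inverse exists even when $SU$ has fewer rows than columns.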

In the following, we provide more details on the

computation of the employed basis and the BTF re-

construction.

Basis Computation. Given a database $D$ of BTF measurements, such as the materials introduced by Weinmann et al. (Weinmann et al., 2014), where each BTF is represented by an $n_{lv} \times n_x$ matrix and $n_{lv}$ and $n_x$ denote the number of view-light configurations and the number of texels, respectively, we extract a subset $D_{\text{class}} \subset D$ for the material class under consideration (e.g. leather, fabric, wool etc.). We concatenate
the individual BTFs along the second dimension,

i.e. we generate a matrix whose columns contain all

of the individual aBRDFs stored within the different

BTFs. In order to later be able to consider monochro-

matic and color samples at once, we first convert the

RGB data to YUV color space and compute models

for each channel separately; the rationale will become

clear in the subsequent section on reconstruction. It is

well established that some kind of reduction of dy-

namic range is necessary in order to avoid obtaining

a model mostly concerned with modeling noise in the

highlight regions (cf. (Matusik et al., 2003b; Nielsen

et al., 2015)). Like den Brok et al. (den Brok et al.,

2014), we therefore scale the U and V channels with

the corresponding entries from the Y channel, and use

the logarithm of the Y channel, such that a pixel tuple
$(y, u, v)$ becomes $(\log y, u/y, v/y)$.
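The dynamic range reduction described above might look as follows in code. This is a minimal sketch under assumptions: the text only says "YUV", so the BT.601 matrix used here is a guess at the concrete color transform, and the floor `EPS` that keeps the logarithm and the division finite is a hypothetical addition.

```python
import numpy as np

# BT.601 RGB -> YUV matrix (an assumption; the paper only says "YUV").
RGB_TO_YUV = np.array([
    [ 0.299,    0.587,    0.114  ],
    [-0.14713, -0.28886,  0.436  ],
    [ 0.615,   -0.51499, -0.10001],
])

EPS = 1e-6  # hypothetical floor that keeps log and division finite

def compress(rgb):
    """Map RGB samples of shape (..., 3) to (log y, u/y, v/y)."""
    yuv = rgb @ RGB_TO_YUV.T
    y = np.maximum(yuv[..., 0], EPS)
    return np.stack([np.log(y), yuv[..., 1] / y, yuv[..., 2] / y], axis=-1)

def expand(compressed):
    """Inverse of compress for samples whose luminance exceeds EPS."""
    y = np.exp(compressed[..., 0])
    yuv = np.stack([y, compressed[..., 1] * y, compressed[..., 2] * y], axis=-1)
    return yuv @ np.linalg.inv(RGB_TO_YUV).T
```

Monochromatic samples would only pass through the logarithm, since they are treated as pure luminance.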

As shown by den Brok et al. (den Brok et al., 2014), a straightforward approach for BTF basis computation is given by the use of matrix factorization techniques such as the (truncated) singular value decomposition (SVD):

$$D_{\text{class}} \approx U \Sigma V^t. \tag{3}$$

In the following, for brevity we shall write U for UΣ.

The basis obtained that way is known to generalize

well up to some error measure ε for materials B not

contained in the database:

$$\|UV - B\| < \varepsilon. \tag{4}$$

Contrary to den Brok et al. (den Brok et al., 2014), we

use Tikhonov regularization instead of BTF patches

for regularization, which makes our method more

efficient in terms of computation time and memory

consumption. The huge size of the data, however,

still makes computing the exact truncated SVD on
$D_{\text{class}}$ impossible. Among the many ways to obtain

good approximations, we choose to follow den Brok

et al. (den Brok et al., 2015) and compute trun-

cated SVDs first for each training material, and subse-

quently merge the resulting models using eigenspace

merging.
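The two-stage basis computation can be sketched as follows. This is a simplified stand-in, not the eigenspace merging procedure of den Brok et al. (2015): it merges the per-material models by re-factorizing the concatenation of their scaled bases $U_i\Sigma_i$, and the function names are chosen for this example.

```python
import numpy as np

def truncated_basis(data, k):
    """Return U * Sigma for the k leading singular vectors of data."""
    U, s, _ = np.linalg.svd(data, full_matrices=False)
    return U[:, :k] * s[:k]

def merge_bases(bases, k):
    """Merge per-material bases by re-factorizing their concatenation.

    Each entry of bases is a scaled basis U_i * Sigma_i. Because the
    discarded right singular vectors are orthonormal, the concatenation
    has the same left singular vectors and singular values as the
    concatenated (truncated) data matrices.
    """
    return truncated_basis(np.hstack(bases), k)
```

The point of the detour is memory: each per-material SVD only has to hold one BTF, and the merge step works on matrices with $k$ columns per material instead of one column per texel.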

Reconstruction. Given a sparse TAC7 measurement $\tilde{B} = SB$, we first apply the same color space transformation as above to the RGB part of the data. The monochromatic images are assumed to be pure luminance data (Y-channel of a YUV-triple), and we only apply the logarithm. Because of the color space transformation, we can now deal with each channel separately, and therefore assume our measurement to be of the form $S_{\log Y} B_{\log Y}$ and $S_{\{U,V\}} B_{\{U,V\}}$. We can then compute approximations $B \approx UV$ per channel via

$$V = \left((SU)^t (SU) + R^t R\right)^{-1} (SU)^t (SB). \tag{5}$$

As mentioned before, the matrix $R = \lambda\mathbf{1}$ is the
Tikhonov regularization matrix, where $\lambda$ represents the

regularization strength. Note that according to den

Brok et al. (den Brok et al., 2015), a typical BTF’s

U,V channels have a much lower rank than the lumi-

nance channel; we can thus hope that the very sparse

RGB data from the TAC7 measurement will still suf-

fice for obtaining suitable model parameters.
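Eq. (5) translates almost directly into a few lines of NumPy. The following is a minimal sketch for a single channel, assuming dense in-memory matrices and $R = \lambda\mathbf{1}$; the function name `reconstruct` and its argument layout are illustrative and not taken from the authors' implementation.

```python
import numpy as np

def reconstruct(U, S, B_sparse, lam):
    """Evaluate Eq. (5) for one channel and return (U @ V, V).

    U:        (n_lv, k) basis, already scaled by the singular values.
    S:        (n_s, n_lv) binary measurement matrix.
    B_sparse: (n_s, n_x) sparse measurement S @ B.
    lam:      regularization weight, i.e. R = lam * identity.
    """
    A = S @ U                                      # basis at measured configurations
    lhs = A.T @ A + lam**2 * np.eye(A.shape[1])    # (SU)^t (SU) + R^t R
    V = np.linalg.solve(lhs, A.T @ B_sparse)       # solve instead of explicit inverse
    return U @ V, V
```

Solving the linear system avoids forming the inverse explicitly, and all texels (columns of `B_sparse`) share the same $k \times k$ system matrix, so the cost per texel is small.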

4 RESULTS

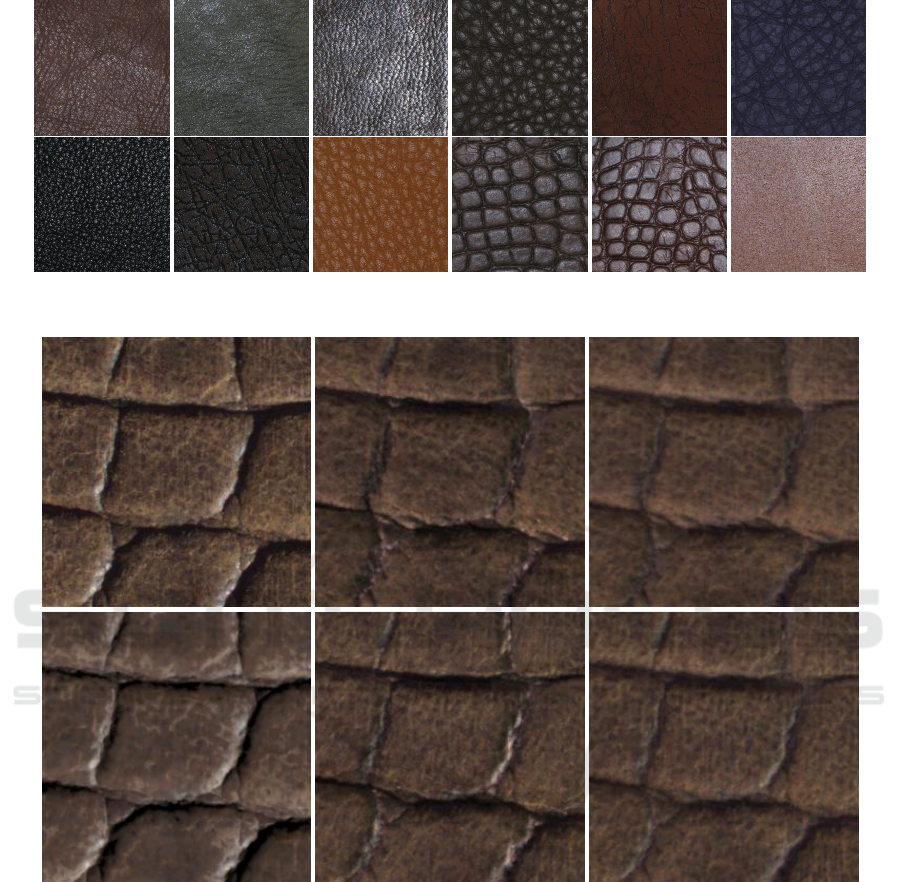

In our experiments, we focus on the material class

Leather, represented by 12 material samples as de-

picted in Figure 3. For BTF reconstruction, we leave

out the material to be reconstructed and compute the

linear model on the remaining 11 data sets. For the

presented samples, using 512 basis vectors per color

channel proved to be sufficient.

Reconstructions of size 256×256 texels were per-

formed on a server equipped with an Intel(R) Xeon(R)

E5-2650 CPU, operating at 2.00 GHz, with 128 GB of

RAM. Generating a BTF for one material sample took

up to ten minutes on this machine, which fits well to

the time the TAC7 software takes for computing an

SVBRDF for the same sample (≈ 12 – 15 minutes)

on a desktop computer equipped with an Intel Core

i7-4930 processor operating at 3.4 GHz and 32 GB of

RAM.

Figure 3: Overview of the samples in the material class Leather.

Figure 4: Reference image acquired with the TAC7 (a) and corresponding renderings (b-f) of the material sample Leather10, approximating lighting and viewing direction of the real capturing situation. For the rendering shown in (d), we used an SVBRDF computed using the TAC7 software's standard settings, fitting an isotropic Ward model to the measured data, while (b), (c), (e) and (f) illustrate reconstruction results. We used an angular sampling of 45° for the BTF reconstruction shown in (b) and (c) and 30° for (e) and (f). The middle and rightmost columns illustrate the effects of varying weights λ for the Tikhonov regularization term. Panels: (a) Reference (photo); (b) 45°, λ = (500, 100); (c) 45°, λ = (5000, 100); (d) SVBRDF; (e) 30°, λ = (500, 100); (f) 30°, λ = (5000, 100).

We tried two sampling strategies, involving sample rotations in steps of either 30° or 45° from 0° to 180°. This yields 480 monochrome and 80 color images in the 45° setting and 672 monochrome and 112 color images in the 30° setting. This corresponds to 2.14% and 3.00%, respectively, of the 26 136 images of a typical
BTF measurement with the DOME II device

(Schwartz et al., 2011). In Figure 4, we provide ren-

derings of the material sample Leather10 for both

sampling strategies in comparison to a rectified, HDR

combined input image taken using approximately the

same viewpoint and lighting direction, as well as a

rendering from an SVBRDF reconstruction, produced

by the software pipeline accompanying the TAC7.
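The image counts and percentages quoted above follow from simple arithmetic, which can be checked as below. The sketch assumes 4 cameras, the 24 monochrome and 4 color-filtered LEDs listed in the Figure 2 caption, and turntable positions that include both 0° and 180°; the constant and function names are illustrative.

```python
CAMERAS = 4
MONO_LEDS = 24         # from the Figure 2 caption
COLOR_LEDS = 4
DENSE_IMAGES = 26136   # typical DOME II measurement, as stated in the text

def image_counts(step_deg, max_deg=180):
    """Monochrome count, color count and percentage of the dense sampling."""
    rotations = max_deg // step_deg + 1   # both end points are included
    mono = rotations * CAMERAS * MONO_LEDS
    color = rotations * CAMERAS * COLOR_LEDS
    return mono, color, 100.0 * (mono + color) / DENSE_IMAGES

for step in (45, 30):
    mono, color, percent = image_counts(step)
    print(f"{step} deg: {mono} monochrome, {color} color, {percent:.2f}% of dense")
```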

One can observe that the reconstruction result is

quite sensitive to the choice of λ weighting the reg-

ularization term, especially for the luminance chan-

nel. Figure 4 demonstrates this effect by compar-

ing results at λ = (500, 100) (luminance, color) and

λ = (5000,100). A weight of 5000 for the luminance


component proved to be sufficient, as higher weights

did not influence the resulting quality significantly.

For the color component, results become stable al-

ready at λ ≥ 100.
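The role of $\lambda$ can be illustrated on synthetic data: with fewer measurements than basis vectors, a larger weight shrinks the coefficients at the price of a larger data residual. The sketch below uses random matrices, so the $\lambda$ values are not comparable to the weights 500 and 5000 reported above, whose scale depends on the actual basis and measurements; all names are illustrative.

```python
import numpy as np

def tikhonov_coefficients(A, b, lam):
    """Minimizer of ||A v - b||^2 + lam^2 ||v||^2."""
    lhs = A.T @ A + lam**2 * np.eye(A.shape[1])
    return np.linalg.solve(lhs, A.T @ b)

def sweep(A, b, lams):
    """Coefficient norm and data residual for each regularization weight."""
    rows = []
    for lam in lams:
        v = tikhonov_coefficients(A, b, lam)
        rows.append((lam, np.linalg.norm(v), np.linalg.norm(A @ v - b)))
    return rows

rng = np.random.default_rng(0)
A = rng.normal(size=(30, 64))    # fewer measurements than basis vectors
b = rng.normal(size=30)
for lam, coeff_norm, residual in sweep(A, b, [0.1, 1.0, 10.0, 100.0]):
    print(f"lambda={lam:7.1f}  |v|={coeff_norm:.4f}  residual={residual:.4f}")
```

In practice such a sweep would be run per channel against held-out view-light configurations rather than against the fitting residual.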

The visible effect that the highlights in the re-

constructions are less pronounced than e.g. in the

SVBRDF with its fitted lobe is due to the resampling

and compression which took place in preparation of

the database.

5 CONCLUSIONS AND OUTLOOK

We have presented a method for turning industrial

SVBRDF acquisition devices like the X-Rite TAC7 into

full BTF capturing devices by means of sparse recon-

struction techniques, thereby combining the speed of

SVBRDF acquisition with the meso-scale accuracy of

BTFs. Compared to the measurement time required

when using common devices like the DOME II, ranging
around 20 hours, our approach using a ready-to-buy
device makes it possible to obtain a usable BTF in
roughly two hours, including image acquisition (≤ 40

minutes), data processing (≤ 90 minutes) and fitting

(≈ 10 minutes).

Limitations lie especially in the method's ability

to model specular highlights which might be missed

due to the coarse angular sampling. A logical next

step would be to evaluate the applicability of our re-

construction technique to further material classes.

Another use of the TAC7’s special capabilities

could be BTF spectralization, using the different com-

binations of LEDs and filters to improve the accuracy

in methods for spectral reflectance acquisition such as

the method by Dong et al. (Dong et al., 2016).

Another possible enhancement, following Nielsen

et al. (Nielsen et al., 2015), as well as den Brok et

al. (den Brok et al., 2014) would be to optimize the

choice of TAC7 sampling directions depending on the

material class.

ACKNOWLEDGEMENTS

The authors wish to thank the X-Rite office in Bonn

for helpful advice on operating the TAC7 device.

REFERENCES

Aittala, M., Aila, T., and Lehtinen, J. (2016). Reflectance

modeling by neural texture synthesis. ACM Trans.

Graph., 35(4):65:1–65:13.

Aittala, M., Weyrich, T., and Lehtinen, J. (2013). Practi-

cal SVBRDF capture in the frequency domain. ACM

Transactions on Graphics (Proc. SIGGRAPH), 32(4).

Aittala, M., Weyrich, T., and Lehtinen, J. (2015). Two-

shot SVBRDF capture for stationary materials. ACM

Transactions on Graphics (TOG), 34(4):110.

Dana, K. J., Nayar, S. K., van Ginneken, B., and Koen-

derink, J. J. (1997). Reflectance and texture of real-

world surfaces. In 1997 Conference on Computer Vi-

sion and Pattern Recognition (CVPR ’97), June 17-19,

1997, San Juan, Puerto Rico, pages 151–157. IEEE

Computer Society.

den Brok, D., Steinhausen, H. C., Hullin, M. B., and Klein,

R. (2014). Patch-based sparse reconstruction of mate-

rial BTFs. Journal of WSCG, 22(2):83–90.

den Brok, D., Weinmann, M., and Klein, R. (2015). Linear

models for material BTFs and possible applications.

In Proceedings of the Eurographics Workshop on Ma-

terial Appearance Modeling: Issues and Acquisition,

pages 15–19. Eurographics Association.

Dong, W., Shen, H.-L., Du, X., Shao, S.-J., and Xin, J. H.

(2016). Spectral bidirectional texture function recon-

struction by fusing multiple-color and spectral im-

ages. Appl. Opt., 55(36):10400–10408.

Dong, Y., Wang, J., Tong, X., Snyder, J., Lan, Y., Ben-Ezra,

M., and Guo, B. (2010). Manifold bootstrapping for

SVBRDF capture. ACM Trans. Graph., 29(4):98:1–

98:10.

Filip, J. and Haindl, M. (2009). Bidirectional texture func-

tion modeling: A state of the art survey. Pattern Anal-

ysis and Machine Intelligence, IEEE Transactions on,

31(11):1921–1940.

Filip, J., Vávra, R., Haindl, M., Žid, P., Krupička, M., and

Havran, V. (2013). BRDF slices: Accurate adaptive

anisotropic appearance acquisition. pages 1468–1473.

Furukawa, R., Kawasaki, H., Ikeuchi, K., and Sakauchi, M.

(2002). Appearance based object modeling using tex-

ture database: Acquisition, compression and render-

ing. pages 257–266.

Haindl, M. and Filip, J. (2013). Visual Texture: Accu-

rate Material Appearance Measurement, Representa-

tion and Modeling. Springer.

Holroyd, M., Lawrence, J., and Zickler, T. (2010). A coax-

ial optical scanner for synchronous acquisition of 3D

geometry and surface reflectance. ACM Transactions

on Graphics (TOG), 29(4):99.

Hui, Z. and Sankaranarayanan, A. C. (2015). A dictionary-

based approach for estimating shape and spatially-

varying reflectance. In 2015 IEEE International

Conference on Computational Photography (ICCP),

pages 1–9.

Koudelka, M. L., Magda, S., Belhumeur, P. N., and Krieg-

man, D. J. (2003). Acquisition, compression, and

synthesis of bidirectional texture functions. In ICCV

Workshop on Texture Analysis and Synthesis.

Lensch, H. P. A., Kautz, J., Goesele, M., Heidrich, W.,

and Seidel, H.-P. (2001). Image-based reconstruc-

tion of spatially varying materials. In Gortler, S. J.

and Myszkowski, K., editors, Rendering Techniques

2001: Proceedings of the Eurographics Workshop in


London, United Kingdom, June 25–27, 2001, pages

103–114, Vienna. Springer Vienna.

Marschner, S. R., Westin, S. H., Arbree, A., and Moon,

J. T. (2005). Measuring and modeling the appearance

of finished wood. ACM Transactions on Graphics,

24(3):727–734.

Matusik, W., Pfister, H., Brand, M., and McMillan, L.

(2003a). A data-driven reflectance model. ACM

Trans. Graph., 22(3):759–769.

Matusik, W., Pfister, H., Brand, M., and McMillan, L.

(2003b). Efficient isotropic BRDF measurement. In

Proceedings of the 14th Eurographics Workshop on

Rendering, EGRW ’03, pages 241–247, Aire-la-Ville,

Switzerland. Eurographics Association.

Matusik, W., Pfister, H., Ziegler, R., Ngan, A., and McMil-

lan, L. (2002). Acquisition and rendering of transpar-

ent and refractive objects. pages 267–278.

Müller, G., Meseth, J., Sattler, M., Sarlette, R., and Klein,

R. (2004). Acquisition, synthesis and rendering of

Bidirectional Texture Functions. pages 69–94. INRIA

and Eurographics Association, Aire-la-Ville, Switzer-

land.

Nicodemus, F., Richmond, J., Hsia, J., Ginsberg, I., and

Limperis, T. (1977). Geometrical considerations and

nomenclature for reflectance. Natl. Bur. of Stand.
Monogr., 160.

Nielsen, J. B., Jensen, H. W., and Ramamoorthi, R. (2015).

On optimal, minimal BRDF sampling for reflectance

acquisition. ACM Trans. Graph., 34(6):186:1–186:11.

Nöll, T., J., K., Reis, G., and Stricker, D. (2013). Faithful,

compact and complete digitization of cultural heritage

using a full-spherical scanner. pages 15–22.

Ruiters, R., Schwartz, C., and Klein, R. (2012). Data driven

surface reflectance from sparse and irregular samples.

Computer Graphics Forum (Proc. of Eurographics),

31(2):315–324.

Schwartz, C., Sarlette, R., Weinmann, M., and Klein, R.

(2013). DOME II: A parallelized BTF acquisition sys-

tem. In Proceedings of the Eurographics Workshop

on Material Appearance Modeling, pages 25–31. The

Eurographics Association.

Schwartz, C., Sarlette, R., Weinmann, M., Rump, M., and

Klein, R. (2014). Design and implementation of prac-

tical bidirectional texture function measurement de-

vices focusing on the developments at the university

of Bonn. Sensors, 14(5).

Schwartz, C., Weinmann, M., Ruiters, R., and Klein, R.

(2011). Integrated high-quality acquisition of geome-

try and appearance for cultural heritage. pages 25–32.

Steinhausen, H. C., den Brok, D., Hullin, M. B., and Klein,

R. (2014). Acquiring bidirectional texture functions

for large-scale material samples. Journal of WSCG,

22(2):73–82.

Vávra, R. and Filip, J. (2016). Minimal sampling for effective
acquisition of anisotropic BRDFs. Computer

Graphics Forum, 35(7):299–309.

Ward, G. J. (1992). Measuring and modeling anisotropic

reflection. ACM SIGGRAPH Computer Graphics,

26(2):265–272.

Weinmann, M., Gall, J., and Klein, R. (2014). Material

classification based on training data synthesized us-

ing a BTF database. In Computer Vision - ECCV

2014 - 13th European Conference, Zurich, Switzer-

land, September 6-12, 2014, Proceedings, Part III,

pages 156–171. Springer International Publishing.

Weinmann, M. and Klein, R. (2015). Advances in geome-

try and reflectance acquisition (course notes). In SIG-

GRAPH Asia 2015 Courses, SA ’15, pages 1:1–1:71,

New York, NY, USA. ACM.

Weyrich, T., Matusik, W., Pfister, H., Bickel, B., Donner,

C., Tu, C., McAndless, J., Lee, J., Ngan, A., Jensen,

H. W., and Gross, M. (2006). Analysis of human faces

using a measurement-based skin reflectance model.

ACM Transactions on Graphics, 25:1013–1024.

Wong, T.-T., Heng, P.-A., Or, S.-H., and Ng, W.-Y. (1997).

Image-based rendering with controllable illumination.

In Proceedings of the Eurographics Workshop on Rendering
Techniques '97, pages 13–22, London, UK.
Springer-Verlag.

X-Rite (2016). TAC7.
http://www.xrite.com/categories/Appearance/tac7.
Accessed 1 June 2017.

Xu, Z., Nielsen, J. B., Yu, J., Jensen, H. W., and Ramamoor-

thi, R. (2016). Minimal BRDF sampling for two-shot

near-field reflectance acquisition. ACM Trans. Graph.,

35(6):188:1–188:12.

Yu, J., Xu, Z., Mannino, M., Jensen, H. W., and Ramamoor-

thi, R. (2016). Sparse Sampling for Image-Based

SVBRDF Acquisition. In Klein, R. and Rushmeier,

H., editors, Workshop on Material Appearance Mod-

eling. The Eurographics Association.

Zhou, Z., Chen, G., Dong, Y., Wipf, D., Yu, Y., Snyder,

J., and Tong, X. (2016). Sparse-as-possible SVBRDF

acquisition. ACM Trans. Graph., 35(6):189:1–189:12.
