Distributed and Resource-Aware Load Testing of WS-BPEL Compositions

Afef Jmal Maâlej¹, Mariam Lahami¹, Moez Krichen¹,² and Mohamed Jmaïel¹,³

¹ ReDCAD Laboratory, National School of Engineers of Sfax, University of Sfax, B.P. 1173, 3038 Sfax, Tunisia

² Faculty of CSIT, Al-Baha University, Saudi Arabia

³ Digital Research Center of Sfax, B.P. 275, Sakiet Ezzit, 3021 Sfax, Tunisia

Keywords: Web Services Composition, Distributed Load Testing, Distributed Log Analysis, Performance Monitoring, Resource Awareness, Distributed Execution Environment, Tester Instance Placement.

Abstract: Load testing is an important type of testing for Web services compositions, since such applications are accessed concurrently by many users. Load testing these applications is therefore necessary in order to detect problems that appear under elevated loads. For this purpose, we propose a distributed and resource-aware test architecture for studying the behavior of WS-BPEL compositions under load conditions. The major contribution of this paper consists of (i) looking for the best node to host the execution of each tester instance, (ii) running a load test during which the composition under test is monitored and performance data are recorded, and (iii) analyzing the resulting test logs in a distributed manner in order to identify problems under load. We also illustrate our approach by means of a case study in the healthcare domain, in the context of resource-aware load testing.

1 INTRODUCTION

Nowadays, Web services compositions (particularly WS-BPEL¹ (Barreto et al., 2007), or BPEL, compositions) offer different utilities to hundreds or even thousands of users at the same time. An important challenge in testing these applications is load testing (Beizer, 1990), which is frequently performed in order to ensure that a system satisfies a particular performance requirement under a heavy load. In our context, load refers to the rate of incoming requests to a given system during a period of time.

In addition to conventional functional testing procedures, such as unit and integration testing, load testing is a required activity because it reveals programming errors that do not appear when the composition is executed with a small workload or for a short time. Such errors emerge only when the system is executed under a heavy load or over a long period of time. On the other hand, a given process may be correctly implemented but fail under some particular load conditions because of external causes (e.g., misconfiguration, hardware failures, buggy load generator, etc.) (Jiang et al., 2008). Hence, it is important to identify and remedy these different problems.

¹ Web Services-Business Process Execution Language

To handle the challenges of load testing, we proposed in a previous work (Maâlej and Krichen, 2015) an approach that combines functional and load testing of BPEL compositions. Our study is based on the concept of conformance testing, which verifies that a system implementation performs according to its specified requirements. More precisely, we proposed monitoring the behavior of BPEL compositions during load testing in order to perform an advanced analysis of test results afterwards. This step aims to identify both the causes and the nature of detected problems. To that end, the execution context of the application under test is taken into account by periodically capturing, under load, performance metrics of the system such as CPU usage and memory usage.

However, recognizing problems under load is a challenging and time-consuming activity due to the large amount of generated data and the long running time of load tests. During this process, several risks may arise that undermine the quality of the SUT² and may even cause software and hardware failures such as SUT delays, memory or CPU overload, node crashes, etc. Such risks may also impact the tester itself, which can then produce faulty test results.

² System Under Test

Jmal Maâlej, A., Lahami, M., Krichen, M. and Jmaïel, M. Distributed and Resource-Aware Load Testing of WS-BPEL Compositions. DOI: 10.5220/0006693400290038. In Proceedings of the 20th International Conference on Enterprise Information Systems (ICEIS 2018), pages 29-38. ISBN: 978-989-758-298-1. Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

To overcome such problems, we extend our previous approach for functional and load testing of BPEL compositions with distribution and resource-awareness capabilities. Supporting test distribution over the network may considerably alleviate the test workload at runtime, especially when the SUT is running on a cluster of BPEL servers. Moreover, it is highly desirable to provide a resource-aware test system that respects resource availability and connectivity constraints, in order to have high confidence in the validity of test results and to reduce the burden and cost that testing imposes on the SUT. To show the feasibility of the proposed approach, we introduce a case study in the healthcare domain, outline its BPEL process and apply it in the context of distributed and resource-aware load testing.

The remainder of this paper is organized as follows. Section 2 describes our proposed testing approach for the study of BPEL compositions under load conditions. Section 3 describes our resource-aware tester deployment solution. In Section 4, we illustrate our test solution by means of a case study in the healthcare domain. Section 5 discusses related work on load testing, test distribution and test resource awareness. Finally, Section 6 concludes the paper and discusses items for future work.

2 OUR APPROACH FOR THE STUDY OF WS-BPEL COMPOSITIONS UNDER LOAD

Our proposed approach is based on gray box testing, a software debugging strategy in which the tester has limited knowledge of the internal details of the program. In our case, we simulate the different partner services of the composition under test, as we suppose that only the interactions between the latter and its partners are known. Furthermore, we rely on the online testing mode, in which test cases are generated and executed simultaneously (Mikucionis et al., 2004). Moreover, we choose to distribute the components of the testing architecture over different nodes in order to realistically run a large number of virtual clients.

2.1 Principle of Load Distribution

When testing the performance of an application, it can be beneficial to perform the tests under a typical load. This can be difficult if the application is running in a development environment. One way to emulate an application running under load is through the use of load generator scripts. Distributed testing is to be used when the limits of a machine are reached in terms of CPU³, memory or network. It can first be used within one machine (many VMs⁴ on one machine). If the limits of one reasonable VM are reached in terms of CPU and memory, load distribution can be used across many machines (one or many VMs on one or many machines). In order to realize remote load test distribution, a test manager is responsible for monitoring the test execution and distributing the required load between the different load generators. The latter concurrently invoke the system under test as imposed by the test manager.
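The role of the test manager in splitting the load can be sketched as follows. This is only a minimal illustration under our own assumptions: the `LoadOrder` structure, the `distribute_load` function and the generator names are hypothetical and do not come from the described framework.

```python
from dataclasses import dataclass


@dataclass
class LoadOrder:
    """Order sent by the test manager to one load generator (hypothetical structure)."""
    generator: str   # name of the load generator
    calls: int       # number of invocations this generator must perform
    delay_s: float   # delay between two successive invocations, in seconds


def distribute_load(total_calls: int, generators: list[str], delay_s: float) -> list[LoadOrder]:
    """Split total_calls as evenly as possible between the generators."""
    if not generators:
        raise ValueError("at least one load generator is required")
    base, extra = divmod(total_calls, len(generators))
    # The first `extra` generators receive one additional call each.
    return [
        LoadOrder(name, base + (1 if idx < extra else 0), delay_s)
        for idx, name in enumerate(generators)
    ]


orders = distribute_load(101, ["generator-1", "generator-2"], delay_s=0.5)
for order in orders:
    print(order.generator, order.calls)
```

With two generators, each one receives roughly half of the calls, and adding generator names to the list spreads the same load over more machines.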

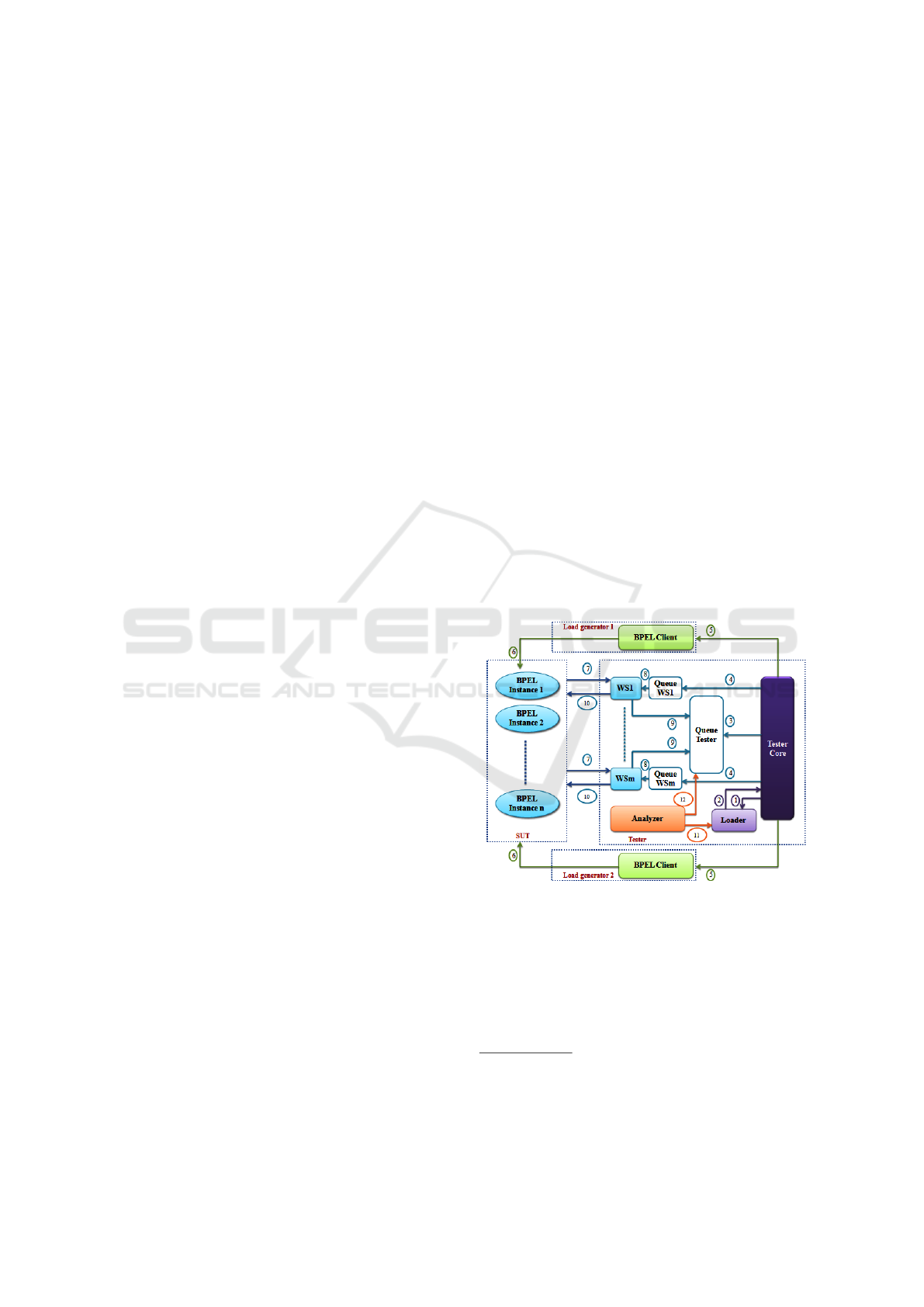

2.2 Load Testing Architecture

In this section, we describe the distributed framework that we proposed for studying the behavior of BPEL compositions under load conditions (Maâlej and Krichen, 2015). For simplicity, we consider that our load testing architecture is composed, besides the SUT and the tester, of two load generators⁵, as depicted in Figure 1.

Figure 1: Load Testing Architecture.

³ Central Processing Unit

⁴ Virtual Machines

⁵ More machines may be considered as load generators in order to distribute the load more efficiently.

As shown in Figure 1, the main components of our proposed architecture are:

• The System under test (SUT): a new BPEL instance is created for each call of the composition under test. A BPEL instance is defined by a unique identifier. Each created instance invokes its own partner service instances and communicates with them by exchanging messages.

• The tester (Tester): it represents the environment of the system under test and consists of:

– The Web services (WS1, ..., WSm): these services correspond to simulated partners of the composition under test. For each call of the composition during load testing, new instances of partner services are created.

– The Queues (Queue WS1, ..., Queue WSm): these entities are simple text files through which partner services and the Tester Core exchange messages.

– The Loader: it loads the SUT specification described in Timed Automata, as well as the WSDL files of the composition under test and of each partner service. Moreover, it defines the types of input/output variables of the considered composition and of its partner services.

– The Tester Core: it generates random input messages for the BPEL process under test. It communicates with the different partner services of the composition by sending them the types of input and output messages. For partner services involved in synchronous communications, the Tester Core also sends information about their response times to the composed service. Finally, it distributes the load between the two generators: it orders each one to perform roughly half of the concurrent calls to the composition under test, and passes as parameters the delay between two successive invocations as well as the input variable(s) of the system.

– The test log (QueueTester): it stores the general information of the test (number of calls of the composition under test, delay between the invocations of BPEL instances, etc.). It also saves the identifiers of created instances, the invoked services, the messages received from the SUT, the times of their invocations and the verdicts resulting from checking the types of partner input messages. This log is consulted by the Analyzer to verify the functioning of the different BPEL instances and to diagnose the nature and cause of the detected problems.

– The test analyzer (Analyzer): this component is responsible for the offline analysis of the test log QueueTester. It generates a final test report and identifies, as far as possible, the limitations of the tested composition under load conditions.

• The load generators (BPEL Client): these entities carry out the orders of the Tester Core by performing concurrent invocations of the composed service. To do so, they receive from the tester, as test parameters, the input(s) of the composition under test, the number of required process calls and the delay between two successive invocations.
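The behavior of a load generator, as described in the last item above, can be sketched in a few lines. This is an illustrative sketch rather than the actual BPEL Client: `run_load_generator` and `fake_composition` are hypothetical names, and the stand-in function replaces the real request that would be sent to the composition under test.

```python
import threading
import time
from typing import Callable


def run_load_generator(
    invoke: Callable[[int, dict], str],
    inputs: dict,
    calls: int,
    delay_s: float,
) -> list[tuple[int, str]]:
    """Start `calls` invocations, one every `delay_s` seconds, without waiting for replies."""
    results: list[tuple[int, str]] = []
    lock = threading.Lock()

    def worker(call_id: int) -> None:
        reply = invoke(call_id, inputs)
        with lock:  # protect the shared result list
            results.append((call_id, reply))

    threads = []
    for call_id in range(calls):
        thread = threading.Thread(target=worker, args=(call_id,))
        thread.start()
        threads.append(thread)
        if call_id < calls - 1:
            time.sleep(delay_s)  # delay between two successive invocations
    for thread in threads:
        thread.join()
    return sorted(results)


def fake_composition(call_id: int, inputs: dict) -> str:
    """Stand-in for the composition under test (assumption for this sketch)."""
    time.sleep(0.01)  # simulated processing time
    return f"reply-{call_id}-{inputs['patient']}"


replies = run_load_generator(fake_composition, {"patient": "p1"}, calls=5, delay_s=0.001)
```

Each invocation runs in its own thread, so a slow reply does not postpone the following calls; only the configured delay separates two successive invocations.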

Besides, load testing of BPEL compositions in our approach is accompanied by a module that monitors the performance of the execution environment, in order to supervise the whole system infrastructure during the test. In particular, this module allows the tester to select, before starting the test, the metrics of interest to monitor, and then displays their evolution in real time. In addition, the monitoring feature helps in establishing the correlation between performance problems and the errors detected by our solution.
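As a rough illustration of such a monitoring module, the sketch below samples a set of selected metrics at a fixed period. The `monitor` function and the probe callables are assumptions made for this example; a real deployment would plug in probes that read CPU or memory usage from the operating system.

```python
import time
from typing import Callable


def monitor(
    probes: dict[str, Callable[[], float]],
    selected: list[str],
    samples: int,
    period_s: float,
) -> list[dict[str, float]]:
    """Sample the selected metrics `samples` times, once every `period_s` seconds."""
    unknown = [name for name in selected if name not in probes]
    if unknown:
        raise KeyError(f"no probe registered for: {unknown}")
    history = []
    for n in range(samples):
        row = {"t": time.monotonic()}  # timestamp of this sample
        for name in selected:
            row[name] = probes[name]()
        history.append(row)
        if n < samples - 1:
            time.sleep(period_s)
    return history


# Hypothetical probes returning constant values, for demonstration only.
probes = {"cpu": lambda: 42.0, "memory": lambda: 512.0}
history = monitor(probes, selected=["cpu"], samples=3, period_s=0.001)
```

Keeping the timestamp with each sample makes it possible to align the metric history with the entries of the test log when looking for correlations.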

2.3 Automated Advanced Load Test Analysis Approach

Current industrial practices for checking the results of a load test remain mainly ad hoc and rely on high-level checks. In addition, looking for functional problems in a load test is a time-consuming and difficult task, due to challenges such as undocumented system behavior, monitoring overhead, time pressure and the large volume of data. In particular, the ad hoc logging mechanism is the most commonly used, as developers insert output statements (e.g. printf or System.out) into the source code for debugging purposes (James et al., 2010). Most practitioners then look for functional problems under load by manually searching for specific keywords such as failure or error (Jiang, 2010). After that, load testing practitioners analyze the context of the matched log lines to determine whether they indicate functional problems or not. Depending on the length of a load test and the volume of generated data, these checks take load testing practitioners several hours.

However, few research efforts are dedicated to the automated analysis of load testing results, usually due to limited access to large-scale systems for use as case studies. Automated and systematic load testing analysis has become much needed, as many services are offered online to an increasing number of users. Motivated by the importance and challenges of load testing analysis, an automated approach was proposed in (Maâlej and Krichen, 2015) to detect functional and performance problems in a load test by analyzing the recorded execution logs and performance metrics. The operations performed during load testing of BPEL compositions are stored in QueueTester. In order to recognize which BPEL instance is responsible for a given action, each logged action starts with the identifier of its corresponding BPEL instance (BPEL-ID). At the end of the test run, the Analyzer consults QueueTester.

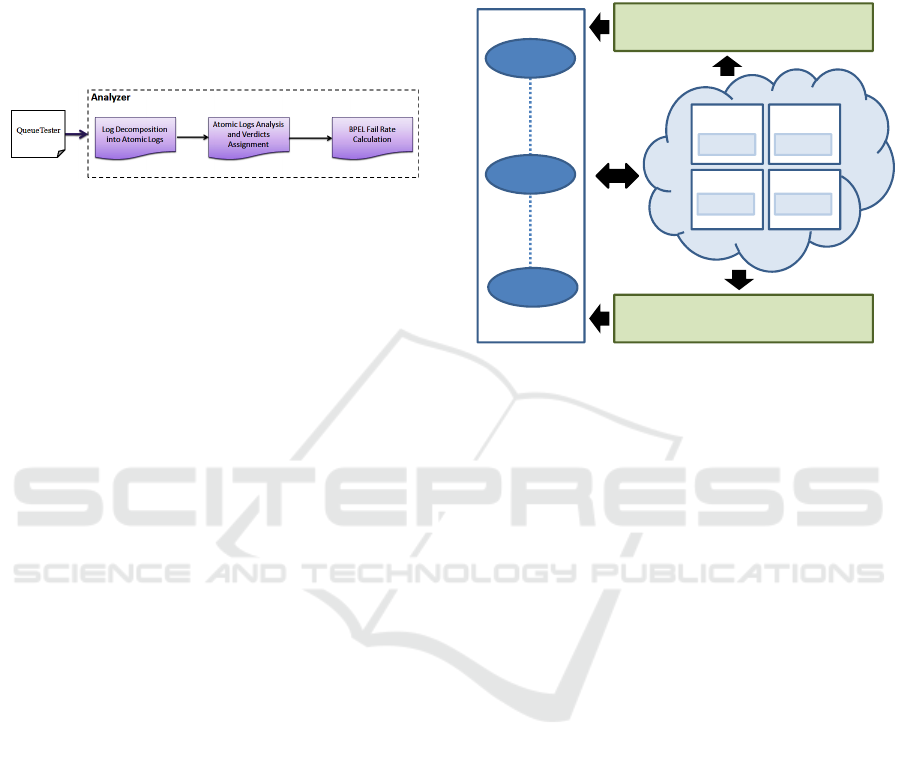

Figure 2: Automated Log Analysis Technique.

Hence, our automated log analysis technique takes as input the log file (QueueTester) stored during load testing, and goes through three steps, as shown in Figure 2:

• Decomposition of QueueTester: based on BPEL-ID, the Analyzer decomposes the information into atomic test reports. Each report is named BPEL-ID and contains information about the instance whose identifier is BPEL-ID.

• Analysis of atomic logs: the Analyzer consults the generated atomic test reports of the different BPEL instances. It verifies the observed executed actions of each instance by referring to the requirements specified in the model (Timed Automata). Finally, the Analyzer assigns the corresponding verdict to each instance and identifies detected problems.

• Generation of final test report: this step consists in producing a final test report that summarizes the test results for all instances and describes both the nature and the cause of each observed FAIL verdict.
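The three steps above can be sketched as follows. Several simplifications are assumptions of this example and not part of the described tool: the log line format `BPEL-ID;action;elapsed_seconds` is hypothetical, and the check against the Timed Automata model is replaced by a much simpler comparison with an expected action sequence and a single time limit.

```python
from collections import defaultdict


def decompose(log_lines: list[str]) -> dict[str, list[tuple[str, float]]]:
    """Step 1: group log entries into one atomic report per BPEL-ID."""
    reports: dict[str, list[tuple[str, float]]] = defaultdict(list)
    for line in log_lines:
        bpel_id, action, elapsed = line.strip().split(";")
        reports[bpel_id].append((action, float(elapsed)))
    return dict(reports)


def analyze(report, expected_actions, max_delay_s):
    """Step 2: assign a verdict to one instance (simplified stand-in for the model check)."""
    actions = [action for action, _ in report]
    if actions != expected_actions:
        return "FAIL", f"unexpected action sequence {actions}"
    slow = [action for action, elapsed in report if elapsed > max_delay_s]
    if slow:
        return "FAIL", f"time limit exceeded by {slow}"
    return "PASS", ""


def final_report(log_lines, expected_actions, max_delay_s):
    """Step 3: summarize the verdict and the failure cause of every instance."""
    return {
        bpel_id: analyze(report, expected_actions, max_delay_s)
        for bpel_id, report in decompose(log_lines).items()
    }


log = [
    "id1;invoke WS1;0.4",
    "id2;invoke WS1;0.5",
    "id1;invoke WS2;2.0",
    "id2;invoke WS2;31.0",
]
report = final_report(log, ["invoke WS1", "invoke WS2"], max_delay_s=30.0)
```

In this example the entries of the two instances are interleaved in the log, which is why the decomposition step is needed before any per-instance check.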

Our load test analysis is thus automated. Yet, the analysis of the atomic logs is performed sequentially for each BPEL instance, which may be costly, especially in terms of execution time. Another limitation is the use of only one tester instance, and thus one analyzer, for each test case. To solve this issue, we propose to use several tester instances, which are deployed on distributed nodes and connected to the BPEL process under test. Each tester instance studies the behavior of the composition under different load conditions (SUT inputs, number of required process calls, delay between two successive invocations, etc.).

3 CONSTRAINED TESTER DEPLOYMENT

In this section, we deal with the assignment of tester instances to test nodes while satisfying some resource and connectivity constraints. The main goal of this step is to distribute the load efficiently across virtual machines, computers, or even the cloud. This is crucial for the performance of the test system and for gaining confidence in test results.

[Figure 3 content: load generators and tester instances (Tester 1, ..., Tester i, Tester j, Tester k) hosted on VMs in the cloud and connected to the BPEL instances (1, ..., i, ..., n) of the SUT.]

Figure 3: Distributed Load Testing Architecture.

Figure 3 illustrates the distributed load testing architecture, in which several tester instances are created and connected to the BPEL process under test. These instances run in parallel in order to perform load testing efficiently.

Recall that each tester instance includes an analyzer component that takes as input the generated atomic test reports of the different BPEL instances and generates as output a Local Verdict (LV). As outlined in Figure 4, we propose a new component called Test System Coordinator, which is mainly in charge of collecting the local verdicts generated by the analyzer instances and producing the Global Verdict (GV). As depicted in Algorithm 1, if all local verdicts are PASS, the global verdict is PASS. If at least one local verdict is FAIL (respectively INCONCLUSIVE), the global verdict is FAIL (respectively INCONCLUSIVE).
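The aggregation performed by the Test System Coordinator can be written as a short Python function that mirrors the loop of Algorithm 1. The `Verdict` enumeration and the function name are our own choices for this sketch.

```python
from enum import Enum


class Verdict(Enum):
    PASS = "PASS"
    FAIL = "FAIL"
    INCONCLUSIVE = "INCONCLUSIVE"


def global_verdict(local_verdicts: list[Verdict]) -> Verdict:
    """Return the first FAIL or INCONCLUSIVE local verdict, else PASS (as in Algorithm 1)."""
    for verdict in local_verdicts:
        if verdict is Verdict.FAIL:
            return Verdict.FAIL
        if verdict is Verdict.INCONCLUSIVE:
            return Verdict.INCONCLUSIVE
    return Verdict.PASS


print(global_verdict([Verdict.PASS, Verdict.FAIL, Verdict.PASS]).value)
```

Read literally, the loop stops at the first verdict that is not PASS, so a list containing both FAIL and INCONCLUSIVE yields whichever comes first. The text does not state which of the two should take precedence in that case.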

Once the distributed load testing architecture is elaborated, we have to assign its components (i.e., its tester instances) efficiently to the execution nodes. To do so, we have defined two kinds of constraints that have to be respected at this stage: resource and connectivity constraints. They are detailed in the following subsections.

3.1 Formalizing Resource Constraints

In general, load testing is seen as a resource-consuming activity. Consequently, resource allocation should be taken into account during test distribution, with the aim of decreasing test overhead and increasing confidence in test results.

[Figure 4 content: Tester 1, ..., Tester i, ..., Tester k, each with its Analyzer and Logs and hosted on VM 1, ..., VM i, ..., VM k, send their local verdicts LV1, ..., LV i, ..., LV k to the Test System Coordinator, which produces the global verdict GV.]

Figure 4: Distributed Load Test Analysis Architecture.

Algorithm 1: Generation of the Global Verdict.

Input: The array LocalVerdicts.

Output: The global verdict.

    BEGIN
    for i = 0 to LocalVerdicts.size − 1 do
        if (LocalVerdicts[i] == FAIL) then
            return FAIL
        end if
        if (LocalVerdicts[i] == INCONCLUSIVE) then
            return INCONCLUSIVE
        end if
    end for
    return PASS
    END

For each node in the test environment, three resources are monitored: the available memory, the current CPU load and the energy level (i.e., battery). The value of each resource can be captured directly on each node through the use of internal monitors. Formally, they are represented through three vectors: $C$, which contains the provided CPU, $R$, which contains the available RAM⁶, and $E$, which contains the energy level.

For each tester instance, we introduce the memory size (i.e., the memory occupation needed by a tester during its execution), the CPU load and the energy consumption properties. We suppose that these values are provided by the test manager or computed after a preliminary test run. Similarly, they are formalized through three vectors: $D_c$, which contains the required CPU, $D_r$, which contains the required RAM, and $D_e$, which contains the energy required by each tester.

As the proposed approach is resource aware, resource availability is checked during test distribution, usually before starting the load testing process. Thus, the overall resources required by the $n$ tester instances must not exceed the resources available on the $m$ nodes. This rule is formalized through the three constraints given in (1), which must hold for each node $j$, where the two-dimensional variable $x_{ij}$ is equal to 1 if the tester instance $i$ is assigned to the node $j$, and 0 otherwise.

⁶ Random Access Memory

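$$
\begin{aligned}
\sum_{i=1}^{n} x_{ij}\, dc_i &\le c_j \quad \forall j \in \{1, \cdots, m\} \\
\sum_{i=1}^{n} x_{ij}\, dr_i &\le r_j \quad \forall j \in \{1, \cdots, m\} \\
\sum_{i=1}^{n} x_{ij}\, de_i &\le e_j \quad \forall j \in \{1, \cdots, m\}
\end{aligned}
\tag{1}
$$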

3.2 Formalizing Connectivity Constraints

Dynamic environments are characterized by frequent and unpredictable changes in connectivity caused by firewalls, non-routing networks, node mobility, etc. For this reason, when assigning a tester instance to a host computer, we have to make sure that at least one path exists in the network to communicate with the component under test. This is expressed by constraint (2):

$$
x_{ij} = 0 \quad \forall j \in forbiddenNode(i) \tag{2}
$$

where the $forbiddenNode(i)$ function returns the set of forbidden nodes for a test component $i$.

A satisfying test placement solution is obtained simply by fulfilling constraints (1) and (2).
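Checking whether a candidate placement is satisfying amounts to evaluating constraints (1) and (2) directly. The sketch below does so for a 0/1 assignment matrix; the function name, the tuple layout `(cpu, ram, energy)` and the numeric values are assumptions chosen for illustration.

```python
def is_feasible(x, demand, capacity, forbidden):
    """Check constraints (1) and (2) for a 0/1 assignment matrix x[i][j].

    demand[i] and capacity[j] are (cpu, ram, energy) tuples;
    forbidden[i] is the set of node indices that tester i must not use.
    """
    n, m = len(x), len(capacity)
    # Constraint (2): no tester is placed on one of its forbidden nodes.
    for i in range(n):
        if any(x[i][j] == 1 for j in forbidden[i]):
            return False
    # Constraint (1): on each node, the summed demand must fit every resource.
    for j in range(m):
        for r in range(3):
            used = sum(x[i][j] * demand[i][r] for i in range(n))
            if used > capacity[j][r]:
                return False
    return True


# Hypothetical values: two testers, two nodes, node 1 forbidden for tester 1.
demand = [(20, 256, 5), (30, 512, 10)]
capacity = [(40, 1024, 50), (60, 512, 50)]
forbidden = [set(), {1}]

print(is_feasible([[0, 1], [1, 0]], demand, capacity, forbidden))  # one tester per node
print(is_feasible([[1, 0], [1, 0]], demand, capacity, forbidden))  # both on node 0
```

The first placement respects both constraints, whereas the second one overloads the CPU of node 0 (20 + 30 exceeds 40).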

3.3 Optimizing the Tester Instance Placement Problem

Looking for an optimal test placement solution consists in identifying the best node to host the concerned tester with respect to two criteria: its distance from the node under test and its link bandwidth capacity. To do so, we attribute a profit value $p_{ij}$ to the assignment of the tester $i$ to a node $j$. For this purpose, a matrix $P_{n \times m}$ is computed as follows:

$$
p_{ij} = \begin{cases} 0 & \text{if } j \in forbiddenNode(i) \\ maxP - k \times step_p & \text{otherwise} \end{cases} \tag{3}
$$

where $maxP$ is a constant, $step_p = \frac{maxP}{m}$, and $k$ corresponds to the index of the node $j$ in a Rank Vector that is computed for each node under test. This vector classifies the connected nodes according to both criteria: their distance from the node under test and their link bandwidth capacities.
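Equation (3) can be evaluated with a few lines of Python. In this sketch, the function name and the zero-based node indices are our own conventions, and giving a profit of 0 to nodes that are absent from the Rank Vector is an assumption. The numeric values are those of the illustrative example of Section 4.

```python
def profit_row(rank_vector: list[int], forbidden: set[int], m: int, max_p: float) -> list[float]:
    """Compute p_ij for one tester i over nodes 0..m-1, following equation (3).

    rank_vector lists the reachable node indices from best to worst,
    so the position of a node in it is the index k of equation (3).
    """
    step = max_p / m
    row = [0.0] * m  # forbidden nodes (and, by assumption, unranked nodes) get profit 0
    for k, node in enumerate(rank_vector):
        if node not in forbidden:
            row[node] = max_p - k * step
    return row


# Nodes N1..N4 are indices 0..3; the Rank Vector is (N1, N3, N2) and N4 is forbidden.
row = profit_row(rank_vector=[0, 2, 1], forbidden={3}, m=4, max_p=100)
print(row)  # [100.0, 50.0, 75.0, 0.0]
```

With $maxP = 100$ and $m = 4$, the step is 25, so the node ranked first keeps the full profit and each following rank loses 25.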

As a result, the constrained tester instance placement approach generates the best deployment host for each tester instance involved in the load testing process by maximizing the total profit value while satisfying the former resource and connectivity constraints. Thus, it is formalized as a variant of the Knapsack Problem, called the Multiple Multidimensional Knapsack Problem (MMKP) (Jansen, 2009).

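$$
\text{MMKP} = \left\{
\begin{array}{lr}
\text{maximize } Z = \sum_{i=1}^{n} \sum_{j=1}^{m} p_{ij}\, x_{ij} & (4) \\
\text{subject to (1) and (2)} & \\
\sum_{j=1}^{m} x_{ij} = 1 \quad \forall i \in \{1, \cdots, n\} & (5) \\
x_{ij} \in \{0, 1\} \quad \forall i \in \{1, \cdots, n\} \text{ and } \forall j \in \{1, \cdots, m\} &
\end{array}
\right.
$$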

Expression (4) corresponds to the objective function, which maximizes tester instance profits while satisfying resource (1) and connectivity (2) constraints. Constraint (5) indicates that each tester instance has to be assigned to exactly one node.

To solve such a problem, a well-known solver

in the constraint programming area, namely

Choco (Jussien et al., 2008), is used to compute

either an optimal or a satisfying solution of the

MMKP problem (Lahami et al., 2012).
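To make the formulation concrete, the sketch below solves a tiny instance of the placement problem by exhaustive enumeration. It is not the Choco model used in this work: it is a plain Python stand-in whose cost grows as $m^n$, suitable only for very small examples, and all names and values in it are hypothetical.

```python
from itertools import product


def solve_placement(profit, demand, capacity, forbidden):
    """Return (best_total_profit, assignment), where assignment[i] is the node of tester i."""
    n, m = len(profit), len(capacity)
    best = (float("-inf"), None)
    # Enumerating exactly one node per tester enforces constraint (5) by construction.
    for assignment in product(range(m), repeat=n):
        if any(assignment[i] in forbidden[i] for i in range(n)):
            continue  # violates constraint (2)
        used = [[0, 0, 0] for _ in range(m)]
        for i, j in enumerate(assignment):
            for r in range(3):
                used[j][r] += demand[i][r]
        if any(used[j][r] > capacity[j][r] for j in range(m) for r in range(3)):
            continue  # violates constraint (1)
        total = sum(profit[i][j] for i, j in enumerate(assignment))
        if total > best[0]:
            best = (total, assignment)
    return best


# Two testers and three nodes; each node has enough CPU for only one tester.
profit = [[100, 50, 75], [100, 50, 75]]
demand = [(30, 256, 5), (30, 256, 5)]          # (cpu, ram, energy) per tester
capacity = [(40, 1024, 50)] * 3                # (cpu, ram, energy) per node
best = solve_placement(profit, demand, capacity, forbidden=[set(), set()])
print(best)  # (175, (0, 2))
```

Both testers would prefer node 0, but its CPU capacity admits only one of them, so the second tester goes to the next most profitable node. When no feasible placement exists, the function returns `(-inf, None)`.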

3.4 Advantages and Limitations

In our previous work (Maâlej and Krichen, 2015), the test system is centralized on a single node, and several problems may occur while dealing with load testing. In fact, we have noticed that a heavy load can affect not only the SUT, which is made up of several BPEL instances, but also the relevance of the obtained test results. In order to increase the performance of the test system and gain confidence in its test results, testers and analyzers are distributed over several nodes. In this case, the load testing process is performed in parallel.

Moreover, our proposal takes into consideration

the connectivity to the SUT and also the availability

of computing resources during load testing. Thus, our

work provides a cost effective distribution strategy of

testers, that improves the quality of testing process

by scaling the performance through load distribution,

and also by moving testers to better nodes offering

sufficient resources required for the test execution.

Recall that our distributed and resource-aware test system, as well as the BPEL instances, are deployed on a cloud platform. It is worth noting that public cloud providers like Amazon Web Services⁷ and Google Cloud Platform⁸ offer a cloud infrastructure made up essentially of availability zones and regions. A region is a specific geographical location in which the data centers of public cloud service providers reside. Each region is further subdivided into availability zones. Several resources can live in a zone, such as instances or persistent disks. Therefore, we have to choose VM instances in the same region to host the testers, the analyzers and the SUT. This is required in order to avoid the significant overhead that can be introduced when the SUT and the test system components are deployed in different regions of the cloud.

4 ILLUSTRATION THROUGH TRMCS CASE STUDY

This section shows the relevance of our approach through a case study in the healthcare domain. The subsections below detail the adopted scenario, its business process and its application in the context of resource-aware load testing.

4.1 Case Study Description

Called Teleservices and Remote Medical Care System (TRMCS), this case study consists of providing monitoring and assistance to patients suffering from chronic health problems. Due to the new needs of modern medicine, it can be enhanced with more elaborate functionalities like the acquisition, analysis and storage of biomedical data.

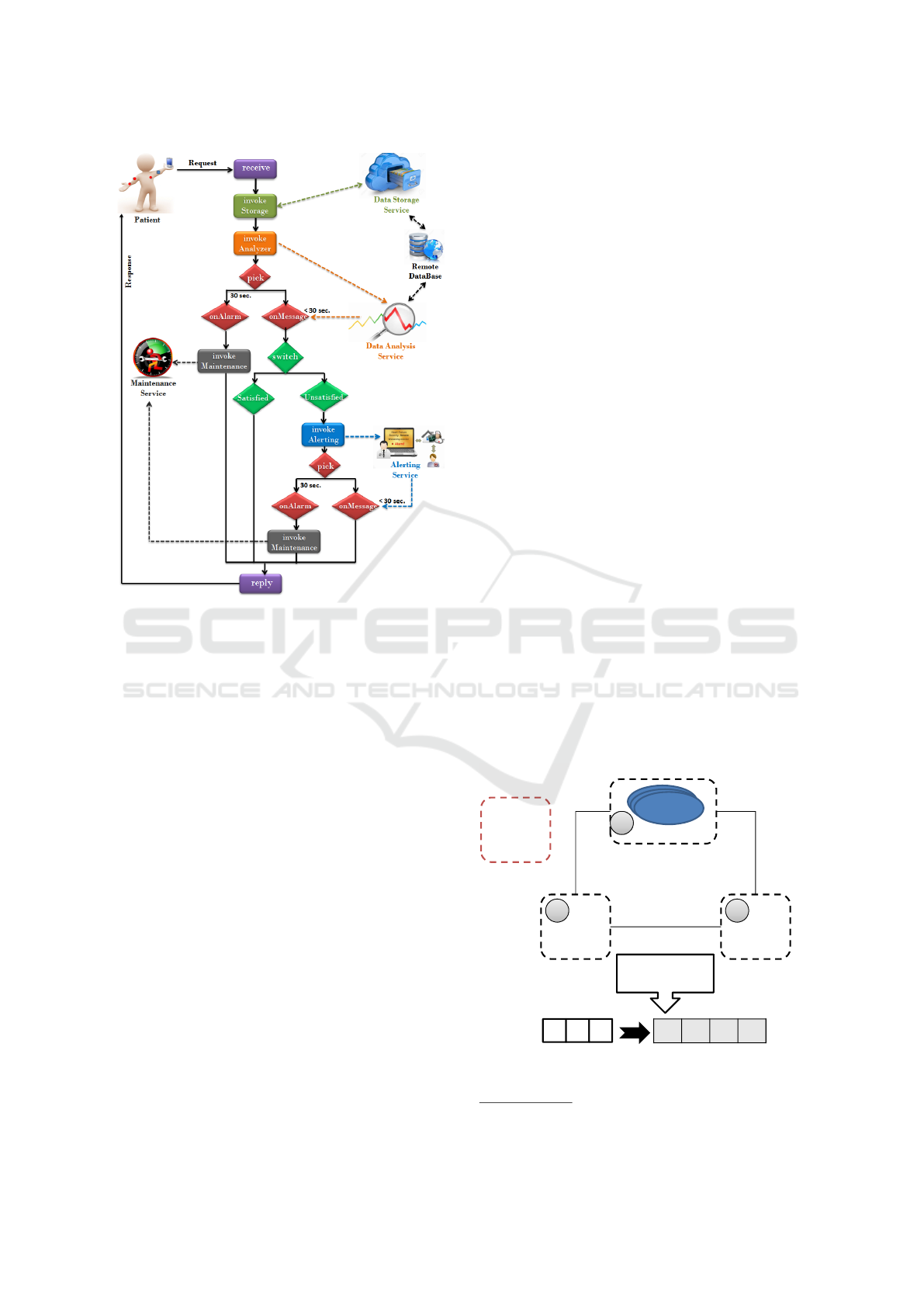

Figure 5: The TRMCS Process.

The adopted business process is modeled as a BPEL process, shown in Figure 5. We assume that it is composed of several Web services, namely the Storage Service (SS), the Analysis Service (AnS), the Alerting Service (AlS) and the Maintenance Service (MS).

⁷ https://aws.amazon.com/fr/

⁸ https://cloud.google.com/

For a given patient suffering from chronic high blood pressure, measures such as arterial blood pressure and heart rate (beats per minute) are collected periodically (for instance three times per day). For the two collected measures, a request is sent to the TRMCS process. First, the Storage Service is invoked to save periodic reports in the medical database. Then, the Analysis Service is in charge of analyzing the monitored data in order to detect whether some thresholds are exceeded. This analysis is subject to a time limit: the process should receive a response from the AnS before 30 seconds have elapsed. Otherwise, the process sends a connection problem report to the Maintenance Service. If the analysis response is received before 30 seconds have elapsed, two scenarios are possible. If thresholds are satisfied, a detailed reply is sent to the corresponding patient. Otherwise, the Alerting Service is invoked in order to send an urgent notification to the medical staff (such as doctors, nurses, etc.).

Similar to the Analysis Service, the Alerting Service is constrained by a waiting time. If the medical staff are notified before 30 seconds have elapsed, the final reply is sent to the corresponding patient. Otherwise, the Maintenance Service is invoked.
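The control flow of the TRMCS process described above can be summarized by the following sketch. It only models the decision logic with response delays passed in as numbers; the function name, the step labels and the treatment of a delay of exactly 30 seconds as a timeout are assumptions of this example, not part of the BPEL process definition.

```python
TIMEOUT_S = 30.0  # waiting limit stated for both the Analysis and the Alerting services


def trmcs_outcome(analysis_delay_s: float, thresholds_ok: bool, alert_delay_s: float = 0.0) -> list[str]:
    """Return the ordered list of steps taken by the process for one request."""
    steps = ["store report (SS)", "request analysis (AnS)"]
    if analysis_delay_s >= TIMEOUT_S:
        # No analysis response in time: report a connection problem.
        return steps + ["report connection problem (MS)"]
    if thresholds_ok:
        return steps + ["send detailed reply to patient"]
    steps.append("notify medical staff (AlS)")
    if alert_delay_s >= TIMEOUT_S:
        # The medical staff could not be notified in time.
        return steps + ["invoke maintenance (MS)"]
    return steps + ["send final reply to patient"]


print(trmcs_outcome(analysis_delay_s=5, thresholds_ok=False, alert_delay_s=10))
```

Enumerating the four possible endings in this way also gives the expected behaviors against which the logged actions of each BPEL instance can later be compared.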

We suppose that the TRMCS application can be installed in different cities within a country. Thus, we should consider that several BPEL servers are required to handle multiple concurrent patient requests. From a technical point of view, we adopted Oracle BPEL Process Manager⁹ as a solution for designing, deploying and managing the TRMCS BPEL process. We also opted for the Oracle BPEL server. The major question to be tackled here is how to apply our load testing proposal to the TRMCS BPEL composition in a cost-effective manner and without introducing side effects.

4.2 Distributed and Resource Aware Load Testing of TRMCS Process

With the aim of checking that performance requirements are satisfied under a heavy load of patient requests, we apply our distributed and resource-aware load testing approach. For simplicity, we focus at this stage on the load testing of a single BPEL server, while the load is handled by several testers simulating concurrent patient requests. To do so, we consider a test environment made up of four nodes: a server node (N1) and three test nodes (N2, N3 and N4). As illustrated in Figure 6, we suppose that this environment has some connectivity problems. In fact, the node N4 is forbidden from hosting tester instances because no route is available to communicate with the BPEL instance under test.

To perform distributed load tests efficiently and without impacting test results, tester instances Ti have to be deployed in this test environment while satisfying resource and connectivity constraints (e.g., memory and energy consumption, link bandwidth, etc.).

[Figure 6 content: BPEL server node N1, hosting the TRMCS BPEL instance, and test nodes N2, N3 and N4, with candidate placements of the tester Ti and links labeled 100 Mbps and 150 Mbps; N4 is a forbidden node for Ti. Rank Vector: N1 (0), N3 (1), N2 (2). Profit calculation with maxP = 100 and step_p = 25: P11 = maxP, P12 = maxP − 1 × step_p, P13 = maxP − 2 × step_p. Matrix P: 100, 50, 75, 0.]

Figure 6: Illustrative Example.

⁹ http://www.oracle.com/technetwork/middleware/bpel/

Thus, we look for the best placement solution for a given tester Ti. First of all, the node N4 is discarded from the tester placement process because the link with the BPEL server is broken. Consequently, the variable $x_{i4}$ is equal to zero. Second, we compute the Rank Vector for the remaining connected test nodes and deduce the profit matrix. We remark here that the profit $p_{ij}$ is maximal if the tester Ti is assigned to the server node N1, because assigning a tester to its corresponding node under test and performing local tests reduces the network communication cost. This profit decreases with respect to the node index in the Rank Vector. For instance, N3 is considered a better target for Ti than N2, although they are at the same distance from the server node, because the link bandwidth between N3 and N1 is greater than the link bandwidth between N2 and N1.

For example, in the case of four nodes and a given tester Ti (see Figure 6), the optimal placement solution can be the server node N1. Consequently, the generated variable $x_i$ is as follows:

$$
x_i = (1, 0, 0, 0)
$$

5 RELATED WORKS

In the following, we discuss some existing works addressing load testing in general, test distribution and test resource awareness.

5.1 Existing Works on Load Testing

Load testing and performance monitoring are facilitated by existing tools. Load testing tools are used for software performance testing in order to create a workload on the system under test and to measure response times under this load. These tools are available from large commercial vendors such as Borland, HP Software, IBM Rational and Web Performance Suite, as well as from open source project Web sites. Krizanic et al. (Krizanic et al., 2010) analyzed and compared several existing tools that facilitate load testing and performance monitoring, in order to find the most appropriate tools according to criteria such as ease of use, supported features and license. The selected tools were put into action in real environments, on several Web applications.

Despite the fact that commercial tools offer a richer set of features and are in general easier to use, the available open source tools proved to be quite sufficient to successfully perform the given tasks. Their usage requires a higher level of technical expertise, but they are a lot more flexible and extendable.

There are also different research works deal-

ing with load and stress testing in various contexts.

Firstly, Yang and Pollock (Yang and Pollock, 1996)

proposed a technique to identify the load sensitive

parts in sequential programs based on a static analy-

sis of the code. They also illustrated some load sensi-

tive programming errors, which may have no damag-

ing effect under small loads or short executions, but

cause a program to fail when it is executed under a

heavy load or over a long period of time. In addi-

tion, Zhang and Cheung (Zhang and Cheung, 2002)

described a procedure for automating stress test case

generation in multimedia systems. For that, they iden-

tify test cases that can lead to the saturation of one

kind of resource, namely CPU usage of a node in the

distributed multimedia system. Furthermore, Grosso

et al. (Grosso et al., 2005) proposed to combine static

analysis and program slicing with evolutionary test-

ing, in order to detect buffer overflow threats. For that

purpose, the authors used Genetic Algorithms in

order to generate test cases. Garousi et al. (Garousi

et al., 2006) presented a stress test methodology that

aims at increasing chances of discovering faults re-

lated to distributed traffic in distributed systems. The

technique uses as input a specified UML 2.0 model of

a system, extended with timing information. More-

over, Jiang et al. (Jiang et al., 2008) and Jiang (Jiang,

2010) presented an approach that accesses the exe-

cution logs of an application to uncover its dominant

behavior and signals deviations from the application's basic behavior.

Comparing the previous works, we notice that

load testing concerns various fields such as multime-

dia systems (Zhang and Cheung, 2002), network ap-

plications (Grosso et al., 2005), etc. Furthermore, all

these solutions focus on the automatic generation of

load test suites. Besides, most of the existing works

aim to detect anomalies which are related to resource

saturation or to performance issues such as throughput, response time, etc. Moreover, few research efforts, such

as Jiang et al. (Jiang et al., 2008) and Jiang (Jiang,

2010), are devoted to the automated analysis of load

testing results in order to uncover potential problems.

Indeed, it is hard to detect problems in a load test

due to the large amount of data which must be analyzed. We also notice that identifying the cause(s) of a problem (application, network or other) is not the main goal of load testing, which rather aims at studying the performance of the application under test; this explains why few works address this issue. However, in our work, we are able to recognize whether the

detected problem under load is caused by implemen-

tation anomalies, network or other causes. Indeed,

we defined and validated our approach based on in-


terception of exchanged messages between the com-

position under test and its partner services. Thus it

would be possible to monitor exchanged messages instantaneously, and to recognize the cause behind their loss, their delayed reception, etc.

Studying the existing works on load testing, we remark that the authors make use of a single tester instance for both the generation and execution of load test cases. To the best of our knowledge, there is no related work that proposes to use multiple testers at the same time on the same SUT. Consequently, neither the placement of testers on different nodes nor their management is addressed in these works.

5.2 Existing Works on Test Distribution

Test distribution over the network has rarely been addressed by load testing approaches. We have identified only two approaches that shed light on this issue.

In the first study (Bai et al., 2006), the au-

thors introduce a light-weight framework for adap-

tive testing called Multi Agent-based Service Testing

in which runtime tests are executed in a coordinated

and distributed environment. This framework encom-

passes the main test activities including test genera-

tion, test planning and test execution. Notably, the

last step defines a coordination architecture that facil-

itates mainly test agent deployment and distribution

over the execution nodes and test case assignment to

the adequate agents.

In the second study (Murphy et al., 2009), a

distributed in vivo testing approach is introduced.

This proposal defines the notion of Perpetual Testing

which suggests carrying out software analysis

and testing throughout the entire lifetime of an ap-

plication: from the design phase until the in-service

phase. The main contribution of this work consists

in distributing the test load in order to attenuate the

workload and improve the SUT performance by de-

creasing the number of tests to run.

Unlike these approaches, our work aims at defin-

ing a distributed test architecture that optimizes the

current resources by instantiating testers in execution

nodes while meeting resource availability and fitting

connectivity constraints. This has an important im-

pact on reducing load testing costs and avoiding over-

heads and burdens.

5.3 Existing Works on Test Resource Awareness

As discussed before, load testing is a resource-

consuming activity. In fact, computational resources

are used for generating tests if needed, instantiating

tester instances charged with test execution and finally

starting them and analyzing the obtained results. Notably, the larger the number of test cases, the more resources, such as CPU and memory, are used. Hence, we note that the intensive use of

these computational resources during the test execu-

tion has an impact not only on the SUT but also on

the test system itself. When such a situation is en-

countered, the test results can be wrong and can lead

to an erroneous evaluation of the SUT responses.

To the best of our knowledge, this problem has

been studied only in the work of Merdes et al. (Merdes et al.,

2006). Aiming at adapting the testing behavior to the

given resource situation, it provides a resource-aware

infrastructure that keeps track of the current resource

states. To do this, a set of resource monitors is implemented to observe the respective values for processor load, main memory, battery charge, network

bandwidth, etc. According to resource availability,

the proposed framework is able to balance in an intel-

ligent manner between testing and the core function-

alities of the components. It provides in a novel way a

number of test strategies for resource aware test man-

agement. Among these strategies, we can mention,

for example, the Threshold Strategy, under which tests are performed only if the amount of used resources does not exceed given thresholds.
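As a rough illustration of such a threshold-based strategy, the following Python sketch decides whether tests may run from a snapshot of used resources. It does not reproduce the infrastructure of Merdes et al.; the `ResourceState` type, the `may_run_tests` function and the default threshold of 0.8 are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceState:
    """Snapshot of used resources, each expressed as a fraction between 0 and 1."""
    cpu_load: float
    memory_used: float


def may_run_tests(state: ResourceState,
                  cpu_threshold: float = 0.8,
                  memory_threshold: float = 0.8) -> bool:
    """Allow test execution only while every used resource stays within its threshold."""
    return state.cpu_load <= cpu_threshold and state.memory_used <= memory_threshold


# Hypothetical snapshots: one lightly loaded node and one with a saturated CPU.
light = ResourceState(cpu_load=0.35, memory_used=0.50)
busy = ResourceState(cpu_load=0.95, memory_used=0.50)
print(may_run_tests(light), may_run_tests(busy))
```

A real monitor would refresh the snapshot periodically and could cover further resources such as battery charge or network bandwidth in the same way.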

Contrary to our distributed load testing architec-

ture, this work supports a centralized test architecture.

6 CONCLUSION & FUTURE WORK

In this paper, we first described our contribution to the study of the behavior of BPEL compositions under various load conditions. Then, we explained the principle of the test log analysis phase. Indeed, test results are exhaustively analyzed and advanced information is provided by our tester.

In order to improve the system performance and responsiveness, we also proposed in this work a tester

deployment solution that considers many distributed

tester instances. Thus, we extended a previous tool

with test distribution and resource awareness capabil-

ities. To do so, we adopted a distributed test archi-

tecture in which several BPEL instances are running

in a given execution node and several tester instances

as well as load generators are running in several test

nodes. With the aim of avoiding the network burden,

the placement of tester instances was performed with

respect to resource availability and network connectivity. As an illustrative example, a case study in the

healthcare domain is introduced and applied in the


context of resource aware load testing.

A promising direction for future work would be to address the scalability issue by opting, for example, for load balancing. In fact, implementing clustering mechanisms is an interesting technique to improve the performance of distributed BPEL servers deploying the same SUT. Incoming BPEL client requests should thus be distributed equally among the servers to achieve a quick response. Indeed, the principle of load balancing is to distribute tasks among several machines in an intelligent way.
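One simple way to obtain an equal distribution of requests is a round-robin rotation, sketched below in Python. This is only an assumption about how such balancing could look, since the paper leaves the mechanism to future work; the server names, request identifiers and the `round_robin` helper are hypothetical.

```python
from collections import Counter
from itertools import cycle
from typing import Iterable, Iterator, List, Tuple


def round_robin(requests: Iterable[str], servers: List[str]) -> Iterator[Tuple[str, str]]:
    """Pair each incoming request with the next server in a fixed rotation."""
    if not servers:
        raise ValueError("at least one server is required")
    return zip(requests, cycle(servers))


# Hypothetical BPEL servers deploying the same SUT, and nine client requests.
servers = ["bpel-server-1", "bpel-server-2", "bpel-server-3"]
requests = [f"request-{k}" for k in range(9)]

assignment = list(round_robin(requests, servers))
load = Counter(server for _, server in assignment)
print(load)
```

Round-robin ignores the current load of each server; a resource-aware variant would instead pick the server with the most available capacity.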

REFERENCES

Bai, X., Dai, G., Xu, D., and Tsai, W. (2006). A Multi-

Agent Based Framework for Collaborative Testing on

Web Services. In Proceedings of the 4th IEEE Work-

shop on Software Technologies for Future Embedded

and Ubiquitous Systems, and the 2nd International

Workshop on Collaborative Computing, Integration,

and Assurance (SEUS-WCCIA’06), pages 205–210.

Barreto, C., Bullard, V., Erl, T., Evdemon, J., Jordan, D.,

Kand, K., König, D., Moser, S., Stout, R., Ten-Hove,

R., Trickovic, I., van der Rijn, D., and Yiu, A. (2007).

Web Services Business Process Execution Language

Version 2.0 Primer. OASIS.

Beizer, B. (1990). Software Testing Techniques (2nd ed.).

Van Nostrand Reinhold Co., New York, NY, USA.

Garousi, V., Briand, L. C., and Labiche, Y. (2006). Traffic-

aware stress testing of distributed systems based on

UML models. In Proceedings of ICSE’06, pages 391–

400, Shanghai, China. ACM.

Grosso, C. D., Antoniol, G., Penta, M. D., Galinier, P.,

and Merlo, E. (2005). Improving network applica-

tions security: a new heuristic to generate stress test-

ing data. In Proceedings of GECCO’05, pages 1037–

1043, Washington DC, USA. ACM.

James, H. H., Douglas, C. S., James, R. E., and Aniruddha,

S. G. (2010). Tools for continuously evaluating dis-

tributed system qualities. IEEE Software, 27(4):65–

71.

Jansen, K. (2009). Parametrized Approximation Scheme for

The Multiple Knapsack Problem. In Proceedings of

the 20th Annual ACM-SIAM Symposium on Discrete

Algorithms (SODA’09), pages 665–674.

Jiang, Z. M. (2010). Automated analysis of load testing

results. In Proceedings of ISSTA’10, pages 143–146,

Trento, Italy. ACM.

Jiang, Z. M., Hassan, A. E., Hamann, G., and Flora, P.

(2008). Automatic identification of load testing prob-

lems. In Proceedings of ICSM’08, pages 307–316,

Beijing, China. IEEE.

Jussien, N., Rochart, G., and Lorca, X. (2008). Choco: an

Open Source Java Constraint Programming Library.

In Proceedings of the Workshop on Open-Source Software for Integer and Constraint Programming (OSSICP’08), pages 1–10.

Krizanic, J., Grguric, A., Mosmondor, M., and Lazarevski,

P. (2010). Load testing and performance monitoring

tools in use with ajax based web applications. In 33rd

International Convention on Information and Com-

munication Technology, Electronics and Microelec-

tronics, pages 428–434, Opatija, Croatia. IEEE.

Lahami, M., Krichen, M., Bouchakwa, M., and Jmaiel, M.

(2012). Using knapsack problem model to design a

resource aware test architecture for adaptable and dis-

tributed systems. In ICTSS, pages 103–118.

Maâlej, A. J. and Krichen, M. (2015). Study on the limitations of WS-BPEL compositions under load conditions. Comput. J., 58(3):385–402.

Merdes, M., Malaka, R., Suliman, D., Paech, B., Brenner,

D., and Atkinson, C. (2006). Ubiquitous RATs: How

Resource-Aware Run-Time Tests Can Improve Ubiq-

uitous Software Systems. In Proceedings of the 6th

International Workshop on Software Engineering and

Middleware (SEM’06), pages 55–62.

Mikucionis, M., Larsen, K. G., and Nielsen, B. (2004). T-

uppaal: Online model-based testing of real-time sys-

tems. In Proceedings of ASE’04, pages 396–397,

Linz, Austria. IEEE Computer Society.

Murphy, C., Kaiser, G., Vo, I., and Chu, M. (2009). Quality

Assurance of Software Applications Using the In Vivo

Testing Approach. In Proceedings of the 2nd Inter-

national Conference on Software Testing Verification

and Validation (ICST’09), pages 111–120.

Yang, C. D. and Pollock, L. L. (1996). Towards a struc-

tural load testing tool. SIGSOFT Softw. Eng. Notes,

21(3):201–208.

Zhang, J. and Cheung, S. C. (2002). Automated test case

generation for the stress testing of multimedia sys-

tems. Softw., Pract. Exper., 32(15):1411–1435.

ICEIS 2018 - 20th International Conference on Enterprise Information Systems
