A New Approach to Visualise Accessibility Problems of Mobile Apps in Source Code

Johannes Feiner¹, Elmar Krainz¹,² and Keith Andrews³

¹ Institute of Internet Technologies & Applications, FH-JOANNEUM, Werk-VI-Straße 46b, 8605 Kapfenberg, Austria

² IIS, Kepler University Linz, Altenberger Straße 69, 4040 Linz, Austria

³ Institute of Interactive Systems and Data Science, Graz University of Technology, Inffeldgasse 16c, 8010 Graz, Austria

Keywords:

Accessibility, Code Visualisation, Usability.

Abstract:

Software development is increasingly moving towards mobile applications. Single developers or small teams create apps for smartphones. Too often, they lack the capacity or know-how to check for usability problems and do not attend to accessibility. We propose a novel workflow to bring usability issues into the development process: a quick accessibility check (QAC) with 15 predefined metrics allows issues to be collected. These issues are then condensed into a formalised report format (UsabML), and each issue is tagged with its location in the source code. A dashboard view (RepoVis) showing the source code from a repository allows developers to spot and interactively inspect code and related issues simultaneously.

1 INTRODUCTION

The aim of software engineers is to create high quality software products including readable, structured source code and error-free programs. From the user's perspective, the most important component is the perceivable part – the user interface (UI).

The quality of the user interface is defined by usability attributes, like effectiveness, efficiency and satisfaction (ISO, 2000). However, many people cannot even experience good or bad usability, in cases where the software is not accessible at all.

1.1 Problem

The UN Convention on the Rights of Persons with Disabilities (CRPD) (The United Nations, 2006) reveals that about 760 million people, i.e. 10% of the world population, have a disability. Therefore, accessibility (A11Y) is a significant issue for software user interfaces. The ISO Standard 9241-171:2008 (ISO, 2008) defines accessibility as "interactive system usability of a product, service, environment or facility by people with the widest range of capabilities". This means the ability for anyone to understand and operate a software product.

Usability and accessibility are not built-in features. They require awareness on the part of developers. To improve usability and accessibility, three steps are needed in the development process:

1. Finding errors in the user interface

2. Mapping errors to code

3. Improving the code

1.2 Approach

The paper at hand shows a novel approach to combine accessibility evaluation and reporting with the visualisation of reported bugs along with the source code. Following the recommendation for good research in Software Engineering (Shaw, 2002) we set up the following research questions:

• RQ1 Which methods would help software developers to evaluate the accessibility of mobile apps in a quick and structured way?

• RQ2 How can accessibility issues of mobile Android apps be presented visually along with the corresponding source code?

To answer these questions we proceeded in the following way: First, we analysed the cyclic workflows of mobile application developers when they are improving apps, in particular how they extract relevant aspects from usability reports. Then we condensed this information gathering flow into a new workflow, which can better support improvements concerning accessibility. Finally, some tools for software engineers were added (compare Section 3.1 Quick Accessibility Check QAC, Section 3.2 Usability Markup Language UsabML and Section 3.3 Repository Visualisation RepoVis) to enhance the overall efficiency. The tools support comprehension of existing A11Y problems and guide developers during the implementation of usability improvements.

Feiner, J., Krainz, E. and Andrews, K.
A New Approach to Visualise Accessibility Problems of Mobile Apps in Source Code.
DOI: 10.5220/0006704405190526
In Proceedings of the 20th International Conference on Enterprise Information Systems (ICEIS 2018), pages 519-526
ISBN: 978-989-758-298-1
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Our efforts resulted in a heuristic approach to evaluate app accessibility with the newly designed tool QAC. This includes several thoughtfully selected predefined evaluation criteria and partial support by existing tools. The reports are processed into a structured reporting format (compare previous research on UsabML (Feiner et al., 2010)), and the usability problems found are linked to code locations. Furthermore, developers can take advantage of the new tool RepoVis, implemented by the authors, to view source code and accessibility issues side-by-side.

To validate the novel workflow of detecting accessibility issues (RQ1) and reporting them back to developers in a visual way (RQ2) for fixing the flaws, we performed an experiment with two selected mobile apps from the Google Play store.

1.3 Hypothesis

The main hypothesis we build upon is that an alternative – above all simpler – approach would improve awareness and software quality in terms of accessibility. The main aspects of our new approach can be summarised as follows:

• For quick, yet useful, results a UX team is not needed in every case. Small developer teams or single developers can perform a quick accessibility check (QAC) on their own.

• The integration of two domains, namely usability and software engineering, is relevant. This way it is possible to connect user experience (UX), accessibility (A11Y) and source code, which raises acceptance of the workflows and tools involved.

• A dashboard overview supports faster comprehension of source code and related usability evaluation results. Combined views are helpful, because there is no need to retrieve information from different tools and data sources manually.

The remainder of this paper is structured in the following way. In Section 2 alternative approaches are listed, in Section 3 the suggested workflow is described in detail and in Section 4 the performed evaluations of two Android apps from the Google Play store are presented.

2 RELATED WORK

Accessibility is an important factor in providing services and information to the vast majority of people. For example, in 2016 the European Union enacted the directive 2016/2102, which "aims to ensure that the websites and mobile applications of public sector bodies are made more accessible on the basis of common accessibility requirements" (European Union, 2016).

The definitions and the principles defined in the accessibility standards provide the basis of accessible user interfaces. The Web Accessibility Initiative (WAI) offers guidelines for developers about proper implementation of websites and mobile applications. The Web Content Accessibility Guidelines (WCAG 2.0) constitute a common standard, which provides generic principles for accessible development. WCAG 2.0 outlines four principles of accessibility:

1. Perceivable: Information and user interface components must be presentable to users in ways they can perceive. For example, one might provide an alternative text to an image.

2. Operable: User interface components and navigation must be operable, which means, for example, not limited to mouse usage.

3. Understandable: Information and the operation of the user interface must be understandable in terms of readability or predictability of navigation.

4. Robust: Content must be robust enough that it can be interpreted reliably by a wide variety of user agents, including assistive technologies.

Mobile operating systems like iOS and Android have their own platform guidelines for developers. Compare the design guidelines for Android (Google, 2017) and for iOS (Apple, 2012). They follow the WAI principles and have many aspects in common, but also differ in some details of the implementation for each platform's assistive technologies.

Other relevant rulesets are the Authoring Tool Accessibility Guidelines ATAG 2.0 (Treviranus et al., 2015) and the User Agent Accessibility Guidelines UAAG 2.0 (Patch et al., 2015a). ATAG 2.0 provides guidance to make the software and services for web content creation accessible. UAAG 2.0 gives recommendations for the accessibility of browsers, media players and the interface to assistive technologies. All these guidelines are the foundation for development and for the assessment of accessibility.

The evaluation can be done either with user testing or in a more formal way with experts using those guidelines. The expert evaluation method is related to the Heuristic Evaluation (Nielsen and Molich, 1990), but in contrast to the intuitive usability heuristics, applying the accessibility guidelines requires more background know-how and much more effort, even for experts.

Inspired by the idea of Guerrilla HCI (Nielsen, 1994) to simplify the usability engineering process and the WAI – Easy Checks (Henry, 2013), a method for a first accessibility review, this paper proposes a quick method to get an overview of the most significant accessibility problems of a mobile application (see Section 3).

The data acquisition during usability testing sometimes happens on paper (Vilbergsdóttir et al., 2006) according to given schemes and sometimes with the support of various electronic tools (Hvannberg et al., 2007; Andrews, 2008). The collected raw data is further condensed into a written report. Comparability (Molich et al., 2010) of evaluation results, as well as reusability of reported data, is still under research (Cheng, 2016). In most cases the results of usability evaluations are available to managers and software developers in printed formats or as unstructured electronic documents such as PDF. Other researchers suggest a standard method (ISO, 2006; Komiyama, 2008) for reporting usability test findings. UsabML (Feiner et al., 2010; Feiner and Andrews, 2012) introduces an XML-structured approach to work with evaluation results. Through formalisation it is possible to process collected data programmatically. For example, issues might be pushed into bug tracking systems automatically.

A well-designed feedback loop is vital in modern user centred design (UCD) to enable developers to react to the results of a review. Usability defect reporting should address the needs of developers (Yusop et al., 2016).

Software engineers prefer the evaluation results

presented within their existing toolchains. That me-

ans, the acceptance of usability suggestions is better

when, for example, an accessibility issue is presen-

ted as bug in a bug tracker or with tools combining

and linking the different sources of code and evalua-

tion data. To fix bugs, code comprehension is vital.

For code comprehension (Hawes et al., 2015) of lar-

ger software systems various mappings could be used.

Novel approaches, such as statistical language mo-

dels (Murphy, 2016) should improve software com-

prehension furthermore. Among many different ways

for graphical representation, the SeeSoft (Eick et al.,

1992) software visualisation is special in displaying

all files at the same time by mapping the lines of

source code to coloured pixels according to selected

software metrics. Based on the SeeSoft idea, the aut-

hors introduce RepoVis, which tries to visualise the

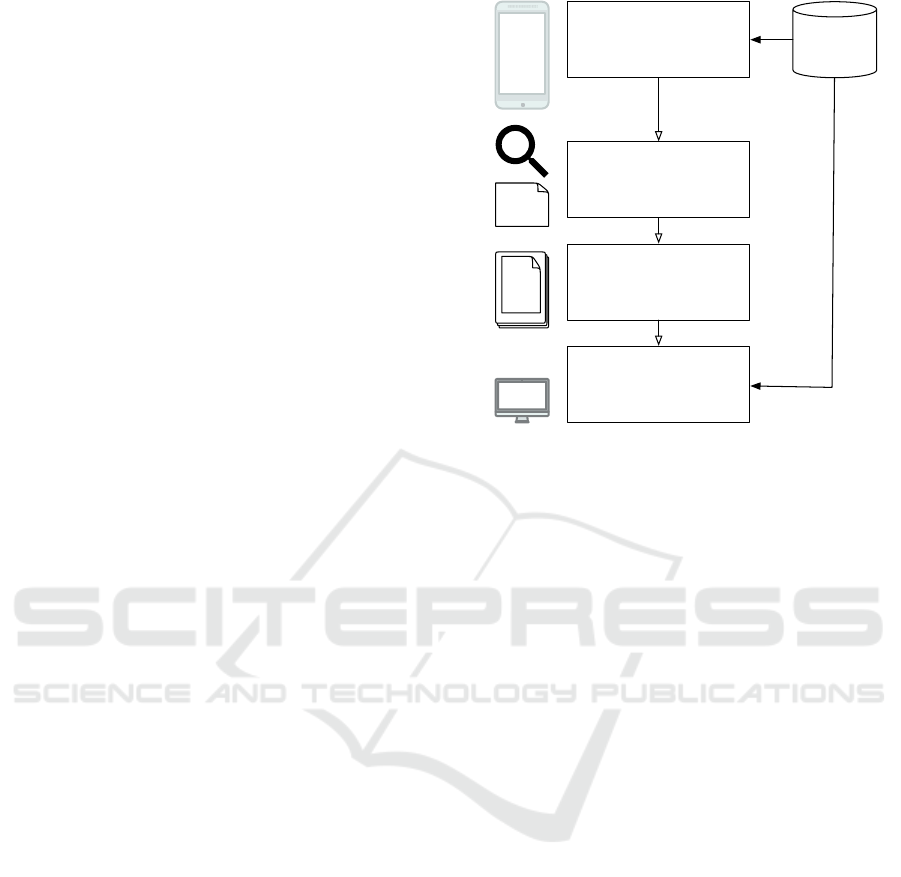

Qac a11y check

Formalising to UsabML

RepoVis dashboard

Source

Code

Mobile App

Figure 1: The novel workflow of A11Y inspection, forma-

lising the results and combined issue code visualisation.

issues side-by-side to source code as discussed later

in the paper in Section 3.

3 A NOVEL WORKFLOW

The question of effective usability evaluation and reporting for mobile smartphone apps is addressed by the design of a novel workflow. This should enable developers in small development teams – in cases where no usability evaluation experts are available – to create software which takes accessibility into account.

The workflow is depicted in Figure 1. It explains the stages from checking the accessibility of an app up to presenting results to developers.

3.1 Quick Accessibility Check - QAC

To integrate accessibility evaluation in the development process one needs a method which (a) can be applied by developers and testers without deep background knowledge of usability and accessibility evaluation and (b) provides measurable results to rate and compare the improvements in the user interface.

The quick accessibility check (QAC) uses 15 criteria in 3 sections to measure and compare the accessibility of a user interface. These heuristics are based on an intersection of the Material Accessibility Design Guidelines (Google, 2017), the Web Content Accessibility Guidelines (Caldwell et al., 2008) as the most significant standard for accessibility evaluation, and the W3C guidance on mobile accessibility (Patch et al., 2015b).

Table 1: Quick Accessibility Check (QAC) with 15 questions grouped into three sections.

| QAC-Section | No. | Checking |
|---|---|---|
| Assistive Android | 1 | Screenreader |
| Assistive Android | 2 | Tabbing |
| Assistive Android | 3 | External Keyboard |
| A11Y Scanner | 4 | Touch Size |
| A11Y Scanner | 5 | Contrast Images |
| A11Y Scanner | 6 | Contrast Text |
| A11Y Scanner | 7 | Missing Labels |
| A11Y Scanner | 8 | Redundant Labels |
| A11Y Scanner | 9 | Implementation |
| Manual Checks | 10 | Text: Clear & Concise |
| Manual Checks | 11 | Evident Navigation |
| Manual Checks | 12 | Font Size |
| Manual Checks | 13 | Support for Zooming |
| Manual Checks | 14 | Position of Elements |
| Manual Checks | 15 | Colour-blindness |

The first section, Assistive Android, gives feedback about the support for assistive technologies like the screen reader or an external keyboard. These topics are evaluated on a scale from 1 (best) to 5 (worst) with assistive technology (e.g. TalkBack on an Android device) enabled.

The second section A11Y Scanner covers au-

tomatic testable features like contrast of texts and

images, suitable labels and descriptions of non-

text elements and the minimum size of toucha-

ble elements. On the Android platform a handy

tool is the Accessibility Scanner which can be

found at https://play.google.com/store/apps/details?

id=com.google.android.apps.accessibility.auditor. It

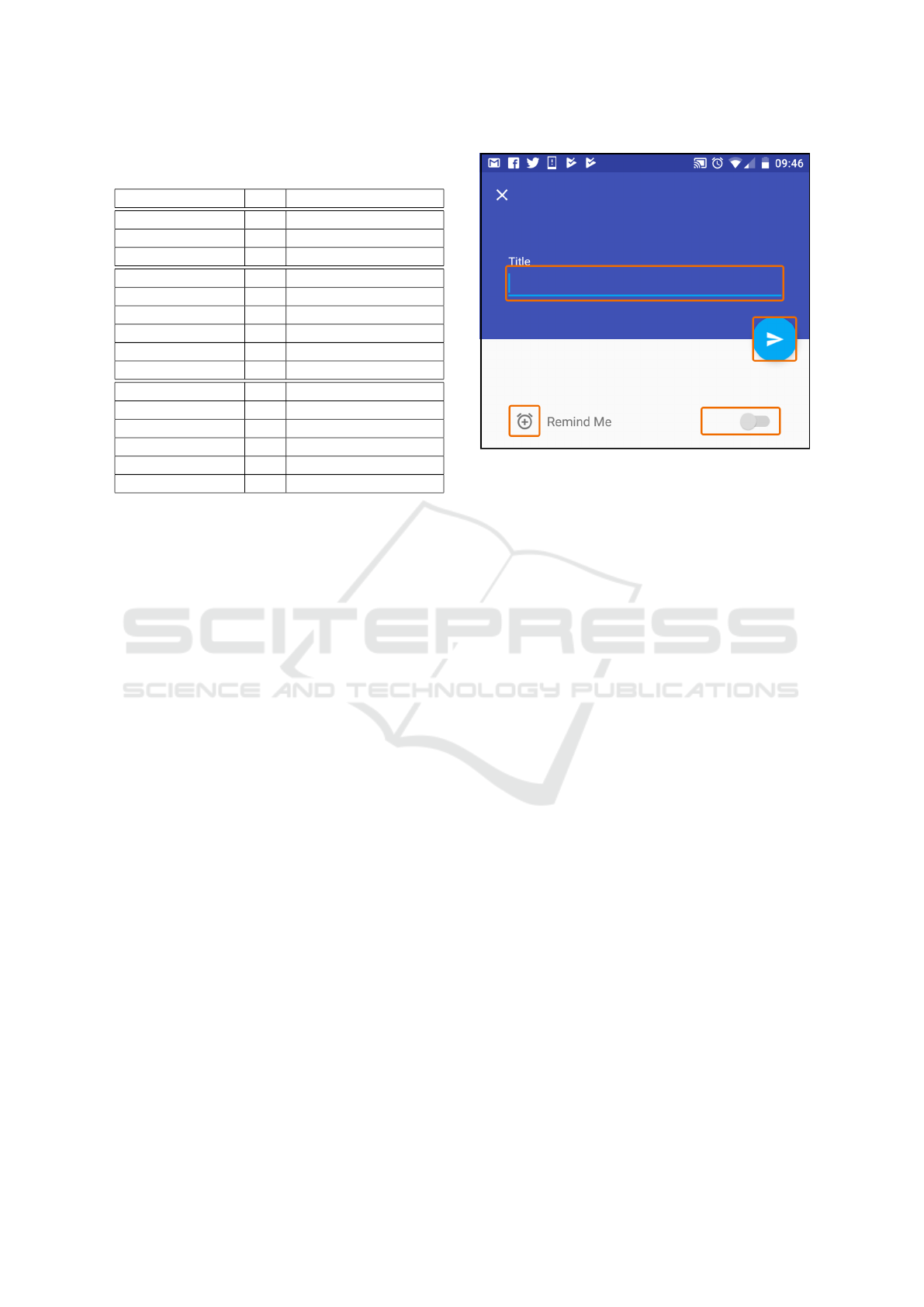

analyses the active user interface at runtime and provi-

des a textual (see Listing 3.1) and visual (see Figure 2)

description of the findings. In the QAC for each error

criteria the total number of occurrence is reported.

Figure 2: Tools can support the analysis of the UI accessibility by highlighting problematic elements onscreen.

    ...
    Touch Target
    com.avjindersinghsekhon.minimaltodo:id/userToDoEditText
    Consider making this clickable item larger. This item's height is 44dp.
    Consider a minimum height of 48dp
    ....
    Item label
    com.avjindersinghsekhon.minimaltodo:id/userToDoReminderIconImageButton
    This item may not have a label readable by screen readers
    ...
    Image contrast
    com.avjindersinghsekhon.minimaltodo:id/makeToDoFloatingActionButton
    Consider increasing the contrast ratio of this image's foreground and background.
    ...
    Item description
    com.avjindersinghsekhon.minimaltodo:id/userToDoEditText
    The clickable item's speakable text ("Title") is identical to that of 1 other item(1)
    ...

Listing 1: Automated reports by the Google Accessibility Scanner can provide hints about related source code.
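The textual report excerpt follows a loose pattern: a category line, a resource identifier and a free-text hint. As a minimal sketch, such a report could be turned into structured records as follows. The block separator (a line of dots), the field order and the names `ScannerFinding` and `parse_scanner_report` are assumptions made for illustration based on the excerpt; they are not a documented export format of the scanner.

```python
import re
from dataclasses import dataclass


@dataclass
class ScannerFinding:
    category: str     # e.g. "Touch Target"
    resource_id: str  # e.g. "com.example.app:id/button"
    hint: str         # free-text recommendation


def parse_scanner_report(text: str) -> list[ScannerFinding]:
    """Split a report into blocks separated by lines that consist only of dots."""
    findings: list[ScannerFinding] = []
    block: list[str] = []
    # A trailing sentinel separator flushes the last block.
    for raw in text.splitlines() + ["..."]:
        line = raw.strip()
        if re.fullmatch(r"\.{3,}", line):
            if len(block) >= 2:
                findings.append(
                    ScannerFinding(block[0], block[1], " ".join(block[2:]))
                )
            block = []
        elif line:
            block.append(line)
    return findings


SAMPLE = """...
Touch Target
com.avjindersinghsekhon.minimaltodo:id/userToDoEditText
Consider making this clickable item larger. This item's height is 44dp.
Consider a minimum height of 48dp
....
Item label
com.avjindersinghsekhon.minimaltodo:id/userToDoReminderIconImageButton
This item may not have a label readable by screen readers
...
"""

findings = parse_scanner_report(SAMPLE)
for finding in findings:
    print(finding.category, "->", finding.resource_id.split("/")[-1])
```

The resource identifier is the piece that later helps to locate the affected layout element in the source code, so it is kept as a separate field.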

The third and last section, Manual Checks, is a manual examination of informal criteria like the position of interactive elements, useful navigation or clear and understandable texts and descriptions. These ratings are also on a scale from 1 to 5.

A complete list of all 15 heuristics is shown in Table 1. The Quick Accessibility Check QAC allows even accessibility amateurs to find and rate accessibility issues in a replicable and comparable way.
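To illustrate how the 15 criteria could be condensed into one value per section, the following sketch averages the ratings of the two rated sections and sums the issue counts of the scanner section. The aggregation rule (mean and total), the function name `summarise_qac` and the example scores are assumptions for illustration and not taken from the QAC definition.

```python
# Section name -> criterion numbers, following Table 1.
QAC_SECTIONS = {
    "Assistive Android": range(1, 4),
    "A11Y Scanner": range(4, 10),
    "Manual Checks": range(10, 16),
}


def summarise_qac(scores: dict[int, int]) -> dict[str, float]:
    """Mean rating (1 to 5) for rated sections, issue total for the scanner."""
    missing = set(range(1, 16)) - set(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    summary: dict[str, float] = {}
    for section, numbers in QAC_SECTIONS.items():
        values = [scores[n] for n in numbers]
        if section == "A11Y Scanner":
            # Criteria 4 to 9 are occurrence counts, not ratings.
            summary[section] = float(sum(values))
        else:
            if any(not 1 <= v <= 5 for v in values):
                raise ValueError(f"ratings in '{section}' must be 1..5")
            summary[section] = round(sum(values) / len(values), 2)
    return summary


# Hypothetical scores for one app, keyed by criterion number.
example_scores = {
    1: 4, 2: 3, 3: 2,                     # ratings, 1 (best) to 5 (worst)
    4: 2, 5: 1, 6: 0, 7: 1, 8: 0, 9: 0,   # number of scanner issues
    10: 2, 11: 1, 12: 2, 13: 1, 14: 3, 15: 1,
}

summary = summarise_qac(example_scores)
print(summary)
```

Keeping ratings and counts apart avoids mixing two different scales into one number, which would make comparisons between app versions harder to interpret.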

3.2 UsabML

The QAC results are formalised into Usability Markup Language (UsabML) for further processing. This step is especially important, as it results in an XML document format for the data, which means the information can be processed by tools later on. The UsabML format allows validation checks on the data, and it supports the rendering of results via various stylesheets to differently styled HTML documents for target groups such as managers or developers. Additionally, scripts might process the data and post issues to bug trackers.

As tools provide some hints about the source of an accessibility problem – compare the Google Accessibility Scanner discussed above – this information can be part of a UsabML report. Optionally, usability managers or software developers might map issues to code locations.

Listing 2 shows selected parts of the formalised report in UsabML, including the mapping of an issue to the related source file and line number.

    <report id="rpt201334"
            gen-timestamp="2017-10-22T11:35:40Z"
            method="he">
      <title>Nextcloud App Q-Eval</title>
      ...
      <heuristic id="qac07">
        <title>Missing Labels</title>
        <description>
          Provide labels describing the
          purpose of an input field.
        </description>
      </heuristic>
      ...
      <negative-finding
          heuristic-id="heu12" rank="3"
          is-main-negative="true" id="neg3">
        <title>Button too small...</title>
        <description>...</description>
        <found-by evaluator-id="eval_ek"/>
        <severity evaluator-id="eval_jf">
          <value>3</value>
        </severity>
        <document type="image">
          <description>The size should...
          </description>
          <key>krainz33</key>
          <filename>krainz33.png</filename>
        </document>
        <code-reference
            project-id="nextcloudapp"
            version-id="commit-eef54326rfe8"
            class-id="main.xml"
            package-id="layouts"
            method-id="main" line-number="24">
        </code-reference>
      </negative-finding>
      ...
    </report>

Listing 2: Formalised findings in UsabML.
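Because the report is plain XML, the links between findings and code locations can be read with a standard XML parser. The following sketch uses element and attribute names as they appear in the listing; the shortened sample document, the function name `extract_code_links` and the way `package-id` and `class-id` are joined into a file path are assumptions for illustration, not part of the UsabML specification.

```python
import xml.etree.ElementTree as ET

# Shortened sample, modelled on the listing above.
USABML_SAMPLE = """<report id="rpt201334" method="he">
  <title>Nextcloud App Q-Eval</title>
  <negative-finding heuristic-id="heu12" rank="3" id="neg3">
    <title>Button too small...</title>
    <severity evaluator-id="eval_jf"><value>3</value></severity>
    <code-reference project-id="nextcloudapp"
        version-id="commit-eef54326rfe8"
        class-id="main.xml" package-id="layouts"
        method-id="main" line-number="24"/>
  </negative-finding>
</report>"""


def extract_code_links(usabml: str) -> list[dict]:
    """Return one record per code reference attached to a negative finding."""
    root = ET.fromstring(usabml)
    links = []
    for finding in root.iter("negative-finding"):
        severity = finding.findtext("severity/value")
        for ref in finding.iter("code-reference"):
            links.append({
                "finding_id": finding.get("id"),
                "title": finding.findtext("title", default=""),
                "severity": int(severity) if severity else None,
                # Assumption: package and class together identify the file.
                "file": f'{ref.get("package-id")}/{ref.get("class-id")}',
                "line": int(ref.get("line-number")),
                "version": ref.get("version-id"),
            })
    return links


links = extract_code_links(USABML_SAMPLE)
for link in links:
    print(f'{link["file"]}:{link["line"]}  {link["title"]}')
```

Records of this shape are enough for a script to annotate a source view or to prepare an entry for a bug tracker.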

One useful application of the accessibility findings in the structured and formalised UsabML format is to render them with source code on demand. The idea is to allow developers to work in an environment and with tools (such as git) they are accustomed to.

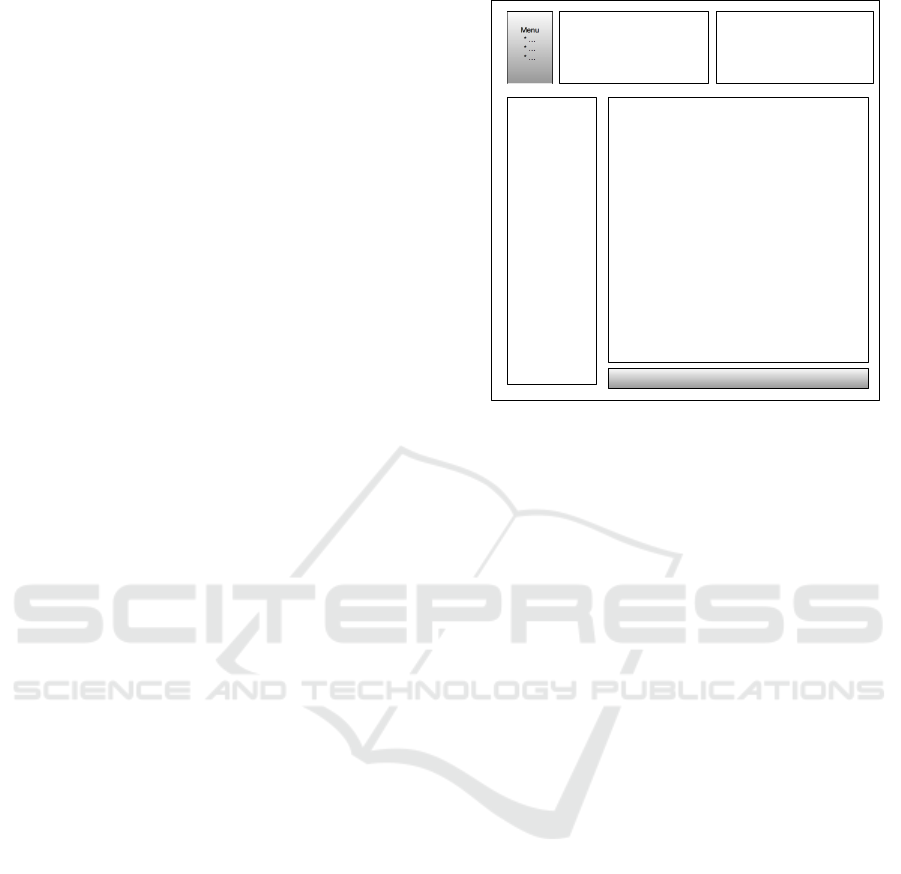

Usabiliy - INFOSCode- - INFOS

Repo GIT-Visualisation

Files & Directories

Source

Code

Menu

* …

* …

* …

Timeline

Figure 3: Visualising files of a project, source code and re-

lated accessibility issues side-by-side.

3.3 RepoVis

The web-based tool RepoVis consists of a backend which connects to existing git repositories and a frontend for rendering source code onscreen.

The visualisation allows all files within a repository to be viewed at once to provide a dashboard-like overview. The layout of this frontend is shown in Figure 3, where separate – but connected – areas for the git repository overview, the source code of a single file, and the related issues are depicted.

The files are rendered as rectangular boxes with the pixels inside coloured according to given metrics. For example, the pixels inside could show the author who last changed and committed single lines of the file. When drilling down to inspect single files, the source code related to a file is presented. In the same way a usability issue – in this case an accessibility issue – will be shown to the developer for selected files.
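The author-per-line metric mentioned above can be derived from git itself. The sketch below parses the output of `git blame --line-porcelain <file>` and assigns one colour per author. How RepoVis actually obtains this data is not described here, so the use of git blame, the function names and the palette are assumptions for illustration.

```python
def authors_per_line(porcelain: str) -> list[str]:
    """Map each source line of a blamed file to the author who last changed it."""
    authors: list[str] = []
    current = "unknown"
    for line in porcelain.splitlines():
        if line.startswith("author "):
            current = line[len("author "):]
        elif line.startswith("\t"):
            # In porcelain output, file content lines start with a tab.
            authors.append(current)
    return authors


def colour_per_line(authors: list[str],
                    palette=("#1f77b4", "#ff7f0e", "#2ca02c", "#d62728")) -> list[str]:
    """Assign a stable colour to each author, in order of first appearance."""
    index: dict[str, int] = {}
    colours = []
    for author in authors:
        if author not in index:
            index[author] = len(index)
        colours.append(palette[index[author] % len(palette)])
    return colours


# Shortened, hand-written sample in the shape of --line-porcelain output.
SAMPLE = (
    "1111111111111111111111111111111111111111 1 1 2\n"
    "author Alice\n"
    "author-mail <alice@example.org>\n"
    "summary Initial layout\n"
    "filename main.xml\n"
    "\t<LinearLayout>\n"
    "1111111111111111111111111111111111111111 2 2\n"
    "author Alice\n"
    "author-mail <alice@example.org>\n"
    "summary Initial layout\n"
    "filename main.xml\n"
    "\t  <EditText/>\n"
    "2222222222222222222222222222222222222222 3 3 1\n"
    "author Bob\n"
    "author-mail <bob@example.org>\n"
    "summary Close layout\n"
    "filename main.xml\n"
    "\t</LinearLayout>\n"
)

authors = authors_per_line(SAMPLE)
colours = colour_per_line(authors)
print(list(zip(authors, colours)))
```

With more authors than palette entries the colours repeat, so a real dashboard would need a larger palette or a legend.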

Developers can highlight all the files with connected accessibility issues on the dashboard. This way of presenting the current source code (or historical code from former commits) and related accessibility issues side-by-side should be a considerable advantage for software engineers, both in terms of speed in finding problematic code and in terms of code comprehension. For example, areas within the code base with hot spots of multiple issues can be detected more easily.
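The hot spot idea can be sketched as a simple count of issues per file. The record layout, the threshold of two issues and the file names are hypothetical; they only illustrate how a dashboard could decide which files to highlight.

```python
from collections import Counter


def issue_hotspots(code_refs: list[dict], threshold: int = 2) -> list[tuple[str, int]]:
    """Return (file, issue count) pairs with at least `threshold` issues, largest first."""
    counts = Counter(ref["file"] for ref in code_refs)
    return [(path, n) for path, n in counts.most_common() if n >= threshold]


# Hypothetical issue-to-code links, e.g. taken from a formalised report.
example_refs = [
    {"file": "layouts/main.xml", "line": 24},
    {"file": "layouts/main.xml", "line": 31},
    {"file": "layouts/settings.xml", "line": 12},
    {"file": "layouts/main.xml", "line": 57},
    {"file": "layouts/settings.xml", "line": 40},
    {"file": "layouts/about.xml", "line": 8},
]

hotspots = issue_hotspots(example_refs)
print(hotspots)
```

Files below the threshold are still linked to their issues; they are just not emphasised in the overview.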


4 VALIDATION

To validate the approach we selected two popular (more than 10,000 installs) open source mobile apps, which on the one hand are examples where accessibility plays an important role and on the other hand are apps where the source code is publicly available.

1. The Nextcloud Client App supports access to cloud documents with mobiles. Available at https://play.google.com/store/apps/details?id=com.nextcloud.client; find the source at https://github.com/nextcloud/android; 100,000 installs.

2. The Minimal ToDo App allows users to manage personal task lists. Available at https://play.google.com/store/apps/details?id=com.avjindersinghsekhon.minimaltodo; find the source code at https://github.com/avjinder/Minimal-Todo; 10,000 installs.

The evaluation follows the workflow explained in Section 3 and consists of the following steps:

• QAC Evaluation according to the quick accessibility check criteria (again, compare Table 1 with the 15 A11Y metrics) and with tool support (in the scenario presented the Google Accessibility Scanner was used).

• UsabML Formalisation is done on the QAC results of Step 1. It outputs a single UsabML file, a structured report in XML format. Mapping issues to related lines of code is possible without knowing the ins and outs of a project, because the Google scanner already provides hints regarding the origin of accessibility problems within the source code.

• RepoVis Visualisation renders the connected git repository and uses the output of Step 2, the UsabML file. The connections specified in UsabML are extracted to present source and issues side-by-side.

The experts reviewing the two mobile apps first rated each topic of the first QAC section, Assistive Android (Screenreader, Tabbing, External Keyboard), from 1 (best) to 5 (worst). Then the Accessibility Scanner output (number of issues found for Touch Size, Contrast Images, Contrast Text, Missing Labels, Redundant Labels and Implementation) was added. Finally, the experts rated the apps according to items 10 to 15 from the third section, Manual Checks. There, for Clear/Concise Text, Evident Navigation, Font Size, Support for Zooming, Position of Elements and Colour-blindness, their ratings ranged from 1 (best) to 5 (worst). A summary of the results is shown in Table 2.

Table 2: Quick Accessibility Check (QAC) results summarised per section with notes about the main reasons for low ratings.

| Ratings in section | App 1 | App 2 | Selected reasons for low ratings |
|---|---|---|---|
| Technologies Android | 3.00 | 2.33 | Screenreader support missing. |
| No. of Accessibility Scanner issues | 4 | 6 | Small touch sizes, low contrast, missing labels. |
| Manual Checks | 1.67 | 1.83 | Positioning of elements and lack of concise text. |

The format of the scanner output and the condensed information in UsabML have been discussed in Section 3 and shown in Listing 2.

For the visualisation the repositories of the given

apps where cloned from github to the local file sys-

tems and configured in the RepoVis system. The Usa-

bML reports were provided by adding proper named

xml file into the file system of the RepoVis backend

server.

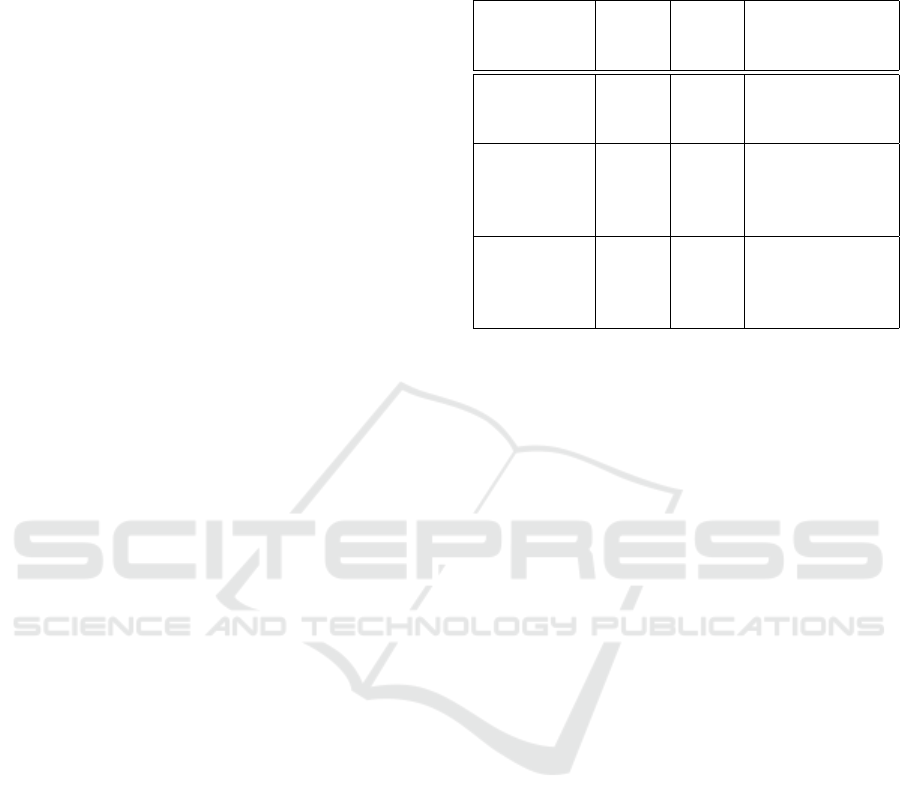

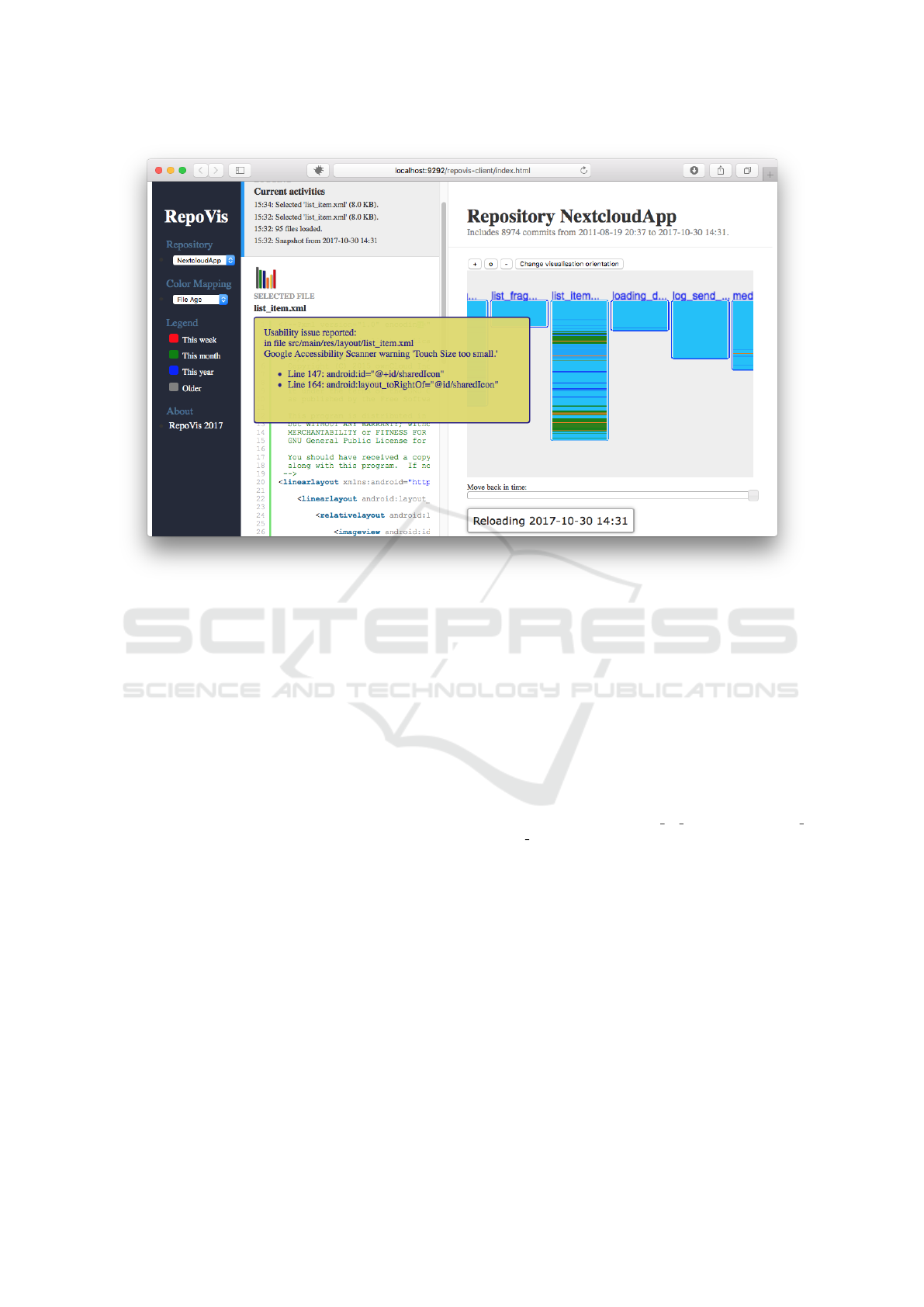

In Figure 4 the dashboard for an app is shown.

Developers can view the source code and the related

accessibility issues at once.

5 CONCLUSIONS

The contribution of this paper is a novel workflow with a strong focus on quick accessibility checking (QAC), supporting the goal of increased willingness among software teams to perform evaluations. In a first step only 15 selected metrics deliver relevant findings about A11Y problems in mobile applications. A formalisation step results in XML-based data (UsabML) which allows reuse and further (semi-)automatic processing. Finally, we contribute an integrated interactive visualisation (RepoVis) of source code augmented with the accessibility problems found.

First results with QAC in combination with RepoVis make the authors confident that many further improvements for developers concerning accessible mobile applications are possible. The novel workflow presented will be a starting point to automate several steps of the process and integrate various representations of accessibility issues (for example with advanced visualisations).


Figure 4: Visualising code and related accessibility issues side-by-side.

In upcoming research we plan to address issues such as:

• Extending the target domain to usability in general. Not only accessibility issues, but other kinds of usability issues could be integrated.

• Including more tools to automate parts of the evaluation.

• Supporting the mapping of issues and bugs found to the location in the source code. In many cases this mapping is not trivial and a semi-automated or otherwise tool-supported approach would be helpful.

• Extending the presented solution to support the iOS platform.

• Adding tools to improve the QAC checking and mapping steps by auto-creating the suggested structured reporting format UsabML.

To conclude, we hope that in the future the computer science community will put an even stronger focus on the user experience and accessibility of mobile applications. This is necessary to support people with special needs, who rely on accessibility to use smartphone apps.

REFERENCES

Andrews, K. (2008). Evaluation comes in many guises. CHI 2008 Workshop on BEyond time and errors: novel evaLuation methods for Information Visualization (BELIV'08). http://www.dis.uniroma1.it/beliv08/pospap/andrews.pdf. Retrieved 2017-12-22.

Apple (2012). Accessibility programming guide for iOS. https://developer.apple.com/library/content/documentation/UserExperience/Conceptual/iPhoneAccessibility/Accessibility_on_iPhone/Accessibility_on_iPhone.html. Retrieved 2017-12-22.

Caldwell, B., Reid, L. G., Cooper, M., and Vanderheiden, G. (2008). Web content accessibility guidelines (WCAG) 2.0. W3C recommendation, W3C. http://www.w3.org/TR/2008/REC-WCAG20-20081211/. Retrieved 2017-12-22.

Cheng, L. C. (2016). The mobile app usability inspection (MAUi) framework as a guide for minimal viable product (MVP) testing in lean development cycle. In Proc. 2nd International Conference in HCI and UX on Indonesia 2016, CHIuXiD 2016, pages 1–11.

Eick, S. G., Steffen, J. L., and Sumner, E. E. J. (1992). Seesoft – a tool for visualizing line oriented software statistics. IEEE Trans. Softw. Eng., 18(11):957–968.

European Union (2016). Directive (EU) 2016/2102 of the European Parliament and of the Council of 26 October 2016 on the accessibility of the websites and mobile applications of public sector bodies. http://eur-lex.europa.eu/eli/dir/2016/2102/oj. Retrieved 2017-12-22.

Feiner, J. and Andrews, K. (2012). Usability reporting with UsabML. In Winckler, M., Forbrig, P., and Bernhaupt, R., editors, Proc. 4th International Conference on Human-Centered Software Engineering, volume 7623 of HCSE 2012, pages 342–351. Lecture Notes in Computer Science, Springer Berlin / Heidelberg.

Feiner, J., Andrews, K., and Krajnc, E. (2010). UsabML – the usability report markup language: Formalising the exchange of usability findings. In Proc. 2nd ACM SIGCHI Symposium on Engineering Interactive Computing Systems, EICS 2010, pages 297–302. ACM.

Google (2017). Accessibility – usability – material design guidelines. https://material.io/guidelines/usability/accessibility.html. Retrieved 2017-12-22.

Hawes, N., Marshall, S., and Anslow, C. (2015). Codesurveyor: Mapping large-scale software to aid in code comprehension. In 3rd Working Conference on Software Visualization, VISSOFT 2015, pages 96–105.

Henry, S. L. (2013). Easy checks – a first review of web accessibility. https://www.w3.org/WAI/eval/preliminary.html. Retrieved 2017-12-22.

Hvannberg, E. T., Law, E. L.-C., and Lárusdóttir, M. K. (2007). Heuristic evaluation: Comparing ways of finding and reporting usability problems. Interacting with Computers, 19(2):225–240. http://kth.diva-portal.org/smash/get/diva2:527483/FULLTEXT01. Retrieved 2017-12-22.

ISO (2000). ISO/DIS 9241-11 ergonomics of human-system interaction – part 11: Usability: Definitions and concepts. Standard, International Organization for Standardization. https://www.iso.org/standard/63500.html. Retrieved 2017-12-22.

ISO (2006). ISO/IEC 25062:2006 software engineering – software product quality requirements and evaluation (SQuaRE) – common industry format (CIF) for usability test reports. International Organization for Standardization. http://www.iso.org/iso/iso_catalogue/catalogue_tc/catalogue_detail.htm?csnumber=43046. Retrieved 2017-12-22.

ISO (2008). ISO/DIS 9241-171:2008 ergonomics of human-system interaction – part 171: Guidance on software accessibility. Technical report, International Organization for Standardization. http://www.iso.org/iso/iso_catalogue/catalogue_ics/catalogue_detail_ics.htm?csnumber=39080. Retrieved 2017-12-22.

Komiyama, T. (2008). Usability evaluation based on international standards for software quality evaluation. Technical Journal 2, NEC. http://www.nec.co.jp/techrep/en/journal/g08/n02/080207.pdf. Retrieved 2017-12-22.

Molich, R., Chattratichart, J., Hinkle, V., Jensen, J. J., Kirakowski, J., Sauro, J., Sharon, T., and Traynor, B. (2010). Rent a car in just 0, 60, 240 or 1,217 seconds? – comparative usability measurement, CUE-8. Journal of Usability Studies, 6(1):8–24. http://www.upassoc.org/upa_publications/jus/2010november/JUS_Molich_November_2010.pdf. Retrieved 2017-12-22.

Murphy, G. C. (2016). Technical perspective: Software is natural. Commun. ACM, 59(5):121–121.

Nielsen, J. (1994). Guerrilla HCI – using discount usability engineering to penetrate the intimidation barrier. http://www.nngroup.com/articles/guerrilla-hci/. Retrieved 2017-12-22.

Nielsen, J. and Molich, R. (1990). Heuristic evaluation of user interfaces. In Proc. Conference on Human Factors in Computing Systems, CHI '90, pages 249–256. ACM.

Patch, K., Allan, J., Lowney, G., and Spellman, J. F. (2015a). User agent accessibility guidelines (UAAG) 2.0. W3C note, W3C. http://www.w3.org/TR/2015/NOTE-UAAG20-20151215/. Retrieved 2017-12-22.

Patch, K., Spellman, J., and Wahlbin, K. (2015b). Mobile accessibility: How WCAG 2.0 and other W3C/WAI guidelines apply to mobile. Technical report, W3C. https://www.w3.org/TR/mobile-accessibility-mapping/. Retrieved 2017-12-22.

Shaw, M. (2002). What makes good research in software engineering? International Journal on Software Tools for Technology Transfer, 4(1):1–7.

The United Nations (2006). Convention on the rights of persons with disabilities. Treaty Series, 2515:3. https://www.un.org/development/desa/disabilities/convention-on-the-rights-of-persons-with-disabilities/convention-on-the-rights-of-persons-with-disabilities-2.html. Retrieved 2017-12-22.

Treviranus, J., Richards, J., and Spellman, J. F. (2015). Authoring tool accessibility guidelines (ATAG) 2.0. W3C recommendation, W3C. http://www.w3.org/TR/2015/REC-ATAG20-20150924/. Retrieved 2017-12-22.

Vilbergsdóttir, S. G., Hvannberg, E. T., and Law, E. L.-C. (2006). Classification of usability problems (CUP) scheme: Augmentation and exploitation. In Proc. 4th Nordic Conference on Human-Computer Interaction: Changing Roles (NordiCHI 2006), pages 281–290. http://diuf.unifr.ch/people/pallottv/docs/NordiCHI-2006/LongPapers/p281-vilbergsdottir.pdf. Retrieved 2017-12-22.

Yusop, N. S. M., Grundy, J., and Vasa, R. (2016). Reporting usability defects: Do reporters report what software developers need? In Proc. 20th International Conference on Evaluation and Assessment in Software Engineering, EASE '16, pages 38:1–38:10. ACM.
