Super-Resolution 3D Reconstruction from Multiple Cameras

Tomoaki Nonome, Fumihiko Sakaue and Jun Sato

Department of Computer Science and Engineering, Nagoya Institute of Technology,

Gokiso, Showa, Nagoya 466-8555, Japan

Keywords:

Super-Resolution, Multiple Cameras, 3D Reconstruction, High Resolution.

Abstract:

In this paper, we propose a novel method for reconstructing the high resolution 3D structure and texture of a scene. In image processing, it is known that image super-resolution is possible from multiple low resolution images. We extend image super-resolution into 3D space, and show that it is possible to recover high resolution 3D structure and high resolution texture of the scene from low resolution images taken at different viewpoints. Experimental results on real and synthetic images show the effectiveness of the proposed method.

1 INTRODUCTION

Recovering the structure of a scene is one of the most important objectives in computer vision, and many efficient reconstruction methods have been proposed in past research.

Early studies in this field revealed what kinds of constraints exist among multiple images, and what kind of information can be obtained from these images (Hartley and Zisserman, 2000; Faugeras et al., 2004). For this purpose, two-view, three-view and multi-view geometry have been studied extensively (Longuet-Higgins, 1981; Shashua and Werman, 1995; Hartley and Zisserman, 2000; Faugeras et al., 2004). Bundle adjustment (Triggs et al., 1999) has been combined with these theoretical advances, and sparse 3D reconstruction has been achieved.

More recently, multiple images have been used for recovering large scale structures of the scene efficiently. One of the milestone studies in this field was presented by Agarwal et al. (Agarwal et al., 2011), who showed that whole buildings and cities can be reconstructed automatically from a vast number of images. Furthermore, the accuracy of 3D reconstruction of large scale scenes has improved drastically in recent years (Galliani et al., 2015; Schonberger et al., 2016). However, these existing methods use multiple images mainly for reducing outliers and noise in the reconstructed 3D structures. That is, the multiple images have been used for improving the stability of 3D reconstruction. In contrast, in this paper we propose a method which uses multiple images for recovering finer 3D structures of the scene.

In the image processing research field, the super-resolution of 2D images has been studied extensively. The existing methods in this field can be classified into two groups. The first group of methods is based on statistical priors which are obtained from advanced learning (Glasner et al., 2009; Kim et al., 2016; Ledig et al., 2016). These methods can obtain a high resolution image from just a single low resolution image, since the statistical priors can compensate for the lack of high frequency components in the image. However, these methods depend heavily on the priors, and if the priors do not agree with the input images, they output wrong high resolution images. The second group of methods is based on multiple observations (Hardie et al., 1997; Tom et al., 1994). Although these methods need multiple images, they can recover high resolution images accurately without the wrong inference that mismatched priors can cause. In this paper, we propose a new method for recovering fine 3D structures of the scene by extending the image super-resolution based on multiple images.

In our method, we recover fine 3D structures of the scene, whose resolutions are much higher than the input image resolutions. For this purpose, we recover the high resolution structures of the scene directly from the image intensities of the low resolution images. Thus, point correspondences among multiple images are not required in our method. Instead, we recover the high resolution texture of the scene as well as the high resolution 3D structure of the scene. By estimating the high resolution textures and the high resolution 3D structures simultaneously, we can recover the fine structure of the scene from low resolution images. As a result, we can recover fine 3D structures which could not be recovered by existing multiple view reconstruction methods.

Nonome, T., Sakaue, F. and Sato, J.

Super-Resolution 3D Reconstruction from Multiple Cameras.

DOI: 10.5220/0006718304810486

In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2018) - Volume 5: VISAPP, pages 481-486

ISBN: 978-989-758-290-5

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Figure 1: Image super-resolution from multiple images. By obtaining multiple images, $I_0$ and $I_1$, with different sampling phases, a high resolution image W can be recovered by combining these images.

2 IMAGE SUPER-RESOLUTION

Before considering super-resolution 3D reconstruction, we review standard image super-resolution from multiple images. In image super-resolution, multiple images observed at different viewpoints are combined, so that these multiple observations compensate for the lack of observations in a single image, as shown in Fig. 1. Maximum a posteriori (MAP) estimation is often used for obtaining a high resolution image W from multiple low resolution images $I_i$ ($i = 1, \cdots, N$) in the presence of image noise, as follows:

$$\hat{W} = \arg\min_{W} \sum_{i=1}^{N} \| I_i - A_i W \|^2 + \alpha \| L W \|^2 \tag{1}$$

The first term in Eq. (1) is a data term, and $A_i$ denotes a matrix which represents the down sampling in the $i$th image, i.e. the down sampling at the $i$th viewpoint. The second term is a regularization term, and L denotes the Laplacian filter for smoothness constraints. $\| \cdot \|^2$ denotes the squared $L_2$ norm, and α denotes the weight of the regularization term.
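As an illustration of Eq. (1), the following is a minimal 1D sketch and not the implementation used in this paper. It assumes that each $A_i$ averages `factor` neighbouring high resolution pixels starting at an integer sampling phase `shift`, and that the signal is periodic; the function names `downsample_matrix`, `laplacian_matrix` and `map_super_resolution` are hypothetical. Because the cost is quadratic in W, the minimizer can be obtained by solving the normal equations.

```python
import numpy as np

def downsample_matrix(q, factor, shift):
    """Assumed A_i (P x Q): each low resolution pixel averages `factor`
    high resolution pixels, starting at the integer phase `shift`."""
    p = q // factor
    a = np.zeros((p, q))
    for r in range(p):
        for k in range(factor):
            a[r, (r * factor + shift + k) % q] = 1.0 / factor  # periodic wrap
    return a

def laplacian_matrix(q):
    """1D discrete Laplacian L with periodic boundary (an assumption)."""
    eye = np.eye(q)
    return np.roll(eye, 1, axis=1) + np.roll(eye, -1, axis=1) - 2.0 * eye

def map_super_resolution(images, mats, alpha):
    """Minimise sum_i ||I_i - A_i W||^2 + alpha ||L W||^2 (Eq. (1))
    by solving the normal equations."""
    q = mats[0].shape[1]
    lap = laplacian_matrix(q)
    lhs = alpha * lap.T @ lap
    rhs = np.zeros(q)
    for img, a in zip(images, mats):
        lhs += a.T @ a
        rhs += a.T @ img
    return np.linalg.solve(lhs, rhs)

# Usage: four low resolution signals with different sampling phases.
q, factor = 32, 4
x = np.arange(q)
w_true = 0.5 + np.sin(2 * np.pi * x / q) + 0.5 * np.cos(4 * np.pi * x / q)
mats = [downsample_matrix(q, factor, s) for s in range(factor)]
images = [a @ w_true for a in mats]
w_hat = map_super_resolution(images, mats, alpha=1e-3)
```

In this sketch the regularization term also keeps the linear system solvable, since box averaging alone removes some frequencies of the high resolution signal.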

Image super-resolution assumes that the target surface is planar, and that the difference of sampling phase in each image is constant. Thus, if the target surface is not planar, standard image super-resolution fails. In contrast, in this paper we consider non-planar objects, and propose a method for reconstructing high resolution 3D surfaces as well as their high resolution textures. We call it super-resolution 3D reconstruction.

Figure 2: Observed images and reprojection images. (a) 3D scene, (b) observed images, (c) reprojection images.

3 SUPER-RESOLUTION 3D RECONSTRUCTION

Our super-resolution 3D reconstruction is achieved by estimating high resolution 3D structures and high resolution textures simultaneously, by minimizing a cost function defined by low resolution images observed at multiple viewpoints.

Let us consider a 3D surface whose high resolution structure and texture are D and W respectively. Suppose the 3D surface is projected into N cameras $C_i$ ($i = 1, \cdots, N$), and N low resolution images $I_i$ ($i = 1, \cdots, N$) are observed as shown in Fig. 2. Then, these projections can be described by projection functions $P_i$ ($i = 1, \cdots, N$) as follows:

$$I_i = P_i(D, W) \tag{2}$$

The projection functions $P_i$ represent not only the relative position and orientation among the N cameras, but also the down sampling in these cameras. In this research, we assume that the cameras are calibrated, and the projection functions $P_i$ are known. Also, we assume that the ambient light is constant in all orientations around the 3D surface, and that the local orientation of the surface does not affect the intensity in the images.

Then, the objective of our method is to estimate the high resolution structure D and the high resolution texture W simultaneously, so that they best fit the low resolution images $I_i$ ($i = 1, \cdots, N$) observed by the N cameras, as follows:

$$\{\hat{D}, \hat{W}\} = \arg\min_{D, W} \sum_{i=1}^{N} \| I_i - P_i(D, W) \|^2 \tag{3}$$

VISAPP 2018 - International Conference on Computer Vision Theory and Applications


In real scenes, we can assume that the structure and the texture of the 3D surface do not change drastically except at the boundaries of objects and the boundaries of textures. Thus, we can make the estimation more stable by adding smoothness constraints $S(\cdot)$ on structure and texture as follows:

$$\{\hat{D}, \hat{W}\} = \arg\min_{D, W} \sum_{i=1}^{N} \| I_i - P_i(D, W) \|^2 + S(D) + S(W) \tag{4}$$

Unfortunately, the simultaneous estimation of high resolution structures and textures described in Eq. (4) is very difficult and unstable, since we have to minimize the cost function in a very high dimensional space. In the next section, we describe a practical method for estimating high resolution structures and high resolution textures based on Eq. (4).

4 PRACTICAL SUPER-RESOLUTION 3D RECONSTRUCTION

Suppose we have N cameras, each of which obtains a low resolution image $I_i = [I_1, \cdots, I_P]^\top$ ($i = 1, \cdots, N$), where P denotes the number of pixels in a low resolution image. We then consider one of these cameras as a basis camera, and its camera coordinates are considered as the basis 3D coordinates of the scene. Thus, the high resolution 3D structure of the scene is represented by a high resolution depth image $D = [D_1, \cdots, D_Q]^\top$ observed at the basis camera, where Q denotes the number of pixels in a high resolution image. Also, the high resolution texture of the scene is represented by a high resolution intensity image $W = [W_1, \cdots, W_Q]^\top$ observed at the basis camera. Naturally, we assume P ≤ Q. Then, our objective is to estimate D and W from $I_i$.

Since the simultaneous estimation of high resolution structures and textures shown in Eq. (4) is difficult and unstable, in this paper we estimate high resolution structures and high resolution textures alternately by iterating the following two steps.

4.1 Estimation of High Resolution Textures

We first estimate a high resolution texture W given an estimated high resolution structure D.

Suppose we have a high resolution structure $D = [D_1, \cdots, D_Q]^\top$. Then, the low resolution images $I_i$ ($i = 1, \cdots, N$) observed by the N cameras can be described by using the high resolution texture W as follows:

$$I_i = A_i(D) W \tag{5}$$

where $A_i(D)$ denotes a P × Q matrix which represents a projection from the high resolution texture at the basis camera to the low resolution image at the $i$th camera, given a high resolution structure D. Thus, the high resolution texture W can be estimated from the low resolution images $I_i$ observed at the N cameras by solving the following minimization problem:

$$\hat{W}(D) = \arg\min_{W} \sum_{i=1}^{N} \| I_i - A_i(D) W \|^2 + \alpha \| L W \|^2 \tag{6}$$

where L denotes a matrix for computing the Laplacian of W, and $\| \cdot \|^2$ denotes the squared $L_2$ norm. Thus, the second term represents the smoothness constraints S(W) on high resolution textures, and α is its weight. From Eq. (6), the high resolution texture W can be estimated given a high resolution structure D.

Note that, for a fixed D, the cost in Eq. (6) is quadratic in W. The estimation of W is therefore a linear least-squares problem, and W can be estimated linearly.
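The texture step of Eq. (6) can be sketched as follows. This is a simplified 1D illustration under assumptions that are not taken from this paper: the cameras are translated sideways by a known `baseline`, a texel at basis pixel j with depth D_j appears in camera i at j - focal * baseline / D_j (in high resolution pixels), each low resolution pixel averages the texels landing inside it, and occlusion is ignored. The names `projection_matrix`, `second_difference` and `solve_texture` are hypothetical.

```python
import numpy as np

def projection_matrix(depth, baseline, focal, factor):
    """Hypothetical A_i(D) (P x Q) for a 1D camera shifted by `baseline`.

    Texel j lands at x = j - focal * baseline / depth[j]; each low
    resolution pixel averages the texels that land inside it
    (nearest-pixel splatting, no occlusion test)."""
    q = depth.size
    p = q // factor
    x = np.arange(q) - focal * baseline / depth
    rows = np.floor(x / factor).astype(int)
    cols = np.nonzero((rows >= 0) & (rows < p))[0]  # texels inside the image
    a = np.zeros((p, q))
    a[rows[cols], cols] = 1.0
    return a / np.maximum(a.sum(axis=1, keepdims=True), 1.0)

def second_difference(q):
    """(Q-2) x Q matrix L computing the discrete 1D Laplacian."""
    lap = np.zeros((q - 2, q))
    idx = np.arange(q - 2)
    lap[idx, idx] = 1.0
    lap[idx, idx + 1] = -2.0
    lap[idx, idx + 2] = 1.0
    return lap

def solve_texture(images, depth, baselines, focal, factor, alpha):
    """Minimiser of Eq. (6) for fixed D, via the normal equations.
    Assumes the basis camera (baseline 0) is among the inputs, which
    keeps the system non-singular for alpha > 0."""
    lap = second_difference(depth.size)
    lhs = alpha * lap.T @ lap
    rhs = np.zeros(depth.size)
    for img, b in zip(images, baselines):
        a = projection_matrix(depth, b, focal, factor)
        lhs += a.T @ a
        rhs += a.T @ img
    return np.linalg.solve(lhs, rhs)
```

For example, with a flat depth of 4.0, `focal = 8.0` and baselines 0, 0.5, 1.0 and 1.5, the four cameras see the texture shifted by 0, 1, 2 and 3 high resolution pixels, which reduces this sketch to ordinary multi-image super-resolution of a planar surface.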

4.2 Estimation of High Resolution Structures

We next estimate a high resolution structure D given

an estimated high resolution texture W.

Given a high resolution texture $W = [W_1, \cdots, W_Q]^\top$, the low resolution camera images $I_i$ can be described by Eq. (5) as before. Then, the high resolution structure D can be estimated from the low resolution images $I_i$ observed at the N cameras as follows:

$$\hat{D}(W) = \arg\min_{D} \sum_{i=1}^{N} \| I_i - A_i(D) W \|^2 + \beta \| L D \|^2 \tag{7}$$

The second term represents the smoothness constraints S(D) on high resolution structures, and β is its weight. α and β are chosen empirically in our experiments.

By iterating Eq. (6) and Eq. (7) alternately, we can estimate the high resolution structure D and texture W of 3D surfaces. In this estimation, we also use a coarse-to-fine technique to stabilize the super-resolution estimation. That is, we gradually increase the scale of the estimated texture and structure during the iteration. Since we need initial values of the 3D structure and the 2D texture in our estimation, we used a flat surface as the initial structure and the low resolution image of the basis camera as the initial texture. By using the proposed method, the high resolution structures and textures can be estimated efficiently.
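The alternation between Eq. (6) and Eq. (7) can be sketched in 1D as follows. This is an illustrative sketch under stated assumptions, not the optimizer used in this paper: the camera model is the same hypothetical sideways-translation model (`projection_matrix`), the structure step is a brute-force search over a small set of candidate depths per pixel, and the coarse-to-fine schedule is omitted. Starting from a flat surface, the texture is estimated first, so no separate initial texture is needed here.

```python
import numpy as np

def projection_matrix(depth, baseline, focal, factor):
    """Hypothetical A_i(D): texel j lands at j - focal*baseline/depth[j];
    each low resolution pixel averages the texels that land inside it."""
    q = depth.size
    p = q // factor
    rows = np.floor((np.arange(q) - focal * baseline / depth) / factor).astype(int)
    cols = np.nonzero((rows >= 0) & (rows < p))[0]
    a = np.zeros((p, q))
    a[rows[cols], cols] = 1.0
    return a / np.maximum(a.sum(axis=1, keepdims=True), 1.0)

def second_difference(q):
    """(Q-2) x Q discrete 1D Laplacian L."""
    lap = np.zeros((q - 2, q))
    idx = np.arange(q - 2)
    lap[idx, idx] = 1.0
    lap[idx, idx + 1] = -2.0
    lap[idx, idx + 2] = 1.0
    return lap

def total_cost(depth, texture, images, baselines, focal, factor, alpha, beta):
    """Data term plus Laplacian penalties on W (alpha) and D (beta)."""
    lap = second_difference(depth.size)
    cost = alpha * np.sum((lap @ texture) ** 2) + beta * np.sum((lap @ depth) ** 2)
    for img, b in zip(images, baselines):
        cost += np.sum((img - projection_matrix(depth, b, focal, factor) @ texture) ** 2)
    return cost

def texture_step(depth, images, baselines, focal, factor, alpha):
    """Eq. (6): linear least squares in W for fixed D (needs baseline 0)."""
    lap = second_difference(depth.size)
    lhs = alpha * lap.T @ lap
    rhs = np.zeros(depth.size)
    for img, b in zip(images, baselines):
        a = projection_matrix(depth, b, focal, factor)
        lhs += a.T @ a
        rhs += a.T @ img
    return np.linalg.solve(lhs, rhs)

def structure_step(depth, texture, images, baselines, focal, factor,
                   alpha, beta, candidates):
    """Eq. (7) by brute force: try each candidate depth at each pixel and
    keep a change only if it lowers the cost (W is held fixed)."""
    args = (images, baselines, focal, factor, alpha, beta)
    best = total_cost(depth, texture, *args)
    depth = depth.copy()
    for j in range(depth.size):
        for c in candidates:
            trial = depth.copy()
            trial[j] = c
            cost = total_cost(trial, texture, *args)
            if cost < best:
                best, depth = cost, trial
    return depth

def alternate(images, baselines, focal, factor, alpha, beta,
              candidates, flat_depth, iters=3):
    """Alternate the two steps, starting from a flat surface."""
    q = images[0].size * factor
    depth = np.full(q, float(flat_depth))
    history = []
    for _ in range(iters):
        texture = texture_step(depth, images, baselines, focal, factor, alpha)
        depth = structure_step(depth, texture, images, baselines, focal,
                               factor, alpha, beta, candidates)
        history.append(total_cost(depth, texture, images, baselines,
                                  focal, factor, alpha, beta))
    return depth, texture, history
```

Each step can only lower the combined cost or leave it unchanged, so `history` is non-increasing. That does not guarantee convergence to the true surface, which is one reason a stabilizing strategy such as coarse-to-fine estimation is useful.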


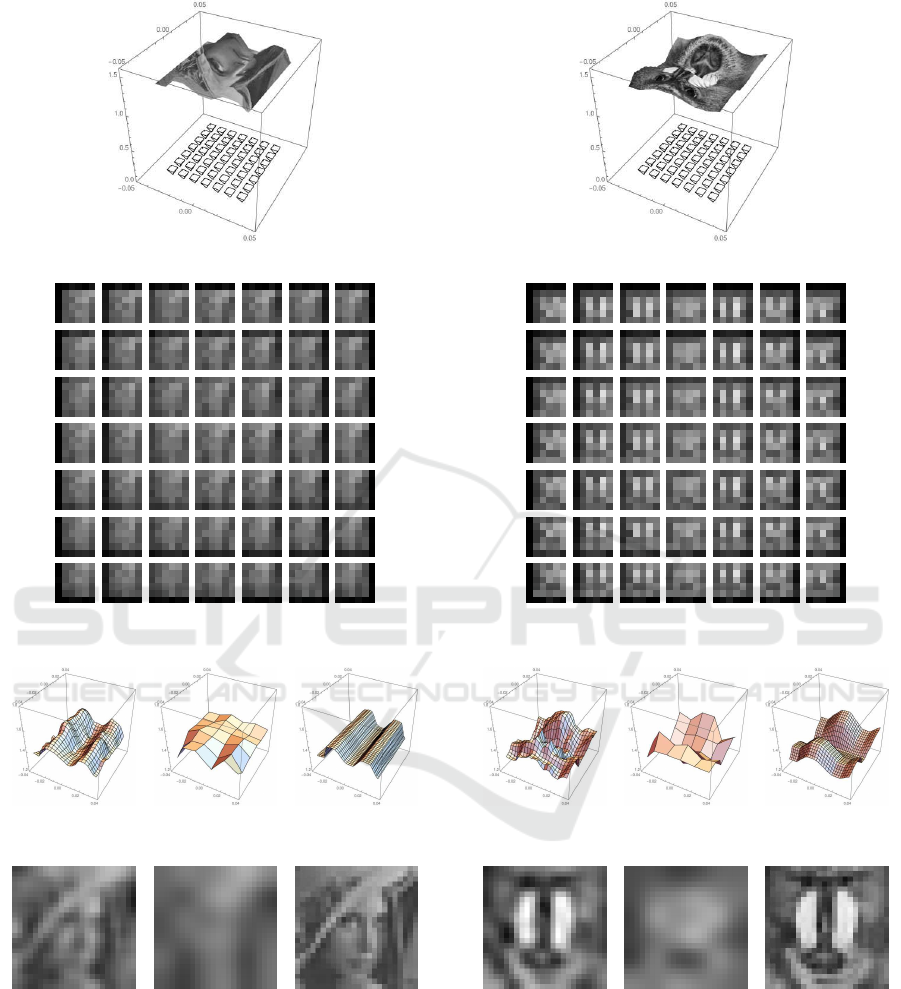

Figure 3: Results of super-resolution reconstruction (lenna). (a) 3D object and 7 × 7 cameras; (b) low resolution camera images. Recovered high resolution 3D structure: (c) proposed, (d) stereo, (e) ground truth. Recovered high resolution texture: (f) proposed, (g) bi-cubic, (h) ground truth.

5 EXPERIMENTS

We next show the effectiveness of the proposed method by using synthetic images as well as real images.

Figure 4: Results of super-resolution reconstruction (mandrill). (a) 3D object and 7 × 7 cameras; (b) low resolution camera images. Recovered high resolution 3D structure: (c) proposed, (d) stereo, (e) ground truth. Recovered high resolution texture: (f) proposed, (g) bi-cubic, (h) ground truth.

5.1 Synthetic Image Experiments

We first show that high resolution 3D structures and textures can be obtained by the proposed method using synthetic images.

Fig. 3 (a) shows a 3D object used in our synthetic image experiment. The 7 × 7 quadrangular pyramids in this figure show the positions and orientations of the 49 cameras used in our super-resolution reconstruction. These cameras were assumed to be calibrated in this experiment. The image resolution of each camera is 6 pix × 6 pix, and the 49 images obtained from Fig. 3 (a) are shown in Fig. 3 (b). The intensity range of these images is 0 to 1, and random Gaussian noise with a standard deviation of 0.01 was added to the image intensity to simulate image noise in observation. These low resolution images were used for recovering high resolution 3D structures and textures with a resolution of 24 pix × 24 pix.

Fig. 3 (c) shows the high resolution 3D structure obtained by the proposed method. The ground truth structure is shown in Fig. 3 (e). For comparison, we also reconstructed the 3D structure from the low resolution images by using the standard stereo method. The obtained 3D structure is shown in Fig. 3 (d). As shown in these figures, the proposed method recovers the fine structure of the original shape, while the standard stereo method suffers from the aliasing problem and cannot recover the correct shape of the object.

We next show the high resolution texture, i.e. the high resolution image at the basis camera, recovered by the proposed method. Fig. 3 (f) shows the result from the proposed method, and Fig. 3 (h) shows the ground truth texture. For comparison, the result from standard bi-cubic interpolation is also shown in Fig. 3 (g). As shown in these figures, the proposed method recovers the high resolution texture of the object accurately, even though the input images have very low resolution. In contrast, the result from standard bi-cubic interpolation is of much lower quality.

Fig. 4 shows the results for another synthetic 3D object. Again, the proposed method provides very accurate high resolution structure and texture, while the standard stereo method and bi-cubic interpolation cannot recover the high resolution structure and texture. The numerical accuracy of the recovered structures and textures shown in Table 1 and Table 2 also shows the effectiveness of the proposed method.

Table 1: Accuracy of recovered high resolution 3D structure.

| | proposed method | existing method (stereo) |
|---|---|---|
| lenna | 0.0185 | 0.0405 |
| mandrill | 0.0195 | 0.0406 |

Table 2: Accuracy of recovered high resolution texture.

| | proposed method | existing method (bi-cubic) |
|---|---|---|
| lenna | 0.0636 | 0.0910 |
| mandrill | 0.1035 | 0.1734 |

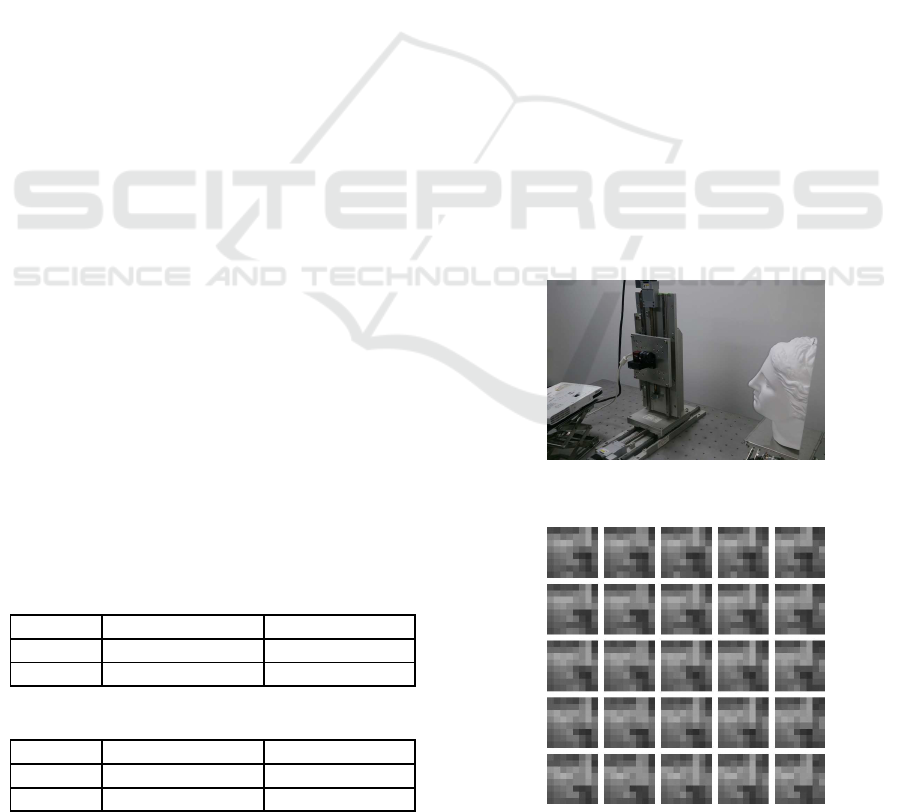

5.2 Real Image Experiments

We next show the results of the real image experiment. In this experiment, we recovered the high resolution structure and texture of a plaster face shown in Fig. 5. The plaster face was observed by a camera which was translated in 2 directions by using a moving stage shown in Fig. 5, and 5 × 5 images were obtained, one at every 2 cm of translation. So that the captured images could serve as the ground truth of the high resolution texture, we generated the low resolution images by averaging blocks of 4 × 4 pixels, and used these low resolution images for super-resolution 3D reconstruction. The ground truth shape of the object was measured by using structured light projected from the projector in Fig. 5, and the camera internal parameters were calibrated in advance by using a calibration board. Fig. 6 shows the 5 × 5 low resolution images obtained from the camera. The resolution of these images is 8 × 8. We used these low resolution images for recovering the high resolution structure and texture, whose resolution is 32 × 32.
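The 4 × 4 averaging used to generate the low resolution inputs can be sketched as below. This is a minimal illustration assuming a single-channel image stored as a 2D NumPy array; the function name `block_average` and the random test image are hypothetical.

```python
import numpy as np

def block_average(image, factor):
    """Down sample a 2D image by averaging non-overlapping
    factor x factor blocks of pixels."""
    h, w = image.shape
    if h % factor or w % factor:
        raise ValueError("image size must be a multiple of factor")
    blocks = image.reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

# Usage: a 32 x 32 image becomes an 8 x 8 low resolution image.
rng = np.random.default_rng(0)
high_res = rng.random((32, 32))   # stand-in for a captured image
low_res = block_average(high_res, 4)
```

With this procedure the original 32 × 32 image remains available as the reference against which the recovered high resolution texture can be compared.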

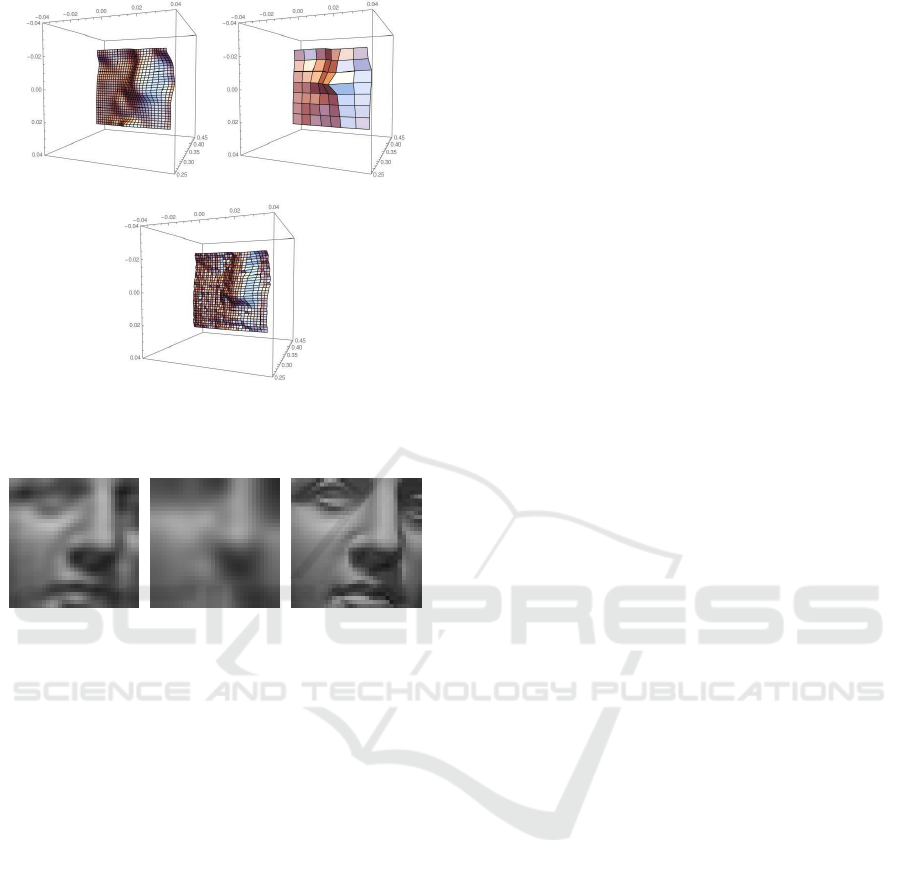

Fig. 7 (a) shows the high resolution 3D structure recovered by the proposed method, and Fig. 7 (c) shows the ground truth of the structure. For comparison, the result from the standard stereo method is shown in Fig. 7 (b). As shown in this figure, the result from the proposed method is fine and accurate, while the result from the standard stereo method is rough and inaccurate. The high resolution texture recovered by the proposed method is also compared with that of bi-cubic interpolation in Fig. 8. Again, the proposed method is superior to the standard bi-cubic method.

Figure 5: The experimental setup of our real image experiment.

Figure 6: Low resolution images obtained from a moving camera.

Figure 7: The high resolution 3D structures recovered from low resolution images. (a) proposed, (b) stereo, (c) ground truth.

Figure 8: The high resolution textures recovered from low resolution images. (a) proposed, (b) bi-cubic, (c) ground truth.

From these results, we find that the proposed method is effective for recovering accurate high resolution 3D structures and textures.

6 CONCLUSION

In this paper, we proposed a novel method for reconstructing the high resolution 3D structure and texture of a scene. For this purpose, we extended 2D image super-resolution into 3D space, and showed that it is possible to recover high resolution 3D structure and high resolution texture of the scene from low resolution images taken at different viewpoints.

We showed the effectiveness of the proposed method through real and synthetic image experiments, in comparison with existing methods.

REFERENCES

Agarwal, S., Furukawa, Y., Snavely, N., Simon, I., Curless, B., Seitz, S. M., and Szeliski, R. (2011). Building Rome in a day. Commun. ACM, 54(10):105–112.

Faugeras, O., Luong, Q., and Papadopoulo, T. (2004). The geometry of multiple images: the laws that govern the formation of multiple images of a scene and some of their applications. MIT Press.

Galliani, S., Lasinger, K., and Schindler, K. (2015). Massively parallel multiview stereopsis by surface normal diffusion. In Proc. ICCV.

Glasner, D., Bagon, S., and Irani, M. (2009). Super-resolution from a single image. In Proc. ICCV.

Hardie, R., Barnard, K., and Armstrong, E. (1997). Joint MAP registration and high-resolution image estimation using a sequence of undersampled images. IEEE Transactions on Image Processing, 6(12):1621–1633.

Hartley, R. and Zisserman, A. (2000). Multiple View Geometry in Computer Vision. Cambridge University Press.

Kim, J., Lee, J., and Lee, K. (2016). Accurate image super-resolution using very deep convolutional networks. In Proc. IEEE Conference on Computer Vision and Pattern Recognition, pages 1646–1654.

Ledig, C., Theis, L., Huszar, F., Caballero, J., Cunningham, A., Acosta, A., Aitken, A., Tejani, A., Totz, J., Wang, Z., and Shi, W. (2016). Photo-realistic single image super-resolution using a generative adversarial network. In Proc. IEEE Conference on Computer Vision and Pattern Recognition, pages 2414–2423.

Longuet-Higgins, H. (1981). A computer algorithm for reconstructing a scene from two projections. Nature, 293:133–135.

Schonberger, J., Zheng, E., Pollefeys, M., and Frahm, J.-M. (2016). Pixelwise view selection for unstructured multi-view stereo. In Proc. ECCV.

Shashua, A. and Werman, M. (1995). Trilinearity. In Proc. ICCV, pages 920–925.

Tom, B., Katsaggelos, A., and Galatsanos, N. (1994). Reconstruction of a high resolution image from registration and restoration of low resolution images. In Proc. IEEE International Conference on Image Processing, pages 553–557.

Triggs, B., McLauchlan, P., Hartley, R., and Fitzgibbon, A. (1999). Bundle adjustment - a modern synthesis. In Proc. International Workshop on Vision Algorithms.
