An Investigation of the Impact of a Social Constructivist Teaching Approach, based on Trigger Questions, Through Measures of Mental Workload and Efficiency

Giuliano Orru¹, Federico Gobbo²,³, Declan O’Sullivan⁴,⁵, Luca Longo¹,⁵

¹ School of Computing, College of Sciences and Health, Dublin Institute of Technology, Dublin, Ireland

² Faculty of Humanities, Amsterdam Center for Language and Communication, University of Amsterdam, Amsterdam, The Netherlands

³ Department of Humanities, University of Turin, Turin, Italy

⁴ School of Computer Science and Statistics, The University of Dublin, Trinity College, Dublin, Ireland

⁵ ADAPT: The Global Centre of Excellence for Digital Content and Media Innovation, Dublin, Ireland

Keywords:

Cognitive Load Theory, Cognitive Load Measurement, Cognitivism, Social Constructivism, Trigger Questions, Concept Maps, Performance, Efficiency.

Abstract:

Social constructivism is grounded on the construction of information with a focus on collaborative learning through social interactions. However, it tends to ignore the human mental architecture, a pillar of cognitivism. A characteristic of cognitivism is that instructional designs built upon it are generally explicit, contrary to those built upon constructivism. This position paper proposes a novel learning task that aims to combine both approaches through the use of trigger questions in a collaborative activity executed after a traditional delivery of instructions. To evaluate this new task, a metric of efficiency based upon a measure of mental workload and a measure of performance is proposed. The former measure is taken from Ergonomics, and two well-known subjective self-reporting mental workload assessment techniques are envisioned. The latter measure is taken from an objective quantitative assessment of the performance of learners employing concept maps.

Orru, G., Gobbo, F., O’Sullivan, D. and Longo, L.

An Investigation of the Impact of a Social Constructivist Teaching Approach, based on Trigger Questions, Through Measures of Mental Workload and Efficiency.

DOI: 10.5220/0006790702920302

In Proceedings of the 10th International Conference on Computer Supported Education (CSEDU 2018), pages 292-302

ISBN: 978-989-758-291-2

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

1 INTRODUCTION

The theoretical premises of this position paper relate cognitivist and social constructivist approaches to learning. The former is built upon the human mental architecture and is grounded in the transferral of information from short to long term memory, which in practice supports learning. From the cognitivist point of view, receiving explicit instructions is a ‘condicio sine qua non’ for the transfer of information to occur. The latter is grounded in the construction of information with a focus on the collaborative nature of learning, which is a product of social interactions. However, as Sweller (2009) pointed out, constructivism in general ignores the human mental architecture. As a consequence, constructivism cannot lead to instructional designs aligned to the way humans learn, so such designs are set to fail. An important issue of constructivism is the lack of explicit instructional designs (Kirschner et al., 2006). The research question being proposed in this paper is: ‘To what extent can a social constructivist activity improve the efficiency of a traditional cognitivist activity when added to it?’ To answer this, an existing metric of teaching efficiency proposed by Paas and Van Merriënboer (1993) is adopted. This is based upon two other measures: the cognitive load experienced by learners and their performance. However, both these measures are hard to quantify precisely and objectively. An important theory in educational psychology, based upon the construct of cognitive load, is the Cognitive Load Theory (CLT). This is a theoretical framework that provides guidelines to assist instructors in the presentation of information by incorporating explicit instructional design to foster the learners’ activities and optimise their intellectual performance (Sweller et al., 1998). CLT argues that instructional designs can generate three types of load: the extraneous, the intrinsic and the germane load. The extraneous load corresponds to the way in which the information is presented. The intrinsic load refers to the level of difficulty of an underlying learning task, while the germane load relates to the mental resources used to complete the learning task by creating schemata of knowledge in working memory (Sweller, 2010). Regrettably, despite decades of research endeavour, no empirical measures of the three types of load have emerged. As a consequence, the theory has been criticised and believed not to be scientific in nature as it does not allow empirical investigations (Gerjets et al., 2009; De Jong, 2010). By contrast, the situation in Ergonomics is favourable, and an entire field of research is devoted to the development of measures of Mental Workload (MWL), a psychological construct strictly connected to cognitive load. According to Wickens (2012), MWL is the amount of mental resources that humans need to carry out a task. This is connected to human working memory, which is limited in its capacity so, to get an optimal performance, it is necessary not to exceed its limits. If they are exceeded, the mental resources are no longer sufficient to complete a task, causing a situation of overload (Paivio, 1986; Baddeley, 1998). In relation to learning, an optimal level of mental workload facilitates the learning process, whereas a high level (overload) or a low level (underload) hampers the learning phase (Longo, 2016). Although a robust and generally applicable measure of MWL still has to emerge, a number of uni-dimensional and multi-dimensional measures have been conceptualised, applied and validated. Examples include the NASA Task Load Index (NASA-TLX) and the Workload Profile (WP), well-known multidimensional assessment techniques. Similarly to mental workload, the objective performance of learners is hard to quantify. Assessing their performance after a learning activity is far from easy. However, a number of assessment strategies have been proposed. One of these is based on conceptual maps, useful tools that display a concept’s components, visualising their relationships and organising the related thoughts. Maps of this type can be objective and meaningful assessment tools to evaluate an instructional design in the classroom. They are expected to be effective in identifying both valid and invalid ideas grasped by learners and they can provide quantitative information about the performance achieved by a learner (Novak and Cañas, 2008). Having quantitative measures of mental workload and objective performance, through an existing measure of efficiency, it is now possible to answer the research question set above. The missing element is the formation of a novel learning task that combines the cognitivist and social constructivist approaches. The proposal of this position paper is to make use of traditional explicit instruction methods followed by a collaborative activity based upon trigger questions. These questions are aimed at exercising the cognitive abilities of a learner and at developing a higher level of thinking (Lipman, 2003).

The remainder of this paper is organised as follows. Section 2 discusses the theoretical background of the research with an overview of cognitivist and social constructivist approaches to teaching and learning, discussing their limitations. The human mental architecture, in the context of Cognitive Load Theory (CLT), is subsequently described, emphasising its drawbacks. A focus on human Mental Workload (MWL), its measurement techniques and measures follows. A description of conceptual maps and a marking scheme for their objective evaluation is presented. Finally, the origins of trigger questions and their functioning are described. With these notions, section 3 proposes the design of a novel primary research experiment while section 4 emphasises the expected contribution to the body of knowledge.
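The efficiency metric of Paas and Van Merriënboer (1993) adopted in the introduction is commonly computed as E = (z_performance − z_effort) / √2, where both scores are standardised across learners. The following is a minimal sketch of that computation, not the authors' implementation. It assumes that an overall workload score stands in for mental effort, that the sample standard deviation is used for standardisation, and that the function names and the data are purely illustrative.

```python
from math import sqrt
from statistics import mean, stdev


def z_scores(values):
    """Standardise raw scores with the grand mean and the sample standard deviation."""
    m, s = mean(values), stdev(values)
    if s == 0:
        raise ValueError("scores must not all be identical")
    return [(v - m) / s for v in values]


def efficiency(performance, effort):
    """Return one efficiency score per learner: E = (z_performance - z_effort) / sqrt(2)."""
    if len(performance) != len(effort):
        raise ValueError("one performance and one effort score per learner are required")
    zp, ze = z_scores(performance), z_scores(effort)
    return [(p - e) / sqrt(2) for p, e in zip(zp, ze)]


# Hypothetical data (assumption): performance scores and workload ratings of three learners.
performance = [50, 70, 90]
effort = [80, 60, 40]
scores = efficiency(performance, effort)
```

A positive score indicates performance that is higher than expected for the workload invested, while a negative score indicates the opposite. Under this sketch, the two teaching conditions could be compared through the mean efficiency of their groups.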

2 RELATED WORK

2.1 The Cognitivist Paradigm

The traditional cognitivist approach to teaching is focused on the transmission of information. It stresses the acquisition of knowledge and considers the internal mental structure of humans. Its focus is on the conceptualisation of learning processes. Cognitive theories address how information is received, organised, stored and retrieved by the mind. ‘Learning is concerned not so much with what learners do but with what they know and how they come to acquire it’ (Jonassen, 1991). The goal of Cognitivism is to use appropriate learning strategies to relate new knowledge to prior knowledge. According to Schunk (1996), ‘Transfer is a function of how information is stored in memory’. The emphasis is on the role of practice with corrective feedback. Transfer occurs when a learner is able to apply knowledge in different circumstances. To support this, instructional designers usually adopt two different techniques: simplification and standardisation. Transfer occurs when irrelevant information is eliminated and when simplification and standardisation techniques facilitate the knowledge transfer to achieve effectiveness and efficiency. During this transfer phase, knowledge is analysed, decomposed, and simplified into schemata. A schema synthesises the functions required to carry out a task (Mayer, 2002).

2.2 The Social Constructivist Paradigm

Vygotsky (1986), Dewey (2004) and Lipman (2003) consider learning to be strictly related to the social context. The individualistic ideal of autonomy in teaching and learning is characterised by self-sufficiency and independence. From this point of view, dependency, intersubjectivity, and community are seen as opposed to autonomy and maturity. Thus, self-sufficiency and independence are seen as virtuous, while dependency and interconnectedness with others are considered weaknesses (Bleazby, 2006). Under this assumption, it is believed that the exacerbation of the individualistic ideas of autonomy could create competitive behaviours that could hamper instead of facilitating the learning phase (Bleazby, 2006). Social interaction is considered as a potential solution for facilitating the learning phase and for filling the gap between the different levels of prior knowledge of learners.

The Deweyan and Vygotskian notion of autonomy is incorporated and developed in the pedagogical approach proposed by Lipman (2003): ‘In order to think for oneself, one must be a member of a community’. In a community, the social interaction internalises the functions and the processes of the interaction. Therefore, the participants become intrapsychological functions: the learners create, define and redefine the meanings by themselves after having participated in a dialog with the others (Vygotsky, 1986). This notion is strictly connected to the notion of Community of Inquiry proposed by Peirce (1877). Here, the focus is on the formation of knowledge through a process of scientific inquiry. The Community of Inquiry can be defined as a group of people interacting in a social context who investigate the conceptual limits of a problematic concept through the use of dialog. Here, ‘Dialog’ is neither a conversation nor a discussion. A conversation is a spontaneous exchange and sharing of ideas and information. A discussion is a conversation where participants explain their own ideas trying to persuade the others. It is a competitive dialectical exchange of ideas that converges to the extrapolation of the correct one, emphasising a winner. Instead, a dialog focuses on group thinking, processing the information in order to expand individual and group knowledge and to extend understanding (Bleazby, 2006).

In line with the definition of dialog, a pedagogical framework grounded in the ‘Philosophy for Children’ proposed by Matthew Lipman exists (the project NORIA) (Sátiro, 2006). It proposes a set of questions aimed at exercising the cognitive abilities of a learner and at developing a higher level of thinking. Lipman (2003) presents a model of reasoning which is considered to be a genuine and important aspect of any instructive process: complex thinking. It is an educational process composed of three dimensions: critical, creative and caring thinking. Critical thinking is focused on the formulation of judgements and is governed by the criteria of logic; it is self-correcting and sensitive to the context. Creative thinking tends towards the formulation of judgements too, but these are strictly related to the context. Additionally, it is governed by the context, it is self-transcendent and it is sensitive to criteria but not governed by them. Caring thinking is aimed at the development of practice regarding substantial and procedural reflection related to the resolution of some problem. It is sensitive to the context and it requires a metacognitive process of thinking to formulate and orient practical judgements. The development of complex thinking occurs in the Community of Inquiry, a process of discovery learning which is focused on generating and answering philosophical questions on logic (critical thinking), aesthetics (creative thinking) and ethics (caring thinking).

2.3 The Human Mental Architecture and the Cognitive Load Theory

The human mental structure is believed to be composed of two parts: short and long term memory (Atkinson and Shiffrin, 1968). The former processes incoming information, while the latter stores relevant information, transforming it into acquired knowledge (Baddeley, 1998; Miller, 1956). Under the assumptions of the human mental architecture framework, learning takes place by transferring pieces of information from working memory, conscious and limited, to long term memory, unconscious and unlimited (Atkinson and Shiffrin, 1968; Baddeley, 1998). This assumption is at the core of the Cognitive Load Theory (CLT) (Sweller et al., 1998). According to CLT, in the learning phase, the transfer of information occurs by generating schemata of knowledge. Here, schemata are automatic functions created after several applications of some concept related to some task (Sweller et al., 1998). The creation of schemata reduces the working memory load because of their capacity to hold an undefined amount of information. According to the Schema Theory, knowledge is stored in long term memory in the form of schemata. A schema categorises elements of information according to the manner in which they will be used (Sweller et al., 1998). To construct a schema means to relate different kinds of information from a lower to a higher level of complexity and hold them as a single unit, understandable as a single chunk of information. In summary, the construction of schemata occurs in working memory while their permanent storage occurs in long term memory. Cognitive Load Theory is aimed at providing guidelines to design instructional material to reduce the cognitive load of learners and at expanding their working memory limits, facilitating the transfer between short and long term memory. The research conducted in the last three decades by Sweller and his colleagues led to the definition of three types of load: intrinsic, extraneous and germane load. The intrinsic load refers to the number of elements that must be processed simultaneously in working memory for schema construction and their interactions (defined as element interactivity). Extraneous load is the unnecessary cognitive load and it is influenced by the way instructional material has been designed. Germane load is the effective cognitive load which is the result of beneficial cognitive processes such as abstractions and elaboration (the construction of schemata) that are promoted by a clear design of the instruction (Gerjets and Scheiter, 2003). The three types of load were initially thought to be additive: the total cognitive load experienced by a subject during a task corresponds to the sum of the three different types of load. Reducing extraneous load and improving germane load by developing schema construction and automation should be the main goal of the discipline of instructional design.
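The initial additivity assumption described above can be written compactly as follows. The symbols are labels introduced here for illustration only and do not come from the cited works.

```latex
\[
L_{\text{total}} = L_{\text{intrinsic}} + L_{\text{extraneous}} + L_{\text{germane}}
\]
```

Under this reading, with a fixed intrinsic load, any reduction of the extraneous term lowers the total unless the germane term grows by the same amount.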

After several critiques related to the theoretical development of CLT, as in Schnotz and Kürschner (2007), Gerjets et al. (2009) and De Jong (2010), and after several failed attempts to find a generally applicable measurement of the three different types of load, CLT has been reconceptualised using the notion of element interactivity, as previously discussed. If initially the degree of element interactivity underlay the intrinsic load, now it also underlies the extraneous load (Sweller, 2010). This reconceptualisation led to a new definition of extraneous load itself, which now refers to the degree of interactivity of the elements of instructional material used for teaching activities. In other words, if an instructional design enhances the degree of element interactivity during problem solving, the load can be considered as extraneous. In contrast to the previous definition of extraneous load, which focused on hampering schema construction and automation, now extraneous load is defined in terms of the degree of element interactivity, as is the germane load. This is because, indirectly, the degree of element interactivity underlies germane load due to the fact that the latter refers to the working memory resources devoted to managing the interaction of elements. Germane load is no longer an independent source of load. It is now a function of those working memory resources that need to deal with the interaction of the elements of the instructional material being presented. Intrinsic load depends on the characteristics of the material, while extraneous load now depends on the characteristics of the instructional material, on the characteristics of the instructional design and on the prior knowledge of the learners. Additionally, germane load now refers only to the characteristics of the learner: the resources of working memory allocated to deal with the intrinsic load. According to this reconceptualisation, the logical foundations of CLT have become more stable, and germane load is complementary to extraneous load without creating logical and empirical contradictions.

In summary, to the best of our understanding, the main theoretical contradiction before the reconceptualisation of CLT was that germane and extraneous loads were additive (Sweller et al., 1998) and at the same time complementary. This means that, if extraneous load decreases while the intrinsic load is kept constant, then germane load should increase. ‘However, if germane load can compensate extraneous load, why does total load change? It should remain constant but does not’ (Sweller, 2010). After the reconceptualisation of CLT by Sweller (2010), if intrinsic load remains constant but extraneous load changes, the total cognitive load changes as well because more or fewer working memory resources are devoted to dealing with the degree of element interactivity (Sweller, 2010). Additionally, germane load is a function of working memory dealing with the degree of element interactivity. At a given level of knowledge and expertise, intrinsic load cannot be altered without changing the content of the material being learnt. By contrast, extraneous load can be altered by changing the instructional procedures, and germane load is a function of the resources of working memory dealing with the processing of the interactions of instructional elements of an underlying learning task. Germane load is no longer independent because it depends on the instructional design and the complexity of the underlying learning task (Sweller, 2010). Despite the evolution of the theory over the years, reliable measurement of the three different types of load is still the main challenge regarding the theoretical and the scientific value of CLT (Paas et al., 2003).

2.4 Mental Workload

Although the field of educational psychology is struggling to find ways of measuring the mental workload of learning tasks, there is an entire field within Ergonomics devoted to the design, development and validation of reliable measures of mental workload (Longo and Leva, 2017). In the last 50 years of research, different definitions of mental workload (MWL) have emerged in the literature. According to Wickens (1979), ‘..., the concept of operator workload is defined in terms of the human’s limited processing resources’. His Multiple Resource Theory (MRT) states that humans have a limited set of resources available for mental processes (Wickens, 1984). These resources correspond to an available amount of energy that is used for a variety of mental procedures. This shared pool of resources is allocated across different stages related to the tasks; its use depends on the modalities of the task and on the process required to carry out this task. Cognitive resources are restricted and a supply and demand problem occurs when a person performs two or more tasks that require the same resource. Excess workload, caused by a task using the same resource, can create problems and result in errors or lower task performance. An increase in workload does not always mean that performance decreases: performance can be affected by workload being too high or too low (Nachreiner, 1995). A high level of mental workload can be related to a high level of focus on the task, whereas a low level might mean no attention or no mental resources allocated to a task. Wickens’s definition implicitly means that mental workload should be optimal to increase the performance during tasks. In general, MWL is not a linear concept (Longo, 2015; Rizzo et al., 2016) but it can be intuitively defined as the volume of cognitive work necessary for an individual to accomplish a task over time. It is not ‘an elementary property, rather it emerges from the interaction between the requirements of a task, the circumstances under which it is performed and the skills, behaviours and perceptions of the operator’ (Hart, 2006). However, this is only a practical definition, as many other factors influence mental workload (Longo and Barrett, 2010; Longo, 2014).

2.4.1 Mental Workload Measurement Techniques

Different techniques have been proposed in Education to measure mental workload (cognitive load). These can be clustered into two main groups: subjective and objective measures (Plass et al., 2010). The most commonly adopted subjective measures are unidimensional. These are the Subjective Rating of Perceived Mental Effort (Paas and Van Merriënboer, 1993) combined with the Subjective Rating of Perceived Task Difficulty (Paas and Van Merriënboer, 1994; Paas et al., 2003). Paas (1992) equates the effort of learners with overall cognitive load, thus mental effort alone can measure the different types of load. In Paas and Van Merriënboer (1993), ‘Mental effort may be defined as the total amount of controlled cognitive processing in which a subject is engaged’. Through a measure of mental effort it is possible to get information about the cognitive costs of learning and therefore to predict the performance of learners. Objective measurements of cognitive load through various means have been proposed, as summarised in Plass et al. (2010). These means include learning outcomes (Mayer, 2005; Mayer and Moreno, 1998), time-on-task (Tabbers et al., 2004), task complexity (Seufert et al., 2007), behavioural data (Van Gerven et al., 2004), secondary task analysis (Brünken et al., 2002) and eye-tracking analysis (Folker et al., 2005). Both subjective and objective measures of cognitive load have been combined in Paas et al. (2003). Here, cognitive load is the relation between invested effort and learning outcome. In summary, most of the measurement techniques present in the education literature are mainly proxies to infer cognitive load. The situation is different in Ergonomics. Here, the measurement of MWL is an extensive area (Longo and Leva, 2017) where several assessment techniques have been proposed (Cain, 2007; Tsang, 2006; Wilson and Eggemeier, 2006; Young and Stanton, 2004, 2006; Moustafa et al., 2017): a) self-assessment measures; b) task measures; c) physiological measures. The category of self-assessment measures is often referred to as self-report measures. It relies on the subject’s perceived experience of the interaction with an underlying interactive system through the direct estimation of individual differences such as the emotional state, attitude and stress of the operator, the effort devoted to the task and its demands (De Waard, 1996; Hart, 2006). It is strongly believed that only the individual concerned with the task can provide an accurate judgement with respect to the MWL experienced. The class of task performance measures is based upon the assumption that the mental workload of an operator, interacting with a system, gains relevance only if it influences system performance. Primary and secondary task measures exist, including reaction time to a secondary task, number of errors on the primary task or completion time (Rubio et al., 2004a; Tsang and Vidulich, 2006). The category of physiological measures considers bodily responses derived from the operator’s physiology (heart rate, pupil dilation etc). These responses are believed to be correlated to MWL and are aimed at interpreting psychological processes by analysing their effect on the state of the body. Their advantage is that they can be collected continuously over time, without requiring an overt response by the operator (O’Donnel and Eggemeier, 1986), but they require specific equipment and trained operators, limiting their use in real-world tasks. Self-assessment measures have always attracted many practitioners and seem to be the right candidates for adoption in education. The following section describes two of these as they are adopted in the experiment of section 3.

2.4.2 The NASA-TLX and the Workload Profile

Two well-known multi-dimensional subjective measures are the NASA Task Load Index (NASA-TLX) (Hart and Staveland, 1988) and the Workload Profile (Tsang and Velazquez, 1996). In contrast to unidimensional scales of mental load measurement such as effort and task difficulty proposed in Paas and Van Merriënboer (1993, 1994) and Paas et al. (2003), they focus on different components of load. In education, these are not widely employed, but a few studies have confirmed their validity and sensitivity (Gerjets et al., 2006; Kester et al., 2006; Gerjets et al., 2004).

In general, the NASA-TLX has been used to predict critical levels of mental workload that can significantly influence the execution of an underlying task. The NASA-TLX consists of six subscales that represent somewhat independent clusters of variables: mental, physical, and temporal demands, frustration, effort, and performance (Hart and Staveland, 1988). To collect ratings for these dimensions, twenty-step scales are utilised. A score from 0 to 100 (assigned to the nearest 5 points) is collected on each scale from respondents. To combine the six individual scale ratings into an overall score, a weighting calculation is used. This procedure requires a paired comparison task to be performed prior to the workload assessments. Paired comparisons require the operator to select which dimension is more pertinent to workload over all pairs of the six dimensions. The number of times a dimension is chosen as more important is the weighting of that dimension scale for a given task for that operator. A workload score from 0 to 100 is obtained for each rated task by multiplying the weight by the individual dimension scale score, summing across scales, and dividing by 15 (the total number of paired comparisons).

$$NASA : [0..100] \in \Re, \qquad NASA = \left( \sum_{i=1}^{6} d_i \times w_i \right) \frac{1}{15}$$
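The weighting procedure and the formula above can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not an official scoring tool: the dimension keys, the function names and the example ratings are hypothetical, and the paired-comparison choices are generated from an invented priority order purely to obtain a valid set of 15 answers.

```python
from itertools import combinations

# Six NASA-TLX dimensions (short keys are an assumption of this sketch).
DIMENSIONS = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def weights_from_choices(choices):
    """Count how often each dimension was chosen across the 15 paired comparisons.

    `choices` maps every pair of dimensions to the one judged more pertinent.
    """
    weights = dict.fromkeys(DIMENSIONS, 0)
    for pair in combinations(DIMENSIONS, 2):
        winner = choices[pair]
        if winner not in pair:
            raise ValueError(f"{winner!r} is not a member of {pair}")
        weights[winner] += 1
    return weights


def nasa_tlx(ratings, weights):
    """Weighted score: sum of rating x weight over the six scales, divided by 15."""
    if sum(weights.values()) != 15:
        raise ValueError("weights must come from exactly 15 paired comparisons")
    if any(not 0 <= ratings[d] <= 100 for d in DIMENSIONS):
        raise ValueError("each rating must lie between 0 and 100")
    return sum(ratings[d] * weights[d] for d in DIMENSIONS) / 15


# Hypothetical respondent: always prefers the dimension that comes first in this order.
priority = ["mental", "temporal", "effort", "performance", "frustration", "physical"]
choices = {pair: min(pair, key=priority.index) for pair in combinations(DIMENSIONS, 2)}
weights = weights_from_choices(choices)

ratings = {"mental": 80, "physical": 10, "temporal": 60,
           "performance": 40, "effort": 70, "frustration": 30}
score = nasa_tlx(ratings, weights)  # (400 + 0 + 240 + 80 + 210 + 30) / 15 = 64.0
```

Because the weights always sum to 15, identical ratings on all six scales return that same rating as the overall score, which is a convenient sanity check.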

The Workload Profile (WP) is a subjective workload assessment technique, based on the Multiple Resource Theory (MRT) of Wickens (1984). In this technique, eight factors are considered: perceptual/central processing, response selection and execution, spatial processing, verbal processing, visual processing, auditory processing, manual output and speech output. The WP procedure requires the operators to provide the proportion of attentional resources, in the range 0 to 1, used during a task for each factor. The overall workload rating is calculated by summing the 8 scores (Tsang and Velazquez, 1996). Formally:

$$WP : [0..100] \in \Re, \qquad WP = \frac{1}{8} \sum_{i=1}^{8} d_i \times 100$$
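A minimal sketch of the Workload Profile score follows. It implements the formula as written above, which rescales the sum of the eight proportions to the range 0 to 100. The dimension keys, the function name and the example answers are assumptions made for illustration.

```python
# Eight Workload Profile factors (short keys are an assumption of this sketch).
WP_DIMENSIONS = (
    "perceptual_central", "response", "spatial", "verbal",
    "visual", "auditory", "manual", "speech",
)


def workload_profile(proportions):
    """Return the WP score in [0, 100]: the mean of the eight proportions times 100."""
    missing = set(WP_DIMENSIONS) - set(proportions)
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    if any(not 0 <= proportions[d] <= 1 for d in WP_DIMENSIONS):
        raise ValueError("each proportion must lie between 0 and 1")
    return sum(proportions[d] for d in WP_DIMENSIONS) / 8 * 100


# Hypothetical answers of one student after a lecture.
answers = {
    "perceptual_central": 0.75, "response": 0.25, "spatial": 0.25, "verbal": 0.75,
    "visual": 0.5, "auditory": 0.75, "manual": 0.25, "speech": 0.5,
}
wp_score = workload_profile(answers)  # 4.0 / 8 * 100 = 50.0
```

Unlike the NASA-TLX, this score needs no paired-comparison step, since every factor contributes with the same weight.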

2.5 Conceptual Map for Assessment

Concept maps are graphical tools aimed at organising and representing knowledge. Conceptual maps are useful learning tools because they display a concept’s components, visualising their relationships and organising the related thoughts. Conceptual maps focus on the promotion of creativity: they improve the effective externalisation and visualisation of ideas, support the discovery of new problem solving methods and help to measure concept understanding (Cristea and Okamoto, 2001). They are built upon concepts that are usually enclosed in circles or boxes of some type, and upon relationships between them, usually made explicit by a linking line connecting two concepts (Novak and Cañas, 2008). On this connecting line, linking words or linking phrases can be placed, aimed at specifying the relation between concepts. A word or multiple words can be used to label a concept, as well as one or more symbols (for example: ‘+’ ‘/’ ‘=’). Propositions contain two or more concepts connected by linking words or phrases to form a meaningful statement (Novak and Cañas, 2008). The concepts are visualised in a hierarchical structure starting from the most inclusive to the least inclusive. They are grounded on a key question related to some context, and the links between different sections or areas of the map are called cross-links. The ability to draw a good hierarchical structure and the ability to find and characterise new cross-links promote the creative thinking phase.

Figure 1: Example of a concept map (Novak and Cañas, 2008).
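The elements of a concept map described in this section (concepts, linking phrases, propositions and cross-links) can be represented as simple data, which makes them countable for assessment purposes. The sketch below is illustrative only: the class, the function and the example map are hypothetical, cross-links are flagged by hand, and the summary returns raw counts rather than applying the weights of any published rubric.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposition:
    """Two concepts joined by a linking phrase, forming a meaningful statement."""
    source: str
    link: str
    target: str
    cross_link: bool = False  # True when the link joins different areas of the map


def summarise(propositions):
    """Count the distinct concepts, the propositions and the cross-links of a map."""
    concepts = {c for p in propositions for c in (p.source, p.target)}
    return {
        "concepts": len(concepts),
        "propositions": len(propositions),
        "cross_links": sum(p.cross_link for p in propositions),
    }


# Hypothetical map that a learner might draw after a lecture on cognitive load.
concept_map = [
    Proposition("Cognitive load", "includes", "Intrinsic load"),
    Proposition("Cognitive load", "includes", "Extraneous load"),
    Proposition("Cognitive load", "includes", "Germane load"),
    Proposition("Extraneous load", "depends on", "Instructional design"),
    Proposition("Germane load", "is shaped by", "Instructional design", cross_link=True),
]
summary = summarise(concept_map)
```

Counts of this kind could then be fed into a marking scheme, with the validity of each proposition still judged by the assessor.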

The criteria for measuring conceptual understan-

ding is the reason why we are interested in Novak’s

conceptual maps. This type of maps are objective and

meaningful assessment tools to evaluate an instructi-

An Investigation of the Impact of a Social Constructivist Teaching Approach, based on Trigger Questions, Through Measures of Mental

Workload and Efficiency

297

onal design in the classroom. They are expected to be

effective to identify both valid and invalid ideas gras-

ped by learners and they can provide quantitative in-

formation about the student performance (Novak and

Ca

˜

nas, 2008). A rubik for quantitatively assessing

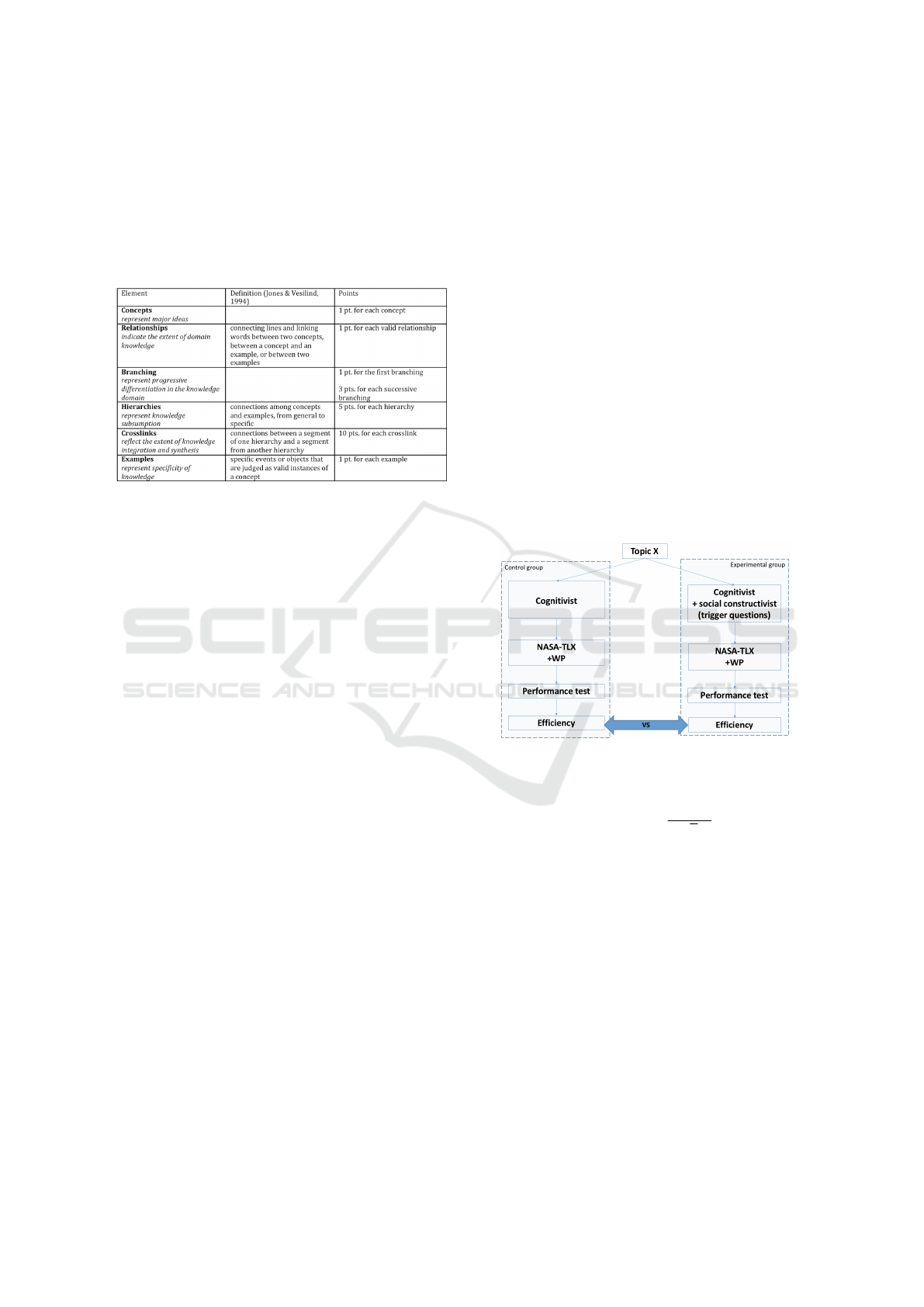

a conceptual map has been proposed as per figure 2

Markham et al. (1994).

Figure 2: Rubik for concept maps (Markham et al., 1994).
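To make the idea of a quantitative rubric concrete, the sketch below totals the countable features of a map. The point values are an assumption: they follow the weights commonly associated with this family of scoring schemes and should be checked against Figure 2 before use. Converting the total into a percentage by dividing by the score of a reference map is likewise an assumption. All names and example counts are hypothetical.

```python
from dataclasses import dataclass

# Assumed point values; replace them with those of the rubric in Figure 2.
POINTS = {
    "concept": 1,
    "relationship": 1,
    "first_branching": 1,
    "later_branching": 3,
    "hierarchy_level": 5,
    "cross_link": 10,
    "example": 1,
}


@dataclass
class MapCounts:
    """Counts of the scorable features found in one concept map."""
    concepts: int
    relationships: int
    branchings: int
    hierarchy_levels: int
    cross_links: int
    examples: int


def rubric_score(c: MapCounts, points: dict[str, int] = POINTS) -> int:
    first = min(c.branchings, 1)      # the first branching, if any
    later = max(c.branchings - 1, 0)  # every branching after the first
    return (
        c.concepts * points["concept"]
        + c.relationships * points["relationship"]
        + first * points["first_branching"]
        + later * points["later_branching"]
        + c.hierarchy_levels * points["hierarchy_level"]
        + c.cross_links * points["cross_link"]
        + c.examples * points["example"]
    )


def percentage(score: int, reference_score: int) -> float:
    """Score relative to a reference map, capped at 100 (assumed conversion)."""
    if reference_score <= 0:
        raise ValueError("the reference score must be positive")
    return min(100.0, 100.0 * score / reference_score)


# Invented counts for a learner map and a reference map.
learner = MapCounts(10, 9, 3, 3, 1, 2)
reference = MapCounts(20, 19, 5, 4, 3, 4)
learner_pct = percentage(rubric_score(learner), rubric_score(reference))
```

Keeping the weights in a single dictionary makes it easy to swap in the exact values of the rubric without touching the scoring logic.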

3 DESIGN AND METHODOLOGY

A primary research study is envisioned. A number

of different topics will be delivered by a number of

selected lecturers using two teaching conditions:

• a traditional cognitivist activity (section 2.1), in

which the instructor presents explicit information.

• a social constructivist activity added to the first,

based upon trigger questions (section 2.2).

The experiment will involve students, of third-level

classes, divided in two groups: a control group, re-

ceiving condition 1, and an experimental group, re-

ceiving condition 2. The goal of the trigger ques-

tions (as defined in table 1) is to support the deve-

lopment of the cognitive skills of learners. The list

has been formed through a selection of the questions

originally proposed in S

´

atiro (2006) that can be also

applied in third-level contexts. After the completion

of each class, students will be provided with a copy

of the NASA-TLX or the Workload Profile question-

naires by a lecturer. Subsequently, an assessment of

the information received by the lecturer, based on the

use of conceptual maps, as described in section 2.5,

will be distributed to learners (table 2). The basic

idea of the graphic representation, through the use of

conceptual maps, corresponds to coordinate the cog-

nitive construction of schemata of knowledge with its

externalisation (van Bruggen et al., 2002). The pe-

dagogical goal is to improve the metacognitive skills

by asking questions aimed at monitoring and control-

ling the transfer (and the construction) of information

from working memory to long-term working memory

and retrieving the schemata from long-term memory

to working memory (Valcke, 2002). It is supposed

that, the externalisation of the internal cognitive sche-

mata of knowledge by sharing collaborative activities,

improves metacognitive thinking, facilitating the lear-

ning process. It is important to note that the schema-

tic performance test will be evaluated by the quan-

titative marking scheme developed by (Novak et al.,

1984) and extended by (Markham et al., 1994) (Fi-

gure 2). This scheme will generate a score of perfor-

mance in percentage. With an overall index of men-

tal workload and a performance score, the proposal is

to combine these two measures towards an index of

efficiency. This will serve as a metric for the empi-

rical evaluation of the two envisioned teaching con-

ditions. In details, efficiency will be computed em-

ploying the Relative Efficiency measure proposed in

Paas and Van Merri

¨

enboer (1993) (equation 1), up-

dated with the overall score of mental workload – as

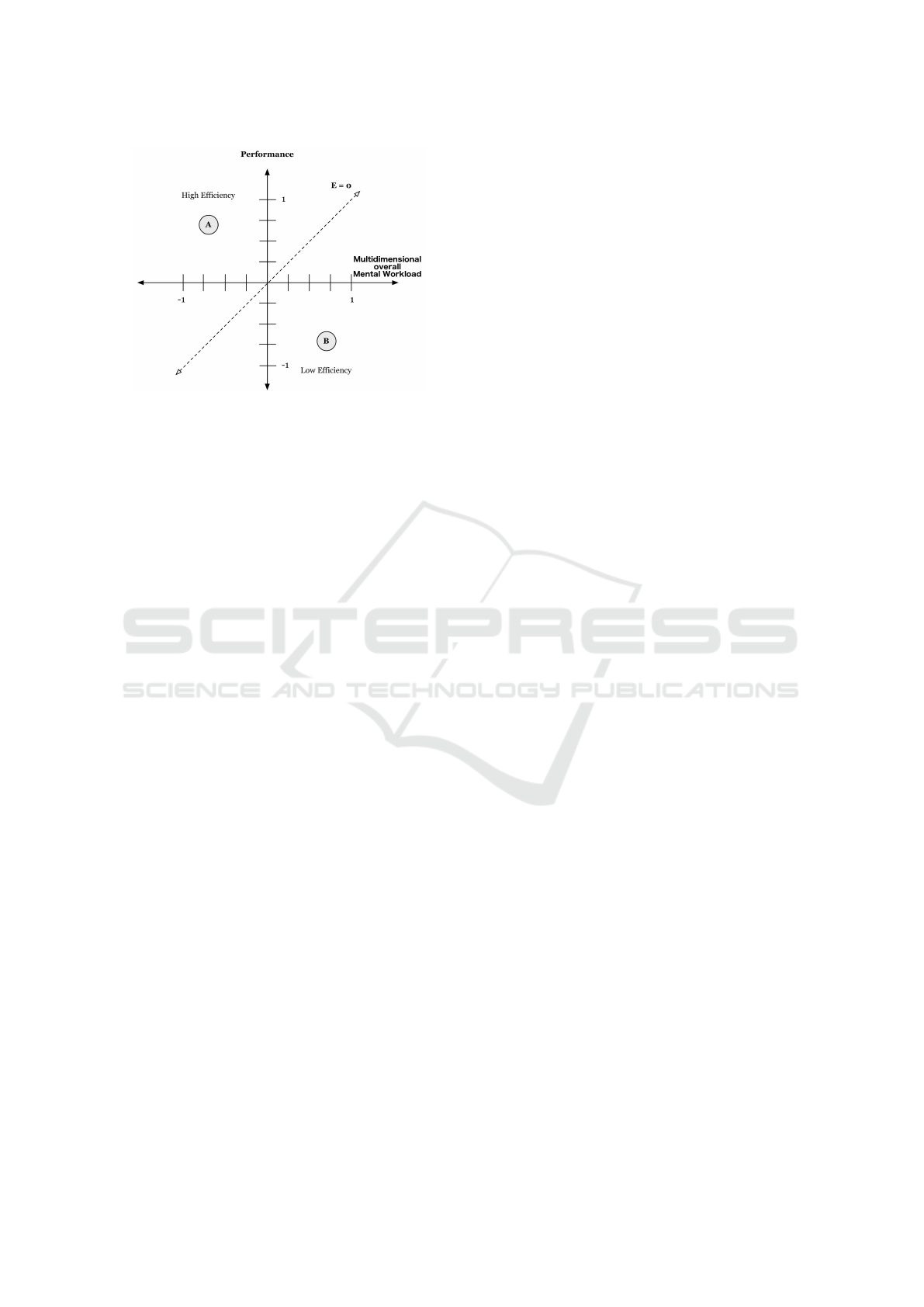

measured by the NASA-TLX and WP – (figure 4).

Figure 3: Schematic representation of the envisioned primary research experiment.

$$\mathit{Efficiency} = \frac{|R - P|}{\sqrt{2}} \qquad (1)$$

where $P$ is the standardised performance score (in percentage) and $R$ is the standardised mental workload score. If $R - P < 0$, then the efficiency $E$ is positive, and if $R - P > 0$, then $E$ is negative.
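A minimal Python sketch of equation 1 follows. It assumes that "standardised" means z-scores computed over the pooled sample of learners from both groups, using the population standard deviation. Under the sign rule stated above, $|R - P| / \sqrt{2}$ with its sign reduces to $(P - R) / \sqrt{2}$, which is what the code computes. The function names and the example scores are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev


def z_scores(values):
    """Standardise a sample to mean 0 and standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("cannot standardise a constant sample")
    return [(v - mu) / sigma for v in values]


def relative_efficiency(performance, workload):
    """Efficiency per learner from raw performance and workload scores.

    Positive when standardised performance exceeds standardised workload
    (R - P < 0), negative in the opposite case (R - P > 0).
    """
    if len(performance) != len(workload):
        raise ValueError("one performance and one workload score per learner")
    p_std = z_scores(performance)
    r_std = z_scores(workload)
    return [(p - r) / sqrt(2) for p, r in zip(p_std, r_std)]


# Made-up scores for four learners (performance in %, workload in [0..100]).
example_eff = relative_efficiency([80, 60, 40, 20], [20, 40, 60, 80])
```

In the example, the first learner combines the highest performance with the lowest workload and therefore obtains the highest efficiency, while the last learner obtains the lowest.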

4 EXPECTED CONTRIBUTION

Different contributions to the body of knowledge are expected from this research. The first is the use of the NASA Task Load Index (Hart and Staveland, 1988) and the Workload Profile (Tsang and Velazquez, 1996) instruments as multidimensional subjective measures of mental workload in third-level educational contexts. This is in contrast to most of the studies in the literature, which have employed unidimensional subjective mental workload assessment techniques. The second expected contribution is the use of a social constructivist approach jointly with a traditional cognitivist approach to teaching. The novelty of this social constructivist approach is the use of trigger questions in a shared dialogue, aimed at increasing the metacognitive skills of learners and supporting creative and critical thinking. This metacognitive activity is supposed to facilitate the control of information during the transfer and construction phases. The third contribution is the extension of the relative measure of efficiency proposed in Paas and Van Merriënboer (1993) with an overall measure of mental workload instead of perceived effort.

Figure 4: Instructional efficiency graph, adapted from Paas and Van Merriënboer (1993) by incorporating an overall measure of mental workload.

CSEDU 2018 - 10th International Conference on Computer Supported Education

REFERENCES

Atkinson, R. C. and Shiffrin, R. M. (1968). Human memory: A proposed system and its control processes. Psychology of learning and motivation, 2:89–195.

Baddeley, A. (1998). Working memory. Comptes Rendus de l’Académie des Sciences-Series III-Sciences de la Vie, 321(2):167–173.

Bleazby, J. (2006). Autonomy, democratic community, and citizenship in philosophy for children: Dewey and philosophy for children’s rejection of the individual/community dualism. Analytic teaching, 26(1):30–52.

Brünken, R., Steinbacher, S., Plass, J. L., and Leutner, D. (2002). Assessment of cognitive load in multimedia learning using dual-task methodology. Experimental psychology, 49(2):109.

Cain, B. (2007). A review of the mental workload literature. Technical report, Defence Research & Dev. Canada, Human System Integration.

Cristea, A. I. and Okamoto, T. (2001). Object-oriented collaborative course authoring environment supported by concept mapping in MyEnglishTeacher. Educational Technology and Society, 4(2).

De Jong, T. (2010). Cognitive load theory, educational research, and instructional design: some food for thought. Instructional science, 38(2):105–134.

De Waard, D. (1996). The measurement of drivers’ mental workload. The Traffic Research Centre VSC, University of Groningen.

Dewey, J. (2004). Democracy and education. Courier Corporation.

Folker, S., Ritter, H., and Sichelschmidt, L. (2005). Processing and integrating multimodal material: The influence of color-coding. In Proceedings of the 27th annual conference of the cognitive science society, pages 690–695. Citeseer.

Gerjets, P. and Scheiter, K. (2003). Goal configurations and processing strategies as moderators between instructional design and cognitive load: Evidence from hypertext-based instruction. Educational psychologist, 38(1):33–41.

Gerjets, P., Scheiter, K., and Catrambone, R. (2004). Designing instructional examples to reduce intrinsic cognitive load: Molar versus modular presentation of solution procedures. Instructional Science, 32(1-2):33–58.

Gerjets, P., Scheiter, K., and Catrambone, R. (2006). Can learning from molar and modular worked examples be enhanced by providing instructional explanations and prompting self-explanations? Learning and Instruction, 16(2):104–121.

Gerjets, P., Scheiter, K., and Cierniak, G. (2009). The scientific value of cognitive load theory: A research agenda based on the structuralist view of theories. Educational Psychology Review, 21(1):43–54.

Hart, S. G. (2006). NASA-task load index (NASA-TLX); 20 years later. In Human Factors and Ergonomics Society Annual Meeting, volume 50. Sage Journals.

Hart, S. G. and Staveland, L. E. (1988). Development of NASA-TLX (task load index): Results of empirical and theoretical research. Advances in psychology, 52:139–183.

Jonassen, D. H. (1991). Objectivism versus constructivism: Do we need a new philosophical paradigm? Educational technology research and development, 39(3):5–14.

Kester, L., Lehnen, C., Van Gerven, P. W., and Kirschner, P. A. (2006). Just-in-time, schematic supportive information presentation during cognitive skill acquisition. Computers in Human Behavior, 22(1):93–112.

Kirschner, P. A., Sweller, J., and Clark, R. E. (2006). Why minimal guidance during instruction does not work: An analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educational psychologist, 41(2):75–86.

Lipman, M. (2003). Thinking in education. Cambridge University Press.

Longo, L. (2014). Formalising Human Mental Workload as a Defeasible Computational Concept. PhD thesis, Trinity College Dublin.

Longo, L. (2015). A defeasible reasoning framework for human mental workload representation and assessment. Behaviour and Information Technology, 34(8):758–786.

Longo, L. (2016). Mental workload in medicine: Foundations, applications, open problems, challenges and future perspectives. In Computer-Based Medical Systems (CBMS), 2016 IEEE 29th International Symposium on, pages 106–111. IEEE.

Longo, L. and Barrett, S. (2010). A computational analysis of cognitive effort. In Intelligent Information and Database Systems, Part II, pages 65–74.

Longo, L. and Leva, M. C. (2017). Human Mental Workload: Models and Applications: First International Symposium, H-WORKLOAD 2017, Dublin, Ireland, June 28-30, 2017, Revised Selected Papers, volume 726. Springer.

Markham, K. M., Mintzes, J. J., and Jones, M. G. (1994). The concept map as a research and evaluation tool: Further evidence of validity. Journal of research in science teaching, 31(1):91–101.

Mayer, R. E. (2002). Rote versus meaningful learning. Theory into practice, 41(4):226–232.

Mayer, R. E. (2005). Principles for managing essential processing in multimedia learning: Segmenting, pretraining, and modality principles. The Cambridge handbook of multimedia learning, pages 169–182.

Mayer, R. E. and Moreno, R. (1998). A split-attention effect in multimedia learning: Evidence for dual processing systems in working memory. Journal of educational psychology, 90(2):312.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological review, 63(2):81.

Moustafa, K., Saturnino, L., and Longo, L. (2017). Assessment of mental workload: a comparison of machine learning methods and subjective assessment techniques. In 2017 1st International Symposium on Human Mental Workload: models and applications, volume CCIS 726, pages 30–50. Springer International Publishing.

Nachreiner, F. (1995). Standards for ergonomics principles relating to the design of work systems and to mental workload. Applied Ergonomics, 26(4):259–263.

Novak, J. D. and Cañas, A. J. (2008). The theory underlying concept maps and how to construct and use them.

Novak, J. D., Gowin, D. B., and Kahle, J. B. (1984). Learning How to Learn. Cambridge University Press.

O’Donnel, R. D. and Eggemeier, T. F. (1986). Workload assessment methodology. In Boff, K., Kaufman, L., and Thomas, J., editors, Handbook of perception and human performance, volume 2, pages 42/1–42/49. New York, Wiley-Interscience.

Paas, F., Tuovinen, J. E., Tabbers, H., and Van Gerven, P. W. (2003). Cognitive load measurement as a means to advance cognitive load theory. Educational psychologist, 38(1):63–71.

Paas, F. G. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive-load approach. Journal of educational psychology, 84(4):429.

Paas, F. G. and Van Merriënboer, J. J. (1993). The efficiency of instructional conditions: An approach to combine mental effort and performance measures. Human Factors: The Journal of the Human Factors and Ergonomics Society, 35(4):737–743.

Paas, F. G. and Van Merriënboer, J. J. (1994). Variability of worked examples and transfer of geometrical problem-solving skills: A cognitive-load approach. Journal of educational psychology, 86(1):122.

Paivio, A. (1986). Mental representation: A dual-coding approach. Oxford Univ. Press, New York.

Peirce, C. S. (1877). The fixation of belief. 1877.

Plass, J. L., Moreno, R., and Brünken, R. (2010). Cognitive load theory. Cambridge University Press.

Rizzo, L., Dondio, P., Delany, S. J., and Longo, L. (2016). Modeling Mental Workload Via Rule-Based Expert System: A Comparison with NASA-TLX and Workload Profile, pages 215–229. Springer International Publishing, Cham.

Rubio, S., Díaz, E., Martín, J., and Puente, J. M. (2004a). Evaluation of subjective mental workload: A comparison of SWAT, NASA-TLX, and workload profile methods. Applied Psychology, 53(1):61–86.

Rubio, S., Díaz, E., Martín, J., and Puente, J. M. (2004b). Evaluation of subjective mental workload: A comparison of SWAT, NASA-TLX, and workload profile methods. Applied Psychology, 53(1):61–86.

Sátiro, A. (2006). Jugar a pensar con mitos: este libro forma parte del Proyecto Noria y acompaña al libro para niños de 8-9 años: Juanita y los mitos. Octaedro.

Schnotz, W. and Kürschner, C. (2007). A reconsideration of cognitive load theory. Educational Psychology Review, 19(4):469–508.

Schunk, D. H. (1996). Learning theories. Prentice Hall Inc., New Jersey, pages 1–576.

Seufert, T., Jänen, I., and Brünken, R. (2007). The impact of intrinsic cognitive load on the effectiveness of graphical help for coherence formation. Computers in Human Behavior, 23(3):1055–1071.

Sweller, J. (2009). What human cognitive architecture tells us about constructivism.

Sweller, J. (2010). Element interactivity and intrinsic, extraneous, and germane cognitive load. Educational psychology review, 22(2):123–138.

Sweller, J., Van Merriënboer, J. J., and Paas, F. G. (1998). Cognitive architecture and instructional design. Educational psychology review, 10(3):251–296.

Tabbers, H. K., Martens, R. L., and Merriënboer, J. J. (2004). Multimedia instructions and cognitive load theory: Effects of modality and cueing. British Journal of Educational Psychology, 74(1):71–81.

Tsang, P. S. (2006). Mental workload. In Karwowski, W., editor, International Encyclopedia of Ergonomics and Human Factors (2nd ed.), volume 1, chapter 166. Taylor & Francis.

Tsang, P. S. and Velazquez, V. L. (1996). Diagnosticity and multidimensional subjective workload ratings. Ergonomics, 39(3):358–381.

Tsang, P. S. and Vidulich, M. A. (2006). Mental workload and situation awareness. In Salvendy, G., editor, Handbook of Human Factors and Ergonomics, pages 243–268. John Wiley & Sons, Inc.


Valcke, M. (2002). Cognitive load: updating the theory? Learning and Instruction, 12(1):147–154.

van Bruggen, J. M., Kirschner, P. A., and Jochems, W. (2002). External representation of argumentation in CSCL and the management of cognitive load. Learning and Instruction, 12(1):121–138.

Van Gerven, P. W., Paas, F., Van Merriënboer, J. J., and Schmidt, H. G. (2004). Memory load and the cognitive pupillary response in aging. Psychophysiology, 41(2):167–174.

Vygotsky, L. S. (1986). Thought and language (rev. ed.).

Wickens, C. D. (1979). Measures of workload, stress and secondary tasks. In Mental workload, pages 79–99. Springer.

Wickens, C. D. (1984). The multiple resources model of human performance: Implications for display design. Technical report, Illinois Univ At Urbana.

Wickens, C. D. (2012). Workload assessment and prediction. MANPRINT: an approach to systems integration, page 257.

Wilson, G. F. and Eggemeier, T. F. (2006). Mental workload measurement. In Karwowski, W., editor, International Encyclopedia of Ergonomics and Human Factors (2nd ed.), volume 1, chapter 167. Taylor & Francis.

Young, M. S. and Stanton, N. A. (2004). Mental workload. In Stanton, N. A., Hedge, A., Brookhuis, K., Salas, E., and Hendrick, H. W., editors, Handbook of Human Factors and Ergonomics Methods, chapter 39, pages 1–9. CRC Press.

Young, M. S. and Stanton, N. A. (2006). Mental workload: theory, measurement, and application. In Karwowski, W., editor, International encyclopedia of ergonomics and human factors, volume 1, pages 818–821. Taylor & Francis, 2nd edition.

APPENDIX

Table 1: Trigger questions for supporting the development of cognitive skills - To be completed by instructor and to be answered by learners.

| Skill area | Skill | # | Trigger question |
|---|---|---|---|
| Conceptualisation | Classifying and ordering | 1 | From the information that you have received, what is the most important? |
| Conceptualisation | Conceptualising and defining | 2 | What does ... mean? |
| Conceptualisation | Giving examples | 3 | Could you provide some examples of ... ? |
| Conceptualisation | Comparing and contrasting | 4 | Could you explain the differences and the similarities between ... and ... ? |
| Investigation | Generating hypotheses | 5 | Which explanations could we provide to state that ... ? |
| Investigation | Finding alternatives | 6 | Are there different ways to state that ... ? |
| Reasoning | Giving reasons | 7 | Why do you think that ... ? |
| Reasoning | Connecting causes and effects | 8 | What is the cause of ... and what are its effects? |

Table 2: The NASA Task Load Index.

| # | Question |
|---|---|
| $NT_1$ | How much mental and perceptual activity was required (e.g. thinking, deciding, calculating, remembering, looking, searching, etc.)? Was the task easy or demanding, simple or complex, exacting or forgiving? |
| $NT_2$ | How much physical activity was required (e.g. pushing, pulling, turning, controlling, activating, etc.)? Was the task easy or demanding, slow or brisk, slack or strenuous, restful or laborious? |
| $NT_3$ | How much time pressure did you feel due to the rate or pace at which the tasks or task elements occurred? Was the pace slow and leisurely or rapid and frantic? |
| $NT_4$ | How hard did you have to work (mentally & physically) to accomplish your level of performance? |
| $NT_5$ | How successful do you think you were in accomplishing the goals of the task set by the lecturer? How satisfied were you with your performance in accomplishing these goals? |
| $NT_6$ | How insecure, discouraged, irritated, stressed and annoyed versus secure, gratified, content, relaxed and complacent did you feel during the task? |
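For illustration, an overall NASA-TLX score can be derived from the six answers above. The sketch assumes the weighted procedure associated with Hart and Staveland (1988): each dimension is rated on a 0 to 100 scale and weighted by the number of times it is preferred across the 15 pairwise comparisons between dimensions, so that the weights sum to 15. The function name, the dimension labels and the example values are hypothetical.

```python
# Dimension labels in the order of questions NT_1 to NT_6 (labels are assumed).
DIMENSIONS = ("mental", "physical", "temporal", "effort", "performance", "frustration")


def nasa_tlx_score(ratings: dict, weights: dict) -> float:
    """Weighted overall workload in [0, 100].

    ratings: answer per dimension on a 0-100 scale.
    weights: times each dimension was preferred in the 15 pairwise comparisons.
    """
    if set(ratings) != set(DIMENSIONS) or set(weights) != set(DIMENSIONS):
        raise ValueError("ratings and weights must cover the six dimensions")
    if any(not 0 <= ratings[d] <= 100 for d in DIMENSIONS):
        raise ValueError("each rating is assumed to lie in [0, 100]")
    if sum(weights.values()) != 15:
        raise ValueError("weights must sum to 15 (one per pairwise comparison)")
    return sum(ratings[d] * weights[d] for d in DIMENSIONS) / 15


# Made-up answers and weights for a single learner.
example_ratings = {"mental": 70, "physical": 10, "temporal": 50,
                   "effort": 60, "performance": 40, "frustration": 30}
example_weights = {"mental": 5, "physical": 0, "temporal": 3,
                   "effort": 4, "performance": 2, "frustration": 1}
example_tlx = nasa_tlx_score(example_ratings, example_weights)
```

A dimension that is never preferred in the pairwise comparisons, such as physical demand in a classroom task, receives a weight of zero and does not contribute to the overall score.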


Table 3: Workload Profile.

| # | Question |
|---|---|
| $WP_1$ | How much attention was required for activities like remembering, problem-solving, decision-making, perceiving (detecting, recognising, identifying objects)? |
| $WP_2$ | How much attention was required for selecting the proper response channel (manual - keyboard/mouse, or speech/voice) and its execution? |
| $WP_3$ | How much attention was required for spatial processing (spatially paying attention around you)? |
| $WP_4$ | How much attention was required for verbal material (e.g. reading, processing linguistic material, listening to verbal conversations)? |
| $WP_5$ | How much attention was required for executing the task based on the information visually received (eyes)? |
| $WP_6$ | How much attention was required for executing the task based on the information auditorily received? |
| $WP_7$ | How much attention was required for manually responding to the task (e.g. keyboard/mouse)? |
| $WP_8$ | How much attention was required for producing the speech response (e.g. engaging in a conversation, talking, answering questions)? |
