Applicability and Resilience of a Linear Encoding Scheme for Computing

Consensus

Michel Toulouse and B

`

ui Quang Minh

Vietnamese-German University, Binh Duong New City, Vietnam

Keywords:

Consensus Algorithms, Observability Theory, Attack Mitigation, Edge Computing.

Abstract:

Consensus algorithms have a set of network nodes converge asymptotically to a same state which depends

on some function of their initial states. At the core of these algorithms is a linear iterative scheme where

each node updates its current state based on its previous state and the state of its neighbors in the network.

In this paper we review a proposal from control theory which uses linear iterative schemes of asymptotic

consensus and observability theory to compute consensus states in a finite number of iterations. This proposal

has low communication requirements which makes it attractive to address consensus problems in a limited

resource environment such as edge computing. However it assumes static networks contrary to wireless edge

computing networks which are often dynamic and prone to attacks. The main purpose of this paper is to assess

the network conditions and attack scenarios where this algorithm can still be considered useful in practice to

address consensus problems in IoT applications. We introduce some new lower and exact bounds which

further improve the communication performance of the algorithm. We also have some technical contributions

on how to speed up mitigation of malicious activities and handling network instabilities. Numerical results

confirm the communication performance of the algorithm and the existence of scenarios where the system can

be considered cost effective resilient to errors injected intentionally or unintentionally.

1 INTRODUCTION

Consensus problems arise whenever a set of network

nodes seek to agree on a same value that depends

on parameters distributed across a network. They

are coordination problems such as rendezvous (Cortes

et al., 2006), agreement problems (Saber and Murray,

2003), leader-following problems (Peng and Yang,

2009), distributed fusion of sensor data (Xiao et al.,

2005), distributed optimization problems (Tsitsiklis

et al., 1986) and many others. Consensus problems

are often hidden in larger applications. Recently,

blockchain and distributed update consensus algo-

rithms have been proposed to address security and

privacy of IoT nodes data exchanges (Christidis and

Devetsikiotis, 2016; Bahga and Madisetti, 2016). We

also find consensus problems in service-oriented IoT

applications (Li et al., 2014), IoT resource allocation

(Colistra et al., 2014), and others (Pilloni et al., 2017;

Carvin et al., 2014). More consensus problems are

likely to arise as IoT expands its applications, some

of which, such as smart cities, because of security and

the sheer volume of data generated, may require close

to the sources data fusion and analytic.

At the core of consensus algorithms is a linear iter-

ative scheme where each node updates its current state

based on its previous state and the state of its neigh-

bors in the network. Assume the consensus function f

is for each node to compute the average sum of the ini-

tial states, i.e. f =

1

n

∑

n

i=1

x

i

(0), for n is the number of

network nodes and x

i

(0) the initial state of node i. Let

also assume the computer network is modeled as an

undirected graph G = (V,E) where V = {1, 2,. ..,n}

stands for the set of nodes, and where the edge set

E represents network links. Let N

i

denotes the set

of nodes adjacent to node i in G. The average sum

consensus problem is solved by a distributed average

consensus algorithm where each node i computes it-

eratively the following local average sum:

x

i

(k + 1) =

1

|N

i

| + 1

(x

i

(k) +

∑

j∈N

i

x

j

(k)), (1)

Local averages in (1) are initially poor approxima-

tions of the true average sum as they are based on a

partial sum of the initial states. However, local av-

erages get increasingly closer to the true average as

further iterations carry information from nodes that

are further away from node i. The local average rule

Toulouse, M. and Quang Minh, B.

Applicability and Resilience of a Linear Encoding Scheme for Computing Consensus.

DOI: 10.5220/0006806001730184

In Proceedings of the 3rd International Conference on Internet of Things, Big Data and Security (IoTBDS 2018), pages 173-184

ISBN: 978-989-758-296-7

Copyright

c

2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

173

in Equation (1) can be expressed in a more general

form:

x

i

(k + 1) = W

ii

x

i

(k) +

∑

j∈N

i

W

i j

x

j

(k), (2)

where W

i j

holds the coefficient of a weighted linear

combination of x

i

and the values x

j

, j ∈ N

i

(note, the

properties of W are summarized in the APPENDIX).

Equation (2) is the core linear model found in all dis-

crete linear consensus algorithms. The corresponding

network wide update rule is as followed:

x(k + 1) = W x(k),k = 0,1, .. . (3)

where x(k) represents the state of the nodes at itera-

tion k (x(k) = [x

1

(k), x

2

(k), ...,x

n

(k)]

T

).

In this paper we consider a consensus algorithm

that we call exact consensus because consensus func-

tions are computed exactly in a finite number of itera-

tions. This algorithm has first been proposed in (Sun-

daram and Hadjicostis, 2008). It is based on the same

linear iterative scheme as in Equation (2) but inter-

preted differently. For asymptotic consensus, Equa-

tion (2) is a computational step in a distributed algo-

rithm, it computes a refined approximation of the con-

sensus state for node i. For exact consensus, Equation

(2) is a linear encoding scheme.

This interpretation of the linear iterative scheme

in Equation (2) as an encoding scheme is analogue

to network coding protocols (Ahlswede et al., 2000)

where, at the network layer, packets from different

sources are recombined into a single packet to im-

prove network’s throughput. In Equation (2), states

x

j

(k), j ∈ N

i

, and state x

i

(k) are combined at iteration

k to yield a new state x

i

(k + 1). State x

i

(k + 1) en-

codes the states of iteration k which is then forwarded

to nodes j ∈ N

i

at iteration k + 1.

The second important contribution making exact

consensus possible is the parallel drawn between lin-

ear iterative rules such Equation (2) and observabil-

ity theory (Sundaram and Hadjicostis, 2013). Ob-

servability theory is not foreign to consensus algo-

rithms. It has been a central theme in approaches

proposed by researchers in the control theory commu-

nity to address security issues of asymptotic consen-

sus algorithms (Pasqualetti et al., 2009; Teixeira et al.,

2010; Silvestre et al., 2013). Observer-based tech-

niques in process control which detect faulty system

components are adapted to detect and isolate data fal-

sification attacks on asymptotic consensus iterations

(Pasqualetti et al., 2007).

In the context of exact consensus, observability

theory is applied to recover in a finite number of steps

the only guaranteed uncorrupted state of the system,

i.e. state x(0) (in this paper we assume the initial

states are genuine). The state x(0) is inferred by a

node i from observations of states x

j

(k), j ∈ N

i

that

flow through node i at each iteration of Equation (2).

Once the initial states have been recovered by a node

i, the consensus function is computed locally.

This paper is structured as follow. Section 2

briefly describes the exact consensus algorithm. In

Section 3 we introduce new lower and exact bounds

on the number of communication steps for the algo-

rithm, as well as a few technical approaches to com-

pute these bounds. Section 4, briefly summarizes

the algorithm in (Sundaram and Hadjicostis, 2011),

which is an extension of the algorithm in Section 2 to

mitigate the hacking of some nodes by malicious ac-

tors. Section 5 is an assessment of the performance

and potential of both algorithms based on numerical

tests and technical proposals to improve the resilience

of exact consensus. Section 6 concludes.

2 EXACT CONSENSUS

According to control theory, a system is observable

to an external observer if its internal states can be in-

ferred in finite time from observing a sequence of the

system’s outputs. In the context of the exact consen-

sus algorithm, the internal system states are x(k), and

the external observers are each node in the network.

Each node observes a sequence of states that flows

directly through it, i.e. its own state and the states

of its neighbors. Then each node infers x(0), from

the sequence of observations. Therefore we divide

the presentation of the exact consensus algorithm into

two sections. The observation phase deals with the

recording of states that flow through a node. The re-

covery phase is related to the recovery of initial states

from the stored encoded data.

2.1 Observation Phase

Let x be a state vector of n entries representing the

current state of an n network nodes, and W a weight

matrix. The following linear system models how in-

formation is recorded at node i:

x(k + 1) = W x(k)

y

i

(k) = C

i

x(k)

(4)

The relevant quantity in Equation (4) is y

i

(k) = C

i

x(k)

which specifies the values of x(k) observed by node i.

At each iteration k, y

i

(k) stores (deg

i

+ 1) states, i.e.

x

i

(k) and x

j

(k) ∈ N

i

(deg

i

= |N

i

|). Notations y

i

(k −1)

and y

i

(k) refer to two different sets of entries in y

i

.

The size of y

i

(0 : v − 1) is (deg

i

+ 1)v, where v is the

length of the sequence of observations performed by

node i. C

i

is a (deg

i

+ 1) × n matrix with a single 1 in

IoTBDS 2018 - 3rd International Conference on Internet of Things, Big Data and Security

174

each row, indicating the value of vector x(k) stored in

y

i

(k) (entry C

i

[k, j ] = 1 if j ∈ N

i

, otherwise C

i

[k, j ] =

0).

As an example, consider the ring network in Fig-

ure 1 and the system described in Equation (4).

1 2

3

4

Figure 1: Ring Network.

Assume the weight matrix W is the same Metropolis-

Hasting matrix (16) for computing asymptotic aver-

age consensus. For a ring of 4 nodes, the coefficients

of the Metropolis-Hasting matrix are the following:

W =

0.33 0.33 0 0.33

0.33 0.33 0.33 0

0 0.33 0.33 0.33

0.33 0 0.33 0.33

We focus on node 1 as external observer. The corre-

sponding C

1

matrix is as followed:

C

1

=

1 0 0 0

0 1 0 0

0 0 0 1

,

The entries of y

1

are obtained as follow. We know

from linear algebra that x(k) = W

k

x(0) for W

k

the

product of matrix W with itself k times. Therefore,

y

1

(0) = C

1

W

0

x(0) = [x

1

,x

2

,x

4

]

T

, y

1

(1) = C

1

W x(0),

y

1

(2) = C

1

W

2

x(0) and y

1

(v − 1) = C

1

W

v−1

x(0). Af-

ter v observations, the column vector y

1

would have

stored the 3v states that have passed through node 1.

The observation sequence of node i fills the col-

umn vector y

i

(0 : v − 1). As the example above sug-

gests, the observation sequence is a mapping x(0) →

y

i

(0 : v − 1) that can be represented in matrix form

called the observability matrix, denoted as O

i,v−1

:

O

i,v−1

=

C

i

C

i

W

C

i

W

2

.

.

.

C

i

W

v−1

(5)

The observability matrix for node 1 in the ring net-

work of Figure 1 and v = 2 is O

1,1

=

C

1

C

1

W

=

1 0 0 0

0 1 0 0

0 0 0 1

0.33 0.33 0 0.33

0.33 0.33 0.33 0

0.33 0 0.33 0.33

O

i,v−1

is a mathematical expression that computes

the data recorded during the observation sequence at

node i:

y

i

(0)

y

i

(1)

y

i

(2)

.

.

.

y

i

(v

i

− 1)

=

C

i

C

i

W

C

i

W

2

.

.

.

C

i

W

v

i

−1

x(0) (6)

The observability matrix is needed to decode the data

in observation vector y

i

(0 : v − 1) in order to recover

x(0) during the recovery phase.

2.2 Recovery Phase

After the observation phase, the column vector y

i

(0 :

v − 1) of node i stores the initial state of all the

other nodes in the network, though this information is

stored in an encoded form. In order to compute a con-

sensus function f , either f is mapped into an equiva-

lent function f

0

i

that can be applied on the arguments

provided by y

i

or a decoding function is applied on y

i

that recovers the initial state x(0).

2.2.1 Mapping f into f

0

i

The consensus f is mapped into an equivalent func-

tion f

0

i

expressed in matrix form Γ

i

. First f is written

in a matrix form Q. From linear algebra, if Q is in

the row space of the matrix O

i,v−1

, then linearly inde-

pendent rows of O

i,v−1

can be treated as a base for Q.

The matrix Q is then expressed in terms of this new

base. The new expression of Q is found by solving

the following system of linear equations:

O

T

i,v−1

Γ

T

i

= Q

T

(7)

where Γ

i

computes f (x(0)) using the arguments avail-

able in the observation vector y

i

(0 : v − 1):

Γ

i

y

i

(0)

y

i

(1)

y

i

(2)

.

.

.

y

i

(v − 1)

= Γ

i

O

i,v−1

x(0) = Qx(0). (8)

As an example consider the consensus function

f =

1

n

∑

4

i=1

x

i

(0) and the four nodes ring network in

Figure 1. In matrix form, f is the row vector Q =

[0.25,0.25, 0.25,0.25]. Solving for Q and node 1, we

have the system of linear equations O

T

1,2

Γ

T

1

= Q

T

:

1 0 0 0.33 0.33 0.33

0 1 0 0.33 0.33 0

0 0 0 0 0.33 0.33

0 0 1 0.33 0 0.33

Γ

T

1

=

0.25

0.25

0.25

0.25

Applicability and Resilience of a Linear Encoding Scheme for Computing Consensus

175

This system has infinitely many solutions, one is

[−0.66,−0.33,−0.75,2,−0.25,1] which expresses Q

in terms of a set of linearly independent rows of O

1,2

as a new base for Q.

Assume x(0) = [1,2,3, 4], therefore

f =

1

n

∑

4

i=1

x

i

(0) = 2.5. We consider again the

case of node 1. During the observation phase, after

the first observation, the content of the column vector

y

1

(0) is

y

1

(0) =

1 0 0 0

0 1 0 0

0 0 0 1

1

2

3

4

=

1

2

4

At the second observation, the values stored in

y

1

(1) = C

1

W x(0)

0.33 0.33 0 0.33

0.33 0.33 0.33 0

0.33 0 0.33 0.33

1

2

3

4

=

2.31

2.0

2.66

After two observations, the column vector y

T

1

=

[1,2, 4,2.31,2,2.66]. According to Equation (8) the

consensus value can be computed as

1

n

∑

4

i=1

x

i

(0) =

Γ

i

y

i

. Indeed, the product of Γ

1

y

1

= 2.46 ≈ 2.5, the

difference is accounted for by rounding errors.

2.2.2 Recovering the Initial State x(0) from y

i

The procedure to recover x(0) simply consists to solve

Equation (8) for the identity matrix I

n

, assuming I

n

is

the row space of O

i,v−1

. If this the case, then I

n

is

expressed in terms of a linear combination of rows

in O

i,v−1

, from which Γ

i

y

i

returns x(0). Consider the

four nodes ring network in Figure 1, to compute the

average sum function, first the identity matrix I

4

is

expressed in a base provided by the O

1,2

matrix, by

solving the system of linear equations O

T

1,2

Γ

T

1

= I

4

:

1 0 0 0.33 0.33 0.33

0 1 0 0.33 0.33 0

0 0 0 0 0.33 0.33

0 0 1 0.33 0 0.33

Γ

T

1

= I

4

The solution of this system of equations is as follows:

Γ

T

1

=

0.917 -0.028 -0.836 -0.082

-0.083 0.953 -0.336 -0.033

-0.083 -0.008 -0.336 0.869

0.250 0.083 -0.497 0.249

0.000 0.058 1.515 -0.149

0.000 -0.058 1.515 0.149

Assuming the initial values x

i

(0) of nodes 1 to 4 are

the same as above, then y

T

1

= [1,2, 4,2.31, 2,2.66], the

same as for the average sum example. One can verify

that Γ

1

y

1

= [0.9965,1.9994,3.0598,4.0015], round-

ing errors accounting for the differences. Node 1 has

then learned the initial state of the system, therefore

node 1 can computed directly the average sum us-

ing the f =

∑

n

i=1

x

i

(0) or almost any other function

of x(0).

The difference between mapping f to f

0

and re-

covering x(0) from y

i

is mainly in the number of ob-

servations needed to compute each approach. As the

number of columns in I

n

is the same as the number

of columns in O

i,v−1

, for I

n

to be in the row space of

O

i,v−1

, the matrix O

i,v−1

has to be full column-rank.

This usually requires more observations compared to

solving for a matrix representation of the function f .

On the other hand, computing the consensus function

f directly from the arguments in y

i

requires a mapping

f → f

0

for each consensus function, which could be

tedious. It is more simple to recover x(0), from which

any consensus function can be computed.

The observability matrix O

i,v−1

as well as Γ

i

are

pre-computed for each node prior to the execution of

the observation phase. The constitution of the observ-

ability matrix O

i,v−1

depends on the weight matrix W ,

the matrix C

i

and the value of v which are known prior

to the observation phase. Γ

i

depends on the function

Q and O

i,v−1

which are also known prior to the obser-

vation phase. Only Γ

i

needs to be stored by node i,

the observability matrix is not needed during or after

the observation phase.

In this brief description of the exact consensus al-

gorithm several issues have been omitted on relations

existing between the value of v, the coefficients of the

weight matrix W , the rank of the observability matrix

and the existence of a base in the row space of O

i,v−1

for a given consensus function f . Discussions and re-

sults about these issues can be found in (Sundaram,

2009). One open issue is finding better bounds on v.

We address this issue in the next section.

3 BOUNDS ON THE NUMBER OF

OBSERVATIONS

The length v of the sequence of observations is a com-

munication cost that exact consensus incurs prior to

compute the consensus function. It is important to re-

duce the number of observations in order to improve

the efficiency of the algorithm. In this section we

briefly recall known results in the literature on upper

bounds for v. Then, we address open issues regard-

ing the more difficult task of defining lower and exact

bounds on the size of O

i,v−1

.

In this section, we only consider the general case

of Q = I, where we focus on the recovery of the initial

IoTBDS 2018 - 3rd International Conference on Internet of Things, Big Data and Security

176

state. We also assume that W is a random weight ma-

trix. In order to recover the initial state of every node

successfully, it is necessary and sufficient to have a

full column-rank observability matrix O

i,v−1

for all i.

It is proven that random weight matrices can gener-

ate a full column-rank O

i,v−1

for all i with probability

equal to 1 (Sundaram, 2009).

3.1 Upper Bounds

The number of nodes n in the network is a trivial up-

per bound on the number of observations. According

to the local update rule in Equation (1), at iteration

k = 0, the initial state x

i

(0) of any node i is pushed

to all its neighbors. For any node j ∈ N

i

, at itera-

tion k = 1, the value x

i

(0) is pushed to all the neigh-

bors of j, therefore after 2 iterations x

i

(0) has reached

all the nodes at distance 2 for node i. In at most n

iterations, x

i

(0), the initial value of node i, thought

in an encoded form, will have been pushed to all the

nodes in the network. In practice a better upper bound

can be computed by noticing that a node i receives

the initial values of all its neighbors in one iteration.

Therefore, after the first iteration, each node i has re-

ceived deg

i

+1 initial values (including its own initial

value). This yields the following upper bound on v

(Sundaram and Hadjicostis, 2008):

v ≤ n − deg

i

+ 1 (9)

3.2 Lower Bounds

A trivial lower bound on the dimension of O

i,v−1

is

the diameter of the graph (Sundaram and Hadjicostis,

2008). The intuition is that if the initial value of some

node j does not reach node i, it cannot recover the

state of node j by any mean. We call this lower bound

the data availability requirement. Indeed, if this re-

quirement does not hold, this means there exists two

nodes i and j where the observations of node i do not

depend on x

j

(0). This translates into an observability

matrix O

i,v−1

with all zero entries at the j-th column

and therefore O

i,v−1

is not full column-rank.

However, the above lower bound is not tight in

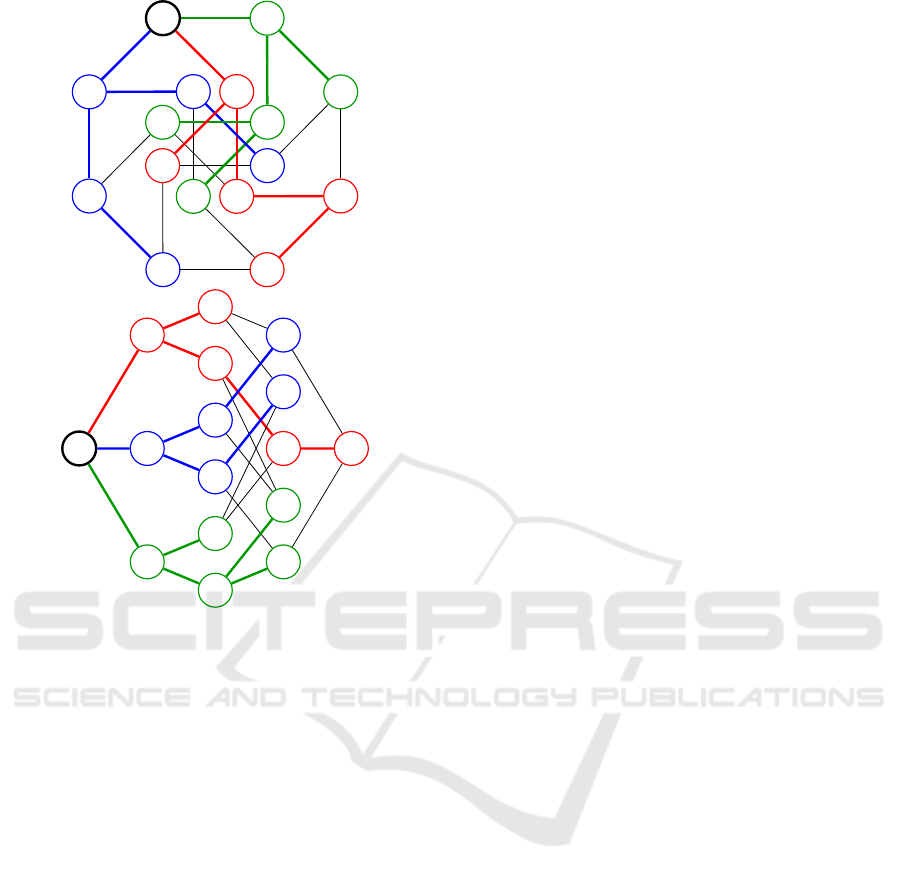

many cases. Consider a torus network such as the

network of 25 nodes in Figure 2. The diameter of this

graph is 4. As torus is a regular graph, the dimensions

of the matrix C

i

are the same for all nodes, it is 5 ×

25 for the graph in Figure 2. If the value of v is set

to 4, the column vector y

i

for any node i is 5v = 20,

the dimension of O

i,v−1

is 20 × 25, this observability

matrix has only 20 rows. Thus O

i,3

cannot be full

column-rank as the number of rows is less then the

number of columns.

Figure 2: Torus network with 25 nodes.

This brings us to introduce a second condition on

the minimum length the observation sequence must

satisfy, we call this second condition the intake ca-

pacity requirement. In one observation, a node cannot

gather more states than the number of links adjacent

to it in the network. The lower bound according to this

requirement could be formulated as d

n

min

i∈V

(deg

i

)+1

e,

which implies that the observability matrix O

i,v−1

for

any node would have at least n rows. A better bound

can be obtained by eliminating the rows which are

obviously linearly dependent on other rows. Notice

that, by definition, the state x

i

(k) is a linear combina-

tion of its state x

i

(k −1) and the states of its neighbors

x

j

(k−1), j ∈ N

i

of the previous iteration k −1. There-

fore, at node i, x

i

(k) can be eliminated (except for the

initial one x

i

(0)), thus there are only at most deg

i

in-

dependent states that are observed at each iteration.

Put everything together, the lower bound correspond-

ing to this requirement is:

v ≥

n − 1

min

i∈V

(deg

i

)

(10)

However, this requirement does not cover the data

availability requirement. Consider the graph in Fig-

ure 3. In this case, the minimum degree is 2 and

thus, the lower bound according to the intake capac-

ity requirement is 3, but the diameter is 4. These

two requirements generate two different lower bounds

which both need to be satisfied. Neither of these two

bounds is tight however for the graph in Figure 3

which requires empirically a sequence of 5 observa-

tions.

Tight lower bounds must also consider local prop-

erties and the topology of the graph. Intuitively, bot-

tlenecks are one of those properties. Bottlenecks for

a particular node are those nodes in the graph which

limit the intake capacity. For example, in Figure 3,

with respect to node 0, node 2 is a bottleneck. Though

node 0 has two neighbors, this node can only extract

one independent state observation of the part of the

Applicability and Resilience of a Linear Encoding Scheme for Computing Consensus

177

2

0

1

3

4

5

6

Figure 3: Example graph.

2

0

1

3

4

5

6

Figure 4: The spanning tree of the graph in Fig. 3.

graph beyond node 2 (i.e. nodes 3 to 6). To exam-

ine this analytically, consider x

1

(k), k ≥ 1, which is

the linear combination of x

j

(k − 1), j ∈ {0,1,2}. All

those three states derived from observations of node 0

at iteration k − 1, therefore x

1

(k) always depends on

existing observations of node 0 from iteration 1 and

can be eliminated. This implies that observing the

state of a same node twice would contribute nothing

into recovering x(0).

We derive a method to determine an exact lower

bound on v for any graph, which is correct empiri-

cally, but with no proof. This method was also men-

tioned in (Sundaram, 2009) as a conjecture. For a spe-

cific node i, draw a spanning tree rooted at i which has

the largest branch whose size is minimum. The num-

ber of observations requires at that particular node

is equals to the size of that largest branch. For the

graph in Figure 3, and node 0, the number of itera-

tions (which is 5) could be explained by looking at

Figure 4 (node 0 is colored in red, the blue branch is

the largest one and contains 5 edges, the green branch

is the other branch of the tree).

3.3 Exact Bound for Symmetric Graphs

A symmetric graph is a graph where any pair of adja-

cent nodes could be mapped to another pair of adja-

cent nodes (Babai, 1995). Formally, in a symmetric

graph, for any two pairs of adjacent nodes (u

1

,v

1

)

and (u

2

,v

2

), there exists an automorphism f such

that f (u

1

) = u

2

and f (v

1

) = v

2

. Such a graph is

both vertex-transitive (implying regularity) and edge-

transitive, and the connectivity of any node is exactly

d where d is the degree of every node in the graph.

The lower bound defines by the intake capacity

requirement is tight for symmetric graphs, which is

n−1

d

. While we still don’t have a formal proof,

the intuition could be explained using the result in

(Sundaram, 2009), which states that the minimum

size of the largest branch is the upper-bound on the

number of observations with probability 1 (where the

lower-bound is kept as a conjecture). In a symmet-

ric graph, there exists a spanning tree whose branches

have equal size or only have a difference of 1 (tree

with balanced branches). Because a symmetric graph

is a vertex-transitive graph (where any node has the

same role), we only need to analyze a single node.

To draw such a spanning tree, consider any node

as a reference node v

0

. The other nodes are organized

into layers based on the distance between them and

the reference node. Assign the nodes at distance 1

different labels (branch label). For any node at dis-

tance dist + 1, its label will be the union of the labels

of its adjacent nodes at distance dist. This structure

is shown in figure 5 and has some noticeable prop-

erties. First, because the connectivity of any node is

exactly d, any layer (except for the last one) must have

at least d nodes. Second, at any layer, the label counts

of each branch are equal. This is because we can map

any pair (v

0

,v

1

) to (v

0

,v

2

) where v

1

, v

2

are any pair

of nodes at distance 1, thus the labels of v

1

could be

map bijectively to the labels of v

2

. Based on these two

properties, we can manually draw a tree with balanced

branches, however we don’t have yet an explicit pro-

cedure to draw this tree, though we confirmed this ob-

servation with many well-known symmetric graphs,

as shown in figures 5, 6, and 7.

13

3

23

1

0

2

14

4

24

0

1

2

3

4

13

14

23

24

Figure 5: The distance-based representation of torus 3 × 3.

0

2

2

3

3

1

2

1 1

3

0

1

2

3

1

1

2

2

3

3

Figure 6: The distance-based representation of Petersen

graph.

IoTBDS 2018 - 3rd International Conference on Internet of Things, Big Data and Security

178

0

2

2

12

123

13

3

3

2

123

1

23

1

123

3

1

0

1

2

3

1

1

2

2

3

3

12

123

13

123

23

123

Figure 7: The distance-based representation of M

¨

obius-

Kantor graph.

4 RESILIENCE OF EXACT

CONSENSUS

Implementations of the algorithm describe in Section

2 are susceptible to be hacked by malicious actors

taking control of some nodes from which the current

state of the nodes will be updated incorrectly. This

form of attacks has been addressed in (Sundaram and

Hadjicostis, 2011) where a solution approach is pro-

posed. In this section we briefly describe the pro-

posed attack model, the procedure detecting the exis-

tence of compromised nodes, the recovery procedure

of x(0) in the presence of malicious actors and finally

the identification and removal of compromised nodes.

4.1 Modeling Malicious Activities

Attacks are modeled as an additive error u

i

(k) to the

linear iterative Equation (2) at any given iteration k of

node i :

x

i

(k + 1) = W

ii

x

i

(k) +

∑

j∈N

i

W

i j

x

j

(k) + u

i

(k), (11)

In matrix form, referring to the possibility that several

nodes may be compromised, this is modeled as

x(k + 1) = W x(k) + B

F

u

F

(k) (12)

where F = {i

1

,i

2

,. ..,i

f

} is the set of the compro-

mised nodes, B

F

=

e

i

1

e

i

2

·· · e

i

f

(where e

l

de-

notes a unit vector with entry l storing 1) and u

F

(k) =

u

i

1

(k) u

i

2

(k) ·· · u

i

f

(k)

T

. From this, the obser-

vation vector of a node i at iteration k is written as a

linear combination of the initial states and the injected

additive errors:

y

i

(0 : k) ,

y

i

(0)

y

i

(1)

.

.

.

y

i

(k)

= O

i,k

x(0) + M

F

i,k

u

F

(0)

u

F

(1)

.

.

.

u

F

(k − 1)

(13)

in which M

F

i,k

is the fault matrix corresponding to the

subset F of compromised nodes, defined as:

M

F

i,k

,

0 0 ·· · 0

C

i

B

F

0 ·· · 0

C

i

W B

F

C

i

B

F

·· · 0

.

.

.

.

.

.

.

.

.

.

.

.

C

i

W

k−1

B

F

C

i

W

k−2

B

F

·· · C

i

B

F

4.2 Detection

At the end of the observation phase, the algorithm run

tests to detect malicious activities. Let κ be the vertex-

connectivity of the graph. Assume that at some itera-

tion L, for every subset S with size less then κ/2, O

i,L

and M

S

i,L

satisfy this condition:

ρ

h

O

i,L

M

S

i,L

i

= n + ρ

M

S

i,L

(14)

which means that every column in O

i,L

is linearly in-

dependent with the columns in M

S

i,L

and O

i,L

is full

column-rank (ρ denotes the rank of a matrix). If the

coefficients of the weight matrix W are generated ran-

domly, this condition will be satisfied with probability

1. From Equation (13), if there is any compromised

node in the system, the following test will fail:

ρ

O

i,L

y

i

(0 : L)

= ρ (O

i,L

) = n (15)

i.e. if there is no abnormal event happening in the sys-

tem, the observation vector y

i

(0 : L) is a linear com-

bination of columns in O

i,L

. Note that this is only

true when L is large enough such that O

i,L

has already

achieved full column-rank. If the test is passed, the

recovery algorithm in section 2 could be used. Other-

wise, a different recovery process must be applied to

recover x(0).

Applicability and Resilience of a Linear Encoding Scheme for Computing Consensus

179

The test in Equation (14) is executed prior to the

observation phase. If f , the number of potentially

compromised nodes, is pre-determined (for reasons

described in Section 5) than the test in Equation (14)

only needs to be applied to subsets S of size f . Once

an L has been found for which Equation (14) is satis-

fied for all subsets S, then the length of the observa-

tion phase is known, it is L + 1. We found empirically

(see Section 5) that Equation (14) is successful even

when condition S with size less then κ/2 is not satis-

fied.

4.3 Recovery

If the presence of malicious activities has been de-

tected, legitimate nodes could recover the initial states

from their observation vector. The following proce-

dure describes this recovery process.

Algorithm 1: Recovery algorithm.

1. Let f be equal to the (assumed) number of mali-

cious nodes.

2. For each combination S of f nodes among n:

(a) If this test is passed:

ρ

h

O

i,L

M

S

i,L

y

i

(0 : L )

i

= ρ

h

O

i,L

M

S

i,L

i

then remember S and go to step 3.

(b) If there is no combination left (all

n

f

tests have

failed), increase f and repeat step 2.

(c) Otherwise (there are still some combinations),

continue to the next combination.

3. Find N

S

i,L

whose rows form the basis for the left

null space of M

S

i,L

.

4. Calculate P

S

i,L

=

N

S

i,L

O

i,L

+

N

S

i,L

where A

+

de-

notes the pseudo-inverse of matrix A.

5. Recover the initial state x(0) = P

S

i,L

y

i

(0 : L).

4.3.1 Malicious Nodes Identification

If the initial state can be recovered using x(0) =

P

S

i,L

y

i

(0 : L), then the modified state at iteration 1

could be calculated in the same way:

x(1) = P

S

i,L

y

i

(1 : L + 1)

Compare it with the expected state at iteration 1

x(1) = W x(0), the malicious nodes at iteration 1

could be identified. Conduct the consensus pro-

tocol and apply the same strategy until iteration

L + l, if a node j is malicious at iteration l, then

x

j

(l) 6= x

j

(l)

where x(l) = P

S

i,L

y

i

(l : L + l) and x(l) = W x(l − 1).

Note that the next expected state is calculated based

on the modified state, because that is the state non-

malicious nodes use in reality.

5 ASSESSMENT OF EXACT

CONSENSUS

In this section we assess the exact consensus algo-

rithms in sections 2 and 4 for their communication

and computation cost, as well as resilience to attacks

and network changes. This assessment derived from

observations on the design of the algorithm as well

as numerical results. Numerical results have been ob-

tained from the insertion of each of the two exact con-

sensus algorithms in a simulator for consensus based

network intrusion detection (Toulouse et al., 2015).

For these simulations, we have run tests with 5 differ-

ent regular network topologies: rings with 9 and 25

nodes, 2-D torus with 9 and 25 nodes and the Petersen

graph (10 nodes 15 edges).

5.1 No Malicious Activities

In this section we assess the performance of the al-

gorithm describes in Section 2. Column ”Steps” in

Table 1 lists the number of observations performed to

recover x(0). Column ”Time” displays the cost to re-

cover x(0) = Γ

i

y

i

, expressed in millisecond on a dual-

core 2.00 GHz CPU. The time results reported are av-

eraged over 100000 runs of exact consensus. Clearly,

the computation time is negligible, which makes the

time complexity of this algorithm depends solely on

the number of observations. Columns 10

−2

and 10

−10

report the convergence speed (number of iterations) of

an asymptotic consensus algorithm which was sub-

stituted to exact algorithm in the simulations. The

values in column 10

−2

are the number of iterations

of the asymptotic algorithm to obtain a solution with

a precision no less than 10

−2

from the true solution

(similarly for the column 10

−10

). We see that that

Table 1: Convergence speed: Exact vs Asymptotic.

Topology Steps Time 10

−2

10

−10

Ring 9 4 0.131 20 129

Ring 25 12 0.219 136 1007

Torus 9 2 0.008 5 26

Torus 25 6 0.128 12 69

Petersen 3 0.014 7 33

IoTBDS 2018 - 3rd International Conference on Internet of Things, Big Data and Security

180

the number of iterations for exact consensus is sub-

stantially lower compared to asymptotic consensus.

It also worth noticing that the values in the column

”Steps” follow the intake capacity lower bound tightly

for the five network topologies.

The performances of this algorithm are quite

good. We now analyze the impact on the consensus

function evaluation of errors that are introduced dur-

ing the observation phase either intentionally (hack-

ers) or unintentionally (system instability). Applica-

tions often have some tolerance to error in the consen-

sus function evaluation, so maybe, as for asymptotic

algorithms, this algorithm can be used to compute ap-

proximations of the true consensus value.

Table 2 records the errors in the recovered ini-

tial states x(0) when every node injects random errors

within some range. The header of each column indi-

cates the value of the error injected. If a is the header

of a column, then the injected error is drawn randomly

and uniformly in the range [−10

a

,10

a

]. The entries of

the table contain the error in x(0) represented in log

10

form, so if the value in an entry of Table 2 is b, then

the raw error is 10

b

. The raw error is the L

2

norm be-

tween the true x(0) and the recovered one. Results in

Table 2 are based the same number of observations as

in Table 1 for exact consensus.

The error in Table 2 grows linearly in the injected

data range with coefficient 1. Fixing the linear coeffi-

cient (slope) at 1, let y be the injection range and x be

the error (in log form), we have the model y = x + β

where β is the base error. The base error is the intrin-

sic error of the system, which depends on the topol-

ogy and represents the rounding error occurring dur-

ing the calculation. The base error is estimated by

β = y − x where y and x is the mean of y and x. The

base error values are listed in Table 3.

In table 3, a base error value of 4.01 means the

system will lose over 4 decimal places of precision,

no matter which floating-point format is being used.

If the single-precision format (32-bit) is used with

around 7 decimal places of precision, the recovered

x(0) will only have 3 meaningful decimal places left.

We observe from Table 3 that network topology with

fewer degree performs worse than the one with more

degree (e.g. Ring 9 < Petersen (10) < Torus 9, where

a < b means a is worse than b). We also observe that

the more nodes a network has, the more base error it

will suffer. Overall, the results in Table 3 pretty much

preclude the application of the exact consensus algo-

rithm in Section 2 in an environment characterized by

system instability and malicious activities.

5.2 Coping with Resilience Issues

In this section we first analyze numerically the strat-

egy described in Section 4 for coping with malicious

activities in the network. Then we discuss the applica-

bility of this algorithm to handle attacks and network

instability.

Table 4 reports the performance of the algorithm

in Section 4 for one and two compromised nodes.

The column ”Compromised” indicates the number

of compromised nodes in the network. The col-

umn “Subsets” indicates the number

n

f

of subsets of

nodes the algorithm may have to examine before find-

ing the subset of compromised nodes (step 2 of the re-

covery algorithm, Section 4.3). The column ”Steps”

displays the number of observations performed to re-

cover x(0) (this number is pre-computed as defined in

Section 4.3). The last three columns record the empir-

ical time to execute the recovery algorithm, measured

in millisecond. The best case happens when the first

subset always passes the test in step 2a of the recov-

ery algorithm and the same for the worst case with the

last subset. For the average time, the malicious nodes

are selected randomly at every run of the exact con-

sensus. Time is averaged over 1000 consensus runs.

In Table 4, “rank test is degenerate” means the

system cannot calculate the rank of the matrix reli-

ably anymore. The system uses SVD to calculate

the rank. Rank is degenerated when the precision of

the floating-point number (we used double floating-

point number) is not enough for the system to de-

cide whether a singular value is extremely small or

it is zero. For the cases of ring 9 and ring 25 topolo-

gies, if there are 2 compromised nodes, this will sep-

arate the network, it is then mathematically impos-

sible to recover x(0) at any node. Results in Table 4

show computation time greatly increases for scenarios

where there are 2 compromised nodes. The minimum

number of observations to recover x(0) also increases

rapidly. For Torus 9, it goes from 2 when no attack

to 3 and 5 respectively for one and two compromised

nodes, similarly for Torus 25 with 6-8-12.

Note that we have run the recovery algorithm with

2 attackers for the Petersen graph which has a vertex

connectivity κ = 3 and the 2D-Torus networks (9 and

25 nodes) with κ = 4. In both cases f = 2 ≥ κ/2.

However, in both cases we have been able to find an L

such that the test in Equation (14) is satisfied, allow-

ing the recovery algorithm to compute x(0) for these

last 3 network topologies.

5.2.1 Computational Time-complexity

The most time-consuming part of exact consensus in

Section 4 is step 2 of the recovery procedure. The

Applicability and Resilience of a Linear Encoding Scheme for Computing Consensus

181

Table 2: Robustness of exact consensus against error injection.

Topology -14 -13 -12 -11 -10 -9 -8 -7 -6 -5 -4 -3 -2 -1

Ring 9 -10.6 -9.0 -7.4 -7.8 -5.2 -5.3 -4.1 -2.3 -1.9 -1.7 -0.6 1.8 2.1 3.1

Ring 25 -2.1 -3.2 -0.9 -1.0 -0.2 0.5 2.2 2.9 3.4 5.2 5.3 7.2 8.3 9.5

Torus 9 -11.8 -10.8 -10.0 -9.3 -8.4 -6.6 -5.7 -5.0 -4.4 -3.0 -0.2 -0.7 0.4 0.8

Torus 25 -10.5 -9.6 -8.5 -7.7 -5.9 -5.7 -4.7 -2.7 -1.5 -1.6 -0.8 0.8 1.3 3.2

Petersen -10.4 -10.6 -9.8 -8.9 -7.0 -6.0 -5.6 -4.2 -3.4 -1.1 -2.0 -1.0 0.3 2.1

Table 3: Base error for each topology in exact consensus.

Topology Base error β

Ring 9 4.01

Ring 25 10.15

Torus 9 2.17

Torus 25 3.64

Petersen 2.68

number of subsets that may need to be tested is

n

f

,

therefore the algorithm is exponential in f . In prac-

tice, base on the results in Table 4, this algorithm

can only detect 2 malicious nodes or less. However,

the time-complexity of the recovery algorithm grow

in polynomial time in terms of the number of nodes

n, but the order is high. The number of subsets for

a specified n is O(n

f

), but matrix multiplication and

SVD also have their own time-complexity (which is

O(n

3

)), which is in O(n

f +3

) in total. If the combina-

tion S is a superset of the set of malicious nodes F ,

S will still satisfy the test in Step 2a of the recovery

algorithm. Therefore, if there is high probability that

2 malicious nodes will appear, we can set the starting

of f to f = 2, and then use an alternating combination

enumeration such as {0, 1},{2, 3},{4,5},.. . to cover

all the subsets with 1 node in a first few trials. In

this way, the computational time trying for the case

f = 1 could be saved without any significant trade-off

in performance. The same strategy could be applied

for f = 3, however, it is harder to design an enumer-

ation strategy which covers all the subsets of 2 nodes

without repeating some subsets.

5.3 Dynamic Networks

The recovery of the initial states at a given node de-

pends on information encoding rather than states dif-

fusion. If changes occur in the network topology, se-

quences of information encoding will take place at

run time that are not modeled in the pre-existing ob-

servability matrix. Despite some proposals for ex-

act consensus in dynamic networks (Ahmad et al.,

2012), none can actually work reasonably in a net-

work where node/link failures and removal may occur

any where without informing the whole network. The

proposal in (Ahmad et al., 2012) is limited to cyclic

network topology changes. It assumes the existence

of a pre-computed observability matrix for specific

sequence of network topologies, changes in the net-

work have take place according the sequence embed-

ded in the observability matrix otherwise recovery of

initial states will fail.

Our proposal to handle dynamic networks is to

consider changes in the network configuration as a

set of malicious nodes and apply the expensive re-

covery process of the algorithm in Section 4. Prac-

tically, as the detection algorithm can only recover

the initial state with 2 malicious nodes, it’s equiva-

lent to 2 node failures or 1 link failure. The observa-

tion phase of exact consensus is relatively short, with

an upper bound of n iterations. In contrast, the con-

vergence speed of asymptotic consensus algorithms is

slow, needing several iterations to compute a consen-

sus solution. Thus, despite that exact consensus can-

not tolerate many network changes, its speed makes

modifications in the network configuration less likely

to happen. And even if the system cannot recover in

an initial observation phase, it can initiate a second

observation phase to retransmit the data, whose total

number of iterations will still be less than the time

for a single asymptotic consensus phase. Therefore,

exact consensus is still a useful solution in dynamic

networks, which is applicable when the cost of trans-

mission is high and the network is sufficiently stable.

6 CONCLUSION

The present paper describes an exact consensus al-

gorithm which has proven low communication cost.

Our numerical results further indicate that it should

definitely be preferred to asymptotic consensus algo-

rithms when the physical network is stable, reliable

and secure. Further, as initial states are completely

recovered by each node, different nodes can compute

different functions of the initial states, they are not

limited to compute the same consensus function. This

extends the range of IoT applications of this algorithm

beyond those related to consensus problems.

Exact consensus has a mathematically sound mit-

igation strategy to recover initial states from nodes

compromised by malicious actors. Unfortunately it is

known that this strategy does not scale well. Our tests

IoTBDS 2018 - 3rd International Conference on Internet of Things, Big Data and Security

182

Table 4: Exact consensus speed test result.

Compromised Topology Subsets Steps Best Average Worst

1

Ring 9 9 8 0.429 0.851 1.398

Ring 25 25 Rank test is degenerate

Torus 9 9 3 0.288 0.599 0.825

Torus 25 25 8 1.124 5.608 9.991

Petersen 10 5 0.361 0.797 1.289

2

Ring 9 Theoretically impossible

Ring 25 Theoretically impossible

Torus 9 36 5 0.458 2.714 5.186

Torus 25 300 12 2.345 133.514 272.231

Petersen 45 8 0.659 5.909 10.695

and analysis have shown that this strategy is only rea-

sonably applicable for attack scenarios involving 1 or

2 compromised nodes or for network configurations

that are impacted by no more than 2 nodes failures or

disappearances or by one link failure. However these

scenarios are not too constraining. The algorithm has

a procedure to identify and phase out compromised

nodes once consensus has been computed, so that

malicious actors will not accumulate in the system.

The very short communication phase means that pro-

portionally very few configuration changes can occur

during a communication phase.

An open question is looming in the context of dis-

tributed edge computing. Beyond the above limited

scenarios of dynamic networks, can the linear en-

coding scheme of exact consensus be a substitute to

the less communication efficient but more robust val-

ues diffusion gossip and flooding algorithms? Likely

some answers to this question can be found in re-

search on time-varying multihop networks in network

coding and applications of observability theory to

complex networks. This is the direction of our future

research on exact consensus algorithms.

ACKNOWLEDGMENTS

Funding for this project comes from the Professor-

ship Start-Up Support Grant VGU-PSSG-02 of the

Vietnamese-German University. The authors thank

this institution for supporting this research.

REFERENCES

Ahlswede, R., Cai, N., Li, S. Y., and Yeung, R. W. (2000).

Network information flow. IEEE Trans. Inf. Theor.,

46(4):1204–1216.

Ahmad, M. A., Azuma, S., and Sugie, T. (2012). Dis-

tributed function calculation in switching topology

networks. SICE Journal of Control, Measurement,

and System Integration, 5(6):342–348.

Babai, L. (1995). Handbook of combinatorics (vol. 2).

chapter Automorphism Groups, Isomorphism, Recon-

struction, pages 1447–1540. MIT Press, Cambridge,

MA, USA.

Bahga, A. and Madisetti, V. K. (2016). Blockchain platform

for industrial internet of things. Journal of Software

Engineering and Applications, 9(10):533–546.

Carvin, D., Owezarski, P., and Berthou, P. (2014). A gen-

eralized distributed consensus algorithm for monitor-

ing and decision making in the iot. In 2014 Interna-

tional Conference on Smart Communications in Net-

work Technologies (SaCoNeT), pages 1–6.

Christidis, K. and Devetsikiotis, M. (2016). Blockchains

and smart contracts for the internet of things. IEEE

Access, 4:2292–2303.

Colistra, G., Pilloni, V., and Atzori, L. (2014). The prob-

lem of task allocation in the internet of things and

the consensus-based approach. Computer Networks,

73:98 – 111.

Cortes, J., Martinez, S., and Bullo, F. (2006). Robust ren-

dezvous for mobile autonomous agents via proximity

graphs in arbitrary dimensions. IEEE Transactions on

Automatic Control, 51(8):1289–1298.

Li, S., Oikonomou, G., Tryfonas, T., Chen, T. M., and

Xu, L. D. (2014). A distributed consensus algorithm

for decision making in service-oriented internet of

things. IEEE Transactions on Industrial Informatics,

10(2):1461–1468.

Pasqualetti, F., Bicchi, A., and Bullo, F. (2007). Dis-

tributed intrusion detection for secure consensus com-

putations. In Decision and Control, 2007 46th IEEE

Conference on, pages 5594–5599.

Pasqualetti, F., Bicchi, A., and Bullo, F. (2009). On the

security of linear consensus networks. In Proceedings

of the 48h IEEE Conference on Decision and Control

(CDC) held jointly with 2009 28th Chinese Control

Conference, pages 4894–4901.

Peng, K. and Yang, Y. (2009). Leader-following consen-

sus problem with a varying-velocity leader and time-

varying delays. Physica A: Statistical Mechanics and

its Applications, 388(2):193 – 208.

Pilloni, V., Atzori, L., and Mallus, M. (2017). Dy-

namic involvement of real world objects in the iot: A

Applicability and Resilience of a Linear Encoding Scheme for Computing Consensus

183

consensus-based cooperation approach. Sensors 2017,

17(3).

Saber, R. and Murray, R. (2003). Consensus protocols for

networks of dynamic agents. In American Control

Conference, 2003. Proceedings of the 2003, volume 2,

pages 951–956.

Silvestre, D., Rosa, P. A. N., Cunha, R., Hespanha, J. P.,

and Silvestre, C. (2013). Gossip average consensus in

a byzantine environment using stochastic set-valued

observers. In CDC, pages 4373–4378. IEEE.

Sundaram, S. (2009). Linear Iterative Strategies for Infor-

mation Dissemination and Processing in Distributed

Systems. University of Illinois at Urbana-Champaign.

Sundaram, S. and Hadjicostis, C. N. (2008). Distributed

function calculation and consensus using linear iter-

ative strategies. IEEE Journal on Selected Areas in

Communications, 26(4):650–660.

Sundaram, S. and Hadjicostis, C. N. (2011). Distributed

function calculation via linear iterative strategies in

the presence of malicious agents. IEEE Transactions

on Automatic Control, 56(7):1495–1508.

Sundaram, S. and Hadjicostis, C. N. (2013). Structural con-

trollability and observability of linear systems over fi-

nite fields with applications to multi-agent systems.

IEEE Trans. Automat. Contr., 58(1):60–73.

Teixeira, A., Sandberg, H., and Johansson, K. H. (2010).

Networked control systems under cyber attacks with

applications to power networks. In Proceedings of

the 2010 American Control Conference, pages 3690–

3696.

Toulouse, M., Minh, B. Q., and Curtis, P. (2015). A con-

sensus based network intrusion detection system. In

IT Convergence and Security (ICITCS), 2015 5th In-

ternational Conference on, pages 1–6. IEEE.

Tsitsiklis, J., Bertsekas, D., and Athans, M. (1986). Dis-

tributed asynchronous deterministic and stochastic

gradient optimization algorithms. Automatic Control,

IEEE Transactions on, 31(9):803–812.

Xiao, L., Boyd, S., and Kim, S.-J. (2007). Distributed av-

erage consensus with least-mean-square deviation. J.

Parallel Distrib. Comput., 67(1):33–46.

Xiao, L., Boyd, S., and Lall, S. (2005). A scheme for robust

distributed sensor fusion based on average consensus.

In Proceedings of the 4th International Symposium

on Information Processing in Sensor Networks, IPSN

’05, Piscataway, NJ, USA. IEEE Press.

APPENDIX

The weight matrix W in systems like Equations (2,3)

is modeled on the adjacency structure of the graph

G, i.e. W

i j

= 0 if (i, j) 6∈ E, otherwise W

i j

represents

a weight on edge (i, j) ∈ E. The graph G has self-

edge, i.e. W

ii

6= 0. The system described in Equation

(3) converges asymptotically to a steady state where

x

i

(k) ≈ x

j

(k), ∀i, j ∈ {1,2, .. .,n} provided it satisfies

some conditions. The most general one is that the

graph G must be connected (strongly connected in

oriented graphs). Other conditions depend on the

consensus problem solved, and are associated to the

weight matrix. For the specific case of the average

sum problem, the system in Equation (3) converges

towards the true average sum if W is row stochastic

(Xiao et al., 2007), i.e.

∑

n

j=1

W

i j

= 1, the sum of the

weights of each row equal 1. The following weight

matrix (known as the Metropolis-Hasting matrix) sat-

isfies this second convergence condition for the aver-

age sum problem:

W

i j

=

1

1+max(deg

i

,deg

j

)

if i 6= j and j ∈ N

i

1 −

∑

k∈N

i

W

ik

if i = j

0 if i 6= j and j 6∈ N

i

(16)

where deg

i

denotes the degree of node i ∈ G.

IoTBDS 2018 - 3rd International Conference on Internet of Things, Big Data and Security

184