ISE: Interactive Image Search using Visual Content

Mohamed Hamroun¹,², Sonia Lajmi²,³, Henri Nicolas¹ and Ikram Amous²

¹ Department of Computer Science, Bordeaux University, LABRI Laboratory, Bordeaux, France

² Department of Computer Science, Sfax University, MIRACL Laboratory, Sfax, Tunisia

³ Department of Computer Science, Al Baha University, Saudi Arabia

Keywords: CBIR, Genetic Algorithm (GA), Image Retrieval.

Abstract: CBIR (Content-Based Image Retrieval) is an image retrieval method that uses a feature vector of the image as the retrieval index. The vector is derived from the image content, including the colors, textures, shapes and spatial distribution of objects in the image. The construction of the feature vector and the search process strongly influence the efficiency and results of CBIR. In this paper, we introduce a new CBIR system called ISE, based on an optimal combination of color and texture descriptors found with the Particle Swarm Optimization (PSO) algorithm, in order to improve retrieval quality. Our system also applies an interactive Genetic Algorithm (GA) to further improve the search results. The performance analysis shows that the proposed 'DC' method raises the average precision from 66.6% to 89.50% for the color histogram on the Food category, from 77.7% to 100% for CCV on the Flower category, and from 44.4% to 67.65% for the co-occurrence matrix on the Building category, using the Corel data set. In addition, our ISE system achieves an average precision of 95.43%, which is significantly higher than that of the other CBIR systems presented in related works.

1 INTRODUCTION

Nowadays, image-based applications are available everywhere: on TV channels, in newspapers, in museums and even in Internet search engines that offer image search solutions. The indexing and retrieval of these images depend mainly on text annotations or text elements that can be attributed to them. In many cases (TV channels, newspapers, etc.), images and video recordings are archived only through a manual annotation step using keywords. This indexing is a long and recurring task for humans, especially as image collections keep growing. Moreover, the task depends highly on each person's culture, knowledge and feelings.

On the other hand, the number of videos accessible to the public has grown massively. Thanks to technical progress, the price of storage media has declined dramatically over the last decade while their capacity has risen considerably. This availability has also given rise to several storage options in computing systems to keep up with the growth of video files. However, a corresponding growth in exploitation tools is needed to allow the user to access and handle these documents efficiently. It is within this framework that CBIR systems have proven highly useful to researchers, as they are designed to provide “an automatic indexing and searching system” which is able to “retrieve an image based on its visual features” (Kundu et al., 2015), (Yue et al.,2011).

Considering this context, a visual content image search system needs to be established. In the literature, several CBIR systems have been proposed that extract image features with innovative methods (Singha et al., 2012), (Sandid et al., 2015), (Farsi et al 2013). The main limitation of these works is that they do not consider user feedback to improve the image retrieval results. Such feedback can be taken into account using a genetic algorithm. In this paper, a new CBIR method based on a genetic algorithm is proposed.

The innovative aspects of the proposed method

are as follows:

- Combine features from the usual descriptors to obtain a new DC descriptor.

- Optimize the result of the DC descriptor by applying the genetic algorithm initially proposed by Holland (Holland., 1975).

Hamroun, M., Lajmi, S., Nicolas, H. and Amous, I.
ISE: Interactive Image Search using Visual Content.
DOI: 10.5220/0006806702530261
In Proceedings of the 20th International Conference on Enterprise Information Systems (ICEIS 2018), pages 253-261
ISBN: 978-989-758-298-1
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

The rest of this paper is organized as follows: Section 2 reviews some important related works. The proposed CBIR system is described in Section 3. The experimental setup and results are presented in Section 4. Finally, Section 5 concludes the paper.

2 RELATED WORKS

Color features are among the most important recognition aspects. Color is a robust cue in these applications because it does not change when the orientation, size or placement of the image is altered (Dubey et al.,2015). CBIR systems use conventional color features such as the dominant color descriptor DCD (Wang et al.,2011), the color coherence vector CCV (Pass et al.,1996), the color histogram (Singha et al., 2012) and the color auto-correlogram (Chun et al., 2008). DCD quantizes the color space of an image by placing its pixels into a limited number of partitions and computing the mean and ratio of each partition. CCV, in contrast, splits the pixels of each histogram bin into coherent and incoherent types. Its results are more precise because they derive not only from the color histogram classification but also from a spatial classification. The gain in accuracy is most noticeable for images that contain rather homogeneous colors (Pass et al.,1996).

Another important recognition aspect is image texture. One of the most elementary features of an image is the way in which its different regions are arranged. Texture analysis can provide substantial information about the relationship between neighboring regions (Sandid et al., 2015), (Rashno et al., 2015). Texture features are commonly classified into four categories: statistical, structural, model-based and signal processing-based features. The last category has been the most widely used because of its efficiency (Farsi et al 2013). Among the most used signal processing-based methods are the Discrete Cosine Transform (DCT), Discrete Sine Transform (DST), Fourier Transform, Gabor Filter, Wavelet Transform and Curvelet Transform.

CBIR systems start with feature extraction, which is an important phase. Extraction begins with low-level features such as color features, which are considered among the primary and most prominent ones. DCD is a pertinent and intuitive color representation, as it describes the color distribution within an image effectively and concisely (Wang et al.,2011). Hybrid color and texture features were suggested in (Shiv et al.,2015a); these are referred to as rotation and scale-invariant hybrid descriptors (RSHD). The first step in this method is to divide the RGB color space into 64 partitions so as to quantize the image. Afterwards, adjacent structural patterns are used to convey the texture information of the image. In (Talib et al.,2013), a semantic feature is added to DCD and the spatial color descriptors. It is used to bridge the gap between the two previously mentioned descriptors. Then, according to the color of each image component and background, the most dominant colors are assigned different weights.

The system in (Young et al., 2003) extracts BDIP and BVLC features as textural features. In (Yildizer et al.,2012), the system starts by resizing the images to 128x128, then applies a 4-level wavelet transform to them. The feature vector consists of the standard deviation of the components at levels 3 and 4 and the LL component. A local wavelet pattern, which is a texture feature descriptor, was proposed in (Shiv et al., 2015b). To construct the descriptor, the local wavelet pattern relies on the relationship between the local neighbors and the center pixel on the one hand and the surrounding neighbors on the other. In (Shiv et al.,2015c), a new local pattern was introduced: the BoF-LBP. This method first filters the image using a bag of filters (BoF), then computes the local binary pattern (LBP) over each filtered image and, finally, concatenates the results to form the BoF-LBP descriptor. In (Murala et al., 2012), local tetra patterns (LTrP) were introduced, in which the relationship between the referenced pixel and its adjacent pixels is used to compute texture descriptors.

3 THE PROPOSED CBIR FRAMEWORK “ISE”

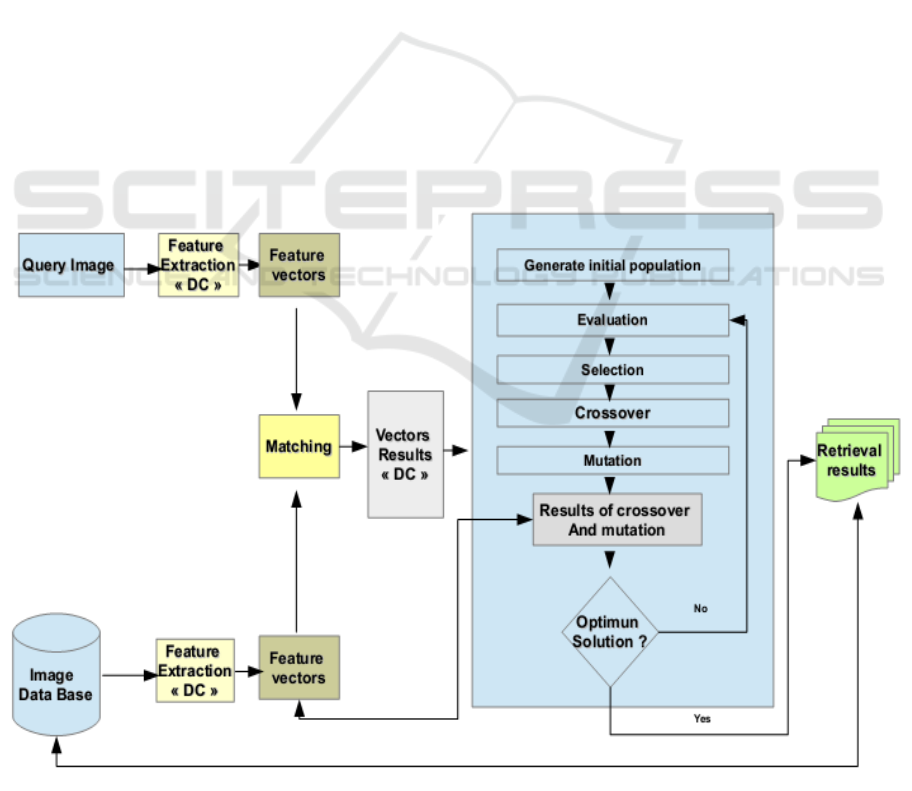

Fig.1 shows the overall architecture of the proposed image search system. After the dataset (Corel) is prepared, the user can submit a query image. A feature extraction step is applied to the query image (on-line). The descriptors used are explained in detail in section 3.1. The extracted descriptors are then combined into a new descriptor called DC (Descriptor Combination). Section 3.2 explains how we combine the extracted features to obtain this new DC descriptor. The same indexing process is applied to the images in our database in an offline mode. A matching step between the query vectors and the collection vectors is then performed on the DC vectors. The results are ranked according to their degree of relevance to the query vectors.

To improve the results of indexing and search (section 4), we apply the genetic algorithm to the new DC descriptor. Genetic algorithms (GAs) originate from the work of Holland (Holland., 1975), (Goldberg, 1989) in the 1970s. GAs take their inspiration from the Darwinian view of biological evolution. Biological evolution favors individual organisms that tolerate variations: the individuals that best resist changes in the environment have a greater chance of surviving and of passing on their offspring over the generations. The adaptation of each individual is measured by a fitness measure, an objective value that takes into account all the constraints of the problem. As defined by Holland, the GA consists of three steps: selection, crossover and mutation.

We then focus on improving the results of this method by applying the genetic algorithm, which proceeds as follows:

1. Initial population generation phase: the vectors resulting from the DC descriptors.

2. Evaluation and selection phase: the vectors having a distance greater than or equal to 0.6 with respect to the query image descriptor vector, using Equation 1 of section 3.3, are selected.

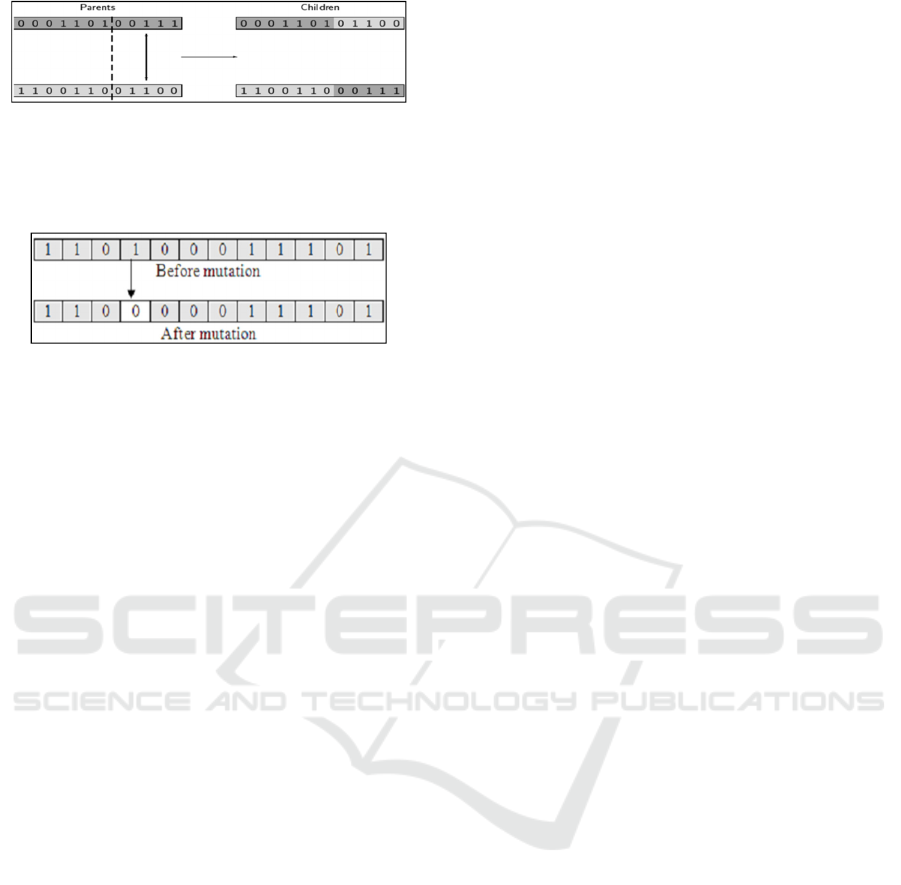

3. Crossover phase: this step is realized by cutting two strings at a randomly chosen position and swapping the two tails. This process, which we call one-point crossover in the following, is visualized in Fig.2. We apply the same principle to descriptor vectors.

4. Mutation phase: the occasional introduction of new features into the solution strings of the population pool to maintain diversity in the population. Though crossover has the main responsibility for searching for the optimal solution, mutation is also used for this purpose. We apply the same principle to descriptor vectors.

Figure 1: Conceptual Architecture of Search System “ISE”.

Figure 2: One-point crossover of binary strings.

Figure 3: Mutation.

5. Matching: the vectors resulting from crossover and mutation are compared with those describing each image of our database, and the most similar vectors are returned. We then select the first 20 vectors.

6. Displaying results: the images corresponding to the 20 selected vectors are displayed.

7. Stop criterion: all result images must have a distance greater than or equal to 0.85 with respect to the query image (otherwise return to step 2).
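The crossover and mutation phases listed above can be sketched on real-valued descriptor vectors as follows. This is only a minimal illustration: the function names `one_point_crossover` and `mutate`, the mutation rate and the Gaussian perturbation are assumptions made for the example and are not specified in the paper.

```python
import random


def one_point_crossover(parent_a, parent_b, rng):
    """Cut both vectors at one random position and swap the two tails."""
    if len(parent_a) != len(parent_b) or len(parent_a) < 2:
        raise ValueError("parents must have the same length (at least 2)")
    cut = rng.randrange(1, len(parent_a))  # cut point strictly inside the vector
    child_a = parent_a[:cut] + parent_b[cut:]
    child_b = parent_b[:cut] + parent_a[cut:]
    return child_a, child_b


def mutate(vector, rng, rate=0.05, scale=0.1):
    """Perturb each component with probability `rate`.

    The Gaussian noise (standard deviation `scale`) is a hypothetical choice.
    """
    return [v + rng.gauss(0.0, scale) if rng.random() < rate else v
            for v in vector]


rng = random.Random(0)  # fixed seed so the example is reproducible
a = [0.1, 0.2, 0.3, 0.4]
b = [0.9, 0.8, 0.7, 0.6]
child_1, child_2 = one_point_crossover(a, b, rng)
mutated = mutate(child_1, rng)
```

Each child keeps the head of one parent and the tail of the other, so every component of the two children still comes from one of the two parents before mutation is applied.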

3.1 Used Descriptors

3.1.1 Color histograms

Color histograms are frequently used to compare images. Examples of their use in multimedia applications include scene break detection (Arun et al., 1995), (Kiyotaka et al., 1994) and image database query (Brown et al., 1995), (Myron et al., 1995), (Virginia et al., 1995), (Alex et al.,1996). Their popularity stems from several factors:

- Color histograms are cheap to compute.

- Small changes in camera viewpoint tend not to affect color histograms.

- Different objects often have distinctive color histograms.

Researchers in computer vision have also investigated color histograms. For example, Swain and Ballard (Michael et al.,1991) describe the use of color histograms for identifying objects. Hafner et al. (James et al., 1995) provide an efficient method for weighted-distance indexing of color histograms. Stricker and Swain (Markus et al., 1994) analyze the information capacity of color histograms, as well as their sensitivity.
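As an illustration of how cheaply a color histogram can be obtained, the sketch below builds a normalized joint RGB histogram. The quantization into 4 bins per channel (64 bins in total) and the name `color_histogram` are assumptions made for the example; the paper does not state which quantization ISE uses.

```python
def color_histogram(pixels, bins_per_channel=4):
    """Return a normalized joint RGB histogram with bins_per_channel**3 bins.

    `pixels` is an iterable of (r, g, b) tuples with values in 0..255.
    """
    n = bins_per_channel
    hist = [0] * (n ** 3)
    count = 0
    for r, g, b in pixels:
        # Map each 0..255 channel value to a bin index in 0..n-1.
        idx = ((r * n // 256) * n + (g * n // 256)) * n + (b * n // 256)
        hist[idx] += 1
        count += 1
    # Normalize so that images of different sizes are comparable.
    return [h / count for h in hist] if count else hist


pixels = [(255, 0, 0)] * 3 + [(0, 0, 255)]
hist = color_histogram(pixels)
```

Normalizing by the pixel count is a design choice that keeps the histogram independent of image size.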

3.1.2 Color Coherence Vectors

The color coherence vector (CCV) is a more detailed variant of the color histogram. A pixel is coherent if it belongs to a region of considerable size. Conversely, an incoherent pixel is isolated or belongs to a region of insignificant size. The color coherence vector represents this classification for each color of the image (Greg et al., 1996). A region here refers to a zone of identical color. A connected-component labelling technique generates the regions, using 8-connectivity (which includes diagonal adjacencies). Pass proposes setting the threshold beyond which a region is considered coherent at 1% of the total image size (Pass et al., 1996). αi refers to the number of coherent pixels of the i-th color, while βi refers to the number of incoherent pixels. The CCV of an image is defined by the vector [(α1, β1) (α2, β2) ... (αn, βn)]. The sums (α1 + β1, α2 + β2, ..., αn + βn) give the color histogram of the image.

The key strength of this approach lies in adding spatial information to the histogram by refining it. This refinement delivers more reliable results than those derived directly from histogram analysis. Even with a conventional distance between vectors, this approach consistently delivers good results. Still, it has the drawback of increased sensitivity to lighting conditions.
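The definition above can be sketched in a few lines. The example assumes the image has already been quantized to color indices; the function name `color_coherence_vector` and the breadth-first labelling are illustrative choices, while the 8-connectivity and the size threshold (1% of the image by default) follow the description in the text.

```python
from collections import deque


def color_coherence_vector(image, n_colors, tau_ratio=0.01):
    """Return [(alpha_i, beta_i)] for each quantized color i.

    `image` is a 2D list of color indices in 0..n_colors-1. A pixel is
    coherent when its 8-connected same-color region is larger than
    tau_ratio * (number of pixels).
    """
    height, width = len(image), len(image[0])
    tau = tau_ratio * height * width
    seen = [[False] * width for _ in range(height)]
    ccv = [[0, 0] for _ in range(n_colors)]
    for y in range(height):
        for x in range(width):
            if seen[y][x]:
                continue
            color = image[y][x]
            seen[y][x] = True
            queue = deque([(y, x)])
            size = 0
            while queue:  # breadth-first traversal of one region
                cy, cx = queue.popleft()
                size += 1
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (0 <= ny < height and 0 <= nx < width
                                and not seen[ny][nx]
                                and image[ny][nx] == color):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            # Index 0 accumulates coherent pixels, index 1 incoherent ones.
            ccv[color][0 if size > tau else 1] += size
    return [tuple(pair) for pair in ccv]


image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 0],
    [1, 0, 1, 1],
]
# A large threshold (20%) is used here only because the toy image is tiny.
ccv = color_coherence_vector(image, n_colors=2, tau_ratio=0.2)
```

In the toy image each color has one large region of 7 pixels and one isolated pixel, and adding the two components of each pair recovers the plain histogram counts.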

3.1.3 Co-occurrence Matrix

The grey-level co-occurrence matrix of the image pixels is the most popular statistical technique (Chen et al.,1979), (Marceau et al.,1990) for extracting texture descriptors from various types of images. It is used, for instance, in the segmentation and classification of medical, aerial and astronomical images. This approach explores the spatial texture dependencies by first constructing a co-occurrence matrix based on the orientation and distance between image pixels. The success of this process depends on a proper choice of parameters, including the size of the matrix on which the measurement is made and the distance between the two pixels of the pattern.
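A minimal sketch of the construction described above is given below, assuming a grey-level image already quantized to `levels` values. The offset `(dx, dy)` encodes both the orientation and the distance between the two pixels of the pattern. The names `cooccurrence_matrix` and `contrast` are illustrative, and the contrast statistic is only one common example of a texture feature derived from the matrix; the paper does not list which statistics ISE extracts.

```python
def cooccurrence_matrix(gray, levels, dx=1, dy=0):
    """Normalized co-occurrence matrix for the pixel offset (dx, dy).

    Entry [i][j] is the relative frequency of pairs in which a pixel of
    level i has a pixel of level j at offset (dx, dy).
    """
    height, width = len(gray), len(gray[0])
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width:
                counts[gray[y][x]][gray[ny][nx]] += 1
                total += 1
    if total == 0:
        return counts
    return [[c / total for c in row] for row in counts]


def contrast(glcm):
    """Contrast statistic: sum over (i, j) of (i - j)^2 * p(i, j)."""
    return sum((i - j) ** 2 * p
               for i, row in enumerate(glcm)
               for j, p in enumerate(row))


gray = [
    [0, 0, 1],
    [1, 1, 0],
]
glcm = cooccurrence_matrix(gray, levels=2, dx=1, dy=0)  # horizontal neighbors
texture_contrast = contrast(glcm)
```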


3.2 Creation of a New "DC" Descriptor

A given set of features applied to different image types does not necessarily lead to the same results. In other words, a set of features which yields precise retrieval results for a given image type may lead to insufficient results when applied to another category, and vice versa.

Table 1: Average precision (%) based on Color histogram, CCV and Co-occurrence matrix for Corel database.

| Corel-1K | C_HIST | CCV | Co_Matrix |
|---|---|---|---|
| Africa | 88.8 | 33.3 | 44.4 |
| Beach | 66.6 | 66.6 | 22.2 |
| Building | 66.6 | 44.4 | 44.4 |
| Bus | 55.5 | 33.3 | 22.2 |
| Dinosaur | 100 | 66.8 | 88.8 |
| Elephant | 33.3 | 11.1 | 22.2 |
| Flower | 33.3 | 77.7 | 33.3 |
| Horse | 77.7 | 55.5 | 22.2 |
| Mountain | 11.1 | 55.5 | 55.5 |
| Food | 66.6 | 44.4 | 66.6 |
| Average | 59.9 | 51 | 42.1 |

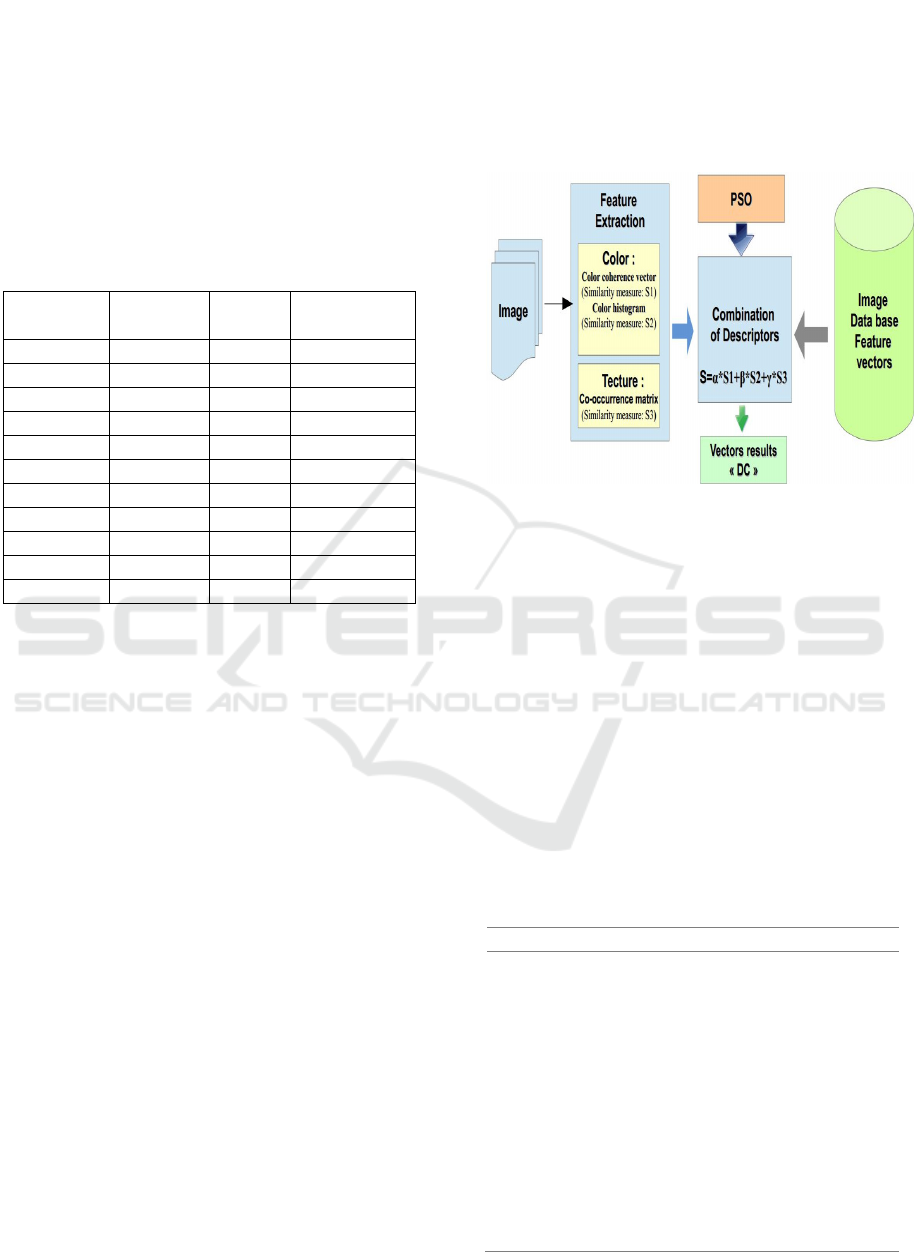

Table 1 shows that the color histogram provides better results when applied to the “Africa” and “Dinosaur” themes. On the other hand, better results are obtained by applying CCV to the “Flower” and “Mountain” themes. The inefficiency of each set of features on certain categories can be offset by combining it with other sets of features that are effective there. The PSO algorithm may be employed to compensate for such deficiencies.

Introduced by Kennedy and Eberhart, the PSO algorithm has proven to be an adequate solution for different optimization problems (Eberhart et al.,1995). PSO models swarm intelligence behavior to find the most suitable solution in the search space. Each particle in the search space is treated as a potential solution. Each particle relies on a fitness function to imitate the neighboring particles, and stores the best solution it has found itself (local maximum) together with the best solution found by the whole swarm (global maximum). Furthermore, each particle drifts towards better solutions according to its own velocity, which is computed from its movements towards the local and global maxima.

Color histogram, CCV and co-occurrence matrix are the three sets of features used in our case. For each of these sets, a corresponding similarity measure is computed. Figure 4 shows how the final similarity measure is computed by combining the three similarity measures:

Figure 4: Simplified schema of the DC algorithm.

The three similarity measures associated with the color histogram, CCV and co-occurrence matrix feature sets are assigned the weights α, β and γ, respectively. The PSO algorithm is applied to 50% of the database (as the training data) to compute the weights. The average precision of the CBIR system serves as the fitness function of the PSO algorithm, and each particle is a three-dimensional variable (α, β, γ). Hence, PSO searches for the values of α, β and γ that maximize the average precision of the CBIR system. The algorithm below represents the PSO algorithm (Eberhart et al.,1995) for feature combination.

Algorithm: PSO.

Let S be the number of particles, $\hat{x}_i$ be the best known position of particle i and $\hat{x}$ be the best known position of the entire swarm. The proposed feature combination algorithm based on PSO is as follows:

1. Parameter initialization. For each particle i = 1, 2, ..., S do:

2. Initialize the weight (w), the number of iterations, the maximum velocity ($v_{max}$), the acceleration coefficients $c_1$ and $c_2$ and the ranks of the particles for each dimension.

3. Initialize the particle's position ($x_i$) with a uniformly distributed random vector.

4. Initialize the particle's best known position to its initial position: $\hat{x}_i \leftarrow x_i$.

5. Calculate the average CBIR precision for all particles and find the swarm's best known position ($\hat{x}$).

6. Initialize the particle's velocity: $v_i \sim U(-v_{max}, v_{max})$.

7. Until the number of iterations is reached or the target average CBIR precision value is found, repeat. For each particle i = 1, 2, ..., S do:

8. Pick random vectors $r_1, r_2 \sim U(0, 1)$.

9. Calculate $v_i(t+1) = w\,v_i(t) + c_1 r_1 (\hat{x}_i - x_i(t)) + c_2 r_2 (\hat{x} - x_i(t))$.

10. Calculate $x_i(t+1) = x_i(t) + v_i(t+1)$.

11. Calculate the average CBIR precision for $x_i(t+1)$ and refer to it as $P(x_i(t+1))$.

12. If $P(x_i(t+1)) > P(\hat{x}_i)$ then update the particle's best known position: $\hat{x}_i \leftarrow x_i(t+1)$.

13. If $P(x_i(t+1)) > P(\hat{x})$ then update the swarm's best known position: $\hat{x} \leftarrow x_i(t+1)$.

In the above algorithm, $v_i$ stands for the velocity, w controls the interaction of power between the different particles, while $c_1$ and $c_2$ lead the particles in the right directions. In addition, $r_1$ and $r_2$ are random variables that introduce stochasticity into the PSO method, $\hat{x}_i$ stands for the position of the local maximum and $\hat{x}$ stands for the position of the overall maximum.
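The PSO loop can be sketched as follows for a three-dimensional particle (α, β, γ). Several parts are assumptions made only for illustration: the linear form of `combined_similarity`, the parameter values, the clamping of weights to [0, 1], and above all `toy_fitness`, which stands in for the average CBIR precision on the training half of the database (the real fitness would require running retrieval for each candidate weight triple).

```python
import random


def combined_similarity(s_hist, s_ccv, s_co, weights):
    """Hypothetical linear combination of the three similarity measures."""
    alpha, beta, gamma = weights
    return alpha * s_hist + beta * s_ccv + gamma * s_co


def pso_maximize(fitness, dim=3, n_particles=20, n_iter=50,
                 w=0.7, c1=1.5, c2=1.5, v_max=0.2, seed=0):
    """Maximize `fitness` over [0, 1]^dim with a basic PSO."""
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(dim)] for _ in range(n_particles)]
    vel = [[rng.uniform(-v_max, v_max) for _ in range(dim)]
           for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]            # each particle's best position
    best_fit = [fitness(p) for p in pos]
    g = max(range(n_particles), key=best_fit.__getitem__)
    g_pos, g_fit = best_pos[g][:], best_fit[g]  # swarm's best position
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (w * vel[i][d]
                     + c1 * r1 * (best_pos[i][d] - pos[i][d])
                     + c2 * r2 * (g_pos[d] - pos[i][d]))
                vel[i][d] = max(-v_max, min(v_max, v))
                # Clamping to [0, 1] is an assumption about the weight range.
                pos[i][d] = min(1.0, max(0.0, pos[i][d] + vel[i][d]))
            f = fitness(pos[i])
            if f > best_fit[i]:
                best_pos[i], best_fit[i] = pos[i][:], f
                if f > g_fit:
                    g_pos, g_fit = pos[i][:], f
    return g_pos, g_fit


def toy_fitness(weights, target=(0.5, 0.3, 0.2)):
    """Stand-in for average precision: highest (0) when weights == target."""
    return -sum((x - t) ** 2 for x, t in zip(weights, target))


weights, score = pso_maximize(toy_fitness)
```

Replacing `toy_fitness` with a function that runs retrieval on the training images and returns the average precision would give the setting described in the text.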

3.3 Similarity Measure

Retrieval comes down to measuring the similarity between two images in order to judge how similar or dissimilar they are. After representing the extracted characteristics of the query image and of the other images in the database as vectors, we measure the distance between these vectors. In our application, we use the Euclidean distance because it is simple to compute. This distance is calculated according to the following formula:

$$D(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2} \qquad (1)$$

x and y: the two images (one is the query image and the other is an image of the database).

$x_i$: the i-th component of the query image feature vector.

$y_i$: the i-th component of the current image feature vector.

$x_i - y_i$: the difference between the components $x_i$ and $y_i$.
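A minimal sketch of Equation 1 and of ranking database images by distance is shown below. The helper `rank_by_distance`, the dictionary layout of the database and the image names are hypothetical and serve only the example.

```python
import math


def euclidean_distance(x, y):
    """Euclidean distance between two feature vectors of equal length."""
    if len(x) != len(y):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((xi - yi) ** 2 for xi, yi in zip(x, y)))


def rank_by_distance(query, database, top_k=20):
    """Return the top_k (name, distance) pairs, closest first.

    `database` maps an image name to its feature vector.
    """
    scored = [(name, euclidean_distance(query, vec))
              for name, vec in database.items()]
    return sorted(scored, key=lambda item: item[1])[:top_k]


database = {"img_a": [3.0, 4.0], "img_b": [0.0, 1.0]}
ranking = rank_by_distance([0.0, 0.0], database)
```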

4 EXPERIMENTATION AND RESULTS

The most important evaluation metrics for CBIR performance analysis are precision and recall, which are defined as follows:

$$\text{Precision} = \frac{\text{Number of relevant images retrieved}}{\text{Total number of images retrieved}} \qquad (2)$$

$$\text{Recall} = \frac{\text{Number of relevant images retrieved}}{\text{Total number of relevant images in the collection}} \qquad (3)$$

In the experiment, every image of each category is used in turn as a query image, and the precision over the first 20 retrieved images is computed for each query. Finally, the average precision over all queries is computed and reported for each category.
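The evaluation protocol can be sketched as follows, assuming each query returns a ranked list of image identifiers and that the set of relevant images (same category) is known. The function names and identifiers are hypothetical.

```python
def precision_recall_at_k(retrieved, relevant, k=20):
    """Precision and recall (Equations 2 and 3) over the first k results."""
    top = retrieved[:k]
    hits = sum(1 for item in top if item in relevant)
    precision = hits / len(top) if top else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def average_precision_over_queries(results, relevant, k=20):
    """Mean of the per-query precision values for one category."""
    values = [precision_recall_at_k(r, relevant, k)[0] for r in results]
    return sum(values) / len(values) if values else 0.0


relevant = {"f1", "f2", "f3", "f4", "f5"}
retrieved = ["f1", "f2", "b1", "f3"]
precision, recall = precision_recall_at_k(retrieved, relevant, k=4)
```

For instance, a query that returns 17 relevant images among its first 20 results has a precision of 17/20 = 0.85.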

Table 2: Comparison of the average precision (%) of the previous methods and the proposed method on the Corel-1K database.

| Semantic name | Sadegh et al., 2017 | Chuen et al., 2009 | Murala et al., 2012 | Yildizer et al., 2012 | Kundu et al., 2015 | Shiv et al., 2015a | Shiv et al., 2015c | DC | ISE |
|---|---|---|---|---|---|---|---|---|---|
| Africa | 72.40 | 68.30 | 54.95 | 49.95 | 73.05 | 68.95 | 59.90 | 88.05 | 100 |
| Beach | 51.15 | 54.00 | 39.40 | 71.25 | 59.35 | 41.10 | 50.85 | 79.5 | 89.50 |
| Building | 59.55 | 56.15 | 39.60 | 30.10 | 61.10 | 74.30 | 50.15 | 67.65 | 87.10 |
| Bus | 92.35 | 88.80 | 84.30 | 79.75 | 69.15 | 64.40 | 94.00 | 100 | 100 |
| Dinosaur | 99.90 | 99.25 | 94.70 | 92.05 | 99.15 | 99.55 | 97.60 | 100 | 100 |
| Elephant | 72.70 | 65.80 | 36.00 | 59.45 | 80.10 | 56.65 | 46.65 | 93.10 | 100 |
| Flower | 92.25 | 89.10 | 85.85 | 99.50 | 80.15 | 86.55 | 87.50 | 100 | 100 |
| Horse | 96.60 | 80.25 | 57.50 | 82.25 | 89.10 | 93.20 | 76.50 | 100 | 100 |
| Mountain | 55.75 | 52.15 | 29.45 | 54.60 | 58.00 | 55.15 | 35.25 | 77.75 | 77.75 |
| Food | 72.35 | 73.25 | 56.70 | 20.20 | 74.50 | 77.95 | 56.25 | 89.30 | 100 |
| Average | 76.50 | 72.70 | 57.85 | 63.91 | 74.36 | 71.78 | 65.47 | 89.50 | 95.43 |


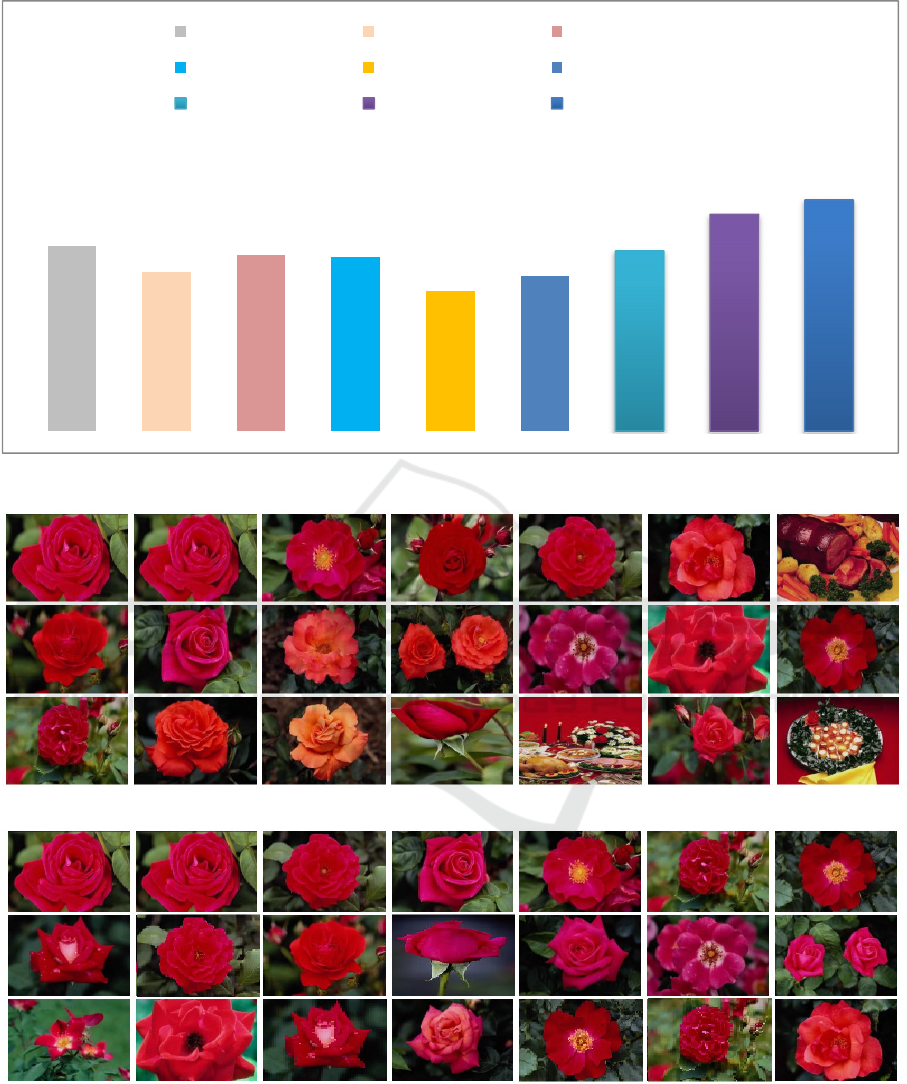

Figure 5: Comparison of the average precision of the previous methods and proposed method.

Figure 6: Image retrieval results for the Flower category: (a) (Kundu et al., 2015), (b) our proposed system ISE.

The overall performance of the ISE system with the DC feature and the genetic algorithm is compared with some state-of-the-art CBIR systems. The average precision for all image categories of the Corel-1k dataset is reported in Tab. 2. To show the utility of our CBIR scheme, the results of seven other CBIR systems are also reported in this table. With an average precision of 95.43%, our CBIR scheme has the highest accuracy among these state-of-the-art CBIR systems. In fact, our proposed CBIR system outperforms (Chuen et al., 2009), (Talib et al.,2013), (Yildizer et al.,2012), (Kundu et al., 2015), (Shiv et al.,2015a) and (Shiv et al.,2015c).

The results are depicted in Fig.6. These preliminary results show that our ISE scheme performs better, retrieving 20 images correctly from the Flower category, whereas the CBIR system of (Kundu et al., 2015) retrieves 17.

According to the in-depth testing that we have performed, our visual content search system has demonstrated its reliability and accuracy. These tests allowed us to assess the performance of the new DC descriptor, defined in this article, and of the genetic algorithm for image search. It can be concluded that our ISE system succeeded, to a certain extent, in achieving our goal of improving search by visual content.

5 CONCLUSION

In this paper, we have developed an image search system called ISE and validated it on the Corel test database.

ISE allows users to easily access the desired images starting from an image query. The innovative features of our new ISE image search system are (i) defining a new descriptor "DC" and (ii) applying the genetic algorithm in image search. The genetic algorithm is applied to improve the results returned by the DC descriptor.

Despite the results that we achieved, existing visual content image retrieval systems focus on addressing particular issues, including semantic insufficiency during indexing and retrieval. However, only a few works are interested in merging visual and semantic contents. Accordingly, developing approaches that focus on this boundary has become necessary. We will therefore tackle this problem by proposing a method for searching image and video documents based on a multi-level fusion of visual and semantic content.

REFERENCES

Kundu, Malay, K., Manish, C., and Samuel, R. (2015). A graph-based relevance feedback mechanism in content-based image retrieval. Knowledge-Based Systems, 73, pages 254–264.

Yue, Jun, Zhenbo, L., Lu L., and Zetian, F. (2011). Content based image retrieval using color and texture fused features. Mathematical and Computer Modelling, pages 1121–1127.

Dubey, Shiv, R., Satish K., S. and Rajat K., S. (2015). Local neighbourhood-based robust colour occurrence descriptor for colour image retrieval. IET Image Processing, pages 578–586.

Wang, Xiang, Y., Yong-Jian, Y., and Hong-Ying, Y. (2011). An effective image retrieval scheme using color, texture and shape features. Computer Standards & Interfaces, 33(1), pages 59–68.

Pass, Greg, and Ramin, Z. (1996). Histogram refinement for content-based image retrieval. Proceedings 3rd IEEE Workshop on Applications of Computer Vision (WACV’96), Sarasota, FL, pages 96–102.

Singha, M., Hemachandran, K. and Paul, A. (2012). Content-based image retrieval using the combination of the fast wavelet transformation and the colour histogram. IET Image Processing, 6(9), pages 1221–1226.

Chun, Young, D., Nam Chul, K., and Ick Hoon J. (2008). Content-based image retrieval using multiresolution color and texture features. IEEE Transactions on Multimedia, 10(6), pages 1073–1084.

Sandid, F., and Ali, D. (2015). Texture descriptor based on local combination adaptive ternary pattern. IET Image Processing, 9(8), pages 634–642.

Rashno, A., Sadri, S., and SadeghianNejad, H. (2015). An efficient content-based image retrieval with ant colony optimization feature selection schema based on wavelet and color features. International Symposium on Artificial Intelligence and Signal Processing (AISP), Mashhad, Iran, pages 59–64.

Farsi, H., and Sajad M. (2013). Colour and texture feature-based image retrieval by using Hadamard matrix in discrete wavelet transform. IET Image Processing, 7(3), pages 212–218.

Holland, J. H. (1975). Adaptation in Natural and Artificial Systems. University of Michigan Press, USA.

Shiv R., D., Satish K., S., and Rajat K., S. (2015a). Rotation and scale invariant hybrid image descriptor and retrieval. Computers & Electrical Engineering, 46, pages 288–302.

Talib, A., Massudi, M., Husniza, H. and Loay E. George. (2013). A weighted dominant color descriptor for content-based image retrieval. Journal of Visual Communication and Image Representation, 24(3), pages 345–360.

Young, D., C., Sang Y., S. and Nam C., K. (2003). Image retrieval using BDIP and BVLC moments. IEEE Transactions on Circuits and Systems for Video Technology, 13(9), pages 951–957.

Yildizer, E., Balci, A. M., Jarada, T. N., and Alhajj, R. (2012). Integrating wavelets with clustering and indexing for effective content-based image retrieval. Knowledge-Based Systems, 31, pages 55–66.

Shiv R., D., Satish K., S., and Rajat K., S. (2015b). Local Wavelet Pattern: A New Feature Descriptor for Image Retrieval in Medical CT Databases. IEEE Transactions on Image Processing, 24(12), pages 5892–5903.

Shiv R., D., Satish K., S., Rajat K., S. (2015c). Boosting Local Binary Pattern with Bag-of-Filters for Content Based Image Retrieval. In Proc. of the IEEE UP Section Conference on Electrical, Computer and Electronics (UPCON).

Murala, Subrahmanyam, R. P. Maheshwari, and R. Balasubramanian. (2012). Local tetra patterns: a new feature descriptor for content-based image retrieval. IEEE Transactions on Image Processing, 21(5), pages 2874–2886.

M. G. Brown, J. T. Foote, G. J. F. Jones, K. Sparck Jones, and S. J. Young. (1995). Automatic content-based retrieval of broadcast news. In ACM Multimedia Conference.

Myron, F., et al. (1995). Query by image and video content: The QBIC system. IEEE Computer, 28(9), pages 23–32.

James, H., Harpreet, S., Will, E., Myron, F., and Wayne, N. (1995). Efficient color histogram indexing for quadratic form distance functions. IEEE Transactions on Pattern Analysis and Machine Intelligence, 17(7), pages 729–736.

Arun H., Ramesh J., and Terry W. (1995). Production model based digital video segmentation. Journal of Multimedia Tools and Applications. 1.1.38.

Virginia, O. and Michael S. (1995). Chabot: Retrieval from a relational database of images. IEEE Computer, 28(9), pages 40–48.

Kiyotaka, O., and Yoshinobu, T. (1994). Projection-detecting filter for video cut detection. Multimedia Systems, pages 205–210.

Alex, P., Rosalind, P., and Stan S. (1996). Photobook: Content-based manipulation of image databases. International Journal of Computer Vision, 18(3), pages 233–254.

Markus, S., and Michael, S. (1994). The capacity of color histogram indexing. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pages 704–708.

Michael S. and Dana B. (1991). Color indexing. International Journal of Computer Vision, 7(1), pages 11–32.

Greg, P., Ramin, Z., and Justin, M. (1996). Comparing images using color coherence vectors. ACM Conference on Multimedia, Boston.

Pass, G., Zabih, R. (1996). Histogram refinement for content-based image retrieval. IEEE Workshop on Applications of Computer Vision, pages 96–102.

Chen, P., C., and Pavlidis, T. (1979). Segmentation by texture using a co-occurrence matrix and a split-and-merge algorithm. Computer Graphics Image Processing (CGIP), 10(2), pages 172–182.

Marceau, D., J., Howarth, P., J., Dubois J., M., and Gratton, D., J. (1990). Evaluation of the grey-level co-occurrence matrix method for land-cover classification using SPOT imagery. IEEE Transactions on Geoscience and Remote Sensing, 28, pages 513–519.

Goldberg, D. E. (1989). Genetic Algorithms in Search, Optimization and Machine Learning. Addison-Wesley Longman Publishing Co., Inc., Boston, MA, USA.

Eberhart, Russ C., and James K. (1995). A new optimizer using particle swarm theory. Proceedings of the Sixth International Symposium on Micro Machine and Human Science, Nagoya, pages 39–43.

Chuen-Horng L., Rong-Tai C., and Yung-Kuan C. (2009). A smart content-based image retrieval system based on color and texture feature. Image and Vision Computing, 27(6), pages 658–66.

Sadegh, F., Rassoul A., and Mohammad, R., A. (2017). A New Content-Based Image Retrieval System Based on Optimized Integration of DCD, Wavelet and Curvelet Features. IET Image Processing.