Eye Tracking as a Method of Controlling Applications

on Mobile Devices

Angelika Kwiatkowska and Dariusz Sawicki

Warsaw University of Technology, Institute of Theory of Electrical Engineering,

Measurements and Information Systems, Warsaw, Poland

Keywords: Multimedia, HCI, Mobile Device, Eye Tracking, Template Matching.

Abstract: The possibility of using eye tracking in multimodal interaction is discussed. Nowadays, communication by eye movements can be both natural and intuitive. The main goal of the present work was to develop a method for controlling a smartphone application by eye movements. The software was based on the Open Source Computer Vision Library (OpenCV) and designed for the Android system. We conducted two sets of tests: usability tests of the new solution, and tests of how the choice of template matching method affects the operation of the device. The results, obtained with a small group of participants, showed that the application meets all stated expectations.

1 INTRODUCTION

1.1 Motivation

Eye tracking has many applications in multimedia (Duchowski, 2007), including classical psychological (psychophysiological) studies of the Human Visual System and visual control for the disabled. In recent years multimodal interaction has become one of the most important fields of multimedia. The dynamic development of natural methods supporting communication with various intelligent devices is recognized and appreciated by professionals from practically all fields of science and technology. Not so long ago no one imagined that it would be possible to enter information using devices other than a keyboard and a computer mouse. Today most of us have exchanged mobile phones for smartphones, devices that have quietly ‘lost’ the classic keyboard, which has been replaced by multi-point touch control. With such rapid development of multimodal interaction, in a dozen or so years (or maybe in a few) the brain-computer interface could become the most important and common form of interaction with electronic devices. Nevertheless, before this happens, it is worth considering whether we can already communicate in a more natural and intuitive way. This question also follows from our expectations. Who has not dreamt of a simpler and more comfortable way to operate a smartphone when traveling by metro in the morning rush hour with only one free hand? Moreover, it should be emphasized that the problem is socially significant, especially for communication with disabled people for whom eye movements are the only means of transmitting information.

If we can recognize the direction of sight using eye tracking, it is worth using the eyes to control the device. Unfortunately, the effective use of eye tracking on mobile devices is problematic. The question arises whether we can correctly and effectively recognize the direction of sight from a smartphone camera image, especially when the image is captured by a single camera that is not a professional one.

1.2 The Aim of the Article

The aim of this paper is to develop a method of

control in multimodal interaction by using eye

tracking based on the recognition of the eye image

from one camera of the mobile device. The key

method for recognizing the direction of looking in

our solution is an appropriate template matching

method. An additional goal of the work was

therefore to check which method of template

matching is best suited to our solution.

Kwiatkowska, A. and Sawicki, D.

Eye Tracking as a Method of Controlling Applications on Mobile Devices.

DOI: 10.5220/0006837003730380

In Proceedings of the 15th International Joint Conference on e-Business and Telecommunications (ICETE 2018) - Volume 1: DCNET, ICE-B, OPTICS, SIGMAP and WINSYS, pages 373-380

ISBN: 978-989-758-319-3

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 EYE TRACKING AND ITS

APPLICATIONS IN

MULTIMODAL INTERACTION

In recent years, many methods have been developed

to track the movements of the eyeballs. Two

approaches for recording eye movements are worth

mentioning: techniques for monitoring eye position

in relation to the head and methods for determining

the orientation of the eye in space (or in relation to

the selected reference point). The latter is commonly

used to identify elements of the visual scene, such as

graphic objects in interactive applications (Young

and Sheena, 1975).

There are many methods of measuring eye movement. The most commonly used include Electro-OculoGraphy (EOG), Photo-OculoGraphy (POG) and Video-OculoGraphy (VOG), Purkinje imaging, and video-based systems that combine pupil and corneal reflection. The book (Duchowski, 2007) and the paper (Patel and Panchal, 2014) are interesting reviews of the methods and techniques used in eye tracking.

Oculography is used in many areas of human

activity - from medicine and psychology, through

marketing, education and sports to entertainment. In

recent years, eye tracking technology has also

become popular among engineers working on

human-computer interaction (HCI), particularly in

multimodal HCI. A survey of different methods of

communications can be found in (Jaimes and Sebe,

2007). In the multimodal HCI, oculography is often

used in equipment dedicated to people with

disabilities, who, thanks to that, can communicate

using the eyesight (Strumiłło and Pajor, 2012).

In nonmedical applications of multimodal interaction, there have been many attempts to replace the pointing device with eye tracking. In (Al-Rahayfeh and Faezipour, 2013) the authors presented a survey of methods used in eye tracking and head position detection. A survey of eye tracking methods in relation to multimodal interaction was also presented in (Singh and Singh, 2012). There are also studies that use a single calibrated camera to analyze head (face and eyes) position in HCI. In (Rougier et al., 2013) the authors calculated a 3D trajectory in real time, using their proposed algorithm to analyze the image from one camera.

There are few publications about controlling a mobile device using eye tracking with the built-in camera of that device. In (Drewes et al., 2007) the authors discussed the possibility of using eye-gaze tracking for mobile phones and showed that it could be an attractive alternative method of interaction. The main idea of using eye gestures to control a mobile device with an additional light source (e.g. IR LED) has been patented (Sztuk et al., 2017). The most advanced oculography project for a mobile device was described in (Krafka et al., 2016). However, in that article the authors used a large-scale dataset for eye image analysis (almost 2.5M frames from over 1450 people) and a convolutional neural network for eye tracking. In our project we focused on geometrical methods and tried to select the most suitable method of template matching.

3 DESIGN ASSUMPTIONS

The aim of the work was to design and implement

an application that allows the user to focus on the

screen of a mobile device and enable control by eye

movements. The following design assumptions were

adopted:

- The front camera of the mobile device will be used, i.e. a camera that captures low-resolution images of at least 640x480 pixels;
- The expected accuracy: the ability to identify several regions of the user’s interest on the device screen, primarily regions associated with left-right and up-down eye movements;
- Recognition will work in real time, since the purpose of the application is to control the device;
- Recognizing the viewing direction (recognizing the selected region on the screen) should be insensitive to changes in lighting conditions;
- Modularity of the application will allow for integration with the operating system of the mobile device.

The majority of commercially available, mid-range

devices of this type are characterized by poor quality

of installed front camera. Therefore it is very

important to design a graphical user interface (GUI)

that will help to reduce any possible hardware

limitations. On the other hand, the GUI must be

intuitive and unambiguous. This will be important if

the application is used by the disabled. Therefore we

have adopted the following assumptions regarding

GUI preparation:

- The graphical user interface should be as simple as possible and unambiguous;
- We should use simple, unambiguous geometric forms instead of complicated graphics;
- The individual components of the GUI should be arranged so as to make navigation by eye movement easier;
- Colors should be selected so as to facilitate navigation. If possible, the colors should match generally accepted conventions (e.g. green is usually associated with acceptance). Up to 4 different colors will be used.

SIGMAP 2018 - International Conference on Signal Processing and Multimedia Applications

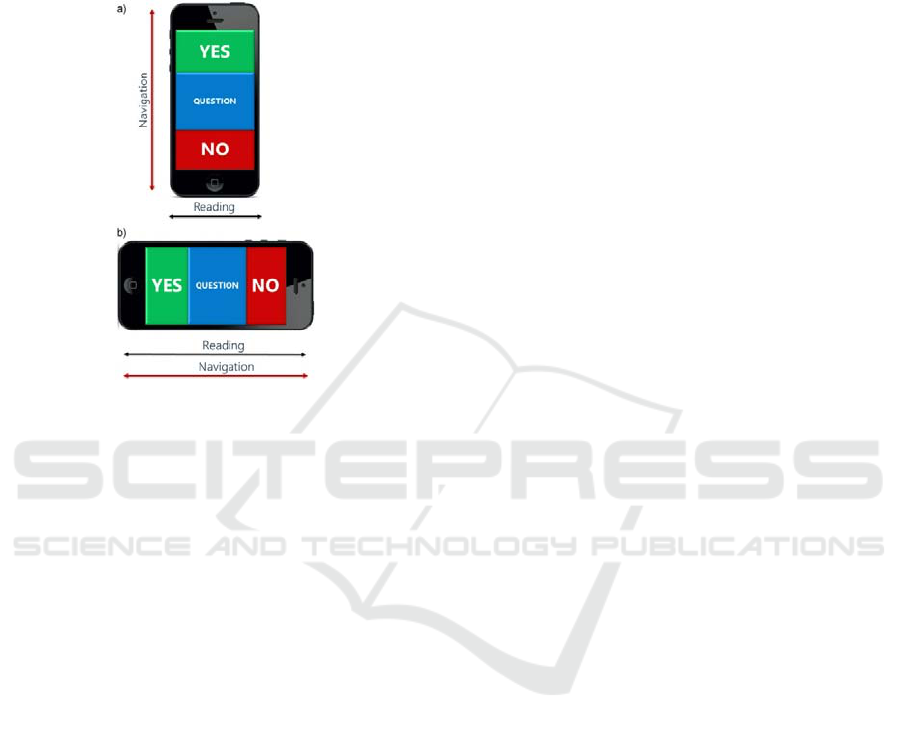

Figure 1: GUI and layout: a) vertical, b) horizontal.

Initially, two GUI prototypes were considered: a vertical (Fig.1.a) and a horizontal (Fig.1.b) layout of the components. In the case of the horizontal layout (Fig.1.b) there is a concern that the participant will move the eyes along the text while reading it, which can interfere with the navigation of the application. Applying control in a direction perpendicular to the reading direction (Fig.1.a) could prevent this. However, very short sentences are used in the proposed application, so this problem should not matter. At the same time, eye movement in one plane (left-right) may be more convenient for the user. Moreover, recognizing vertical changes in the eye's position is much more difficult: the eyelids partially cover the iris, which leads to additional errors when the camera is of low resolution. We conducted preliminary tests of how these errors affect obtaining a correct eye image. The tests showed that effective operation of our solution can be achieved practically only in the horizontal layout.

It should be noted that real applications of such a GUI require a series of test screens, which would allow the user to become familiar with the operation of the application and to practice using it. The user should also be able to choose one of the considered layouts according to their habits and personal preferences.

4 IMPLEMENTATION.

ALGORITHM OF THE EYE

TRACKING

An Android smartphone was chosen as the mobile device because of its prevalence. The application was developed in the Android Studio environment, using the Open Source Computer Vision Library (OpenCV, 2014). This choice ensures modularity of the application and easy integration into the operating system.

The image retrieved from the mobile device's

camera is processed and analyzed to determine the

direction of the sight. The designated direction of

sight allows for determining the area of the screen

that the user is looking at. On this basis, the

appropriate procedure of control is started. The

application for image processing and analysis

performs consecutively the following functions:

1. Face Detection in the Image. We have used the

Viola-Jones method (Viola and Jones, 2001),

which is a cascade classifier based on Haar

features. This analysis allows for focusing on the

selected features of the image, abandoning the

processing of some information (such as pixel

brightness) in later stages of the algorithm.

Moreover, the use of cascade classifiers allows

for eliminating areas where there is no face,

effectively and quickly. The result of the

detection is a rectangle covering the face.

2. Determination of the Proper ROI (Region of

Interest). We are looking for a rectangular area

in which the eyes are located. Based on the

known geometrical features of the face (size of

elements and proportions), areas of the face

where there are no eyes, are eliminated. Then,

the rectangle containing the eyes is divided into

two parts, so that the image can be processed

independently for the left and right eye.

3. Determination of the Pupil in the Eye ROI.

The eye image is extracted from the ROI using

Haar's classifier. Then the darkest point and its

dark surrounding forming the pupil is sought in

the image of the eye.

4. Analysis of the Pupil Position. The position of

the pupil is monitored. Changes in position mean

that the eyes are turned towards another

direction. We have experimentally determined

the dependencies between recorded changes in

pupil position and screen areas, which are related

to the corresponding viewing directions. An

identified visual stop on a specific screen area

triggers the appropriate control procedure.

Analysis of change in the position of the pupil is

carried out using the method of template

matching.
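Steps 2 and 3 above can be illustrated with a minimal sketch in plain Python. It assumes that a face rectangle has already been found (for example by a Haar cascade detector) and that the grayscale frame is available as a list of rows of 0..255 values. The function names `eye_rois` and `darkest_point`, the face proportions (an eye band starting at one quarter of the face height and spanning three tenths of it) and the 3x3 neighbourhood are illustrative assumptions, not values taken from the described application.

```python
from typing import List, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height)
Gray = List[List[int]]            # rows of 0..255 intensities


def eye_rois(face: Rect) -> Tuple[Rect, Rect]:
    """Cut a horizontal eye band out of the face rectangle and halve it.

    The 1/4 and 3/10 proportions are assumed for illustration only.
    """
    x, y, w, h = face
    band_y = y + h // 4          # skip the forehead
    band_h = (3 * h) // 10       # band expected to contain both eyes
    half = w // 2
    return (x, band_y, half, band_h), (x + half, band_y, w - half, band_h)


def darkest_point(image: Gray, roi: Rect) -> Tuple[int, int]:
    """Return the centre (x, y) of the darkest 3x3 neighbourhood in roi."""
    x0, y0, w, h = roi
    best_sum, best_xy = None, (x0, y0)
    for cy in range(y0 + 1, y0 + h - 1):
        for cx in range(x0 + 1, x0 + w - 1):
            total = sum(image[cy + dy][cx + dx]
                        for dy in (-1, 0, 1) for dx in (-1, 0, 1))
            if best_sum is None or total < best_sum:
                best_sum, best_xy = total, (cx, cy)
    return best_xy


# Synthetic 20x20 frame: bright everywhere, one dark 3x3 "pupil" per eye.
frame = [[200] * 20 for _ in range(20)]
for px, py in ((4, 7), (14, 8)):
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            frame[py + dy][px + dx] = 10

first_eye, second_eye = eye_rois((0, 0, 20, 20))
pupils = (darkest_point(frame, first_eye), darkest_point(frame, second_eye))
```

Summing a small neighbourhood instead of taking a single darkest pixel makes the search less sensitive to isolated dark pixels, which matches the idea of looking for the darkest point together with its dark surrounding.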

The analysis of the relationship between the position

of the pupil (recognized) and the direction of

looking is very important. It is independent of the

individual human characteristics – each of us moves

the eyeball in the same way to direct the eyesight in

the proper direction. This relationship allows for

performing a simple calibration of the device by

each user, taking into account the individual way of

holding the device.
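A simple per-user calibration of this kind could look like the following sketch. It assumes that the horizontal pupil position (in pixels, relative to the eye ROI) is recorded a few times while the user looks at each screen region, and that a later position is assigned to the region with the nearest calibrated value. The names `calibrate` and `classify`, the region labels and the sample values are hypothetical and only serve as an illustration.

```python
from typing import Dict, List


def calibrate(samples: Dict[str, List[float]]) -> Dict[str, float]:
    """Average the pupil x-positions recorded while looking at each region."""
    return {label: sum(xs) / len(xs) for label, xs in samples.items()}


def classify(pupil_x: float, calibration: Dict[str, float]) -> str:
    """Return the region whose calibrated position is closest to pupil_x."""
    return min(calibration, key=lambda label: abs(pupil_x - calibration[label]))


# Hypothetical calibration recordings for one user and one way of holding the device.
calibration = calibrate({
    "left": [11.0, 12.0, 13.0],
    "centre": [18.0, 18.0, 18.0],
    "right": [24.0, 25.0, 26.0],
})

looking_at = [classify(x, calibration) for x in (13.5, 19.0, 23.0)]
```

Because the nearest calibrated position is used, the sketch does not depend on whether the camera image is mirrored; repeating the calibration is enough when the user changes the way of holding the device.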

5 THE PROTOTYPE AND

USABILITY TESTS

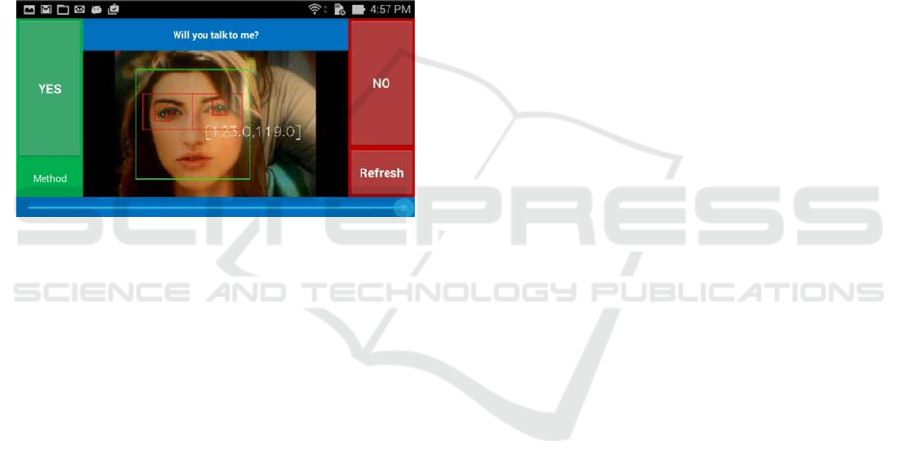

Figure 2: The screen of application. The rectangle of face

and ROI of the eyes are marked. The current coordinates

of the identified left eye pupil are also marked.

After initial testing in Android Studio and Android Device Monitor, the developed software was installed on the test smartphone. The final version of the screen is shown in Figure 2. The question for the user is placed above the camera image; the answers are placed to its left (the positive answer) and to its right (the negative answer). We decided that the user has to gaze at a button for a certain period of time in order to activate (confirm) it. At the bottom of the screen there is a bar for changing the geometric method of shape matching. This feature was used in the optimization step of the application. Applying the proposed control/selection scheme in the operating system amounts to implementing a binary decision tree. Such control is not always the most effective, but it makes it possible to implement any solution.
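The dwell-based confirmation and the binary decision tree can be sketched as follows. This is a minimal illustration under stated assumptions: the class `DwellSelector`, the function `choose`, the 1-second dwell time and the menu contents are hypothetical, since the text only states that the gaze must rest on a button "for a certain duration of time".

```python
from typing import Dict, List, Optional, Union

Tree = Union[str, Dict[str, "Tree"]]


class DwellSelector:
    """Report a button once the gaze has stayed on it long enough."""

    def __init__(self, dwell_seconds: float = 1.0) -> None:  # assumed value
        self.dwell_seconds = dwell_seconds
        self._region: Optional[str] = None
        self._since = 0.0
        self._fired = False

    def update(self, region: Optional[str], now: float) -> Optional[str]:
        """Feed the region currently gazed at; return it once when confirmed."""
        if region != self._region:          # gaze moved: restart the timer
            self._region, self._since, self._fired = region, now, False
            return None
        held = now - self._since
        if region in ("left", "right") and not self._fired and held >= self.dwell_seconds:
            self._fired = True              # do not fire again for the same fixation
            return region
        return None


def choose(tree: Tree, answers: List[str]) -> Tree:
    """Walk a binary decision tree using a sequence of left/right answers."""
    node = tree
    for answer in answers:
        node = node[answer]
    return node


selector = DwellSelector(dwell_seconds=1.0)
stream = [("left", 0.0), ("left", 0.5), ("left", 1.0), ("left", 1.5),
          ("centre", 2.0), ("right", 2.1), ("right", 3.2)]
events = [selector.update(region, t) for region, t in stream]

menu = {"left": {"left": "call", "right": "message"},
        "right": {"left": "camera", "right": "cancel"}}
picked = choose(menu, ["left", "right"])
```

With two answers per screen, a selection among n functions needs about log2(n) confirmed fixations, which is why such control is universal but not always the most effective.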

5.1 Tests of the Main Function in the

Proposed Solution

The main function of the developed application (i.e., control by eye movement) was tested in an experiment with a small group of 6 participants. The experiment was designed on the basis of standard tests (Fawcett, 2006; Powers, 2011), which give an objective assessment of interface quality using standard evaluation criteria. The task was to answer a series of questions using the new eye tracking solution. Each participant had to answer 40 questions: 10 answers given correctly using the left field, 10 answers given intentionally incorrectly using the left field, 10 correct answers using the right field, and 10 intentionally incorrect answers using the right field. The order of the questions was random and the program counted the results as True Positive, False Negative, True Negative and False Positive, respectively. Before the experiment, we explained how the device works and each participant had the opportunity to work with it for a few minutes. Then we explained the rules of the experiment. The results of all participants are presented in Table 1. After the experiment we discussed the new solution with the participants. These were, of course, subjective assessments, but the discussion was very important for our conclusions. Everyone emphasized that the solution is convenient, easy to use and user-friendly.
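The rates reported in Table 1 follow from the counted outcomes in the usual way (Fawcett, 2006): TPR = TP / (TP + FN), TNR = TN / (TN + FP) and ACC = (TP + TN) / (TP + FN + TN + FP), with the counts of both fields added together. The short sketch below reproduces the values for participant 1 and the SD of the left-field TP column; the function name `rates` is an assumption, and the use of the population standard deviation is inferred from the fact that it reproduces the tabulated 0.37.

```python
from statistics import mean, pstdev
from typing import Tuple

Counts = Tuple[int, int, int, int]  # (TP, FN, TN, FP)


def rates(left: Counts, right: Counts) -> Tuple[float, float, float]:
    """Return (TPR, TNR, ACC) for counts pooled over the left and right field."""
    tp, fn, tn, fp = (l + r for l, r in zip(left, right))
    tpr = tp / (tp + fn)
    tnr = tn / (tn + fp)
    acc = (tp + tn) / (tp + fn + tn + fp)
    return tpr, tnr, acc


# Participant 1 in Table 1: left field (20, 0, 13, 7), right field (14, 6, 20, 0).
tpr, tnr, acc = rates((20, 0, 13, 7), (14, 6, 20, 0))
# Unrounded values: 0.85, 0.825, 0.8375 (reported as 0.85, 0.83, 0.84).

# Left-field TP counts of the six participants.
left_tp = [20, 20, 20, 19, 20, 20]
left_tp_mean = mean(left_tp)     # about 19.83
left_tp_sd = pstdev(left_tp)     # about 0.37 (population standard deviation)
```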

The experiments showed that the introduced solution allows for visual control, so the aim has been achieved. However, the designed mechanism is not free of faults. The main one is low resistance to head movements recorded by the camera. It is worth noting that this is relative head movement: the problem occurs both when the device is held steady but the user moves their head during operation, and when the head is motionless but the user moves the device. In practice both cases can occur simultaneously. In principle, such a problem was expected in our solution.

On the other hand, the stability problem did not hinder the work. It can be avoided by paying special attention to maintaining a stable position. After a few attempts, all participants mastered the basics of control and learned how to work properly and avoid interfering movements.

5.2 The Impact of the Face Lighting

We have tested several different lighting options.

The application was launched during the day - in

daylight, at twilight - with two types of lighting

(both natural and artificial) and in the evening - with

full artificial lighting.

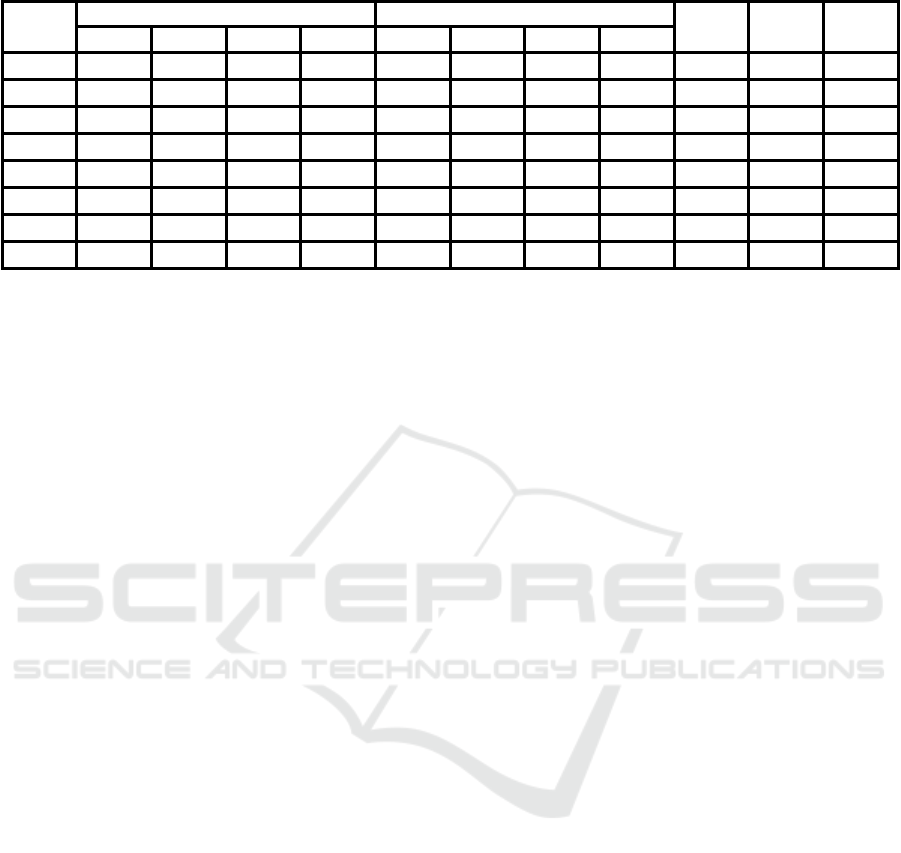

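Table 1: Results of the tests: True Positive Rate (TPR), True Negative Rate (TNR), Accuracy (ACC), Standard Deviation (SD).

| Participant | Left TP | Left FN | Left TN | Left FP | Right TP | Right FN | Right TN | Right FP | TPR | TNR | ACC |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 20 | 0 | 13 | 7 | 14 | 6 | 20 | 0 | 0.85 | 0.83 | 0.84 |
| 2 | 20 | 0 | 14 | 6 | 11 | 9 | 18 | 2 | 0.78 | 0.8 | 0.79 |
| 3 | 20 | 0 | 11 | 9 | 12 | 8 | 20 | 0 | 0.8 | 0.78 | 0.79 |
| 4 | 19 | 1 | 14 | 6 | 14 | 6 | 20 | 0 | 0.83 | 0.85 | 0.84 |
| 5 | 20 | 0 | 13 | 7 | 12 | 8 | 20 | 0 | 0.8 | 0.83 | 0.81 |
| 6 | 20 | 0 | 12 | 8 | 13 | 7 | 19 | 1 | 0.83 | 0.78 | 0.8 |
| Mean | 19.83 | 0.17 | 12.83 | 7.17 | 12.67 | 7.33 | 19.50 | 0.50 | 0.81 | 0.81 | 0.81 |
| SD | 0.37 | 0.37 | 1.07 | 1.07 | 1.11 | 1.11 | 0.76 | 0.76 | | | |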

Experiments have shown that the application

worked correctly in practically all tested conditions

and had a high resistance to changes of lighting. The

problem can only be caused by reflections of the

light sources, visible on the iris. Such elements

could be wrongly interpreted by the detection

algorithm, which can lead to a reduction in

precision. However, this did not cause the program

to work incorrectly. On the other hand, users can

influence the head position with respect to external

lighting and easily avoid such errors.

5.3 The Impact of the Background in

the Field of View

The tests performed did not show problems with proper face recognition. We also tested the case in which oval-shaped elements appeared close to the human face in the camera’s field of view. A background with geometric figures similar to the shape of a face degrades the performance of the recognition algorithm: background objects are treated as another face, causing the application to malfunction. The wrong background can therefore strongly affect the proper functioning of our program. However, this problem does not occur in a typical environment (without oval-shaped elements or images of faces close to the user’s face in the camera’s field of view).

5.4 Usage of the Developed Application

in Classical Oculography

Although use in traditional oculography was not an assumption of this work, a basic analysis was also made in this field. A test stand was prepared to test the eye tracking device. A rectangular image was placed on the wall in front of the user's seat. In this image four extreme areas (rectangles in the corners of the image) and one central area were marked. A smartphone with a built-in front camera was placed on a special stand and set at the user's eye level, which eliminated the interference caused by the shaking of the user's hand. The mobile device was connected to a notebook, allowing for real-time data collection.

In the first stage of the experiment, the participant was asked to concentrate on the central point, to relax and to prepare for a change of focus. Then, the participant had to move their gaze along consecutive points, stopping for at least ten seconds at each indicated place (for device calibration). Finally, the participant was asked to look at a randomly selected characteristic point within the test plane. Participants were informed that they should not move their head during the test.

Unfortunately, the repeatability of the results was very low. It was not possible to completely eliminate head movements, which resulted in significant deviations during subsequent attempts to complete the task. The difficulty lay in defining a universal reference point for head positioning. This problem could probably be eliminated by using an additional camera or a phone with a higher quality camera. Geometric stabilization methods could also be added in order to reduce the impact of dynamic disturbances.

On the other hand, our experiments showed that the proposed system can be used for classical oculography. However, the obtained results are not accurate enough to allow the designed system to compete in this area with solutions available on the market.

6 TESTS OF THE METHOD OF

TEMPLATE MATCHING AND

ITS IMPACT ON THE

OPERATION OF THE DEVICE

Analysis of the pupil position has been carried out

using the method of template matching. This is a key

method for determining the viewing direction in our

solution.

There are many methods of template matching, based on different similarity measures of images (Brunelli, 2009). In our tests we used 3 different methods with the corresponding measures, each in a normalized and a non-normalized version. In this way, we carried out tests with 6 similarity measures of images, given by formulas (1)-(6). In all formulas $T(x,y)$ denotes the intensity/luminance of point $(x,y)$ in the template and $I(x,y)$ denotes the intensity/luminance of point $(x,y)$ in the image. The size of the template is defined by $w$ and $h$.

Normalization in the template matching helps to

reduce the impact of disturbing factors such as non-

uniform illumination or changes in the saturation of

the image. We decided to use also the normalized

versions, although we tried to provide the right

conditions - using the conclusions from the first part

of the tests.

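Sum of Squared Differences (SSD):

$$R(x,y) = \sum_{x',y'} \bigl( T(x',y') - I(x+x',y+y') \bigr)^2 \tag{1}$$

Normalized Sum of Squared Differences (SSDN):

$$R(x,y) = \frac{\sum_{x',y'} \bigl( T(x',y') - I(x+x',y+y') \bigr)^2}{\sqrt{\sum_{x',y'} T(x',y')^2 \cdot \sum_{x',y'} I(x+x',y+y')^2}} \tag{2}$$

Cross Correlation (CC):

$$R(x,y) = \sum_{x',y'} T(x',y') \cdot I(x+x',y+y') \tag{3}$$

Normalized Cross Correlation (CCN):

$$R(x,y) = \frac{\sum_{x',y'} T(x',y') \cdot I(x+x',y+y')}{\sqrt{\sum_{x',y'} T(x',y')^2 \cdot \sum_{x',y'} I(x+x',y+y')^2}} \tag{4}$$

Coefficient Correlation (CoC):

$$R(x,y) = \sum_{x',y'} T'(x',y') \cdot I'(x+x',y+y') \tag{5}$$

where:

$$T'(x',y') = T(x',y') - \frac{1}{w \cdot h} \cdot \sum_{x'',y''} T(x'',y'')$$

$$I'(x+x',y+y') = I(x+x',y+y') - \frac{1}{w \cdot h} \cdot \sum_{x'',y''} I(x+x'',y+y'')$$

Normalized Coefficient Correlation (CoCN):

$$R(x,y) = \frac{\sum_{x',y'} T'(x',y') \cdot I'(x+x',y+y')}{\sqrt{\sum_{x',y'} T'(x',y')^2 \cdot \sum_{x',y'} I'(x+x',y+y')^2}} \tag{6}$$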
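The six measures can be written down in a few lines of plain Python, which makes the differences between them easy to inspect. The sketch below is an illustration, not the code of the described application: the helper names and the tiny test image are assumptions, and zero-energy windows are simply treated as non-matches. In OpenCV the same six measures appear to correspond to the `TM_SQDIFF`, `TM_SQDIFF_NORMED`, `TM_CCORR`, `TM_CCORR_NORMED`, `TM_CCOEFF` and `TM_CCOEFF_NORMED` modes of `matchTemplate`.

```python
from math import sqrt
from typing import Callable, Dict, List, Sequence, Tuple

Gray = List[List[float]]
Measure = Callable[[Sequence[float], Sequence[float]], float]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


def _centered(v: Sequence[float]) -> List[float]:
    m = sum(v) / len(v)                      # mean over the w*h window
    return [p - m for p in v]


def _norm(t: Sequence[float], i: Sequence[float]) -> float:
    return sqrt(_dot(t, t) * _dot(i, i))


def ssd(t, i):                               # formula (1)
    return sum((p - q) ** 2 for p, q in zip(t, i))


def ssdn(t, i):                              # formula (2)
    n = _norm(t, i)
    return ssd(t, i) / n if n else float("inf")


def cc(t, i):                                # formula (3)
    return _dot(t, i)


def ccn(t, i):                               # formula (4)
    n = _norm(t, i)
    return _dot(t, i) / n if n else 0.0


def coc(t, i):                               # formula (5)
    return _dot(_centered(t), _centered(i))


def cocn(t, i):                              # formula (6)
    tc, ic = _centered(t), _centered(i)
    n = _norm(tc, ic)
    return _dot(tc, ic) / n if n else 0.0


def best_match(image: Gray, template: Gray, measure: Measure,
               higher_is_better: bool) -> Tuple[int, int]:
    """Scan all window positions and return the (x, y) with the best score."""
    h, w = len(template), len(template[0])
    t = [v for row in template for v in row]
    best_score, best_xy = None, (0, 0)
    for y in range(len(image) - h + 1):
        for x in range(len(image[0]) - w + 1):
            win = [image[y + j][x + k] for j in range(h) for k in range(w)]
            score = measure(t, win)
            if best_score is None or (score > best_score if higher_is_better
                                      else score < best_score):
                best_score, best_xy = score, (x, y)
    return best_xy


# Bright 5x5 image with a small dark pattern; the template is cut out at (2, 2).
image = [[9, 9, 9, 9, 9],
         [9, 9, 9, 9, 9],
         [9, 9, 1, 5, 9],
         [9, 9, 5, 9, 9],
         [9, 9, 9, 9, 9]]
template = [[1, 5], [5, 9]]

MEASURES: Dict[str, Tuple[Measure, bool]] = {
    "SSD": (ssd, False), "SSDN": (ssdn, False), "CC": (cc, True),
    "CCN": (ccn, True), "CoC": (coc, True), "CoCN": (cocn, True),
}
found = {name: best_match(image, template, m, hib)
         for name, (m, hib) in MEASURES.items()}
```

In this toy example five measures locate the pattern at (2, 2), while the plain cross correlation (3) prefers a uniformly bright window at (0, 0). This illustrates the sensitivity of non-normalized correlation to areas of high intensity; it says nothing about how the measures rank on real eye images.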

At first the test of device usability was performed.

The results and interviews with participants allowed

for determining the impact of various factors on the

operation of the device. In the test of template

matching techniques, we tried to create such

conditions (background, uniform lighting) so that

only the method of matching influenced the final

result.

The test was analogous to the previous one (described in section 5.1), but 6 other participants took part in it. As before, the order of the questions was random and the program counted the results as True Positive, False Negative, True Negative, and False Positive. Before the experiment, we explained how the device works and each participant had the opportunity to work with it for a few minutes. Then we explained the rules of the experiment. Since we tested 6 different methods of template matching, each participant repeated the test 6 times. The results for the 6 methods, aggregated over all participants, are presented in Table 2.

The analysis of the results allows for the formulation of several observations. All tested methods give the possibility of correct operation (i.e. TPR, TNR and ACC at the level of about 80% or higher).

It is generally assumed that SSD and SSDN are

the most commonly used methods (due to

computational simplicity), although they are also the

least sensitive. The one advantage of the SSD/SSDN

measure is correct operation with Gaussian noise

(but only Gaussian). The other advantage is that the

signal energy (the sum of squares in a specific area)

is taken into account. Correlation measures may be

SIGMAP 2018 - International Conference on Signal Processing and Multimedia Applications

378

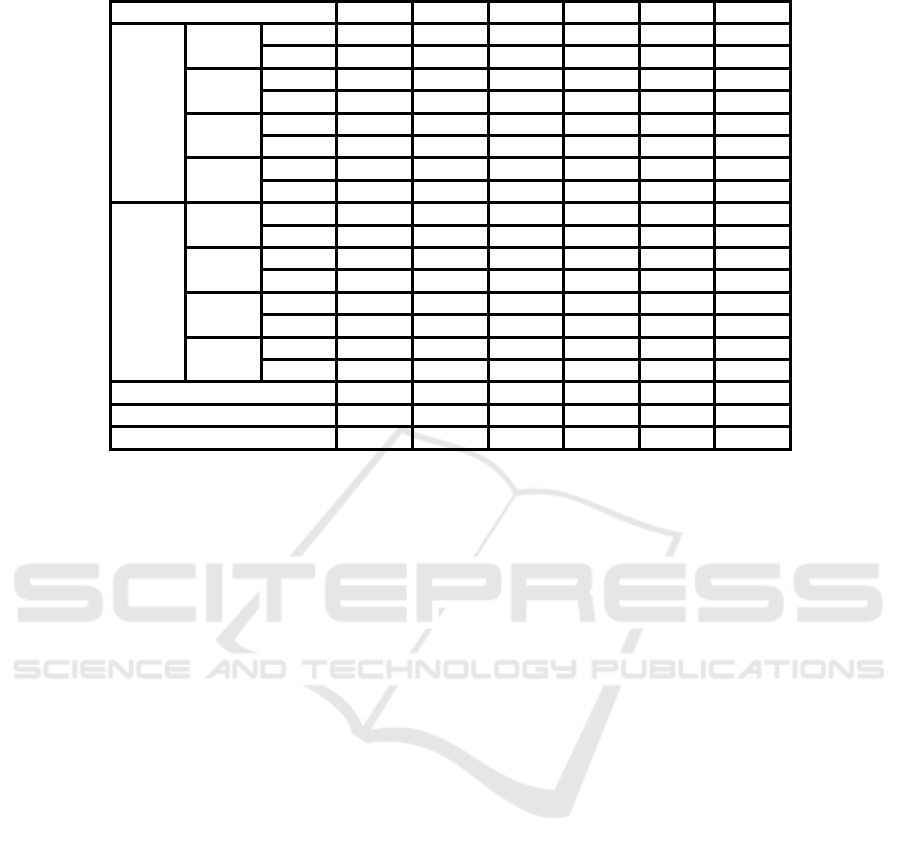

Table 2: Results of tests True Positive Rate (TPR), True Negative Rate (TBR), Accuracy (ACC), Standard Deviation (SD).

SSD SSDN CC CCN CoC CoCN

Left Field

TP

Mean 19.17 19.00 19.17 19.00 19.17 19.83

SD 0.69 0.82 0.69 0.82 0.37 0.37

FN

Mean 0.83 1.00 0.83 1.00 0.83 0.17

SD 0.69 0.82 0.69 0.82 0.37 0.37

TN

Mean 13.33 12.50 14.33 13.50 14.17 16.67

SD 1.11 1.50 1.80 1.71 1.86 0.94

FP

Mean 7.00 7.50 5.67 6.50 5.83 3.33

SD 1.00 1.50 1.80 1.71 1.86 0.94

Right Field

TP

Mean 13.50 12.83 13.83 14.00 13.50 13.83

SD 1.12 1.34 1.57 1.29 1.71 1.34

FN

Mean 6.50 7.17 6.17 6.00 6.50 6.17

SD 1.12 1.34 1.57 1.29 1.71 1.34

TN

Mean 19.00 19.00 19.00 18.83 18.67 19.33

SD 0.82 0.82 0.82 0.90 0.75 0.75

FP

Mean 1.00 1.00 1.00 1.17 1.33 0.83

SD 0.82 0.82 0.82 0.90 0.75 0.69

TPR 82% 80% 83% 83% 82% 84%

TNR 80% 79% 83% 81% 82% 90%

ACC 80.9% 79.2% 82.9% 81.7% 81.9% 86.9%

too sensitive to local areas of very low or very high

intensity. In the case of our solution, both

advantages are unusable. The image of the eye is

only slightly noisy, similar images are compared,

taken with the same camera in short intervals of

time.

Normalization helped only in one case (CoC/CoCN). This suggests that the final result could have been influenced by additional factors that are difficult to eliminate, e.g. reflection of light from the surface of the eye. If so, simpler methods (e.g. SSD/SSDN) should give slightly worse results, and this is indeed confirmed by the results.

The best effect was obtained when using CoCN

measure. It is normalized and the most advanced

measure among the tested ones. The computational

complexity of this method did not affect the work

comfort of our solution.

The group of participants was small. However, it

was enough to check the acceptance of the solution,

and confirm the method of template matching.

7 SUMMARY

The aim of this study was to develop a method of controlling an application running on a mobile device by eye movements.

A method has been developed for determining the point on the screen of the mobile device on which the user's eyes are focused. A graphical user interface (GUI), specially designed to be controlled using only eye movements, has been proposed. The application has been designed for mobile devices with the Android operating system. We have conducted a series of tests that confirmed the assumptions and the correct operation of the developed program. We have also analyzed the impact of external factors that could affect the effectiveness of the solution. We have investigated, among other things, the impact of lighting and background on the operation of the camera and the influence of head position on the eye detection algorithm. We have also tested the use of the proposed solution in classic oculography.

Additionally, we have tested 6 methods of template matching. Such a method is required in our solution to analyze the pupil position and, in consequence, to determine the viewing direction. All tested methods allow for correct operation. However, the most popular and simplest methods (SSD/SSDN) gave the worst results, and the CoCN method turned out to be the best in our solution.

The conducted research demonstrates that it is possible to implement an eye tracking algorithm on a mobile device and to control the functions of a mobile phone using only eye movement. However, the tests have also shown that the low precision of eye tracking does not allow the proposed solution to be used for classic oculography. It is worth emphasizing that the problem addressed here has great application potential. Considering the rapid development of digital technology, it can be expected that similar solutions will become standard in the near future.

We plan to improve the efficiency of eye tracking by adding image stabilization algorithms. However, running such algorithms in real time requires more computing power. The binary decision tree used in our solution is not the most convenient way of controlling a device. However, preliminary tests have shown that we can correctly identify more areas by eye tracking. We are considering future trials with a quadtree, an octree or sufficiently large icons.

REFERENCES

Al-Rahayfeh, A., Faezipour, M., 2013. Eye tracking and

head movement detection: a state-of-art survey. IEEE

Journal of Translational Engineering in Health and

Medicine. Vol. 1, (2013) doi:

10.1109/JTEHM.2013.2289879

Brunelli, R., 2009. Template Matching Techniques in

Computer Vision: Theory and Practice. John Wiley &

Sons, Ltd.

Drewes, H., De Luca, A., Schmidt, A., 2007. Eye-gaze

interaction for mobile phones, In: Proc. of the 4th

International Conference on Mobile Technology,

Singapore 2007. 364-371. doi:

10.1145/1378063.1378122

Duchowski, A., 2007. Eye Tracking Methodology: Theory and Practice, 2nd ed. London: Springer-Verlag.

Fawcett, T., 2006. An Introduction to ROC Analysis.

Pattern Recognition Letters. Vol. 27 (8), 861-874.

doi:10.1016/j.patrec.2005.10.010

Jaimes, A., Sebe, N., 2007. Multimodal human computer

interaction: a survey. Computer Vision and Image

Understanding, Vol.108, issues 1-2, (Special Issue on

Vision for Human-Computer Interaction) 116-134.

doi:10.1016/j.cviu.2006.10.019

Krafka, K., Khosla, A., Kellnhofer, P., Kannan, H., Bhandarkar, S., Matusik, W., Torralba, A., 2016. Eye Tracking for Everyone. In: Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 27-30 2016, Las Vegas, Nevada, USA, 2176-2184. doi: 10.1109/CVPR.2016.239

OpenCV 2.4.10.0 documentation. 2011-2014.

http://docs.opencv.org/2.4.10/index.html (retrieved

March 1, 2018)

Patel, R.A., Panchal, S.R., 2014. Detected Eye Tracking

Techniques: And Method Analysis Survey.

International Journal of Engineering Development

and Research, Vol. 3, Issue 1,. 168-175.

Powers, D.M.W., 2011. Evaluation: From Precision,

Recall and F-Measure to ROC, Informedness,

Markedness & Correlation. Journal of Machine

Learning Technologies. Vol. 2 (1), 37-63

Rougier, C., Meunier, J., St-Arnaud, A., Rousseau, J., 2013. 3D head tracking for fall detection using a single calibrated camera. Image and Vision Computing. Vol. 31, 246-254.

Singh, H., Singh, J., 2012. Human eye tracking and related

issues: a review. International Journal of Scientific

and Research Publications. Vol. 2, issue 9, (2012) 1-9.

Strumiłło, P., Pajor, T., 2012. A vision-based head

movement tracking system for human-computer

interfacing. In Proc. New trends in audio and

video/signal processing algorithms, architectures,

arrangements and applications (NTAV/SPA), 28-29

September 2012. 143-147

Sztuk, S., Tall, M.H., Lopez, J.S.A., 2017. Systems and

methods of eye tracking control on mobile device.

Patent US9612656B2.

Viola, P., Jones, M., 2001. Rapid Object Detection using a

Boosted Cascade of Simple Features. Computer Vision

and Pattern Recognition (CVPR). Vol. 1, 511-518.

doi: 10.1109/CVPR.2001.990517

Young, R.L., Sheena, D., 1975. Survey of Eye Movement Recording Methods. Behavior Research Methods & Instrumentation. Vol. 7 (5), 397-439.
