Development of an Online-System for Assessing the Progress of Knowledge Acquisition in Psychology Students

Thomas Ostermann¹, Jan Ehlers², Michaela Warzecha³, Gregor Hohenberg³ and Michaela Zupanic²

¹ Chair of Research Methodology and Statistics in Psychology, Witten/Herdecke University, 58448 Witten, Germany

² Chair of Didactics and Educational Research in Health Science, Witten/Herdecke University, 58448 Witten, Germany

³ Centre for IT, Media and Knowledge Management, University of Applied Sciences Hamm, 59063 Hamm, Germany

Keywords: Progress Testing, Online Digital Platform, Knowledge Acquisition.

Abstract: Results of summative examinations most often represent only a snapshot of the knowledge of students over

a part of the curriculum and do not provide valid information on whether long-term retention of

knowledge and knowledge growth take place during the course of studies. Progress testing allows the

repeated formative assessment of students’ functional knowledge and consists of questions covering all

domains of relevant knowledge from a given curriculum. This article describes the development and

structure of an online platform for progress testing in psychology at the Witten/Herdecke University. The

Progress Test Psychology (PTP) was developed in 2015 in the Department of Psychology and

Psychotherapy at Witten/Herdecke University and consists of 100 confidence-weighted true/false items

(sure / unsure / don’t know). The Online-System for implementation of the PTP was developed based on

XAMPP including an Apache Server, a MySQL-Database, PHP and JavaScript. First results of a

longitudinal survey show that the increase in students’ knowledge in the course of studies reliably reflects

the course of the curriculum. Thus, content validity of the PTP could be confirmed. Apart from directly

measuring the long-term retention of knowledge, the use of the PTP in the admission of students applying

for a Master’s program is discussed.

1 INTRODUCTION

Learning and understanding of new educational

content are major goals of academic teaching, which aims

to expand the students’ knowledge base.

Examination of these goals is mainly achieved by

practical, written or oral tests and other examination

formats for the respective learning modules at the

end of a course. Thus, students acquire knowledge

in order to pass the exam (backwash

effect), but may not retain it in the long run

(Leber et al., 2017). The knowledge curves of

students in different topics confirm the approach of

"assessment drives learning" according to Biggs

(2003). Moreover, examination results only

represent a snapshot of a special part of the complete

curriculum and do not give valid information

whether there is a long-term retention of knowledge

over the course of the complete curriculum, as the

content of this course is normally not tested again

(Ferreira et al., 2016).

Educational research turned its attention

to this problem early on by conducting memory

tests to assess retention of knowledge, e.g. in clinical

psychology (Conway et al., 1991, 1997). One of the

modern forms of assessing the retention of

knowledge is progress testing, developed in

the 1990s at the University of Missouri-Kansas City

School of Medicine and Maastricht University in the

Netherlands (Nouns and Georg, 2010). A progress

test is defined as a “repeated assessment of students’

functional knowledge” (Schuwirth and van der

Vleuten, 2012) and consists of questions covering all

domains of relevant knowledge from a given

curriculum. The blueprint of the progress test (PT)

contains the full content of the curriculum, usually

according to a classification matrix of relevant

categories, e.g. organ systems and medical

disciplines. An advantage of the progress test is

that it is designed to test knowledge at graduate

level, so that students should, after graduating,

be able to complete the test at the 100 % level. In

Ostermann, T., Ehlers, J., Warzecha, M., Hohenberg, G. and Zupanic, M.

Development of an Online-System for Assessing the Progress of Knowledge Acquisition in Psychology Students.

DOI: 10.5220/0006892500770082

In Proceedings of the 7th International Conference on Data Science, Technology and Applications (DATA 2018), pages 77-82

ISBN: 978-989-758-318-6

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


Germany, progress tests are used as a means of

formative, so-called low-stakes assessment. With the

increasing use of digital platforms in formative

testing, e.g. in mathematics (Faber et al., 2017) or

engineering (Petrović et al., 2017), authors have also

discussed the embedding of progress testing in a

digital environment (Tio et al., 2016). Heeneman et

al. (2017) found that students’ use of online progress test

feedback, through analysis of patterns and the

formulation and follow-up of learning objectives, is

helpful for further learning. Schaap et al.

(2011) were also able to show that initial learning of

psychology students is positively associated with

good results in the progress test.

This article describes the development and the

structure of an online platform for progress testing in

psychology at Witten/Herdecke University and gives

a first insight into preliminary results.

2 MATERIAL AND METHODS

The Progress Test Psychology (PTP), the first of its kind

in Germany, was established in 2015 in the

Department of Psychology and Psychotherapy at

Witten/Herdecke University and has become an

integral part of the curriculum under the examination

regulations for the Bachelor's Programme in

Psychology and Psychotherapy (Dallüge et al., 2016).

The modular curriculum for the bachelor program

Psychology and Psychotherapy served as a blueprint

for the test design with three methodological modules,

six modules on the basics of psychology and four

health-related modules. The weighting of the test

questions corresponds in content to the weighting of

all modules, so that the increase in knowledge reflects

the actual course of the study.
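A blueprint-weighted test of this kind could be assembled roughly as in the following minimal sketch. The module codes and item counts follow Table 3 and the legend of Figure 5; the pool structure, the function name `draw_test` and the use of random sampling are assumptions made for illustration and are not taken from the ePTP implementation.

```python
import random

# Items per module, taken from the item counts reported in Table 3.
BLUEPRINT = {
    "M-1": 6, "M-2": 15, "M-3": 6,
    "G-1": 6, "G-2": 6, "G-3": 6, "G-4": 6, "G-5": 6, "G-6": 6,
    "A-1": 6, "A-2": 15, "A-3": 10, "A-4": 6,
}


def draw_test(pool, blueprint=BLUEPRINT, seed=None):
    """Draw the blueprint's number of hypotheses from each module's pool.

    `pool` maps a module code to a list of hypothesis identifiers
    (a hypothetical structure, not the ePTP schema).
    """
    rng = random.Random(seed)
    test = []
    for module, n_items in blueprint.items():
        candidates = pool[module]
        if len(candidates) < n_items:
            raise ValueError(f"not enough hypotheses for module {module}")
        test.extend((module, h) for h in rng.sample(candidates, n_items))
    return test


# Hypothetical pool with 20 released hypotheses per module.
pool = {m: [f"{m}/H{i:02d}" for i in range(20)] for m in BLUEPRINT}
test = draw_test(pool, seed=1)
print(len(test))  # 100
```

Because the number of items per module is fixed by the blueprint, every generated test keeps the same module weighting even though the individual hypotheses differ.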

The PTP consists of 100 items in single or

multiple true/false format dealing with thematic

statements from the modules of the curriculum.

Answers are confidence-weighted (see Table 1) with

sure (+2) or unsure (+1) to assess the cumulative

knowledge of students on the cognitive and

metacognitive level. Students can more easily decide whether a

statement is true if they can voice their possible

doubts. In addition, rewarding an accurate

confidence assessment by doubling the achievable points

directs students' attention to monitoring their

knowledge, thus supporting self-directed learning.

Moreover, this construction reveals additional

information on the impact of teaching and exams and

allows for reflection on “not-knowing” (Dutke and

Barenberg, 2015).

Table 1: Scoring scheme using the example of a true hypothesis.

| Answer | Points |
|---|---|
| True (sure) | 2 |
| True (unsure) | 1 |
| Don’t know | 0 |
| False (unsure) | -1 |
| False (sure) | -2 |
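The scoring scheme of Table 1 can be written as a small function. This is a minimal sketch: the function name and argument layout are hypothetical, and the scoring of a false hypothesis as the mirror image of Table 1 is an assumption, since the table only shows the case of a true hypothesis.

```python
# Points for a confidence-weighted true/false answer (Table 1).
CONFIDENCE_POINTS = {"sure": 2, "unsure": 1}


def score_answer(hypothesis_is_true, answer, confidence=None):
    """Return the points for one answer.

    answer: True, False, or None for "don't know".
    confidence: "sure" or "unsure" (ignored for "don't know").
    Assumption: a false hypothesis is scored as the mirror image of Table 1.
    """
    if answer is None:
        return 0
    points = CONFIDENCE_POINTS[confidence]
    return points if answer == hypothesis_is_true else -points


# Reproduce the five rows of Table 1 for a true hypothesis.
for answer, confidence in [(True, "sure"), (True, "unsure"), (None, None),
                           (False, "unsure"), (False, "sure")]:
    print(answer, confidence, score_answer(True, answer, confidence))
```

With 100 items and at most 2 points per item, the maximum sum score under this scheme is 200 points.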

The online PTP (ePTP) is implemented as a web application

which serves as the user interface and manages

access to the database. There are two

target groups or actors to be addressed. The students

are the main actors of the PTP, and most of the

application addresses them. However, the ePTP

needs at least one administrator for implementing the

tests. He or she (or a group of administrators) is

responsible for selecting and releasing hypotheses and for

creating items based on them. Moreover, the

administrator is responsible for creating, locking and

unlocking the user profiles.

Profiles are stored in the database together with the current

term of the student. Response time is an important

indicator of whether students are seriously working

on the progress test (Osterberg et al., 2006).

Participants with a processing time of less than 15

minutes should be excluded from the calculation of

the average values for feedback.
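The exclusion rule can be illustrated with a minimal sketch. The 15-minute threshold comes from the text; the data layout, the function name and the example values are made up for illustration.

```python
from statistics import mean

MIN_MINUTES = 15  # threshold stated in the text


def peer_group_mean(results, min_minutes=MIN_MINUTES):
    """Mean score of participants who worked at least `min_minutes`.

    `results` is a list of (score, processing_time_in_minutes) pairs;
    this data layout is an assumption for the example.
    """
    valid = [score for score, minutes in results if minutes >= min_minutes]
    if not valid:
        return None
    return mean(valid)


# Made-up example data: the second participant finished in 9 minutes
# and is therefore left out of the average.
results = [(14, 42.0), (30, 9.0), (10, 35.5), (12, 15.0)]
print(peer_group_mean(results))  # 12
```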

The administrator enables the students to access

the ePTP. Once the ePTP is activated it can be

completed by the student. After completion, the

results can directly be accessed from the student and

the administrator. In addition, the individual

progress in knowledge acquisition a) within the

course of time and b) compared to the complete

group of students of the same term is presented

using statistical routines implemented in the ePTP.

This immediate feedback is a major benefit of the

online PTP for the students’ further learning

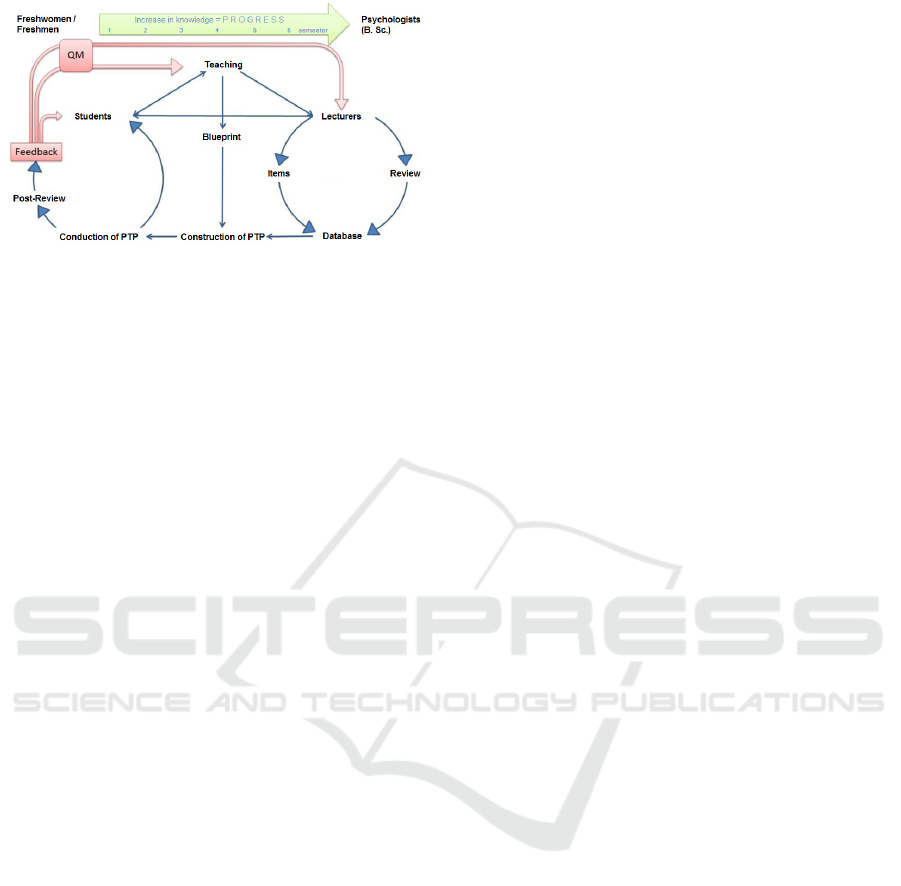

strategies. The resulting process flow is illustrated

in Figure 1.

Figure 1: Process flow and responsibilities in the ePTP.


Thus, the following fundamental requirements

have to be met by the web application and the

database:

• Creation of a set of hypotheses

• Automatic generation of the PTP

• Implementation of profiles and distribution

of access rights

• Data acquisition: storage of the student

responses

• Automated statistical analysis

• Graphical display of the results
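To make these requirements more concrete, the following sketch shows one possible minimal data model. It is not the ePTP schema: an in-memory SQLite database stands in for the MySQL database, and all table names, column names and example rows are hypothetical.

```python
import sqlite3

# In-memory SQLite stands in for the MySQL database of the ePTP.
# All table and column names are hypothetical.
SCHEMA = """
CREATE TABLE hypothesis (
    id INTEGER PRIMARY KEY,
    module TEXT NOT NULL,
    statement TEXT NOT NULL,
    is_true INTEGER NOT NULL,
    released INTEGER NOT NULL DEFAULT 0
);
CREATE TABLE profile (
    id INTEGER PRIMARY KEY,
    term INTEGER NOT NULL,
    locked INTEGER NOT NULL DEFAULT 0
);
CREATE TABLE response (
    profile_id INTEGER NOT NULL REFERENCES profile(id),
    hypothesis_id INTEGER NOT NULL REFERENCES hypothesis(id),
    points INTEGER NOT NULL CHECK (points BETWEEN -2 AND 2),
    PRIMARY KEY (profile_id, hypothesis_id)
);
"""

conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)

# Made-up example rows: two released hypotheses, one profile, two responses.
conn.execute("INSERT INTO hypothesis VALUES (1, 'M-2', 'Example statement A', 1, 1)")
conn.execute("INSERT INTO hypothesis VALUES (2, 'G-5', 'Example statement B', 0, 1)")
conn.execute("INSERT INTO profile (id, term) VALUES (666, 1)")
conn.executemany("INSERT INTO response VALUES (?, ?, ?)",
                 [(666, 1, 2), (666, 2, -1)])

# Automated analysis: sum of points per module for one profile.
rows = conn.execute(
    """SELECT h.module, SUM(r.points)
       FROM response r JOIN hypothesis h ON h.id = r.hypothesis_id
       WHERE r.profile_id = ?
       GROUP BY h.module ORDER BY h.module""",
    (666,),
).fetchall()
print(rows)  # [('G-5', -1), ('M-2', 2)]
```

Storing the awarded points per response keeps the per-module aggregation a single query, which is the kind of result a graphical display could then consume.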

3 RESULTS

3.1 Design and Implementation of the Database

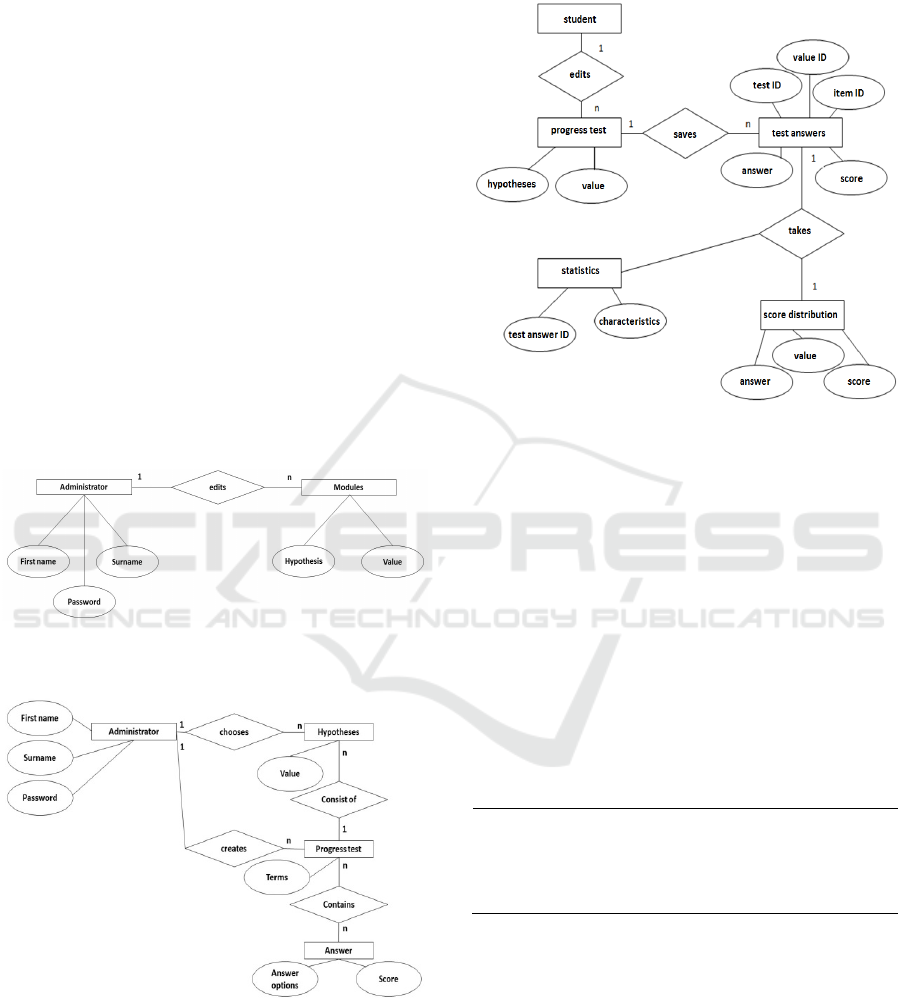

The design of the database is based on the theory

and pragmatics of entity-relationship modelling

(Thalheim, 2013). A graphical visualization of the

entity-relationship model is given in Figures 2-4.

Figure 2: ER-Model describing the roles and

responsibilities of the actors.

Figure 3: ER-Model describing the generation of the PTP.

The implementation of the PTP is based on the

Windows package XAMPP including an Apache

server, a MySQL database and PHP as the server-side

scripting language. This stack has been used for

web-based student record management systems

(Walia and Gill, 2014). In addition, JavaScript is

used to react to the behaviour of the user by

dynamically adapting the web interface.

Figure 4: ER-Model for storing of processed hypotheses

and statistical analysis.

3.2 First Statistical Results

Our database currently comprises complete time

series from a total of four cohorts of students starting

from summer 2015. Tables 2 and 3 describe the

feedback given to a virtual student of the first

semester.

Table 2: Feedback given to a virtual student of the first semester.

Participant-No. 666 – 1st semester of the Bachelor's Programme

| | Own result | Mean value of the comparison group |
|---|---|---|
| Test score (correct – false) | 14 | 11.5 |
| True sure (+2) | 3 (1.5%) | 5.2 (2.6%) |
| True unsure (+1) | 15 (7.5%) | 11.3 (5.6%) |
| Don’t know (0) | 78 (39.0%) | 78.6 (39.3%) |
| False unsure (-1) | 3 (1.5%) | 3.9 (5.6%) |
| False sure (-2) | 1 (0.5%) | 1.1 (0.5%) |

You answered 22 out of 100 rated questions, 82 % of them correctly.

Your comparison group is the 1st semester with n=36 students.

Participant-No. 666 with a sum score of 14

(maximum 200 points) is just above the average of

the comparison group. The extent of the "don’t

know" answers reflects the low level of prior

knowledge at the beginning of the first semester.
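The summary figures shown with Table 2 can be derived from the answer counts, as in the following minimal sketch. The counts are those of Participant-No. 666; the function and key names are hypothetical, and the test score is computed by reading the row label "correct – false" literally as a difference of answer counts, which is an assumption.

```python
# Answer counts of Participant-No. 666 from Table 2.
counts = {
    "true_sure": 3,
    "true_unsure": 15,
    "dont_know": 78,
    "false_unsure": 3,
    "false_sure": 1,
}


def feedback_summary(counts):
    """Derive the summary figures shown with the feedback table."""
    correct = counts["true_sure"] + counts["true_unsure"]
    wrong = counts["false_unsure"] + counts["false_sure"]
    answered = correct + wrong
    return {
        "answered": answered,
        "percent_correct": round(100 * correct / answered) if answered else 0,
        "correct_minus_false": correct - wrong,
    }


summary = feedback_summary(counts)
print(summary)
# {'answered': 22, 'percent_correct': 82, 'correct_minus_false': 14}
```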


Table 3: Evaluation sheet split by modules and compared to the peer group.

| Modules | Items | Own result (mean) | Own result (%) | Peer group (mean) | Peer group (%) |
|---|---|---|---|---|---|
| Methodological modules | | | | | |
| Introduction to Psychology | 6 | 0 | 0.0 | 0.8 | 6.7 |
| Statistics | 15 | 2 | 6.7 | 1.3 | 4.3 |
| Psychological Research Methods | 6 | -2 | -16.7 | 1.0 | 8.1 |
| Basic modules | | | | | |
| General Psychology | 6 | 0 | 0.0 | 0.4 | 3.0 |
| Biological Psychology | 6 | 2 | 16.7 | 0.5 | 3.9 |
| Social Psychology | 6 | 1 | 8.3 | 1.6 | 13.6 |
| Personality Psychology | 6 | 2 | 16.7 | 1.0 | 8.6 |
| Developmental Psychology | 6 | 5 | 41.7 | 1.4 | 12.0 |
| Educational Psychology | 6 | 2 | 16.7 | 1.9 | 16.0 |
| Health-related modules | | | | | |
| Psychological Diagnostics | 6 | 0 | 0.0 | 0.2 | 1.6 |
| Introduction of Clinical Psych. | 15 | 3 | 10.0 | 3.0 | 10.0 |
| Clinical Practice | 10 | 1 | 5.0 | 2.4 | 11.8 |
| Health Psychology | 6 | 0 | 0.0 | 0.1 | 1.2 |

The results in the different modules clarify the

focus of individual knowledge of Participant-No.

666. The module G-5 Developmental Psychology

shows above-average knowledge, while the negative

result (-2 points) in the module M-3 Psychological

Research Methods suggests that the student was too

convinced of their own knowledge and

overestimated it.
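The percentages in the "own result" column of Table 3 are consistent with expressing the module score as a share of the module's maximum of 2 points per item. The following sketch reproduces them for selected rows; the formula is inferred from the table values rather than stated in the text, and the function name is hypothetical.

```python
def percent_of_maximum(points, n_items, max_points_per_item=2):
    """Points as a percentage of the module's maximum score."""
    return round(100 * points / (n_items * max_points_per_item), 1)


# (module, items, own points) for selected rows of Table 3.
rows = [
    ("Statistics", 15, 2),
    ("Psychological Research Methods", 6, -2),
    ("Developmental Psychology", 6, 5),
    ("Clinical Practice", 10, 1),
]
for module, n_items, points in rows:
    print(f"{module}: {percent_of_maximum(points, n_items)} %")
```

For example, 5 points out of a possible 12 in Developmental Psychology give 41.7 %, and the negative score of -2 in Psychological Research Methods gives -16.7 %.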

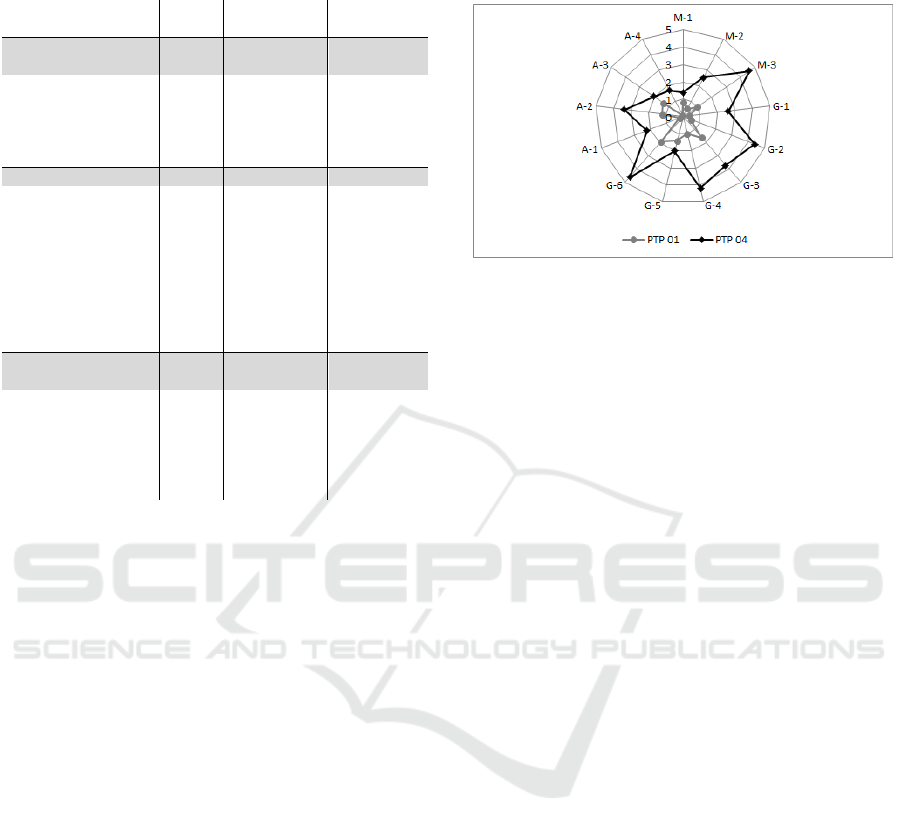

Figure 5 describes the knowledge gain of one

cohort from the initial assessment in the 1st semester

(PTP 01: n=35) to the assessment in the 4th semester

(PTP 04: n=29). As can be seen, there are highly

significant (GLM repeated measures, α=5%,

p<0.0001) knowledge gains in

Statistics (1.6±1.9 vs. 5.8±4.5; F=24.5),

Psychological Research Methodology (1.1±1.8 vs.

4.4±2.8; F=28.9), Biological Psychology (0.7±1.5

vs. 4.1±2.4; F=48.7) and Personality Psychology

(1.4±1.9 vs. 4.3±3.7; F=16.3), to mention only some

domains. Others, like Health Psychology,

Epidemiology and Public Health, show a less

pronounced increase in acquired knowledge (0.3±1.0

vs. 1.7±2.0; F=12.1), as the respective module is yet

to come for this cohort in the sixth semester. The total

PTP score also increased from 18.1±13.0 in the 1st

semester to 47.9±20.2 in the 4th semester (F=49.3,

p<0.0001). With construct validity established (Zupanic et

al., 2016), the internal consistency ranges from very

good (PTP 01: α=0.91) to acceptable (PTP 04:

α=0.71).

Figure 5: Knowledge gain from the 1st semester (PTP 01) to the 4th semester (PTP 04).

(M-1 Introduction to Psychology, M-2 Statistics, M-3 Psychological Research Methodology, G-1 General Psychology, G-2 Biological Psychology, G-3 Social Psychology, G-4 Personality Psychology, G-5 Developmental Psychology, G-6 Educational Psychology, A-1 Psychological Diagnostics, A-2 Introduction of Clinical Psychology, A-3 Clinical Practice, A-4 Health Psychology, Epidemiology and Public Health).
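Assuming that the internal consistency reported above is Cronbach's alpha, the coefficient can be computed from a participants × items matrix of item scores as in the following minimal sketch. The function name and the example data are made up and do not stem from the PTP data set.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants x items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(scores[0])
    item_variances = [variance(col) for col in zip(*scores)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Made-up item scores on the -2..+2 scale (4 participants, 3 items).
scores = [
    [2, 1, 2],
    [1, 1, 0],
    [0, -1, 0],
    [-1, 0, -2],
]
print(round(cronbach_alpha(scores), 2))
```

Values close to 1 indicate that the items vary together across participants; with identical item columns the coefficient is exactly 1.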

4 DISCUSSION

The PTP online system gives students direct

insight into their overall knowledge

acquisition as well as their scores per discipline or

module and lets them compare their score with the average

of their respective peer group. Because it is a low-threshold

formative assessment, students receive

low-stress feedback on their level of acquired

knowledge, which might also encourage

them to close gaps in their knowledge.

The interest of the students in progress testing,

or rather in the feedback on their individual current state

of knowledge, depends on whether they are

motivated to close the gap to the attainable level of

knowledge. This approach corresponds to a

constructivist perspective of learning that, given the

prerequisite of self-reflection and evaluation,

considers a strong involvement of students in the

learning process to be essential (Rushton, 2005).

Starting from the job description of a

psychologist or from the expected knowledge of a

bachelor in psychology, a standardized test was

developed on the basis of a blueprint, which

validates the learning progress (see Figure 6). As a

measure of internal quality assurance, the lecturers

also receive feedback on the average knowledge

growth in the semesters.


Figure 6: Integration of the PTP into the students' process of knowledge acquisition (adapted from Siegling-Vlitakis et al., 2014, p. 1078).

Furthermore, studies of psychological assessment in

psychology students have shown equivalence of

paper-pencil and online tests (Vallejo et al., 2007).

However, online testing is clearly superior

with respect to usability and completeness of data

(Kongsved et al., 2007). In contrast, Schüttpelz-Brauns et al.

(2018), like several studies before, found fewer

non-responders in a paper-based format than in an online format,

which might be due to survey fatigue in

the context of online surveys.

With respect to other scientific disciplines,

progress testing has also been applied in the fields of

information literacy (de Meulemeester and Buysse,

2014), language acquisition (Becker et al., 2017) and

basic law (Moravec et al., 2015). In particular,

the study of Moravec et al. found that

providing an e-learning tool increased the average

correctness of answers in the test by around 20%.

Further research should thus be carried out to

compare paper-pencil and online progress testing

with respect to the reliability of the PTP.

5 CONCLUSION

Apart from assessing the acquired knowledge in the

course of a Bachelor programme, the PTP might also

be useful as a tool to measure the knowledge

acquisition of graduates applying for a

Master’s program. Moreover, it can be applied as a

“policy tool to introduce meaningful curricular

adjustment” (Becker et al., 2017) aiming at

optimizing the quality of higher education (Khalil et

al., 2017).

REFERENCES

A. Becker, T. Nekrasova-Beker, T. Petrashova (2017).

Testing as a Way to Monitor English as a Foreign

Language Learning. TESL-EJ, 21(2).

J. Biggs (2003). Teaching for quality learning at

university. The Society for Research into Higher

Education and Open University Press, Buckingham.

M. A. Conway, G. Cohen, N. Stanhope (1991). On the

very long-term retention of knowledge acquired

through formal education: Twelve years of cognitive

psychology. Journal of Experimental Psychology:

General, 120(4), 395-409.

M. A. Conway, J. M. Gardiner, T. J. Perfect, S. J.

Anderson, G.M. Cohen (1997). Changes in memory

awareness during learning: the acquisition of

knowledge by psychology undergraduates. Journal of

Experimental Psychology: General, 126(4), 393-413.

E. Dallüge, M. Zupanic, C. Hetfeld, M. Hofmann (2016).

Wie bildet sich das Curriculum des Studiums im

Progress Test Psychologie (PTP) ab? In: M. Krämer,

S. Preiser, K. Brusdeylins (Eds). Psychologiedidaktik

und Evaluation XI. Aachen: Shaker, 307-314.

A. de Meulemeester, H. Buysse (2014). Progress testing of

information literacy versus information literacy self-

efficacy in medical students. European Conference on

Information Literacy, Springer, New York: 361-369.

S. Dutke, J. Barenberg (2015). Easy and Informative:

Using Confidence-Weighted True–False Items for

Knowledge Tests in Psychology Courses. Psychology

Learning & Teaching, 14(3), 250-259.

J. M. Faber, H. Luyten, A. J. Visscher (2017). The effects

of a digital formative assessment tool on mathematics

achievement and student motivation: Results of a

randomized experiment. Computers & education, 106,

83-96.

J. J. Ferreira, L. Maguta, A. B. Chissaca, I. F. Jussa, S. S.

Abudo (2016). Cohort study to evaluate the

assimilation and retention of knowledge after

theoretical test in undergraduate health science. Porto

Biomedical Journal, 1(5), 181-185.

S. Heeneman, S. Schut, J. Donkers, C. P. M. van der

Vleuten, A. Muijtjens (2017). Embedding of the

progress test in an assessment program designed

according to the principles of programmatic

assessment. Med Teach. 39:44-52.

M. K. Khalil, H. G. Hawkins, L. M. Crespo, J. Buggy

(2017). The Design and Development of Prediction

Models for Maximizing Students’ Academic

Achievement. Medical Science Educator, 1-7.

S. M. Kongsved, M. Basnov, K. Holm-Christensen, N. H.

Hjollund (2007). Response rate and completeness of

questionnaires: a randomized study of Internet versus

paper-and-pencil versions. J Med Internet Res,

9(3):e25.

J. Leber, A. Renkl, M. Nückles, K. Wäschle (2017). When

the type of assessment counteracts teaching for understanding.

Learning: Research and Practice, 3, 1-19.

T. Moravec, P. Štěpánek, P. Valenta (2015). The influence

of using e-learning tools on the results of students at

the tests. Procedia-Social and Behavioral Sciences,

176, 81-86.

Z. M. Nouns, W. Georg (2010). Progress testing in


German speaking countries. Medical teacher, 32(6),

467-470.

K. Osterberg, S. Kölbel, K. Brauns (2006). Berlin Progress

Test: Experiences at the Charité Berlin. GMS Z Med

Ausbild. 23:Doc46.

J. Petrović, P. Pale, B. Jeren (2017). Online formative

assessments in a digital signal processing course:

Effects of feedback type and content difficulty on

students learning achievements. Education and

Information Technologies, 22, 1-15.

A. Rushton (2005). Formative assessment: a key to deep

learning? Med Teach. 27:509-13.

C. Siegling-Vlitakis, S. Birk, A. Kröger, C. Matenaers, C.

Beitz-Radzio, C. Staszyk, S. Arnhold, B. Pfeiffer-

Morhenn, T. Vahlenkamp, C. Mülling, E. Bergsmann,

C. Gruber, P. Stucki, M. Schönmann, Z. Nouns, S.

Schauber, S. Schubert, J. P. Ehlers (2014). PTT:

Progress Test Tiermedizin - Ein individuelles

Feedback-Werkzeug für Studierende. Deutsches

Tierärzteblatt 8/2014, 1076-1082.

L. Schaap, H. G. Schmidt, P. J. L. Verkoeijen (2011).

Assessing knowledge growth in a psychology

curriculum: which students improve most? Assess

Eval Higher Educ, 1-13.

K. Schüttpelz-Brauns, M. Kadmon, C. Kiessling, Y.

Karay, M. Gestmann, J.E. Kämmer (2018). Identifying

low test-taking effort during low-stakes tests with the

new Test-taking Effort Short Scale (TESS) –

development and psychometrics. BMC Medical

Education, 18:101.

L. W. T. Schuwirth, C. P. M. van der Vleuten (2012). The

use of progress testing. Perspectives on Medical

Education 6(1):24-30.

B. Thalheim (2013). Entity-relationship modeling:

foundations of database technology. Springer Science

& Business Media.

R. A. Tio, B. Schutte, A. A. Meiboom, J. Greidanus, E. A.

Dubois, A. J. Bremers (2016). The progress test of

medicine: the Dutch experience. Perspectives on

medical education, 5(1), 51-55.

E. S. Walia, E. S. Gill (2014). A framework for web based

student record management system using PHP.

International Journal of Computer Science and

Mobile Computing, 3(8), 24-33.

M. A. Vallejo, C. M. Jordán, M. I. Díaz, M. I. Comeche, J.

Ortega (2007). Psychological assessment via the

internet: a reliability and validity study of online (vs

paper-and-pencil) versions of the General Health

Questionnaire-28 (GHQ-28) and the Symptoms

Check-List-90-Revised (SCL-90-R). J Med Internet

Res, 9(1):e2.

M. Zupanic, J. P. Ehlers, T. Ostermann, M. Hofmann

(2016). Progress Test Psychologie (PTP) und

Wissensentwicklung im Studienverlauf. In: M.

Krämer, S. Preiser, K. Brusdeylins (Eds).

Psychologiedidaktik und Evaluation XI. Aachen:

Shaker, 315-322.
