Fast Document Similarity Computations using GPGPU

Parijat Shukla¹ and Arun K. Somani²

¹ Xilinx, Inc., HITEC City, Hyderabad, India

² Dept. of Electrical and Computer Engineering, Iowa State University, Ames, Iowa, U.S.A.

Keywords: Deduplication, Semi-structured Data, NoSQL, Big Data, Parallel Processing, GPGPU, Data Shaping.

Abstract:

Several Big Data problems involve computing similarities between entities, such as records and documents, in a timely manner. Recent studies indicate that similarity-based deduplication techniques are efficient for document databases. Delta encoding-like techniques are commonly used to compute similarities between documents, and operational requirements dictate low-latency constraints. Previous research does not consider parallel computing to deliver low-latency delta encoding solutions. This paper makes a two-fold contribution in the context of the delta encoding problem occurring in document databases: (1) we develop a parallel processing-based technique to compute similarities between documents, and (2) we design a GPU-based document cache framework to accelerate the delta encoding pipeline. We experiment with real datasets and achieve a throughput of more than 500 similarity computations per millisecond. The similarity computation framework achieves a throughput in the range of 237-312 MB per second, which is up to 10X higher than that of the hashing-based approaches.

1 INTRODUCTION

Semi-structured data is a prominent component of Big Data and is becoming more important day by day. The increasing importance of semi-structured data has generated vast interest in document-oriented databases (Apache Cassandra, ); (MongoDB, ); (Apache HBase, ). Document databases store semi-structured data, which is hierarchical in nature, in formats such as JSON, Avro, and XML; see (XML, ); (YAML: YAML Ain’t Markup Language, ); (JSON, ); (Binary JSON, ). Semi-structured data is also a part of scientific workflows. Scientific metadata associated with experiments, measurements, etc. is often in XML or another semi-structured format.

Often, these document databases are plagued with volume-borne challenges when dealing with data storage and data movement requirements. Scientific workflows require large-scale data transfers across geographical locations. Deduplication helps reduce the amount of data transferred and the bandwidth required during transfers. Deduplication techniques such as similarity-based deduplication are deployed to overcome storage- and bandwidth-related issues in document databases.

Recent studies conclude that delta encoding (compression) based deduplication offers advantages when compared to other data deduplication approaches (Xu et al., 2015). This is because (1) regions of similarity are small, and (2) such similar regions are scattered in the deduplication stream.

The Research Problem. We address the following research problem: How can we leverage General Purpose Graphics Processing Units (GPGPUs) effectively to accelerate the delta encoding pipeline? This problem has two components: (1) How can we tackle the volume-related challenges associated with processing Big Data workloads, and (2) How can we design a GPGPU-based solution that alleviates the performance bottlenecks of existing delta encoding solutions?

Our Response. Our response to the first component is to design output-aware techniques. For instance, computations involving a favorable set of inputs must incur lower time complexity than computations with an unfavorable set of inputs. Our response to the second component is to design a parallel processing-based solution that circumvents the pathologies of existing solutions. For example, we trade the compute power of GPGPUs to avoid auxiliary data structures.

This work makes the following contributions:

• Develop a novel technique to compute the degree of similarity in tree-structured data by identifying the similarity patterns.

Shukla, P. and Somani, A.

Fast Document Similarity Computations using GPGPU.

DOI: 10.5220/0006960303230331

In Proceedings of the 10th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2018) - Volume 1: KDIR, pages 323-331

ISBN: 978-989-758-330-8

Copyright © 2018 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


• Develop a novel technique to compute similarity between two objects in the context of the delta encoding problem.

• Design a GPU-based document cache to accelerate the delta encoding pipeline in the context of document databases.

The paper is organized as follows. Section 2 covers the background and related work. Section 3 describes our data shaping technique, and Section 4 describes our similarity computation technique. Section 5 discusses our GPU-based document cache framework for delta compression. Section 6 describes the experimental methodology and the results obtained. Section 7 concludes the paper.

2 BACKGROUND

GPGPU. GPGPUs are advancing high-performance and high-throughput computing. GPGPUs are basically Single Instruction Multiple Thread (SIMT) machines: a large number of threads execute a single instruction concurrently. GPGPUs comprise hundreds of streaming processor (SP) cores, which operate in parallel. SPs are arranged in groups, and each group of SPs is referred to as a Streaming Multiprocessor (SM). All the SPs within one SM execute instructions from the same memory block in lock-step fashion. GPGPUs are equipped with a large number of registers. Main memory in GPUs is referred to as device global memory and incurs a high access latency. Communication among the SPs of one SM is through a low-latency shared memory structure.

Unlike mainstream CPUs, GPGPUs lack a rich memory hierarchy. Typically, only a single-level, limited-size cache memory is available. A portion of the available cache memory is designated as read-only cache, which is used for caching a read-only portion of the device global memory. In this paper, we use the terms GPGPU and Single Instruction Multiple Thread (SIMT) interchangeably.

Related Work. Suel and Memon propose algorithms for delta compression and remote file synchronization (Suel and Memon, 2002). Mogul et al. study the benefits of delta encoding and data compression for HTTP (Mogul et al., 1997). MacDonald studies file system support for delta compression (MacDonald, 2000).

Zdelta is a tool for delta compression (Trendafilov et al., 2002a); (Trendafilov et al., 2002b). Cluster-based delta compression of a collection of files is studied in (Ouyang et al., 2002). A pre-cache similarity-based delta compression for use in a data storage system is studied in (Yang and Ren, 2012).

Recently, Shilane et al. focused on preferential selection of candidates for delta compression (Shilane et al., 2016). Zhang et al. focused on reducing solid-state storage device write stress through opportunistic in-place delta compression (Zhang et al., 2016b). Xia et al. proposed DARE, a deduplication-aware resemblance detection and elimination scheme for data reduction with low overheads (Xia et al., 2016).

Xia et al. proposed Edelta, a word-enlarging based fast delta compression approach (Xia et al., 2015). Li et al. explored the use of a hardware accelerator for similarity-based data deduplication (Li et al., 2015). Zhang et al. explored the application of delta compression for an energy-efficient STT-RAM register file on GPGPUs (Zhang et al., 2016a).

3 DATA SHAPING FOR GPGPUS

We consider semi-structured data represented in XML, JSON, etc. Figure 1(a) depicts one such semi-structured document represented in XML notation. The document is an excerpt of revision metadata from the Mediawiki dataset (Wikimedia, ). Precisely, this revision document relates to one of the revisions made by the wiki user RoseParks, whose user id is 99, as shown under the contributor element. The document contains several other fields, such as timestamp, comment, model, format, text id, and the sha1 hash of that update.

A given document is organized as a sequence of objects, wherein an object is marked by the start of a node label. For example, in Figure 1(a), the XML label revision, shown as <revision>, marks the beginning of the object revision.

Figure 1(b) depicts the resulting encoding and its memory representation. The object revision starts at byte 384, shown as B’384, in memory.
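The idea that each object is identified by the byte position of its opening label can be sketched in a few lines of host-side C. This is only an illustration under simplifying assumptions: the function name `object_offsets` is hypothetical, the scan treats every `<` that is not followed by `/` as the start of an object (so XML declarations and comments would also be counted), and it does not reproduce the encoding shown in Figure 1(b).

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Record the byte offset of every opening tag in a NUL-terminated XML
 * buffer. Closing tags ("</...") are skipped, so each recorded offset
 * marks the start of one object. Returns the number of offsets written. */
size_t object_offsets(const char *buf, size_t *offsets, size_t max_offsets)
{
    assert(buf != NULL && offsets != NULL);
    size_t len = strlen(buf);
    size_t count = 0;
    for (size_t i = 0; i + 1 < len && count < max_offsets; i++) {
        if (buf[i] == '<' && buf[i + 1] != '/') {
            offsets[count++] = i;   /* an object begins at byte i */
        }
    }
    return count;
}
```

For the fragment `<revision><id>233192</id></revision>`, the sketch reports two objects, one at byte 0 (`revision`) and one at byte 10 (`id`).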

4 PARALLEL DOCUMENT SIMILARITY COMPUTATION

In this section, we describe the application of our data shaping technique (described above) to compute the document similarity metric in a parallel manner.

Specifically, we first describe the similarity computation problem considered in this paper. Next, we present an outline of the solution to the similarity computation problem. The remaining parts of this section cover the details.

KDIR 2018 - 10th International Conference on Knowledge Discovery and Information Retrieval

<revision>
  <id>233192</id>
  <timestamp>2001-01-21T02:12:21Z</timestamp>
  <contributor>
    <username>RoseParks</username>
    <id>99</id>
  </contributor>
  <comment>*</comment>
  <model>wikitext</model>
  <format>text/x-wiki</format>
  <text id="233192" bytes="124" />
  <sha1>8kul9tlwjm9oxgvqzbwuegt9b2830vw</sha1>
</revision>

Figure 1: (a) Example of history metadata document. (b) Memory representation of document.

The Similarity Computation Problem. We consider the following problem: given two rooted, ordered tree objects O1 and O2 and a similarity threshold θ, compute the difference between O1 and O2.

We make two key observations: (1) The closer the objects are, the lower the similarity (dissimilarity) computation time. (2) Contiguous dissimilarity is better than disjoint dissimilarity.

The basic idea is that, since the pattern of similarity is directly related to the final overhead incurred after delta encoding, it makes sense to track the patterns of similarity between the objects being considered for delta encoding. For example, if the two objects to be encoded are completely similar (identical), then the delta encoding overhead is insignificant.

Similarity Computation: An Overview. Apply the data shaping technique described in Section 3 to obtain T1 and T2, the linearized memory representations of O1 and O2. Compare the linearized tree objects in parallel and record the element-wise outcome. Perform data compaction and record the dissimilarity pattern P. This is realized through the pseudocode in Listing 1. Classify P as a positive or negative pattern by comparing it with a set of template patterns. Specifically, P is classified as a positive pattern when it is close to pattern(s) favorable for attaining the desired δ. If P is classified as a positive pattern, then compute $\alpha_i$, the similarity metric corresponding to the $i$-th orientation pair. Otherwise, P is classified as a negative pattern.
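The element-wise comparison step can be illustrated with a sequential C sketch. The function name `xor_match` is hypothetical (chosen to echo the XORMatch array of Listing 1), bytes are assumed as the element type, and treating positions beyond the shorter object as dissimilar is an assumption rather than something the paper specifies. On a GPU, each loop iteration would naturally map to one thread.

```c
#include <assert.h>
#include <stddef.h>

/* Compare two linearized trees element by element.
 * flags[i] is 0 when t1[i] == t2[i] and 1 otherwise. Positions past the
 * end of the shorter tree are treated as dissimilar (an assumption).
 * flags must have room for max(n1, n2) entries; that length is returned. */
size_t xor_match(const unsigned char *t1, size_t n1,
                 const unsigned char *t2, size_t n2,
                 unsigned char *flags)
{
    assert(t1 != NULL && t2 != NULL && flags != NULL);
    size_t n = n1 > n2 ? n1 : n2;
    for (size_t i = 0; i < n; i++) {
        if (i < n1 && i < n2)
            flags[i] = (unsigned char)((t1[i] ^ t2[i]) != 0);
        else
            flags[i] = 1;   /* no counterpart in the shorter tree */
    }
    return n;
}
```

Comparing the 8-byte strings `abcdefgh` and `aXcdeYgh` sets only flags 1 and 5, the same shape of outcome as the example used later in Figure 3(a).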

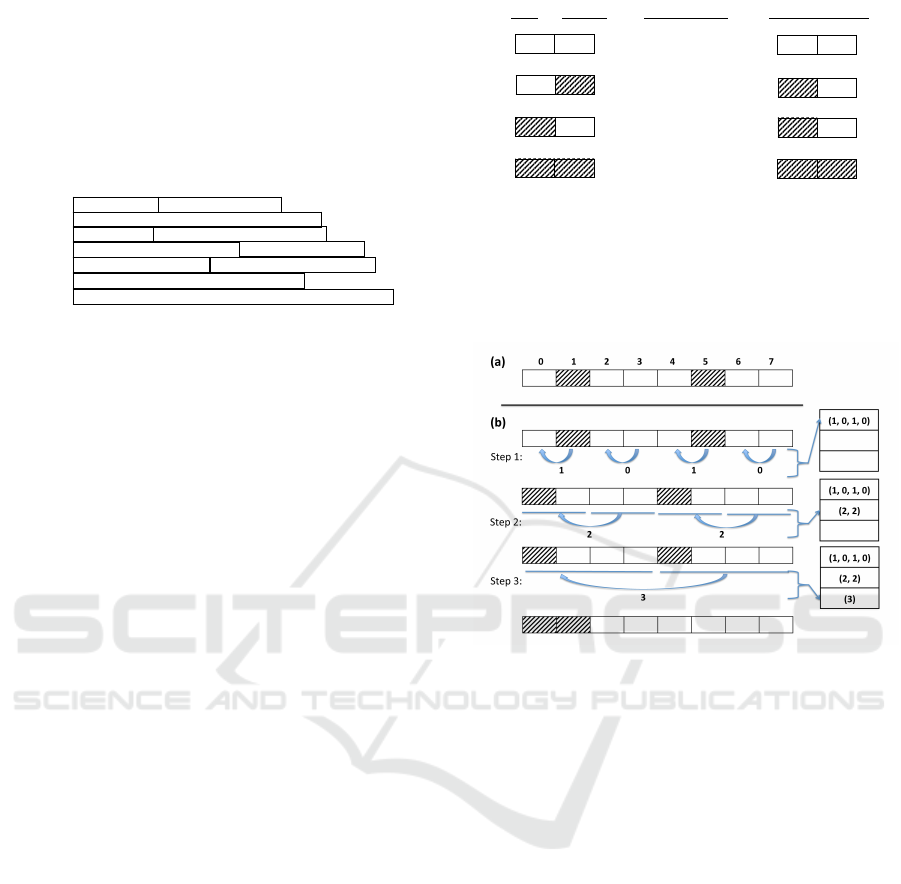

Similarity Patterns. Figure 2(a) depicts four patterns of similarity distribution. A shaded box denotes that the corresponding entries in the linearized versions of the trees are dissimilar. The first category of similarity pattern is when both source and sink are unshaded; no migration is needed in this case.

The second category of similarity pattern denotes the case when the source is shaded while the sink is unshaded. In this case, migration takes place from source to sink.

Figure 2: (a) Similarity patterns. Dissimilarity migration takes place from source to sink. A shaded box indicates that the corresponding entries in the linearized trees are not similar. (b) Pattern codes, and (c) Post-migration similarity distribution.

Figure 3: (a) Similarity distribution after comparing O1 and O2. (b) Steps to register the similarity pattern. The number of steps is $\log_2(\max(O1, O2))$, which is 3 in this example. The similarity pattern code is indicated along the curved arrows. (1) In the first step, the cell size is 1. (2) In the second step, the cell size is 2. (3) In the third and final step, cells of size 4 are considered. The similarity pattern is denoted as {(1, 0, 1, 0), (2, 2), (3)}.

The third category of similarity pattern denotes the case when the source is unshaded while the sink is shaded. No migration takes place in such cases. The fourth category of similarity pattern represents the scenario of no similarity. No migration can happen in such cases. Figure 2(b) depicts the pattern codes associated with these four categories of similarity distribution patterns. Figure 2(c) depicts the distribution after migration.

Registering Similarity Pattern. Figure 3(a) depicts the distribution of similarity obtained after comparing O1 and O2. Note that two entries are shaded. The shaded entries signify that the corresponding elements in O1 and O2 are dissimilar. Specifically, when O1 and O2 are compared element-wise, elements 1 and 5 are dissimilar.

Figure 3(b) depicts how we record the similarity pattern for O1 and O2. The similarity registration process operates in a binary reduction fashion. The total number of steps involved in the registration process is bounded by $\log_2(\max(O1, O2))$, where max(O1, O2) denotes the maximum of O1 and O2. In this case, the number of steps in the reduction process is $\log_2(8) = 3$.

The figure depicts a three-step reduction operation. In Step 1, four sets of operations occur in parallel (refer to Figure 3(b)). Specifically, entries 0 and 1 participate in the first set of comparisons. The similarity pattern corresponds to type 1, which is marked on the arc from source to sink.

The second set of operations comprises entries 2 and 3. Note that both entries are unshaded, which indicates a similarity pattern of type 0, as marked on the arc from source to sink. The third and fourth sets of operations comprise entries 4 and 5, and 6 and 7, respectively, and their similarity patterns correspond to type 1 and type 0, respectively. After Step 1, the pattern table is updated with the similarity pattern (1, 0, 1, 0).

In Step 2, two sets of operations occur in parallel. In the first set, entries 0-1 form the sink and entries 2-3 form the source. The similarity pattern corresponds to type 2, as marked on the arc from source to sink. In the second set, the participating entries are 4-7, wherein entries 4-5 form the sink and entries 6-7 form the source. The similarity pattern corresponds to type 2, as marked on the arc. After Step 2, the pattern table is updated with the similarity pattern (2, 2).

In Step 3, one set of operations takes place, in which all the entries participate. Entries 0-3 form the sink and entries 4-7 form the source. The similarity pattern corresponds to type 3, which is marked on the arc from source to sink. After Step 3, the pattern table is updated with the similarity pattern (3). With this step, the final pattern table is as follows: {(1, 0, 1, 0), (2, 2), (3)}.

Listing 1 depicts the methodology to register the

similarity distribution pattern.
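The three-step walk-through can be reproduced with a small sequential C sketch. It is a simplified reading of the registration process, not the paper's GPU kernel: the name `register_pattern` is hypothetical, the number of entries is assumed to be a power of two, and the sketch only tracks whether each cell is shaded instead of physically migrating dissimilar data. The code assignment (0 = neither cell shaded, 1 = only the source, 2 = only the sink, 3 = both, with the sink being the lower-indexed cell) is inferred from the example in the text.

```c
#include <assert.h>
#include <stddef.h>

/* Sequential sketch of the binary-reduction registration.
 * flags: n mismatch flags (0 = similar, 1 = dissimilar), n a power of two.
 *        The array is overwritten with per-cell "shaded" bits.
 * codes: receives n - 1 pattern codes, level by level.
 * Returns the number of levels, i.e. log2(n). */
size_t register_pattern(unsigned char *flags, size_t n, unsigned char *codes)
{
    assert(flags != NULL && codes != NULL);
    assert(n >= 2 && (n & (n - 1)) == 0);
    size_t levels = 0, k = 0;
    for (size_t cells = n; cells > 1; cells /= 2, levels++) {
        /* Each iteration of this inner loop is independent of the others
         * within a level, so a GPU could assign one thread per pair. */
        for (size_t t = 0; t < cells / 2; t++) {
            unsigned char sink = flags[2 * t];
            unsigned char source = flags[2 * t + 1];
            codes[k++] = (unsigned char)(2 * sink + source);
            /* The merged cell is shaded if either half was shaded.
             * Safe in place only because this loop runs sequentially. */
            flags[t] = (unsigned char)(sink | source);
        }
    }
    return levels;
}
```

With the flags from Figure 3(a), where only entries 1 and 5 are dissimilar, the sketch emits the codes 1, 0, 1, 0, then 2, 2, then 3, which matches the pattern table {(1, 0, 1, 0), (2, 2), (3)}.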

Classification of Similarity Patterns. Next, we discuss some of the common similarity patterns from the delta encoding perspective.

Intuitively, if O1 and O2 are identical, then the similarity pattern comprises all 0's and is denoted as {(0, 0, 0, 0), (0, 0), (0)}.

If O1 and O2 are completely different, then the similarity pattern comprises all 3's and is denoted as {(3, 3, 3, 3), (3, 3), (3)}.

Consider another similarity pattern, {(0, 0, X, X), (0, X), (X)}, where X is one of (1, 2, 3). This similarity pattern represents a scenario in which the first half of O1 is identical to the first half of O2. Note that this is based on observing the similarity pattern emerging from the first step, which is {(0, 0, X, X)}.

Likewise, another similarity pattern, {(X, X, 0, 0), (X, 0), (X)}, where X is one of (1, 2, 3), indicates that the bottom halves of O1 and O2 are identical.

Such patterns indicate that there exists a significant amount of contiguity in the similarity. Contiguous similarity patterns represent scenarios that are highly favorable for delta encoding, because the overhead resulting from delta encoding is limited due to the contiguous nature of the similarity (or dissimilarity) in the objects being considered. Such favorable patterns are also referred to as positive patterns.
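The template patterns named above can be recognized from the first-step codes alone, as the text notes for the {(0, 0, X, X)} case. The following C sketch illustrates that check under stated assumptions: the enum, its member names, and the function `classify_first_step` are hypothetical, and the sketch is not Algorithm 1 from the paper. It only separates the identical, first-half-identical, and second-half-identical templates from everything else.

```c
#include <assert.h>
#include <stddef.h>

enum pattern_class {
    IDENTICAL,              /* all first-step codes are 0 */
    FIRST_HALF_IDENTICAL,   /* shape (0, 0, X, X)         */
    SECOND_HALF_IDENTICAL,  /* shape (X, X, 0, 0)         */
    OTHER                   /* dissimilarity in both halves */
};

/* Classify a pattern using only its first-step codes.
 * step1 holds m codes in 0..3; m must be even and at least 2. */
enum pattern_class classify_first_step(const unsigned char *step1, size_t m)
{
    assert(step1 != NULL && m >= 2 && m % 2 == 0);
    int first_clean = 1, second_clean = 1;
    for (size_t i = 0; i < m; i++) {
        if (step1[i] != 0) {
            if (i < m / 2)
                first_clean = 0;
            else
                second_clean = 0;
        }
    }
    if (first_clean && second_clean) return IDENTICAL;
    if (first_clean) return FIRST_HALF_IDENTICAL;
    if (second_clean) return SECOND_HALF_IDENTICAL;
    return OTHER;
}
```

Under this sketch, the running example (1, 0, 1, 0) falls into `OTHER`, since dissimilar entries appear in both halves.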

Listing 1: Register-Pattern-GPU.

{
  int maxSteps = log2(max(O1, O2));   // number of reduction steps
  int maxThreads = max(O1, O2) / 2;   // active threads in the first step
  int i, j, TID, Start;
  if (blockIdx.x < num_BLOCKS) {
    if (threadIdx.x < maxThreads) {
      for (i = 0; i < maxSteps; i++) {
        if (threadIdx.x < maxThreads) {
          TID = threadIdx.x;
          if (XORMatch[TID + 1] == 0) {        // source is similar
            if (XORMatch[TID] == 0)
              Sim_Pattern[i][TID] = 0;         // neither cell is shaded
            else
              Sim_Pattern[i][TID] = 2;         // only the sink is shaded
          } else {                             // source is dissimilar
            Start = 0;
            if (XORMatch[TID] == 0) {
              Sim_Pattern[i][TID] = 1;         // only the source is shaded
            } else {
              Sim_Pattern[i][TID] = 3;         // both cells are shaded
              Start = sizeAT[TID];
              for (j = 0; j < sizeAT[TID + 1]; j++)
                XORMatch[TID + j] = XORMatch[(TID + 1) + j];
              sizeAT[TID] += sizeAT[TID + 1];
            }
          }
        }
        maxThreads = maxThreads / 2;           // halve the active threads
        __threadfence_system();
      }
    }
  }
  return;
}

Consider a pattern such as {(Y, Y, Y, Y), (3, 3), (3)}, where Y is one of (1, 2). It indicates that the similarity is non-contiguous. Such patterns represent worst-case scenarios and are unfavorable for delta encoding from the overhead aspect. Such unfavorable patterns are also referred to as negative patterns.

We argue that negative (unfavorable) patterns are not likely to benefit delta encoding, due to the diverging nature of the overhead accrued. The task of finding a delta encoding for objects that exhibit such negative-pattern similarity should be abandoned in favor of other, more meaningful computations.


Algorithm 1: ClassifySimilarityPattern().

while (Level < Thresh) do
    Check all the nodes at this level:
    if (NodeValue == 0) then
        case = BestCase, Exit
    end if
    if (NodeValue == 1) then
        case = HalfIdentical
    end if
    if (NodeValue == 2) then
        case = HalfIdentical
    end if
    if (NodeValue == 3) then
        case = Dissimilar
    end if
end while

5 GPU-BASED DOCUMENT CACHE

In this section, we describe our proposed GPU-based in-memory document cache for fast delta encoding. The proposed delta encoding framework comprises (1) the similarity computation substrate proposed in the previous section and (2) a delta encoding system.

We maintain the documents in their native form. In other words, we do not hash the documents; instead, we represent them as they are. This approach offers several advantages: (1) Representing the documents in their native form preserves their structural details, whereas those crucial structural details are lost after hashing. (2) Representing documents in their native form also facilitates obtaining their similar segments, or the similarity pattern. Note that this similarity pattern information is critical for determining whether the similarity is good enough to produce effective delta compression.

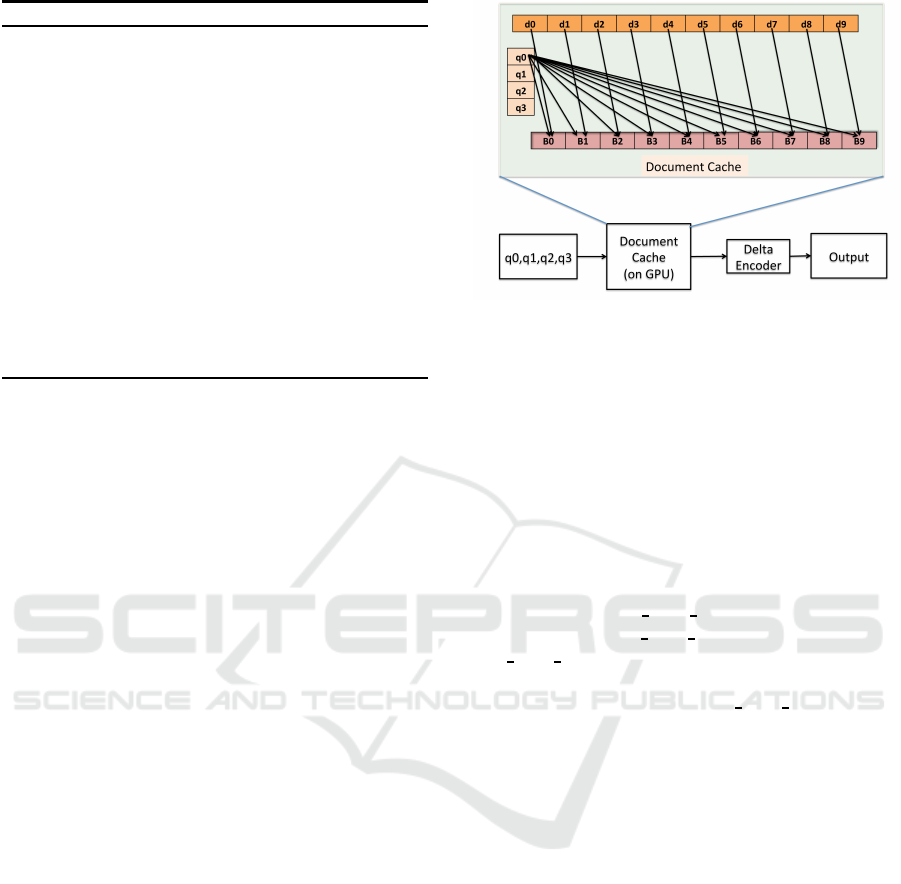

Figure 4(a) depicts our GPGPU-based document cache and delta encoding framework. The framework comprises a set of documents as input, a GPU-based document cache, a delta encoder, and the output. We consider a First-In-First-Out (FIFO).

Now, we describe the operational aspects of the framework. The framework operates in batch mode. The input dataset is parsed and the documents are organized by their ids. A set of k input documents is presented to the document cache to obtain their corresponding similar documents. We refer to this set of k documents as query documents. The query documents are searched in the document cache concurrently. If the document cache contains document(s) similar to a query document, the identifiers of the similar document(s) are passed to the delta encoder. The identifier comprises the ID(s) of the similar document(s) and the similarity pattern.

Figure 4: Block diagram of the framework comprising GPU-based document cache and delta encoder. The document cache is shown in detail (TOP).

Mapping of Similarity Computations on GPU. Let N be the total number of entries in the document cache and let $N_Q$ be the total number of entries in the query set. Computations are organized into blocks in a 3D manner as follows:

(a) blockIdx.x = N;

(b) blockIdx.y = max_ydim_threadblocks;

(c) blockIdx.z = max_ydim_threadblocks / $N_Q$, where max_ydim_threadblocks is the maximum y- or z-dimension of a grid of thread blocks.

Note that the value of max_xdim_threadblocks, the maximum x-dimension of a grid of thread blocks, for Nvidia GPUs with compute capability 3.0 or higher is $2^{31}-1$, which is very high relative to the size of the document cache considered in this work.

Figure 4(TOP) describes the mapping of document similarity computations onto the blocks of the GPU, using a document cache comprising 10 entries and four query documents. The figure shows the mapping process of only one query, q0, for the sake of clarity. From the figure, we can see that each GPU block, numbered B0, B1, ···, B9, is assigned a copy of query document q0 and one of the entries d0, d1, ···, d9, respectively. This set of computations forms blockIdx.y=0. Similarly, blockIdx.y=1 handles the set of ten computations corresponding to query document q1, and the computations for query documents q2 and q3 are handled by blockIdx.y=2 and blockIdx.y=3, respectively.
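The mapping in this example, where the x coordinate of a block selects the cache entry and the y coordinate selects the query, can be written down as a small host-side C sketch. The struct and function names are hypothetical, and the sketch covers only the two-dimensional case shown in the figure; it does not model the z dimension.

```c
#include <assert.h>
#include <stddef.h>

/* One similarity computation: query q_query against cache entry d_doc. */
struct pair { size_t query; size_t doc; };

/* Map a block at grid coordinates (bx, by) to one similarity computation:
 * bx selects the cache entry, by selects the query document. */
struct pair block_to_pair(size_t bx, size_t by, size_t n_cache, size_t n_query)
{
    assert(bx < n_cache && by < n_query);
    struct pair p = { by, bx };
    return p;
}

/* Total number of blocks, i.e. query-document comparisons, in the grid. */
size_t total_blocks(size_t n_cache, size_t n_query)
{
    return n_cache * n_query;
}
```

For the 10-entry cache and four queries of the example, the grid holds 40 blocks, and the block at (x = 3, y = 1) compares q1 with d3.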


6 EVALUATION

We used Kepler K20 GPGPUs in our experiments. The K20 device comprises 2496 CUDA cores, or streaming processors (SPs), at 706 MHz, and is equipped with 5 GB of GDDR5 on-board memory. The host is an Intel(R) Xeon(R) CPU E5-2650 @ 2.00 GHz machine running GNU/Linux. The algorithms proposed in this paper are implemented in C/CUDA 7.5.

We used dumps of Mediawiki revision metadata for our experiments. The dataset is in XML format. We consider each revision metadata record as one document. Each document is enclosed within <revision> and </revision> tags. Each revision document comprises a contributor, which in turn is composed of an id and a username. Each revision document also contains several other fields, such as timestamp, comment, model, format, text id, and the sha1 hash of the update made under that revision.

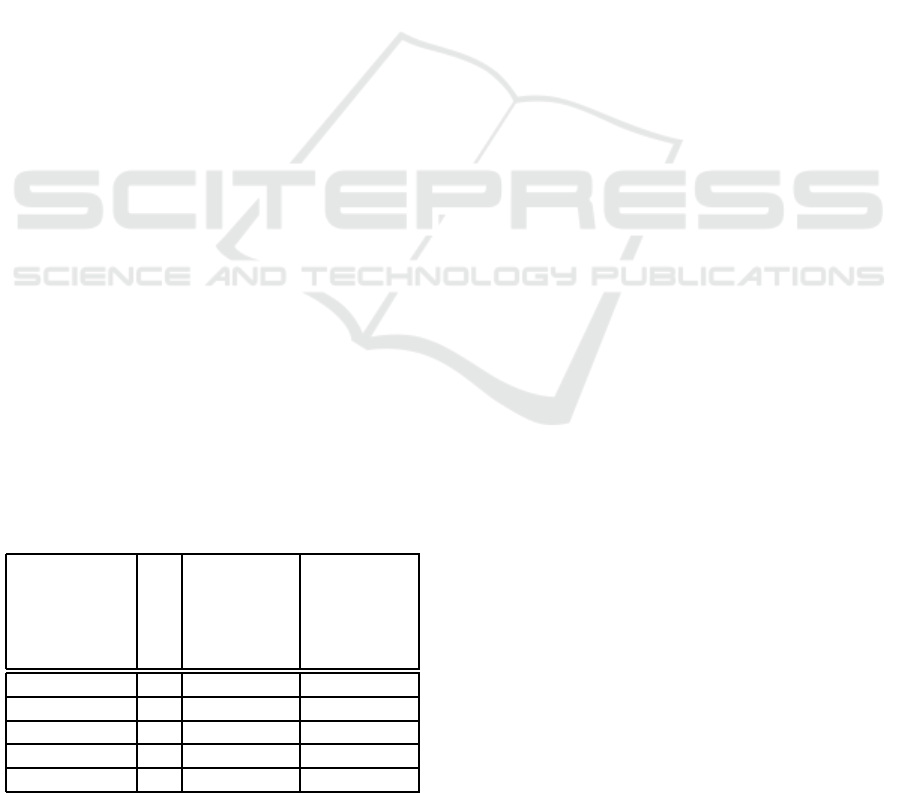

Table 1 lists details of the datasets. The Wiki-57MB dataset is 57 MB in size and contains ∼127,000 documents. Similarly, the Wiki-107MB and Wiki-1.1GB datasets are 107 MB and 1.1 GB in size and comprise ∼236,000 and ∼2,364,000 documents, respectively.

Table 1: Datasets.

| Name | Size | Num. of documents |
|---|---|---|
| Wiki-57MB | 57 MB | 126,828 |
| Wiki-107MB | 107 MB | 236,086 |
| Wiki-1.1GB | 1.1 GB | 2,363,912 |
| Wiki-11GB | 11 GB | 23,678,264 |

6.1 Data Shaping Overhead

The input dataset comprising documents is parsed on the CPU. Table 2 depicts the parsing overhead. From this table, we observe that the wall-clock time elapsed in data shaping of the 107 MB input is 1.927 seconds. We also observe that for a 10.2X increase in input size, the corresponding increase in parsing time is 10.16X, indicating a nearly linear relation between input size and parsing time. The overall data shaping throughput is ∼55 MB per second.

Table 2: Data Shaping overhead (on CPU).

| Name | Parsing (on CPU) |
|---|---|
| Wiki-107MB | 1.927 seconds |
| Wiki-1.1GB | 19.596 seconds |

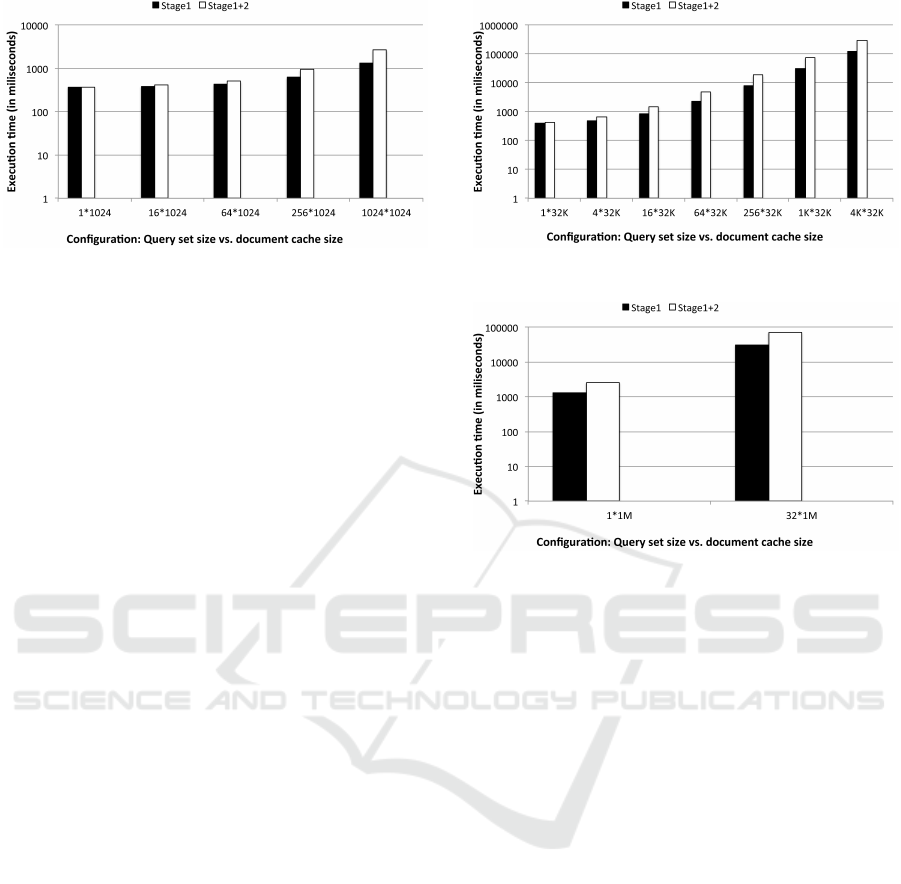

Figure 5: Throughput performance of similarity pattern algorithm on GPU.

6.2 Computation Throughput

To measure the raw compute throughput of our similarity computation technique described in Section 4 on the K20 GPU, we compute the similarity of one document from a dataset with all other documents in that dataset. All of the resulting similarity computations run concurrently on the GPU. Consider the 107 MB dataset Wiki-107MB, which has ∼236,000 documents. As a result of comparing one document against all other documents in that dataset, a total of ∼236,000 concurrent computations are generated.

Figure 5 plots the compute throughput of the similarity pattern algorithm for three wiki datasets. Note that the performance reported in the figure is from execution on a K20 GPU having 2496 CUDA cores. The X-axis represents the three Wiki datasets of 57 MB, 107 MB, and 1.1 GB in size. For each dataset, we experiment with GPU block sizes of 256 and 512 threads. The Y-axis is log scale and represents the execution time elapsed on the GPU in milliseconds.

From Figure 5, we observe that for a block size of 256, the time elapsed in Stage 1 for the concurrent similarity computation of ∼236,000 documents is ∼148 milliseconds, and the time elapsed in Stage 1 and Stage 2 combined is ∼354 milliseconds. This results in a throughput of 302 MB per second. In terms of similarity computations, this amounts to an overall raw compute throughput of 666 similarity computations per millisecond. When using a GPU block of 512 threads, the time elapsed in Stage 1 is 257 milliseconds and that elapsed in Stage 1 and Stage 2 combined is ∼450 milliseconds, resulting in a throughput of 237 MB per second. These execution times yield a raw compute throughput of ∼524 similarity computations per millisecond.
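The throughput figures quoted above follow directly from the dataset size, the document count, and the combined Stage 1 and Stage 2 time. The short C sketch below makes that arithmetic explicit. The function names are hypothetical, and using the exact document count from Table 1 (236,086) rather than the rounded ∼236,000 is an assumption about how the reported numbers were derived.

```c
#include <assert.h>

/* Data throughput in MB per second for size_mb processed in elapsed_ms. */
double mb_per_second(double size_mb, double elapsed_ms)
{
    assert(elapsed_ms > 0.0);
    return size_mb / (elapsed_ms / 1000.0);
}

/* Similarity computations completed per millisecond. */
double computations_per_ms(double num_computations, double elapsed_ms)
{
    assert(elapsed_ms > 0.0);
    return num_computations / elapsed_ms;
}
```

For Wiki-107MB with 256-thread blocks, `mb_per_second(107.0, 354.0)` gives about 302 MB per second and `computations_per_ms(236086.0, 354.0)` gives between 666 and 667 computations per millisecond, consistent with the reported values.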

Similarly, for the 1.1 GB dataset, the time elapsed in Stage 1 and in Stages 1 and 2 combined is ∼1462 milliseconds and ∼3524 milliseconds, respectively, when using a block of 256 threads. This results in a throughput of 312 MB per second and yields a raw compute throughput of ∼670 similarity computations per millisecond. When using a block size of 512, the time elapsed in Stages 1 and 2 combined is ∼4478 milliseconds, yielding a throughput of 245 MB per second, and the raw compute throughput is ∼527 similarity computations per millisecond.

Figure 6: Performance of small document cache on varying size of query set.

6.3 Scalability

We determine the sizes of the query set and the document cache empirically. To this end, we conduct the following three sets of experiments: (1) a small document cache having 1024 entries, (2) a medium document cache with 32K entries, and (3) a large document cache having 1M document entries. For these sets of experiments, we set the GPU block size to 512 threads.

Figure 6 plots the performance of the small document cache when varying the query set size from 1 to 1024 in steps of 4X. The X-axis represents the configuration of the similarity computation. For example, 16*1024 denotes a configuration in which the query set size is 16 and the document cache size is 1024. Note that the configuration also indicates the total number of document similarity computations involved for the document cache and query set being considered. The Y-axis is log scale and represents the execution time elapsed on the GPU in milliseconds.

From Figure 6, we observe that for a document cache of 1024 entries, the time elapsed in Stage 1 varies from ∼372 milliseconds to ∼1346 milliseconds when the size of the query set is varied from 1 to 1024. We also observe that for the same document cache, the time elapsed in Stages 1 and 2 combined varies from ∼374 milliseconds to ∼2649 milliseconds. These execution time measurements reveal that for our small document cache, an increase in query set size by 1024X results in a ∼7X rise in execution time. This indicates that the small document cache is highly scalable.

Figure 7: Performance of medium document cache on varying size of query set.

Figure 8: Performance of large document cache on varying size of query set.

Figure 7 plots the performance of the medium document cache when varying the query set size from 1 to 16*1024 in steps of 4X. The X-axis represents the configuration of the similarity computation. The Y-axis is log scale and represents the execution time elapsed on the GPU in milliseconds. From Figure 7, we observe that for a document cache of 32K entries, where K=1024, the time elapsed in Stage 1 varies from ∼391 milliseconds to ∼125 seconds when the size of the query set is varied from 1 to 4K. We also observe that for the same document cache, the time elapsed in Stages 1 and 2 combined varies from ∼430 milliseconds to ∼293 seconds. These measurements reveal that for our medium document cache, an increase in query set size by 4096X results in a ∼682X rise in execution time. This indicates that the medium document cache is scalable.

Similarly, Figure 8 plots the performance of our large document cache. Query sets of size 1 and 32 are used. The X-axis represents the configuration of the similarity computation, and the log-scale Y-axis represents the time in milliseconds. From this figure, we observe that for our large document cache, the time elapsed in execution is ∼2.6 seconds and ∼71.85 seconds for query sets of size 1 and 32, respectively.


6.4 Comparison with Hashing

Now, we analyse the performance of the hashing-based approach. To this end, we use different values of window size, average chunk size, minimum chunk size, and maximum chunk size to understand the effect of these parameters on the performance. These measurements are carried out on the Intel CPU. We use four sets of configurations, which are as follows:

Config ID 1: Window size = 24 bytes; Average chunk size = 32 bytes; Minimum chunk size = 16 bytes; Maximum chunk size = 48 bytes;

Config ID 2: Window size = 24 bytes; Average chunk size = 64 bytes; Minimum chunk size = 32 bytes; Maximum chunk size = 96 bytes;

Config ID 3: Window size = 24 bytes; Average chunk size = 128 bytes; Minimum chunk size = 64 bytes; Maximum chunk size = 192 bytes;

Config ID 4: Window size = 48 bytes; Average chunk size = 32 bytes; Minimum chunk size = 16 bytes; Maximum chunk size = 48 bytes;
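To illustrate how the four parameters interact, the following is a minimal sketch of content-defined chunking followed by per-chunk hashing, using the values of Config ID 1 as defaults. It is not the implementation measured in this paper: a simple polynomial rolling hash stands in for the Rabin fingerprint, and BLAKE2b from the Python standard library stands in for Murmur3. The names `chunk`, `BASE`, and `MOD` are hypothetical.

```python
import hashlib

BASE = 257                 # assumed multiplier for the polynomial rolling hash
MOD = (1 << 61) - 1        # assumed large prime modulus


def chunk(data: bytes, window: int = 24, avg: int = 32,
          min_size: int = 16, max_size: int = 48) -> list[bytes]:
    """Split data into variable-size chunks at content-defined boundaries."""
    top = pow(BASE, window - 1, MOD)   # weight of the byte leaving the window
    chunks, start, h = [], 0, 0
    for i, b in enumerate(data):
        if i >= window:
            # Slide the window: drop the oldest byte's contribution.
            h = (h - data[i - window] * top) % MOD
        h = (h * BASE + b) % MOD       # bring in the newest byte
        length = i - start + 1
        # Cut when the hash matches the pattern (about 1 in `avg` positions)
        # once the minimum size is reached, or force a cut at the maximum.
        if length >= max_size or (length >= min_size and h % avg == avg - 1):
            chunks.append(data[start:i + 1])
            start = i + 1
    if start < len(data):
        chunks.append(data[start:])    # trailing chunk may be below min_size
    return chunks


# Usage: chunk a synthetic buffer and fingerprint each chunk.
data = bytes(range(256)) * 8
chunks = chunk(data)
fingerprints = [hashlib.blake2b(c, digest_size=8).hexdigest() for c in chunks]
print(len(chunks), "chunks; first fingerprint:", fingerprints[0])
```

In this sketch the average chunk size is only a target: the minimum and maximum sizes bound every chunk except possibly the last, while the window size controls how many trailing bytes decide a boundary.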

Table 3 reports the performance of the hashing-based approach under these configurations. From this table, we note that the performance of the hashing-based approach is essentially independent of the window size and of the average, minimum, and maximum chunk sizes. This observation holds true when the size of the input is increased by 10X. The throughput of the hashing-based approach is in the range of 28-29 MB/sec. Specifically, with the smaller dataset of 107 MB, the observed throughput is 28.1 MB/sec, and with the larger dataset of 1.1 GB, it is 29.4 MB/sec.

The similarity computation framework proposed in this paper achieves a throughput in the range of 237-312 MB per second (refer to Section 6.2). In other words, our approach results in up to 10X higher throughput than the hashing-based approach.
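The throughput figures quoted for the hashing-based approach follow directly from the total times in Table 3 (Config ID 1). The sketch below reproduces that arithmetic and the resulting ratios against the 237-312 MB per second range. It assumes that 1.1 GB is taken as 1100 MB; the variable names are illustrative.

```python
# (dataset size in MB, total parse + chunk + hash time in seconds), Table 3, Config ID 1.
runs = {
    "Wiki-107MB": (107.0, 3.803),
    "Wiki-1.1GB": (1100.0, 37.375),   # assumption: 1.1 GB treated as 1100 MB
}

# CPU throughput of the hashing-based approach, in MB/sec.
cpu_throughput = {name: size / secs for name, (size, secs) in runs.items()}

# Throughput range reported for the proposed GPU framework, in MB/sec.
gpu_low, gpu_high = 237.0, 312.0

# Most conservative and most favourable ratios between the two approaches.
ratio_low = gpu_low / max(cpu_throughput.values())
ratio_high = gpu_high / min(cpu_throughput.values())

for name, tp in cpu_throughput.items():
    print(f"{name}: {tp:.1f} MB/sec")
print(f"GPU vs. hashing ratio: between {ratio_low:.1f}X and {ratio_high:.1f}X")
```

Depending on which end of each range is compared, the ratio is on the order of 10X, in line with the claim above.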

Table 3: Chunking and Hashing Time (on CPU).

Dataset      Config ID   Parsing + Chunking (Rabin)   Parsing + Chunking (Rabin) + Hashing (Murmur3)
Wiki-107MB   1           3.768 sec                    3.803 sec
Wiki-107MB   2           3.756 sec                    3.808 sec
Wiki-107MB   3           3.764 sec                    3.804 sec
Wiki-107MB   4           3.761 sec                    3.804 sec
Wiki-1.1GB   1           36.951 sec                   37.375 sec

7 CONCLUSION

Several Big Data problems involve computing similarities between entities, such as records and documents, in a timely manner. Recent studies indicate that similarity-based deduplication techniques are efficient for document databases. Delta encoding-like techniques are commonly leveraged to compute similarities between documents. Operational requirements dictate low latency constraints. Previous research does not consider parallel computing to deliver low-latency delta encoding solutions.

In this paper, we made a two-fold contribution in the context of the delta encoding problem occurring in document databases: (1) we developed a parallel processing-based technique to compute similarities between documents, and (2) we designed a GPU-based document cache to accelerate the performance of the delta encoding pipeline. We experimented with real datasets. Our experiments demonstrate the effectiveness of GPUs in similarity computations. Specifically, we achieve a throughput of more than 500 similarity computations per millisecond. Moreover, the similarity computation framework proposed in this paper achieves a throughput in the range of 237-312 MB per second, which is up to 10X higher than that of the hashing-based approaches.

REFERENCES

Apache Cassandra. http://cassandra.apache.org/.

Apache HBase. http://hbase.apache.org/.

Binary JSON. http://bsonspec.org/.

JSON. http://www.json.org/.

Li, D., Wang, Q., Guyot, C., Narasimha, A., Vucinic, D., Bandic, Z., and Yang, Q. (2015). Hardware accelerator for similarity based data dedupe. In NAS, pages 224–232.

MacDonald, J. (2000). File system support for delta compression. Master's thesis, Dept. of EECS, University of California at Berkeley.

Mogul, J. C., Douglis, F., Feldmann, A., and Krishnamurthy, B. (1997). Potential benefits of delta encoding and data compression for HTTP. In ACM SIGCOMM Computer Communication Review, volume 27, pages 181–194.

MongoDB. http://www.mongodb.org/.

Ouyang, Z., Memon, N., Suel, T., and Trendafilov, D. (2002). Cluster-based delta compression of a collection of files. In WISE, pages 257–266.

Shilane, P. N., Wallace, G. R., and Huang, M. L. (2016). Preferential selection of candidates for delta compression. US Patent 9,262,434.

Suel, T. and Memon, N. (2002). Algorithms for delta compression and remote file synchronization.

KDIR 2018 - 10th International Conference on Knowledge Discovery and Information Retrieval

Trendafilov, D., Memon, N., and Suel, T. (2002a). zdelta:

An efficient delta compression tool.

Trendafilov, D., Memon, N., and Suel, T. (2002b). zdelta: An efficient delta compression tool. Technical report.

Wikimedia. https://dumps.wikimedia.org.

Xia, W., Jiang, H., Feng, D., and Tian, L. (2016). Dare: A deduplication-aware resemblance detection and elimination scheme for data reduction with low overheads. IEEE Trans. on Computers, 65(6):1692–1705.

Xia, W., Li, C., Jiang, H., Feng, D., Hua, Y., Qin, L., and

Zhang, Y. (2015). Edelta: A word-enlarging based

fast delta compression approach. In HotStorage 2015,

Santa Clara, CA.

XML. http://www.w3.org/XML/.

Xu, L., Pavlo, A., Sengupta, S., Li, J., and Ganger, G. R. (2015). Reducing replication bandwidth for distributed document databases. In SoCC, pages 222–235. ACM.

YAML: YAML Ain’t Markup Language. http://www.yaml.org/.

Yang, Q. and Ren, J. (2012). Pre-cache similarity-based delta compression for use in a data storage system. US Patent App. 13/366,846.

Zhang, H., Chen, X., Xiao, N., and Liu, F. (2016a). Architecting energy-efficient stt-ram based register file on gpgpus via delta compression. In Proc. of 53rd Annual Design Automation Conference, page 119. ACM.

Zhang, X., Li, J., Wang, H., Zhao, K., and Zhang, T. (2016b). Reducing solid-state storage device write stress through opportunistic in-place delta compression. In FAST 2016, pages 111–124.
