Comparing Real Walking in Immersive Virtual Reality and in Physical World using Gait Analysis

Andrea Canessa, Paolo Casu, Fabio Solari and Manuela Chessa

University of Genoa, Dept. of Informatics, Bioengineering, Robotics and Systems Engineering, Italy

Keywords:

Head-mounted Display, HTC-Vive, Walking in VR, Real Walking, Biomechanical Analysis.

Abstract:

One of the main goals of immersive virtual reality is to allow people to walk in virtual environments in an ecological way. Several techniques have been developed in the literature: devices such as omni-directional treadmills, robotic tiles, stepping systems, sliding-based surfaces and human-sized hamster balls, or techniques such as walking-in-place. Conversely, real walking requires precise tracking of the user over a large area, so that he/she can explore the virtual environment without limitations. This can be achieved by using optical tracking systems or low-cost off-the-shelf devices, such as the HTC-Vive tracking system. Here, we consider the latter solution and compare real walking in a virtual environment with walking in a corresponding real-world situation, with the long-term goal of using it in rehabilitation and clinical setups. Moreover, we analyze the effect of having a virtual representation of the user's body inside the virtual environment. Several spatio-temporal gait parameters are analyzed, such as the total distance walked, the velocity patterns along each considered path, the velocity peaks, the step count and the step length. Unlike what is typically reported in the literature, in our preliminary results we did not find significant differences between real walking in virtual environments and in a real-world situation. Moreover, having a virtual representation of the body inside virtual reality does not affect the gait parameters. The implications of these results for future research, in particular with respect to the specific setup considered, are discussed.

1 INTRODUCTION

In this paper, we aim to compare real walking in a virtual environment (VE) with walking in a corresponding real-world situation, to investigate whether natural walking is possible in virtual reality (VR). Moreover, we investigate the role of having a virtual body in walking tasks.

The problem of walking in immersive VR has been extensively addressed by researchers in recent years, due to its implications for the reduction of sickness, the increase of the sense of presence and the improvement of the overall user experience (Kim et al., 2017). Moreover, natural walking in immersive VR can improve the natural interaction of users inside VEs, since they can freely explore them.

In the literature (see (Nabiyouni and Bowman, 2016) for a taxonomy of walking-based locomotion techniques in VR), the problem of achieving an unlimited area for locomotion in VR has been addressed by developing various devices such as omni-directional treadmills, robotic tiles, stepping systems, sliding-based surfaces and human-sized hamster balls. All these techniques employ stationary or moving surfaces that allow the user to stay (almost) fixed in a limited physical space while walking in VR. To avoid the use of external devices, several systems use the walking-in-place technique. In this case too, users can explore a VE larger than the physical environment. Nevertheless, all these techniques are quite far from providing the user with an experience similar to real walking. The main problems hampering real walking in VR are the lack of systems capable of tracking the users' movements in a sufficiently large portion of space and of transferring those movements into VR. Nowadays, typical setups for implementing real walking are based on optical tracking systems, e.g. see (Nabiyouni et al., 2015; Janeh et al., 2017b).

The different solutions for people's locomotion in VR have been analyzed and compared in the literature. In (Nabiyouni et al., 2015), the authors compared high-fidelity real walking with a low-fidelity gamepad technique and a medium-fidelity locomotion interface (the Virtusphere). Sixteen OptiTrack Flex 3 cameras tracked the users' movements at a 100 Hz sampling frequency. In line with the McMahan hypothesis (McMahan et al., 2012), the Virtusphere (medium fidelity) produced worse performance than the gamepad (low fidelity).

Canessa, A., Casu, P., Solari, F. and Chessa, M.

Comparing Real Walking in Immersive Virtual Reality and in Physical World using Gait Analysis.

DOI: 10.5220/0007380901210128

In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019), pages 121-128

ISBN: 978-989-758-354-4

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

In real walking setups, one of the main issues is that isometric mappings, i.e. replicating the exact amount of movement of the user's head inside the VE, are often misperceived by people walking in VR (Steinicke et al., 2010; Janeh et al., 2017b). In particular, people do not perceive distances in VR as they do in a corresponding real-world situation. To mitigate this issue, translation gains were introduced in (Steinicke et al., 2010), though the results presented in (Janeh et al., 2017b) show that further investigation of this aspect is needed. The effect of having a virtual representation of oneself (i.e. an avatar) inside the VE has also been addressed with respect to depth perception. In (Valkov et al., 2016), the authors evaluated users' distance perception with different avatar representations, showing that the anthropometric fidelity of the avatar has a stronger effect on distance perception than a realistic representation.

Several researchers have addressed whether low-cost, off-the-shelf solutions can be valid for scientific purposes, and whether these setups, designed for entertainment, cause undesired effects on users. As an example, in (Chessa et al., 2016b), the authors investigated the quality of a commercial low-cost VR headset, the Oculus Rift DK2, with respect to the sense of presence (Slater et al., 1994) and cybersickness. More recently, (Niehorster et al., 2017) presented an analysis of the precision and accuracy of the HTC-Vive VR headset. The authors conclude that the system may be affected by errors because the reference plane can tilt, especially when the sensors lose tracking. Nevertheless, the magnitude of the errors they reported is not significant for the application described in this paper.

We should also take into account that several studies in the literature show a relationship between walking in VR and gait instability. In (Hollman et al., 2007), the authors analyzed ten healthy volunteers walking on an instrumented treadmill in a VR environment and in a non-VR environment. They showed that subjects walked in the VR environment with increased magnitudes and rates of weight-acceptance force and with increased rates of push-off force. These gait deviations reflect a compensatory response to the visual stimulation occurring in the VR environment, suggesting that walking in a VR environment may induce gait instability in healthy subjects. Previously, (Mohler et al., 2007) showed that gait parameters within a head-mounted display (HMD) VE differ from those in the real world: a person wearing an HMD and a backpack walks more slowly and takes shorter strides than in a comparable real-world condition. Although they compared walking in a VE with walking in real conditions, all these previous works did not consider natural walking but addressed the problem using treadmills. This is probably due to technological constraints, since implementing low-cost natural walking (albeit in a limited space) is a quite recent achievement. A recent paper (Janeh et al., 2017a) considers real walking in an immersive VE with an HTC Vive HMD, comparing the behaviour of older and younger adults. The authors showed that older adults walked very similarly in the real world and in the VE in the pace and phasic domains, which differs from the results found in younger adults. In contrast, the results indicated a different base of support for both groups when walking within a VE and in the real world. They also considered non-isometric mappings and found, in both younger and older adults, an increased divergence of gait parameters in all domains, correlating with the up- or down-scaled velocity of the visual self-motion feedback.

In this paper, we implement and analyze walking using a first-person perspective (i.e. a direct mapping between the head position of the user and the virtual camera inside the VE). In particular, we use a commercial low-cost solution both for the VR setup (i.e. for tracking the users' movements, and thus for implementing real walking) and for the gait analyses. The main goal is to achieve natural walking in a simple setup, affordable by everyone, with the long-term aim of using it in rehabilitation and clinical setups. A precise biomechanical analysis of locomotion in our setup is beyond the scope of this paper and will be considered in future work. Indeed, the rationale underlying our contribution is the validation of a low-cost setup, based on the HTC-Vive device, designed for future clinical use. As an example, individuals with neurodegenerative diseases such as Parkinson's disease, Multiple Sclerosis and dementia syndromes typically present gait dysfunctions due to deficits in motor and cognitive functions. Virtual Reality is an attractive option for investigating locomotor difficulties in a controlled way, by replicating the real-life situations in which dramatic and potentially dangerous gait problems occur (e.g., walking through a crowded space, crossing the street at the green light or entering an elevator before the door closes). In particular, VR needs to be refined to represent more closely the real-life situations in which gait problems occur, both for a better understanding of this phenomenon and for new, personalized treatments. Our aim is to have an affordable VR system whose performance is well suited to specific clinical protocols based on real walking.

HUCAPP 2019 - 3rd International Conference on Human Computer Interaction Theory and Applications

Here, to understand how people perceive depth in VR and how they walk in an immersive VE, we considered three different conditions: (i) real walking in a VE without a representation of one's own body inside VR; (ii) real walking in a VE with a virtual representation of one's own body inside VR (in particular, an avatar of the user's legs); (iii) walking in a real-world situation consistent with the VE.

The main original contributions of this paper are:

• the quantitative evaluation of real walking in VR by analyzing gait parameters. In particular, we compute and compare the total distance walked by the users, the number of steps needed to cover a given part of the path, the peak velocity and the step size across the three described conditions. Moreover, we analyze the velocity profile of walking. It is worth noting that we do not consider translation-gain techniques, such as those proposed in (Steinicke et al., 2010; Janeh et al., 2017b);

• a comparison between the behavior of users who have no virtual representation of themselves inside the VE and that of users who can see an avatar replicating their movements inside the VE (Chessa et al., 2016a; Valkov et al., 2016).

The paper is organized as follows: in Section 2 we describe the setup, the experimental protocol and the gait parameters we analyze; in Section 3 we present and discuss the outcome of the experiments; finally, in Section 4 we discuss the possible implications of the obtained results and how to further analyze and improve our setup.

2 MATERIALS AND METHODS

2.1 Participants

Eighteen healthy volunteer subjects (10 female and 8

male, median ages 24, range 19-33) participated in

this study and completed the experiment. The par-

ticipants are students or members of our University.

All subjects gave written informed consent in accor-

dance with the Declaration of Helsinki. They wore the

HTC-Vive head-mounted-display for about 15 min-

utes during the experiment and all of them were naive

7.0m

Base

Station A

6.4m

Base

Station B

0.85m

x

z

Goal

Start

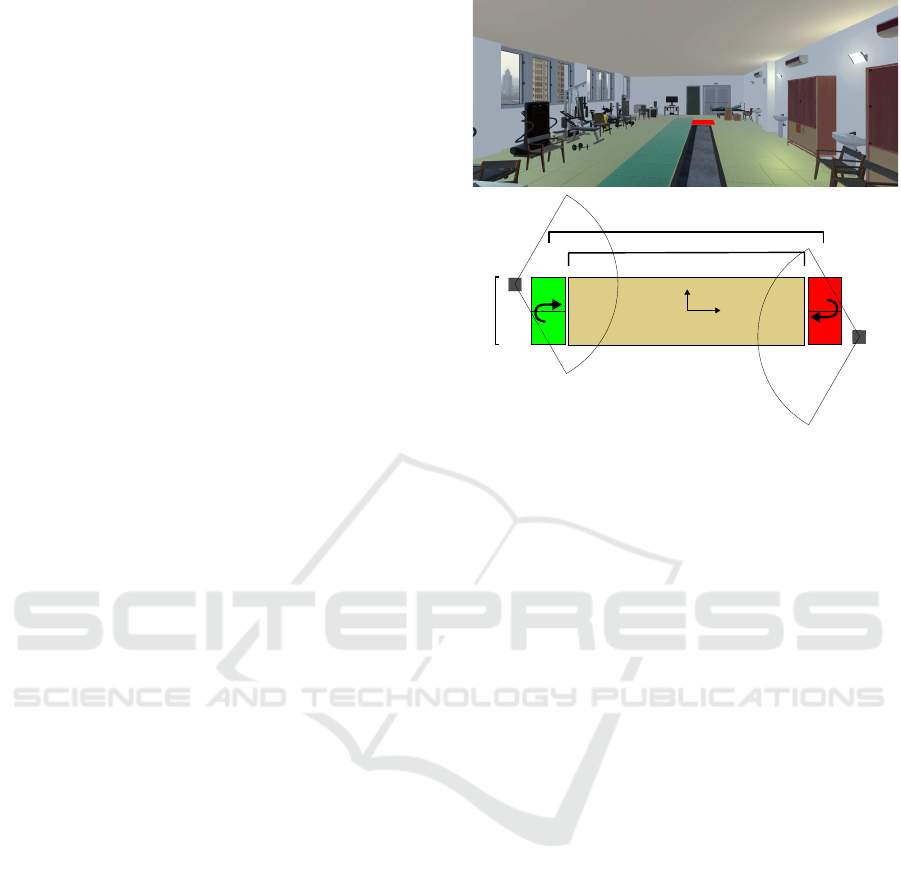

Figure 1: Schematic setup of experiment. (Top) The partic-

ipants view by wearing the HMD. (Bottom) A sketch of the

setup representing the position of the base stations, inver-

sion zones and walkway with the dimensions. The reference

system is centered on walkway.

to the experimental conditions, wearing their normal

clothes. The total time per participant, including

pre-questionnaires, instructions, experiment and post-

questionnaires, was 30 minutes.

2.2 Experimental Setup

The experiment was performed in a laboratory room with a free walking space of 8 m × 2 m. During the experiment, the room was darkened to reduce the participants' perception of the real world while immersed in the VE and to avoid any lights that could disturb the HTC-Vive infrared tracking. The setup was developed using Unity 3D: models and animations were taken from free libraries publicly available on the web (e.g., Sketchfab, Turbosquid, Mixamo) and then adapted to our needs. We replicated exactly in VR an existing room and walkway used for biomechanical experimental sessions. To allow the subjects to identify the real walking space inside the VE, we added a virtual walkway (6.4 m × 0.85 m). At the two ends of the walkway, two inversion zones (zone 1 and zone 2) indicate where subjects have to make a 180° turn and start walking in the opposite direction (Figure 1). The green zone represents the start, and the red zone the end.

The participants wore an HTC-Vive HMD for the stimulus presentation, which is equipped with an AMOLED display with a resolution of 1080 × 1200 pixels per eye, a refresh rate of 90 Hz and an approximately 110° diagonal field of view (FOV). The system is equipped with two base stations that detect and track the 3D positions of the headset and of the two Vive controllers using infrared light. A VR-ready laptop, equipped with an Intel Core i5 3.5 GHz processor, 8 GB of RAM and an Nvidia GeForce 1060 graphics card (6 GB VRAM), was used for rendering, system control and recording.

The base stations were positioned face to face at a distance of 8 meters and at a height of 2 meters from the floor (see Figure 1) and connected by the provided sync cable. It is worth noting that this distance differs from the one recommended by HTC, and that (Niehorster et al., 2017) reported measurement errors when the setup loses tracking because the base stations are farther apart than recommended. Nevertheless, we chose this solution in order to be compliant with the real room and setup (specifically the size of the walkway) at the Hospital where we are going to continue our experimental validation. Moreover, we previously verified that our setup is not affected by tracking issues: the headset and the controllers are always visible to the base stations, which provide continuous measurements.

We decided to use the HTC-Vive controllers not for tracking the users' hands and interacting with objects in the scene, as they were designed for, but for detecting and tracking the position of the users' legs. For this reason, each participant performed the entire experiment with the HTC-Vive wireless controllers tied to the lateral side of the left and right legs, directly over the peroneus longus muscle. The controllers were used both to record the 3D leg positions during the walk and to render leg models replicating the participants' movements in the VE.

2.3 Experimental Protocol

To assess cybersickness, all participants filled out the Simulator Sickness Questionnaire (SSQ) (S. Kennedy et al., 1993) immediately before and after the experiment. The questionnaire consists of 16 questions corresponding to symptoms that participants rate in terms of severity on a Likert scale from 1 (none) to 4 (severe). These symptoms include nausea, burping, sweating, fatigue and vertigo. Furthermore, the Slater-Usoh-Steed presence questionnaire (SUS PQ) (Slater et al., 1998; Slater, 1999) was filled out after the experiment.

Each participant was asked to walk at their normal pace along the virtual (or real) walkway, without stepping off it, moving between the two zones with the aim of reaching the red zone (the goal) in each trial. Whenever the subject reached the red zone, the two zones switched colors, moving the goal to the other end of the walkway. Subjects therefore turned around and kept walking in the opposite direction. This was repeated 8 times for each condition. The experiment included 3 conditions, for a total of 24 trials:

1. $\mathrm{VR}_{\mathrm{avatar}}$: real walking in the VE along the walkway for 8 trials, during which the participants see the rendering of the leg models inside the VE. We measured the leg length of each participant to proportionately rescale his/her virtual model, which is visible in this condition.

2. VR: real walking in the VE along the walkway for 8 trials, with the leg-model rendering disabled, so no visual feedback of the user's body is present.

3. Real: real walking without the HMD for 8 trials, on a real-world path identical to the one inside the VE.

The “Real” condition was always presented last in the experiment, while the order of the “$\mathrm{VR}_{\mathrm{avatar}}$” and “VR” conditions was permuted.
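The protocol above (eight trials per condition, a goal that alternates between the two ends of the walkway, Real always last, the two virtual conditions permuted) can be sketched as a per-participant trial schedule. This is a hypothetical illustration: the function name `build_schedule`, counterbalancing the virtual conditions by the parity of the participant index, and starting each condition with zone 2 as the goal are assumptions, not details reported in this paper.

```python
from typing import List, Tuple

# (condition, trial number, goal zone); names and structure are illustrative.
Trial = Tuple[str, int, int]


def build_schedule(participant_index: int, trials_per_condition: int = 8) -> List[Trial]:
    """Hypothetical trial schedule for one participant.

    Assumptions: the two virtual conditions are counterbalanced by the
    parity of the participant index, "Real" is always last, and the goal
    starts at zone 2 and alternates after every trial.
    """
    if participant_index % 2 == 0:
        virtual = ["VR_avatar", "VR"]
    else:
        virtual = ["VR", "VR_avatar"]
    order = virtual + ["Real"]

    schedule: List[Trial] = []
    for condition in order:
        goal = 2  # assumed starting goal zone
        for trial in range(1, trials_per_condition + 1):
            schedule.append((condition, trial, goal))
            goal = 1 if goal == 2 else 2  # reaching the goal moves it to the other end
    return schedule


schedule_p0 = build_schedule(0)
schedule_p1 = build_schedule(1)
```

Alternating the virtual-condition order across participants is only one way to permute it; a randomized order per participant would fit the description equally well.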

We recorded the 3D positions of the HMD and of

the two controllers fixed on the users’ legs.

2.4 Gait Quantities Estimation

From the raw 3D positions of the HMD (i.e. of the users' head) and of the two controllers (i.e. of the users' legs) we computed the following quantities:

• Walked distance: the distance between the subject's positions at two successive inversion points (see Fig. 2, black lines).

• Stride length: the distance between successive points of heel contact of the same foot. Since the HTC-Vive controllers were attached to the middle portion of the legs, we identified the instant of heel strike from the horizontal controller displacement (HCD) along the z axis, computed as $\mathrm{HCD} = z_{\mathrm{leg}}^{\mathrm{left}} - z_{\mathrm{leg}}^{\mathrm{right}}$. Heel strikes of the left foot occur at roughly the same instant at which the HCD reaches a maximum, and heel strikes of the right foot when it reaches a minimum (see Fig. 2, red lines, black dots). We computed the stride length as the difference between the controller positions at the instants of two successive peaks of the HCD curve.

• Step length: it is the traveled distance from one

HTC-Vive controller (swing leg) with respect to

HUCAPP 2019 - 3rd International Conference on Human Computer Interaction Theory and Applications

124

0 10 20 30 40 50 60 70

80

Time [s]

-4

-2

0

2

4

0

1

2

3

Velocity [m/s]

Movement [m]

Left leg

Right leg

Real

VR

VR

avatar

-4

-2

0

2

4

0

1

2

3

Velocity [m/s]

Movement [m]

Time [s]

0 10 20 30 40 50 60 70 80

Z

HCD

0 10 20 30 40 50 60 70

80

Time [s]

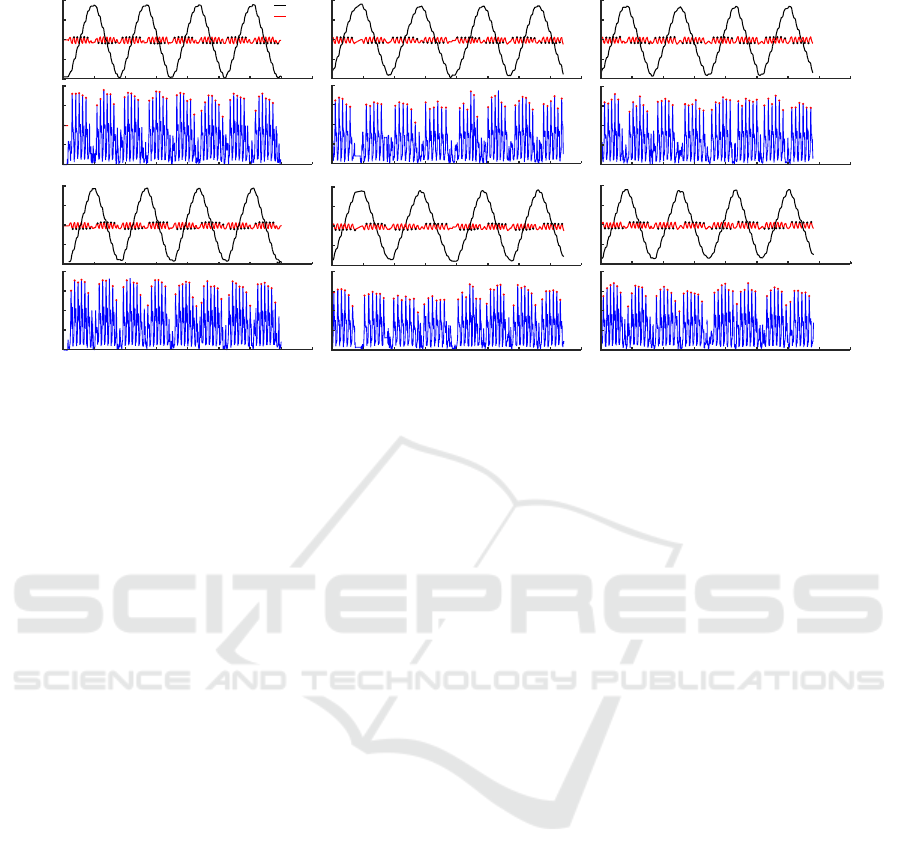

Figure 2: Example of data from one participant. Each column show data for one of the three conditions. First and second

rows show data for the left leg. The third and fourth show data for the right leg. In each subpanel black lines represent the

time course of the z-position, red lines the horizontal controller displacement (HCD) and blu lines represent the modulus of

the velocity profile. Black dots mark the maximum and minimum of the HCD curve used to identify the heel contact time

points. Red dots mark the peak swing velocity.

the other (stance leg) between the heel strike oc-

currences of each foot. In healthy subjects this

parameter result about half stride length. We com-

puted the step length as the difference between the

swing controller position at its heel strike (maxi-

mum of the HCD curve) and the distance between

the controller position at its heel strike (previous

minimum of the HCD curve).

• Step count: it is defined by the number of steps

taken to cover the entire path, for each trial.

• Cadence: it is the number of steps per unit time

measured in steps/minute. We divided the step

count by the time interval between the first and

the last step in each trial and normalized with re-

spect to one minute.

• Peak Velocity: it is the maximum value of the ve-

locity. It is measured in meters per second. We

computed peak velocity by looking at the peaks

of the derivative of the controller positions (see

Fig. 2, blue lines, red dots)

• Velocity profile: it is a window of 1.2s centered on

the peak velocity.
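The heel-strike, stride, step and cadence definitions in Section 2.4 can be sketched in Python for a single straight pass along the walkway. This is a minimal sketch under stated assumptions, not the authors' code: it assumes uniformly sampled controller z-positions, detects HCD extrema with a simple neighbour comparison plus a hypothetical minimum spacing `min_gap` (in samples), and counts every detected heel strike as one step. The helper names `local_extrema` and `gait_from_hcd` are illustrative.

```python
import math
from typing import Dict, List, Sequence


def local_extrema(signal: Sequence[float], min_gap: int, maxima: bool = True) -> List[int]:
    """Indices of local maxima (or minima) that are at least `min_gap` samples apart."""
    sign = 1.0 if maxima else -1.0
    found: List[int] = []
    for i in range(1, len(signal) - 1):
        prev_v = sign * signal[i - 1]
        cur = sign * signal[i]
        next_v = sign * signal[i + 1]
        if cur > prev_v and cur >= next_v:
            if found and i - found[-1] < min_gap:
                # Two candidates too close together: keep the more extreme one.
                if cur > sign * signal[found[-1]]:
                    found[-1] = i
            else:
                found.append(i)
    return found


def gait_from_hcd(t: Sequence[float], z_left: Sequence[float],
                  z_right: Sequence[float], min_gap: int = 30) -> Dict[str, object]:
    # Horizontal controller displacement along z.
    hcd = [zl - zr for zl, zr in zip(z_left, z_right)]
    left_hs = local_extrema(hcd, min_gap, maxima=True)    # HCD maxima ~ left heel strikes
    right_hs = local_extrema(hcd, min_gap, maxima=False)  # HCD minima ~ right heel strikes

    # Stride: displacement of the same controller between two successive heel strikes.
    left_strides = [abs(z_left[b] - z_left[a]) for a, b in zip(left_hs, left_hs[1:])]
    right_strides = [abs(z_right[b] - z_right[a]) for a, b in zip(right_hs, right_hs[1:])]

    # Step: swing controller at its heel strike vs. the other controller
    # at the preceding (contralateral) heel strike.
    strikes = sorted([(i, "L") for i in left_hs] + [(i, "R") for i in right_hs])
    z = {"L": z_left, "R": z_right}
    steps = [abs(z[side][i] - z[prev_side][prev])
             for (prev, prev_side), (i, side) in zip(strikes, strikes[1:])
             if side != prev_side]

    # Cadence: step count over the time between first and last step, scaled to one minute.
    step_count = len(strikes)
    duration = t[strikes[-1][0]] - t[strikes[0][0]] if strikes else 0.0
    cadence = 60.0 * step_count / duration if duration > 0 else float("nan")
    return {"left_strides": left_strides, "right_strides": right_strides,
            "step_lengths": steps, "step_count": step_count, "cadence": cadence}


# Synthetic check: both legs advance at 1.2 m/s and oscillate in antiphase at 1 stride/s.
fs = 100.0
t = [i / fs for i in range(1000)]
z_left = [1.2 * ti + 0.3 * math.sin(2 * math.pi * ti) for ti in t]
z_right = [1.2 * ti - 0.3 * math.sin(2 * math.pi * ti) for ti in t]
result = gait_from_hcd(t, z_left, z_right)
```

On this synthetic input every stride is 1.2 m and every step 0.6 m, i.e. half the stride, as expected for healthy gait. On real recordings the HCD would typically be low-pass filtered before extrema detection and the turns at the inversion zones excluded; both steps are left out of this sketch.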

To perform the statistical analysis of the aforementioned parameters, we computed the average values across trials (for the walked distance) and across steps (for the other parameters) for each subject and for each condition (Real, VR, $\mathrm{VR}_{\mathrm{avatar}}$).
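The peak-velocity and velocity-profile quantities defined in Section 2.4, together with the per-condition averaging, can be sketched as follows. This is a hedged illustration rather than the authors' pipeline: it assumes uniformly sampled 3D controller positions, estimates speed with central differences, treats every local speed maximum at least `min_gap` samples after the previous one as a swing peak, and keeps only peaks whose full 1.2 s window (±0.6 s) lies inside the recording. The function names are hypothetical.

```python
import math
from statistics import mean
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def speed_profile(t: Sequence[float], pos: Sequence[Point]) -> List[float]:
    """Modulus of the velocity via central differences (one-sided at the ends)."""
    n = len(pos)
    v = [0.0] * n
    for i in range(n):
        a, b = max(i - 1, 0), min(i + 1, n - 1)
        v[i] = math.dist(pos[b], pos[a]) / (t[b] - t[a])
    return v


def swing_peaks(v: Sequence[float], min_gap: int) -> List[int]:
    """Indices of local speed maxima at least `min_gap` samples apart."""
    peaks: List[int] = []
    for i in range(1, len(v) - 1):
        if v[i] > v[i - 1] and v[i] >= v[i + 1]:
            if peaks and i - peaks[-1] < min_gap:
                if v[i] > v[peaks[-1]]:
                    peaks[-1] = i  # keep the higher of two close candidates
            else:
                peaks.append(i)
    return peaks


def velocity_windows(v: Sequence[float], peaks: Sequence[int], fs: float,
                     half_width_s: float = 0.6) -> List[List[float]]:
    """Windows of +/- half_width_s around each peak; incomplete windows are dropped."""
    half = int(round(half_width_s * fs))
    return [list(v[p - half:p + half + 1]) for p in peaks
            if p - half >= 0 and p + half < len(v)]


def mean_profile(windows: Sequence[Sequence[float]]) -> List[float]:
    """Sample-by-sample average of equally long windows."""
    return [mean(column) for column in zip(*windows)]


# Synthetic controller moving along z with speed 1 - cos(2*pi*t): peaks of 2 m/s every second.
fs = 100.0
t = [i / fs for i in range(500)]
pos = [(0.0, 0.0, ti - math.sin(2 * math.pi * ti) / (2 * math.pi)) for ti in t]
v = speed_profile(t, pos)
peaks = swing_peaks(v, min_gap=30)
peak_values = [v[p] for p in peaks]
windows = velocity_windows(v, peaks, fs)
profile = mean_profile(windows)
condition_peak = mean(peak_values)  # one value per subject and condition
```

The window length is fixed in samples, which only matches the 1.2 s definition when the sampling is uniform; irregular tracker timestamps would first need resampling onto a regular grid.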

3 RESULTS

3.1 Biomechanics of Walking

As discussed in Section 2, here we analyze the biomechanics of walking through spatio-temporal parameters to evaluate motor performance in three conditions compared with each other: real walking (Real), virtual walking (VR) and virtual walking with a representation of the user's body in the VE ($\mathrm{VR}_{\mathrm{avatar}}$). Statistical analysis was performed with the Wilcoxon rank-sum test at the 5% significance level.
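A two-sided Wilcoxon rank-sum test of the kind named above can be sketched with the large-sample normal approximation, using average ranks for ties and no continuity or tie correction of the variance. This is an illustrative sketch, not the implementation that produced the reported p-values; software that uses exact small-sample distributions or corrections may return slightly different values. The function names and the sample numbers in the usage lines are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (z statistic, two-sided p-value) for independent samples x and y."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])                              # rank sum of the first sample
    mu = n1 * (n1 + n2 + 1) / 2                      # expected rank sum under H0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)  # its standard deviation under H0
    z = (w - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))             # equals 2 * (1 - Phi(|z|))
    return z, p


# Hypothetical per-subject means for two conditions (not data from this study).
real = [9.1, 9.4, 9.6, 9.2, 9.8]
vr = [10.2, 10.9, 9.9, 10.4, 11.0]
z, p = rank_sum_test(real, vr)
significant = p < 0.05
```

Because every participant contributed to all three conditions, a paired alternative such as the Wilcoxon signed-rank test is also commonly used for within-subject designs; the sketch follows the rank-sum test named in the text.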

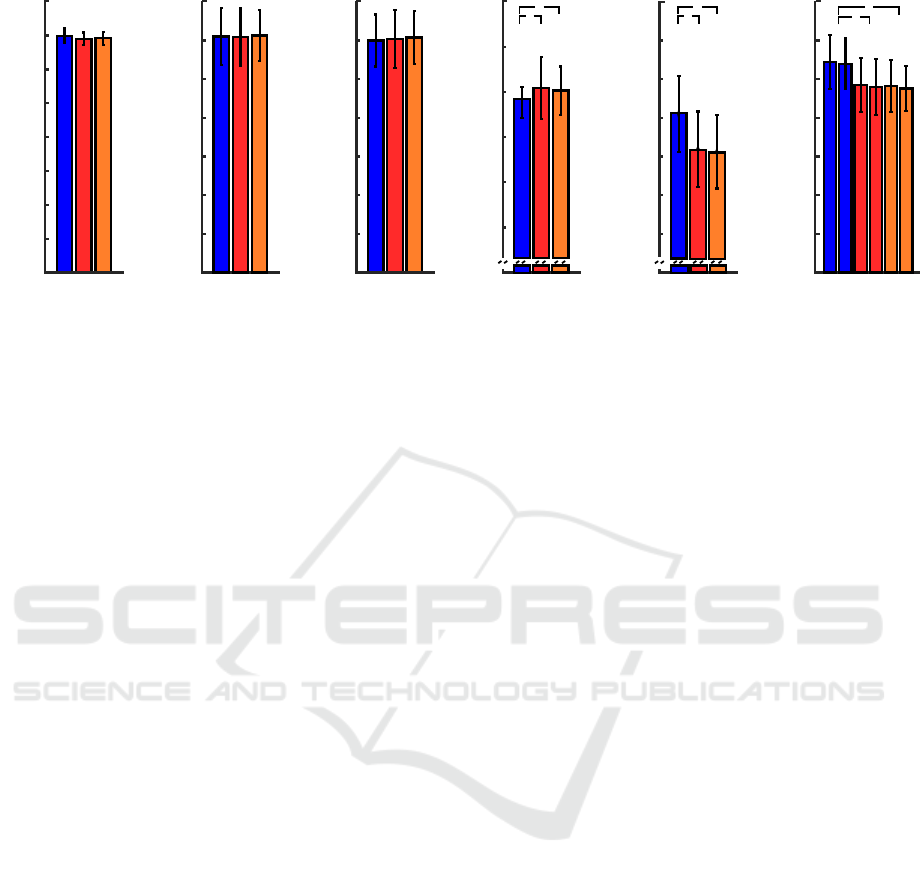

Figure 3 summarizes the mean values and standard deviations of the considered biomechanical quantities, averaged across participants, for the three conditions.

We first analyze all the trajectories (all the trials, for each subject) in each considered condition (Real, VR and $\mathrm{VR}_{\mathrm{avatar}}$), computing the total distance traveled in each trial. Each participant was asked to walk to the inversion zones in both the real condition and the two virtual conditions. Our results show that participants correctly traveled the distances presented to them in all conditions, as shown in Figure 3, and the statistical analysis reveals no significant differences between the real and virtual conditions (p = 0.0718 and p = 0.2473 for Real vs. VR and Real vs. $\mathrm{VR}_{\mathrm{avatar}}$, respectively). This gives a first indication that there is no difference between real and virtual behaviors, in contrast with previous perceptual studies that reported underestimation and depth compression in a VE (Valkov et al., 2016; Janeh et al., 2017b). It is worth noting that no contribution of the virtual representation of the users' legs emerges, probably because no depth compression occurs even in the standard VR setup without an avatar.

[Figure 3 panels, each for Real, VR and $\mathrm{VR}_{\mathrm{avatar}}$: Walked Distance [m], Step Count, Step length [m], Stride length [m], Peak swing velocity [m/s] (L and R), Cadence [step/min]; asterisks mark significant differences]

Figure 3: Mean and standard deviation of the spatio-temporal parameters in each walking condition. Significant differences between Real and the two virtual conditions are highlighted for step count, cadence and peak velocity.
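The walked-distance measure (the distance between the subject's positions at two successive inversion points) can be sketched from the HMD trajectory. The sketch assumes, hypothetically, that inversion points are the local extrema of the HMD z-coordinate along the walkway, separated by at least `min_gap` samples, and measures the straight-line distance in the horizontal x–z plane between consecutive inversion points. Real head trajectories would usually be smoothed first; the function names are illustrative.

```python
import math
from typing import List, Sequence


def inversion_points(z: Sequence[float], min_gap: int) -> List[int]:
    """Indices where the walking direction along z reverses (local extrema of z)."""
    idx: List[int] = []
    for i in range(1, len(z) - 1):
        is_max = z[i] > z[i - 1] and z[i] >= z[i + 1]
        is_min = z[i] < z[i - 1] and z[i] <= z[i + 1]
        if (is_max or is_min) and (not idx or i - idx[-1] >= min_gap):
            idx.append(i)
    return idx


def walked_distances(x: Sequence[float], z: Sequence[float], min_gap: int = 100) -> List[float]:
    """Horizontal distance between head positions at successive inversion points."""
    inv = inversion_points(z, min_gap)
    return [math.hypot(x[b] - x[a], z[b] - z[a]) for a, b in zip(inv, inv[1:])]


# Synthetic head trajectory: back and forth between z = -3 m and z = +3 m every 5 s.
fs = 50.0
t = [i / fs for i in range(1500)]
z_head = [3.0 * math.cos(2 * math.pi * ti / 10.0) for ti in t]
x_head = [0.0 for _ in t]
inv = inversion_points(z_head, 100)
distances = walked_distances(x_head, z_head)
```

Here `min_gap` is expressed in samples (2 s at 50 Hz); it is a hypothetical guard against small head oscillations being mistaken for turns.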

For a finer analysis at the level of the single stride/step, we first analyzed the stride length. No significant effect of immersive walking in the VE emerged (p = 0.788 and p = 0.6464 for Real vs. VR and Real vs. $\mathrm{VR}_{\mathrm{avatar}}$, respectively).

The same result arises from the estimation of the step length. It is worth noting that these values are consistent with the stride length, which is about double the step length in healthy subjects. As for stride length, the Wilcoxon rank-sum test reveals no significant differences (p = 0.987 and p = 0.861 for Real vs. VR and Real vs. $\mathrm{VR}_{\mathrm{avatar}}$, respectively).

In contrast, the step count shows significant differences between real and virtual conditions: in a VE, people tend to take a slightly greater number of steps. The mean step count for the Real condition (9.45 ± 0.68 steps) is significantly smaller than the mean step counts for the two virtual conditions (VR: 10.48 ± 1.24, p = 0.01; $\mathrm{VR}_{\mathrm{avatar}}$: 10.5 ± 1.01, p = 0.002).

Cadence is defined as the number of steps per unit time (steps/minute). The values obtained for cadence are linked to the previously computed step count; indeed, we again find significant differences between real and virtual conditions (p = 0.0018 for Real vs. VR and p = 0.0023 for Real vs. $\mathrm{VR}_{\mathrm{avatar}}$).

We also analyzed velocity, to assess and compare the peak velocity during the swing phase of each foot. Mean peak values differ between real and virtual conditions (p < 0.05 for Real vs. VR and Real vs. $\mathrm{VR}_{\mathrm{avatar}}$, computed for the left and right legs), with greater velocity during real walking (2.72 ± 0.35 m/s for the left leg and 2.7 ± 0.33 m/s for the right leg) than during walking in the VE (VR: 2.42 ± 0.36 m/s and 2.39 ± 0.36 m/s for the left and right legs, respectively; $\mathrm{VR}_{\mathrm{avatar}}$: 2.41 ± 0.34 m/s and 2.38 ± 0.29 m/s, respectively). This result agrees with the literature, which reports an evident reduction of velocity in a VE with respect to a real-world situation (Nabiyouni et al., 2015).

It is worth noting that in our setup the Real condition was always presented at the end of the experiment. This may have influenced the presented results, and we should analyze this aspect further.

Despite this significant difference in peak velocity,

it is evident (as shown in Figure 2) that there is a ve-

locity pattern that is repeated in similar way in all the

three conditions. Since the values between left and

right leg are very similar, we used the average values

for the following analysis. Therefore, the analysis of

the average velocity profile (across trial and subjects)

for each condition, allowed us to assess whether there

is an actual correspondence and overlapping between

real and virtual conditions. This is qualitatively il-

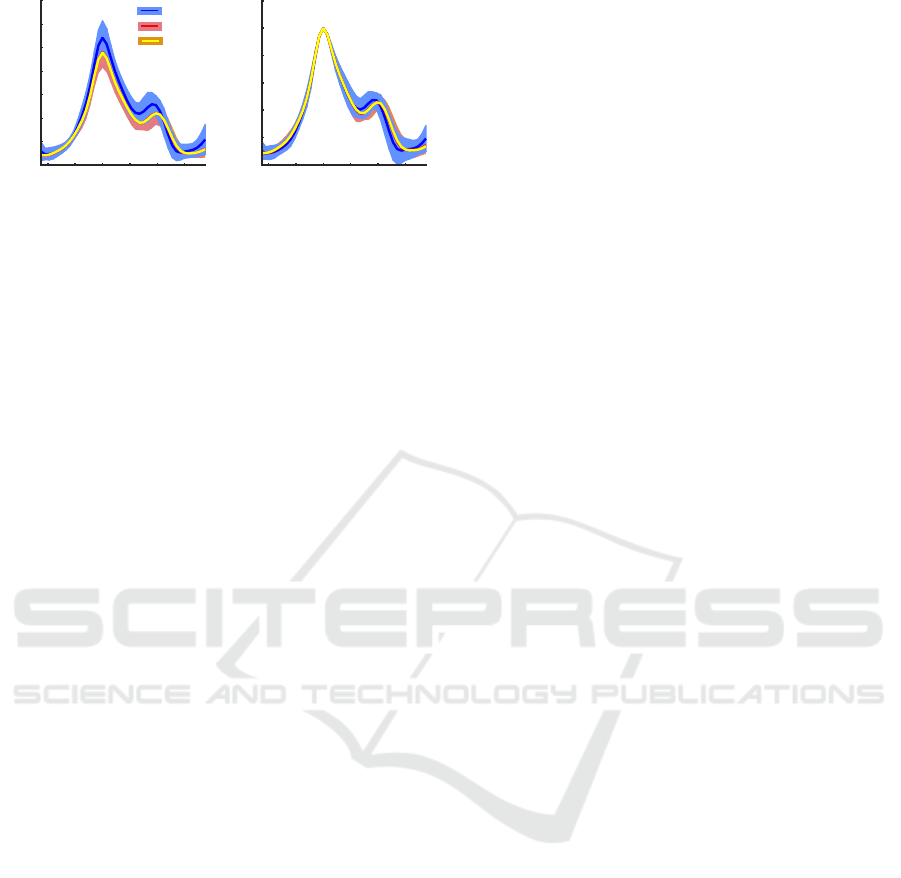

lustrated in Figure 4(left), in which the average pro-

files for all conditions are shown with their associated

standard deviations. The similarity among the con-

ditions is even more evident if we look at the nor-

malized profiles with respect to maximum value for

each condition. Indeed, Figure 4 shows how such pro-

files are extremely repeatable. The statistical analysis

of the average quadratic error between the mean pro-

files in the three conditions, all normalized as men-

tioned above, does not appear to be a significant dif-

ference (p= 0.231 e p=0.112 Real vs. VR and Real

vs. VR

avatar

). Such a result could be the confirma-

tion that we have achieved a natural walk behaviour

in virtual environments.
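The normalization and comparison step described above can be sketched as follows: each mean profile is divided by its own maximum, and two normalized profiles are compared through their mean squared (quadratic) difference. This is a minimal sketch that assumes the profiles are already sampled on the same time grid (for example the 1.2 s window around the peak); the statistical test applied to these errors is not specified in detail here and is not reproduced. The function names and example values are hypothetical.

```python
from typing import List, Sequence


def normalize_to_peak(profile: Sequence[float]) -> List[float]:
    """Scale a velocity profile so that its maximum equals 1 (arbitrary units)."""
    peak = max(profile)
    if peak <= 0:
        raise ValueError("profile must contain a positive peak")
    return [v / peak for v in profile]


def mean_quadratic_error(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared difference between two profiles sampled on the same grid."""
    if len(a) != len(b):
        raise ValueError("profiles must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Two hypothetical profiles that differ only in amplitude become identical once normalized.
real_profile = [0.5, 1.0, 2.0, 1.0]
vr_profile = [0.25, 0.5, 1.0, 0.5]
error = mean_quadratic_error(normalize_to_peak(real_profile), normalize_to_peak(vr_profile))
```

Normalizing by the peak removes the amplitude difference reported above (lower peak velocity in VR), so the remaining error reflects only differences in the shape of the profiles.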

[Figure 4 panels: (left) Velocity [m/s] vs. Time [ms]; (right) Velocity [a.u.] vs. Time [ms]; curves Real, VR, $\mathrm{VR}_{\mathrm{avatar}}$]

Figure 4: (left) Mean velocity profile and (right) normalized mean profile for the Real, VR and $\mathrm{VR}_{\mathrm{avatar}}$ conditions.

We can also say that having a virtual representation of the users' legs did not lead to any change in any of the compared parameters. Nevertheless, it is worth noting that this result could be affected by the reduced field of view of the HMD. This will be the focus of future analysis.

3.2 Self-reported Assessment through Questionnaires

The SSQ score averaged across all participants is 10.39 ± 10.65 before the experiment and 12.46 ± 13.87 after the experiment. The scores indicate overall low simulator-sickness symptoms for walking with an HMD, with no significant pre/post difference (p = 0.61). Moreover, the analysis of the SSQ sub-scores indicates that no specific domain of simulation-related sickness was changed by VR exposure: we measured a mean N (Nausea) score of 8.48 ± 11.74, a mean O (Oculomotor) score of 11.79 ± 11.4 and a mean D (Disorientation) score of 12.37 ± 20.2. The mean SUS PQ score for the sense of being present in the VE was M = 2.8 ± 1.98, which indicates a moderate sense of presence.
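For reference, the standard SSQ scoring of Kennedy et al. (1993) multiplies raw subscale sums (each symptom rated 0–3, each subscale summing seven symptoms, with some symptoms counting toward two subscales) by fixed weights, as sketched below. This is an illustrative sketch, not necessarily the computation behind the scores above: the text reports a 1–4 rating scale, so mapping those ratings onto 0–3 by subtracting 1 before summing is an assumption, and the item-to-subscale assignment is not reproduced here. The function names are hypothetical.

```python
from typing import Dict

# Standard SSQ conversion weights (Kennedy et al., 1993).
WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
TOTAL_WEIGHT = 3.74


def ssq_scores(raw_n: float, raw_o: float, raw_d: float) -> Dict[str, float]:
    """Weighted Nausea, Oculomotor, Disorientation and Total scores from raw subscale sums."""
    return {
        "N": raw_n * WEIGHTS["N"],
        "O": raw_o * WEIGHTS["O"],
        "D": raw_d * WEIGHTS["D"],
        "Total": (raw_n + raw_o + raw_d) * TOTAL_WEIGHT,
    }


def to_zero_based(rating_1_to_4: int) -> int:
    """Hypothetical mapping of a 1-4 rating onto the 0-3 scale the weights assume."""
    if not 1 <= rating_1_to_4 <= 4:
        raise ValueError("rating must be between 1 and 4")
    return rating_1_to_4 - 1


scores = ssq_scores(raw_n=1, raw_o=2, raw_d=0)
```

Because each subscale has its own weight, the Total score is not the sum of the N, O and D scores, which is why the four values reported above are not additive.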

4 CONCLUSION

In this paper, we have addressed an important problem related to interaction in immersive virtual reality: natural walking inside virtual environments. Researchers have addressed this problem by developing systems and techniques that allow people to explore a VE despite a limited physical interaction space (e.g. by using treadmills, stepping systems or walking-in-place techniques). Recently, new technologies have made it possible to precisely track the 3D position of users and to translate such measurements into the position and orientation of the virtual cameras, thus replicating human movements inside VR. This is typically achieved by using optical tracking systems (Janeh et al., 2017b). Here, we have presented a low-cost system that uses only the HTC-Vive tracking system. Our aim was to evaluate human locomotion from a biomechanical point of view, in order to assess whether such a system can be effectively used in clinical VR tasks involving walking. For this reason, we created a virtual environment that replicates a specific setup used at the Hospital, where people can walk, and we compared some gait quantities derived from the users' tracking to understand whether differences occur between walking in the real world and in VR. We also took into account the presence of an avatar inside VR replicating the movements of the users' legs, to understand whether this affects locomotion.

Referring to the main aims of the paper described in Section 1, we can draw the following conclusions and outline future work:

• The analysis of the considered gait parameters shows no significant differences in the total distance the users walk or in the stride and step length. In contrast, we found significant differences in the peak swing velocity, the step count and the cadence. This may be explained by the fact that subjects wore a cabled HMD in the virtual conditions, which could introduce differences in walking behavior with respect to a real-world situation. Moreover, most of the subjects had never experienced walking in immersive VR before, so we should further analyze whether these differences disappear after a longer training session. The velocity profiles averaged across participants do not show significant differences among the three conditions, indicating that people walk in a similar way in the three scenarios.

• The obtained results may be affected by the fact that we used a low-cost setup to track users' movements. From the literature (Niehorster et al., 2017), we know that this setup has good accuracy but can be affected by errors in some situations. In particular, shifts in the reference measurement system can occur when working far from the theoretical limit of use of the HTC-Vive, as in our case. In future work, we will compare our system with an optical tracking system, in order to verify whether the same results are obtained with a different tracking system and to further assess the reliability of our low-cost system.

• All the results show that no differences occur between the situation in which the user can see an avatar of his/her legs and the one in which no visual feedback about his/her own body is presented. Since this is partially in contrast with the results obtained by Valkov et al. (2016), we plan to further analyze the contribution of visual feedback of the users' own body inside VR by considering more complex tasks involving locomotion, e.g., walking on different surfaces and in the presence of obstacles.
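To make the gait quantities discussed above more concrete, the following Python sketch shows one common way to derive them from tracking data. It is not the processing pipeline used in this work, and several details are assumptions: a single tracked point (e.g., HMD or waist) sampled with timestamps in the floor plane, heel-strike sample indices already detected by some other means, step length approximated as the displacement of that point between consecutive heel strikes, and velocity profiles time-normalized by linear interpolation before being averaged across participants. The names `gait_summary`, `resample_profile` and `average_profile` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class GaitSummary:
    total_distance_m: float
    step_count: int
    cadence_steps_per_min: float
    mean_step_length_m: float
    mean_stride_length_m: float


def _dist(xs, zs, a, b):
    # Horizontal (floor-plane) distance between samples a and b.
    return math.hypot(xs[b] - xs[a], zs[b] - zs[a])


def gait_summary(times, xs, zs, heel_strikes):
    """Hypothetical helper: spatio-temporal gait parameters from one tracked point.

    times: sample timestamps (s); xs, zs: floor-plane coordinates (m);
    heel_strikes: increasing sample indices of successive heel strikes
    (assumed to alternate between left and right foot).
    """
    if len(heel_strikes) < 3:
        raise ValueError("need at least three heel strikes to define a stride")
    # Total distance walked: sum of distances between consecutive samples.
    total = sum(_dist(xs, zs, i, i + 1) for i in range(len(times) - 1))
    # One step lies between each pair of consecutive heel strikes.
    steps = len(heel_strikes) - 1
    duration = times[heel_strikes[-1]] - times[heel_strikes[0]]
    cadence = 60.0 * steps / duration  # steps per minute
    # Step length: displacement between consecutive heel strikes (approximation);
    # stride length (two steps): displacement between every other heel strike.
    step_lengths = [_dist(xs, zs, a, b) for a, b in zip(heel_strikes, heel_strikes[1:])]
    stride_lengths = [_dist(xs, zs, a, c) for a, c in zip(heel_strikes, heel_strikes[2:])]
    return GaitSummary(
        total_distance_m=total,
        step_count=steps,
        cadence_steps_per_min=cadence,
        mean_step_length_m=sum(step_lengths) / len(step_lengths),
        mean_stride_length_m=sum(stride_lengths) / len(stride_lengths),
    )


def resample_profile(values, n_points=101):
    """Linearly resample a velocity profile onto n_points equally spaced
    instants of normalized time (0 to 100% of the walk)."""
    if len(values) < 2 or n_points < 2:
        raise ValueError("need at least two samples and two output points")
    last = len(values) - 1
    out = []
    for k in range(n_points):
        pos = k * last / (n_points - 1)
        i = min(int(pos), last - 1)
        frac = pos - i
        out.append(values[i] * (1.0 - frac) + values[i + 1] * frac)
    return out


def average_profile(profiles, n_points=101):
    """Point-wise mean of time-normalized profiles (e.g., one per participant)."""
    resampled = [resample_profile(p, n_points) for p in profiles]
    return [sum(column) / len(column) for column in zip(*resampled)]
```

For example, a straight walk at 1 m/s sampled at 10 Hz for 5 s (`times = [i * 0.1 for i in range(51)]`, `xs = list(times)`, `zs = [0.0] * 51`) with a heel strike every 0.5 s (`list(range(0, 51, 5))`) yields 10 steps, a cadence of 120 steps/min, and step and stride lengths of about 0.5 m and 1.0 m. Measuring step length from a single body-fixed point is a simplification; with dedicated foot trackers one would instead use the positions of the two feet at heel strike.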

REFERENCES

Chessa, M., Caroggio, L., Huang, H., and Solari, F. (2016a). Insert your own body in the Oculus Rift to improve proprioception. In VISIGRAPP (4: VISAPP), pages 755–762.

Chessa, M., Maiello, G., Borsari, A., and Bex, P. J. (2016b). The perceptual quality of the Oculus Rift for immersive virtual reality. Human–Computer Interaction, pages 1–32.

Hollman, J. H., Brey, R. H., Bang, T. J., and Kaufman, K. R. (2007). Does walking in a virtual environment induce unstable gait?: An examination of vertical ground reaction forces. Gait & Posture, 26(2):289–294.

Janeh, O., Bruder, G., Steinicke, F., Gulberti, A., and Poetter-Nerger, M. (2017a). Analyses of gait parameters of younger & older adults during (non-)isometric virtual walking. IEEE Transactions on Visualization and Computer Graphics.

Janeh, O., Langbehn, E., Steinicke, F., Bruder, G., Gulberti, A., and Poetter-Nerger, M. (2017b). Walking in virtual reality: Effects of manipulated visual self-motion on walking biomechanics. ACM Transactions on Applied Perception (TAP), 14(2):12.

Kennedy, R. S., Lane, N. E., Berbaum, K., and Lilienthal, M. G. (1993). Simulator sickness questionnaire: An enhanced method for quantifying simulator sickness. The International Journal of Aviation Psychology, 3:203–220.

Kim, A., Darakjian, N., and Finley, J. M. (2017). Walking in fully immersive virtual environments: an evaluation of potential adverse effects in older adults and individuals with Parkinson's disease. Journal of NeuroEngineering and Rehabilitation, 14(1):16.

McMahan, R. P., Bowman, D. A., Zielinski, D. J., and Brady, R. B. (2012). Evaluating display fidelity and interaction fidelity in a virtual reality game. IEEE Transactions on Visualization and Computer Graphics, 18(4):626–633.

Mohler, B. J., Campos, J. L., Weyel, M., and Bülthoff, H. H. (2007). Gait parameters while walking in a head-mounted display virtual environment and the real world. In Proceedings of Eurographics, pages 85–88.

Nabiyouni, M. and Bowman, D. A. (2016). A taxonomy for designing walking-based locomotion techniques for virtual reality. In Proceedings of the 2016 ACM Companion on Interactive Surfaces and Spaces, pages 115–121. ACM.

Nabiyouni, M., Saktheeswaran, A., Bowman, D. A., and Karanth, A. (2015). Comparing the performance of natural, semi-natural, and non-natural locomotion techniques in virtual reality. In 3D User Interfaces (3DUI), 2015 IEEE Symposium on, pages 3–10. IEEE.

Niehorster, D. C., Li, L., and Lappe, M. (2017). The accuracy and precision of position and orientation tracking in the HTC Vive virtual reality system for scientific research. i-Perception, 8(3):2041669517708205.

Slater, M. (1999). Measuring presence: A response to the Witmer and Singer presence questionnaire. Presence: Teleoper. Virtual Environ., 8(5):560–565.

Slater, M., McCarthy, J., and Maringelli, F. (1998). The influence of body movement on subjective presence in virtual environments. Human Factors, 40(3):469–477. PMID: 9849105.

Slater, M., Usoh, M., and Steed, A. (1994). Depth of presence in virtual environments. Presence: Teleoper. Virtual Environ., 3(2):130–144.

Steinicke, F., Bruder, G., Jerald, J., Frenz, H., and Lappe, M. (2010). Estimation of detection thresholds for redirected walking techniques. IEEE Transactions on Visualization and Computer Graphics, 16(1):17–27.

Valkov, D., Martens, J., and Hinrichs, K. (2016). Evaluation of the effect of a virtual avatar's representation on distance perception in immersive virtual environments. In Virtual Reality (VR), 2016 IEEE, pages 305–306. IEEE.

HUCAPP 2019 - 3rd International Conference on Human Computer Interaction Theory and Applications
