Discriminant Patch Representation for RGB-D Face Recognition using Convolutional Neural Networks

Nesrine Grati¹, Achraf Ben-Hamadou² and Mohamed Hammami¹

¹ MIRACL-FS, Sfax University, Road Sokra Km 3 BP 802, 3018 Sfax, Tunisia

² Digital Research Center of Sfax, Technopark of Sfax, PO Box 275, 3021 Sfax, Tunisia

Keywords:

RGB-D Face Recognition, Convolutional Neural Networks, Data-Driven Representation, Discriminant Representation, Consumer RGB-D Sensors.

Abstract:

This paper focuses on designing data-driven models that learn a discriminant representation space for face recognition using RGB-D data. Unlike hand-crafted representations, learned models can extract and organize the discriminant information from the data and can adapt automatically, which makes it faster to build new computer vision applications. We propose an effective way to train Convolutional Neural Networks to learn discriminant face patch features. The proposed solution was tested and validated on state-of-the-art RGB-D datasets and showed competitive and promising results relative to standard hand-crafted feature extractors.

1 INTRODUCTION

With the increasing demand for robust security systems in real-life applications, several automated biometric systems for person identity recognition have been developed, in which the most user-friendly and non-invasive modality is the face. Face recognition from 2D images has been widely studied but remains affected by imaging conditions. Thanks to progress in 3D technology, recent research has shifted from 2D to 3D (Bowyer et al., 2006; Abbad et al., 2018). Indeed, a three-dimensional face representation ensures a reliable description of the surface shape and adds geometric shape information to the face appearance. More recently, some researchers have used image and depth data captured with low-cost RGB-D sensors such as the MS Kinect or Asus Xtion instead of bulky and expensive 3D scanners. In addition to color images, RGB-D sensors provide depth maps describing the 3D shape of the scene through active vision. With the availability of cost-effective RGB-D sensors, many researchers have proposed and adapted feature extraction operators for the raw data in different computer vision applications such as gait analysis (Wu et al., 2012), lip movement analysis (Rekik et al., 2016; Rekik et al., 2015b; Rekik et al., 2015a), and gender recognition (Huynh et al., 2012). Hand-crafted or engineered feature extractors such as LBP, Local Phase Quantization (LPQ), and HOG have mainly been used to handle RGB-D data for face recognition. The main benefit of these feature extractors is that they are relatively simple and efficient to compute. Alternatively, learned features, for example with Convolutional Neural Networks (CNNs), achieve very strong performance in many computer vision tasks (e.g., object detection (Szegedy et al., 2013) and image classification (Krizhevsky et al., 2012)). The basic idea is to learn data-driven models that transform the raw data into an optimal representation space, yielding appropriate features without manual intervention.

In this context, this paper focuses on the feature extraction part of our face recognition pipeline. A given face is represented by a set of patches extracted from image and depth data. We propose to learn discriminant local features using a data-driven representation to describe the face patches before feeding a Sparse Representation Classification (SRC) algorithm that assigns the person identity.

The rest of this paper is organized as follows. First, an overview of the most prominent RGB-D face recognition systems is given in Section 2. Then, we detail our proposed system in Section 3. Section 4 summarizes the experiments performed and the results obtained to validate our proposed system. Finally, we conclude this study in Section 5 with some observations and perspectives for future work.


Grati, N., Ben-Hamadou, A. and Hammami, M.

Discriminant Patch Representation for RGB-D Face Recognition using Convolutional Neural Networks.

DOI: 10.5220/0007403305100516

In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019), pages 510-516

ISBN: 978-989-758-354-4

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORKS

In this overview, we focus mainly on the feature extractors used for face recognition with RGB-D sensors, although many other aspects could be discussed, such as the pre-processing techniques or the overall classification schemes. In (Li et al., 2013), the nose tip is manually detected and the facial scans are aligned with a generic face model using the Iterative Closest Point (ICP) algorithm to normalize the head orientation and generate a canonical frontal view for both image and depth data. A symmetric filling process is applied to the missing depth data, specifically for non-frontal views. For image data, the Discriminant Color Space (DCS) operator is used as feature extractor. Then, the obtained frontal depth view and the DCS features are classified separately using SRC before late fusion to obtain the person identity.

(Hsu et al., 2014) fit a 3D face model to the face data to reconstruct a single 3D textured face model for each person in the gallery. The approach requires estimating the pose of any new probe so that it can be applied to all 3D textured models in the gallery. This makes it possible to generate 2D images by planar projection and then to compute the LBP descriptor on the whole projected 2D images, which are classified using an SRC algorithm. Likewise, (Sang et al., 2016) used the depth data for pose correction based on the ICP algorithm to render the gallery view under the same pose as the probe. However, contrary to (Hsu et al., 2014), the authors applied a Joint Bayesian Classifier to HOG descriptors extracted from both RGB and depth data, and the final decision is made via a weighted sum of their similarity scores.

From the discussion above, most of the focus is on pre-processing, especially on dealing with pose variation by aligning the query data to the gallery samples. Although this kind of sequential processing may lead to error propagation from pose estimation to classification, it gave very good results (Hsu et al., 2014). Alternatively, to deal with pose variation, (Ciaccio et al., 2013) used a large number of image sets in the gallery under different pose angles obtained from a single RGB-D sample. Also, the face pose is estimated via the detection and alignment of standard facial landmarks in the images (Zhu and Ramanan, 2012). Each face is then represented by a set of patches centered on the detected landmarks and described by a set of LBP descriptors, the covariance of edge orientation, and pixel location and intensity derivatives. The classification is then performed by computing distances between patch descriptors, inferring probabilities, and finally making a Bayesian decision.

The following works paid more attention to feature extraction from RGB-D face data than to pre-processing and head pose variation. In (Dai et al., 2015), Enhanced Local Mixed Derivative Pattern (ELMDP) descriptors are extracted, and a Nearest Neighbor algorithm is applied to the combined features with confidence weights. In (Goswami et al., 2014), a combination of HOG applied to saliency and entropy features and geometric attributes computed from the Euclidean distances between face landmarks is used as the face signature. A random forest classifier is then used for identity classification. In (Boutellaa et al., 2015), a series of hand-crafted feature extractors (i.e., LBP, LPQ, and HOG) are applied to texture and depth crops, and an SVM classifier is finally used for face identification. The only use of a carefully engineered representation was with the Binarized Statistical Image Features (BSIF) descriptor.

In (Kaashki and Safabakhsh, 2018), a three-dimensional constrained local model (CLM-Z) is applied for face modeling and landmark point localization. Local HOG, LBP, and 3DLBP features are extracted around the landmark points, and an SVM classifier is then used for classification.

(Hayat et al., 2016) proposed the first RGB-D image set classification approach for face recognition. For a given set of images (which can be frames captured with a Kinect sensor), the face regions and the head poses are first detected with the algorithm of (Fanelli et al., 2011) and then clustered into multiple subsets according to the estimated pose. A block-based covariance matrix representation with LBP features is applied to model each subset on the Riemannian manifold, and SVM classification is performed on all subsets for both modalities. The final decision is made by majority vote fusion. The proposed technique was evaluated on a combination of three RGB-D datasets and achieved an identification rate of 94.73%, which competes with the accuracy of single-image-based classification.

Observations. For the classification part, we observe that SRC is used in the most popular RGB-D face recognition systems (Li et al., 2013; Hsu et al., 2014). Indeed, after its successful application to face recognition in (Wright et al., 2009), SRC has attracted attention in many other computer vision tasks. Also, SRC is a good choice when the number of classes in the dataset is variable and constantly increasing, which is the case in face recognition applications.

We note that the most prominen t m ethods (Hsu

et al., 2014) (Li et al., 2013) aim principally to over-

come the issues of pose variation either with pose cor-

Discriminant Patch Representation for RGB-D Face Recognition using Convolutional Neural Networks

511

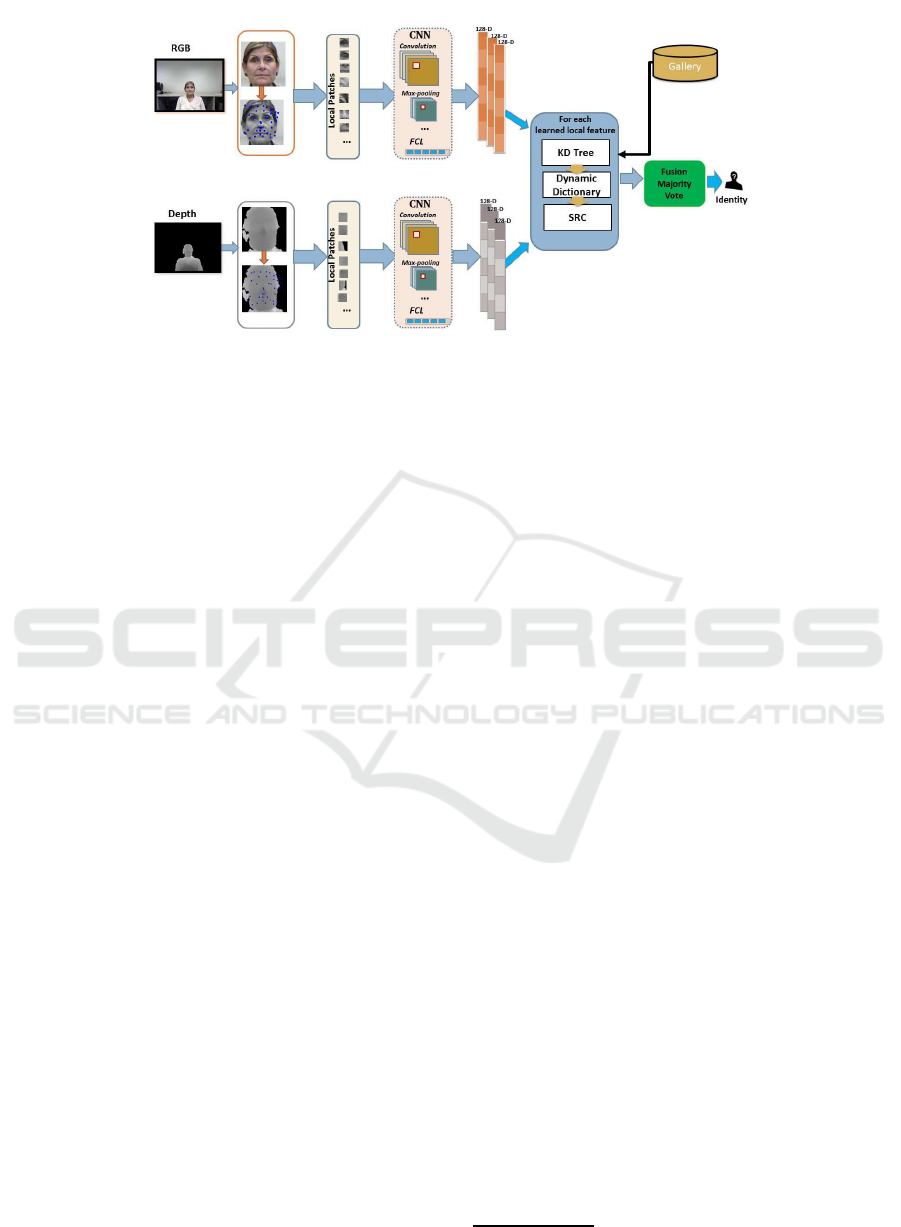

Figure 1: An overview of the online process of the proposed method. First, t he facial regions are detected from image and

depth data. Local patches are then extracted from both of the modalities. Afterward, two CNNs are used to transform the

extracted patches to obtain feature vectors to feed the SRC algorithm and fi nally obtain the person ID.

rection or with augmenting the gallery through ge-

nerating new images in different view or even cap-

turing multi-view d a ta for each face in the gallery.

Data representation and appropriate feature extraction

remains un derestimated in the aforemen tioned work s

and they settle for hand-crafted features combinations

and fusion. In this n ew era of deep learning techni-

ques, we believe that RGB-D face recog nition sys-

tems can take benefits of CNNs to le arn appropriate

features and boost their performances.

Contribution. The main objective of this paper is to highlight how CNNs can contribute to learning a discriminant representation of local regions in face images, and how this representation competes with standard hand-crafted feature extractors in the case of RGB-D face recognition. A given face is represented by a set of patches around detected salient key-points. Each of these patches is assigned an ID by the SRC technique, which associates the patch with one of the most similar patches in the database. The raw patch data (image and depth) are transformed using a CNN before feeding the classification part. We propose an effective approach to learn our CNN weights, leading to a discriminant space for face patch representation.

3 PROPOSED FACE RECOGNITION SYSTEM

The proposed approach involves online and offline phases that share some processing blocks. The offline phase trains our CNNs, while the online phase predicts the person identity given a face query. Figure 1 sketches the online phase, which proceeds as follows. First, the face is localized in the image. It is then represented by a set of patches cropped around key-points extracted on the face. Afterward, two trained CNNs are applied to transform these patches into one feature vector each, and an SRC algorithm assigns an ID to each feature vector before late fusion leads to the predicted identity. The remainder of this section details the processing blocks just introduced, including the training of the CNNs.

3.1 Face Pre-processing and Patch Extraction

The face pre-processing shared between the offline and online phases of our system includes median and bilateral filtering of the depth maps and face localization (Zhu and Ramanan, 2012)¹. The face region is cropped and resized to 96 × 96 pixels to ensure a normalized face spatial resolution. To avoid facial landmark localization, we only consider salient image key-points, without any further semantic analysis and without loss of generality. In other words, we do not try to catch specific anatomical reference points on the face. That said, the repeatability of image feature points for face analysis has been proven. We used the SURF operator (Bay et al., 2006) to extract interest key-points on the cropped face images. The number of extracted key-points is variable and depends on the face texture and on the position of the person in the frustum of the RGB-D camera. The key-point coordinates are mapped onto the depth crop using the sensor calibration parameters. Around each key-point, we crop two patches of 20 × 20 pixels, one from the image and one from the depth data. Again, the mapping between image and depth map can be ensured by the geometric calibration of the RGB-D sensor (Ben-Hamadou et al., 2013).

¹ We used only the face localization; facial landmarks were not used.

VISAPP 2019 - 14th International Conference on Computer Vision Theory and Applications
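The patch cropping step of Section 3.1 can be illustrated with a minimal sketch. It assumes the image and depth crops are already registered on the same 96 × 96 pixel grid and that key-points are given as (x, y) pixel coordinates; key-point detection (SURF in the paper) is not reproduced here. The function name `crop_patches` and the choice to skip key-points too close to the border are assumptions made for illustration only.

```python
import numpy as np

PATCH = 20  # patch side in pixels, as stated in the text


def crop_patches(image, depth, keypoints, size=PATCH):
    """Crop size x size patches around each key-point from both modalities.

    Assumes `image` and `depth` share the same pixel grid (already registered)
    and `keypoints` is a list of (x, y) coordinates. Key-points that cannot
    yield a full patch inside the crop are skipped (an illustrative choice).
    """
    half = size // 2
    h, w = depth.shape[:2]
    img_patches, depth_patches = [], []
    for x, y in keypoints:
        x, y = int(round(x)), int(round(y))
        top, left = y - half, x - half
        if top < 0 or left < 0 or top + size > h or left + size > w:
            continue  # patch would fall outside the face crop
        img_patches.append(image[top:top + size, left:left + size])
        depth_patches.append(depth[top:top + size, left:left + size])
    return img_patches, depth_patches
```

Each returned pair of patches shares the same key-point, so the image patch and the depth patch describe the same face region.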

3.2 CNN Architecture and Training

Since the CNN input patches are small (20 × 20 pixels), we designed a relatively shallow CNN architecture, described in Table 1. It is worth noting that at this stage of the study we did not try complex architectures or more elaborate connectivity (skip connections, residual blocks, etc.), but these could be explored in future work. We train separate models with the same architecture for each modality (i.e., image and depth patches).
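As a rough illustration, the layer stack listed in Table 1 can be written in PyTorch as follows. This is a sketch under stated assumptions, not the authors' code: the class name `PatchCNN` is hypothetical, and the number of input channels is not given in Table 1, so it is exposed as a parameter (1 for depth patches; 1 or 3 for image patches depending on how they are encoded).

```python
import torch
import torch.nn as nn


class PatchCNN(nn.Module):
    """Shallow CNN following Table 1; maps a 20x20 patch to a 128-d vector."""

    def __init__(self, in_channels=1, embedding_dim=128):
        super().__init__()
        # in_channels is an assumption: Table 1 does not specify it.
        self.features = nn.Sequential(
            nn.Conv2d(in_channels, 6, kernel_size=3),  # -> (6, 18, 18)
            nn.BatchNorm2d(6),
            nn.Sigmoid(),
            nn.MaxPool2d(kernel_size=2),               # -> (6, 9, 9)
            nn.Conv2d(6, 32, kernel_size=3),           # -> (32, 7, 7)
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),               # -> (32, 3, 3)
        )
        self.fc = nn.Linear(32 * 3 * 3, embedding_dim)  # idim 288 -> odim 128

    def forward(self, x):
        x = self.features(x)
        return self.fc(torch.flatten(x, start_dim=1))
```

With unpadded 3 × 3 convolutions and 2 × 2 pooling, the spatial size goes 20 → 18 → 9 → 7 → 3, which gives the 32 × 3 × 3 = 288 inputs of the fully connected layer. One such model would be instantiated per modality.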

Research on face analysis usually focuses on improving face characterization with a discriminant and robust representation. Learning descriptors with neural networks is an entirely data-driven approach. The objective of discriminant descriptor learning is to find a transformation from the raw space to another space in which features from the same class are closer than features from different classes. Metric learning using a triplet network was introduced by Google's FaceNet (Schroff et al., 2015), where a triplet loss with online triplet mining is used to train an embedding space for face images, which outperforms Siamese networks in manifold clustering. A good face embedding satisfies a similarity constraint: faces of the same person should be close together, while faces of different persons should be far from each other. The intra-class distance should be smaller than the inter-class distance, so that the embeddings form well-separated clusters.

Table 1: Our CNN architecture for small RGB-D patches. odim stands for the number of channels in the output tensors, idim is the number of channels in the input tensors, and ks is the kernel size.

| Layer | Parameters | Output tensor |
|---|---|---|
| Convolution | odim: 6, ks: 3 | (6,18,18) |
| BatchNorm | | (6,18,18) |
| Sigmoid | | (6,18,18) |
| Max Pooling | ks: 2 | (6,9,9) |
| Convolution | odim: 32, ks: 3 | (32,7,7) |
| ReLU | | (32,7,7) |
| Max Pooling | ks: 2 | (32,3,3) |
| FCL | idim: 288, odim: 128 | 128 |

Figure 2: Local feature descriptor training pipeline with triplet loss.

In this paper, the triplet loss takes a triplet of patches (a, p, n) as input, where a is the anchor patch, p is the positive patch, i.e., a different sample of the same class as a, and n is the negative patch, i.e., a sample belonging to a different class. The objective of the optimization process is to update the parameters of the network in such a way that patches a and p become closer in the embedded feature space, while a and n are pushed further apart in terms of their Euclidean distances, as presented in Figure 2. The triplet loss formula is given in Equation 1, where $f(x)$ denotes the application of the CNN to a given input x to generate a feature vector (embedding). An additional hyperparameter, the margin, is added to the loss equation; it defines how far apart dissimilar patches should be. Minimizing $L_{tr}$ pushes the Euclidean distance between patches from different classes to exceed the distance between the anchor and positive features. For efficient training, only the patch triplets that satisfy the constraint $L_{tr} > 0$ are selected online as valid triplets.

$$L_{tr} = \sum_{a,p,n} \left( \|f(a) - f(p)\|_2^2 - \|f(a) - f(n)\|_2^2 + \text{margin} \right) \tag{1}$$
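To make Equation 1 and the valid-triplet rule concrete, the following NumPy sketch evaluates the per-triplet term on a batch of embeddings and sums only the terms that are strictly positive, which matches the hinge form used in FaceNet-style training. The function names and the default margin of 0.2 are assumptions; the paper does not report the margin value.

```python
import numpy as np


def triplet_terms(f_a, f_p, f_n, margin):
    """Per-triplet value ||f(a)-f(p)||^2 - ||f(a)-f(n)||^2 + margin.

    f_a, f_p, f_n are arrays of shape (batch, dim) holding the embeddings
    of the anchor, positive and negative patches.
    """
    d_ap = np.sum((f_a - f_p) ** 2, axis=1)
    d_an = np.sum((f_a - f_n) ** 2, axis=1)
    return d_ap - d_an + margin


def triplet_loss(f_a, f_p, f_n, margin=0.2):
    """Sum the terms of the valid triplets only (those with a value > 0).

    The default margin is an illustrative assumption.
    """
    terms = triplet_terms(f_a, f_p, f_n, margin)
    valid = terms > 0
    return float(np.sum(terms[valid])), valid
```

A triplet whose negative is already further from the anchor than the positive by more than the margin has a non-positive term, so it contributes nothing and is skipped.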

3.3 Patch Classification

We followed (Grati et al., 2016) for the adaptive and dynamic dictionary selection in the SRC process. The objective of SRC is to reconstruct an input signal by a linear combination of atoms from a selected dictionary. We denote by $y_k \in \mathbb{R}^{M}$ the input feature vector obtained by applying the trained CNN to the k-th extracted patch, where $M$ is its dimension. Also, we denote by $\tilde{D}_k \in \mathbb{R}^{M \times \tilde{N}}$ the dictionary, which consists of the $\tilde{N}$ closest gallery patches (atoms). Equation 2 gives the linear regression leading to the reconstructed feature vector $\tilde{y}_k$, where $x_k \in \mathbb{R}^{\tilde{N}}$ is the sparse coefficient vector whose nonzero values are related to the atoms in $\tilde{D}_k$ used for the reconstruction of $y_k$, $\varepsilon_k$ captures noise, and $\tilde{N}$ is fixed experimentally.

$$\tilde{y}_k = \tilde{D}_k x_k + \varepsilon_k \tag{2}$$

The estimation of the sparse coefficients $x_k$ is formulated as a LASSO problem with an $\ell_1$ minimization, solved using (Mairal et al., 2010):

$$\arg\min_{x_k} \left( \|\tilde{D}_k x_k - y_k\|_2 + \lambda \|x_k\|_1 \right) \tag{3}$$


Finally, the identity associated with the k-th patch is classically the class yielding the smallest reconstruction error. Once the sparse representation of all local patches in the query image is obtained, a score-level fusion strategy is applied, and a majority vote rule then predicts the final person identity.
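The patch classification chain described in this section can be sketched as follows. Several points are assumptions made for illustration: the paper solves the LASSO problem with the method of (Mairal et al., 2010), whereas this sketch swaps in a plain ISTA loop on the standard squared-error LASSO objective; the function names, the values of `n_atoms` and `lam`, and the use of Euclidean distance for choosing the closest atoms are hypothetical. The score-level fusion across modalities is not detailed in the text, so only the majority vote is shown.

```python
from collections import Counter

import numpy as np


def select_atoms(y, gallery, labels, n_atoms):
    """Keep the n_atoms gallery columns closest to y (Euclidean distance)."""
    dist = np.linalg.norm(gallery - y[:, None], axis=0)
    idx = np.argsort(dist)[:n_atoms]
    return gallery[:, idx], labels[idx]


def ista_lasso(D, y, lam=0.01, n_iter=500):
    """Minimize 0.5 * ||D x - y||_2^2 + lam * ||x||_1 with ISTA."""
    step = 1.0 / (np.linalg.norm(D, 2) ** 2 + 1e-12)  # 1 / Lipschitz constant
    x = np.zeros(D.shape[1])
    for _ in range(n_iter):
        z = x - step * D.T @ (D @ x - y)  # gradient step on the quadratic term
        x = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft threshold
    return x


def classify_patch(y, gallery, labels, n_atoms=10, lam=0.01):
    """Return the class whose atoms give the smallest reconstruction error."""
    D, lab = select_atoms(y, gallery, labels, n_atoms)
    x = ista_lasso(D, y, lam)
    residuals = {}
    for c in np.unique(lab):
        mask = lab == c
        residuals[c] = np.linalg.norm(y - D[:, mask] @ x[mask])
    return min(residuals, key=residuals.get)


def majority_vote(patch_ids):
    """Predict the person identity as the most frequent patch-level ID."""
    return Counter(patch_ids).most_common(1)[0][0]
```

Here `gallery` holds one gallery feature vector per column and `labels` gives the person ID of each column, so restricting the coefficients to the columns of one class yields that class's reconstruction of the query patch.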

4 EXPERIMENTS AND RESULTS

4.1 Training Details

Our CNNs were trained with a batch size of 64, a decay value of 0.0005, a momentum value of 0.09, and an initial learning rate of 0.001. We used the PyTorch² framework to implement and train the CNNs. First, the set of patches is split into training and testing sets in the usual way. The pool of patch triplets needed for CNN training is generated and updated every ten epochs by gathering triplets equally from all persons. A single patch triplet is obtained in the following 3 steps:

1. Randomly select one anchor patch from the pool of patches related to a given person c.

2. Randomly select the positive patch from the remaining patches in the same pool.

3. Randomly select the negative patch from the patch pool related to other persons (≠ c).
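The three steps above can be sketched in plain Python. The names `sample_triplet`, `build_triplet_pool`, and `triplets_per_person` are hypothetical, and step 3 is interpreted here as first drawing another person uniformly and then one of that person's patches, which is only one possible reading of the text.

```python
import random


def sample_triplet(pools, person, rng=random):
    """Draw one (anchor, positive, negative) triplet for a given person.

    `pools` maps each person ID to a list of patches (at least two each).
    """
    # Steps 1 and 2: two distinct patches of the same person.
    anchor_idx, positive_idx = rng.sample(range(len(pools[person])), 2)
    # Step 3: a patch from another person (uniform over persons: assumption).
    other = rng.choice([p for p in pools if p != person])
    negative = rng.choice(pools[other])
    return pools[person][anchor_idx], pools[person][positive_idx], negative


def build_triplet_pool(pools, triplets_per_person, rng=random):
    """Gather the same number of triplets from every person."""
    return [
        sample_triplet(pools, person, rng)
        for person in pools
        for _ in range(triplets_per_person)
    ]
```

In the training schedule described above, a function such as `build_triplet_pool` would be called again every ten epochs to refresh the pool.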

4.2 Datasets

Our approach is validated on two publicly available RGB-D face databases: Eurecom (Huynh et al., 2012) and CurtinFaces (Li et al., 2013).

• The Eurecom dataset is composed of 52 subjects, 14 females and 38 males. Each person has a set of 9 images in each of two different sessions. Each session contains 9 settings: neutral, smiling, open mouth, illumination variation, left and right profile, occlusion of the eyes, occlusion of the mouth, and finally occlusion with a white paper sheet.

• The CurtinFaces dataset consists of 52 subjects. Each subject has 97 images captured under different variations: combinations of 7 facial expressions, 7 poses, 5 illuminations, and 2 occlusions. With its low-quality face models, the CurtinFaces database is more challenging in terms of pose, expression, and illumination variations.

² https://pytorch.org/

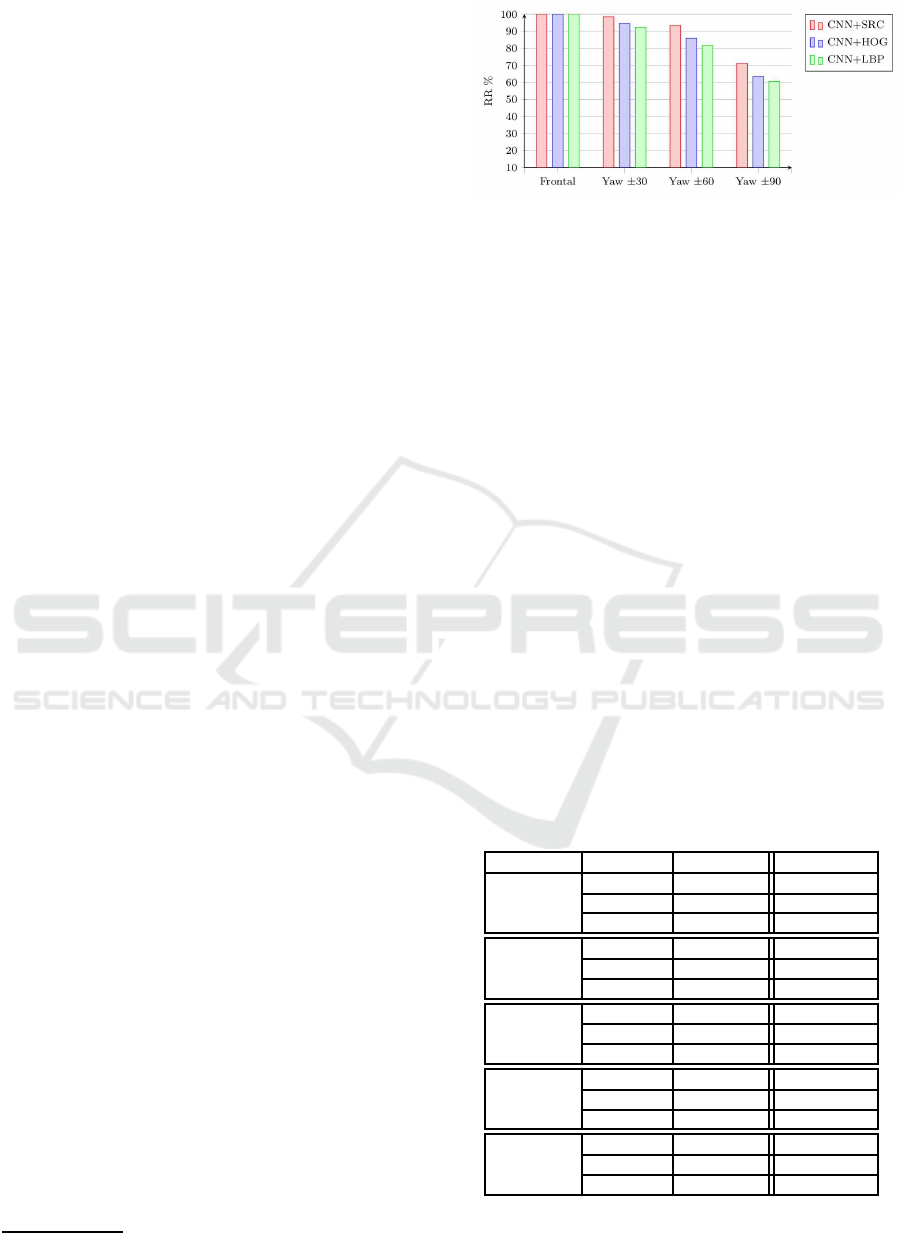

Figure 3: Performance comparison between our CNN features and the standard hand-crafted features HOG and LBP.

4.3 Validation and Results

We performed two sets of experiments to evaluate our approach. The first set compares our system with state-of-the-art systems, and the second set evaluates the proposed learned features against hand-crafted features (i.e., HOG and LBP), taking our face recognition system as the baseline.

For the first set of experiments, on the CurtinFaces dataset, we report our results as well as those of (Hsu et al., 2014), (Li et al., 2013), (Ciaccio et al., 2013), (Kaashki and Safabakhsh, 2018), and (Grati et al., 2016). To make a fair comparison, we use the same protocol as (Li et al., 2013): 18 images containing only one kind of variation (illumination, pose, or expression) are selected for training, and the testing images comprise the rest of the non-occluded faces. There are a total of 6 different yaw poses with 6 different expressions, in addition to the neutral frontal views. The results of our system on RGB, depth, and the fusion scheme, in comparison with (Li et al., 2013), are summarized in Table 2.

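Table 2: Face recognition performance under yaw pose and facial expression variations.

| Pose | Modality | Our Work | DCS+SRC |
|---|---|---|---|
| Frontal | RGB | 100% | 100% |
| Frontal | Depth | 100% | 100% |
| Frontal | Fusion | 100% | 100% |
| Yaw ±30 | RGB | 99.03% | 99.8% |
| Yaw ±30 | Depth | 94.55% | 88.3% |
| Yaw ±30 | Fusion | 98.40% | 99.4% |
| Yaw ±60 | RGB | 93.75% | 97.4% |
| Yaw ±60 | Depth | 86.05% | 87.0% |
| Yaw ±60 | Fusion | 93.43% | 98.2% |
| Yaw ±90 | RGB | 70.2% | 83.7% |
| Yaw ±90 | Depth | 63.45% | 74% |
| Yaw ±90 | Fusion | 71.15% | 84.6% |
| Average | RGB | 94.6% | 96.70% |
| Average | Depth | 88.67% | 86.65% |
| Average | Fusion | 94.23% | 96.98% |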

The presented results demonstrate that our method performs comparably to the aforementioned work, which relies on an expensive pre-processing stage to frontalize the face and symmetrically fill the parts self-occluded by head rotation. Overall rates of 94.6%, 88.67%, and 94.23% are achieved with our system for RGB, depth, and multimodal data, respectively. Clearly, our RGB performance needs to be improved, but our depth performance appears better than that reported in (Li et al., 2013), which demonstrates the importance and the effectiveness of representing data with learned discriminant features to overcome challenging conditions. In addition, Table 3 presents our results in comparison with the best-performing state-of-the-art techniques, namely (Ciaccio et al., 2013; Hsu et al., 2014), and some recent works (Grati et al., 2016; Kaashki and Safabakhsh, 2018).

Table 3: Performance of different approaches on the CurtinFaces database.

| Pose | Our | Cov+LBP | LBP+SRC | DCS+SRC | BSIF+SRC | HLF+SVM |
|---|---|---|---|---|---|---|
| Frontal | 100% | N/A | 100% | 100% | 100% | 100% |
| Yaw ±30 | 98.40% | 94.2% | 99.4% | 99.8% | 99.04% | 90.3% |
| Yaw ±60 | 93.43% | 84.6% | 98.2% | 97.4% | 95.51% | 58.6% |
| Yaw ±90 | 71.15% | 75.0% | 93.5% | 83.7% | 60.58% | N/A |

Our system outperforms (Ciaccio et al., 2013) (Cov+LBP) by a margin of about 4% for Yaw ±30 and by ≈ 9% for Yaw ±60. The drop in performance for the Yaw ±90 set can be explained by the fact that the CurtinFaces database contains only two samples for the left and right ±90 poses. That is, if we take one sample for testing, no corresponding pose remains in the gallery. In contrast, and as reported in the related work section, the pre-processing steps of (Ciaccio et al., 2013; Hsu et al., 2014; Li et al., 2013) tackle this issue, as they either augment the gallery by generating new poses, correct the pose, or symmetrically fill the self-occluded part of the face. Beyond these well-engineered pre-processing steps, in this work we focus on estimating an optimal RGB-D data representation for face recognition applications.

Our study is also compared to (Kaashki and Safabakhsh, 2018) (labeled HLF+SVM in Table 3), as it uses a patch representation around landmark points. We observe that our performance is better, with a gain of 8% for Yaw ±30 and an improvement of more than 30% for Yaw ±60. These results highlight the added value of learned features for deriving more discriminant local representations compared to standard hand-crafted features (i.e., HOG, LBP, and 3DLBP), and they support the use of salient points without interpreting the face structure.

On the Eurecom database, the first session set is selected for training and the second one for testing. The learned feature descriptor yields 90.82% for texture images, 85.57% for depth data, and 92.70% after fusion, which is better than the recognition rate obtained by RISE (Goswami et al., 2014) (i.e., 89.0% after fusion).

The second set of experiments compares our CNN learned features to hand-crafted features, taking our system as the baseline, in order to highlight the added value of CNN learned features. Three versions of our system are tested, in which the only changed part is the feature extraction: HOG+SRC, LBP+SRC, and CNN+SRC. As shown in Figure 3, CNN+SRC outperforms the other systems on all the test subsets.

Based on all the results and comparisons, we conclude that CNN learned features can achieve competitive identification performance for person recognition from a low-cost sensor under challenging pose and expression variations, without any prior face analysis (e.g., face pose estimation, facial landmark detection, etc.).

5 CONCLUSION

In this paper, we proposed a data-driven representation for RGB-D face recognition. A given face is represented by a set of patches around detected salient key-points, to which CNN transformations are applied to extract the learned local descriptors for each modality separately before performing SRC classification. The experimental results obtained on benchmark RGB-D databases highlight the added value of deep-learned local features compared to standard hand-crafted feature extractors. In future work, we plan to extend our system by learning a multi-modal representation that combines texture and depth data. With an appropriate CNN, the fusion strategy for RGB-D data will take into consideration the complementarity between depth and image data to enhance the recognition performance.

REFERENCES

Abbad, A., Abbad, K., and Tairi, H. (2018). 3d face recognition: Multi-scale strategy based on geometric and local descriptors. Computers & Electrical Engineering, 70:525–537.

Bay, H., Tuytelaars, T., and Van Gool, L. (2006). Surf: Speeded up robust features. In Computer vision–ECCV 2006, pages 404–417. Springer.

Ben-Hamadou, A., Soussen, C., Daul, C., Blondel, W., and Wolf, D. (2013). Flexible calibration of structured-light systems projecting point patterns. Computer Vision and Image Understanding, 117(10):1468–1481.

Boutellaa, E., Hadid, A., Bengherabi, M., and Ait-Aoudia, S. (2015). On the use of kinect depth data for identity, gender and ethnicity classification from facial images. Pattern Recognition Letters, 68:270–277.

Bowyer, K. W., Chang, K., and Flynn, P. (2006). A survey of approaches and challenges in 3d and multi-modal 3d+ 2d face recognition. Computer vision and image understanding, 101(1):1–15.

Ciaccio, C., Wen, L., and Guo, G. (2013). Face recognition robust to head pose changes based on the rgb-d sensor. In Biometrics: Theory, Applications and Systems (BTAS), 2013 IEEE Sixth International Conference on, pages 1–6. IEEE.

Dai, X., Yin, S., Ouyang, P., Liu, L., and Wei, S. (2015). A multi-modal 2d+ 3d face recognition method with a novel local feature descriptor. In Applications of Computer Vision (WACV), 2015 IEEE Winter Conference on, pages 657–662. IEEE.

Fanelli, G., Gall, J., and Van Gool, L. (2011). Real time head pose estimation with random regression forests. In Computer Vision and Pattern Recognition (CVPR), 2011 IEEE Conference on, pages 617–624. IEEE.

Goswami, G., Vatsa, M., and Singh, R. (2014). Rgb-d face recognition with texture and attribute features. Information Forensics and Security, IEEE Transactions on, 9(10):1629–1640.

Grati, N., Ben-Hamadou, A., and Hammami, M. (2016). A scalable patch-based approach for rgb-d face recognition. In International Conference on Neural Information Processing, pages 286–293. Springer.

Hayat, M., Bennamoun, M., and El-Sallam, A. A. (2016). An rgb–d based image set classification for robust face recognition from kinect data. Neurocomputing, 171:889–900.

Hsu, G.-S. J., Liu, Y.-L., Peng, H.-C., and Wu, P.-X. (2014). Rgb-d-based face reconstruction and recognition. Information Forensics and Security, IEEE Transactions on, 9(12):2110–2118.

Huynh, T., Min, R., and Dugelay, J.-L. (2012). An efficient lbp-based descriptor for facial depth images applied to gender recognition using rgb-d face data. In Computer vision-ACCV 2012 workshops, pages 133–145. Springer.

Kaashki, N. N. and Safabakhsh, R. (2018). Rgb-d face recognition under various conditions via 3d constrained local model. Journal of Visual Communication and Image Representation, 52:66–85.

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems, pages 1097–1105.

Li, B. Y., Mian, A., Liu, W., and Krishna, A. (2013). Using kinect for face recognition under varying poses, expressions, illumination and disguise. In Applications of Computer Vision (WACV), 2013 IEEE Workshop on, pages 186–192. IEEE.

Mairal, J., Bach, F., Ponce, J., and Sapiro, G. (2010). Online learning for matrix factorization and sparse coding. The Journal of Machine Learning Research, 11:19–60.

Rekik, A., Ben-Hamadou, A., and Mahdi, W. (2015a). Human Machine Interaction via Visual Speech Spotting. In Advanced Concepts for Intelligent Vision Systems, number 9386 in Lecture Notes in Computer Science, pages 566–574. Springer International Publishing.

Rekik, A., Ben-Hamadou, A., and Mahdi, W. (2015b). Unified System for Visual Speech Recognition and Speaker Identification. In Advanced Concepts for Intelligent Vision Systems, number 9386 in Lecture Notes in Computer Science, pages 381–390. Springer International Publishing.

Rekik, A., Ben-Hamadou, A., and Mahdi, W. (2016). An adaptive approach for lip-reading using image and depth data. Multimedia Tools and Applications, 75(14):8609–8636.

Sang, G., Li, J., and Zhao, Q. (2016). Pose-invariant face recognition via rgb-d images. Computational intelligence and neuroscience, 2016:13.

Schroff, F., Kalenichenko, D., and Philbin, J. (2015). Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 815–823.

Szegedy, C., Toshev, A., and Erhan, D. (2013). Deep neural networks for object detection. In Advances in neural information processing systems, pages 2553–2561.

Wright, J., Yang, A. Y., Ganesh, A., Sastry, S. S., and Ma, Y. (2009). Robust face recognition via sparse representation. Pattern Analysis and Machine Intelligence, IEEE Transactions on, 31(2):210–227.

Wu, D., Zhu, F., and Shao, L. (2012). One shot learning gesture recognition from rgbd images. In Computer Vision and Pattern Recognition Workshops (CVPRW), 2012 IEEE Computer Society Conference on, pages 7–12. IEEE.

Zhu, X. and Ramanan, D. (2012). Face detection, pose estimation, and landmark localization in the wild. In Computer Vision and Pattern Recognition (CVPR), 2012 IEEE Conference on, pages 2879–2886. IEEE.
