HFDSegNet: Holistic and Generalized Finger Dorsal ROI Segmentation Network

Gaurav Jaswal¹, Shreyas Patil², Kamlesh Tiwari³ and Aditya Nigam¹

¹ School of Computing and Electrical Engineering, Indian Institute of Technology Mandi, India

² Department of Electrical Engineering, Indian Institute of Technology Jodhpur, India

³ Department of Computer Science and Information Systems, Birla Institute of Technology and Science Pilani, India

Keywords: Faster RCNN, Finger Knuckle Biometrics.

Abstract: Existing studies on finger knuckle image recognition report that true features are difficult to detect precisely in poorly segmented images, and that poor segmentation is the main cause of matching errors. Accurate segmentation of the region of interest (ROI) is therefore crucial for achieving superior recognition results. In this paper, we propose a novel holistic and generalized segmentation network (HFDSegNet) that automatically assigns a given finger dorsal image, obtained from multiple sensors, to a particular class and then accurately extracts the three possible ROIs (major knuckle, minor knuckle and nail). To the best of our knowledge, this is the first attempt in which an end-to-end trained object detector, inspired by the deep learning technique Faster R-CNN (Region based Convolutional Neural Network), is employed to detect and localize the finger knuckles and nail, even when the finger images exhibit blur, occlusion, low contrast, etc. Experiments are carried out on two publicly available databases, the PolyU contact-less FKI data-set and the PolyU FKP database. The proposed network is trained on only 500 randomly selected images per database, and the results demonstrate the outstanding performance of the proposed ROI segmentation network.

1 INTRODUCTION

Biometric authentication solutions have been used in large-scale security and privacy applications such as mobile devices, surveillance, etc. (Jain et al., 2004). As far as the palmar region of the hand is concerned, palm-print, fingerprint, palm vein and hand geometry are the ideal biometric traits (Bera et al., 2014). In earlier studies, the fingerprint provided the basis for personal identification. Despite its extensive usage, the fingerprint requires high-quality images (> 400 dpi) for accurate results, and its features deteriorate with sharp cuts or injuries, which limits its role in certain commercial applications (Kumar and Kwong, 2013). Also, the fingerprint quality of laborers or cultivators is often not good enough to be used for recognition (Jaswal et al., 2017b). Similarly, despite having a larger ROI, a palm consists of limited systematic line features and may be subject to impostor attacks, because people often leave their palm prints or fingerprints behind unintentionally. The geometrical features of the palm or finger, in turn, are not very unique for identification (). The vein traits of the hand are distinctive and difficult to spoof but require extra imaging devices (Kumar and Prathyusha, 2009). In contrast, the skin patterns on the finger dorsal surface are naturally preserved and largely unaffected (Jaswal et al., 2016).

1.1 Finger Knuckle Anatomy and Challenges

The basic epidermal structure appearing on the dorsal surface of the finger is called the finger knuckle image (FKI) pattern (Zhang et al., 2010). It mainly consists of rich convex-like lines, corner points, skin folds, and gray-mutation regions, specifically around the finger joints. The three joints in a finger lie between the bone groups called the distal, proximal, and middle phalanx (Kumar and Xu, 2016). The epidermal cells near the knuckle mature at a very early stage of development and rarely change during adult life. The failure to enroll (FTE) rate of the knuckle is observed to be lower, and the pattern can be acquired easily using an inexpensive setup with less user cooperation (Jaswal et al., 2017a).

Moreover, the dorsal knuckle patterns are invariant to emotions and behavioural aspects and cannot be easily manipulated (Kumar and Xu, 2016). However, several challenges in FKI recognition remain to be solved for superior performance: (1) tracking the middle knuckle line for FKI registration, because the position of the fingers deviates during contact-less acquisition; (2) controlling varying illumination and lighting effects in outdoor conditions; (3) automatically and consistently segmenting the major knuckle, minor knuckle and nail ROIs; (4) improving the matching accuracy of major knuckle, minor knuckle and nail recognition; and (5) developing non-uniform databases whose images incorporate real-world situations such as a non-stretched palm or bent fingers.

Jaswal, G., Patil, S., Tiwari, K. and Nigam, A.

HFDSegNet: Holistic and Generalized Finger Dorsal ROI Segmentation Network.

DOI: 10.5220/0007568307860793

In Proceedings of the 8th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2019), pages 786-793

ISBN: 978-989-758-351-3

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

1.2 Problem Statement

Despite the possibility of simultaneous acquisition and the highly stable patterns of the major and minor finger knuckles, FKI is still not mature enough to be used on its own in personal security applications such as mobile devices, forensics, etc. Combining the major and minor finger knuckles may improve the performance of single-modality FKI recognition systems. However, performance is often affected by inconsistent ROI segmentation, varying lighting conditions, sensor accuracy, etc., and it is important to minimize these factors at the initial stage. In particular, consistent ROI extraction plays a key part in the performance of a biometric system, since the successive processing units have to work on the extracted ROI. Therefore, exact ROI segmentation of the major and minor knuckles is crucial for establishing point-wise correspondence between image patches. In view of the FKI challenges mentioned above, this work proposes to automatically segment the major and minor (upper) finger knuckles on the PolyU FKI data-set using deep learning. Moreover, we investigate another region of interest near the finger nail as part of the finger dorsal features, which has not yet attracted attention. To date, no other work has been reported that exploits the nail feature on this data-set.

Contribution: We pose a two-fold problem: an image classification task (handled via ResNet) for categorizing the multi-sensor input data, and an ROI segmentation task for extracting four ROIs (1-PolyU FKP; 3-PolyU FKI), handled via a modified R-CNN. Our proposed holistic and generalized Finger Knuckle Segmentation Network (HFDSegNet) provides a single, fully automated network for finger knuckle image ROI extraction that can be trained on, and performs well for, two types of finger knuckle databases. In this architecture, a trained ResNet50 model (He et al., 2016) is first used to identify the object class of the given finger knuckle image. The classified finger image is then given as input to a modified deep learning network, which provides the three regions of interest in that image, as shown in Fig. 1. In practice, the deep learning network has been trained in much the same way as the state-of-the-art Faster R-CNN (Girshick et al., 2014), by explicitly reformulating the layers as learning functions. It can also classify the extracted ROIs of a particular data-set into their respective classes (major knuckle, minor knuckle or finger nail), so that each can later be matched against the appropriate gallery sample. For more rigorous experimentation, we use two publicly available finger knuckle databases, i.e., the PolyU contact-less finger knuckle image (FKI) database (2006-13) and the PolyU finger knuckle print (FKP) data-set (2009). To the best of our knowledge, this is the first holistic deep learning architecture used to classify and localize the ROI of any type of finger knuckle image. One major implementation breakthrough is that we managed to train the entire network with only 500 images per data-set, whereas deep learning networks generally need a huge amount of data to train. The performance of the ROI segmentation algorithm is measured in terms of IOU, accuracy, precision, and recall. The remainder of the article is organized as follows: Section 2 summarizes the state-of-the-art studies on FKI recognition. Section 3 presents the proposed ROI extraction algorithm, including image classification and the training/testing strategy. Experimental results and comparative analysis are presented in Section 4. Finally, the important findings and future scope are given in the last section.

2 RELATED WORK

State-of-the-art studies in the area of finger knuckle recognition can be grouped into the following main stages: ROI extraction, quality assessment, ROI enhancement, feature extraction and, finally and most crucially, matching.

2.1 ROI Extraction

The major finger knuckle is the most studied biometric identifier among knuckle print studies, whereas the minor finger knuckle and nail are still largely unexplored. Most major knuckle ROI extraction methods are based on the local convexity characteristics of the line patterns in the middle knuckle region. In (Zhang et al., 2010), the authors computed a convexity magnitude to detect the center of the middle finger joint, encoding image pixels as (1, -1) for that purpose. In (Nigam et al., 2016), the authors proposed locating the middle knuckle point so that the finger knuckle ROI can be segmented consistently. They used the magnitude responses of two curvature Gabor filters with fine-tuned parameters to locate the central knuckle line. In (Kumar and Xu, 2016), the authors proposed an ROI segmentation framework for major and minor finger knuckles using local image processing operations on contact-less images.

Figure 1: Finger Knuckle Image Annotation: (a) PolyU FKI Sample, (b) PolyU FKP Sample.

2.2 Feature Extraction/Classification

In (Zhang et al., 2009), the authors proposed the competitive code, in which the orientation information of the major knuckle pattern is extracted using a Gabor filter. In (Kumar and Ravikanth, 2009), the authors addressed the problems that challenging knuckle images cause in finger knuckle recognition. In (Kumar and Prathyusha, 2009), the authors presented a biometric fusion of knuckle shape and vein features to validate the identity of individuals. In (Kumar, 2012), the use of upper minor finger knuckles for personal identification was presented for the first time. In another hand dorsal study (Kumar, 2014), the importance of lower minor finger knuckles and the palm dorsal region for personal authentication is discussed. In (Jaswal et al., 2017a), the authors presented a score-level fusion of multiple texture features obtained from local transformation schemes and performed multi-scale matching. In recent work (Chlaoua et al., 2018), the authors extracted middle knuckle features using the deep learning based PCANet model and performed hashing and multi-class classification. In another recent work (Zhai et al., 2018), the authors sought to improve on the recognition performance of hand-crafted features and proposed a Convolutional Neural Network (CNN) architecture with data augmentation and batch normalization.

3 PROPOSED ROI EXTRACTION NETWORK

To date, no research work has adopted deep methods for FKI ROI segmentation. We propose an end-to-end deep network architecture for FKI ROI segmentation that can effectively reduce poor segmentation results. The main aim of the proposed ROI extraction approach is to consistently segment fixed-size ROIs from the proximal interphalangeal (PIP), distal interphalangeal (DIP), and finger nail regions. For this, a two-stage end-to-end network (HFDSegNet) has been trained using a modified Faster R-CNN, which takes any finger image as input and outputs the different types of ROI.

3.1 HFDSegNet: The Network Architecture

Various deep learning methods have been developed and have achieved significant progress in object classification and localization. The goal of any image classification task is to train a model that correctly classifies an input image. The proposed HFDSegNet consists of two main stages: (i) object classification with ResNet and (ii) object localization with a modified R-CNN. In this work, we use two state-of-the-art finger knuckle databases, namely the PolyU FKP and the PolyU contact-less FKI databases.

3.1.1 Classification Network: ResNet-50

The proposed HFDSegNet is trained so that it first categorizes the given input image into either Class-1 (PolyU FKP) or Class-2 (PolyU contact-less FKI) for the next level of processing. For this, the well-known state-of-the-art model ResNet50 has been used because it is easy to implement and train. Other ResNet variants (ResNet-101, ResNet-152) are available, but this particular model classifies the given input image into the two categories more accurately.


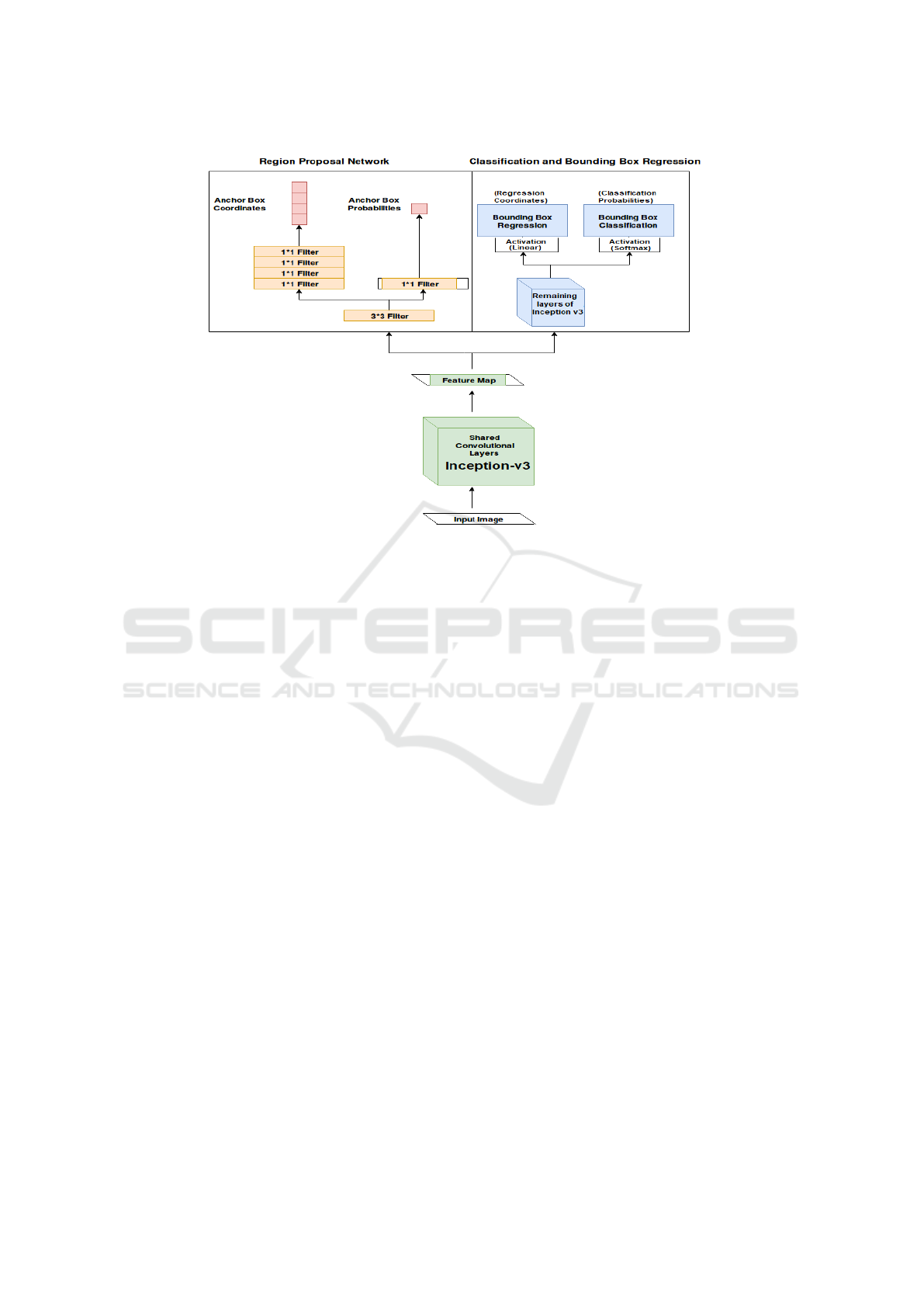

3.1.2 ROI Segmentation: Modified R-CNN with Inception-v3

Keeping in mind the time complexity of existing ROI networks such as YOLO, R-CNN, etc., we have selected a network that offers the best trade-off between the time consumed, the computation performed and the accuracy required in the field of biometrics. We therefore present a network inspired by the Faster R-CNN architecture that gives much better accuracy as well as more transferable features than current state-of-the-art studies. As shown in Fig. 2, the proposed modified R-CNN comprises three major modules:

1. Shared Layers of Inception-V3: In this work, a set of convolutional layers is selected from a pre-trained network, namely Inception-V3, to extract the best possible line features. We truncate the pre-trained Inception-v3 network by detaching all the fully connected layers, so that only 2D convolution layers are left for extracting the feature map. At the first level of detection, we take the output feature map of the last convolution layer of Inception, with dimension 17 × 17 × 768. Since the last layers of Inception-v3 provide a mix of knuckle features, we take the feature map only from the last 2D convolution layer. We then reduce the number of channels from 768 to 128 (using 1 × 1 convolutions) to lower the memory requirement. Up to this level, we have concentrated only on global knuckle features. For the localization of different traits, however, context information also plays a crucial part, so we include three context layers with 7 × 7, 5 × 5 and 3 × 3 filters. Because small sequential filters need fewer parameters than large filters, we use small filters. The context information from the three types of filters is then merged and given as input to the classification and regression heads, which produce the classification score and the regression output respectively. To summarize this stage: we first take the feature map of the last layer of Inception, then apply max-pooling over it to obtain more global features, and finally pass the resulting feature map to the context module.

2. Region Proposal Network (RPN): The RPN takes as input a 3 × 3 window of the feature map obtained from the shared layers. It then considers several anchor boxes of different scales and aspect ratios, so as to select the best-fitting anchor box for every ground truth bounding box. The anchor boxes are chosen so that detection is invariant to scale and shape. By default, three aspect ratios and three scales are considered, yielding nine anchor boxes at each (3 × 3) patch position. The network then refines the coordinates of these anchor boxes by regressing them w.r.t. the ground truth bounding box. The similarity between the anchor and the bounding boxes is measured using Intersection over Union (IOU). For each bounding box, at least one anchor has to be chosen. If more than one anchor box is chosen, they are pruned to one per bounding box using non-maximum suppression (NMS). The RPN outputs 4K and K values, signifying the coordinates of the K anchor boxes (4 values per box) and the probability (one value per box) that a box exists, respectively.

3. ROI Pooling and Classification (Conv2D-512 Filters, ReLU) and Regression (Conv2D-2048 Filters, ReLU) Heads: An arbitrarily sized region (as previously defined by the RPN) is given as input to ROI pooling to reduce the dimension of the feature maps. ROI pooling maps the RPN region onto a fixed-size (14 × 14) grid and applies max pooling over each cell of this grid. It is easy to back-propagate through this layer, as it is just max pooling applied to different regions of a feature map. Finally, the network splits into two heads: the multiple regions obtained after ROI pooling are fed to a network consisting of a few convolutional layers and a few fully connected layers, which predicts the class scores and the bounding box coordinates.
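
The anchor, IOU and NMS steps described for the RPN can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names, the concrete scales and aspect ratios, and the NMS threshold of 0.5 are assumptions, since the text only states that three scales and three aspect ratios are used.

```python
import numpy as np

def make_anchors(center_x, center_y, scales=(64, 128, 256), ratios=(0.5, 1.0, 2.0)):
    """Return 9 anchor boxes (x1, y1, x2, y2) centred on one position.

    The scale and ratio values are illustrative assumptions.
    """
    boxes = []
    for s in scales:
        for r in ratios:
            # Keep the area at s*s while varying the aspect ratio r = height / width.
            w = s / np.sqrt(r)
            h = s * np.sqrt(r)
            boxes.append([center_x - w / 2, center_y - h / 2,
                          center_x + w / 2, center_y + h / 2])
    return np.array(boxes)

def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    x1 = max(box_a[0], box_b[0])
    y1 = max(box_a[1], box_b[1])
    x2 = min(box_a[2], box_b[2])
    y2 = min(box_a[3], box_b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of the boxes that are kept."""
    order = np.argsort(scores)[::-1]  # highest score first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = np.array([iou(boxes[best], boxes[j]) for j in rest])
        # Drop every remaining box that overlaps the kept box too strongly.
        order = rest[overlaps <= iou_threshold]
    return keep
```

With K = 9 anchors per position, the 4K and K outputs mentioned above correspond to 36 coordinate values and 9 box-existence probabilities per position.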

3.2 Testing and Training Strategy

Training and testing were performed in two phases to ascertain the usefulness of the proposed HFDSegNet for ROI segmentation.

3.2.1 Training

Figure 2: ROI segmentation Architecture: Modified R-CNN.

1. Ground Truth Generation: The ground truth for the two types of finger knuckle images has been generated using (Kumar and Ravikanth, 2009; Nigam et al., 2016) respectively. This is needed because the RPN is trained as a regressor, so the probabilities and the coordinates of the anchor boxes have to be generated to provide a ground truth for calculating the loss. The RPN outputs 4K and K values corresponding to K anchor boxes for each n × n input. To generate the ground truth for the RPN's probability prediction, we use IOU as the similarity measure between the anchor boxes and the ground truth bounding boxes. The anchor boxes with the maximum IOU with respect to the ground truth are given high probabilities and termed “positive”. It is ensured that each bounding box has at least one positive anchor box corresponding to it.

2. Training RPN Network: Initially, we train the region proposal network along with the shared layers using the ground truths computed above for the RPN. We train the modified R-CNN from scratch rather than using pre-trained weights, in order to make the trained model as problem-specific as possible. Note that the RPN and the shared layers have to be trained as an end-to-end network to achieve good performance.

3. Training Classification and Regression Heads: In the next step, we train the classification and regression heads using the obtained region proposals. This is also carried out in an end-to-end fashion, through the ROI pooling layer and the shared convolutional layers.

4. Fine Tuning RPN, Classification and Regression Heads: Once the shared layers have been trained for the RPN and both heads (as in Steps (2) and (3)), the best possible discriminative features have been learned at the shared layers, attaining maximum accuracy. The problem is that the RPN was trained end-to-end in Step (2) along with the shared layers, and these shared layers are updated again in Step (3). Hence, we fine-tune the RPN layers while keeping the shared layers frozen, in order to learn the anchor box predictions and their probabilities. Similarly, the classification and regression heads are fine-tuned to take the changed feature map as input, keeping the weights of the shared layers frozen, to get satisfactory results.

5. Losses: To train the proposed HFDSegNet, four types of training loss are considered: (i) RPN regression loss, (ii) RPN classification loss, (iii) final regression loss and (iv) final classification loss. In each epoch, the RPN is trained first and then the final regression and classification heads are trained. The loss functions used for trait classification and RPN classification are “categorical cross-entropy” and “binary cross-entropy” respectively. In addition, the mean squared error (MSE) loss function is used for the regression of both the region proposal network and the final bounding boxes.
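
The ground truth generation of Step 1 (marking anchors as “positive” by IOU and guaranteeing at least one positive anchor per bounding box) can be sketched as below. This is an illustrative sketch under stated assumptions, not the authors' code: the function names and the `pos_threshold` value of 0.7 are hypothetical, and all boxes are assumed to have positive area.

```python
import numpy as np

def iou_matrix(anchors, gt_boxes):
    """Pairwise IOU between anchors (A, 4) and ground truth boxes (G, 4).

    Boxes are (x1, y1, x2, y2) with positive area.
    """
    a = anchors[:, None, :]   # shape (A, 1, 4)
    g = gt_boxes[None, :, :]  # shape (1, G, 4)
    inter_w = np.clip(np.minimum(a[..., 2], g[..., 2]) - np.maximum(a[..., 0], g[..., 0]), 0, None)
    inter_h = np.clip(np.minimum(a[..., 3], g[..., 3]) - np.maximum(a[..., 1], g[..., 1]), 0, None)
    inter = inter_w * inter_h
    area_a = (a[..., 2] - a[..., 0]) * (a[..., 3] - a[..., 1])
    area_g = (g[..., 2] - g[..., 0]) * (g[..., 3] - g[..., 1])
    return inter / (area_a + area_g - inter)

def label_anchors(anchors, gt_boxes, pos_threshold=0.7):
    """Return (labels, matched_gt): labels[i] is 1 for a positive anchor, else 0.

    pos_threshold is an assumed value; the text only requires that the
    best-overlapping anchor of every ground truth box is positive.
    """
    ious = iou_matrix(anchors, gt_boxes)
    labels = np.zeros(len(anchors), dtype=np.int64)
    labels[ious.max(axis=1) >= pos_threshold] = 1
    # Guarantee at least one positive anchor for every ground truth box.
    labels[ious.argmax(axis=0)] = 1
    matched_gt = ious.argmax(axis=1)  # regression target index for each anchor
    return labels, matched_gt
```

The labels would serve as targets for the binary cross-entropy RPN classification loss, and the matched boxes as targets for the MSE regression loss.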

Size Invariant Network: Our network can take input of any size (size invariant), mainly because of the way the RPN and the classification and regression heads are implemented. The region proposal network is implemented with convolutional layers and its ground truth is generated relative to the image size, making the RPN size invariant. For the classification and regression heads, the ROI pooling layer serves this purpose: it takes in a region of interest (ROI) of arbitrary size and pools it into a fixed-size output, as discussed above. This fixed-size output is fed to a network consisting of convolutional and fully connected layers, making the classification and regression heads size invariant too. All network hyper-parameters have been selected empirically by maximizing the system performance over a validation set.
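
How ROI pooling turns an arbitrarily sized region into a fixed-size output can be illustrated with a small NumPy sketch. It is a simplified illustration under assumptions (integer ROI coordinates in feature-map cells, a hypothetical function name `roi_max_pool`), not the layer used in the paper.

```python
import numpy as np

def roi_max_pool(feature_map, roi, output_size=(14, 14)):
    """Max-pool one region of a feature map into a fixed-size grid.

    feature_map: array of shape (H, W, C).
    roi: (x1, y1, x2, y2) in feature-map cells, with x2 and y2 exclusive.
    """
    x1, y1, x2, y2 = roi
    region = feature_map[y1:y2, x1:x2, :]
    out_h, out_w = output_size
    # Integer bin edges that split the region into out_h x out_w cells.
    ys = (np.arange(out_h + 1) * region.shape[0]) // out_h
    xs = (np.arange(out_w + 1) * region.shape[1]) // out_w
    pooled = np.zeros((out_h, out_w, feature_map.shape[2]), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            # Each bin covers at least one cell, so small regions are upsampled by repetition.
            y_lo, y_hi = ys[i], max(ys[i + 1], ys[i] + 1)
            x_lo, x_hi = xs[j], max(xs[j + 1], xs[j] + 1)
            pooled[i, j] = region[y_lo:y_hi, x_lo:x_hi].max(axis=(0, 1))
    return pooled
```

Whatever the size of the input region, the output shape depends only on `output_size` and the channel count, which is what lets the heads that follow accept inputs of any size.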

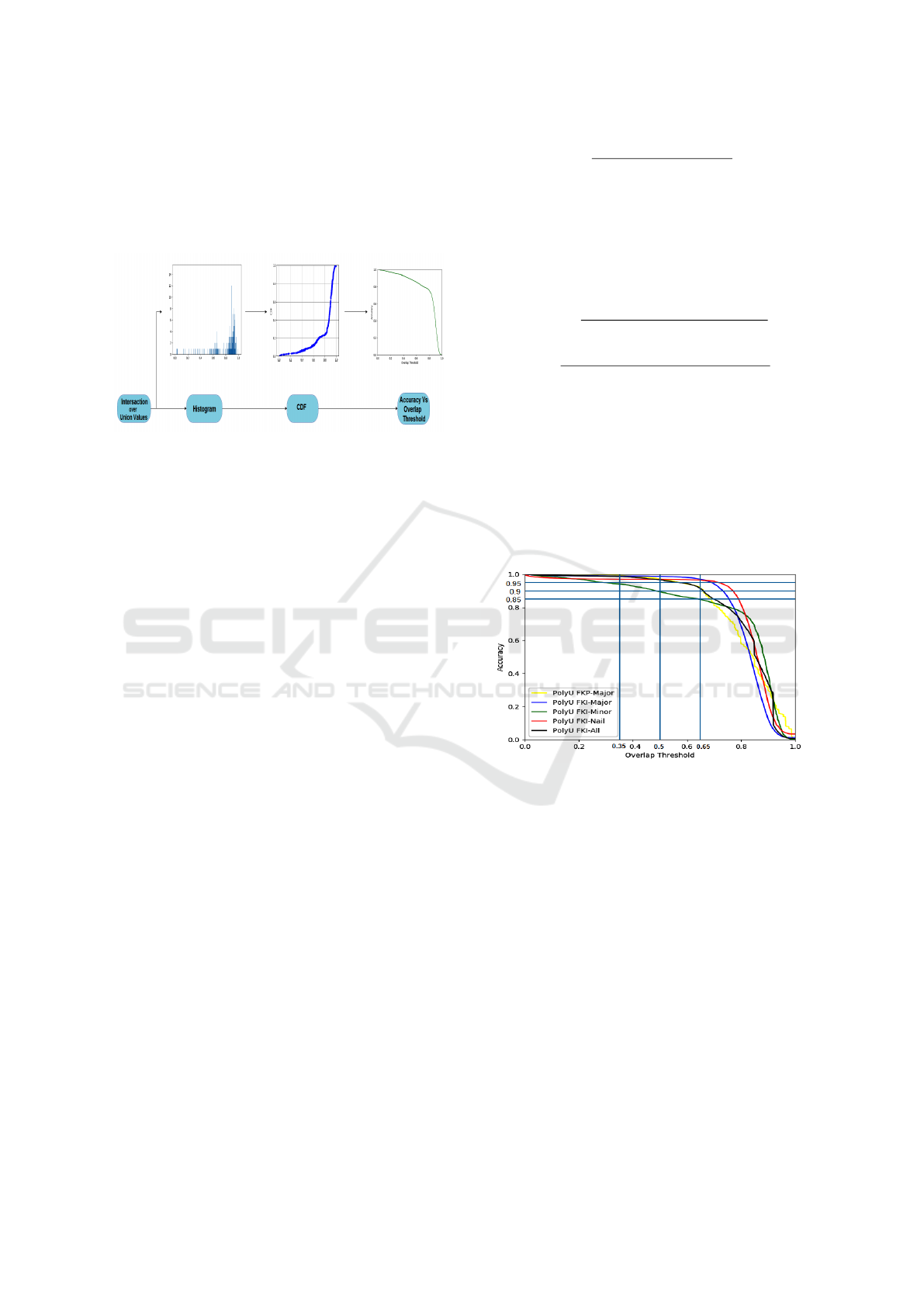

Figure 3: Steps involved for generating Accuracy Vs IOU Graph

3.2.2 Testing

For the Class-2 database, we have used only 500 images for training, while around 2015 images have been used for testing in order to generate the response of the proposed HFDSegNet. Likewise, an equal number of 500 images of the Class-1 database has been selected for training. The trained network has been tested by evaluating IOU, from which we obtain the accuracy of our proposed network. IOU is the most widely used evaluation parameter for checking the performance of any object localization algorithm or network. Iterative thresholding has been applied to each trait individually, as well as over all the traits together, to analyze both individual-trait and overall performance.

(a) Accuracy Vs IOU Graph: To visualize system performance, we plot a graph showing the accuracy at each threshold, for each trait as well as overall, following the steps shown in Fig. 3. The IOU ranges from 0 to 1, where 0 indicates that the boxes do not match at all and 1 indicates that the boxes match perfectly. When the threshold is high, the number of images (in %) having an IOU above the threshold is small, whereas it is 100% at threshold 0. Such a graph can be plotted as follows. Compute the IOU of the predicted and ground truth boxes; in images containing multiple boxes, the predicted boxes sharing the same ground truth are matched by also considering their respective distances. Generate a histogram over the IOU values with a step of 0.00001, so as to get a smooth curve. Normalize it to obtain a probability distribution function (PDF), and compute its cumulative distribution function (CDF). The accuracy at each IOU threshold $i_t$ is defined as

$$\text{Accuracy} = \frac{\#\ \text{test images with IOU} \geq i_t}{\#\ \text{test images}}$$

and can be computed using Eq. (1), as shown in Fig. 4.

$$\text{Accuracy} = 1 - \mathit{cdf} + \text{Value of histogram} \tag{1}$$
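
The histogram-based computation of Eq. (1) can be sketched as follows. This is a minimal sketch rather than the authors' script: the function name is hypothetical, and the default step of 0.01 is an assumption chosen for readability (the text uses 0.00001).

```python
import numpy as np

def accuracy_vs_iou(ious, step=0.01):
    """Return (thresholds, accuracy) where accuracy[k] is the fraction of
    test images whose IOU is >= thresholds[k], computed via Eq. (1)."""
    ious = np.asarray(ious, dtype=float)
    n_bins = int(round(1.0 / step))
    thresholds = np.arange(n_bins + 1) * step
    # Bin k collects IOU values in [k * step, (k + 1) * step); IOU = 1 goes in the last bin.
    # The small epsilon guards against floating-point error in the division.
    bin_index = np.minimum(np.floor(ious / step + 1e-9).astype(int), n_bins)
    hist = np.bincount(bin_index, minlength=n_bins + 1) / len(ious)  # normalized histogram (PDF)
    cdf = np.cumsum(hist)
    # Eq. (1): the CDF already includes the bin at the threshold itself, so add it back.
    accuracy = 1.0 - cdf + hist
    return thresholds, accuracy
```

For example, with IOU values [0.2, 0.5, 0.9, 1.0], the accuracy at threshold 0.5 is 0.75, because three of the four values are at least 0.5.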

(b) Precision and Recall: In addition to the accuracy values, precision and recall have also been calculated to validate the proposed network, as defined in Eqs. (2) and (3).

$$\text{Precision} = \frac{\#\ \text{of correct boxes predicted}}{\text{Total No. of boxes predicted}} \tag{2}$$

$$\text{Recall} = \frac{\#\ \text{of correct boxes predicted}}{\text{Total No. of ground truth boxes}} \tag{3}$$

Precision and recall are calculated to validate our approach, because the accuracy computation considers only the true predicted boxes and not all the predicted boxes. Similarly, the intersection over union values are calculated with respect to the ground truth bounding boxes, but some ground truth boxes may not be considered while calculating accuracy; the recall values take this into account.
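
Eqs. (2) and (3) can be evaluated at a chosen IOU threshold as in the sketch below. The function name and input convention are assumptions made for illustration: each predicted box is represented by its IOU with the ground truth box it was matched to (0 if it matched none), and each ground truth box is assumed to be matched by at most one prediction.

```python
def precision_recall(pred_ious, n_ground_truth, iou_threshold=0.5):
    """Precision (Eq. 2) and recall (Eq. 3) at one IOU threshold.

    pred_ious: IOU of every predicted box with its matched ground truth box.
    n_ground_truth: total number of ground truth boxes in the test set.
    """
    n_pred = len(pred_ious)
    # A predicted box counts as correct when its overlap reaches the threshold.
    n_correct = sum(1 for value in pred_ious if value >= iou_threshold)
    precision = n_correct / n_pred if n_pred else 0.0
    recall = n_correct / n_ground_truth if n_ground_truth else 0.0
    return precision, recall
```

For instance, four predictions with IOUs 0.9, 0.6, 0.3 and 0.0 against five ground truth boxes give a precision of 0.5 and a recall of 0.4 at threshold 0.5.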

Figure 4: The Accuracy Vs. Overlap Threshold Graph.

4 EXPERIMENTAL RESULTS AND DISCUSSION

This section details the evaluation parameters, data-sets and testing protocol with which we evaluate the performance of the proposed HFDSegNet segmentation network. Two publicly available finger knuckle databases have been used: the PolyU FKP data-set (2009) and the PolyU Contactless FKI data-set (2006-13).

[a] Test-1: In the first test, training for each

database is done using 500 images taken randomly.

The combined Accuracy Vs. Overlap Threshold

graph is shown in Fig. 4, where different colours have

been used to plot the curves for different ROI’s. One

can observe that the network produces high accuracy

HFDSegNet: Holistic and Generalized Finger Dorsal ROI Segmentation Network

791

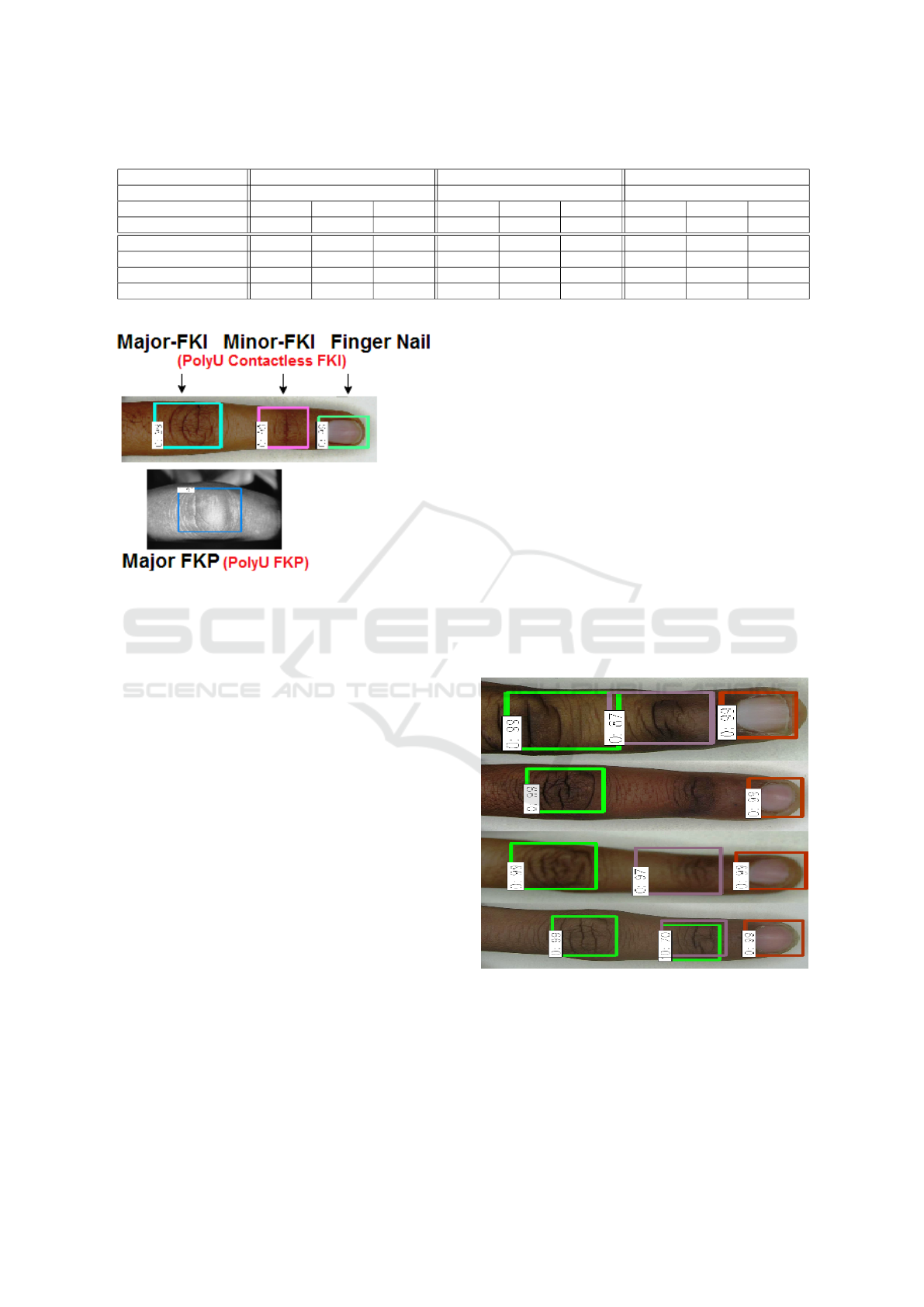

Table 1: The Accuracy, Precision and Recall Values at different Overlap (IOU) thresholds.

Biometric Traits Accuracy Precision Recall

Overlap IOU Threshold Overlap IOU Threshold Overlap IOU Threshold

0.35 0.5 0.65 0.35 0.5 0.65 0.35 0.5 0.65

PolyU FKP 99.56 98.52 93.15 99.04 98.62 96.97 90.27 8.38 88.12

Major FKI 99.18 98.94 97.88 98.05 97.96 97.34 97.75 97.66 96.68

Minor FKI 95.42 89.36 86.09 95.27 90.22 86.21 97.19 89.30 85.64

Nail 97.82 98.10 97.96 97.62 97.33 97.12 98.25 97.58 97.44

All 99.15 97.36 92.46 98.89 97.76 90.87 98.55 95.09 91.05

Figure 5: ROI obtained for various finger knuckle images

using HFDSegNet.

even up to 0.5 overlap IOU threshold for almost all

the images. A slight accuracy drop has been observed

when overlap IOU threshold becomes more than 0.3,

especially for minor knuckle (Green curve). But, one

can see that it drops gradually. It may be the case

as the curvature features are largely missing in that

region. While the performance of finger nail (Red

curve) is surprisingly observed good, as it sustains the

uniformity up to 0.65 IOU. Similarly the accuracy vs

overlap threshold graph for major knuckle shows that

it outperforms its counterparts at every IOU thresh-

old level. The prime reason behind this is that fin-

ger knuckle image contains features that are easily

distinguishable from the others under their respec-

tive region of interests. The PolyU FKI (Blue curve)

maintains constant accuracy (97.88%) up to 0.65 IOU

while it drops somewhat earlier for PolyU FKP im-

ages. Since, line features are evenly distributed in

case for PolYU FKP samples and most of the pre-

vious approaches tried to obtain the centre line or the

center point (Zhang et al., 2010; Nigam et al., 2016).

Network may not be able to capture such a symmetry

in the finger image. However, this type of accuracy is

considered to be very good in object recognition lit-

erature. From Fig 4 one can infer that the proposed

network has been performing very well across all the

samples. Some network predictions are depicted in

Fig 5. We obtain only major finger knuckle ROI using

PolyU FKP data-set while three ROI’s i.e., major, mi-

nor finger knuckles including finger nail are obtained

from PolyU FKI database.

[b] Test-2: Table 1 shows the values obtained for accuracy, precision and recall in all the experiments performed. To the best of our knowledge, this is the first deep learning segmentation network proposed for FKI biometrics; hence, we have not compared our results with any other method. One could compare it with existing techniques such as (Kumar and Ravikanth, 2009; Zhang et al., 2010; Nigam et al., 2016), but such a comparison may not be justified for two reasons: (i) they were tested over a single trait only (we perform multi-class classification) and (ii) none of them used deep learning. Still, we have observed that the proposed network performs better than previous individual-trait techniques.

Figure 6: Failed ROI Images in case of Minor Finger Knuckle.

[c] Pros and Cons of the Proposed Network: We have also trained HFDSegNet on the nail bed, which contains useful structural information. A few images on which the proposed HFDSegNet failed to segment the ROI are shown in Fig. 6. The algorithm mainly fails for the minor FKI, due to blurriness, the presence of artifacts, minor cuts and scrapes, etc., while the results for the major knuckle and finger nail are much more consistent. An important point is that our network has been trained on just 500 random images and performs much better than other existing state-of-the-art ROI extraction algorithms.

5 CONCLUSION AND FUTURE SCOPE

In this paper, we have proposed an end-to-end network for extracting ROIs from any finger knuckle image. To the best of our knowledge, this is the first holistic architecture proposed so far that segments three ROIs, namely the major finger knuckle, minor finger knuckle and finger nail, in the image. We have explored the possibility of extracting complete information from the finger dorsal region so that it can improve the recognition performance of state-of-the-art FKI recognition systems. The method provides results free of rotation and translation errors and is capable of localizing regions in challenging finger knuckle images. The proposed holistic ROI segmentation network has been trained with around 500 images and produces very satisfactory and consistent results. Experimental results on two FKI data-sets show that our method outperforms state-of-the-art finger knuckle segmentation approaches in terms of segmentation accuracy. This work on holistic finger knuckle segmentation opens up vast opportunities in the field of multi-biometric authentication systems. In future work, we will try to compute the recognition performance over these extracted ROIs.

REFERENCES

(2006-13). PolyU contact-less finger knuckle image database. http://www4.comp.polyu.edu.hk/~csajaykr/fn1.htm.

(2009). Finger-knuckle-print PolyU. https://www4.comp.polyu.edu.hk/~biometrics/FKP.htm.

Bera, A., Bhattacharjee, D., and Nasipuri, M. (2014). Hand biometrics in digital forensics. In Computational Intelligence in Digital Forensics: Forensic Investigation and Applications, pages 145–163. Springer.

Chlaoua, R., Meraoumia, A., Aiadi, K. E., and Korichi, M. (2018). Deep learning for finger-knuckle-print identification system based on PCANet and SVM classifier. Evolving Systems, pages 1–12.

Girshick, R., Donahue, J., Darrell, T., and Malik, J. (2014). Rich feature hierarchies for accurate object detection and semantic segmentation. pages 580–587.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep residual learning for image recognition. pages 770–778.

Jain, A. K., Ross, A., and Prabhakar, S. (2004). An introduction to biometric recognition. IEEE Transactions on Circuits and Systems for Video Technology, 14(1):4–20.

Jaswal, G., Kaul, A., and Nath, R. (2016). Knuckle print biometrics and fusion schemes–overview, challenges, and solutions. ACM Computing Surveys (CSUR), 49(2):34.

Jaswal, G., Nigam, A., and Nath, R. (2017a). Deepknuckle: revealing the human identity. Multimedia Tools and Applications, pages 1–30.

Jaswal, G., Nigam, A., and Nath, R. (2017b). Finger knuckle image based personal authentication using deepmatching. pages 1–8.

Kumar, A. (2012). Can we use minor finger knuckle images to identify humans? In Biometrics: Theory, Applications and Systems (BTAS), 2012 IEEE Fifth International Conference on, pages 55–60. IEEE.

Kumar, A. (2014). Importance of being unique from finger dorsal patterns: Exploring minor finger knuckle patterns in verifying human identities. IEEE Transactions on Information Forensics and Security, 9(8):1288–1298.

Kumar, A. and Kwong, C. (2013). Towards contactless, low-cost and accurate 3D fingerprint identification. pages 3438–3443.

Kumar, A. and Prathyusha, K. V. (2009). Personal authentication using hand vein triangulation and knuckle shape. IEEE Transactions on Image Processing, 18(9):2127–2136.

Kumar, A. and Ravikanth, C. (2009). Personal authentication using finger knuckle surface. IEEE Transactions on Information Forensics and Security, 4(1):98–110.

Kumar, A. and Xu, Z. (2016). Personal identification using minor knuckle patterns from palm dorsal surface. IEEE Transactions on Information Forensics and Security, 11(10):2338–2348.

Nigam, A., Tiwari, K., and Gupta, P. (2016). Multiple texture information fusion for finger-knuckle-print authentication system. Neurocomputing, 188:190–205.

Zhai, Y., Cao, H., Cao, L., Ma, H., Gan, J., Zeng, J., Piuri, V., Scotti, F., Deng, W., Zhi, Y., et al. (2018). A novel finger-knuckle-print recognition based on batch-normalized CNN. In Chinese Conference on Biometric Recognition, pages 11–21. Springer.

Zhang, L., Zhang, L., and Zhang, D. (2009). Finger-knuckle-print: a new biometric identifier. In 16th IEEE International Conference on Image Processing, pages 1981–1984. IEEE.

Zhang, L., Zhang, L., Zhang, D., and Zhu, H. (2010). Online finger-knuckle-print verification for personal authentication. Pattern Recognition, 43(7):2560–2571.
