Anthropomorphic Virtual Assistant to Support Self-care of Type 2 Diabetes in Older People: A Perspective on the Role of Artificial Intelligence

Gergely Magyar¹, João Balsa², Ana Paula Cláudio², Maria Beatriz Carmo², Pedro Neves², Pedro Alves², Isa Brito Félix³, Nuno Pimenta⁴,⁵ and Mara Pereira Guerreiro³,⁶

¹ Department of Cybernetics and Artificial Intelligence, Technical University of Kosice, Letna 9, Kosice, Slovakia

² Biosystems & Integrative Sciences Institute (BioISI), Faculdade de Ciências da Universidade de Lisboa, Lisboa, Portugal

³ Unidade de Investigação e Desenvolvimento em Enfermagem (ui&de), Escola Superior de Enfermagem de Lisboa, Lisboa, Portugal

⁴ Sport Sciences School of Rio Maior – Polytechnic Institute of Santarém, Rio Maior, Portugal

⁵ Exercise and Health Laboratory, Interdisciplinary Centre for the Study of Human Performance, ULisboa, Cruz-Quebrada, Portugal

⁶ Centro de Investigação Interdisciplinar Egas Moniz (CiiEM), Instituto Universitário Egas Moniz, Monte de Caparica, Portugal

{fc51688, fc51686}@alunos.fc.ul.pt, npimenta@esdrm.ipsantarem.pt

Keywords: Virtual Humans, Relational Agents, Artificial Intelligence, Health Care, Behaviour Change, Type 2 Diabetes, Older People.

Abstract: The global prevalence of diabetes is escalating. Attributable deaths and avoidable health costs related to diabetes represent a substantial burden and threaten the sustainability of contemporary healthcare systems. Information technologies are an encouraging avenue to tackle the challenge of diabetes management. Anthropomorphic virtual assistants designed as relational agents have demonstrated acceptability to older people and may promote long-term engagement. The VASelfCare project aims to develop and test a virtual assistant software prototype to facilitate the self-care of older adults with type 2 diabetes mellitus. The present position paper describes key aspects of the VASelfCare prototype and discusses the potential use of artificial intelligence. Machine learning techniques represent promising approaches to provide a more personalised user experience with the prototype, by means of behaviour adaptation of the virtual assistant to users’ preferences or emotions, or to develop chatbots. The effect of these sophisticated approaches on relevant endpoints, such as users’ engagement and motivation, needs to be established in comparison to less responsive options.

1 INTRODUCTION

The global prevalence of diabetes is escalating (Karuranga et al., 2017). Across the globe 90% of diabetic adults suffer from type 2 diabetes (T2D); the disease or its complications are the 9th major cause of death (Zheng, Ley and Hu, 2018). Recent Portuguese

data estimated a total diabetes prevalence of about

13%. In Portugal more than one out of four persons

aged between 60 and 79 years old have diabetes

(Sociedade Portuguesa de Diabetologia, 2016).

Hyperglycaemia control in T2D involves lifestyle changes, including an adequate diet and physical activity and, where needed, regular medication. Difficulties in adhering to diabetes management, which requires sustained behavioural change, are associated with poor glycaemic control in more than half of the patients (García-Pérez et al., 2013). Long-term hyperglycaemia results in life-threatening complications,

including cardiovascular disease, neuropathy,

nephropathy and retinopathy. These complications

represent an economic burden to health care systems,

in addition to the clinical and humanistic burden

posed to diabetes sufferers and their families

(Karuranga et al., 2017). Improving adherence to

T2D management is therefore critical, as it will delay

or avoid diabetes complications whilst relieving the

Magyar, G., Balsa, J., Cláudio, A., Carmo, M., Neves, P., Alves, P., Félix, I., Pimenta, N. and Guerreiro, M.

Anthropomorphic Virtual Assistant to Support Self-care of Type 2 Diabetes in Older People: A Perspective on the Role of Artificial Intelligence.

DOI: 10.5220/0007572403230331

In Proceedings of the 14th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2019) - Volume 1: GRAPP, pages 323-331

ISBN: 978-989-758-354-4; ISSN: 2184-4321

Copyright © 2023 by SCITEPRESS – Science and Technology Publications, Lda. Under CC license (CC BY-NC-ND 4.0)

financial pressure on healthcare systems over-

whelmed by the demands of an ageing population.

Information Technologies (IT) are a promising avenue to help T2D patients self-manage their condition sustainably and without time or place restrictions. Mobile applications are regarded as

cheaper, more convenient and more interactive than

other IT-based interventions, such as short message

services and computer-based interventions (Hou et

al., 2016). They have demonstrated a positive effect

in improving glycaemic control in T2D patients. For

example, Cui and co-workers (2016) conducted a sys-

tematic review of 13 randomised controlled trials on

the effect of mobile applications on the management

of T2D. Six studies provided data for meta-analysis,

with a total of 1022 patients (average age from 45.2

to 66.6 years). They found that mobile applications

were associated with a significant reduction in HbA1c of 0.40% (Cui et al., 2016). One issue meriting debate is whether older people with T2D will have a

benefit comparable to their younger counterparts, as

trials included mostly younger samples (Cui et al.,

2016; Hou et al., 2016). Mobile applications for older

adults should be designed considering the needs of

this population group.

Relational agents, which are virtual characters ca-

pable of establishing long-term relationships with us-

ers, emerge as an encouraging approach to engage

older people.

The VASelfCare project aims to develop and test

a prototype of a relational agent application to facili-

tate self-care of older people with T2D. In this paper

we discuss ideas on the role of artificial intelligence

to enhance functionalities of the VASelfCare proto-

type; this falls under one of the conference topic areas

(interactive environments). Our position paper builds

on an international collaboration between the

VASelfCare team and a researcher experienced in be-

haviour adaptation of social robots in cognitive stim-

ulation therapy for older people. The ideas put for-

ward are discussed in light of experimental and bibli-

ographic evidence.

The core of this paper comprises three key sections. Section 2 provides a broad overview of published work on relational agents, including a brief description of the use of artificial intelligence. Section 3 describes our relational agent prototype (VASelfCare). Together, these sections set the scene for section 4, which presents the proposed ideas about the role of artificial intelligence in enhancing the functionalities of our prototype.

2 RELATED WORK

Studies resorting to relational agents have been con-

ducted in several areas. The importance of incorpo-

rating an artificial intelligence component in these

agents has been recognized for some years now

(Cassell, 2001).

In the area related to our work, healthcare, sub-

stantial research has been conducted by Bickmore et

al., namely in approaches tackling mental condi-

tions. For instance, Bickmore et al. (2010) evaluated

how patients with a high-level of depressive symp-

toms responded to a computer animated conversa-

tional agent in a hospital environment. Another pub-

lication describes the use of relational agents in

health counselling and behaviour change interven-

tions in clinical psychiatry (Bickmore and Gruber,

no date). Ring et al. reported a pilot study based on

an affectively-aware virtual therapist for depression

counselling (Ring, Bickmore and Pedrelli, 2016).

Still in mental health, Provoost et al. (2017) re-

viewed the use of embodied conversational agents in

clinical psychology, mostly focusing on autism and

on social skills training. The benefit of relational

agents in patients with lower health literacy was

demonstrated (Bickmore et al., 2010). It has also been shown that interventions based on relational agents are well accepted by older people (Bickmore et al., 2005; Bickmore et al., 2013).

Devault and co-workers developed a conversa-

tional agent deploying artificial intelligence tech-

niques for natural language understanding and dia-

logue management to establish an engaging face-to-

face interaction. The goal was creating interactional

situations favourable to the automatic assessment of

distress indicators (Devault et al., 2014).

The role of emotions and the importance of estab-

lishing rapport between a virtual agent and the human

user, coupled to the relevance of nonverbal behaviour

in affective interaction, have been studied, for instance, by Gratch et al. (2007), Bickmore et al. (2005), Bickmore, Gruber and Picard (2005), and Paiva et al. (2017).

One of the more explored subareas of artificial in-

telligence in other fields of research on virtual assis-

tants is natural language processing, which intends to

achieve a more believable interaction. For example, Hoque et al. (2013) developed a virtual agent for social skills training that reads facial expressions and uses natural language processing techniques to understand speech and prosody, responding with verbal and nonverbal behaviours in real time. Rubin, Chen and Thorimbert (2010) surveyed the use of language

GRAPP 2019 - 14th International Conference on Computer Graphics Theory and Applications


technologies in the development of intelligent assis-

tants in libraries.

The importance of virtual assistants in healthcare, as well as the relevance of incorporating machine learning techniques, is also reported by Shaked (2017), who identifies a set of key features for the design of this kind of application when interacting with older people.

The use of relational agents in people with T2D

has been little explored. One exception is an Austral-

ian study, which described the development of an in-

telligent diabetes lifestyle coach for self-management

of diabetes patients (Monkaresi et al., 2013). How-

ever, this study lacks data on usability or the effect on

endpoints of interest. More recently, an on-going

study in the USA has used a relational agent as a

health coach for adolescents with type 1 diabetes and

their parents (Thompson et al., 2016).

3 VASELFCARE PROTOTYPE

Our application targets medication adherence and

lifestyle changes (physical activity and diet) in a step-

wise fashion by means of an anthropomorphic virtual

assistant, named Vitória. As previously mentioned,

the virtual assistant was designed as a relational agent.

This is expected to increase engagement and long-

term use (Bickmore, 2010), and to facilitate the inter-

action with people with lower literacy. Development

of the application was guided by usability principles

for older people (Arnhold, Quade and Kirch, 2014).

3.1 Interaction with the Application

When opening the application Vitória is depicted in a

3D living room scenario. When clicking on the button

“Enter”, users with T2D are directed to a menu where

several choices are available, including talking to Vi-

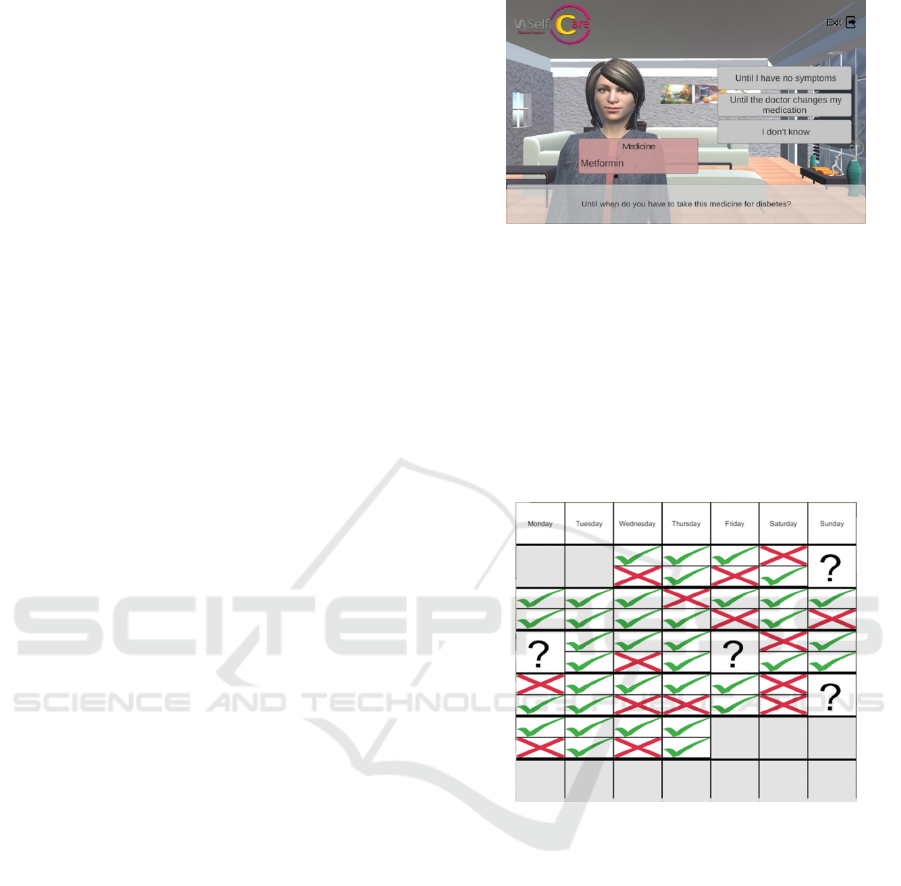

tória (Dialogue view – Figure 1).

3.1.1 The Dialogue View

Vitória communicates with users both verbally, by

means of a synthetic voice, and non-verbally

through facial and body animations. The latter de-

pend on users’ responses. The 3D scenario in which

this virtual assistant is depicted changes according

to the dialogue context (e.g. the kitchen scenario is

presented when talking about the diet), the time of

day (i.e. the view from the window in the back-

ground) and the season (e.g. the window view with

sunshine or rain).

Figure 1: Dialogue view in the evaluation phase (version

1.00).

Another design option tailored for older people in-

cludes subtitles in Vitória’s speech, which may over-

come hearing difficulties.

Users communicate with Vitória using buttons or

recording values, such as the daily number of steps,

and medication taken. The latter data are plotted into

graphs or other visual representations (Figure 2), used

by Vitória to give feedback during the interaction.

Figure 2: Medication-taking feedback (scenario: one oral

antidiabetic, two daily doses; question mark means no self-

reported data) (version 1.0.5).

The first daily interactions for medication adher-

ence and lifestyle changes collect data on pertinent

variables, with the purpose of tailoring the interven-

tion to users’ characteristics. For example, behaviour

change on medication adherence considers users’

knowledge about antidiabetic agents, their usual be-

haviour and the perceived self-efficacy in managing

their medication. This phase is designated “evaluation

phase”. In the subsequent “follow-up phase”, the be-

haviour change intervention is informed by theory

and users’ characteristics.

Based on the literature (Bickmore, Schulman and

Sidner, 2011), each daily interaction is structured in

sequential steps. Interactions in the evaluation phase

have six steps: opening, social talk, assess, feedback,


pre-closing and closing. The “opening” step, which consists of greeting the user, is followed by a social

dialogue, including inquiries about users’ general

emotional and physical state (“social talk”). Ques-

tions on a variable of interest, such as knowledge

about antidiabetic medication, are then posed (“as-

sess”), followed by feedback on the answers collected

previously. Finally, contents of the next interaction

are described (“pre-closing”) and a farewell is deliv-

ered (“closing”).

Daily interactions in the follow-up phase are also

structured in repeated sequential steps: opening, so-

cial talk, review tasks, assess, counselling, assign

tasks, pre-closing and closing. The first and last two

steps are similar to those described above. In the “re-

view tasks” step, information is collected about pre-

viously agreed tasks or behaviours. Then, information

is provided about the reported task or behaviour, in-

cluding reward talk or a discussion of behaviour(s)

determinants (“assess”). In the counselling step, users

receive information on strategies to achieve the desirable behaviour (if applicable) and on specific educational topics. Afterwards, users negotiate new behavioural goals and tasks with Vitória (“assign tasks”).
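The two step sequences described above can be written down as plain data. The following is a minimal sketch in Python, not the Unity3D/C# implementation of the prototype; the identifiers `EVALUATION_STEPS`, `FOLLOW_UP_STEPS` and `steps_for` are hypothetical and introduced only for illustration.

```python
# Step names are taken from the text; the identifiers are illustrative.
EVALUATION_STEPS = (
    "opening", "social talk", "assess", "feedback", "pre-closing", "closing",
)
FOLLOW_UP_STEPS = (
    "opening", "social talk", "review tasks", "assess",
    "counselling", "assign tasks", "pre-closing", "closing",
)


def steps_for(phase: str) -> tuple:
    """Return the ordered steps of one daily interaction for a phase."""
    if phase == "evaluation":
        return EVALUATION_STEPS
    if phase == "follow-up":
        return FOLLOW_UP_STEPS
    raise ValueError(f"unknown phase: {phase!r}")
```

Keeping the sequences as data makes it easy to see that both phases share the first two and the last two steps and differ only in the middle.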

A rule-based component has been implemented in

the application, to convey a more flexible dialogue

flow in the follow-up phase.

The Behaviour Change Wheel (BCW), a compre-

hensive and evidence-based theoretical framework of

behaviour change, was chosen to inform the interven-

tion design (Michie, Atkins and West, 2014). The

BCW is underpinned by the COM-B model. This

model posits that engagement in a behaviour (B) at a

given moment depends on capability (C) plus oppor-

tunity (O) and motivation (M). Analysis of a given

behaviour in relation to COM-B allows the identifi-

cation of determinants, which must be addressed to

achieve change. The BCW also entails the selection of intervention functions (broad strategies for inducing the target behaviour) and behaviour change techniques (active replicable elements that promote change).

Suitable and effective behaviour change tech-

niques (BCT) were incorporated in different steps of

the interactions, guiding the dialogue creation

(Michie et al., 2013). For example, “Self-monitoring

of behaviour” was applied in the “review tasks” step; it consists of recording medication taken or step count.

In the “Assess” step, information on medication-tak-

ing is provided by means of a calendar, a technique

entitled “Feedback on behaviour”. In this step users

also identify potential determinants of non-adher-

ence, which allows the selection of ameliorating strat-

egies in the “counselling” step. This BCT is desig-

nated “problem solving”. Educational topics ad-

dressed during “counselling” encompass the “infor-

mation about health consequences” BCT.

Dialogue creation in the evaluation and follow-up phases employs a helpful-cooperative communication style (Niess and Diefenbach, 2016).

3.1.2 The Other Views

In its current version, the application has six views in

addition to the dialogue view (“Main menu”, “Re-

cording”, “My diary”, “My data”, “Information” and

“About the application”). The main menu offers sev-

eral sub-menus; each sub-menu, in turn, corresponds

to a view of the application.

In the “My diary” view, users can create a weekly

plan. The diary automatically proposes meal times

with recipe suggestions. Users can also add, remove

and edit activities.

“My Data” allows users to review information on

topics, such as their prescribed antidiabetic medica-

tion, anthropometric measurements (e.g. a visual rep-

resentation of body mass index) and their reported be-

haviour, by means of the same charts used by Vitória

in daily interactions.

The “Information” view consists of a glossary plus educational content about diabetes, diet, physical activity and antidiabetic medication.

“Recording” allows users to input data such as the number of steps walked per day or blood glucose levels. The application gives automatic feedback on entered data, including messages in case of potentially erroneous values. Another example of automatic feedback is depicted in Figure 3; this feedback guides the user on the treatment of a hypoglycaemic episode when the entered blood glucose level is below 70 mg/dl.

Figure 3: Example of an instant response to the recording

of a low blood sugar level (version 1.0.5).
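The automatic feedback on recorded blood glucose values can be illustrated with a small rule. This is a sketch under stated assumptions: only the 70 mg/dl threshold comes from the text, whereas the plausibility bounds, the return labels and the function name `glucose_feedback` are hypothetical and are not taken from the prototype.

```python
HYPOGLYCAEMIA_THRESHOLD_MG_DL = 70  # threshold stated in the text

# Assumed plausibility bounds, used only to illustrate the
# "potentially erroneous value" message; not taken from the paper.
PLAUSIBLE_MIN_MG_DL = 20
PLAUSIBLE_MAX_MG_DL = 600


def glucose_feedback(value_mg_dl: float) -> str:
    """Classify a self-reported blood glucose value (mg/dl)."""
    if not PLAUSIBLE_MIN_MG_DL <= value_mg_dl <= PLAUSIBLE_MAX_MG_DL:
        return "check_value"  # ask the user to confirm the entry
    if value_mg_dl < HYPOGLYCAEMIA_THRESHOLD_MG_DL:
        return "hypoglycaemia_guidance"  # guide treatment of the episode
    return "recorded"
```

The plausibility check runs first, so that an obvious typing error does not trigger hypoglycaemia guidance.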

Finally, the “About the Application” view in-

cludes information about the application develop-

ment, security and privacy policy.


While the dialogue view is restricted to one inter-

action per day, the aforementioned views can be

freely accessed.

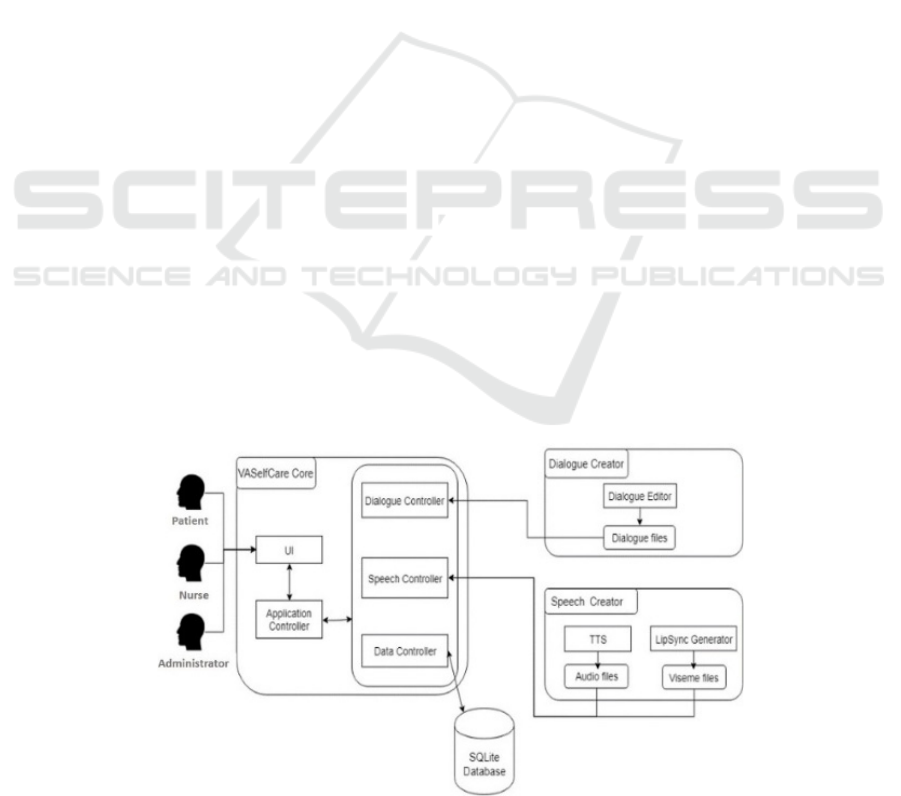

3.2 The Application Architecture

The components of our prototype are depicted in Fig-

ure 4. The VASelfCare Core comprises the scripts re-

sponsible for the user interface and the logic of the

application; it is implemented in Unity3D with C#

scripts. This core component resorts to services pro-

vided by two external elements: the Dialogue Crea-

tor and the Speech Generator.

VASelfCare Core comprises several modules with

distinct responsibilities: the Application Controller,

the Dialogue Controller, the Speech Controller,

and the Data Controller.

The Application Controller is responsible for the

logical sequence of the application and communicates

with the other modules of the VASelfCare Core.

The Dialogue Controller decides the correct order of

the dialogues, choosing the dialogue files that are

used in a specific moment of the interaction.

The Speech Controller is the module that allows Vi-

tória to speak, searching and activating the audio and

viseme files that correspond to the on-going dialogue.

Finally, the Data Controller module has the respon-

sibility of exchanging data with the embedded local

database. In this database the application stores clini-

cal information entered at the time of registration and

all the relevant data concerning the flow of interaction

with older patients.

The application runs on Android tablets without an Internet connection, which is intended to facilitate access at users’ homes, where Internet is not always available. When an Internet connection is detected, the application backs up the database to the project server.

4 THE ROLE OF ARTIFICIAL

INTELLIGENCE IN THE

VASELFCARE APPLICATION

The architecture of the VASelfCare prototype pre-

sented in section 3 offers multiple opportunities for

machine learning to further enhance the capabilities

of the virtual assistant. This section provides a brief

background on reinforcement learning and discusses

the potential use of artificial intelligence methods in

the VASelfCare application.

4.1 Reinforcement Learning

Generally, reinforcement learning is a learning method which determines how to map situations to actions so as to maximize a numerical reward signal. The agent is not told explicitly which actions to take; they have to be discovered through exploration in order to get the most reward (Sutton and Barto, 2018).

The agent can sense its environment and take ac-

tions which can change the state of the environment

to reach a given goal. The formulation of the task in-

cludes the following three aspects: sensation, action

and goal.

Figure 4: VASelfCare architecture.


In addition to the aforementioned concepts of reinforcement learning, there are three other key elements to correctly define a reinforcement learning problem: policy, reward function and value function (Sutton and Barto, 2018).

The policy defines the agent’s way of behaving in a given situation. More precisely, it is a mapping from perceived states of the environment to the actions to be taken in those states.

The reward function defines the goal of the rein-

forcement learning problem. It is responsible for

mapping the perceived state of the environment or

state-action pair to a number (reward) which defines

the desirability of the given state. The agent’s goal is

to maximize this reward during the learning process.

In contrast to the reward function, which indicates what is good or bad for the agent in the immediate sense, the value function defines what is good for the agent in the long run. In general, the value of a state is the total amount of reward the agent can expect to accumulate in the future, starting from that state (Sutton and Barto, 2018).
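In the notation of Sutton and Barto (2018), the difference between immediate reward and long-run value can be stated compactly. The symbols are introduced here only for illustration and are not used elsewhere in this paper: $R_t$ is the reward received at step $t$, $S_t$ the state at step $t$, $\gamma$ a discount factor with $0 \le \gamma \le 1$, $\pi$ the policy, $G_t$ the discounted return and $v_{\pi}(s)$ the value of state $s$ under $\pi$.

```latex
G_t = \sum_{k=0}^{\infty} \gamma^{k} R_{t+k+1},
\qquad
v_{\pi}(s) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s \right]
```

The reward function scores a single step, whereas the value function is the expectation of the accumulated, discounted rewards that follow from a state when the agent acts according to its policy.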

Traditional methods of machine learning, such as reinforcement learning, have been used successfully in many areas. However, they were not primarily designed for learning from real-time social interaction with humans, which poses challenges such as dealing with limited human patience or ambiguous human input. To address these challenges, socially guided machine learning was designed (Thomaz and Breazeal, 2006).

In this approach the reward signal of reinforcement learning is represented by human feedback (e.g. facial emotion, gesture, verbal expression). Such a system is designed to learn efficiently from people with no experience in reinforcement learning. This learning method can be used to further enhance the cognitive capabilities of Vitória, the VASelfCare virtual assistant.

4.2 Applying Reinforcement Learning

to the Prototype

4.2.1 Behaviour Adaptation based on Users’

Evaluation

One of the possibilities is changing Vitória’s facial

and body animations based on users’ preferences. The

task of the learning agent in this case is to find what

kind of pre-defined animations are desired by users

(e.g. fast or slower movements, more expressive ver-

sus more neutral facial animations). To increase the

learning speed animations can be labelled; the ones

which have the same label and are not accepted by the

user can be excluded from the learning right away.

Nonetheless, to ensure variability of the virtual assis-

tant behaviour these can show up with low probability

in future interactions. The reward for the agent could

be provided by users’ ratings of Vitória’s animations.
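One simple way to realise this idea is an epsilon-greedy multi-armed bandit over animation labels, with users’ ratings as the reward. The paper does not prescribe a specific algorithm, so the sketch below is an assumption chosen for its simplicity; the class name, the label names and the rating scale are hypothetical.

```python
import random


class AnimationPreferenceLearner:
    """Epsilon-greedy bandit over animation labels (illustrative only)."""

    def __init__(self, labels, epsilon=0.1, rng=None):
        self.labels = list(labels)
        self.epsilon = epsilon  # chance of showing a randomly chosen label
        self.rng = rng or random.Random()
        self.counts = {label: 0 for label in self.labels}
        self.mean_rating = {label: 0.0 for label in self.labels}

    def choose(self):
        """Pick the label of the next animation to show."""
        untried = [label for label in self.labels if self.counts[label] == 0]
        if untried:
            return untried[0]  # try every label at least once
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.labels)  # keep some variability
        return max(self.labels, key=lambda label: self.mean_rating[label])

    def update(self, label, rating):
        """Fold a user rating (the reward) into the label's running mean."""
        self.counts[label] += 1
        n = self.counts[label]
        self.mean_rating[label] += (rating - self.mean_rating[label]) / n
```

The small exploration probability plays the role described above: labels that the user rated poorly are rarely shown, but they can still appear occasionally, which preserves some variability in the behaviour of the virtual assistant.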

An additional possibility pertains to the fifth step of the follow-up phase: counselling. As mentioned in

section 3.1, one of the goals of this step is to educate

users on different topics. Reinforcement learning can

help to create a personalised counselling step, which

builds on existing knowledge and provides new infor-

mation. The actions of the learning agent can be the

descriptions of the new concepts and the reward can

be provided by testing the newly acquired knowledge

of the user; this step is already included in the evalu-

ation stage.

For the sake of discussion, we can consider a sce-

nario in which Vitória does not have information

about the existing level of knowledge of a new user.

Firstly, this construct must be evaluated, to determine

what is already known about a topic (e.g. diabetes or

the prescribed medication). In the follow-up interac-

tions the virtual assistant can then address more often

topics where knowledge is absent or limited. Employ-

ing this mechanism provides a tailored approach

whilst ensuring that users will have comprehensive

knowledge about diabetes management.
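The idea of addressing weaker topics more often, without ever dropping the others, can be sketched as a weighting rule. This is a plain heuristic rather than a reinforcement learning algorithm, and it rests on assumptions: knowledge is taken to be a score between 0 and 1 per topic, and the function name `topic_weights` and the `floor` parameter are hypothetical.

```python
def topic_weights(knowledge, floor=0.1):
    """Turn per-topic knowledge scores in [0, 1] into selection probabilities.

    Lower knowledge gives a higher weight; `floor` keeps well-known
    topics from disappearing entirely.
    """
    raw = {topic: max(1.0 - score, floor) for topic, score in knowledge.items()}
    total = sum(raw.values())
    return {topic: weight / total for topic, weight in raw.items()}
```

The resulting probabilities could be passed to `random.choices` through its `weights` argument to pick the educational topic of a given day.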

4.2.2 Behaviour Adaptation based on the

Assessment of Users’ Facial Emotions

In recent years many emotion recognition services have been made publicly available. Due to the complexity of facial emotion assessment, these cloud services facilitate the development of emotion recognition systems. In practice, users upload an image or provide a URL to the service, which returns information about emotion assessment, usually in the form of numerical data representing the probability of the presence of a given emotion in the image.

Selected examples of emotion recognition services include Face++ Cognitive Services’ Emotion Recognition, which can detect anger, disgust, fear, happiness, neutral, sadness, and surprise. Google Vision detects four emotional states on the face: anger, joy, sorrow, and surprise. The Microsoft Face API can detect eight emotions: anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise. The Sighthound Cloud API can recognize anger, disgust, fear, happiness, neutral, sadness, and surprise.

In a recent work we combined the above-men-

tioned services and trained a machine learning model

to increase the overall accuracy of face emotion as-

sessment (Magyar et al., 2018). The resulting system

was tested on different face emotion datasets and was


able to increase accuracy from an average of 70 –

75% to approximately 95%. In the VASelfCare pro-

totype such an emotion recognition system can be

used to gather data about the overall emotional state

of users, therefore detecting and preventing unwanted

states (e.g. distress, pain). A pilot study for assessing

elderly patients’ emotions in a cognitive stimulation

therapy session with a robot already showed that such

information can be valuable when adapting the

agent’s behaviour (Takac et al., 2018).
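To make the idea of combining several services concrete, the sketch below averages the per-emotion probabilities returned by different services. Plain averaging is used here as a stand-in and is not the trained model reported in Magyar et al. (2018); no real service API is called, and the function names and input format are assumptions.

```python
def combine_emotion_scores(service_outputs):
    """Average per-emotion probabilities over the services that report them.

    `service_outputs` is a list of dicts mapping an emotion label to a
    probability; services may report different label sets.
    """
    sums, counts = {}, {}
    for output in service_outputs:
        for emotion, probability in output.items():
            sums[emotion] = sums.get(emotion, 0.0) + probability
            counts[emotion] = counts.get(emotion, 0) + 1
    return {emotion: sums[emotion] / counts[emotion] for emotion in sums}


def most_likely_emotion(service_outputs):
    """Return the label with the highest averaged probability."""
    combined = combine_emotion_scores(service_outputs)
    return max(combined, key=combined.get)
```

Because services use different label sets (for example joy versus happiness), a real system would first need to map labels onto a common vocabulary; that step is left out here.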

As an example, we can consider the second step

of the daily interaction: social talk. In this step Vitória

initiates small talk with a user on various topics (e.g.

family, music, daily news, etc.). By combining rein-

forcement learning and emotion recognition, the vir-

tual assistant can explore topics associated with posi-

tive feelings (e.g. expressing happiness when talking

about grandchildren) and with negative feelings (e.g.

expressing sadness when talking about a late spouse).

Using this information and a history of previous con-

versations, the social interaction can be personalised,

to ensure a positive mood during the interaction.

Facial emotion recognition can also be used in reinforcement learning as a reward for the learning agent. In this approach the behaviour of the virtual assistant will be adapted based on the emotional response of the user. This means that Vitória will likely prefer those actions which boost the users’ mood and avoid those provoking sadness.
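Using an emotional response as a reward requires turning the recognised emotions into a single number. A minimal sketch is given below; the valence assigned to each emotion label is an assumption made for illustration, as are the names `EMOTION_VALENCE` and `emotion_reward`.

```python
# Assumed valence per emotion label; the weights are illustrative only.
EMOTION_VALENCE = {
    "happiness": 1.0,
    "surprise": 0.0,
    "neutral": 0.0,
    "sadness": -1.0,
    "anger": -1.0,
    "fear": -1.0,
    "disgust": -1.0,
    "contempt": -1.0,
}


def emotion_reward(probabilities):
    """Map emotion probabilities to a scalar reward.

    The result lies in [-1, 1] when the probabilities sum to at most 1;
    labels without an assumed valence contribute nothing.
    """
    return sum(
        EMOTION_VALENCE.get(emotion, 0.0) * probability
        for emotion, probability in probabilities.items()
    )
```

A reward of this kind is positive when the user mostly appears happy and negative when sadness or other negative emotions dominate, which is the signal a learning agent would need in order to prefer mood-boosting actions.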

4.2.3 Chatbots

Another interesting possibility is applying artificial

intelligence to the VASelfCare prototype by means of

chatbots, which are increasingly getting more atten-

tion. As for facial emotion assessment, there are many

publicly available cloud services for building chat-

bots. Although these are mainly used for customer

support applications, they can be easily integrated in

the VASelfCare prototype.

For the sake of simplicity, we describe Microsoft’s QnA Maker and how it can be used in interactions with older people with T2D. Nearly all cloud-based bot frameworks work on a similar principle.

To create a chatbot from scratch it is firstly neces-

sary to gather data on relevant topics, such as medi-

cation, activities in the form of previous conversa-

tions, user manuals and product materials. Secondly

these documents are processed and used to train a ma-

chine learning model, which extracts information

from the documents and uses it to form answers to

questions on a given topic. The model trained initially

can be tested by experts to refine the system answers.

The model is then ready for use and can serve the var-

ious needs of the users.
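The principle of answering questions from curated, expert-checked material can be illustrated without any cloud service. The sketch below is not the API or the algorithm of any real bot framework: it replaces the trained model with a simple token-overlap match, and the function names, the threshold and the data format are assumptions.

```python
import re


def tokens(text):
    """Lower-case a string and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def answer(question, qa_pairs, min_overlap=0.5, fallback=None):
    """Return the curated answer whose question best overlaps the input.

    Overlap is the Jaccard similarity between token sets; below
    `min_overlap` the fallback is returned instead of a weak match.
    """
    asked = tokens(question)
    best_score, best_answer = 0.0, fallback
    for known_question, known_answer in qa_pairs:
        known = tokens(known_question)
        union = asked | known
        score = len(asked & known) / len(union) if union else 0.0
        if score >= min_overlap and score > best_score:
            best_score, best_answer = score, known_answer
    return best_answer
```

Returning a fallback for weak matches reflects the requirement that the virtual assistant should only give answers previously checked by experts, rather than guess.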

In case of updates (e.g. a new marketed drug), the

model can be easily re-trained by feeding data about

the product to the system.

This feature holds great potential, since questions

about diabetes management can come up daily in the

interactions with Vitória. By constantly updating the

model with the newest information it is ensured that

the virtual assistant is providing accurate information,

previously checked by experts, to multiple users. This

system can also work with available text-to-speech

technologies, so users will not have to read the mes-

sages.

5 CONCLUSIONS

Artificial intelligence, and particularly machine

learning techniques, represent promising approaches

to provide a more personalised user experience with

the VASelfCare prototype. These approaches include

tailoring the virtual assistant behaviour based on us-

ers’ preferences or facial emotion assessment and the

use of chatbots.

Scholten et al. (2017) reviewed the capabilities of

relational agents to fulfil users’ needs in eHealth in-

terventions. They made a distinction between non-

responsive and responsive relational agents. The for-

mer are not designed to respond to emotionally ex-

pressed users’ needs in real time. Research shows that

non-responsive relational agents can engage users.

Although their development is simpler, there is a

higher risk of a worse user experience (Scholten,

Kelders and Van Gemert-Pijnen, 2017).

Responsive relational agents, designed to detect

frustration and to empathically respond to it, have

shown a positive effect on users’ attitudes. However,

this effect has not been demonstrated in clinical pop-

ulations (Scholten, Kelders and Van Gemert-Pijnen,

2017). Therefore, the benefits of facial emotion as-

sessment merit further research, particularly consid-

ering that both nonresponsive and responsive agents

can provide a positive experience.

Tailoring of health information has been defined

as “any combination of information and behaviour

change strategies intended to reach one specific per-

son based on characteristics that are unique to that

person related to the outcome of interest and derived

from an individual assessment” (Kreuter et al., 2000). This approach

demonstrated a positive effect in health behaviour

outcomes in web-delivered interventions (versus non-


tailored approaches) (Lustria et al., 2013). Our cur-

rent prototype tailors the counselling step, dealing

with diverse pre-existing levels of knowledge, by

means of an decision system. Nonetheless, due to the

nature of human-computer interaction it is nearly im-

possible to prepare the system for every situation us-

ing an explicit rule-based system. Reinforcement

learning enables a more flexible platform for the

agent to learn an appropriate behaviour based on us-

ers’ preferences. It can also be used as a complemen-

tary method to the existing decision system. In this

scenario the system would firstly determine what kind

of action should be executed by the agent. Then rein-

forcement learning would be applied to determine

how the action should be executed when dealing with

a concrete user. By applying such a combination of

machine learning methods, the application will offer

a more flexible and tailored behaviour of the agent.
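The two-stage scheme described above can be illustrated with a minimal sketch. It is not the VASelfCare implementation: the action names, the delivery styles, the knowledge levels and the reward signal are all hypothetical, and an epsilon-greedy bandit (one of the simplest reinforcement learning settings) stands in for whatever algorithm the project might eventually adopt. A rule-based function decides what the agent does, and the learner decides how to deliver it for each user.

```python
import random
from collections import defaultdict

# Hypothetical delivery styles; not taken from the prototype.
STYLES = ("brief", "detailed", "encouraging")


def choose_action(knowledge_level):
    """Stage 1 (rule-based, hypothetical rules): decide WHAT the agent does."""
    if knowledge_level == "low":
        return "explain_basics"
    if knowledge_level == "medium":
        return "reinforce_key_points"
    return "offer_advanced_tips"


class StyleLearner:
    """Stage 2 (epsilon-greedy bandit): learn HOW to deliver an action per user."""

    def __init__(self, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.value = defaultdict(float)  # (user, action, style) -> mean reward
        self.count = defaultdict(int)    # (user, action, style) -> times tried

    def choose_style(self, user, action):
        # Explore a random style with probability epsilon, otherwise exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(STYLES)
        return max(STYLES, key=lambda s: self.value[(user, action, s)])

    def update(self, user, action, style, reward):
        # Incremental update of the running mean reward for this combination.
        key = (user, action, style)
        self.count[key] += 1
        self.value[key] += (reward - self.value[key]) / self.count[key]


if __name__ == "__main__":
    learner = StyleLearner(epsilon=0.2, seed=0)
    action = choose_action("low")
    for _ in range(200):
        style = learner.choose_style("user_1", action)
        # Simulated feedback: this imaginary user only likes one style.
        reward = 1.0 if style == "encouraging" else 0.0
        learner.update("user_1", action, style, reward)
    print(action, {s: round(learner.value[("user_1", action, s)], 2) for s in STYLES})
```

In a real deployment the reward would have to come from an observable signal, such as explicit user ratings or continued engagement, and the choice of that signal is itself a design question that this sketch leaves open.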

An open dialogue (textual or verbal) between users and the relational agent, such as the one provided by a chatbot, may support users’ needs, providing a better experience and consequently improving adherence to the intervention. Ultimately, this assumption will have to be tested empirically.

We have pointed out several opportunities for research. Such research will contribute to answering a critical question posed by Dehn and van Mulken (2000) about two decades ago: “what kind of animated agent used in what kind of domain influence what aspects of the user’s attitudes or performance?”

ACKNOWLEDGEMENTS

This project was supported by FCT, Compete 2020 (grant number LISBOA-01-0145-FEDER-024250, 02/SAICT/2016) and by FEDER Programa Operacional do Alentejo 2020 (grant number ALT20-03-0145-FEDER-024250).

REFERENCES

Arnhold, M., Quade, M. and Kirch, W. (2014) ‘Mobile Applications for Diabetics: A Systematic Review and Expert-Based Usability Evaluation Considering the Special Requirements of Diabetes Patients Age 50 Years or Older’, Journal of Medical Internet Research, 16(4), pp. 1–18. doi: 10.2196/jmir.2968.

Bickmore, T. (2010) ‘Relational Agents for Chronic

Disease Self-Management’, in Hayes, B. and Aspray,

W. (eds) Health Informatics: A Patient-Centered

Approach to Diabetes. Cambridge, MA: MIT Press, pp.

181–204.

Bickmore, T. and Gruber, A. (no date) ‘Relational agents in

clinical psychiatry.’, Harvard review of psychiatry,

18(2), pp. 119–30. doi: 10.3109/10673221003707538.

Bickmore, T., Gruber, A. and Picard, R. (2005)

‘Establishing the computer-patient working alliance in

automated health behavior change interventions’,

Patient Education and Counseling, 59(1), pp. 21–30.

doi: 10.1016/j.pec.2004.09.008.

Bickmore, T. W. et al. (2005) ‘“It’s just like you talk to a friend” relational agents for older adults’, Interacting with Computers, 17(6), pp. 711–735. doi: 10.1016/j.intcom.2005.09.002.

Bickmore, T. W. et al. (2010) ‘Response to a relational

agent by hospital patients with depressive symptoms’,

Interacting with Computers. Elsevier B.V., 22(4), pp.

289–298. doi: 10.1016/j.intcom.2009.12.001.

Bickmore, T. W. et al. (2013) ‘A randomized controlled

trial of an automated exercise coach for older adults’,

Journal of the American Geriatrics Society, 61(10), pp.

1676–1683. doi: 10.1111/jgs.12449.

Bickmore, T. W., Schulman, D. and Sidner, C. L. (2011) ‘A

reusable framework for health counseling dialogue

systems based on a behavioral medicine ontology’,

Journal of Biomedical Informatics. Elsevier Inc., 44(2),

pp. 183–197. doi: 10.1016/j.jbi.2010.12.006.

Cassell, J. (2001) ‘Conversational Agents Representation

and Intelligence in User Interfaces’, AI Magazine,

22(4), pp. 67–84. doi: 10.1609/aimag.v22i4.1593.

Cui, M. et al. (2016) ‘T2DM self-management via

smartphone applications: A systematic review and

meta-analysis’, PLoS ONE, 11(11), pp. 1–15. doi:

10.1371/journal.pone.0166718.

Dehn, D. M. and Mulken, S. van (2000) ‘The impact of animated interface agents: a review of empirical research’, International Journal of Human-Computer Studies, 52, pp. 1–22.

Devault, D. et al. (2014) ‘SimSensei Kiosk: A Virtual

Human Interviewer for Healthcare Decision Support’,

in International conference on Autonomous Agents and

Multi-Agent Systems, AAMAS ’14, Paris, France, May

5-9, 2014. IFAAMAS/ACM, pp. 1061–1068.

García-Pérez, L.-E. et al. (2013) ‘Adherence to therapies in

patients with type 2 diabetes.’, Diabetes therapy, 4(2),

pp. 175–94. doi: 10.1007/s13300-013-0034-y.

Gratch, J. et al. (2007) ‘Creating Rapport with Virtual

Agents’, Intelligent Virtual Agents, pp. 125–138. doi:

10.1007/978-3-540-74997-4_12.

Hoque, M. (Ehsan) et al. (2013) ‘MACH: my automated conversation coach’, in Proceedings of the 2013 ACM international joint conference on Pervasive and ubiquitous computing - UbiComp ’13. Zurich, Switzerland: ACM Press, p. 697. doi: 10.1145/2493432.2493502.

Hou, C. et al. (2016) ‘Do Mobile Phone Applications Improve Glycemic Control (HbA1c) in the Self-management of Diabetes? A Systematic Review, Meta-analysis, and GRADE of 14 Randomized Trials’, Diabetes Care, 39(11), pp. 2089–2095. doi: 10.2337/dc16-0346.

GRAPP 2019 - 14th International Conference on Computer Graphics Theory and Applications

Karuranga, S. et al. (eds) (2017) IDF Diabetes Atlas. 8th

edn. Brussels, Belgium: International Diabetes

Federation. Available at: http://diabetesatlas.org/.

Kreuter, M., Farrell, D., Olevitch, L. and Brennan, L. (2000) Tailoring health messages: customizing communication with computer technology. Mahwah, New Jersey: Lawrence Erlbaum Associates. Available at: https://www.popline.org/node/174671 (Accessed: 4 December 2018).

Lustria, M. L. A. et al. (2013) ‘A meta-analysis of web-delivered tailored health behavior change interventions’, Journal of Health Communication, 18(9), pp. 1039–1069. doi: 10.1080/10810730.2013.768727.

Magyar, J., Magyar, G. and Sincak, P. (2018) ‘A Cloud-Based Voting System for Emotion Recognition in Human-Computer Interaction’, in 2018 World Symposium on Digital Intelligence for Systems and Machines, pp. 109–114.

Michie, S. et al. (2013) ‘The behavior change technique

taxonomy (v1) of 93 hierarchically clustered

techniques: Building an international consensus for the

reporting of behavior change interventions’, Annals of

Behavioral Medicine, 46(1), pp. 81–95. doi:

10.1007/s12160-013-9486-6.

Michie, S., Atkins, L. and West, R. (2014) The Behaviour

Change Wheel.

Monkaresi, H. et al. (2013) ‘Intelligent Diabetes

Lifestyle Coach’, Fifth International Workshop on

Smart Healthcare and Wellness Applications

(SmartHealth’13) - Adelaide, Australia, p. s/p.

Niess, J. and Diefenbach, S. (2016) ‘Communication Styles

of Interactive Tools for Self-Improvement’, Psychology

of Well-Being. Springer Berlin Heidelberg, 6(1), p. 3.

doi: 10.1186/s13612-016-0040-8.

Paiva, A. et al. (2017) ‘Empathy in Virtual Agents and

Robots: A Survey’, ACM Trans. Interact. Intell. Syst.,

7(3), p. 11:1–11:40. doi: 10.1145/2912150.

Provoost, S. et al. (2017) ‘Embodied conversational agents

in clinical psychology: A scoping review’, Journal of

Medical Internet Research, 19(5). doi:

10.2196/jmir.6553.

Ring, L., Bickmore, T. and Pedrelli, P. (2016) ‘An affectively aware virtual therapist for depression counseling’, ACM SIGCHI Conference on Human Factors in Computing Systems (CHI) workshop on Computing and Mental Health.

Rubin, V. L., Chen, Y. and Thorimbert, L. M. (2010)

‘Artificially intelligent conversational agents in

libraries’, Library Hi Tech, 28(4), pp. 496–522. doi:

10.1108/07378831011096196.

Scholten, M. R., Kelders, S. M. and Van Gemert-Pijnen, J.

E. W. C. (2017) ‘Self-guided Web-based interventions:

Scoping review on user needs and the potential of

embodied conversational agents to address them’,

Journal of Medical Internet Research, 19(11). doi:

10.2196/jmir.7351.

Shaked, N. A. (2017) ‘Avatars and virtual agents – relationship interfaces for the elderly’, Healthcare Technology Letters, 4(3), pp. 83–87. doi: 10.1049/htl.2017.0009.

Sociedade Portuguesa de Diabetologia (2016) Diabetes:

Factos e Números - o ano de 2015.

Sutton, R. S. and Barto, A. (2018) Reinforcement Learning: An Introduction. MIT Press. Available at: https://mitpress.mit.edu/books/reinforcement-learning-second-edition (Accessed: 13 November 2018).

Takac, P. et al. (2018) ‘Cloud Based Affective Loop for Emotional State Assessment of Elderly’, in D'Onofrio, G. (ed.) Active & Healthy Aging: Novel Robotic Solutions and Internet of Things. Avid Science. ISBN 978-93-86337-71-9.

Thomaz, A. L. and Breazeal, C. (2006) ‘Reinforcement learning with human teachers: Evidence of feedback and guidance with implications for learning performance’, AAAI Conference on Artificial Intelligence, 6, pp. 1000–1005.

Thompson, D. et al. (2016) ‘Use of Relational Agents to

Improve Family Communication in Type 1 Diabetes:

Methods’, JMIR Research Protocols, 5(3), p. e151. doi:

10.2196/resprot.5817.

Zheng, Y., Ley, S. H. and Hu, F. B. (2018) ‘Global

aetiology and epidemiology of type 2 diabetes mellitus

and its complications’, Nature Reviews Endocrinology.

Nature Publishing Group, 14(2), pp. 88–98. doi:

10.1038/nrendo.2017.151.
