Cloud Service Quality Model: A Cloud Service Quality Model based

on Customer and Provider Perceptions for Cloud Service Mediation

Claudio Giovanoli

Institute of Information Systems, University of Applied Sciences and Arts Northwestern Switzerland,

Riggenbachstrasse 16, 4600 Olten, Switzerland

Keywords: Cloud Computing, Service Quality, Cloud Service Quality, Quality Attributes.

Abstract: The field of cloud service selection tries to support customers in selecting cloud services based on QoS attributes. To consider the right QoS attributes, it is necessary to respect both the customers' and the providers' perceptions. This can be achieved through a Service Quality Model. Thus, this paper introduces a Cloud Service Quality Model based on a Systematic Literature Review, user interviews, and providers' perceptions.

1 INTRODUCTION

Over the last ten years, the globalization of business structures has been shaped in part by outsourcing. Outsourcing is the transfer of internal departments and tasks to third-party vendors who are typically specialized in certain businesses. Contracts regulate the supplies and services as well as the period of validity between the outsourcing company and the third-party vendor (Norwood et al., 2006).

Cloud computing can be seen as a stage of IT outsourcing. The elimination of internal IT departments, including data centers and complex application landscapes, can be seen as its main driver.

Soon, companies will only need devices connected to the internet via broadband network access. Other required services like infrastructure, platforms, and applications are hosted off-premise by cloud service providers and used on demand. Clients of such cloud services have no control over or influence on the cloud service providers' IT infrastructure because they just use the offered service as agreed in service level agreements (SLAs).

Today, companies and organizations planning to use cloud services face a huge number of possible cloud solutions. Because of the immense number of possibilities, it is hard to orient oneself and find a suitable solution and offer. Cloud brokering companies offer to find the optimal service for their customers. This time-consuming process stands in contrast to the cloud paradigms of fast provisioning and on-demand self-service. Thus, an automated brokerage approach could leverage the advantages of cloud computing and increase companies’ agility.

However, before a company can realize these advantages, a thorough evaluation of its needs, the possible cloud usage scenarios (which service and deployment models will meet them), and a suitable partner (who can understand and implement these needs) should be made in advance. Such a holistic analysis, however, requires substantial resources, which often cannot be guaranteed, especially in small and medium-sized enterprises, primarily due to a lack of know-how. There are already tools for carrying out internal evaluations and procedural models for selecting a suitable partner (provider). However, full consideration can usually be achieved only with consulting services, which in turn often do not pay off, especially for small and medium-sized companies.

2 RELATED WORK

With the growth of cloud service offerings, it has

become increasingly difficult for cloud service

customers to decide which provider can fulfill their

requirements for quality cloud services (Dastjerdi et

al., 2011; Zheng et al., 2013). For example, each

cloud service provider might offer similar services at

different prices and performance levels with different

sets of features (Wibowo and Deng, 2016). However,

while one provider might be cheaper for storage

services, they may be more expensive for

Giovanoli, C.

Cloud Service Quality Model: A Cloud Service Quality Model based on Customer and Provider Perceptions for Cloud Service Mediation.

DOI: 10.5220/0007587502410248

In Proceedings of the 9th International Conference on Cloud Computing and Services Science (CLOSER 2019), pages 241-248

ISBN: 978-989-758-365-0

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

computation. Given the diversity of cloud service

offerings, it is an essential challenge for organizations

to discover suitable cloud providers who can satisfy

their requirements. There may be trade-offs between

different user requirements fulfilled by different

cloud service providers. As a result, it is not sufficient

to discover multiple cloud services. It is important to

determine the most suitable cloud service through an

evaluation for a specific situation (Garg et al., 2013;

Whaiduzzaman et al., 2014; Wibowo and Deng,

2016). The evaluation of available cloud services against a set of specific criteria is complex (Bryman and Bell, 2015) due to the multi-dimensional nature of the evaluation process and the vagueness of the decision-making process (Arpaci et al., 2015).
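The trade-off described above, where one provider is cheaper for storage but more expensive for computation, can be illustrated with a small sketch. The provider names, prices, and workload figures are hypothetical and serve only to show that the most suitable offer depends on the specific usage situation; this is not a method taken from the cited works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """A hypothetical cloud offer with two price components."""
    name: str
    storage_price_per_gb: float    # assumed monthly price per GB stored
    compute_price_per_hour: float  # assumed price per compute hour


def monthly_cost(offer: Offer, storage_gb: float, compute_hours: float) -> float:
    """Total monthly cost of an offer for a given workload."""
    return (offer.storage_price_per_gb * storage_gb
            + offer.compute_price_per_hour * compute_hours)


def cheapest(offers: list[Offer], storage_gb: float, compute_hours: float) -> Offer:
    """Return the offer with the lowest total cost for this workload."""
    return min(offers, key=lambda o: monthly_cost(o, storage_gb, compute_hours))


# Hypothetical offers: provider_a is cheaper for storage, provider_b for compute.
OFFERS = [
    Offer("provider_a", storage_price_per_gb=0.02, compute_price_per_hour=0.12),
    Offer("provider_b", storage_price_per_gb=0.03, compute_price_per_hour=0.08),
]
```

With these assumed numbers, a storage-heavy workload favors one provider and a compute-heavy workload favors the other, which is why a situation-specific evaluation is needed.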

Kritikos and Plexousakis address basic web service discovery and the requirements for it (Kritikos and Plexousakis, 2009). The core of this process is matchmaking, which lists the relevant services in the registry. Afterward, the selection is based on a ranking approach. First, the services are filtered according to the preferences of the user, who selects the options they want to use. The results are then categorized into super matches, exact matches, partial matches, and failed matches. In this approach, the quality criteria are derived from the literature, based on OWL-Q, and consist of availability, reliability, safety, and security. However, according to Hema Priya and Chandramathi (Hema Priya and Chandramathi, 2014), these criteria cannot be considered all together, which reduces the opportunities for restrictions. They use numeric QoS parameters along with their measurement units and methods in OWL-Q. Criteria that reflect users’ needs are not considered, and the approach is only tested prototypically.
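The categorization into super, exact, partial, and failed matches can be sketched as follows. The paper does not define these categories formally, so the definitions used here are assumptions: every attribute is numeric and "higher is better", a super match exceeds at least one requirement while meeting all others, an exact match equals every requirement, a partial match meets only some, and a failed match meets none. The function and attribute names are hypothetical.

```python
from enum import Enum


class Match(Enum):
    SUPER = "super"
    EXACT = "exact"
    PARTIAL = "partial"
    FAIL = "fail"


def categorize(required: dict[str, float], offered: dict[str, float]) -> Match:
    """Categorize an offer against numeric QoS requirements.

    Assumption: for every attribute a higher value is better, and an
    attribute missing from the offer counts as not met.
    """
    if not required:
        raise ValueError("at least one requirement is needed")
    met = [offered.get(attr, float("-inf")) >= minimum
           for attr, minimum in required.items()]
    if not any(met):
        return Match.FAIL
    if not all(met):
        return Match.PARTIAL
    # All requirements are met; check whether any of them is exceeded.
    exceeded = any(offered[attr] > minimum for attr, minimum in required.items())
    return Match.SUPER if exceeded else Match.EXACT
```

A ranking step, as described above, would then typically order the remaining offers within each category.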

A Delphi study conducted by Lang (Lang et al., 2016) defines the most critical criteria for cloud provider selection. By conducting workshops and panels with industry experts from the cloud computing area, the authors provided a list of important selection criteria: certification, contract, deployment model, flexibility, functionality, geolocation of service, integration, legal compliance, monitoring, support, test of the solution, and transparency of activities. Using all these criteria can comprehensively narrow down a cloud service selection. Nevertheless, the authors offer neither measures for their criteria nor a matchmaking method to prove the approach. This diminishes the applicability for users, as criteria such as support, test of the solution, and transparency of activities are not easy to measure, and thus high expertise in each area is necessary.

A Description Logic-based method proposed by Dastjerdi supports the QoS-aware discovery of IaaS web-services and the automatic deployment of appliances on selected services through a service (Dastjerdi et al., 2011). The proposed service matchmaking process has two parts: ontologies and a matchmaking algorithm. The goal of service matching and five matching operations, such as exact matching, plugin matching, and non-matching, are specified first.

In most cases, the project context provides the

language used in the service description. If the

language of the service description is an ontology, the

matchmaker service is based on ontology

fundamentals. In other cases, the service

matchmakers use different mathematical methods.

However, service matchmakers also differ in other factors: the target service requester, the supported service layer, their definition of the service matchmaking process, the types of requirements, and the quality model used.

Table 1 examines 20 different service selection projects. Seven of the selected projects focus on the selection of web-services, whereas the other projects focus on the infrastructure or software layers. As functional requirements depend on the system's input/output, most research work is based on non-functional aspects. Thus, the matchmaking methods focus on the matching of non-functional requirements, mainly QoS aspects.

In the existing approaches, the service description and quality models stem mostly from the web services context. Some QoS properties that are specific to cloud services, for example scalability, elasticity, and different price models, are not considered. Moreover, some matching approaches do not provide concrete examples for the service properties targeted by their service matcher. Regarding the quality model, besides Repschlaeger et al. (2012), Wang et al. (2014), and linguistic terms, the approaches most often rely on SMI and OWL-Q. Whereas OWL-Q appears mostly for web-service matching, SMI and CFR are used for the selection of cloud services. Three roles are involved in service selection: the cloud service customer (CSC), the cloud service provider (CSP), and the selector (S). An in-depth look into the research projects in Table 2 shows that nearly all of the 20 projects focus on the same roles. Only Somu (Somu et al., 2017) includes the CSP role beside the CSC, as an essential part of building trust with the customer based on the SMI criteria. All other projects consider the matchmaking and the cloud service customer role but exclude the cloud service provider or, in some instances, consider it only as the provider of datasets. The sources of the considered requirements of each work are also examined: literature is the dominant source for deriving the criteria used. Through its Delphi study, Lang (Lang et al., 2016) conducted panels and interviews with industry (provider) experts to define their criteria.

Table 1: Service Selection Review I.

Although the cloud service consumer plays a primary role in cloud service selection, only two projects partially consider the needed criteria from an end-user's point of view. In both cases (Lang et al., 2016; Siegel and Perdue, 2012b), the end-user focus is represented through industry experts. None of the other projects consider end-user-derived quality criteria. It can be summarized that (i) existing quality models support the selection of web-services. They can be used for cloud service selection too, but they do not reflect different aspects and characteristics of cloud computing (e.g., elasticity). Only SMI, CFR, and OWL-Q are partially suited to the cloud. (ii) The dominantly used non-functional requirements are derived either from academic literature or only from interviews. No synthesis of both approaches exists. (iii) Service selection consists of the parts customer (CSC), matchmaker (MM), and provider (CSP). However, the focus is on CSC and MM, while CSPs are neglected. Thus, this work aims to come up with a Cloud Quality Model (QM) that reflects cloud characteristics in order to make cloud services with similar functional requirements comparable. The QM considers input from literature as well as from cloud service consumers and cloud service providers. Furthermore, based on the comparability of the cloud services, the service selection also offers cloud service providers the opportunity to benchmark their own services.

3 RESEARCH APPROACH

In the design-science paradigm, knowledge and understanding of a problem domain and its solution are achieved in the building and application of the designed artifact. As this research aims to create a Mediation Broker for evaluating and finding appropriate cloud services, and thus creates an artifact, it follows a design-science research strategy. Regarding the Design Science Research Cycle (Hevner and Chatterjee, 2010), the application domain is Cloud Service Selection. The relevance of the research is examined based on a literature review. The Design Cycle consists of two elements: develop and evaluate. Thus, the cloud evaluation criteria, the cloud service measures, and the Mediation Broker prototypes were developed first. This was followed by the evaluation of the criteria and the cloud service measures through a survey and expert interviews, and by the benchmarking of the prototypes. There has been extensive and ongoing research in the field of cloud computing services. As cloud computing is considered a service, users have expectations that need to be reflected in the offers made by providers (Alabool and Mahmood, 2013; Garg et al., 2011). In the current context, the cloud computing services being offered are not clearly measurable and often do not match the expectations of the users, which creates an environment where a potential user does not gain confidence in the offering (Buyya, Garg, and Calheiros, 2011; Garg et al., 2011; Sun et al., 2014). It then becomes essential that providers can define their offering, which helps in mapping the expectations of the users to the perceived value of the service provided. Based on these outlines, the following main and sub-research questions can be derived:

| Research Work | Service Layer | Matchmaking and Selection Context | Type of requirements | Service Quality Model |
|---|---|---|---|---|
| Garg et al. (2011) | IaaS | Cloud Services | non-functional | SMI |
| Liu et al. (2004) | web-services | Semantic web-services | non-functional | WSDL, OWL-S |
| Sukumar et al. (2012) | web-services | Web-services from IBM UDDI Registries | non-functional | WSDL, OWL-S |
| Kritikos et al. (2009) | web-services | QoS parameters including parameters and methods from OWL-Q | non-functional | OWL-Q, OWL-S |
| Wibowo et al. (2016) | SaaS | Cloud Services | non-functional | - |
| Whaiduzzaman et al. (2014) | IaaS | Cloud Services | functional | - |
| Kang et al. (2011a) | web-services, IaaS | Cloud Services | functional | - |
| Buyya et al. (2009) | IaaS, SaaS | Cloud Services | non-functional | SMI |
| Lang et al. (2016) | IaaS, PaaS, SaaS | Cloud Services | non-functional | - |
| Sundareswaran et al. (2012) | IaaS | Cloud Infrastructure Services | non-functional | - |
| Dastjerdi et al. (2011) | web-services | Web-services from IBM UDDI Registries | non-functional | - |
| Wang (2009) | IaaS, SaaS | Service Marketplace | non-functional | linguistic terms |
| Sun et al. (2014) | IaaS | SaaS | non-functional | - |
| Zheng et al. (2013) | SaaS | SaaS | functional | - |
| Sathya et al. (2010) | web-services | Web-services | non-functional | WSMO |
| Shetty et al. (2015) | SaaS | Cloud Services Ranking | non-functional | - |
| Siegel et al. (2012a) | IaaS, PaaS, SaaS | Cloud Services | non-functional | SMI |
| Mobedpour et al. (2013) | - | Cloud Service Ranking | non-functional | - |
| Somu et al. (2017) | IaaS, PaaS, SaaS | Cloud Service Ranking | non-functional | SMI |
| Repschlaeger et al. (2012) | IaaS | Cloud Service Evaluation | functional, non-functional | CFR |


Table 2: Service Selection Review II.

RQ 1: What is Service Quality?

RQ 1.1: What is Service Quality regarding cloud services?

RQ 2: What are Service Quality Models?

RQ 2.1: Are these SQMs designed for cloud services?

RQ 2.2: Which attributes are needed from the user’s perspective?

RQ 2.3: Which attributes are needed from the provider’s perspective?

4 SERVICE QUALITY MODEL

Kotler and Armstrong (Kotler and Armstrong, 1999) define service as “an act of performance that one can offer to another that is essentially intangible and does not result in the ownership of anything. Its production may or may not be tied to a physical product.” Quality, in turn, is conformance to requirements: “Quality is the totality of features and characteristics of a product or service that bear on its ability to satisfy stated or implied needs” (Kotler and Armstrong, 1999).

The quality of service measures how well the service provided meets the customers’ expectations.

To measure the quality of intangible services,

researchers usually use the term perceived service

quality. Perceived service quality is the result of

comparing perceptions about the service delivery

process and the actual outcome of the service

(Grönroos, 1984; Wirtz and Lovelock, 2016).
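One common way to operationalize such a comparison, in the spirit of SERVQUAL-style instruments, is a gap score per quality dimension: the perceived rating minus the expected rating. The sketch below assumes this gap formulation, a shared numeric rating scale, and hypothetical dimension names; it is an illustration and not the measurement procedure of the cited authors.

```python
from statistics import mean


def gap_scores(expectations: dict[str, float],
               perceptions: dict[str, float]) -> dict[str, float]:
    """Per-dimension gap: perception minus expectation.

    A negative gap means the service fell short of what was expected.
    Assumption: both ratings use the same numeric scale (e.g. 1 to 7).
    """
    return {dim: perceptions[dim] - expectations[dim] for dim in expectations}


def overall_gap(expectations: dict[str, float],
                perceptions: dict[str, float]) -> float:
    """Unweighted mean of the per-dimension gaps."""
    return mean(list(gap_scores(expectations, perceptions).values()))
```

An unweighted mean is the simplest aggregation; weighting dimensions by their importance to the customer would be an equally plausible design choice.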

Wang (Wang, 2014) proposed a service quality management model and a service quality evaluation method for the maintenance service of cloud computing, based on SERVQUAL. Using the SERVQUAL model, the authors redefined some quality characteristics, as they argued that “SERVQUAL is universally applied in the field of service and cannot reflect the characteristics of maintenance service for cloud computing.” Based on the quality management model, the paper proposed a quality evaluation model using research methods such as the Delphi method. Furthermore, the paper introduced the application of quality evaluation by considering an actual case. The essence of the paper was to help providers improve their quality management and show them how to deal with the challenges of the maintenance service of cloud computing. This model helps to “solve the problem underlying in the evaluation of service quality and inseminate theories and methods for evaluating service quality.” The paper focuses on the provision of quality from the provider’s side and has no direct focus on the user’s side.

Domínguez-Mayo (Domínguez-Mayo et al.,

2014) proposed a framework and tool to manage

cloud computing service quality. ISO 9000 includes

eight quality management principles, on which to

base an efficient, effective and adaptable quality

management system.

They are applicable throughout industry,

commerce and service sectors: “Customer focus,

leadership, involving people, process approach,

system approach, continual improvement, factual

decision-making, mutually beneficial supplier

relationships, customer requirements, organizations

requirement.” The paper proposed a framework for

managing Cloud Computing service quality between

clients and providers. QuEF (Quality Evaluation

Framework) was developed to manage Model-Driven

Web Development methodologies quality but later

extended to cover the quality management of other

areas like cloud computing. Over time, it has been improved with the following phases: strategy phase, design phase, transition phase, operational phase, and continuous quality improvement phase. The purpose of the QuEF is to bring about continuous automatic

| Research Work | Considered Roles (CSP / CSC / S) | Source of considered requirements | End user focused | Matchmaking / Selection Approach |
|---|---|---|---|---|
| Garg et al. (2012) | CSC, S | literature | no | AHP |
| Liu et al. (2004) | CSC, S | literature | no | Semantic reasoning |
| Sukumar et al. (2012) | CSC, S | literature | no | Peano space filling curve |
| Kritikos et al. (2009) | CSC, S | literature | no | Mixed integer programming |
| Wibowo et al. (2016) | CSC, S | literature | no | TOPSIS & Fuzzy |
| Whaiduzzaman et al. (2014) | CSC, S | literature | no | AHP |
| Kang et al. (2011a) | CSC, S | literature | no | Semantic reasoning |
| Buyya et al. (2009) | CSC, S | literature | no | AHP |
| Lang et al. (2016) | CSC, S | Panel, interviews | partially | - |
| Sundareswaran et al. (2012) | CSC, S | literature | no | Greedy optimization |
| Dastjerdi et al. (2011) | CSC, S | literature | no | Semantic reasoning |
| Wang (2009) | CSC, S | user perception | no | Fuzzy Logic |
| Sun et al. (2014) | CSC, S | literature | no | AHP |
| Zheng et al. (2013) | CSC, S | literature | no | Greedy optimization |
| Sathya et al. (2010) | CSC, S | literature | no | |
| Shetty et al. (2015) | CSC, S | literature | no | AHP |
| Siegel et al. (2012a) | CSC, S | interviews | partially | - |
| Mobedpour et al. (2013) | CSC, S | literature | no | Ranking similarity calculation |
| Somu et al. (2017) | CSC / CSP | literature | no | |
| Repschlaeger et al. (2012) | CSC, S | literature, offer analyses | no | - |


improvement by generating checklists and documentation and by automatically evaluating and planning, in order to improve quality with minimal effort and time. The authors conducted a Systematic Literature Review (SLR) to identify gaps in the quality management of cloud computing. According to their study, no existing work focuses on frameworks that ensure quality management in cloud computing services between clients and providers. The framework further offers a set of tools to manage quality effectively and efficiently. Further tools help to manage cloud computing quality between clients and providers by means of e-SCM (Capability Maturity Model for service) (Domínguez-Mayo et al., 2015). The article focuses on service quality, but the characteristics of cloud computing services, which are also an important aspect of service quality, are not listed. Zheng, Martin, Brohman, and Da Xu (Zheng et al., 2016) created a quality model named CLOUDQUAL for cloud services. This model is based on SERVQUAL and an e-service quality model. It defines quality dimensions and metrics for general cloud services. CLOUDQUAL contains six quality dimensions, namely, “usability, availability, reliability, responsiveness, security, and elasticity.” The paper focuses on validating service quality, and its scope is limited to only six dimensions. Moreover, CLOUDQUAL does not highlight the main characteristics of cloud computing services, such as pay-per-use and interoperability. A service is defined by its characteristics, and service quality is based on these characteristics. In that paper, the scope of the characteristics is limited, and a holistic view on the basis of which service quality can be defined is not covered. Zheng (Zheng et al., 2013) proposed a cloud service quality evaluation system based on five dimensions, “rapport, responsiveness, reliability, flexibility, security,” and extended SaaS-Qual. The index system proposed external metrics with the application of SLAs in order to measure users’ service quality requirements for PaaS and SaaS.

The approaches proposed by researchers have mainly addressed service selection, but most of them have not focused on the service quality required by cloud service users and providers. Another aspect related to service quality is non-functional attributes such as accountability and reliability, but none of the literature provides a holistic view of the related non-functional attributes of cloud computing services. The proposed approaches cover only one kind of service, or some of them are useful only for cloud users, providers, or intermediaries. Service quality research has been carried out in other service areas such as catering and airlines using the well-known SERVQUAL model, but the model has not been used extensively for cloud computing services. There is a need to design a classification scheme based on the non-functional attributes of cloud computing services that can provide a qualitative as well as a quantitative view for providers and users of cloud computing services, together with measures to evaluate them.

5 CLOUD SERVICE QUALITY MODEL

To define a set of quality criteria that reflects the literature as well as customers' and providers' perceptions, a systematic literature review of 46 academic papers published between 2009 and 2018 was conducted. Of these papers, seven were published between 2009 and 2014, and 39 were published between 2015 and 2018. In their meta-study, Alabool and Mahmood already cover 40 papers, considering the most cited criteria from 2009 to 2012 (Alabool and Mahmood, 2013). Their findings are also included in this research. As search resources, ScienceDirect (Elsevier), SpringerLink, ACM Digital Library, and IEEE Xplore were considered the main digital libraries for cloud computing (Lang et al., 2016; Sun et al., 2014). The Google Scholar search engine was used to find some of the archival journals, technical reports, and conference proceedings.

The keywords used to search the digital libraries were selected based on evaluation theory activities (Lopez, 2000) and cover the concepts that represent the domain of cloud evaluation and selection methods, such as Cloud Service Evaluation, Cloud Selection Criteria or Factors, Attributes, Functional Requirements, or Non-Functional Requirements. Based on these findings, interviews were conducted in a second step to receive in-depth feedback on the quality attributes (QAs) and their components elaborated within this work. A short introduction to the general topic of Cloud Service Selection, Service Quality Models, and the derived QAs gave the interviewees an overview. The interviews were held via Skype calls and face-to-face, in German, and were semi-structured. Semi-structured interviews are based on a semi-structured interview guide, which is a schematic presentation of questions or topics that need to be explored by the interviewer (DiCicco-Bloom and Crabtree, 2006).


This kind of interview offers the advantages of providing rich data, allowing different ways of data analysis, and yielding more insights into relational aspects and into the interviewees’ perceptions of the QAs.

The first interviewee is a Service Manager for a central infrastructure provider that ensures the flow of information and money between banks, traders, merchants, investors, and service providers worldwide. The interviewee has worked in the payment and card services, finance and insurance, healthcare, and transport industries for more than ten years and gained experience in various specialist fields such as Project & Quality Management, Agile Service & Product Development, Business Intelligence, and Requirements & Service Management. The interviewee is a certified cloud expert and is involved in projects for evaluating cloud services for the company as well as in delivering cloud services to its customers, and thus represents the provider's point of view.

The second interviewee is an experienced Service Manager with a demonstrated history of working in the insurance industry. She is a strong support professional skilled in Configuration Management, Incident Management, Service Delivery, Problem Management, ITIL, and Business Process Improvement. Currently, she is working for a Swiss IT provider for banking services.

The third interviewee is Lead Software Engineer at an international financial software development company. Besides his strong skills in developing cloud services, he also gained deep insight into IT Service Management in his former roles, especially in the field of Cloud Sourcing for banking institutes. He represents the customer's point of view.

The fourth interviewee is Program Test Manager at an international IT consulting company. She has a strong background in quality testing for IT services and thus experience in quality and metrics. She represents, in general, the customer's point of view but also gives general feedback on aspects of the QAs.

Most of the attributes are recommended as suitable Quality Attributes by the interviewees. An exception is the usability attribute, which two interviewees declared hard to measure. Additionally, the questionnaire shows that the derived attributes are at least suitable for a Cloud Service Quality Model. Thus, all attributes except usability are considered Quality Attributes.

Besides these QAs, the interviews and survey

show that there is a need for additional attributes.

They see the attributes Compliance and Geo-

Location as important criteria while considering the

service quality. Customizing, Reputation, Costs per

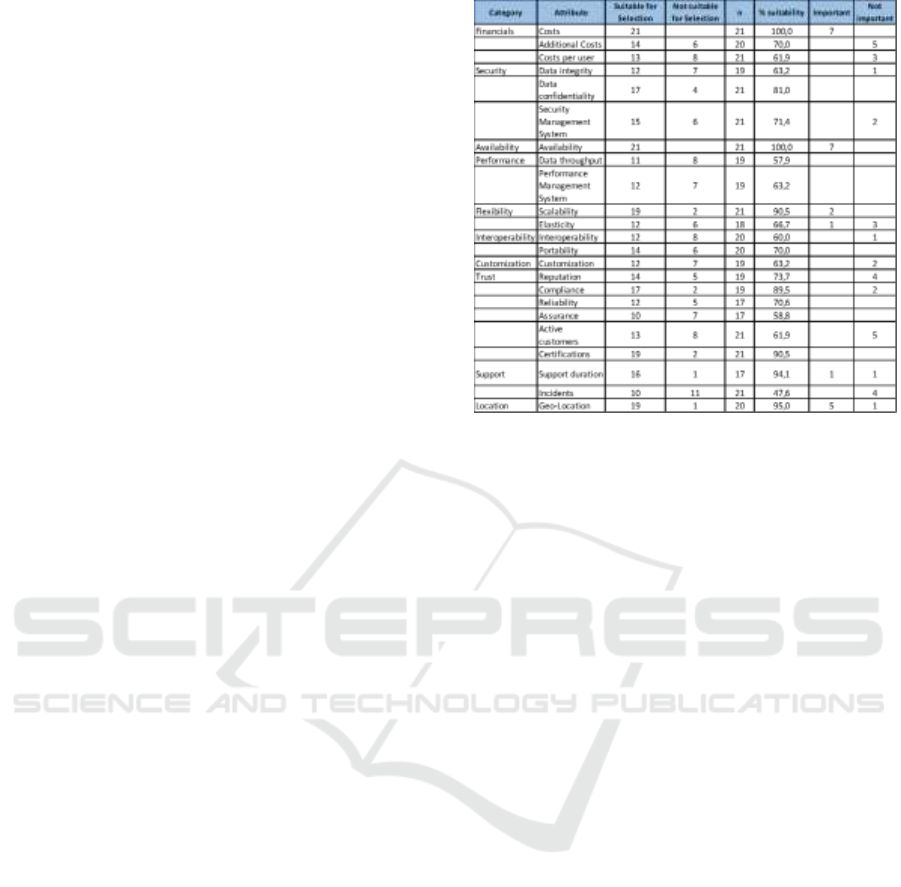

Figure 1: Quality Attributes Validation Results.

Costumer and additional Costs are attributes, which

must be considered from the customers and providers

point of view. Based on these findings the categories

and QAs for a Cloud Service Quality Model are

derived in Fig.1. For defining the Cloud Service

Quality Model as a next step, the identified attributes

now are collocated to the different categories.

Therefore, the reviewed attributes from the SLR are used together with the additional attributes from the interviews and survey. As 50% of the additional attributes were named only once, only the attributes with at least 25% frequency (three nominations) are considered. In total, excluding the attributes that have become categories, these attributes are: cost, additional costs, costs per user, availability, scalability, elasticity, interoperability, portability, customizing, reputation, compliance, reliability, assurance, number of active users, certificates, and geo-location. As each attribute is assigned to exactly one category, the categories Security, Performance, and Support did not inherit any attributes. As mentioned before, these groups can have different attributes and can be generalized through different attributes (e.g., Security and Privacy). The interviews indicated, for example, that metrics for the Support category can be the number of incidents per year and the average support time. Derived from these metrics, the attributes are Support Duration and Incidents. For the Security category, the triad of confidentiality, integrity, and availability can be considered. As availability forms its own category, the preferred attributes are data confidentiality and integrity. The Trusted Cloud Label (Verein Kompetenznetzwerk Trusted Cloud eV, 2016) and


the Service Measurement Index (Siegel and Perdue, 2012b) are selection models that can be used to measure cloud services according to given criteria. Both also define the Security Management System (whether in place or not) as an important criterion. Thus, these three attributes are considered attributes of the Security category.
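The nomination threshold used for the additional attributes (at least 25% frequency, i.e. three nominations) can be sketched as a simple frequency filter. The number of respondents is not stated in the text; the value of 12 used in the usage note is an assumption inferred from "three nominations" corresponding to 25%, and the function name and sample answers are hypothetical.

```python
from collections import Counter


def frequent_attributes(nominations: list[list[str]],
                        respondents: int,
                        threshold: float = 0.25) -> list[str]:
    """Keep attributes nominated by at least `threshold` of all respondents.

    `nominations` holds one list of attribute names per answering respondent;
    an attribute is counted at most once per respondent.
    """
    counts = Counter(attr for answer in nominations for attr in set(answer))
    return sorted(attr for attr, n in counts.items()
                  if n / respondents >= threshold)
```

For example, with an assumed 12 respondents, an attribute named by three of them passes the 25% threshold, while an attribute named only once is dropped.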

As metrics and measures are already broadly discussed in the literature, existing metrics and measures can be considered. For example, the measurements of the Trusted Cloud Label and SMI are considered as guidelines (where applicable) because both aim to develop a comprehensive criteria catalog that covers the evaluation criteria defined in this work. Furthermore, both approaches ensure that the criteria are suitable for being requested and analyzed in the context of self-service and self-test by the provider (Siegel and Perdue, 2012b; Verein Kompetenznetzwerk Trusted Cloud eV, 2016), which is in line with this work.
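As an illustration of such simple metrics, the two Support metrics named in the interviews, the number of incidents per year and the average support time, could be computed from incident records as sketched below. The record structure, the use of the opening date to assign an incident to a year, and the unit of hours are assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Incident:
    """Hypothetical incident record with opening and resolution time."""
    opened: datetime
    resolved: datetime


def incidents_per_year(incidents: list[Incident], year: int) -> int:
    """Number of incidents opened in the given calendar year."""
    return sum(1 for incident in incidents if incident.opened.year == year)


def average_support_hours(incidents: list[Incident]) -> float:
    """Mean time between opening and resolution, in hours."""
    if not incidents:
        return 0.0
    total_seconds = sum((i.resolved - i.opened).total_seconds() for i in incidents)
    return total_seconds / 3600 / len(incidents)
```

Both values can be reported by a provider in a self-test and compared across providers with similar functional offerings.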

6 VALIDATION

For validating the findings, a panel discussion with 21

cloud experts shows the suitability of the QAs for

cloud service selection.

The validation shows that there is room for

additional cloud service quality attributes besides

the traditional literature-derived attributes. The

attributes derived from the providers’ and customers’ views

generally reach a suitability or acceptance of more

than 60%, even though attributes like Additional Costs and

Active users are also seen as not important. This

discrepancy stems from the main limitation of this work, which

is the limited number of interviews held with

customers and users. Additionally, more interviews

could have revealed further attributes that are

currently not considered.
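The acceptance figure above can be read as the share of panel members who rate an attribute as suitable. The following is a minimal sketch of that arithmetic, assuming one yes/no vote per expert and attribute. The function names, the placeholder attribute names, and the vote counts are hypothetical and are not taken from the panel data; only the panel size of 21 and the 60% level come from the text.

```python
from typing import Dict, List

PANEL_SIZE = 21    # number of cloud experts reported for the panel
THRESHOLD = 0.60   # acceptance level mentioned in the validation


def acceptance_rates(votes: Dict[str, int],
                     panel_size: int = PANEL_SIZE) -> Dict[str, float]:
    """Return, per attribute, the share of experts who rated it suitable."""
    if panel_size <= 0:
        raise ValueError("panel_size must be positive")
    rates = {}
    for attribute, accepted in votes.items():
        if not 0 <= accepted <= panel_size:
            raise ValueError(f"invalid vote count for {attribute}: {accepted}")
        rates[attribute] = accepted / panel_size
    return rates


def accepted_attributes(votes: Dict[str, int],
                        threshold: float = THRESHOLD,
                        panel_size: int = PANEL_SIZE) -> List[str]:
    """List (alphabetically) the attributes whose share exceeds the threshold."""
    rates = acceptance_rates(votes, panel_size)
    return sorted(name for name, rate in rates.items() if rate > threshold)


# Hypothetical vote counts for illustration only (NOT the paper's data).
example_votes = {"Attribute A": 18, "Attribute B": 13, "Attribute C": 9}
print(accepted_attributes(example_votes))
```

With 21 experts, an attribute needs at least 13 positive votes to exceed 60%, since 12/21 is roughly 57% and 13/21 is roughly 62%.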

7 CONCLUSION

Literature and a survey have shown that the process

of finding a suitable cloud service is not trivial. Small

businesses often do not have the knowledge to define

their requirements and find a suitable cloud service.

As the literature describes, many research initiatives

have already been completed or are still in

progress. However, they usually focus on a specific

domain, such as matching, service selection, or service

description, or are applicable only to one service or

deployment model. As the concept of service quality

is still not widely established for cloud computing

services, this study investigates the service quality

of cloud services, which can be used for cloud service

selection. Thus, following a design science research

approach, a list of the most commonly cited cloud

service quality attributes was identified. Based

on these literature-derived attributes, the perceptions

of cloud customers and cloud providers were

collected. In interviews and a questionnaire, the

topic was discussed and further attributes were

identified. In a next step, the attributes were grouped

into corresponding categories. Furthermore, simple

metrics have been identified, where applicable, to

derive a Cloud Service Quality Model.

REFERENCES

Alabool, H. M. and A. K. Mahmood. 2013. “Review on

Cloud Service Evaluation and Selection Methods.”

2013 International Conference on Research and

Innovation in Information Systems (ICRIIS) 2013:61–

66.

Arpaci, Ibrahim, Kerem Kilicer, and Salih Bardakci. 2015.

“Effects of Security and Privacy Concerns on

Educational Use of Cloud Services.” Computers in

Human Behavior 45:93–98.

Bryman, Alan and Emma Bell. 2015. Business Research

Methods. 4th ed. New York: Oxford University Press.

Buyya, Rajkumar, Saurabh Kumar Garg, and Rodrigo N.

Calheiros. 2011. “SLA-Oriented Resource

Provisioning for Cloud Computing: Challenges,

Architecture, and Solutions.” Pp. 1–10 in Proceedings

of the 2011 International Conference on Cloud and

Service Computing, CSC ’11. Washington, DC, USA:

IEEE Computer Society.

Dastjerdi, Amir Vahid, Saurabh Kumar Garg, and

Rajkumar Buyya. 2011. “QoS-Aware Deployment of

Network of Virtual Appliances across Multiple

Clouds.” Pp. 415–23 in Proceedings - 2011 3rd IEEE

International Conference on Cloud Computing

Technology and Science, CloudCom 2011. IEEE.

DiCicco-Bloom, Barbara and Benjamin F. Crabtree. 2006.

“The Qualitative Research Interview.” Medical

Education 40(4):314–21.

Domínguez-Mayo, Francisco José et al. 2015. “A Framework

and Tool to Manage Cloud Computing Service Quality.”

Software Quality Journal 23(4):595–625.

Garg, Saurabh Kumar, Christian Vecchiola, and Rajkumar

Buyya. 2013. “Mandi: A Market Exchange for Trading

Utility and Cloud Computing Services.” Journal of

Supercomputing 64(3):1153–74.

Garg, Saurabh Kumar, Steve Versteeg, and Rajkumar

Buyya. 2011. “SMICloud: A Framework for

Comparing and Ranking Cloud Services.” Proceedings

- 2011 4th IEEE International Conference on Utility

and Cloud Computing, UCC 2011:210–18.

Grönroos, Christian. 1984. “A Service Quality Model and

Its Marketing Implications.” European Journal of

Marketing 18(4):36–44.

Hema Priya, N. and S. Chandramathi. 2014. “QoS Based

Optimal Selection of Web Services Using Fuzzy

Logic.” edited by S. Fong. Journal of Emerging

Technologies in Web Intelligence 6(3):331–39.

Hevner, Alan and Samir Chatterjee. 2010. Design Research

in Information Systems. Springer US.

Kang, Jaeyong and Kwang Mong Sim. 2011. “Cloudle: An

Ontology-Enhanced Cloud Service Search Engine.” Pp.

416–27 in Lecture Notes in Computer Science

(including subseries Lecture Notes in Artificial

Intelligence and Lecture Notes in Bioinformatics), vol.

6724 LNCS.

Kotler, Philip and Gary Armstrong. 1999. “Marketing

Principles.” Parsaian, Ali, Tehran, Adabestan Jahan e

Nou Publication 1389.

Kritikos, Kyriakos et al. 2013. “A Survey on Service

Quality Description.” ACM Computing Surveys.

Kritikos, Kyriakos and Dimitris Plexousakis. 2009.

“Requirements for QoS-Based Web Service

Description and Discovery.” Pp. 320–37 in IEEE

Transactions on Services Computing, vol. 2. IEEE.

Lang, Michael, Manuel Wiesche, and Helmut Krcmar.

2016. “What Are the Most Important Criteria for Cloud

Service Provider Selection? A Delphi Study.” Pp. 1–17

in Twenty Fourth European Conference on Information

Systems (ECIS 2016). Istanbul: AIS Electronic Library.

Retrieved (http://aisel.aisnet.org/ecis2016_rp/119).

Li, Zheng, Liam O’Brien, and Rajiv Ranjan. 2016. “Cloud Service

Evaluation.” Pp. 349–60 in Encyclopedia of Cloud

Computing. John Wiley & Sons, Ltd.

Liu, Yutu, Anne H. Ngu, and Liang Z. Zeng. 2004. “QoS

Computation and Policing in Dynamic Web Service

Selection.” in Proceedings of the 13th International

World Wide Web Conference on Alternate Track Papers

& Posters - WWW Alt. ’04.

Lopez, Marta. 2000. An Evaluation Theory Perspective of

the Architecture Tradeoff Analysis Method (ATAM).

Pittsburgh.

Sun, Mingrui, Tianyi Zang, Xiaofei Xu, and Rongjie Wang.

2013. “Consumer-Centered Cloud Services Selection

Using AHP.” Pp. 1–6 in 2013 International Conference

on Service Sciences (ICSS). Shenzhen: IEEE. Retrieved

(http://ieeexplore.ieee.org/document/6519752/).

Mobedpour, Delnavaz and Chen Ding. 2013. “User-

Centered Design of a QoS-Based Web Service

Selection System.” Service Oriented Computing and

Applications 7(2):117–27.

Norwood, Janet et al. 2006. Off-Shoring: An Elusive

Phenomenon. National Academy of Public

Administration.

Repschlaeger, Jonas, Ruediger Zarnekow, Stefan Wind,

and Klaus Turowski. 2012. “Cloud Requirement

Framework: Requirements and Evaluation Criteria to

Adopt Cloud Solutions.” 20th European Conference on

Information Systems.

Sathya, M. and M. Swarnamugi. 2010. “Evaluation of QoS

Based Web-Service Selection Techniques for Service

Composition.” International Journal of Software

Engineering.

Shetty, Jyothi and Demian Antony D’Mello. 2015. “Quality

of Service Driven Cloud Service Ranking and Selection

Algorithm Using REMBRANDT Approach.” in 2015

International Conference on Smart Technologies and

Management for Computing, Communication,

Controls, Energy and Materials, ICSTM 2015 -

Proceedings.

Siegel, Jane and Jeff Perdue. 2012b. “Cloud Services

Measures for Global Use: The Service Measurement

Index (SMI).” in Annual SRII Global Conference, SRII.

Somu, Nivethitha, Kannan Kirthivasan, and Shankar

Sriram Shankar. 2017. “A Computational Model for

Ranking Cloud Service Providers Using Hypergraph

Based Techniques.” Future Generation Computer

Systems 68:14–30.

Sun, Le, Hai Dong, Farookh Khadeer Hussain, Omar

Khadeer Hussain, and Elizabeth Chang. 2014. “Cloud

Service Selection: State-of-the-Art and Future Research

Directions.” Journal of Network and Computer

Applications 45:134–50.

Sundareswaran, Smitha, Anna Squicciarini, and Dan Lin.

2012. “A Brokerage-Based Approach for Cloud Service

Selection.” Pp. 558–65 in Proceedings - 2012 IEEE 5th

International Conference on Cloud Computing,

CLOUD 2012. Honolulu: IEEE.

Verein Kompetenznetzwerk Trusted Cloud eV. 2016.

Criteria Catalogue for Cloud Services. Retrieved

(https://www.trusted-cloud.de/sites/default/files/trusted_cloud_kriterienkatalog_v1_1_en.pdf).

Wang, Shangguang, Zhipiao Liu, Qibo Sun, Hua Zou, and

Fangchun Yang. 2014. “Towards an Accurate

Evaluation of Quality of Cloud Service in Service-

Oriented Cloud Computing.” Journal of Intelligent

Manufacturing 25(2):283–91.

Whaiduzzaman, Md et al. 2014. “Cloud Service Selection

Using Multicriteria Decision Analysis.” The Scientific

World Journal 2014:10.

Wibowo, S. and H. Deng. 2016. “Evaluating the

Performance of Cloud Services: A Fuzzy Multicriteria

Group Decision Making Approach.” Pp. 327–32 in

2016 International Symposium on Computer,

Consumer and Control (IS3C).

Wirtz, Jochen and Christopher Lovelock. 2016. Services

Marketing. World Scientific Publishing Company.

Zheng, Zibin, Xinmiao Wu, Yilei Zhang, Michael R. Lyu,

and Jianmin Wang. 2013. “QoS Ranking Prediction for

Cloud Services.” IEEE Transactions on Parallel and

Distributed Systems 24(6):1213–22.

CLOSER 2019 - 9th International Conference on Cloud Computing and Services Science
