A Taxonomy for Enterprise Architecture Analysis Research

Amanda Oliveira Barbosa¹, Alixandre Santana¹, Simon Hacks² and Niels von Stein²

¹UFRPE, Recife, Brazil

²Research Group Software Construction, RWTH Aachen University, Aachen, Germany

Keywords:

Enterprise Architecture, Analysis, Model, Research Evaluation, Taxonomy.

Abstract:

Enterprise Architecture (EA) practitioners and researchers have put a lot of effort into formalizing EA model representation by defining sophisticated frameworks and meta-models. Because EA modelling is a costly and time-consuming effort, it is reasonable for organizations to expect to extract value from EA models in return. Due to the plethora of models, techniques, and stakeholder concerns in the literature, choosing an analysis approach might be challenging when no guidance is provided. Even worse, without a systematization of the analysis techniques, and given the inefficient dissemination of practices and results, analysis efforts might be designed redundantly. This paper takes an important step toward overcoming these issues by screening the existing EA analysis literature and defining a taxonomy to classify EA research according to its analysis concerns, analysis techniques, and modelling languages. When tested against a further set of 46 papers collected from the literature, the proposed taxonomy showed significant coverage. Our work thus identifies and systematizes the state of the art of EA analysis and, further, establishes a common language for researchers, tool designers, and EA subject matter experts.

1 INTRODUCTION

The continuous establishment of Enterprise Architecture (EA) techniques as a means to model a holistic representation of corporate structures, processes, and Information Technology (IT) infrastructure still attracts many researchers today (Aier et al., 2008; Saint-Louis and Lapalme, 2016). While themes like EA frameworks, modelling languages, and Enterprise Architecture Management (EAM) are reasonably well represented, EA analysis, a fundamental practice in EAM, has received much less attention from the research community.

EA analysis is based on data collected from models and documents. EA modelling itself is a costly and time-consuming effort; therefore, organizations expect to extract value from those EA models in return (Välja, 2018). EA analysis enables informed decisions and plays a crucial role in projects because it helps manage project complexity and makes it possible to compare architecture alternatives (Manzur et al., 2015b).

To date, there is a plethora of analysis paradigms, such as ontology-based (Bakhshadeh et al., 2014), probabilistic network analysis (Johnson et al., 2014), and network theory (anonymous, 201x), which use several types of EA models based on OWL-DL, ArchiMate, graphs, and so on. Each analysis supports a different analysis concern; thus, a sound evaluation of the architecture requires different kinds of analyses (Rauscher et al., 2017).

Despite the importance of EA analysis, EA practitioners and researchers lack an overall, shared, and acknowledged understanding of EA analysis techniques. Little research on mechanisms to classify, compare, or organize existing EA analysis research can be found. As a consequence, choosing an analysis approach might be challenging when little guidance is provided. Even worse, without a systematization of the analysis techniques, and given the inefficient dissemination of practices and results, analysis efforts might be designed redundantly.

Barbosa, A., Santana, A., Hacks, S. and von Stein, N.
A Taxonomy for Enterprise Architecture Analysis Research.
DOI: 10.5220/0007692304930504
In Proceedings of the 21st International Conference on Enterprise Information Systems (ICEIS 2019), pages 493-504
ISBN: 978-989-758-372-8
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

We take an important step in that direction by deriving a taxonomy to classify analysis research according to its layers, analysis concerns, analysis techniques, and modelling languages. We also evaluate the proposed taxonomy against recent EA analysis research. In doing so, we create foundational elements that aim to foster the development of this research field and to establish alignment among researchers, tool designers, and EA subject matter experts. Therefore, in this paper, we answer the following question:

RQ: How can EA analysis research be classified according to its analysis concerns, techniques, and modelling languages?

The next section presents the key concepts involved in this research and gives insights into the related literature. Section 3 elaborates in detail on the approach used to answer the research question. The taxonomy is presented in Section 4, and Section 5 discusses the results. Finally, Section 6 gives our final considerations and directions for further work.

2 KEY CONCEPTS

2.1 Enterprise Architecture, Layers and Models

According to Kotusev, Singh, and Storey (Kotusev et al., 2015): “EA is a description of an enterprise from an integrated business and IT perspective”. However, EA is more comprehensive when it is presented from different perspectives at different layers of abstraction (Ahlemann et al., 2012). According to TOGAF (Haren, 2011): “EA is a system formed by four subsystems, namely Business, Data/Information, Application, and Infrastructure (or Technology) Architecture”. ArchiMate 2.1 (Group, 2013) defines a motivational extension to include concerns regarding strategy and governance aspects (e.g., goals, principles, requirements, stakeholders, intentions). This particular layer, Value, aims to capture the factors that influence the architecture as a whole. Therefore, we consider these five layers (value, business, information, application, technology).

Across these layers, EA models are used as an abstraction of the structure of the enterprise in its current state (AS-IS models). They reveal possible alignment issues, ease communication, and can aid decision-making by predicting the behavior of future states (TO-BE models) without having to modify the systems in the current architecture (Buschle et al., 2010). EA models are tools for planning, for communicating, and, of course, for documenting (remembering) (Johnson, P., Lagerström, R., Ekstedt, M., & Österlind, 2012).

2.2 EA Analysis and Concerns

EA analysis is one of the most relevant functions in EAM, as it enables informed decision-making and plays a crucial role in projects (Matthes et al., 2008). This paper uses the definition suggested by (anonymous, 201x), which defines EA analysis as “the property assessment, based on models or other EA related data, to inform or bring rationality to decision support of stakeholders.”

The assessed property relates to an analysis concern (e.g., risk, business-IT alignment, or cost). Our definition of concern agrees with that of the Oxford Dictionary of English: “A matter of interest or importance to someone”. We consider the main objective of an analysis approach, such as cost, risk, or performance, to be its analysis concern.

2.3 Related Work

Past works have also tried to discuss and categorize analysis approaches. (Lankhorst, 2004) shows the variety of techniques and methods and analyses them according to the type of technique employed (analytical vs. simulation) and the type of result produced (quantitative vs. functional). (Buckl et al., 2009) perform their classification considering the different contexts of EA: academic research, practitioners, standardization bodies, and tool vendors (Manzur et al., 2015a). Their classification covers the following dimensions: body of analysis, time reference, analysis technique, analysis concern, and self-referentiality. Both classification proposals evaluate their framework by classifying published works.

Niemann (Niemann, 2006), in contrast, describes different types of analysis according to the object under investigation (dependency, coverage, interface, heterogeneity, complexity, compliance, cost, and benefit) and discusses each one separately, although this discussion is not based on a broad sample of studies. Andersen and Carugati (Andersen and Carugati, 2014) shed light on the main focus of the papers they analyzed (business, technical, or financial), their approaches' outcomes (model, measurement, method), and which elements their techniques evaluate (architecture, IT projects, and IT initiatives; services and applications; business elements). However, this classification is still superficial in light of the plurality of methods, techniques, and concerns related to EA analysis. (anonymous, 201x) designed a meta-model to characterize network analysis initiatives, based on 74 works found through a systematic literature review (SLR), and classified the initiatives according to their analysis concern of interest and other information requirements. The current work, though, is not limited to network analysis techniques.

Hanschke provides “analysis patterns” and defines two dimensions for the classification of analysis approaches: analysis function and architecture sub-model (Hanschke, 2009). Regarding the analysis functions, the following possibilities are proposed: discovery of potential redundancies, discovery of potential inconsistencies, needs for organizational changes, implementation of business goals, optimization, and required changes on the technical and infrastructure layer. Similarly to our research, that work identifies the Business, Information Systems, Technical, and Infrastructure Layers as targets for the analysis approaches.

ICEIS 2019 - 21st International Conference on Enterprise Information Systems

Abdallah et al. (Abdallah et al., 2016) mapped the concepts measured in EA measurement research. Building on previous works, (Lantow et al., 2016) propose a more detailed, so-called EA classification framework and evaluate it using papers from published research, similarly to our research design.

(Rauscher et al., 2017) define requirements for EA analysis and use them to classify the various approaches into two categories: technical (according to their utilized techniques and requirements for execution) and functional (according to their goals and provided results). The authors also propose a domain-specific language for EA analysis.

Similarly to (Lankhorst, 2004), (Buckl et al., 2009), (Lantow et al., 2016), and (Rauscher et al., 2017), we use the analysis technique and analysis concern dimensions in our taxonomy. However, our approach differs from previous works because we expand the classification of EA analysis research to also include modelling language and EA layer categories. A further difference from (Lantow et al., 2016) is that our search scope is broader than all previous ones, both regarding the query string and in terms of the time interval (the most recent EA analysis literature review was published in 2016). This is reflected, for instance, in the number of categories we found for each taxonomy dimension.

3 RESEARCH DESIGN

This is a qualitative and descriptive research split up

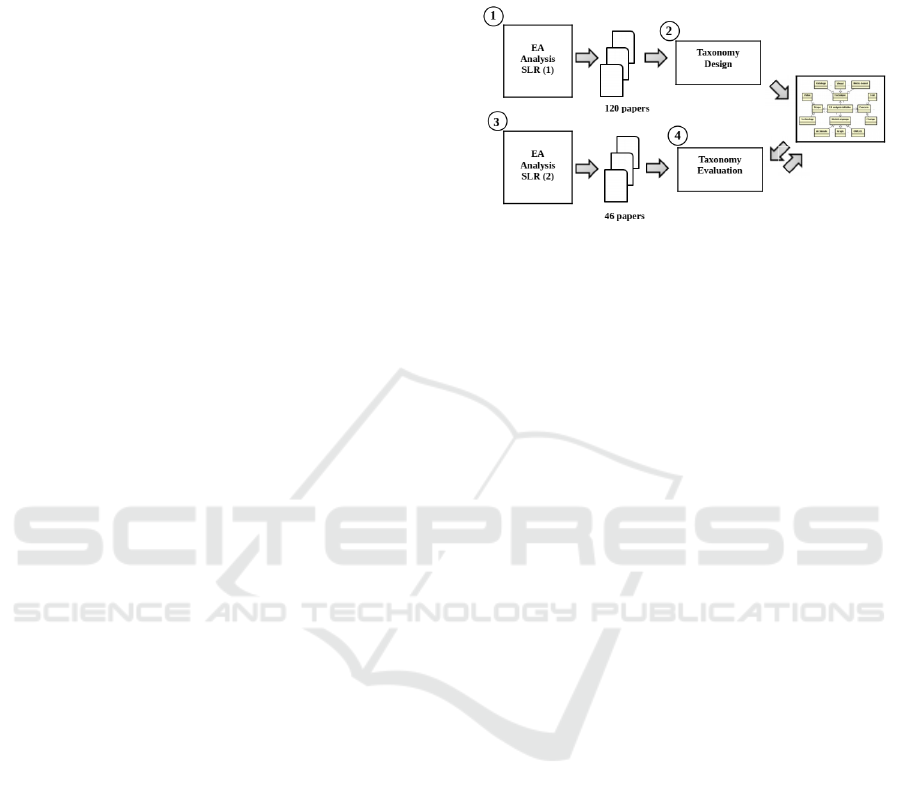

into four steps. First, we apply the SLR method ac-

cording to Kitchenham (Kitchenham, 2004) to gather

a set of papers related to EA analysis research (Step 1

in figure 1). Second, we perform a data categorization

(Cruzes and Dyba, 2011) to end up with a taxonomy

answering the question: “How to classify EA analysis

research according to its analysis concerns and mod-

elling languages?” (Step 2 in Figure 1). We obtained

a second dataset with papers published between 2016

and September 2018 (Step 3 in Figure 1). Finally, we

apply the taxonomy created in Step 2 in the evaluation

dataset (from Step 3) to evaluate and improve the tax-

onomy. Our research design is depicted in Figure 1.

The SLR steps are detailed in the next sections.

Figure 1: Research Design.

Research Query

The keyword design was intentionally generic, as it aimed for wide coverage of publications in the EA analysis field. The final string combined terms related to EA and its subsets, as used in the work of (Simon et al., 2013b), with terms related to “analysis”, such as goals, metrics, and evaluation, as listed by (Andersen and Carugati, 2014). Thus, our final string was:

("Enterprise architecture" OR "business architecture" OR
"process architecture" OR "information systems architecture" OR
"IT architecture" OR "IT landscape" OR "information architecture" OR
"data architecture" OR "application architecture" OR
"application landscape" OR "integration architecture" OR
"technology architecture" OR "infrastructure architecture") AND
(Goals OR concerns OR methods OR procedures OR approaches OR
analysis OR evaluate* OR assess* OR indicator OR method OR
measur* OR metric)
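The search string combines two OR-groups with AND and uses `*` as a truncation wildcard. As an illustration only, the following Python sketch applies the same logic to a single title or abstract. It assumes case-insensitive, whole-word matching without stemming (so `evaluate*` matches "evaluated" but not "evaluation"); the actual engines each apply their own query syntax and field rules, and the names `EA_TERMS`, `ANALYSIS_TERMS`, and `matches_query` are hypothetical.

```python
import re

# Hypothetical re-encoding of the two OR-groups of the search string.
EA_TERMS = [
    "enterprise architecture", "business architecture", "process architecture",
    "information systems architecture", "it architecture", "it landscape",
    "information architecture", "data architecture", "application architecture",
    "application landscape", "integration architecture", "technology architecture",
    "infrastructure architecture",
]
ANALYSIS_TERMS = [
    "goals", "concerns", "methods", "procedures", "approaches", "analysis",
    "evaluate*", "assess*", "indicator", "method", "measur*", "metric",
]


def term_to_regex(term):
    """Compile a term; a trailing '*' is treated as a truncation wildcard."""
    if term.endswith("*"):
        body = re.escape(term[:-1]) + r"\w*"
    else:
        body = re.escape(term)
    # Word boundaries prevent matches inside longer words; case is ignored.
    return re.compile(r"\b" + body + r"\b", re.IGNORECASE)


EA_PATTERNS = [term_to_regex(t) for t in EA_TERMS]
ANALYSIS_PATTERNS = [term_to_regex(t) for t in ANALYSIS_TERMS]


def matches_query(text):
    """(any EA term) AND (any analysis term), mirroring the search string."""
    has_ea = any(p.search(text) for p in EA_PATTERNS)
    has_analysis = any(p.search(text) for p in ANALYSIS_PATTERNS)
    return has_ea and has_analysis
```

For instance, `matches_query("Assessing the IT landscape")` is true, while a title containing none of the EA terms is rejected regardless of its analysis vocabulary.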

Inclusion and Exclusion Criteria

The inclusion criteria covered papers containing techniques, methods, or any initiative to evaluate EA, e.g., papers that use EA as input for decision-making or papers that analyze EA itself, its changes, and its evolution. We did not include papers written in languages other than English, papers related to product architecture analysis or the internal architecture of software, papers containing only modelling approaches, or papers that do not analyze EA itself but instead relate EA as a whole organizational function to an organizational variable (e.g., organizational performance). Literature reviews about EA (secondary studies) and papers discussing analysis approaches without performing any analysis were also excluded from the study.


Used Engines

We selected the main engines/databases used in the information systems community as our data sources for primary studies: Scopus, IEEE, ScienceDirect, ISI Web of Knowledge, and the AIS Electronic Library (AISEL). Duplicates were removed. Table 1 presents the results returned by each engine.

Table 1: Results by engine for the two time intervals of our SLR.

| Engine | Time interval 1 | Time interval 2 |
|---|---|---|
| IEEE | 1,762 | 358 |
| ScienceDirect | 832 | 623 |
| Scopus | 3,439 | 949 |
| AISEL | 25 | 0 |
| ISI | 1,162 | no access |
| Total (duplicates removed) | 5,174 | 1,076 |

Screening Phases

The SLR was performed over two time intervals. The first one (Step 1 of our research design) covers papers published until 2015. Using the data extracted from those papers, we applied the data categorization to derive our taxonomy's constructs. The second interval (related to Step 3 of our research design) encompasses papers published from 2016 to September 2018.

Applying the inclusion and exclusion criteria above, our screening process was divided into three rounds for each of the two time intervals. For the first interval, during the first round, we read the titles and abstracts of 7,220 primary studies returned by the engines. In the next round, the reading focused on the introduction and conclusion sections of the 803 remaining papers. Finally, the resulting 183 papers were read completely, yielding a set of 120 final papers. For the second interval (2016 to September 2018), we applied the same screening strategy: the first round had 1,076 titles and abstracts to read, the second 168 introductions and conclusions, and the third 65 full papers, resulting in a final dataset of 46 papers. The papers were selected according to the inclusion and exclusion criteria and according to their availability to the authors. We used the papers from this second set to validate the produced taxonomy.
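The reported counts make it easy to check how selective each round was. The short Python sketch below simply re-enters the figures given above (the dictionary names are our own) and computes the share of papers retained per round and overall. It also checks that the per-engine counts for interval 1 in Table 1 add up to the 7,220 records read in the first round, which suggests that this round started from the count before duplicate removal.

```python
# Counts per screening round: titles/abstracts, introductions/conclusions,
# full texts, final set (figures as reported in the text).
FUNNELS = {
    "interval 1 (until 2015)": [7220, 803, 183, 120],
    "interval 2 (2016 to Sep. 2018)": [1076, 168, 65, 46],
}

# Per-engine results for interval 1 as listed in Table 1.
TABLE1_INTERVAL1 = {"IEEE": 1762, "ScienceDirect": 832, "Scopus": 3439,
                    "AISEL": 25, "ISI": 1162}


def retention(counts):
    """Return the fraction kept in each round and the overall fraction kept."""
    per_round = [after / before for before, after in zip(counts, counts[1:])]
    overall = counts[-1] / counts[0]
    return per_round, overall


# The first-round figure equals the sum of the per-engine counts.
assert sum(TABLE1_INTERVAL1.values()) == FUNNELS["interval 1 (until 2015)"][0]

for name, counts in FUNNELS.items():
    per_round, overall = retention(counts)
    rounds = ", ".join(f"{r:.1%}" for r in per_round)
    print(f"{name}: per round {rounds}; overall {overall:.2%}")
```

Under these figures, roughly 1.7% of the screened records in interval 1 and about 4.3% in interval 2 reached the final sets.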

Data Categorization

We screened the 120 papers from the first dataset to identify common dimensions related to EA analysis. Considering our research goals, this resulted in four dimensions: EA Scope, Analysis Technique, Analysis Concern, and Modelling Language.

To put the coding into practice, we follow the inductive approach of Cruzes and Dyba (Cruzes and Dyba, 2011). We review the data line by line in detail, and as a value becomes apparent, a code is assigned. To ascertain whether a code is appropriately assigned, we compare text segments to segments that have previously been assigned the same code and decide whether they reflect the same value. This leads to continuous refinement of the dimensions of existing codes and to the identification of new ones (Cruzes and Dyba, 2011). This process does not necessarily follow a linear order; rather, it is iterative and dynamic. In the next section, we present the proposed taxonomy.

4 EA ANALYSIS TAXONOMY

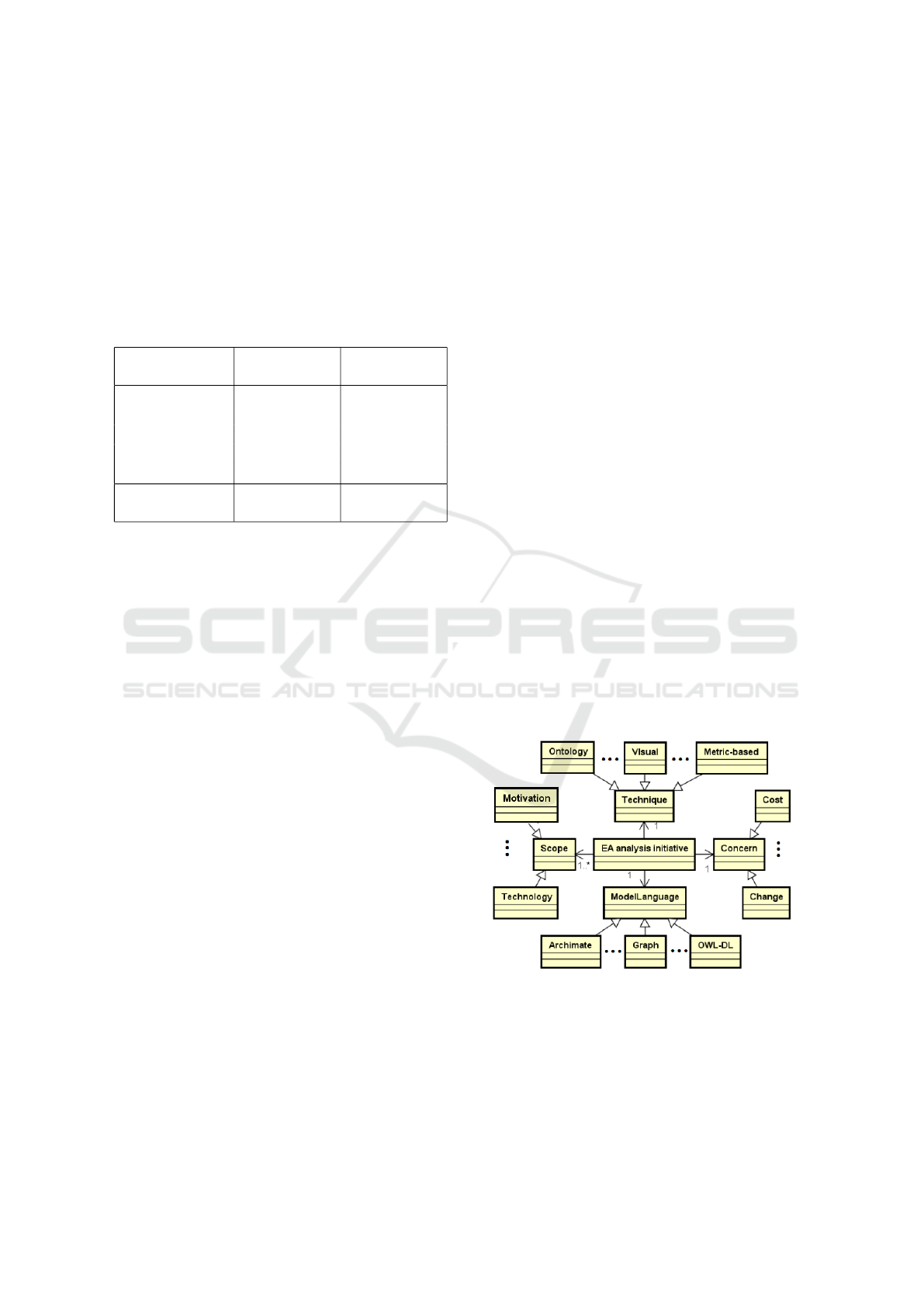

The taxonomy has four main dimensions: EA Scope,

Analysis Concern, Analysis Technique, and mod-

elling Language, depicted in Figure 2. The dots be-

tween the entities represent additional categories hid-

den due to space reasons although described in the

following paragraphs.

Figure 2: Proposed Taxonomy.
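Because each dimension can take several values for the same paper, a classification record is naturally a set per dimension. The following Python sketch shows one possible way to encode the taxonomy for tool support; it is our illustration, not a tool from the study. `Scope`, `Classification`, and the constant names are hypothetical; the layer, concern-category, and modelling-language names are taken from Sections 4.1 to 4.3, and analysis techniques are kept as free-form strings for brevity.

```python
from dataclasses import dataclass, field
from enum import Enum


class Scope(Enum):
    """The five layers of the EA Scope dimension (Section 4.1)."""
    MOTIVATION = "Motivation"
    BUSINESS = "Business"
    PROCESS = "Process"
    APPLICATION = "Application"
    TECHNOLOGY = "Technology"


# Category names of the Analysis Concern dimension (Section 4.2).
CONCERN_CATEGORIES = frozenset({
    "Actor Aspects", "Application Portfolio Analysis", "Best Practice",
    "Cost Analysis", "EA Alignment", "EA Change", "EA Decisions",
    "EA Governance", "Information Dependence of an Application",
    "Model Consistency", "Performance", "Risk", "Strategy Compliance",
    "Structural Aspects", "Traceability",
})

# Category names of the Modelling Language dimension (Section 4.3).
MODELLING_LANGUAGES = frozenset({
    "ArchiMate-based", "Combined Models", "DoDAF models",
    "Formal Specification Based", "Graphs", "Intentional modelling",
    "Own", "Probabilistic networks based", "UML based",
})


@dataclass
class Classification:
    """One paper classified along the four dimensions.

    Every dimension is a set because a paper may target several layers,
    address several concerns, combine techniques, or use several models.
    """
    paper: str
    scopes: set = field(default_factory=set)
    concerns: set = field(default_factory=set)
    techniques: set = field(default_factory=set)  # free-form in this sketch
    languages: set = field(default_factory=set)

    def __post_init__(self):
        # Scope values must be members of the Scope enumeration.
        if not all(isinstance(s, Scope) for s in self.scopes):
            raise TypeError("scopes must contain Scope members")
        # Concern and language values must come from the closed category lists.
        unknown = (self.concerns - CONCERN_CATEGORIES) | (
            self.languages - MODELLING_LANGUAGES
        )
        if unknown:
            raise ValueError(f"values not in the taxonomy: {sorted(unknown)}")


# Usage with a made-up paper.
example = Classification(
    paper="hypothetical alignment study",
    scopes={Scope.BUSINESS, Scope.APPLICATION},
    concerns={"EA Alignment", "Structural Aspects"},
    techniques={"Structural analysis"},
    languages={"ArchiMate-based"},
)
```

Closed category lists let the record reject typos or unclassified values early, which is also where a new category (such as one found during evaluation) would surface.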

4.1 EA Scope Dimension

By investigating the architecture models, we observed that many papers perform their evaluation on rather specific components instead of on a whole model. Even when the authors introduce a case study with a comprehensive EA model, the evaluation considers only very specific parts of it, for example only the technical layer or the process layer (Veneberg et al., 2014; authors, 201x). In (Sousa et al., 2013), the authors used an EA model's visions and goals hierarchy for their evaluation, although the example dataset contains much more information. In this case, the relevant components of the EA model were the dependencies between visions and goals. In (Xavier et al., 2017; Antunes et al., 2015; Oussena and Essien, 2013), even larger components, spanning multiple layers of the EA model, were used.

Our analysis shows that work related to EA models adheres to the well-known layered structure, e.g., as defined in TOGAF (The Open Group, 2011) or by Winter and Fischer (Winter and Fischer, 2006). Accordingly, our EA Scope dimension is composed of the following well-known layers:

• Motivation - Since the publication of (Winter and Fischer, 2006), frameworks have started to offer the opportunity to model the motivation or purpose of the organization (cf. ArchiMate 3.0.1 (The Open Group, 2017)). Additionally, recent research stresses the need for modelling the business motivation (Sousa et al., 2013; Timm et al., 2017). Therefore, we opt for a separate motivational scope, even though it is at most implicitly included in the business layer of Winter and Fischer (Winter and Fischer, 2006).

• Business - This represents the fundamental corporate structure as well as any relationships between actors or processes of the business architecture (Winter and Fischer, 2006).

• Process - This layer represents “the fundamental organization of service development, service creation, and service distribution in the relevant enterprise context” (Winter and Fischer, 2006).

• Application - Since we observed no requirement for a deeper differentiation between integration architecture and software architecture, we merge the “Integration Architecture” and “Software Architecture” layers of Winter and Fischer (Winter and Fischer, 2006). Consequently, this layer represents an organization's enterprise services, application clusters, and software services.

• Technology - This layer represents the underlying IT infrastructure (Winter and Fischer, 2006).

4.2 Analysis Concern Dimension

We define concerns as relevant interests that pertain to system development, its operation, or other aspects important to stakeholders (ISO et al., 2011). Since an approach may address more than one concern at a time, several papers are classified with more than one concern (e.g., (Simon et al., 2013a; Vasconcelos et al., 2004)). According to our research results, the Analysis Concern dimension consists of 55 concerns, grouped into fifteen categories:

• Actor Aspects - This category covers papers dealing with actors' relations to business processes and goals and with the impact of EA changes on them, e.g., the organization's impact on the motivation and learning of employees (Närman et al., 2016).

• Application Portfolio Analysis - This category covers analyses of why certain applications are better liked and more widely used than others, and what this means for the EA (Närman et al., 2012).

• Best Practice - Papers elaborating on the value of best practice analysis establish EA patterns or evaluate real-world EAs with respect to EA patterns (Ernst, 2008; Langermeier et al., 2014; Österlind et al., 2012).

• Cost Analysis - Papers related to the value of cost analysis are manifold. For example, they estimate or assess the cost of the current IT architecture (Francalanci and Piuri, 1999) or determine the ROI (Return on Investment) of EA (Rico, 2006). Another facet relates to the costs of changing components of the EA (Lagerström et al., 2010, p. 440; Simon et al., 2013a, p. 25).

• EA Alignment - Papers related to EA alignment include, for instance, those on EA redundancy. These papers identify redundancies and eliminate unplanned ones (Castellanos et al., 2011, p. 118). Additionally, there are papers promoting alignment between layers (Boucher et al., 2011).

• EA Change - This value covers concerns related to modifications of the current EA. Research related to this value elaborates, for example, on the consequences of changes or the choice between scenarios, or performs gap analysis.

• EA Decisions - This value covers approaches related to the decision-making process itself. For example, it relates to the rationale behind decisions, stakeholders' influence on the decision-making process, or methods to evaluate alternatives (Plataniotis et al., 2013; Plataniotis et al., 2014).

• EA Governance - Research related to EA Governance evaluates EA from a strategic viewpoint, comprising the analysis of EA's overall quality and its function. This value includes works dealing with EA effectiveness, EA data quality, EA documentation, or metrics monitoring (Davoudi and Aliee, 2009; Capirossi and Rabier, 2013).

• Information Dependence of an Application - This category evaluates the dependencies of applications within the EA, helping CIOs manage their application landscape and eliminate redundancies (Addicks, 2009).

• Model Consistency - This value evaluates the integrity of EA models and their consistency over time and throughout the organization's evolution (Bakhshadeh et al., 2014; Florez et al., 2014b).

• Performance - This value is concerned with specific measures of performance, e.g., EA component performance, business performance, or system quality (Garg et al., 2006; Närman et al., 2008).

• Risk - Papers related to the value of risk elaborate on different aspects: the risk of component failure and its consequences, information security as a whole, EA project risks, or EA implementation risks (Garg et al., 2006; Grandry et al., 2013).

• Strategy Compliance - Research on Strategy Compliance analyses whether EA decisions, EA projects, models, and their structure comply with the organization's strategy (Plataniotis et al., 2015a; Subramanian et al., 2006).

• Structural Aspects - This value covers the analysis of how components are organized, the relations among the components and their emergent complexity, possible ripple effects, clustering issues, and positional values in the structure (Aier, 2006b; Lee et al., 2014).

• Traceability - This value represents the need to query or track components that are connected/linked to a particular component and/or have specific attribute values.
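Because a paper can address several concerns, counting papers per concern category (as in the discussion of Figure 4) needs a rule for multi-label papers. The Python sketch below assumes one such rule: a paper counts once for every category it touches, however many concerns it has within that category. The concern labels, the `CONCERN_TO_CATEGORY` mapping, and the sample papers are hypothetical illustrations, not data from the study.

```python
from collections import Counter

# Illustrative concern-to-category mapping. The concern labels are examples
# paraphrased from the category descriptions, not the full list of concerns.
CONCERN_TO_CATEGORY = {
    "redundancy": "EA Alignment",
    "layer alignment": "EA Alignment",
    "gap analysis": "EA Change",
    "change consequences": "EA Change",
    "ROI": "Cost Analysis",
    "component failure": "Risk",
}


def papers_per_category(papers):
    """Count each paper at most once per concern category.

    `papers` maps a paper identifier to the concerns it addresses. Two concerns
    from the same category add 1 to that category; concerns from two categories
    add 1 to each, so the totals can exceed the number of papers.
    """
    counts = Counter()
    for concerns in papers.values():
        # Deduplicate categories per paper before counting.
        counts.update({CONCERN_TO_CATEGORY[c] for c in concerns})
    return counts


# Three made-up papers.
sample = {
    "P1": ["redundancy", "layer alignment"],
    "P2": ["gap analysis", "ROI"],
    "P3": ["component failure", "redundancy"],
}
counts = papers_per_category(sample)
```

With the three sample papers, EA Alignment is counted twice (P1 and P3) and the category totals sum to five, so percentages computed this way can add up to more than 100%.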

4.3 Modelling Language Dimension

In some papers, the proposed method relies on certain properties introduced by specific frameworks (Xavier et al., 2017; Oussena and Essien, 2013). Others require EA models but attach less importance to the actual meta-model, or require models that follow less formalized or more general meta-models (authors, 201x). Researchers may therefore require model data to follow a specific conceptual format, which is captured by the third dimension, Modelling Language. Here, conceptual format serves as a generic term for meta-model or framework.

We identified several modelling approaches, some already existing, others created by the authors to suit their specific analysis approach. We categorized the modelling techniques into nine values of the dimension. Due to space limitations, we present the full description only for the four main categories, which represent 80% of all classified papers. The other five categories are DoDAF models, Probabilistic networks based, Intentional modelling, Formal Specification Based, and UML based.

• ArchiMate-based - All research modeled with ArchiMate is classified within this value. Three main subcategories can be distinguished: first, papers applying ArchiMate (Plataniotis et al., 2015a; Davoudi and Aliee, 2009); second, papers extending ArchiMate (Grandry et al., 2013; Capirossi and Rabier, 2013); and finally, papers that explicitly use ArchiMate adapted or merged with other entities and attributes (Plataniotis et al., 2015b).

• Combined Models - This category comprises papers that use more than one model to perform their analysis, e.g., (Sunkle et al., 2014), which uses the Business Motivation Model (BMM) and Intentional modelling together with ArchiMate to evaluate whether and how business rules and goals comply with the organization's directives.

• Graphs - In this value, the EAs are modeled as graphs, with their components and relations represented by nodes and edges, respectively. Design structure matrices are also included because they are structurally equivalent to graphs. Examples can be found in (Garg et al., 2006; Aier, 2006a). A special sub-case of EA graph models comprises probabilistic relational models, influence diagrams, Bayesian networks, and fault tree analysis models. All these models apply uncertainty and probability principles in their modelling approaches (Österlind et al., 2012; Johnson et al., 2014).

• Own - In this value, we include papers that present their own EA modelling framework that cannot be classified into any of the other categories (Langermeier et al., 2014; Holschke et al., 2008).
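The Graphs value groups design structure matrices with graph models because both encode the same components and relations. The Python sketch below makes that equivalence concrete by converting a directed dependency list into a binary matrix and back. The component names are invented, and the row-depends-on-column orientation is an assumption, since DSM conventions differ between sources.

```python
def to_dsm(components, dependencies):
    """Build a binary design structure matrix (DSM) from a directed edge list.

    Convention assumed here: row i, column j holds 1 when components[i]
    depends on components[j]. Some sources use the transposed reading.
    """
    index = {name: i for i, name in enumerate(components)}
    size = len(components)
    dsm = [[0] * size for _ in range(size)]
    for source, target in dependencies:
        dsm[index[source]][index[target]] = 1
    return dsm


def to_edges(components, dsm):
    """Recover the edge set from the matrix; the round trip loses nothing."""
    return {
        (components[i], components[j])
        for i, row in enumerate(dsm)
        for j, cell in enumerate(row)
        if cell
    }


# Hypothetical four-component EA spanning several layers.
components = ["Order process", "CRM app", "Billing app", "DB server"]
dependencies = {
    ("Order process", "CRM app"),
    ("Order process", "Billing app"),
    ("CRM app", "DB server"),
    ("Billing app", "DB server"),
}
dsm = to_dsm(components, dependencies)
```

The first row of `dsm` is `[0, 1, 1, 0]` (the order process depends on both applications), and `to_edges(components, dsm)` returns the original dependency set, which is the sense in which the two representations are interchangeable.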

4.4 Analysis Technique Dimension

This dimension covers the techniques and methods used to perform EA analysis. We identified a plurality of different approaches; a large portion of them were proprietary, and many were poorly detailed, focusing on the results rather than on the analysis process. The results were classified into 22 categories according to their main characteristics: (Semi) Formalism based, Analytic Hierarchy Process (AHP), Architecture Theory Diagram (ATD) based, Axiomatic Design, Best practice conformance, BI, BITAM, Compliance analysis, Design Structure Matrix, EA Anamnesis, EA executable models, EA misalignment catalogue, Fuzzy based, Machine learning techniques, Mathematical functions, Metrics based, Multi-criteria analysis, Prescriptive models, Probabilistic based, Proprietary techniques, Structural analysis, and Visual analysis. About 70% of the studies corresponded to the following five values:

• (Semi) Formalism based - This value includes description languages, ontologies, set theory, and other formalisms. All these techniques try to take advantage of reasoning mechanisms to perform (semi-)automated analysis of the EA, for example through queries and through model consistency and restriction checks (Florez et al., 2014a; Langermeier et al., 2014).

• Metric-based - Analysis approaches that use several individual quantitative metrics to evaluate operational data from the components (e.g., performance, usage, workload) or from the overall EA (e.g., entropy) (Veneberg et al., 2014; Montino et al., 2007).

• Probabilistic-based - Cause and effect, uncertainty, and probabilistic events are concepts present in all variations of methods belonging to this category. Typical techniques are Bayesian networks, probabilistic Bayesian networks, extended influence diagrams, and fault-tree analysis. These are frequently used to analyze the performance of EA components (Österlind et al., 2012; Holschke et al., 2008).

• Structural Analysis - In this category, structural aspects of the overall EA or of specific layers are analyzed. Methods and techniques based on network theory are employed to identify critical points, clusters, or overall indexes for the EA structure (Wood et al., 2012; Dreyfus and Iyer, 2006).

• Visual Analysis - This category covers several techniques that use the power of visualization intrinsic to the models to extract valuable information for the experts. Typical concerns analyzed are the alignment between layers and the impact of changes or failures on the overall structure (Šaša and Krisper, 2011; Lee et al., 2014).
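Structural analysis draws on network theory to find critical points. One common graph-theoretic reading of a critical point is an articulation (cut) vertex: a component whose removal disconnects part of the architecture. The Python sketch below finds such vertices by brute force, removing each node in turn and recounting connected components with breadth-first search. It treats dependencies as undirected, uses invented component names, and the function names are hypothetical; the cited studies may define criticality differently, for example through centrality measures.

```python
from collections import deque


def count_components(nodes, adjacency):
    """Count connected components among `nodes` with breadth-first search."""
    seen, count = set(), 0
    for start in nodes:
        if start in seen:
            continue
        count += 1
        seen.add(start)
        queue = deque([start])
        while queue:
            current = queue.popleft()
            for neighbour in adjacency[current]:
                # Skip neighbours that were removed from the node set.
                if neighbour in nodes and neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
    return count


def articulation_points(edges):
    """Return the nodes whose removal increases the number of components."""
    adjacency = {}
    for a, b in edges:  # dependencies treated as undirected links
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    nodes = set(adjacency)
    baseline = count_components(nodes, adjacency)
    return {
        node
        for node in nodes
        if count_components(nodes - {node}, adjacency) > baseline
    }


# Hypothetical application and infrastructure dependencies.
ea_edges = [
    ("CRM app", "DB server"),
    ("Billing app", "DB server"),
    ("DB server", "Host"),
    ("Host", "Backup"),
]
critical = articulation_points(ea_edges)
```

Here the DB server and the host are reported, because removing either one splits the graph into several pieces. For large models, Tarjan's linear-time algorithm would replace the brute-force loop.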

The previous dimensions were defined as a result of the SLR described in Section 3. To assess the taxonomy, we updated the data through a new SLR (see Figure 1, Step 3) addressing papers published after the first interval and applied the taxonomy to its final dataset, containing 46 articles.

The papers in the new dataset addressed 26 concerns classified into 13 categories already present in the taxonomy, which indicates its good coverage. Of the 47 preexisting concerns, six were merged into three, and eight new concerns were mapped in the update (into the categories Actor aspects, Best practice analysis, EA Alignment, EA Change, Model consistency, and Structural aspects).

Regarding modelling approaches, 89.1% of the studies presented model-based analysis. Only two new values of modelling approaches were detected, one of which also resulted in a new category (DoDAF models). The papers in the dataset were classified into seven categories of the taxonomy; only one paper was not covered by the taxonomy's preexisting values, which again indicates its good coverage.
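The coverage argument above amounts to checking, for each dimension, how many papers can be classified using only the preexisting values. The Python sketch below expresses that check. The function names, the example values, and the choice to ignore papers without a value in the dimension (such as papers that do not use a model) are our assumptions, and the sample papers are invented.

```python
def coverage(paper_values, taxonomy_values):
    """Share of papers whose values in one dimension all exist in the taxonomy.

    Papers without any value in this dimension are left out of the ratio.
    """
    relevant = [values for values in paper_values.values() if values]
    if not relevant:
        raise ValueError("no paper has a value in this dimension")
    covered = sum(1 for values in relevant if values <= taxonomy_values)
    return covered / len(relevant)


def new_values(paper_values, taxonomy_values):
    """Values observed in the papers that the taxonomy does not yet contain."""
    observed = set().union(*paper_values.values())
    return observed - taxonomy_values


# Invented example for the Modelling Language dimension.
existing = {"ArchiMate-based", "Graphs", "UML based", "Own"}
papers = {
    "P1": {"Graphs"},
    "P2": {"ArchiMate-based", "UML based"},
    "P3": {"DoDAF models"},
    "P4": set(),  # paper without model-based analysis
}
ratio = coverage(papers, existing)
missing = new_values(papers, existing)
```

In the sample, three papers use a model and two of them fit the existing values, giving a coverage of 2/3; the uncovered value, "DoDAF models", is the kind of finding that would prompt a new category.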

Our first study resulted in a considerable number of different analysis techniques and methods, classified into 23 categories. When applying the results to the new dataset, we found 19 of those and five new categories, determined by specific approaches.

5 DISCUSSION

In the following, we present existing research classified by our taxonomy and discuss the resulting insights.

All the evaluated papers were covered by the five layers of the EA Scope dimension. Regarding the frequency of targeted EA scopes, most of the papers addressed more than one layer. Business and Application are the layers that received the most attention overall, addressed by 77% and 83% of the papers, respectively.

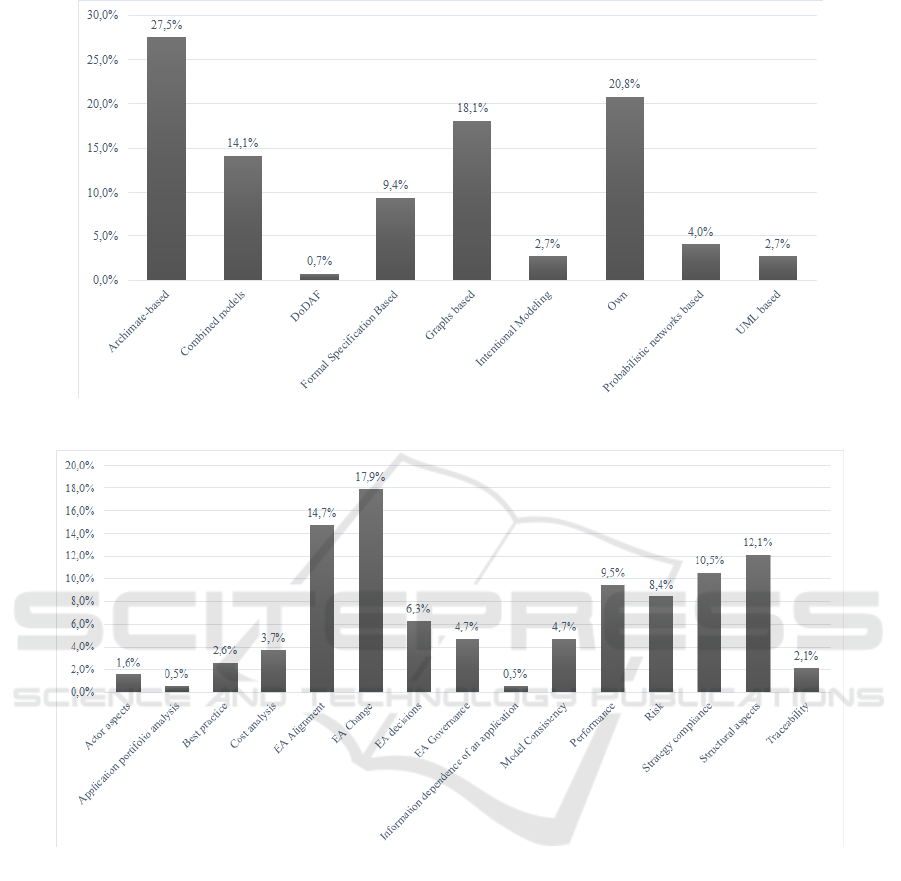

We identified about 22 different modelling approaches, divided into nine categories (ArchiMate-based, Combined models, DoDAF, Formal Specification-based, Graphs-based, Intentional modelling, Own, Probabilistic networks-based, and UML-based). The distribution of the studies from both SLRs across these modelling approach categories is depicted in Figure 3.

Even though ArchiMate-based and graph-based approaches account for a large share of the studies, 34.9% of the approaches used their own (proprietary) model or a combined model to perform their analysis. This plurality of modelling approaches reflects the lack of standardization of EA models and corroborates the claim that “there is no clear understanding of what information a good enterprise architectural model should contain” (Johnson et al., 2007).

Figure 3: Percentage of studies on each model category.

Figure 4: Number of studies per Concern category.

Our taxonomy defines 52 concerns, classified into 15 categories: Actor aspects, Application Portfolio Analysis, Best practice analysis, Cost analysis, EA Alignment, EA Change, EA Decisions, EA Governance, Information dependence of an application, Model consistency, Performance, Risk, Strategy Compliance, Structural aspects, and Traceability. Figure 4 shows the number of papers in each concern category.
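For tool designers, the category lists above can be encoded directly as a data structure. The sketch below is one possible encoding, not part of the original taxonomy definition: it assumes a paper is classified along the four dimensions used in this paper (EA scope, concern, analysis approach, modelling approach). The names `Classification` and `validate` are hypothetical; scope layers and analysis approaches are kept as free text because their full category lists are not enumerated in this section.

```python
from dataclasses import dataclass, field

# The 15 concern categories and 9 modelling-approach categories named in the text.
CONCERN_CATEGORIES = frozenset({
    "Actor aspects", "Application Portfolio Analysis", "Best practice analysis",
    "Cost analysis", "EA Alignment", "EA Change", "EA Decisions",
    "EA Governance", "Information dependence of an application",
    "Model consistency", "Performance", "Risk", "Strategy Compliance",
    "Structural aspects", "Traceability",
})
MODELLING_CATEGORIES = frozenset({
    "ArchiMate-based", "Combined models", "DoDAF", "Formal Specification-based",
    "Graphs-based", "Intentional modelling", "Own",
    "Probabilistic networks-based", "UML-based",
})


@dataclass
class Classification:
    """Hypothetical record classifying one paper along the taxonomy's dimensions."""
    paper: str
    scopes: set[str] = field(default_factory=set)      # EA layers, e.g. "Business" (free text)
    concerns: set[str] = field(default_factory=set)    # one or more concern categories
    modelling: set[str] = field(default_factory=set)   # modelling-approach categories
    techniques: set[str] = field(default_factory=set)  # analysis approaches (free text here)

    def validate(self) -> list[str]:
        """Return the concern/modelling values that fall outside the enumerated categories."""
        unknown = [f"concern: {c}" for c in sorted(self.concerns - CONCERN_CATEGORIES)]
        unknown += [f"modelling: {m}" for m in sorted(self.modelling - MODELLING_CATEGORIES)]
        return unknown


entry = Classification(
    paper="hypothetical-paper",
    scopes={"Business", "Application"},
    concerns={"EA Change", "Performance"},
    modelling={"ArchiMate-based"},
)
print(entry.validate())  # [] : every value maps to an existing category
```

Using sets for each dimension reflects that a single study may address several layers and concerns at once.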

Note that some studies addressed more than one concern in their analysis (e.g., the analysis in (Simon and Fischbach, 2013) covers eight different aspects of the Application scope). According to our results, EA analysis has focused on five main categories: EA Change, EA Alignment, Strategy Compliance, Performance, and Structural aspects, as shown in Figure 4. Papers covering these concerns make up 64.7% of the whole final set.
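Because a paper may address several concerns, per-category counts and per-paper shares behave differently: the bars of a per-category chart can sum to more than the number of papers, whereas a share of papers counts each paper once if it touches at least one selected category. The paper does not state which counting rule produced the 64.7% figure, so the following is only a sketch of the two rules; the function names and the four-paper toy data set are hypothetical.

```python
from collections import Counter


def category_counts(concerns_by_paper):
    """Papers per category; a multi-concern paper counts once in each of its categories."""
    return Counter(c for cats in concerns_by_paper.values() for c in set(cats))


def share_covering(concerns_by_paper, selected):
    """Fraction of papers that address at least one of the selected categories."""
    hits = sum(1 for cats in concerns_by_paper.values() if set(cats) & set(selected))
    return hits / len(concerns_by_paper)


# Hypothetical toy data set of four papers.
papers = {
    "P1": {"EA Change", "Performance"},
    "P2": {"Risk"},
    "P3": {"EA Alignment"},
    "P4": {"Cost analysis", "Traceability"},
}
top_five = {"EA Change", "EA Alignment", "Strategy Compliance",
            "Performance", "Structural aspects"}

counts = category_counts(papers)
print(sum(counts.values()))              # 6: exceeds the 4 papers
print(share_covering(papers, top_five))  # 0.5: P1 and P3, each counted once
```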

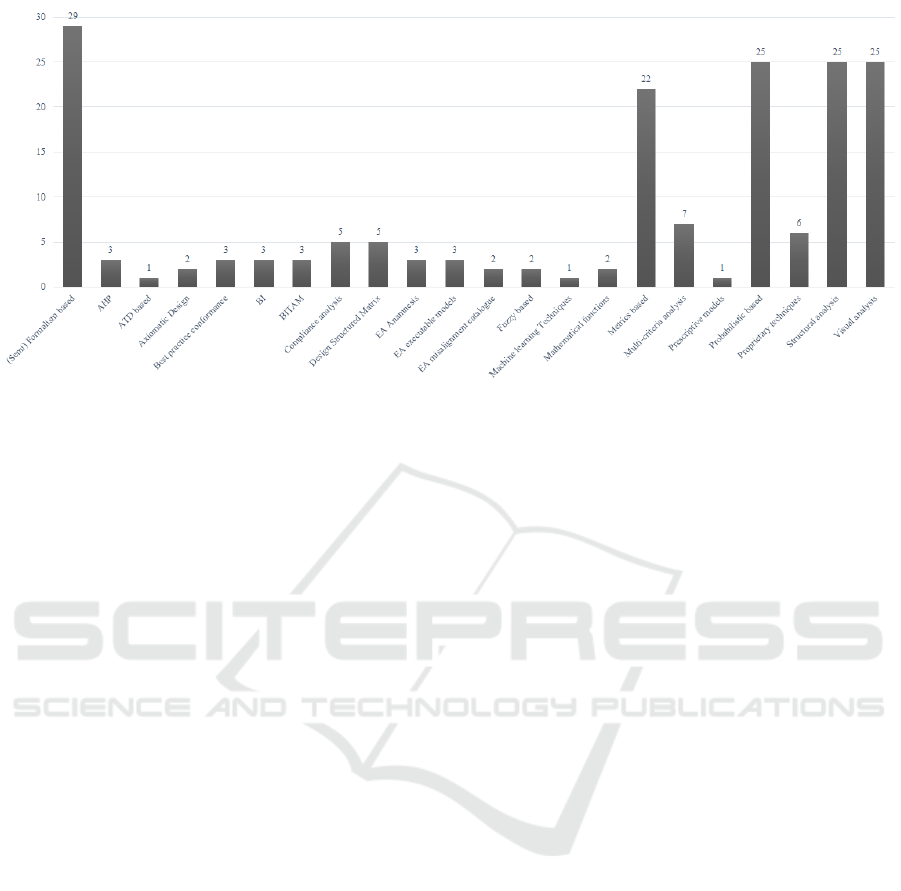

We identified a plurality of analysis approaches (i.e., techniques or methods), classified into 22 categories according to their main characteristics, as shown in Figure 5. A large portion of the approaches were proprietary, and some were so specific that we grouped them into a category of their own. Many approaches were poorly detailed, focusing on the results rather than on the analysis process.

ICEIS 2019 - 21st International Conference on Enterprise Information Systems

Figure 5: Number of studies per Analysis approaches category.

In our present literature review of EA analysis, covering both sets of papers, 57.5% of the works presented empirical data, while 28.7% used simulated data and 13.8% only theoretical data. Although several publications present empirical cases, some of them do not provide enough information about how the study was conducted and which benefits were obtained from the analysis approach (e.g., (Gmati et al., 2010)). On the one hand, this lack of information raises the issue of the reproducibility of methods, as some techniques require a specific set of data. Such data is not always available, because its owning organization classifies it as confidential (Gmati et al., 2010). On the other hand, the data set might be artificially created for a specific purpose because no data was publicly available; the created data set therefore might not be applicable to real-world scenarios (e.g., (Giakoumakis et al., 2012; Sundarraj and Talluri, 2003)). Although we found no empirical evidence on the degree to which EA research faces these issues, many examples of a fallback to artificial evaluation using exemplary data sets can be given (Franke, 2014; Sousa et al., 2013; Antunes et al., 2015; Xavier et al., 2017). In those cases, the developed artifact is typically evaluated under unrealistic conditions and produces results that do not hold in a realistic setting (Venable, 2006).

6 CONCLUSIONS

In this paper, we performed an SLR of EA analysis research, covering the models adopted, the analysis techniques, and the concerns analyzed. Grounded in those findings, we derived an initial taxonomy for EA analysis research to help researchers classify their work according to analysis scope, technique, concern, and modelling language. We validated the taxonomy's coverage with a second data set of 46 papers, which we consider to give a good perspective on the coverage of our taxonomy. In doing so, we also present the state of the art of EA analysis research initiatives. We believe that researchers can use our taxonomy as a conceptual reference to classify their research, and that tool designers can use this study to design EA analysis functionalities. The findings also show that EA analysis research employs very diverse EA models and concerns. Nevertheless, cases in which most EA layers were analyzed were rare.

As for limitations, we did not perform backward and forward searches. However, because of the broad coverage of our search string, we are confident that such additional searches would not have uncovered many more works. In our SLR, we did not perform a quality assessment of primary studies. We intentionally accepted all works that aimed to perform EA analysis, without strict quality criteria, in order to gain a broad understanding of the field and of the authors' purposes.

Future work may go in three main directions. First, because the taxonomy is not exhaustive, we may need to look especially at the work of (Lantow et al., 2016) to align all the categories created. Then, the taxonomy's dimensions may be further validated and refined with experts (e.g., by conducting a survey to include more real-world examples). Second, based on our systematized set of analysis initiatives, a web catalog may be designed to share past results and to stimulate the reuse of EA models (EA data) among researchers. This could boost empirical EA analysis research, as happened in areas such as machine learning, where standard shared databases such as the UC Irvine repository¹ allow researchers to apply their analysis approaches to common data. For example, the open models initiative² (Frank et al., 2007) goes in that direction, offering a collection of models together with a rough classification of them.

Third, further work is needed to investigate technical aspects such as model anonymization or model portability, in order to lower the barriers to EA model sharing. Since existing analysis specifications usually presuppose a specific structure of meta-models and models, it is very difficult to reuse them with organizational models that do not conform to the respective assumptions. Considerable effort is required to transform the actual EA model so that the analysis can be executed. Additionally, the respective meta-model does not state which concepts are actually used (Langermeier et al., 2014). A generic meta-model, such as the one studied in (Rauscher et al., 2017), could help here. Another option would be to focus on ArchiMate-based models, the de facto market standard for EA modelling.
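To make the anonymization aspect more concrete, one conceivable approach (an assumption, not a technique evaluated in this paper) replaces element names with keyed pseudonyms while keeping element types and relations intact, so that structural analyses still run on a shared model. The sketch uses Python's standard `hmac` and `hashlib` modules; the model layout (a list of named, typed elements plus a list of typed relations) and the function name `anonymize` are hypothetical.

```python
import hashlib
import hmac


def anonymize(elements, relations, key: bytes):
    """Replace element names with keyed pseudonyms, keeping types and relations.

    elements:  list of (name, element_type) pairs
    relations: list of (source_name, relation_type, target_name) triples
    The same name always maps to the same pseudonym under the same key, so the
    graph structure is preserved; without the key, the mapping cannot be
    recomputed by hashing guessed names.
    """
    def pseudonym(name: str) -> str:
        digest = hmac.new(key, name.encode("utf-8"), hashlib.sha256).hexdigest()
        return "E-" + digest[:12]  # 48-bit prefix; collisions are unlikely at model scale

    anon_elements = [(pseudonym(n), t) for n, t in elements]
    anon_relations = [(pseudonym(s), r, pseudonym(t)) for s, r, t in relations]
    return anon_elements, anon_relations


# Hypothetical fragment of an organizational model.
elements = [("Invoice Handling", "BusinessProcess"), ("SAP FI", "ApplicationComponent")]
relations = [("SAP FI", "serving", "Invoice Handling")]

anon_el, anon_rel = anonymize(elements, relations, key=b"keep-this-secret")
print(anon_el)
print(anon_rel)
```

Real EA models also carry documentation fields and properties that can reveal identities, so a practical anonymizer would need to treat those attributes as well.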

REFERENCES

Abdallah, A., Lapalme, J., and Abran, A. (2016). Enterprise architecture measurement: A systematic mapping study. In 2016 4th International Conference on Enterprise Systems (ES), pages 13–20.

Addicks, J. S. (2009). Enterprise architecture dependent application evaluations. In Digital Ecosystems and Technologies, 2009. DEST’09. 3rd IEEE International Conference on, pages 594–599. IEEE.

Ahlemann, F., Stettiner, E., Messerschmidt, M., and Legner, C. (2012). Strategic Enterprise Architecture Management: Challenges, Best Practices, and Future Developments. Management for Professionals. Springer Berlin Heidelberg.

Aier, S. (2006a). How clustering enterprise architectures helps to design service oriented architectures. In 2006 IEEE International Conference on Services Computing (SCC’06), pages 269–272.

Aier, S. (2006b). How Clustering Enterprise Architectures helps to Design Service Oriented Architectures. In 2006 IEEE International Conference on Services Computing (SCC’06).

Aier, S., Riege, C., and Winter, R. (2008). Classification of enterprise architecture scenarios – an exploratory analysis. Enterprise Modelling and Information Systems Architectures, 3(1):14–23.

Andersen, P. and Carugati, A. (2014). Enterprise architecture evaluation: a systematic literature review. In MCIS, page 41.


¹ https://archive.ics.uci.edu/ml/index.php

² http://www.openmodels.org/

Antunes, G., Barateiro, J., Caetano, A., and Borbinha, J. L. (2015). Analysis of federated enterprise architecture models. In ECIS.


Bakhshadeh, M., Morais, A., Caetano, A., and Borbinha, J. (2014). Ontology Transformation of Enterprise Architecture Models. In Camarinha-Matos, L. M., Barrento, N. S., and Mendonça, R., editors, Technological Innovation for Collective Awareness Systems, pages 55–62, Berlin, Heidelberg. Springer Berlin Heidelberg.

Boucher, X., Chapron, J., Burlat, P., and Lebrun, P. (2011). Process clusters for information system diagnostics: An approach by Organisational Urbanism. Production Planning & Control, 22(1):91–106.

Buckl, S., Franke, U., Holschke, O., Matthes, F., Schweda, C. M., Sommestad, T., and Ullberg, J. (2009). A pattern-based approach to quantitative enterprise architecture analysis. AMCIS 2009 Proceedings, page 318.

Buschle, M., Ullberg, J., Franke, U., Lagerström, R., and Sommestad, T. (2010). A tool for enterprise architecture analysis using the PRM formalism. In Forum at the Conference on Advanced Information Systems Engineering (CAiSE), pages 108–121. Springer.

Capirossi, J. and Rabier, P. (2013). An Enterprise Architecture and Data Quality Framework. In Benghozi, P.-J., Krob, D., and Rowe, F., editors, Digital Enterprise Design and Management 2013, pages 67–79, Berlin, Heidelberg. Springer Berlin Heidelberg.

Castellanos, C., Correal, D., and Murcia, F. (2011). An ontology-matching based proposal to detect potential redundancies on enterprise architectures. In 2011 30th International Conference of the Chilean Computer Science Society, pages 118–126.

Cruzes, D. S. and Dybå, T. (2011). Recommended steps for thematic synthesis in software engineering. In 2011 International Symposium on Empirical Software Engineering and Measurement, pages 275–284.

Davoudi, M. R. and Aliee, F. S. (2009). Characterization of enterprise architecture quality attributes. In 2009 13th Enterprise Distributed Object Computing Conference Workshops, pages 131–137.

Dreyfus, D. and Iyer, B. (2006). Enterprise architecture: A social network perspective. In System Sciences, 2006. HICSS’06. Proceedings of the 39th Annual Hawaii International Conference on, volume 8, page 178a. IEEE.

Ernst, A. M. (2008). Enterprise Architecture Management Patterns. In Proceedings of the 15th Conference on Pattern Languages of Programs, PLoP ’08, pages 7:1–7:20, New York, NY, USA. ACM.

Florez, H., Sánchez, M., and Villalobos, J. (2014a). Extensible model-based approach for supporting automatic enterprise analysis. In Enterprise Distributed Object Computing Conference (EDOC), 2014 IEEE 18th International, pages 32–41. IEEE.

Florez, H., Sánchez, M., and Villalobos, J. (2014b). Extensible model-based approach for supporting automatic enterprise analysis. In 2014 IEEE 18th International Enterprise Distributed Object Computing Conference, pages 32–41.


Francalanci, C. and Piuri, V. (1999). Designing information technology architectures: A cost-oriented methodology. Journal of Information Technology, 14(2):181–192.

Frank, U., Strecker, S., and Koch, S. (2007). “Open Model” – ein Vorschlag für ein Forschungsprogramm der Wirtschaftsinformatik. In Wirtschaftsinformatik.

Franke, U. (2014). Enterprise architecture analysis with production functions. In Enterprise Distributed Object Computing Conference (EDOC), 2014 IEEE 18th International, pages 52–60. IEEE.

Garg, A., Kazman, R., and Chen, H.-M. (2006). Interface descriptions for enterprise architecture. Science of Computer Programming, 61(1):4–15.

Giakoumakis, V., Krob, D., Liberti, L., and Roda, F. (2012). Technological architecture evolutions of information systems: Trade-off and optimization. Concurrent Engineering, 20(2):127–147.

Gmati, I., Rychkova, I., and Nurcan, S. (2010). On the way from research innovations to practical utility in enterprise architecture: The build-up process. International Journal of Information System Modeling and Design (IJISMD), 1(3):20–44.

Grandry, E., Feltus, C., and Dubois, E. (2013). Conceptual Integration of Enterprise Architecture Management and Security Risk Management. In 17th IEEE International Enterprise Distributed Object Computing Conference Workshops, pages 114–123.

Group, O. (2013). ArchiMate 2.1 Specification. Van Haren Publishing.

Hanschke, I. (2009). Strategic IT Management: A Toolkit for Enterprise Architecture Management. Springer Berlin Heidelberg.

Haren, V. (2011). TOGAF Version 9.1. Van Haren Publishing, 10th edition.

Holschke, O., Närman, P., Flores, W. R., Eriksson, E., and Schönherr, M. (2008). Using enterprise architecture models and Bayesian belief networks for failure impact analysis. In International Conference on Service-Oriented Computing, pages 339–350. Springer.

ISO, IEC, and IEEE (01.12.2011). Systems and software engineering – Architecture description.

Johnson, P., Nordström, L., and Lagerström, R. (2007). Formalizing analysis of enterprise architecture. In Enterprise Interoperability, pages 35–44. Springer.

Johnson, P., Ullberg, J., Buschle, M., Franke, U., and Shahzad, K. (2014). An architecture modeling framework for probabilistic prediction. Information Systems and e-Business Management, 12(4):595–622.

Johnson, P., Lagerström, R., Ekstedt, M., and Österlind, M. (2012). IT Management with Enterprise Architecture.

Kitchenham, B. (2004). Procedures for performing systematic reviews. Keele, UK, Keele University, 33(2004):1–26.

Kotusev, S., Singh, M., and Storey, I. (2015). Consolidating enterprise architecture management research. In System Sciences (HICSS), 2015 48th Hawaii International Conference on, pages 4069–4078. IEEE.

Lagerström, R., Johnson, P., and Ekstedt, M. (2010). Architecture analysis of enterprise systems modifiability: A metamodel for software change cost estimation. Software Quality Journal, 18(4):437–468.

Langermeier, M., Saad, C., and Bauer, B. (2014). Adaptive approach for impact analysis in enterprise architectures. In International Symposium on Business Modeling and Software Design, pages 22–42. Springer.

Lankhorst, M. M. (2004). Enterprise architecture modelling – the issue of integration. Advanced Engineering Informatics, 18(4):205–216.

Lantow, B., Jugel, D., Wißotzki, M., Lehmann, B., Zimmermann, O., and Sandkuhl, K. (2016). Towards a classification framework for approaches to enterprise architecture analysis. In The Practice of Enterprise Modeling - 9th IFIP WG 8.1. Working Conference, PoEM 2016, Skövde, Sweden, November 8-10, 2016, Proceedings, pages 335–343.

Lee, H., Ramanathan, J., Hossain, Z., Kumar, P., Weirwille, B., and Ramnath, R. (2014). Enterprise architecture content model applied to complexity management while delivering IT services. In 2014 IEEE International Conference on Services Computing, pages 408–415.

Manzur, L., Ulloa, J. M., Sánchez, M., and Villalobos, J. (2015a). xArchiMate: Enterprise architecture simulation, experimentation and analysis. Simulation, 91(3):276–301.

Manzur, L., Ulloa, J. M., Sánchez, M., and Villalobos, J. (2015b). xArchiMate: Enterprise architecture simulation, experimentation and analysis. SIMULATION, 91(3):276–301.

Matthes, F., Buckl, S., Leitel, J., and Schweda, C. M. (2008). Enterprise architecture management tool survey 2008.

Montino, R., Fathi, M., Holland, A., Schmidt, T., and Peuser, H. (2007). Calculating risk of integration relations in application landscapes. In Electro/Information Technology, 2007 IEEE International Conference on, pages 210–214. IEEE.

Närman, P., Holm, H., Höök, D., Honeth, N., and Johnson, P. (2012). Using enterprise architecture and technology adoption models to predict application usage. Journal of Systems and Software, 85(8):1953–1967.

Närman, P., Johnson, P., and Gingnell, L. (2016). Using enterprise architecture to analyse how organisational structure impact motivation and learning. Enterprise Information Systems, 10(5):523–562.

Närman, P., Schönherr, M., Johnson, P., Ekstedt, M., and Chenine, M. (2008). Using Enterprise Architecture Models for System Quality Analysis. In Enterprise Distributed Object Computing Conference, 2008. EDOC ’08. 12th International IEEE, pages 14–23.

Niemann, K. D. (2006). From enterprise architecture to IT governance, volume 1. Springer.

Österlind, M., Lagerström, R., and Rosell, P. (2012). Assessing Modifiability in Application Services Using Enterprise Architecture Models – A Case Study. In Aier, S., Ekstedt, M., Matthes, F., Proper, E., and Sanz, J. L., editors, Trends in Enterprise Architecture Research and Practice-Driven Research on Enterprise Transformation, pages 162–181, Berlin, Heidelberg. Springer Berlin Heidelberg.


Oussena, S. and Essien, J. (2013). Validating enterprise architecture using ontology-based approach: A case study of student internship programme. In Proceedings of the 15th International Conference on Enterprise Information Systems - ICEIS, pages 302–309. IEEE.

Plataniotis, G., de Kinderen, S., Ma, Q., and Proper, E. (2015a). A conceptual model for compliance checking support of enterprise architecture decisions. In 2015 IEEE 17th Conference on Business Informatics, volume 1, pages 191–198.

Plataniotis, G., de Kinderen, S., Ma, Q., and Proper, E. (2015b). A conceptual model for compliance checking support of enterprise architecture decisions. In Business Informatics (CBI), 2015 IEEE 17th Conference on, volume 1, pages 191–198. IEEE.

Plataniotis, G., de Kinderen, S., and Proper, H. A. (2013). Capturing Decision Making Strategies in Enterprise Architecture – A Viewpoint. In Nurcan, S., Proper, H. A., Soffer, P., Krogstie, J., Schmidt, R., Halpin, T., and Bider, I., editors, Enterprise, Business-Process and Information Systems Modeling, pages 339–353, Berlin, Heidelberg. Springer Berlin Heidelberg.

Plataniotis, G., de Kinderen, S., and Proper, H. A. (2014). EA Anamnesis: An Approach for Decision Making Analysis in Enterprise Architecture. International Journal of Information System Modeling and Design (IJISMD), 5(3):75–95.

Rauscher, J., Langermeier, M., and Bauer, B. (2017). Characteristics of enterprise architecture analyses. pages 104–113.

Rico, D. F. (2006). A framework for measuring ROI of enterprise architecture. Journal of Organizational and End User Computing, 18(2):I.

Saint-Louis, P. and Lapalme, J. (2016). Investigation of the lack of common understanding in the discipline of enterprise architecture: A systematic mapping study. 2016 IEEE 20th International Enterprise Distributed Object Computing Workshop (EDOCW), pages 1–9.

Šaša, A. and Krisper, M. (2011). Enterprise architecture patterns for business process support analysis. Journal of Systems and Software, 84(9):1480–1506.

Simon, D. and Fischbach, K. (2013). IT landscape management using network analysis. In Enterprise Information Systems of the Future, pages 18–34. Springer.

Simon, D., Fischbach, K., and Schoder, D. (2013a). An Exploration of Enterprise Architecture Research. Communications of the Association for Information Systems, 32(1):1–72.

Simon, D., Fischbach, K., and Schoder, D. (2013b). An exploration of enterprise architecture research. CAIS, 32:1.

Sousa, S., Marosin, D., Gaaloul, K., and Mayer, N. (2013). Assessing risks and opportunities in enterprise architecture using an extended ADT approach. In Enterprise Distributed Object Computing Conference (EDOC), 2013 17th IEEE International, pages 81–90. IEEE.

Subramanian, N., Chung, L., and tae Song, Y. (2006). An NFR-based framework for establishing traceability between enterprise architectures and system architectures. In Seventh ACIS International Conference on Software Engineering, Artificial Intelligence, Networking, and Parallel/Distributed Computing (SNPD’06), pages 21–28.

Sundarraj, R. and Talluri, S. (2003). A multi-period optimization model for the procurement of component-based enterprise information technologies. European Journal of Operational Research, 146(2):339–351.

Sunkle, S., Kholkar, D., Rathod, H., and Kulkarni, V. (2014). Incorporating directives into enterprise to-be architecture. In Enterprise Distributed Object Computing Conference Workshops and Demonstrations (EDOCW), 2014 IEEE 18th International, pages 57–66. IEEE.

The Open Group (2011). TOGAF Version 9.1. Van Haren Publishing, Zaltbommel, 1st edition.

The Open Group (2017). ArchiMate 3.0.1 Specification.

Timm, F., Hacks, S., Thiede, F., and Hintzpeter, D. (2017). Towards a quality framework for enterprise architecture models. In Proceedings of the 5th International Workshop on Quantitative Approaches to Software Quality (QuASoQ 2017) co-located with APSEC, volume 4, page 1421, Nanjing, China.

Välja, M. (2018). Improving IT Architecture Modeling Through Automation: Cyber Security Analysis of Smart Grids. PhD thesis, KTH Royal Institute of Technology.

Vasconcelos, A., Pereira, C. M., Sousa, P. M. A., and Tribolet, J. M. (2004). Open issues on information system architecture research domain: The vision. In ICEIS (3), pages 273–282.

Venable, J. (2006). A framework for design science research activities. In Emerging Trends and Challenges in Information Technology Management: Proceedings of the 2006 Information Resource Management Association Conference, pages 184–187. Idea Group Publishing.

Veneberg, R., Iacob, M.-E., van Sinderen, M. J., and Bodenstaff, L. (2014). Enterprise architecture intelligence: combining enterprise architecture and operational data. In Enterprise Distributed Object Computing Conference (EDOC), 2014 IEEE 18th International, pages 22–31. IEEE.

Winter, R. and Fischer, R. (2006). Essential layers, artifacts, and dependencies of enterprise architecture. In Enterprise Distributed Object Computing Conference Workshops, 2006. EDOCW’06. 10th IEEE International, page 30. IEEE.

Wood, J., Sarkani, S., Mazzuchi, T., and Eveleigh, T. (2012). A framework for capturing the hidden stakeholder system. Systems Engineering, 16(3):251–266.

Xavier, A., Vasconcelos, A., and Sousa, P. (2017). Rules for validation of models of enterprise architecture - rules of checking and correction of temporal inconsistencies among elements of the enterprise architecture. In Proceedings of the 19th International Conference on Enterprise Information Systems - Volume 3: ICEIS, pages 337–344. INSTICC, SciTePress.
