Multi Low-resolution Infrared Sensor Setup for Privacy-preserving Unobtrusive Indoor Localization

Christian Kowalski¹, Kolja Blohm¹, Sebastian Weiss¹, Max Pfingsthorn¹, Pascal Gliesche¹ and Andreas Hein²

¹OFFIS, Institute for Information Technology, Oldenburg, Germany

²Carl von Ossietzky University, Oldenburg, Germany

Keywords: Indoor Localization, Multiple Infrared Sensors, Health Monitoring, Classification.

Abstract:

The number of home intensive care patients is increasing while, at the same time, the number of nursing staff is decreasing. To counteract this problem, it is necessary to take a closer look at safety-critical scenarios such as long-term home ventilation in order to provide relief. One possibility for support in this case is the exact localization of the affected person and the caregiver. A wide variety of sensors can be used for this purpose. Since the privacy of the patient should not be disturbed, it is important to find unobtrusive solutions. For this specific application, low-resolution infrared sensors can be used, which cannot invade the patient's privacy because their data contains so little information. The objective of this work is to create a basis for an inexpensive, privacy-preserving indoor localization system using multiple infrared sensors, which can, for example, be used to support long-term home ventilated patients. The results show that such localization is possible by using a support vector machine for classification. For the described scenario, a specific sensor layout was chosen to ensure the highest possible area coverage with a minimum number of sensor installations.

1 INTRODUCTION

Due to growing economic pressure, the number of domestic intensive care patients is increasing (Razavi et al., 2016), not least because of the aging of the world population (World Health Organization, 2015). The group of long-term home ventilated patients in particular is exposed to high risk, because even a single mistake can lead to the death of the patient. Assistive technologies can help to alleviate this risk and at the same time increase safety in such a safety-critical scenario (see Fig. 1). For this particular case, different monitoring solutions can be used to improve response time or to predict critical events in advance. Cameras offer good image quality and can cover large areas, but due to privacy concerns they have a low acceptance rate in domestic environments (Rapoport, 2012). Consequently, multiple unobtrusive sensors distributed in the apartment or house of elderly people represent an alternative to conventional cameras. They can provide valuable information to predict or detect frequent hazards such as falls (Mubashir et al., 2013) or less frequent hazards such as the absence of a caregiver during a respiratory device alarm in patients who need long-term home mechanical ventilation (Gerka et al., 2018).

Figure 1: Staging of a long-term home ventilation scenario. The patient is localized by the wall-mounted infrared sensor cluster for safety purposes. Due to the low resolution of the sensors, the patient's privacy is not invaded.

One example of such an unobtrusive, privacy-preserving sensor is the thermal infrared (IR) array sensor. This sensor is able to capture the temperature of a two-dimensional field with 8 × 8 pixels, even in darkness. In this paper, we make use of multiple cost-efficient Panasonic low-resolution thermopile IR array sensors with a 60° viewing angle, called Grid-EYE (AMG8833) (Panasonic, 2016), to perform indoor localization.

Kowalski, C., Blohm, K., Weiss, S., Pfingsthorn, M., Gliesche, P. and Hein, A.
Multi Low-resolution Infrared Sensor Setup for Privacy-preserving Unobtrusive Indoor Localization.
DOI: 10.5220/0007694601830188
In Proceedings of the 5th International Conference on Information and Communication Technologies for Ageing Well and e-Health (ICT4AWE 2019), pages 183-188
ISBN: 978-989-758-368-1
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

1.1 Related Work

The use of low-resolution IR array sensors for different indoor monitoring or surveillance tasks is an emerging topic. Despite the sensor's small field of view, most publications focus on single Grid-EYE sensor setups to detect the presence of a person in front of the sensor (Shetty et al., 2017; Beltran et al., 2013; Trofimova et al., 2017). A ceiling-mounted configuration by Trofimova et al. (2017) aimed at indoor detection in noisy environments, but it only determines whether a person is present within the sensor's field of view, not the person's specific location. Gonzalez et al. (2013) used a wall-mounted Grid-EYE to detect different activities in a pantry area. Again, the captured data was not used to provide the location of the detected objects or persons. Furthermore, Gerka et al. (2018) proposed a ceiling-mounted setup in which one Grid-EYE sensor is used to detect the number of persons in order to enhance the safety of artificially ventilated persons, but again, the specific location of the detected persons was not taken into account. In contrast to the aforementioned publications, Basu and Rowe (2015) proposed a method to detect the presence of a single person based on motion tracking with a ceiling-mounted Grid-EYE sensor.

Another crucial field of application of the Grid-EYE sensor is fall detection. In this case, the sensor is permanently mounted at a specific location (ceiling or wall) and tries to detect falls in its field of view (Fan et al., 2017; Mubashir et al., 2013). Again, existing work uses only one sensor at a time to infer information about the movement of a person, which might have a negative impact on the accuracy of these solutions.

To our knowledge, all existing applications either try to detect whether one or more persons are within the sensor's field of view, or they try to detect falls or motion in general. For this reason, in this paper we propose a method to localize a single person indoors instead of merely detecting presence. To do so, we use a setup of two IR sensor clusters, each consisting of three Grid-EYE sensors. This increases both the field of view and the amount of data, which might result in better localization accuracy. Finally, we compare the localization accuracy for different numbers of Grid-EYE sensors.

2 MATERIALS AND METHODS

2.1 Hardware Setup

2.1.1 Layout

For the conducted research, we make use of multiple 8 × 8 thermopile IR array Grid-EYE sensors. The sensor measures 11.6 × 8 × 4.3 mm and has the advantage that, compared to pyroelectric and single-element thermopile sensors, not only motion but also presence and position are detectable due to the sensor's array structure (Panasonic, 2016). The Grid-EYE is able to sense surface temperatures ranging from -20 °C to +100 °C, has a 60° viewing angle, a human detection distance of up to 7 m and a frame rate of 10 Hz, and uses an inter-integrated circuit (I2C) bus as its external interface (Panasonic, 2017). Using the I2C interface, the sensor's digital temperature readings can be accessed directly, e.g. via the CircuitPython AMG88xx module provided by Adafruit (Adafruit, 2017).
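As a minimal sketch of what reading the sensor through this module can look like, the helpers below flatten one 8 × 8 frame into 64 temperature values and collect frames at roughly 10 Hz. They rely only on the `pixels` property of the Adafruit driver's `AMG88XX` class (8 rows of 8 values in °C); the function names, the fixed 0.1 s pause and the hypothetical `FakeGridEye` stand-in, which lets the code run without hardware, are our own assumptions rather than the authors' logger.

```python
import time


class FakeGridEye:
    """Hypothetical stand-in exposing the same `pixels` layout as the driver."""

    def __init__(self, base_temp=22.0):
        self.base_temp = base_temp

    @property
    def pixels(self):
        # 8 rows x 8 columns of temperatures in degrees Celsius
        return [[self.base_temp + 0.1 * c for c in range(8)] for _ in range(8)]


def read_frame(sensor):
    """Return one 8 x 8 frame as a flat, row-major list of 64 floats."""
    rows = sensor.pixels
    if len(rows) != 8 or any(len(r) != 8 for r in rows):
        raise ValueError("expected an 8 x 8 pixel array")
    return [float(v) for row in rows for v in row]


def acquire(sensor, n_frames, period_s=0.1, sleep=time.sleep):
    """Collect n_frames frames, pausing period_s seconds after each read (~10 Hz)."""
    frames = []
    for _ in range(n_frames):
        frames.append(read_frame(sensor))
        sleep(period_s)
    return frames
```

On a Raspberry Pi with the Adafruit library installed, the stand-in would be replaced by `adafruit_amg88xx.AMG88XX(board.I2C())`. Because the fixed pause ignores the time spent reading, the effective rate stays slightly below 10 Hz, so storing a timestamp with every frame is the safer choice.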

Figure 2: Circuit visualization of one Grid-EYE sensor cluster setup. One Raspberry Pi Zero is connected to three Grid-EYE sensors via a multiplexer to work around the limitation of only two available I2C addresses.

The correct placement of the sensors plays an important role in the outcome of the experiment. While most published work prefers a ceiling mount (Trofimova et al., 2017; Beltran et al., 2013; Basu and Rowe, 2015; Mashiyama et al., 2015; Gerka et al., 2018), wall-mounted configurations are also used (Fan et al., 2017; Jeong et al., 2014; Gonzalez et al., 2013; Mubashir et al., 2013). It has further been stated that the wall-mounted setup has several drawbacks, such as a view obstructed by furniture and a varying number of pixels representing a person depending on the distance to the sensor (Trofimova et al., 2017). While the former disadvantage depends on the individual room layout and can also occur with ceiling mounting, the latter is not really a disadvantage if several sensors are distributed so that the varying pixel count can be used as an implicit indicator of distance. Depending on the ceiling height, a ceiling-mounted setup might cover only a small area and in most cases will not make use of the maximum person detection distance of 7 m. Overall, the positioning depends on the spatial conditions and the activity to be observed. In perspective, however, a combination of the two positioning options is also conceivable.

2.1.2 Sensor Cluster

Three interconnected sensors are required for the desired area coverage of 180°. This creates a problem, since only two fixed I2C addresses are available for the sensor, which is why a multiplexer is used in our setup in addition to the three IR sensors. The multiplexer makes it possible to switch between the sensor channels separately (see Fig. 2).
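The paper does not name the multiplexer model. Assuming a TCA9548A-style 8-channel I2C multiplexer at its default address 0x70, where setting bit k of a single control byte connects channel k, the switching can be sketched as follows. `bus` is any object with a `write_byte(addr, value)` method (for example an `smbus2.SMBus(1)` instance); `select_channel`, `read_cluster`, `read_sensor` and the `RecordingBus` stub are hypothetical names for illustration.

```python
MUX_ADDR = 0x70  # assumed default address of a TCA9548A-style multiplexer


def select_channel(bus, channel, mux_addr=MUX_ADDR):
    """Route the shared I2C bus to one downstream sensor.

    Writing a control byte with only bit `channel` set enables exactly
    that channel and disconnects all others.
    """
    if not 0 <= channel <= 7:
        raise ValueError("channel must be in 0..7")
    bus.write_byte(mux_addr, 1 << channel)


def read_cluster(bus, read_sensor, channels=(0, 1, 2)):
    """Read the three sensors of one cluster one after another.

    All sensors can keep the same I2C address, because only the
    currently selected channel is connected to the bus.
    """
    frames = []
    for channel in channels:
        select_channel(bus, channel)
        frames.append(read_sensor())
    return frames


class RecordingBus:
    """Hypothetical bus stub that records writes instead of touching hardware."""

    def __init__(self):
        self.writes = []

    def write_byte(self, addr, value):
        self.writes.append((addr, value))
```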

In our setup, each sensor cluster comprises three Grid-EYE sensors, a multiplexer for recording and a Raspberry Pi Zero for wireless data transmission. Since exact positioning is essential for achieving 180° coverage, a special case for wall mounting was 3D-printed based on previous work. All necessary elements can be installed in the 3D-printed case, which is then fastened to the wall for measurements (see Fig. 3).
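The 180° figure follows from placing three 60° sensors side by side. A small worked sketch, assuming the sensors abut without overlap or gap and that the eight pixel columns of each sensor split its view into equal 7.5° slices (a simplification of the real optics), assigns an approximate viewing direction to each of the 24 columns of a cluster. The constants come from the text above; the function name is hypothetical.

```python
FOV_DEG = 60.0        # horizontal field of view of one Grid-EYE (datasheet value)
COLS_PER_SENSOR = 8   # pixel columns per sensor
N_SENSORS = 3         # sensors per cluster


def column_bearings(n_sensors=N_SENSORS, fov_deg=FOV_DEG, cols=COLS_PER_SENSOR):
    """Approximate horizontal angle (degrees) of each pixel column's centre.

    0 degrees points straight out from the wall; negative angles are to the left.
    """
    total = n_sensors * fov_deg   # 3 x 60 = 180 degrees
    step = fov_deg / cols         # 60 / 8 = 7.5 degrees per column
    return [-total / 2 + step * (c + 0.5) for c in range(n_sensors * cols)]
```

Under these assumptions the column centres run from -86.25° to +86.25° in 7.5° steps.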

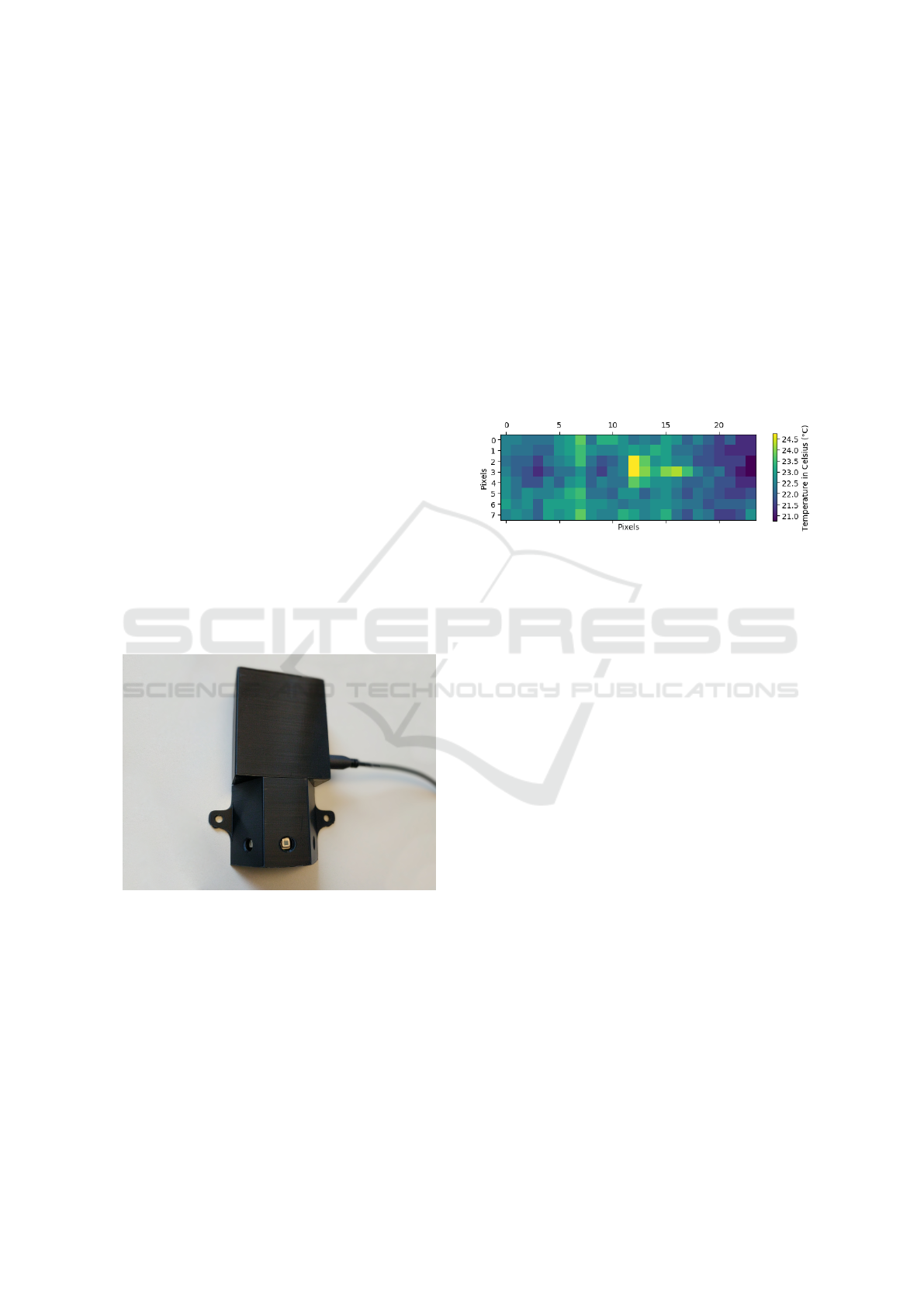

Figure 3: A complete sensor cluster, ready to be mounted on the wall. A Raspberry Pi Zero, a multiplexer and three Grid-EYE sensors are installed in the 3D-printed case. The sensors are aligned so that a 180° horizontal and 60° vertical field of view is achieved.

2.2 Indoor Localization Experiment

The cluster presented in the previous section for acquiring Grid-EYE IR sensor data was assembled twice, so that a total of six sensors were available for measurements. In the course of the experiment, different layouts were compared for localization in the domestic environment. Figure 5 shows that using only one sensor results in many blind spots, so a room cannot be captured completely. However, for monitoring in a domestic environment, it is important to cover the largest possible area to guarantee safety. For this reason, this paper focuses on the localization accuracy of two 180° sensor clusters attached to walls perpendicular to each other. Depending on the layout of the room, the difference in the measurement area covered by one or two clusters can be negligible, but the additional sensor information from a different angle may be important for the accuracy of the localization method, especially given the low resolution of the sensor (see Fig. 4).

Figure 4: Exemplary Grid-EYE sensor cluster output showing an 8 × 24 pixel temperature (°C) matrix. As can be seen, no identifying information can be captured with this sensor.
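The 8 × 24 matrix can be obtained by placing the three 8 × 8 frames of a cluster next to each other, and the classifier input is the flattened temperature values of the clusters in use. The sketch below assumes NumPy and a left-to-right sensor order that would have to match the physical mounting; the function names are hypothetical.

```python
import numpy as np


def cluster_matrix(frames):
    """Place the three 8 x 8 frames of one cluster side by side (8 x 24).

    The left-to-right order of `frames` is assumed to follow the
    mounting order of the sensors in the case.
    """
    if len(frames) != 3:
        raise ValueError("a cluster has three sensors")
    return np.hstack([np.asarray(f, dtype=float).reshape(8, 8) for f in frames])


def feature_vector(*clusters):
    """Flatten and concatenate one or more 8 x 24 cluster matrices.

    One cluster gives 192 features (G1-G3 or G4-G6), both give 384 (G1-G6).
    """
    return np.concatenate([np.asarray(c, dtype=float).ravel() for c in clusters])
```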

Since a ground truth of the position is necessary for a correct verification of the localization results, the position of the person must be recorded in addition to the IR sensor data. For this purpose, the localization sensors of the Dell Visor, a Windows Mixed Reality headset with inside-out tracking, were used. Other tracking solutions, which are often based on IR illumination themselves, were not chosen in order to eliminate possible interference. Although the tracking precision of virtual reality headsets seems debatable in certain scientific fields (Niehorster et al., 2017), this is not a concern in our case, since any tracking error is small compared to the very low resolution of the Grid-EYE sensors.

The measurements of the experiment were conducted as follows: after placing the sensor clusters on the walls at a height of about 1.5 m, the person to be measured is equipped with the Dell Visor headset within a predefined measuring area of 2.5 × 2 m. A data logger written specifically for this measurement captures the x- and y-coordinates of the headset while the six Grid-EYE sensors capture their respective 8 × 8 temperature matrices (see Fig. 6), both at a frame rate of 10 Hz. The person wearing the headset simultaneously sees a predefined area in virtual reality that corresponds to the measuring surface in reality, in order to prevent accidental collisions with objects in the room. The collected data is used for classification to perform the indoor localization.
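The paper states that both streams run at 10 Hz but does not describe how headset positions are paired with IR frames. One plausible approach, sketched here under the assumptions that both loggers stamp their samples with a common clock and that a gap of more than half a frame period (0.05 s) marks a missing pose, is nearest-timestamp matching. The function name and the threshold are hypothetical.

```python
import bisect


def align_nearest(ir_times, pose_times, max_gap=0.05):
    """Pair each IR frame with the nearest headset sample in time.

    Both inputs are timestamps in seconds; pose_times must be sorted.
    Returns one pose index per IR frame, or None when the nearest pose
    sample is more than max_gap seconds away.
    """
    matches = []
    for t in ir_times:
        i = bisect.bisect_left(pose_times, t)
        # The nearest sample is either just before t or at/after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(pose_times)]
        if not candidates:
            matches.append(None)
            continue
        j = min(candidates, key=lambda k: abs(pose_times[k] - t))
        matches.append(j if abs(pose_times[j] - t) <= max_gap else None)
    return matches
```

Frames without a matching pose would simply be dropped before training.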


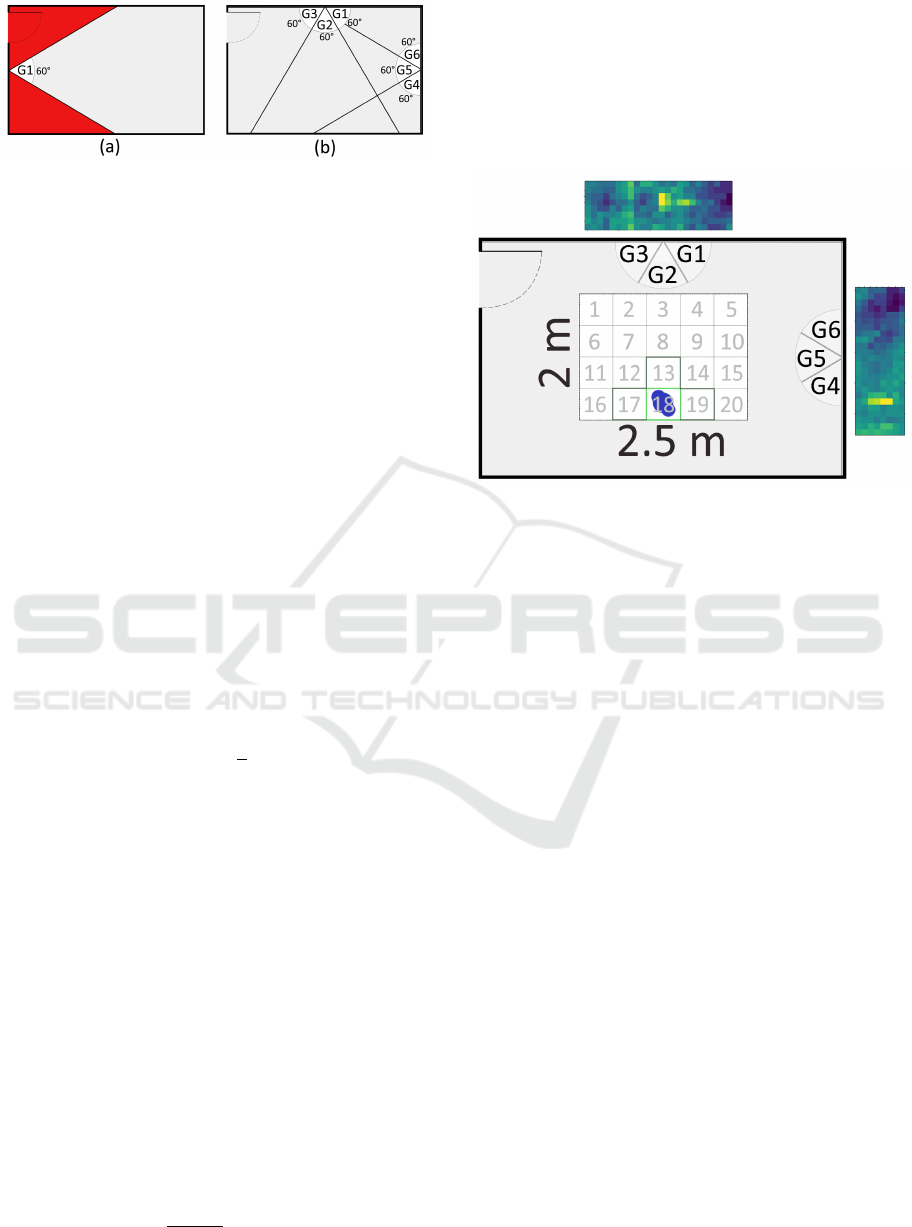

Figure 5: Top-down view of an exemplary room with different sensor placement layouts. Red areas are blind spots. (a) A single Grid-EYE sensor (G1) misses a large area of the room. (b) With two clusters (G1-G3, G4-G6), the whole room can be captured. These layouts do not provide a great advantage in captured area compared to the layout with only one cluster, but they have an impact on the indoor localization accuracy.

2.3 Classification

A classification procedure is used to process the stored temperature and position data. Assuming that the recorded position data can serve as ground truth for localization, supervised learning can be used to train a classifier. For this purpose, the measuring surface is divided into a grid of 50 × 50 cm fields, which serve as labels for the applied support vector machine (SVM) classifier. In general, an SVM tries to find a hyperplane in an n-dimensional space that separates the classes based on the input data. Given instance-label pairs $(x_i, y_i)$, $i = 1, \dots, l$, where $x_i \in \mathbb{R}^n$ is the feature vector and $y \in \{1, -1\}^l$ is the class label vector, the C-parameter SVM used here solves the following optimization problem:

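$$\min_{w,b,\zeta} \; \frac{1}{2} w^T w + C \sum_{i=1}^{l} \zeta_i \tag{1}$$

$$\text{subject to} \quad y_i \left( w^T \phi(x_i) + b \right) \ge 1 - \zeta_i, \tag{2}$$

$$\zeta_i \ge 0, \quad i = 1, \dots, l, \tag{3}$$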

where $C > 0$ is the penalty parameter of the error term, $w$ is the coefficient vector, $b$ is a constant (bias) term, $\zeta_i$ are the slack variables, and the training vectors $x_i$ are mapped into a higher, possibly infinite-dimensional space by the function $\phi$ (Hsu et al., 2003).
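To make the trade-off in Eq. (1) concrete, the sketch below evaluates the objective for a fixed $(w, b)$. For simplicity it assumes the identity map $\phi(x) = x$ (the paper itself uses a polynomial kernel, for which the library solves the dual problem instead), and it uses the fact that for fixed $(w, b)$ the smallest slacks satisfying Eqs. (2) and (3) are the hinge losses $\zeta_i = \max(0, 1 - y_i(w^T x_i + b))$. It only evaluates the objective; it does not train an SVM, and the function name is hypothetical.

```python
import numpy as np


def primal_objective(w, b, X, y, C=1.0):
    """Evaluate Eq. (1) for fixed (w, b) with a linear map phi(x) = x.

    Returns the objective value and the slack vector zeta, where
    zeta_i = max(0, 1 - y_i (w^T x_i + b)) is the smallest slack that
    satisfies constraints (2) and (3).
    """
    w, X, y = np.asarray(w, float), np.asarray(X, float), np.asarray(y, float)
    margins = y * (X @ w + b)
    zeta = np.maximum(0.0, 1.0 - margins)
    return 0.5 * (w @ w) + C * zeta.sum(), zeta
```

For example, with $w = (1, 0)$, $b = 0$, $C = 1$ and the points $(2, 0)$, $(-2, 0)$, $(0.5, 0)$ labelled $1, -1, -1$, the margins are 2, 2 and -0.5, so the slacks are 0, 0 and 1.5 and the objective is 0.5 + 1.5 = 2.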

The kernel function used in our particular case is a third-degree polynomial, and the penalty parameter is set to $C = 1$. No hyperparameter tuning was performed. For the implementation, an existing machine learning library in Python is used (Pedregosa et al., 2011). The following accuracy function was used to determine the fraction of correct predictions over $l_{\text{samples}}$ samples, where $\hat{y}_i$ is the predicted value of the $i$-th sample, $y_i$ is the corresponding true value and $1(\cdot)$ is the indicator function:

$$\text{accuracy}(y, \hat{y}) = \frac{1}{l_{\text{samples}}} \sum_{i=0}^{l_{\text{samples}}-1} 1(\hat{y}_i = y_i). \tag{4}$$

The feature vector consists of all corresponding temperature values of the Grid-EYE sensors, and the labels correspond to the predefined 50 × 50 cm grid fields, which can also be seen in Fig. 6. In addition, for comparison purposes, we compute the accuracy of a correct classification within a neighborhood of four.
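Eq. (4) and the neighborhood criterion can be sketched as below. The paper does not define "neighborhood of four" precisely; this sketch assumes it means the true grid field plus its four edge-adjacent fields, and that labels 1 to 20 are numbered row by row over a 4 × 5 grid. Both assumptions, and the function names, are ours. The first function computes the same quantity as scikit-learn's `accuracy_score`.

```python
import numpy as np

N_COLS = 5  # assumed: labels 1..20 numbered row by row over a 4 x 5 grid


def accuracy(y_true, y_pred):
    """Eq. (4): fraction of samples whose predicted label equals the true one."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(np.mean(y_pred == y_true))


def neighborhood_accuracy(y_true, y_pred, n_cols=N_COLS):
    """Count a prediction as correct if it is the true field or one of its
    four edge-adjacent fields (an assumed reading of "neighborhood of four")."""
    hits = 0
    for t, p in zip(y_true, y_pred):
        t_row, t_col = divmod(int(t) - 1, n_cols)
        p_row, p_col = divmod(int(p) - 1, n_cols)
        # Manhattan distance of at most 1 on the grid
        if abs(t_row - p_row) + abs(t_col - p_col) <= 1:
            hits += 1
    return hits / len(y_true)
```

Note that labels 5 and 6 are consecutive numbers but not neighbors on the grid, since 5 ends one row and 6 starts the next.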

Figure 6: Visualization of the experimental setup. One person (blue point) equipped with a Dell headset for position determination moves within the predefined measurement area (2 × 2.5 m). The measurement area is divided into a 4 × 5 grid. Both sensor clusters (G1-G3, G4-G6) capture the scene. Afterwards, the data is evaluated to infer the grid location of the person. Both the score for the actual grid position (light green) and the accuracy score for the neighborhood (dark green) are evaluated.
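Assigning a ground-truth label to each headset position amounts to integer division by the 50 cm field size. The sketch assumes an origin at one corner of the measurement area, five columns along the 2.5 m side, four rows along the 2 m side and row-by-row numbering from 1; the actual numbering used in Fig. 6 may differ. Positions exactly on the outer border are clamped into the nearest field. The function name is hypothetical.

```python
CELL_M = 0.5           # grid field edge length (50 cm)
N_COLS, N_ROWS = 5, 4  # assumed: 5 columns along the 2.5 m side, 4 rows along 2 m


def grid_label(x, y, cell=CELL_M, n_cols=N_COLS, n_rows=N_ROWS):
    """Map a headset position (metres, origin at one corner of the area)
    to a grid label 1..20, numbered row by row."""
    col = min(max(int(x // cell), 0), n_cols - 1)  # clamp positions on the border
    row = min(max(int(y // cell), 0), n_rows - 1)
    return row * n_cols + col + 1
```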

3 RESULTS

The main objective of the measurements with the previously described system is a proof of concept for the IR sensor cluster localization method and an assessment of whether the use of such clusters has a beneficial effect on localization accuracy. Table 1 shows the results for both evaluation criteria, based on 2128 samples. 25 % of the data were used for testing; the remaining 75 % served as training data for classification. The multi-class classification of the SVM uses a one-vs-all scheme, in which a separate classifier is learned for each grid label from 1 to 20, as seen in Fig. 6.
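A sketch of this training and evaluation procedure with scikit-learn (Pedregosa et al., 2011), using the parameters reported above: a degree-3 polynomial kernel, C = 1, a 75/25 split and one-vs-rest classification. Because `SVC` on its own trains one-vs-one classifiers internally, the sketch wraps it in `OneVsRestClassifier`. `gamma` and `coef0` are left at the library defaults since the paper does not report them, and the function name and `random_state` are assumptions.

```python
import numpy as np
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC


def train_and_evaluate(X, y, seed=0):
    """Train one-vs-rest SVMs on 75 % of the data and score the other 25 %.

    X: (n_samples, n_features) temperature features, y: grid labels.
    Returns the fitted classifier and its test accuracy (Eq. 4).
    """
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, random_state=seed)
    # One binary SVM per grid label, each separating it from all others.
    clf = OneVsRestClassifier(SVC(kernel="poly", degree=3, C=1.0))
    clf.fit(X_train, y_train)
    return clf, accuracy_score(y_test, clf.predict(X_test))
```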

In addition to the accuracy of the grid determination, Fig. 7 shows an exemplary probability heat map at one point in time. Together, these two forms of presentation make it easier to evaluate the results correctly.
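A heat map like the one in Fig. 7 can be produced by placing per-class probabilities on the grid. The sketch assumes row-by-row label numbering on a 4 × 5 grid; how the authors obtained their probabilities is not stated, and the function name is hypothetical.

```python
import numpy as np


def probability_grid(proba, classes, n_rows=4, n_cols=5):
    """Arrange per-class probabilities on the 4 x 5 grid for a heat map.

    proba: probabilities for one sample, in the order given by `classes`
    (grid labels 1..20, assumed to be numbered row by row).
    Fields whose label never occurred in training stay at 0.
    """
    grid = np.zeros((n_rows, n_cols))
    for p, label in zip(proba, classes):
        row, col = divmod(int(label) - 1, n_cols)
        grid[row, col] = p
    return grid
```

With scikit-learn, one option is to fit `SVC(kernel="poly", degree=3, C=1.0, probability=True)`, which calibrates probabilities via Platt scaling with internal cross-validation, and then pass `clf.predict_proba(x.reshape(1, -1))[0]` and `clf.classes_` to `probability_grid`; the result can be displayed with `matplotlib.pyplot.imshow`.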


Table 1: SVM classification accuracy based on Eq. (4) for three cases, using the data of either one sensor cluster or both clusters. Scores are shown for single-point classification (single grid field) and neighborhood-of-four classification (neighborhood grid fields).

| SVM accuracy | G1-G3 | G4-G6 | G1-G6 |
|--------------|-------|-------|-------|
| Single       | 0.689 | 0.567 | 0.731 |
| Neighborhood | 0.812 | 0.789 | 0.930 |

Figure 7: Exemplary visualization of a probability heat map for the predefined grid. Based on the classification results, the location probability of the person is shown for each grid field.

4 DISCUSSION

The results indicate that it is possible to perform indoor localization on the basis of low-resolution IR sensors. Table 1 shows the classification accuracy for detection in a single grid field and for detection within a neighborhood of four. As assumed in advance, classification works best if the data of both clusters is used (93.0 % accuracy for neighborhood classification and 73.1 % for single classification). When only one cluster is used, an accuracy of either 68.9 % (G1-G3) or 56.7 % (G4-G6) is achieved for single grid classification, and 81.2 % (G1-G3) or 78.9 % (G4-G6) for neighborhood classification. The difference between the performance of G1-G3 and G4-G6 may be explained by the fact that G4-G6 is positioned on the wall where the measurement area is less broad, and it therefore collects less discriminative information. We assume that the accuracy of G1-G3 suffers from the greater depth of 2.5 m, since depth only plays an indirect role via the number of pixels covering the person to be detected.

Overall, the accuracy of the single grid field classification is relatively poor, even when both clusters (G1-G6) are combined. The single grid evaluation criterion demands exact localization decisions from very low-resolution data while also suffering from quantization error, so a low performance is to be expected. Depending on where the person stands, he or she is often in the transition area between two grid fields, which makes a clear assignment difficult. For this reason, the heat map visualization used in Fig. 7 is more suitable for assessment purposes. The advantage of this type of visualization is that, if several grid fields show an increased probability of containing the person, it becomes immediately apparent that the person is presumably in the transition area between them. Thus, a heat map is better suited to estimate the location of the person. In addition, the size of the grid fields is most probably a factor with an important impact on the accuracy of the classifier. This particular aspect could be examined in future work.

5 CONCLUSIONS

In this paper, we developed a cost-efficient indoor localization setup based on a combination of multiple low-resolution IR array sensors, which is independent of ambient lighting. Using the temperature data output of each 8 × 24 pixel sensor cluster, it was possible to locate a person in a predefined area of 2.5 × 2 m, which was further divided into 20 distinguishable 50 × 50 cm grid fields. The person's location was then deduced by applying SVM classification.

Since this work represents an early feasibility study, further points will have to be addressed in future research. We plan to compare further machine learning methods with respect to classification accuracy. Moreover, it is conceivable to increase the number of persons localized simultaneously, to enlarge the measuring area, to replace the subdivision into individual grid fields by an exact estimation of the x- and y-coordinates, to detect several states such as "sitting", "falling" and "lying" by taking the height into account, to perform a comprehensive investigation of different classification techniques, and to test the entire setup in a realistic environment with additional sources of interference such as heating or solar radiation.

ACKNOWLEDGEMENTS

This work was funded by the German Federal Ministry of Education and Research (BMBF) within the research project Nursing Care Innovation Center (grant 16SV7819K).


REFERENCES

Adafruit (2017). Adafruit CircuitPython AMG88xx. https://github.com/adafruit/Adafruit_CircuitPython_AMG88xx.

Basu, C. and Rowe, A. (2015). Tracking motion and proxemics using thermal-sensor array. arXiv preprint arXiv:1511.08166.

Beltran, A., Erickson, V. L., and Cerpa, A. E. (2013). Thermosense: Occupancy thermal based sensing for HVAC control. In Proceedings of the 5th ACM Workshop on Embedded Systems For Energy-Efficient Buildings, pages 1–8. ACM.

Fan, X., Zhang, H., Leung, C., and Shen, Z. (2017). Robust unobtrusive fall detection using infrared array sensors. In Multisensor Fusion and Integration for Intelligent Systems (MFI), 2017 IEEE International Conference on, pages 194–199. IEEE.

Gerka, A., Pfingsthorn, M., Lupkes, C., Sparenberg, K., Frenken, M., Lins, C., and Hein, A. (2018). Detecting the number of persons in the bed area to enhance the safety of artificially ventilated persons. In 2018 IEEE 20th International Conference on e-Health Networking, Applications and Services (Healthcom), pages 1–6. IEEE.

Gonzalez, L. I. L., Troost, M., and Amft, O. (2013). Using a thermopile matrix sensor to recognize energy-related activities in offices. Procedia Computer Science, 19:678–685.

Hsu, C.-W., Chang, C.-C., Lin, C.-J., et al. (2003). A practical guide to support vector classification.

Jeong, Y., Yoon, K., and Joung, K. (2014). Probabilistic method to determine human subjects for low-resolution thermal imaging sensor. In Sensors Applications Symposium (SAS), 2014 IEEE, pages 97–102. IEEE.

Mashiyama, S., Hong, J., and Ohtsuki, T. (2015). Activity recognition using low resolution infrared array sensor. In Communications (ICC), 2015 IEEE International Conference on, pages 495–500. IEEE.

Mubashir, M., Shao, L., and Seed, L. (2013). A survey on fall detection: Principles and approaches. Neurocomputing, 100:144–152.

Niehorster, D. C., Li, L., and Lappe, M. (2017). The accuracy and precision of position and orientation tracking in the HTC Vive virtual reality system for scientific research. i-Perception, 8(3):2041669517708205.

Panasonic (2016). White paper: Grid-EYE state of the art thermal imaging solution. Accessed: 2018-11-16.

Panasonic (2017). Data sheet: Infrared array sensor Grid-EYE (AMG88). Accessed: 2018-11-18.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., and Duchesnay, E. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830.

Rapoport, M. (2012). The home under surveillance: A tripartite assemblage. Surveillance & Society, 10(3/4):320.

Razavi, S. S., Fathi, M., and Hajiesmaeili, M. (2016). Intensive care at home: An opportunity or threat. Anesthesiology and Pain Medicine, 6(2).

Shetty, A. D., Shubha, B., Suryanarayana, K., et al. (2017). Detection and tracking of a human using the infrared thermopile array sensor Grid-EYE. In Intelligent Computing, Instrumentation and Control Technologies (ICICICT), 2017 International Conference on, pages 1490–1495. IEEE.

Trofimova, A. A., Masciadri, A., Veronese, F., and Salice, F. (2017). Indoor human detection based on thermal array sensor data and adaptive background estimation. Journal of Computer and Communications, 5(04):16.

World Health Organization (2015). World report on ageing and health. World Health Organization.
