Model Assurance Levels (MALs) for Managing Model-based Engineering (MBE) Development Efforts

Julie S. Fant and Robert G. Pettit

The Aerospace Corporation, Chantilly, Virginia, U.S.A.

Keywords:

Model-based Engineering, UML, Software Models, Managing MBE, Model Quality and Value.

Abstract:

Model-based engineering (MBE) in industry is on the rise. However, improvements are still needed in the oversight and management of MBE efforts. Frequently, program managers and high-level decision makers do not have the MBE background needed to understand models, the value the models are providing, and whether they are successfully achieving their MBE goals. To address these concerns, we developed a rating scale for models, called Model Assurance Levels (MALs). The purpose of MALs is to quickly and concisely express the assurance the model is providing to the program, as well as the risks associated with the model. Therefore, given a MAL level, program managers and decision makers will be able to quickly understand the model value and the risks associated with the model. They can then make informed decisions about the future direction of the MBE development effort.

1 INTRODUCTION

Model-based engineering (MBE) in industry is on the rise. The term is often used loosely within industry to describe any engineering effort that utilizes models. However, there is a wide range of activities that can be performed as part of an MBE effort (Chaudron, 2017) (Liebel et al., 2018) (Bencomo et al., 2019) (Pettit IV and Mezcciani, 2013) (Pettit et al., 2014). For example, some MBE efforts may focus on modeling just the functional requirements at a high level, while other MBE efforts also address non-functional requirements and use models for simulation. Given this broad usage of the term in industry, it can be difficult for those without detailed knowledge of MBE to truly understand what the model is being used for and how much benefit and risk reduction it is providing. Therefore, a bridge is needed to quickly and concisely translate a complex model into terms program managers and high-level decision makers can understand.

Currently, there are no repeatable approaches that help program managers and high-level decision makers understand what type of information is in the model, understand whether the model is maturing as expected, and determine whether the model is providing enough value and risk reduction to the program. Current approaches to assessing and evaluating MBE artifacts are either ad hoc or subjective, based on the evaluator's experience with MBE. This leads to inconsistent results and inconsistent advice given to program managers and decision makers. Without the ability to understand and manage MBE efforts, the benefits of MBE may be lost, the quality of the resulting system degraded, or both.

To address these concerns, we developed a rating scale for models, called Model Assurance Levels (MALs). The purpose of MALs is to quickly and concisely express the assurance the model is providing to the program. Additionally, each MAL level has a certain level of risk associated with it. Therefore, given a MAL level, program managers and decision makers will be able to quickly understand the risks associated with the model and make informed decisions about the development effort and the direction it is going. MALs can also be used by the engineers building the model as a means to effectively communicate the information in the model and to set expectations for what should be in the model.

2 RELATED WORK

There is limited research in the area of model scales and levels. There are notable works on technology readiness levels, such as the National Aeronautics and Space Administration (NASA) Technology Readiness Levels (TRLs) (NASA, n.d.) (NASA, 2012) and the European Space Agency (ESA) Technology Readiness Levels (TRLs) (European Space Agency, n.d.) (European Space Agency, 2008). However, TRLs are primarily focused on assessing the maturity of a technology, and they do not translate well to assessing models because they do not take into account what is included in the model.

Fant, J. and Pettit, R.

Model Assurance Levels (MALs) for Managing Model-based Engineering (MBE) Development Efforts.

DOI: 10.5220/0007697505420549

In Proceedings of the 7th International Conference on Model-Driven Engineering and Software Development (MODELSWARD 2019), pages 542-549

ISBN: 978-989-758-358-2

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Another area of related work is on maturity levels. Maturity levels place more emphasis on assessing the processes and the role of models than on the models themselves. First, Kleppe and Warmer (Kleppe et al., 2003) propose a set of Modeling Maturity Levels. These levels characterize the role of modeling in a software project. They do not address the content and risks of the models themselves. Additionally, Kleppe and Warmer have extensive levels prior to model use, but only one level for models, which is not detailed enough for managing model-based efforts. Second, Rios et al. (Rios et al., 2006) propose a Model-Driven Development (MDD) Maturity Model. This work, however, is a roadmap for the adoption of models. Finally, there is the Capability Maturity Model Integration (CMMI) appraisal program. CMMI was originally developed at Carnegie Mellon University (CMU), but is now administered by the CMMI Institute (CMMI Institute, LLC, 2018) (Kneuper, 2018). CMMI assesses the maturity of an organization's software processes, and a CMMI level is given based on that maturity. Given a CMMI level, one can quickly and concisely understand the maturity of an organization's software development processes. CMMI can be applied to organizations using MBE. However, CMMI levels assess processes rather than the models themselves. Therefore, they are not as useful when trying to understand what type of information and how much information is being captured in a model during the actual development effort.

3 OVERVIEW OF MALs

Model Assurance Levels (MALs) are a measurement system for model value, content, and quality. MALs are based on a scale from one to three, with three being the highest, to reflect the increasing value and risk reduction of the model. The MAL scale is conceptually depicted in Figure 1. Within each MAL level, there are also sub-levels to show incremental growth. When applying MALs, not every program needs to achieve the highest MAL level. Instead, a MAL level should be selected based on the amount of risk and cost a program is willing to accept. A higher MAL score will help reduce risk, since the increased modeling effort will find and correct faults earlier in the lifecycle. However, it will also increase cost, since more time and effort are needed for the additional modeling. The higher modeling costs are recouped later in the lifecycle through reduced rework and testing. Additionally, the resulting system will be of higher quality. Thus, when setting a MAL goal, careful consideration should be taken to ensure the right balance of risk reduction and cost.

Figure 1: Conceptual MAL Scoring.

3.1 Benefits of MALs

Using MALs to understand and assess MBE efforts has several benefits. First, MAL levels are very easy to understand, even without detailed knowledge of MBE. MAL levels can be used to consistently convey information about models.

Second, MALs are acquisition and development approach agnostic. Therefore, they can be determined and assessed regardless of acquisition structure or development lifecycle approach. This makes the approach flexible and broadly applicable across programs and customers.

Third, MAL scores do not have to be universal across a program or project. Different domain or subsystem models can have different desired MALs. For example, flight software models may seek to achieve higher MALs than ground processing software models. This is because flight software is often considered safety critical; therefore, reducing risk is more important on these types of programs (Ganesan et al., 2016).

Another benefit of MALs is that they can be specified in acquisition language and proposals. This can help set MBE expectations between government and contractor during the proposal and contract award stages, as well as help avoid confusion about the types and amount of content that will be developed. For example, on government acquisitions a contractor can state that they plan to have their flight software model at a certain MAL level by Preliminary Design Review (PDR) and at a different and more mature MAL level by Critical Design Review (CDR).

4 MAL SCALE

Developing a scale to assess and define categories of software models is very difficult because software models are multifaceted. Models typically vary by breadth (i.e., how much information is modeled), by depth (i.e., how detailed the information in the model is), and by fidelity (i.e., how much validation and verification (V&V) is performed on the model). Therefore, we first developed a high-level MAL scale that broadly groups models into different categories by the breadth of information in the model, as captured in Table 1. We believe that all software models fall into one of these three categories.

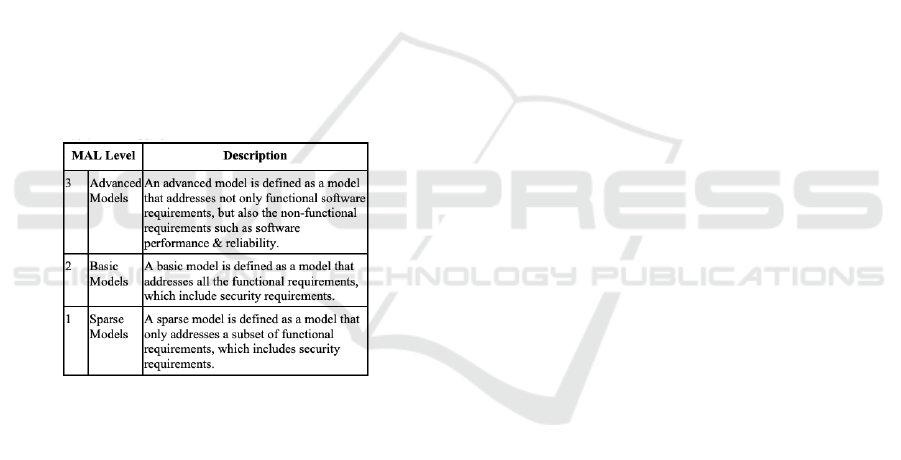

Table 1: MAL Scale.

While these three MAL levels provide some distinction between different models, they are not sufficient for managing MBE efforts because they do not address depth or fidelity. For example, using just these three levels, a high-level analysis model that addresses all functional requirements but does not contain detailed design information such as concurrency would receive a level 2. A detailed design model that addresses all the functional requirements and contains additional detailed information such as concurrency would also receive a level 2. A detailed design model provides greater risk reduction than a higher-level analysis model, since additional implementation details have been thought out and included in the design. Therefore, sublevels are needed to distinguish between these types of models.

Next, we further decomposed the three MAL levels into sublevels that address the depth of a model. For depth, we used the terms Analysis Model and Detailed Design Model. These terms are commonly used in MBE methods such as (Gomaa, 2016) (Gomaa, 2011). An analysis model captures the software classes and their interactions at a high level. An analysis model may define operations for classes, but it lacks detailed implementation information such as parameters, types, and concurrency. Detailed design models contain detailed implementation information about the software, such as data types, concurrency, and data formats. Detailed design models should reduce the risk on a program, since the lower-level implementation issues are thought out and addressed prior to implementation. Therefore, on the MAL scale, an analysis model has a lower score than a detailed design model.

Since MALs are intended to help decision makers manage an MBE development effort, using just model depth and breadth is not enough. A final aspect that helps program managers and decision makers reduce risk on a program is model V&V. The more V&V that is performed on the model, the more confidence we have that the model is correct and the more risk reduction it provides. Therefore, we further decomposed the MAL scale by the amount of V&V that is performed on the model. We used the term Sparsely V&V to indicate that only a subset of the software functionality in the model underwent V&V, and Completely V&V to indicate that the majority of the software functionality underwent V&V.

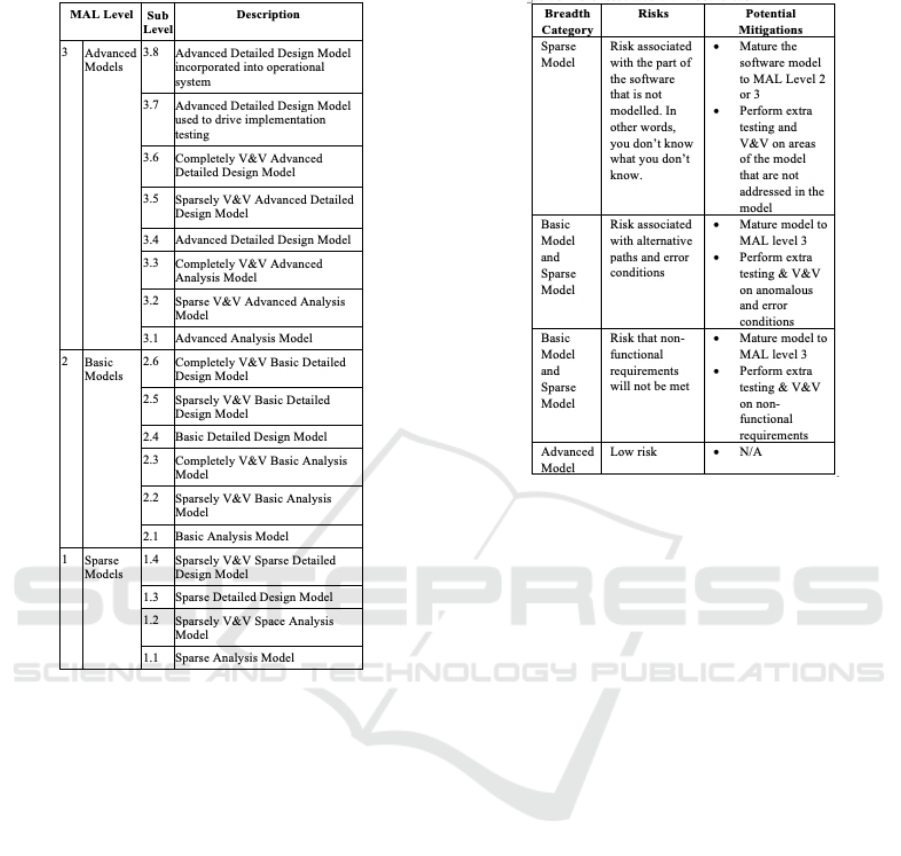

The final MAL scale, with sublevels that address model depth and the amount of V&V performed on the software functionality model, is captured in Table 2. At level one, we only used Sparsely V&V because the model only addresses a subset of the functional requirements; therefore, complete V&V of the requirements is not possible. Both MAL levels two and three contain sublevels for both Sparsely V&V and Completely V&V, since they address all the functional requirements in the model.

The last addition we made to the sublevels was in MAL Level 3. Advances are being made every day in MBE, and new techniques are being developed for other uses of models. Therefore, we added two more sublevels to address cases where models are used to drive implementation testing and where models are incorporated into operational systems. These types of models need to be highly trusted. It is therefore assumed that they need a lot of breadth, a lot of depth, and extensive V&V, so they are included at the top of the MAL scale.


Table 2: MAL Scale with Sub Levels.
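To make the three dimensions of the scale concrete, the following is a minimal Python sketch of how a model's breadth level, depth, and V&V category could be recorded and mapped to a sublevel score. The class and function names (`ModelProfile`, `mal_score`, `KNOWN_SCORES`) are hypothetical and are not part of the MAL definition. Only the two sublevel scores reported in the case study of Section 5 (1.2 and 1.4) are encoded; the remaining rows of Table 2, including the two additional Level 3 sublevels, are deliberately left out rather than guessed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Depth(Enum):
    ANALYSIS = "Analysis"
    DETAILED_DESIGN = "Detailed Design"


class VandV(Enum):
    SPARSELY = "Sparsely V&V"
    COMPLETELY = "Completely V&V"


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical record of the three dimensions assessed for a model."""
    level: int      # MAL level 1-3, i.e. the breadth category of Table 1
    depth: Depth
    vandv: VandV

    def __post_init__(self) -> None:
        if self.level not in (1, 2, 3):
            raise ValueError("MAL level must be 1, 2, or 3")
        # Level one covers only a subset of the functional requirements,
        # so complete V&V of the requirements is not possible there.
        if self.level == 1 and self.vandv is VandV.COMPLETELY:
            raise ValueError("Level 1 models can only be Sparsely V&V")


# Assumption: only the scores stated in the case study are listed here.
KNOWN_SCORES = {
    (1, Depth.ANALYSIS, VandV.SPARSELY): "1.2",
    (1, Depth.DETAILED_DESIGN, VandV.SPARSELY): "1.4",
}


def mal_score(profile: ModelProfile) -> Optional[str]:
    """Return the sublevel score if it is one of the encoded cases, else None."""
    return KNOWN_SCORES.get((profile.level, profile.depth, profile.vandv))
```

Returning `None` for combinations that are not encoded keeps the sketch from implying scores that the text does not state.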

4.1 Associating Risk with MAL Levels

The MAL scale with sublevels is useful for understanding the type of information in a model. However, in order for decision makers to effectively understand and make informed decisions about the direction of the model, risks need to be associated with each level. That way, decision makers can understand the risk they are taking on.

To associate risk with the MAL scale, we need to determine the risk associated with the depth, breadth, and amount of V&V performed on the model. This is accomplished by determining the risk associated with what is not included in that type of model. For example, Table 3 shows the risks associated with different model breadths. So, if a decision maker finds out that their program's model is a Basic Model, then they know there is risk associated with the unknown behavior on alternative paths and error conditions, as well as the potential to not meet non-functional requirements. They are now informed about the program risks and can effectively decide how they wish to address them.

Table 3: Risks associated with model breadth.

Similar risk tables were developed for model depth and fidelity. Using these risks, the overall risks for each MAL sublevel can be determined by selecting the risks from the appropriate categories.
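The selection of risks by category can be sketched as a simple lookup and merge. The following Python fragment is illustrative only: the table names and the function `overall_risks` are hypothetical, the breadth and depth entries paraphrase risks named in the text, and the fidelity entry is placeholder wording derived from the definition of Sparsely V&V, since the fidelity risk table itself is not reproduced here.

```python
from typing import Dict, List

# Risks for breadth, paraphrased from the Basic Model example in the text.
BREADTH_RISKS: Dict[str, List[str]] = {
    "Basic": [
        "Unknown behavior on alternative paths and error conditions",
        "Non-functional requirements may not be met",
    ],
}

# Risks for depth, paraphrased from the implementation risk in the case study.
DEPTH_RISKS: Dict[str, List[str]] = {
    "Analysis": [
        "Lower-level implementation faults not discovered until implementation",
    ],
    "Detailed Design": [],
}

# Assumption: placeholder wording, not taken from a published risk table.
FIDELITY_RISKS: Dict[str, List[str]] = {
    "Sparsely V&V": [
        "Only a subset of the modeled functionality has undergone V&V",
    ],
    "Completely V&V": [],
}


def overall_risks(breadth: str, depth: str, fidelity: str) -> List[str]:
    """Merge the risks of the selected categories, preserving order."""
    risks: List[str] = []
    selections = (
        (BREADTH_RISKS, breadth),
        (DEPTH_RISKS, depth),
        (FIDELITY_RISKS, fidelity),
    )
    for table, category in selections:
        for risk in table[category]:  # KeyError signals an unknown category
            if risk not in risks:
                risks.append(risk)
    return risks
```

For instance, `overall_risks("Basic", "Analysis", "Sparsely V&V")` yields the two breadth risks followed by the depth and fidelity risks, while moving to `"Detailed Design"` drops the implementation risk from the list.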

5 CASE STUDY: AUTOMATED VEHICLE GUIDANCE SYSTEM

MALs have been applied to an Aerospace Corpora-

tion customer. MALs received very positive feedback

for the value it provides to decision makers and the

program. Unfortunately, the details of these customer

model cannot be openly published. Therefore, repre-

sentative example will be used to illustrate the values

MALs provide decision makers. The representative

case study is an Automated Vehicle Guidance System

with an academic model from (Gomaa, 2011). The

AVGS software is responsible for moving automated

vehicles around a factory in a counter clockwise di-

rection where they stop/start at selected stations to

collect parts. An external Supervisory system sends

the commands to the vehicles. The overall context

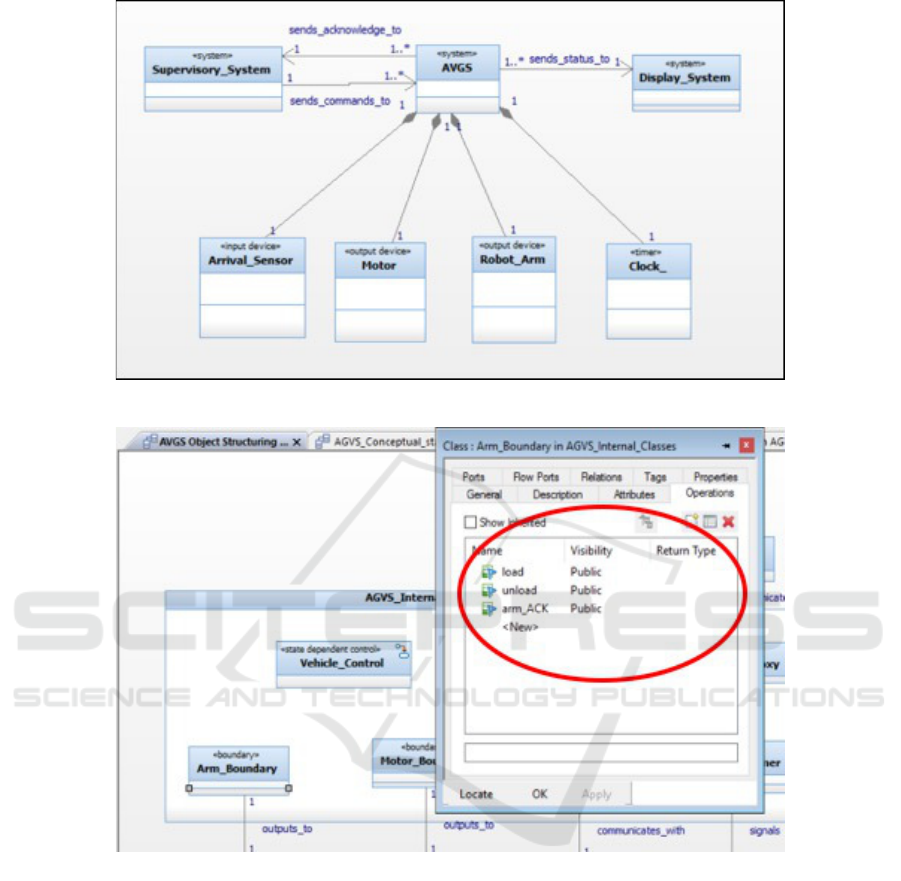

diagram for the AVGS is show in Figure 2.

In this case study, a government program manager is responsible for acquiring the AVGS. They would like to get a MAL level at Preliminary Design Review (PDR) and at Critical Design Review (CDR) to understand whether the model is maturing as planned, to identify any risks, and to identify any areas for improvement in the model.

5.1 First MAL Assessment

The first MAL assessment is performed at PDR. The contractor provided a copy of the model in IBM Rational Rhapsody to use in the assessment. First, to assess model depth, the level of detail provided on classes and interactions is examined. This is illustrated using Figure 3, which shows a portion of the AVGS class diagram and the properties associated with the Arm Boundary class. In this example, the Arm Boundary class has operations defined. However, the operations' parameters and data types are not defined. This is consistent with the model depth of an Analysis Model, which only contains high-level details. Since all the classes and dynamic views in the model are at this same level of detail, the model is categorized as an Analysis Model.

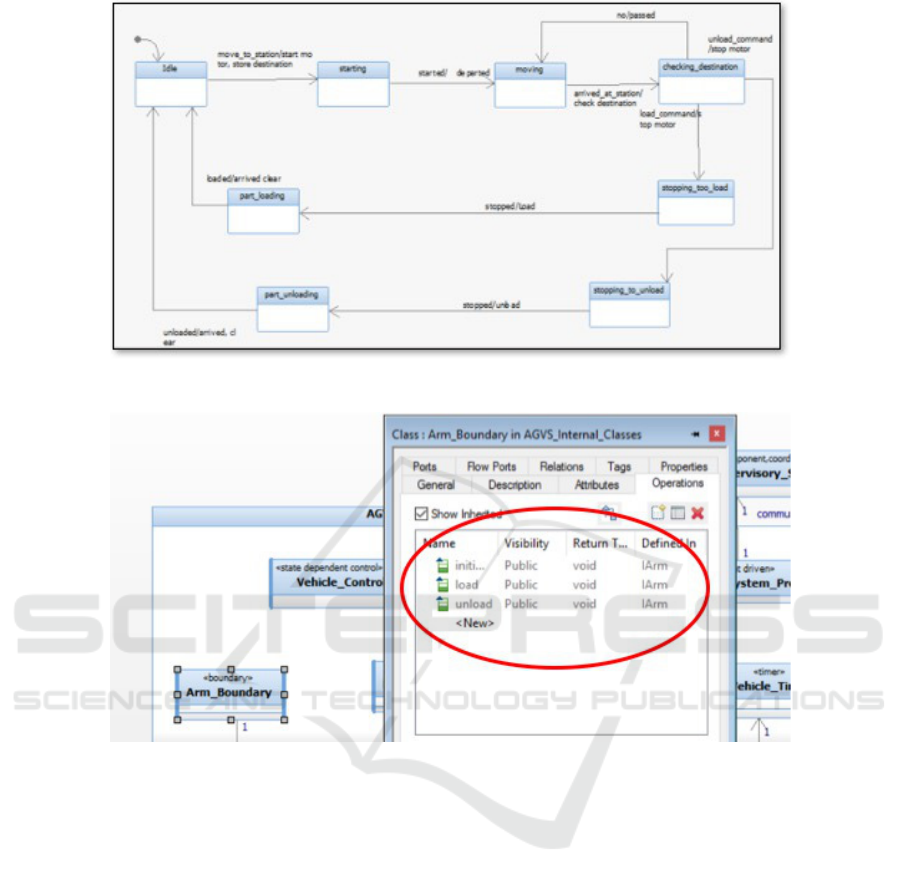

Next, the model breadth is examined. This is accomplished by examining how much of the software is captured in the model. This is illustrated using the state chart for the Vehicle Control class in Figure 4. This state chart clearly shows how the Vehicle Control class guides the automated vehicle to meet its functional requirements, such as moving to different locations in the factory and loading and unloading parts. However, it fails to show what happens if there is an error in this process. What happens if the vehicle gets stuck or cannot move anymore? What happens if it goes to unload a part and there is no part to grab because it accidentally fell out? This state chart and the other dynamic and static views in the model indicate that the model does not address alternative paths and error conditions.

Additionally, the security requirements are not addressed by the model. For instance, we do not see any user authentication required to operate the vehicle, or any verification of vehicle commands to ensure that only valid locations within the factory are entered as a destination. Since the model does not address security, alternative paths, and error conditions, it is given a model breadth of Sparse Model.

Finally, the amount of V&V performed on the model is assessed. The contractor does not have the requirements traced to model elements. However, there is implied traceability, since the sequence diagrams are traced to the use cases. Additionally, all classes appear in at least one sequence diagram. Therefore, it can be assumed that they are all functionally required. The contractor also stated that they performed manual walk-throughs of the sequence diagrams. Since some V&V was performed on the model, but other types of V&V, such as simulation, were not performed, the model is categorized as Sparsely V&V.

In summary, the model is categorized as a Sparsely V&V Sparse Analysis Model, which is a MAL level 1.2.

The MAL score and the risks associated with this score are presented to the government decision maker. The risks include:

• Risk associated with the part of the software that is not modeled, in this case the security requirements.

• Risk that alternative paths and error conditions are unknown and will not be discovered until the implementation phase.

• Risk that non-functional requirements will not be met.

• Implementation risk, since lower-level implementation details are not addressed in the model and any faults will not be discovered until the implementation phase.

The program has a set budget for the MBE effort, so the government program manager must decide the best way to mature the model for CDR. Aerospace provided two courses of action. First, the model can be matured to a MAL 1.4, where the depth of the model is increased and the breadth of the model remains unchanged. This would reduce the implementation risk on the program, since implementation details would be added to the model. There would still be outstanding risks, including not addressing alternative paths, error conditions, security, and non-functional requirements. The second course of action is to mature the model to a MAL 2.2. This would entail keeping the current model depth but expanding the model breadth to address alternative paths, error conditions, security, and non-functional requirements. The outstanding risk at this level would be implementation risk, since additional implementation details are not being added to the model.

Given this information about the model and the potential risks, the government program manager decided to mature the model to a MAL 1.4, since security, alternative paths, and non-functional requirements are less important on the AVGS. The government decision maker would rather reduce the implementation risks.

5.2 Follow-up MAL Assessment

The purpose of the follow-up MAL assessment is to

determine if the model matured to the desired MAL

1.4 level. Therefore, during CDR an updated copy of

the model is provided to assess.


Figure 2: AVGS Context Diagram.

Figure 3: AVGS Class Diagram.

First, the level of detail provided on classes and interactions is examined to assess model depth. This is illustrated using Figure 5, which shows an updated portion of the AVGS class diagram and the properties associated with the Arm Boundary class. In this example, the Arm Boundary class has operations defined. Additionally, the class now contains the operations' parameters and data types. Since all the classes and dynamic views in the model are at this same level of detail, the model is categorized as a Detailed Design Model. This is consistent with the desired MAL level of 1.4.

Next, the model breadth is examined. This is accomplished by examining how much of the software is captured in the model. The state chart for the Vehicle Control class is examined again. While it was updated to reflect the detailed messages, the state chart still only addresses how its functional requirements are being met. No additional behavior was added to the diagram. This is also true of the other dynamic views in the model, which still do not address alternative paths, error conditions, and security requirements. Thus, the model remains a Sparse Model, which is consistent with the desired MAL 1.4 level.

Finally, the amount of V&V performed on the model is assessed. The contractor performed V&V similar to that performed before. Therefore, the model is categorized as a Sparsely V&V Model.

Figure 4: Vehicle Control State Chart.

Figure 5: Updated AVGS Class Details.

In summary, the updated model is categorized as a Sparsely V&V Sparse Detailed Design Model, which is a MAL level 1.4. This is consistent with the desired MAL level the government program manager set as a goal for CDR. Now, as they move into the implementation phase, the government can focus their risk reduction resources on the outstanding risks: alternative paths, error conditions, security, and non-functional requirements.
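The comparison of an assessed MAL against a milestone goal can be sketched as follows. This is a minimal illustration, assuming that scores written as "level.sublevel" can be ordered first by level and then by sublevel; that ordering is our reading of the scale's "incremental growth" and is not a stated rule. The helper names `parse_mal` and `meets_goal` are hypothetical.

```python
from typing import Tuple


def parse_mal(score: str) -> Tuple[int, int]:
    """Split a score such as '1.4' into (level, sublevel) integers."""
    level, sublevel = score.split(".")
    return int(level), int(sublevel)


def meets_goal(assessed: str, goal: str) -> bool:
    """Assumption: higher level wins; within a level, higher sublevel wins."""
    return parse_mal(assessed) >= parse_mal(goal)


# Scores from the AVGS case study, checked against the CDR goal of 1.4.
cdr_goal = "1.4"
for review, assessed in (("PDR", "1.2"), ("CDR", "1.4")):
    status = "meets" if meets_goal(assessed, cdr_goal) else "below"
    print(f"{review}: MAL {assessed} is {status} the CDR goal of {cdr_goal}")
```

Parsing the score into integers avoids comparing the scores as strings or floating-point numbers, which would misorder any sublevel of ten or above.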

5.3 Case Study Summary

In summary, the use of MALs helped the government program manager to understand what was being modeled, to shape the direction of the model, to understand the current risks, and to effectively use limited resources. Using MALs, the program manager was able to quickly identify risks and determine the best course of action for the program, given their limited budget and resources. Additionally, by using MALs the government program manager was able to understand the progress on the model, as well as the type of progress made between two versions of the model. Finally, the case study illustrated how MALs can be used to set expectations and direction for MBE efforts between contractors and government.

6 NEXT STEPS

The concept of a software MAL scale and scores provides value to program managers and decision makers. However, MAL scores must be determined and applied in a consistent manner in order for the scores to be meaningful. For example, what happens if 60% of the model is at the analysis level and 40% is at the detailed design level? Should it be a level 2.1 or a level 2.4? Opinions on this matter may vary depending on who is performing the assessment. Therefore, next steps include developing a repeatable and quantifiable assessment method so that the interpretation of the MAL scale is consistent regardless of who is performing the assessment. Additionally, tool support to automate the MAL assessment process will be pursued. Automated tool support will make the approach more practical and will provide faster results to decision makers.
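The 60%/40% question can be made concrete with a short sketch showing how different aggregation rules lead to different depth categories for the same model. The three policies below are hypothetical examples chosen for illustration; none of them is proposed here as the assessment method, which remains future work.

```python
from typing import Dict

# Depth categories ordered from lowest to highest level of detail.
DEPTH_ORDER = ["Analysis", "Detailed Design"]


def majority_depth(shares: Dict[str, float]) -> str:
    """Pick the depth that covers the largest share of the model."""
    return max(shares, key=lambda depth: shares[depth])


def conservative_depth(shares: Dict[str, float]) -> str:
    """Pick the lowest depth that is present anywhere in the model."""
    return next(d for d in DEPTH_ORDER if shares.get(d, 0.0) > 0.0)


def optimistic_depth(shares: Dict[str, float]) -> str:
    """Pick the highest depth that is present anywhere in the model."""
    return next(d for d in reversed(DEPTH_ORDER) if shares.get(d, 0.0) > 0.0)


# The example from the text: 60% analysis level, 40% detailed design level.
shares = {"Analysis": 0.6, "Detailed Design": 0.4}
for policy in (majority_depth, conservative_depth, optimistic_depth):
    print(f"{policy.__name__}: {policy(shares)}")
```

For this model, the majority and conservative rules both report an Analysis Model, whereas the optimistic rule reports a Detailed Design Model. Two assessors applying different rules would therefore assign different sublevels, which is the inconsistency a repeatable and quantifiable method is meant to remove.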

Another area of future work is to increase the applicability to agile methodologies, where models may not be developed. Research is needed to determine whether MALs can be effectively applied to models reverse engineered from code. This would be useful on agile efforts where only code is being developed.

Finally, the last area of future work is to expand MALs into the systems engineering space and create a separate scale and assessment method for enterprise models.

7 CONCLUSIONS

In conclusion, there exists a need in industry to help program managers and high-level decision makers understand and manage MBE efforts. This paper describes the first step in establishing a repeatable and concise way to describe models to decision makers using Model Assurance Levels (MALs). MALs provide the means to condense a lot of information about a software model into a single score. Each MAL score has a level of associated risk, which further helps program managers and decision makers make informed decisions about the direction and expectations of the model. MALs are development and acquisition approach agnostic; therefore, they have broad applicability across industry.

REFERENCES

Bencomo, N., Götz, S., and Song, H. (2019). Models@run.time: a guided tour of the state of the art and research challenges. Software & Systems Modeling, pages 1–34.

Chaudron, M. R. (2017). Empirical studies into UML in practice: pitfalls and prospects. In Modelling in Software Engineering (MiSE), 2017 IEEE/ACM 9th International Workshop on, pages 3–4. IEEE.

CMMI Institute, LLC (2018). CMMI Institute. https://cmmiinstitute.com.

European Space Agency (n.d.). ESA Technology Readiness Levels.

European Space Agency (2008). Technology readiness levels handbook for space applications.

Ganesan, D., Lindvall, M., Hafsteinsson, S., Cleaveland, R., Strege, S. L., and Moleski, W. (2016). Experience report: Model-based test automation of a concurrent flight software bus. In Software Reliability Engineering (ISSRE), 2016 IEEE 27th International Symposium on, pages 445–454. IEEE.

Gomaa, H. (2011). Software modeling and design: UML, use cases, patterns, and software architectures. Cambridge University Press.

Gomaa, H. (2016). Real-Time Software Design for Embedded Systems. Cambridge University Press.

Kleppe, A. G., Warmer, J. B., and Bast, W. (2003). MDA explained: the model driven architecture: practice and promise. Addison-Wesley Professional.

Kneuper, R. (2018). Capability Maturity Model Integration for Development (CMMI-DEV).

Liebel, G., Marko, N., Tichy, M., Leitner, A., and Hansson, J. (2018). Model-based engineering in the embedded systems domain: an industrial survey on the state-of-practice. Software & Systems Modeling, 17(1):91–113.

NASA (n.d.). TRL Definitions. https://www.nasa.gov/pdf/458490main_TRL_Definitions.pdf.

NASA (2012). Technology readiness levels. https://www.nasa.gov/directorates/heo/scan/engineering/technology/txt_accordion1.html.

Pettit, R. G., Mezcciani, N., and Fant, J. (2014). On the needs and challenges of model-based engineering for spaceflight software systems. In Object/Component/Service-Oriented Real-Time Distributed Computing (ISORC), 2014 IEEE 17th International Symposium on, pages 25–31. IEEE.

Pettit IV, R. G. and Mezcciani, N. (2013). Highlighting the challenges of model-based engineering for spaceflight software systems. In Proceedings of the 5th International Workshop on Modeling in Software Engineering, pages 51–54. IEEE Press.

Rios, E., Bozheva, T., Bediaga, A., and Guilloreau, N. (2006). MDD maturity model: A roadmap for introducing model-driven development. In European Conference on Model Driven Architecture-Foundations and Applications, pages 78–89. Springer.
