A Systematic Strategy to Teaching of Exploratory Testing using

Gamification

Igor Ernesto Ferreira Costa and Sandro Ronaldo Bezerra Oliveira

Graduate Program in Computer Science, Institute of Exact and Natural Sciences, Federal University of Pará,

Belém, Pará, Brazil

Keywords: Gamification, Learning, Teaching, Education, Exploratory Testing, Ad Hoc Testing.

Abstract: Exploratory Testing (ET) is a growing approach in industry, mainly because of the increasing use of agile practices in the software development process to meet time-to-market demands. However, it is a subject little discussed in the academic context. For this reason, this work aims to use gamification elements as a systematic strategy for teaching ET, in order to keep students strongly engaged and stimulate good performance and, as a result, to train students to use this test approach in industrial and academic contexts.

1 INTRODUCTION

Software testing is an essential activity for software quality assurance, in which the tester can use various methods or techniques to find defects. Among the methods in the literature, the present work focuses on exploratory testing (ET), which Cem Kaner conceptualized in 1983 as a manual test approach that emphasizes the freedom and responsibility of the tester to explore the system (Kaner, 2008). In addition, Cem Kaner, James Bach and the SWEBOK also define it as an approach that treats planning, test design, execution, and interpretation of results as supporting activities performed in parallel during the testing process, thus allowing the tester to acquire knowledge of the program while performing the tests, since the test cases are not established in a pre-defined test plan (Pfahl, 2014).

The points cited above provide flexibility and quick feedback on test results. However, because of the need to make this approach more structured and systematic, and the lack of sufficient documentation to support the management of the test process, test management techniques have emerged to meet these needs (Itkonen and Mantila, 2013). These management techniques also make it easier to inspect test coverage and to track, measure, and manage tests (Bach, 2004).

It was observed that the Session-Based Test Management (SBTM) technique is more widely used and widespread than other techniques, according to the results of a systematic literature mapping (SLM) on the efficiency and effectiveness of ET. For this reason, the present work integrates SBTM into the application and teaching of ET.

Therefore, there is a noticeable need to research the subject of ET, as well as the exploration techniques and the specific management techniques of this approach, in order to improve the understanding of where, when and how it can be applied during the system life cycle. It should be pointed out that, with traditional teaching, students may not absorb many details from theory and a few basic exercises alone, which impairs learning and future professional performance. In these circumstances, teaching with gamification is a fundamental strategy to improve the students' performance. For Werbach and Hunter (2012), gamification is the use of game elements outside their original context to mobilize individuals to act, help, solve problems, interact and promote learning.

One of the greatest benefits of gamification in education is that it provides a systematic structure in which students can visualize the effects of their actions, their learning performance and how it progresses, becoming a facilitator in the relationship between the parties involved in teaching, who are immersed as in a game (Fardo, 2013).

In this context, the present work aims to apply a systematic strategy to the teaching of ET using game elements, where the use of SBTM also helps to systematize the practical application of this test

Costa, I. and Oliveira, S.

A Systematic Strategy to Teaching of Exploratory Testing using Gamification.

DOI: 10.5220/0007711603070314

In Proceedings of the 14th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE 2019), pages 307-314

ISBN: 978-989-758-375-9

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved


approach. The application of ET in the present work focuses on functional testing, specifically of the online test and simulation system SAW (Alcantara, 2018). Functional testing is well suited to the application of ET because, according to the previously performed SLM, ET mainly allows finding graphical user interface (GUI) and usability defects.

2 RELATED WORKS

Initially, a search of the specialized literature was carried out to identify published papers presenting a proposal similar to this study. The search focused on work using gamification to support the teaching and learning of ET; however, no such work was found, so the related work described in this section covers the teaching of testing in general. This highlights the importance, relevance and originality of this study.

Herbert (2016) presents four standards for teaching testing to non-software developers. These standards were extracted from experiments in the testing course for graduate students of the Federal University of Health Sciences of Porto Alegre (UFCSPA). In this context, the functional testing approach and the risk-based strategy were the most feasible to apply, given that the individuals had extensive knowledge of the domain and context of the system under test (SUT). However, the author does not report details of the approach used in teaching (resources, lesson content, tools, etc.); in addition, the standards emphasize the description of concepts and good testing practices.

Benitti (2015) presents an evaluation of learning objects for teaching software testing, using an instructional design matrix for the analysis and design stage and the Wilcoxon test for statistical analysis. These objects compose a systematic structure of contents essential for teaching testing, identified from documentary research on the syllabi of several Brazilian undergraduate courses and on IEEE, MPS.BR and other standards. Although the author subsequently built a tool to aid the teaching of testing following this systematic structure, the applied approach did not involve a specialist to help clarify doubts. This can negatively affect student performance, since the specialist plays an important role by contributing his/her knowledge and technical experience on the subject.

In the work of Valle et al. (2015), an SLM was conducted to identify approaches that aid the teaching of testing. The results indicate that most research on teaching testing involves programming and the use of educational games, focusing mainly on the test case design phase. The authors also identify empirical evaluation as the most frequent way of analysing research results; however, they do not show how this evaluation was carried out. In addition, few studies were found, and most of them presented partial results.

The work of Ribeiro and Paiva (2015) presents an educational game for learning software testing. iLearTest is intended specifically to assist professionals who aim to obtain ISTQB certification; however, all content addresses only the foundation level, based on the syllabus (study material produced by ISTQB).

As can be observed, none of the papers presented addresses the practice of teaching testing using strategies with gamification elements for exploratory testing. Although Benitti (2015) presents a tool to make learning more interactive, Ribeiro and Paiva (2015) present an educational game for learning testing in a playful way, and Valle et al. (2015) show that educational games are frequently observed in research, there is still a noticeable need for a systematic strategy to provide greater student engagement. In this context, the present work differs by presenting a systematic strategy that uses several playful elements to facilitate learning, improve engagement, minimize differences between students and, above all, boost the teaching of ET in the academic context.

3 THE GAME APPROACH

Considering the ET approach, the present work uses gamification elements following the theme of treasure hunting, because the two share similar ideas, for example, exploring areas by creating strategies and solving puzzles to uncover hidden or lost treasure (bugs).

The experiment should be applied as part of the Software Quality course in the postgraduate program in Computer Science of the Federal University of Pará, Brazil. This course is offered every semester to postgraduate students, who can be enrolled as regular students (admitted to the postgraduate program through the selection process) or special students (not yet enrolled in the master's degree). Currently the course is taught by a teacher with extensive academic and professional experience in the field of software engineering; because of this, many of the participating students have a lot of interest in software engineering subjects.

3.1 The Methodology of the Experiment

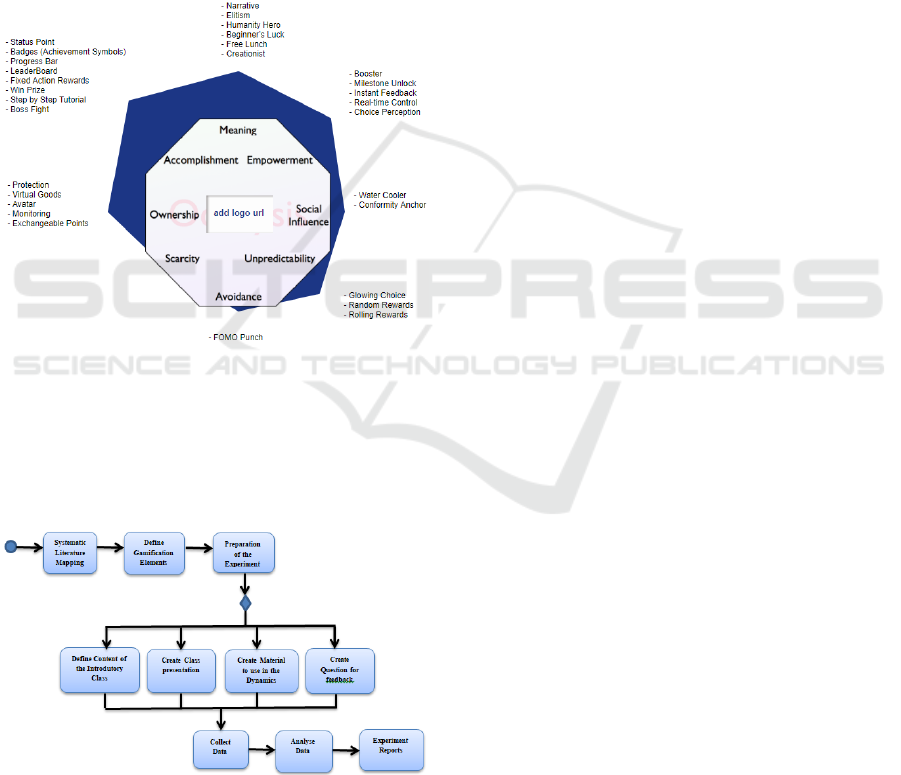

In the gamification planning, the game elements were defined based on the Octalysis gamification framework created by Chou (2015). In Figure 1, the core drives can be observed in the inner part of the octagon and the corresponding elements in the outer part, which are referenced by the blue margin. The blue margin is proportional to the number of elements used for the core drive in question.

Figure 1: Mapping of the game elements.

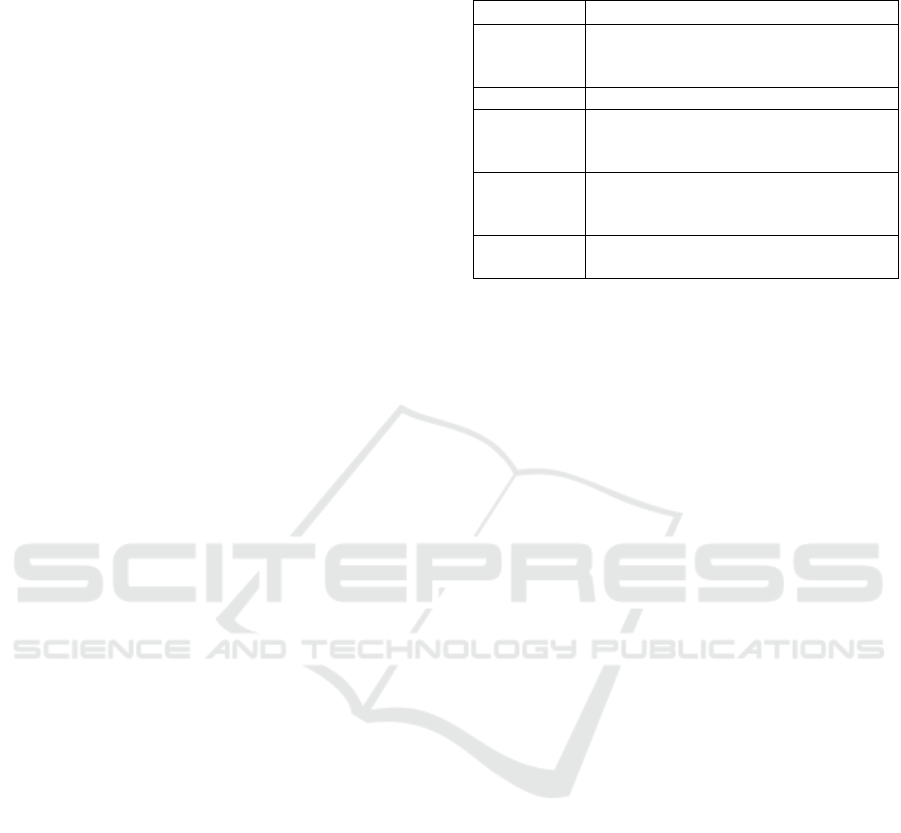

The experiment will be run twice in different classes, and the feedback obtained from the first run should be analysed and used to improve the next one. Figure 2 shows the activities performed, from the conception of the research and planning to the evaluation.

Figure 2: Methodology of the experiment.

3.2 Game Mechanics

According to Zichermann and Cunningham (2011), the act of playing a game involves a set of functional elements that make it possible to guide the actions of the player. These elements are the basis of game design and are understood as the game mechanics. Some of the elements considered primary, which can be combined according to the specifics of the gamification, are: rewards, points, badges, levels and leaderboards.

The basic elements involved in this dynamic are listed below. Note that most element names and stages of the dynamic make analogies to pirate items:

Profile: The three profiles involved in the gamification are: a) Expert: the driver of the gamification, who resolves doubts and analyses the reports; b) Tester: the students, later also considered as a team, since from the "Commands for Treasure Hunting" stage onward teams composed of two students should be formed; c) Judge: the one who must observe whether the students are performing the activities, in order to fill in the scoring table according to the items under analysis.

Activities: The specific actions in each stage that the student should perform.

Scoring: The points attributed to the activities, which the team earns depending on its performance.

Medals: Rewards that the student receives in proportion to the points obtained in the stages.

Gifts: Rewards specifically for the personal benefit of the team, such as books, sweets, etc., which offer no advantage in the dynamic. The gifts are used to stimulate the idea of team superiority.

Bitskull: The coins that students earn by achieving the maximum score in each stage and use to purchase resources.

Cards: The cards are of three types: cards that equip the avatar; cards that give access to resources that aid in the detection of defects; and cards that give an unknown reward.

The advantages offered by the first type of card are: a) Self-defence card: provides a resource (shield, helmet, etc.) to defend against possible attacks by enemies; b) Attack card: provides a weapon to attack enemies; c) Accessory card: provides an accessory for avatar customization.

The advantages of the second type of card are: consulting a system requirement, consulting a part of the system tutorial, consulting the description of an exploration technique, or receiving a tip from the specialist about a defect in the program that is registered in the defects catalogue.

The third type of card can offer any advantage described for the first type of card; in addition, it can offer a certain quantity of bitskull or a gift to the team. However, obtaining one of these advantages depends on solving a puzzle. It should be noted that the resource cards carry a penalty, namely the subtraction of team points. The penalty order, from highest to lowest subtraction of points, is: receiving a tip, consulting the user manual, consulting a requirement and consulting the description of an exploration technique.

Defects Catalogue: A document for consultation by the specialist that contains several defects that have been intentionally inserted into the program under test. The information registered for each defect is: unique identifier, description, route to find it and priority level.
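As an illustration only, the four fields registered for each defect could be held in a small record type. The following is a minimal sketch: the names `CatalogueDefect` and `build_catalogue` are hypothetical, and the three-value priority scale is an assumption borrowed from the prioritization described for the "Discuss Strategies" stage, since the paper does not state the scale used in the catalogue.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogueDefect:
    """One intentionally inserted defect (hypothetical record layout)."""
    identifier: str   # unique identifier
    description: str  # what the defect is
    route: str        # steps the tester follows to reach the defect
    priority: int     # assumed scale: 1 (highest) to 3 (lowest)

    def __post_init__(self):
        if self.priority not in (1, 2, 3):
            raise ValueError("priority must be 1, 2 or 3")


def build_catalogue(defects):
    """Index defects by identifier and reject duplicated identifiers."""
    catalogue = {}
    for defect in defects:
        if defect.identifier in catalogue:
            raise ValueError(f"duplicate identifier: {defect.identifier}")
        catalogue[defect.identifier] = defect
    return catalogue
```

Indexing by identifier makes it straightforward for the specialist to check later whether a reported defect is one of the inserted ones.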

Bonus: A score attributed specifically to participatory actions, which are made up of evaluative items. Some items increase the score and others decrease it. The actions that earn points are: presence, participation, question, suggestion and teamwork; the actions that lose points are: absence, not performing the activity and disrupting the activity.

The following is a description and justification of the items evaluated as participatory actions, for which bonus points can be received or lost:

Presence: The student must be present from the beginning to the end of the activity. This item is important for understanding the dynamic, and consequently being able to participate in it, as well as for stimulating a good relationship between the participants.

Participation: The student should participate by interacting with the specialist or with classmates, commenting and answering questions. This item is important because it indicates that the students are attentive to the dynamic and seeking to engage in the activities.

Suggestion: The student should suggest something that contributes to the gamification. This item indicates that the student is seeking to contribute to the subject in question.

Question: The student should ask questions or seek more details about the subject, approaches, rules and other factors of the dynamic. This item is important because it shows that the student is taking an interest in the dynamic and seeks full understanding in order to participate and act correctly according to the flow of the dynamic.

Teamwork: It is the responsibility of the judge and the specialist to observe whether students on the same team are interacting and cooperating with each other. This item characterizes student engagement in seeking to exchange knowledge.

Missing: The absence of the student from the activities; the team loses points if a member is not present.

Does Not Carry out the Activity: Not performing the activities suggests the student's lack of interest in the dynamic, so the team loses points if its members do not perform the activity, even if they are present in the classroom.

Disrupts the Activity: The team loses points if it harms the performance of the activities or of other teams, because this suggests that the student is inattentive to the activity.
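The bonus for participatory actions can be sketched as a simple tally over the items the judge observes. The paper names the items but not their weights, so the point values below (plus or minus 10) are purely hypothetical placeholders, as are the names `BONUS_POINTS` and `bonus_score`.

```python
# Hypothetical point values: the items come from the text above,
# but the paper does not state how many points each one is worth.
BONUS_POINTS = {
    "presence": 10,
    "participation": 10,
    "question": 10,
    "suggestion": 10,
    "teamwork": 10,
    "missing": -10,
    "does_not_carry_out_activity": -10,
    "disrupts_activity": -10,
}


def bonus_score(observed_items, points=BONUS_POINTS):
    """Sum the bonus points for the items recorded by the judge."""
    unknown = [item for item in observed_items if item not in points]
    if unknown:
        raise ValueError(f"unknown participatory items: {unknown}")
    return sum(points[item] for item in observed_items)
```

For example, under these placeholder values, a team observed with presence, a question and one disruption would end with a net bonus of 10 points.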

Level: There are four levels that the student can achieve, based on the type of avatar. Below are the rules for classifying which avatar level the student reaches:

Activity Avatar: The student must perform the activities specific to each stage and, according to the score obtained, reaches an avatar level. If the student reaches the maximum score in certain activities, he/she should receive medals and bitskull as a reward.

Participatory Action Avatar: The student should always be present and participate in class. The student thus achieves an avatar level related to the performance of his/her participatory actions. A student who achieves the maximum score receives medals and bitskull.

Final Avatar: From the activity avatar and the participatory action avatar, the final avatar of the stage in question is generated. The level achieved in the final avatar depends on the number of medals gained. The final avatar has a weight grade proportional to the level reached, and this weight grade is used to generate the general avatar.

General Avatar: The general avatar is calculated at the end of the dynamic (beginning of the "Reward Pirate Captain" stage) as the arithmetic mean of the weight grades of the final avatars obtained. The general avatar indicates the average performance of the student.

The classification of the activity avatar and the participatory action avatar should occur at all stages except the "Help" stage, because it is a non-mandatory stage that provides help to the student. Regarding the levels, the student can reach any of the four existing ones, which are described below. As already mentioned, the gamification follows the theme of treasure hunting, so the avatar profiles refer to the movie Pirates of the Caribbean.

Level 1: The lowest level, called Marty, because he is like a boarder who is present but remains quiet, not wanting to get involved with the activities and having no special abilities. The weight grade of this level is 1.

Level 2: An intermediate level called Will Turner II, because he represents a navigation master who can work in a team and has skills but is still irresponsible or cannot make good decisions. The weight grade of this level is 2.

Level 3: An intermediate level called Joshamee Gibbs, because he represents a battle officer who manages to make good decisions, exercises leadership, and works well with his team, always interacting responsibly. The weight grade of this level is 3.

Level 4: The highest level, named Jack Sparrow, because he always exercises the leading role, acting strategically, working very well in a team and always showing interest in the dynamic. The weight grade of this level is 4.
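The general avatar calculation described earlier, the arithmetic mean of the weight grades of the final avatars, can be sketched with the four levels above. The names `LEVEL_WEIGHTS` and `general_avatar_grade` are hypothetical; the weights 1 to 4 are the ones stated for each level.

```python
from statistics import mean

# Weight grade of each avatar level, as listed in the level descriptions.
LEVEL_WEIGHTS = {
    "Marty": 1,
    "Will Turner II": 2,
    "Joshamee Gibbs": 3,
    "Jack Sparrow": 4,
}


def general_avatar_grade(final_avatar_levels):
    """Arithmetic mean of the weight grades of the final avatars obtained."""
    if not final_avatar_levels:
        raise ValueError("at least one final avatar is required")
    return mean(LEVEL_WEIGHTS[level] for level in final_avatar_levels)
```

A student whose final avatars were Will Turner II, Joshamee Gibbs and Jack Sparrow would have a general avatar grade of (2 + 3 + 4) / 3 = 3. How a non-integer mean maps back to one of the four levels is not stated in the paper, so the sketch returns only the mean.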

Figure 3 shows the complete flow of the gamification, which begins with "Pirate Training" and ends with "Reward Pirate Captain", where the students must reach the level of pirate captain to have the opportunity to find the treasure. In this flow, it is important to observe that, before entering the winners' award stage, three iterations should occur of the cycle that starts at the "Treasure Hunt" stage and continues until "Buy Resources".

The flow has the following chronological order (see Figure 3).

Pirate Training: The students should attend 4 hours of introductory classes, divided over two days, on the concepts of testing (see Table 1). Before the classes start, the students must complete an initial form about the subject, and at the end of the classes they take an evaluation of all the content presented. This is intended to provide the specialist with data to identify the students' degree of knowledge before and after the classes.

During the classes, three reinforcement exercises are applied to improve understanding. Thus, the specific activities of this stage are: completion of the initial form, resolution of the exercises and a final test. To stimulate good performance, the student receives medals and bitskull on reaching the maximum score in the exercises and in the test; however, the student is penalized for not filling out the form.

Table 1: Contents of the introductory class based on IEEE standards.

| Topic | Content |
|---|---|
| Software Testing Foundation | Terminology, relation to other areas, key questions |
| Test Level | Where to apply it, goal |
| Test Techniques | Structural vs functional, expertise-based test, requirement-based test, risk-based test, usability testing, etc. |
| Metrics Regarding Testing | SUT evaluation, testing evaluation |
| Testing Process | Questions regarding management, activities |

Commands for Treasure Hunting: In approximately 15 minutes, the specialist must present the purpose and rules of the dynamic, as well as briefly narrate a story about the lost treasure so that students feel immersed in the pirates' world. In addition, the specialist should announce the pre-defined teams and provide all the materials needed to start the treasure hunting dynamic. The materials are: leaderboard, cards, program under test, installation tutorial, etc. Each pre-defined team also has a name that refers to a historically known pirate.

The teams are pre-defined based on the analysis of data from the activities in the introductory classes, so as to form balanced teams, that is, to pair two students where one has shown a higher level of knowledge than the other. At this stage only the participatory actions are observed, because the stage only requires concentration and attention to the specialist's explanation.
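One possible way to form such balanced pairs is to rank the students by their results in the introductory classes and pair the highest-ranked with the lowest-ranked. This is a sketch under assumptions: the paper does not specify the pairing procedure, the function name `form_balanced_pairs` is hypothetical, and an even number of students is assumed.

```python
def form_balanced_pairs(scores):
    """Pair students so each team joins a stronger and a weaker student.

    `scores` maps a student name to a score from the introductory
    classes. Returns a list of (stronger, weaker) tuples.
    """
    if len(scores) % 2 != 0:
        raise ValueError("this sketch assumes an even number of students")
    # Rank from highest to lowest score, then pair first with last,
    # second with second-to-last, and so on.
    ranked = sorted(scores, key=scores.get, reverse=True)
    half = len(ranked) // 2
    return [(ranked[i], ranked[-1 - i]) for i in range(half)]
```

With scores Ana 9, Caio 7, Davi 6 and Bia 4, the sketch yields the teams (Ana, Bia) and (Caio, Davi).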

Outfit the Pirate: The students must complete two basic activities in approximately 10 minutes: a) Set up the test environment: install the program under test, strictly following the installation tutorial; b) Customize avatar: request accessory cards twice; however, the team has one more opportunity to choose if it has obtained 10 or more medals. The purpose of the third opportunity is to stimulate student participation in the previous stages, since whoever has the avatar with the most accessories at the end of the game should receive a gift. In this context, the specific activity of this stage that must be observed is the fulfilment of the steps established in the installation tutorial, in addition to the analysis of the participatory actions.

Treasure Hunt: The students should focus on exploring the program in order to find as many defects as possible within 30 minutes.


Figure 3: Gamification flow.

The time reserved for this stage is based on the type of test session in the SBTM technique called "short". During the exploration, the team can opt to purchase resource cards to facilitate the detection of defects. The team may make only three requests and, from the second request onward, the value of the card is doubled. It is emphasized that, as one student finds defects, the other must immediately register them in the defect report.

If the team finds a defect with 3 minutes remaining in this stage, it must immediately inform the specialist, and 2 minutes will then be added for recording the defect. The evaluative items are thus: a) Time compliance: registering the defects found within the established time indicates that the team has control of time; b) Defects found: at this moment only the number of defects is counted. The purpose of the resource cards is to avoid questions to the specialist, so that the team stays focused on defect detection.

The team should receive an extra score if it detects more than 5 defects. For example, if the team finds 8 defects, that is 3 defects above 5, so the team should receive 30 bonus points. This bonus stimulates the detection of defects and also serves as recognition of good performance.
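The two numeric rules of this stage can be sketched as follows. Two assumptions are made: the rate of 10 points per defect above the threshold is inferred from the single worked example (8 defects giving 30 points), and "doubled from the second request" is read as a flat doubling of the base price for the second and third requests. The function names are hypothetical.

```python
def detection_bonus(defects_found, threshold=5, points_per_extra=10):
    """Bonus for each defect found above the threshold.

    points_per_extra=10 is inferred from the example in the text
    (3 defects above 5 earn 30 points); it is not stated explicitly.
    """
    return max(0, defects_found - threshold) * points_per_extra


def card_cost(base_price, request_number, max_requests=3):
    """Price of a resource card for the given request (1-based).

    Assumes a flat doubling from the second request onward; the text
    could also be read as doubling again on each later request.
    """
    if not 1 <= request_number <= max_requests:
        raise ValueError("only three requests are allowed per session")
    return base_price if request_number == 1 else base_price * 2
```

Under these assumptions, a team that finds 8 defects earns 30 bonus points, while a team that finds 5 or fewer earns none; a card with a base price of 4 bitskull costs 4 on the first request and 8 on the second and third.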

Help: This stage is optional and gives the team the opportunity to acquire self-help resources to assist in defect detection. The cards carry a penalty if the team requests them in the first 10 minutes of the test session, because this suggests that the team does not want to make an effort to use its creativity in defect detection. On the other hand, a request in the final minutes indicates that the team may be having difficulties and thus needs help to boost its creativity in exploring the program. The time of this stage is included in the "Treasure Hunt" stage.

Discuss Strategies: The team has an estimated 5 minutes to analyse and discuss the strategies used in the test session; in addition, the team should prioritize the defects found. The discussion of strategies is an activity inherent to the SBTM technique and takes place after the test session. Regarding the prioritization of defects, there are three levels, where 1 is the highest priority. At this point, the judge should only check that all defects recorded in the defect report have been prioritized.

If the team prioritizes all the defects recorded, it receives the maximum score and consequently receives medals and bitskull as a reward; otherwise, the team receives only half of the points and no rewards. The objective of this bonus and/or penalty is to keep students attentive so that they do not forget to prioritize each registered defect.
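This all-or-half rule can be sketched as a small function. The maximum score is left as a parameter because the paper does not state its value, the function name `completion_score` is hypothetical, and the case of a report with no defects is not covered by the paper, so the sketch rejects it.

```python
def completion_score(items_done, items_total, max_score):
    """Return (points, rewarded) for an all-or-half scoring rule.

    Full points plus rewards (medals and bitskull) when every recorded
    defect was handled; otherwise half of the points and no rewards.
    """
    if items_total <= 0 or not 0 <= items_done <= items_total:
        raise ValueError("expected 0 <= items_done <= items_total and items_total > 0")
    if items_done == items_total:
        return max_score, True
    return max_score / 2, False
```

For a hypothetical maximum of 100 points, prioritizing 6 of 6 recorded defects gives 100 points with rewards, whereas prioritizing 4 of 6 gives 50 points without rewards.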

It should be noted that the defect report is a document inherent to the SBTM technique; it is used because it is fundamental to have some record of the test process for further analysis.

Battle: The teams must exchange defect reports with another team. Each team has 30 minutes to analyse the following aspects: a) Prioritization: write a justification if it disagrees with the priority level of the defects defined in the other team's report; b) Clarity: analyse whether the script of each registered defect is well written, that is, free of ambiguous words, wrong words and incomplete sentences; c) Reproducibility: check whether each defect can be reproduced using only its registered script; otherwise, the team should highlight the inconsistencies found in the script.


The result of the analysis of the three aspects is described in the analysis report. At the end of the analysis, the team gives an overall grade to the defect report analysed and must justify this grade; afterwards, the reports are delivered to the expert, who evaluates both of them.

At this stage the specialist and the judge should only check whether the defects contained in the defect report were analysed by the assessing team. If all the defects were analysed, the team receives the maximum score plus medals and bitskull as a reward; otherwise it receives only half of the possible points and no reward. The purpose of this bonus and/or penalty is to keep the team attentive to the details of the information in the reports.

Validate the Results: The specialist should evaluate both reports: a) the defect report; b) the analysis report. In the first, the specialist evaluates whether the defects found are in the defects catalogue: if a defect is present, the team receives a certain score; otherwise it receives a higher score, because the defect was certainly not purposely inserted. In the second, the specialist must evaluate whether the three aspects were well analysed, whether the defects are not false positives and whether the overall grade and justification are coherent. The specialist should give an overall grade to the defect report and compare it with the grade suggested by the assessing team, so the team should receive a score according to the specialist's grade and should also receive a score for the analysis performed.
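The catalogue check for the defect report can be sketched as below. The paper says only that defects outside the catalogue earn a higher score than catalogued ones, so the values 10 and 20 are hypothetical, as is the function name. The sketch also assumes that the specialist has already matched each reported defect to a catalogue identifier where one exists and has discarded false positives.

```python
def score_defect_report(reported_ids, catalogue_ids,
                        seeded_points=10, unseeded_points=20):
    """Score confirmed defects against the defects catalogue.

    Defects present in the catalogue earn `seeded_points`; confirmed
    defects absent from it earn the higher `unseeded_points`.
    Both values are placeholders, not taken from the paper.
    """
    if unseeded_points <= seeded_points:
        raise ValueError("defects outside the catalogue must score higher")
    seeded = sum(1 for defect in reported_ids if defect in catalogue_ids)
    unseeded = len(reported_ids) - seeded
    return seeded * seeded_points + unseeded * unseeded_points
```

With a catalogue containing D1, D7 and D9, a report listing D1, D7 and a new defect X1 would score 10 + 10 + 20 = 40 under these placeholder values.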

Carrying out this evaluation requires more time and attention from the specialist, so it is an extra-class activity, since the specialist should organize the results to present them in detail in the classroom in approximately 30 minutes. In this context, the specialist's grade, the assessing team's grade and the coherence of the analysis of the three aspects are observed. As the team cannot receive points for participatory action items at this stage, it receives a bonus according to its performance in the activities. It is important to carry out this assessment because students can observe how to improve their analysis.

Pirate Highlight: Approximately 15 minutes are allotted for the specialist to reward the highlights of the "Battle" stage. The team receives a gift and the opportunity to choose an accessory card or an unknown reward card; however, the team must solve a puzzle before drawing this card, and if the answer is wrong the team receives only the gift. It is important to reward the highlights so that students perceive that they are performing well.

Buy Resources: The team must request the purchase of two cards using bitskull; only the unknown reward card is not allowed, because this buying activity aims to prepare the team for the next testing session by equipping the avatar or acquiring resources to aid in the detection of defects. However, before drawing these cards, the team must solve a riddle. It is important for the students to prepare themselves for the next testing session, because this is a way to stay competitive with the goal of winning the game. The time for this stage is estimated at 15 minutes.

Reward Pirate Captain: The specialist must reward the team that reached the level of pirate captain in the general avatar. Before the awards start, the team must solve three riddles, whose answers lead to the treasure hidden within the classroom. The purpose of having a treasure within the classroom is to provide the feeling of immersion in the world of treasure hunting and also to stimulate engagement through playful artefacts.

It is emphasized that all the riddles are about the content of the introductory classes, except those at the winners' award stage, which should direct the team to places where the treasure can be found within the classroom. Another observation is that the whole dynamic must be performed over 7 class days, according to Table 2, where each class day lasts 2 hours; for example, the "Pirate Training" stage takes 4 hours of introductory classes.

Table 2: Execution planning of the stages.

| Day | Stage |
|---|---|
| 1st, 2nd | Pirate Training |
| 3rd | Commands for Treasure Hunting, Outfit the Pirate, Treasure Hunt, Help, Discuss Strategies, Battle |
| 4th, 5th, 6th | Evaluate Results, Pirate Highlight, Buy Resources, Treasure Hunt, Help, Discuss Strategies, Battle |
| 7th | Reward Pirate Captain, Feedback |
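As a rough planning aid, the approximate stage durations given in the stage descriptions can be added up per class day and compared with the 2-hour class. This is only a sketch: the durations are the approximate figures from the text, "Help" is counted as zero because its time is included in the treasure hunt, the 30 minutes for "Evaluate Results" is the in-class presentation time, and the 7th day is omitted because no durations are given for it. The names used are hypothetical.

```python
CLASS_MINUTES = 120  # each class day lasts 2 hours

# Approximate durations in minutes, taken from the stage descriptions.
STAGE_MINUTES = {
    "Commands for Treasure Hunting": 15,
    "Outfit the Pirate": 10,
    "Treasure Hunt": 30,
    "Help": 0,  # included in the Treasure Hunt time
    "Discuss Strategies": 5,
    "Battle": 30,
    "Evaluate Results": 30,  # in-class presentation of the results
    "Pirate Highlight": 15,
    "Buy Resources": 15,
}

DAY_PLAN = {
    "3rd": ["Commands for Treasure Hunting", "Outfit the Pirate",
            "Treasure Hunt", "Help", "Discuss Strategies", "Battle"],
    "4th-6th": ["Evaluate Results", "Pirate Highlight", "Buy Resources",
                "Treasure Hunt", "Help", "Discuss Strategies", "Battle"],
}


def planned_minutes(stages):
    """Total of the approximate durations for the given stages."""
    return sum(STAGE_MINUTES[stage] for stage in stages)


def slack_minutes(day):
    """Minutes left in the class (negative if the plan runs over)."""
    return CLASS_MINUTES - planned_minutes(DAY_PLAN[day])
```

With these figures the 3rd day adds up to 90 minutes and each of the 4th to 6th days to 125 minutes, so on those days the estimates leave essentially no slack in a 2-hour class; since all durations are stated as approximate, some stages would simply need to run slightly shorter.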

4 EXPECTED RESULTS

As a result of this work, a satisfactory level of learning is expected, based on student performance and considering the potential of the gamification approach, in which students are encouraged to stay engaged and motivated to be present and participatory, interacting with each other, answering questions from the specialist and contributing to the subject.


In order to verify the expected results, this work should compare the data of four activities: the initial forms, the exercises, the test on the content of the theoretical classes, and the feedback. This comparative and qualitative analysis is fundamental to understand the unresolved gaps, the students' level of progression, points of improvement, criticisms, suggestions and contributions, points that were well treated, as well as to evaluate the didactics and other factors related to the process of ET.

As a result, the students are also expected to understand the subjects and dynamics well enough to achieve good performance and, finally, to be prepared to act in testing procedures by applying ET in academic and professional contexts.

5 CONCLUSIONS

This study described a systematic strategy for teaching ET using the gamification approach. Initially, this experiment should be applied twice, in different postgraduate classes, in order to improve students' engagement and maintain good performance in classes related to software engineering, especially software testing. In addition, this study is important because it contributes to the diffusion of the subject and encourages further research on the teaching of ET in the academic context; depending on the results, it may also be applied in the professional context.

The preparation of these undergraduate students in ET aims at balancing their levels of knowledge on this subject, as well as providing sufficient aptitude to act in the industrial context, given that there is a great lack of professionals specialized in software testing.

REFERENCES

Alcantara, et al., 2018. SAW: Um Sistema de Geração

de Simulados e Avalições para Auxilio no Ensino e

Aprendizado. XXIII conferência Internacional sobre

Informatica na Educação (TISE). ISBN: 978-956-19-1111-6.

Bach, J., 2004. Exploratory Testing. In: The Testing

Software Engineer, 2nd ed., E. van Veenendaal (Ed.)

Den Bosch: UTN publisher, pp. 253-265.

Benitti, F., 2015. Avaliando Objetos de Aprendizagem

para o Ensino de Teste de Software. Nuevas Ideas en

Informática Educativa TISE. Available at: <http://www.tise.cl/volumen11/TISE2015/584-589.pdf>.

Chou, Y., 2015. Actionable Gamification - Beyond

Points, Badges, and Leaderboards. Octalysis Media.

Fardo, M. F., 2013. A Gamificação Aplicada em

Ambientes de Aprendizagem. Renote- Novas

Tecnologias na Educação. 11.

Herbert, J., 2016. Patterns to Teach Software Testing to

Non-developers. Universidade Federal de Ciências

da Saúde de Porto Alegre. SugarLoafPLoP

'16 Proceedings of the 11th Latin-American

Conference on Pattern Languages of Programming.

Available at: <https://dl.acm.org/citation.cfm?id=3124362.3124374>.

IEEE Computer Society, 2004. SWEBOK - Guide to the Software Engineering Body of Knowledge.

Itkonen, J., Mäntylä, M. V., 2013. Are test cases needed? Replicated comparison between exploratory and test-case-based software testing. Empirical Software Engineering, pp. 1-40. DOI: 10.1007/s10664-013-9266-8.

Kaner, C., 2008. A Tutorial in Exploratory Testing.

QUEST 2008. Available at:

<http://www.kaner.com/pdfs/QAIExploring.pdf>.

Pfahl, D. et al., 2014. How is Exploratory Testing Used?:

A state of the Practice Survey. ESEM’14, September

18-19, 2014, Torino, Italy. Copyright 2014 ACM

978-1-4503-2774-9/14/09.

Ribeiro, T., Paiva, A., 2015. iLearnTest - Jogo Educativo

para Aprendizagem de Teste de Software. Atas da

10ª Conferencia Ibérica de Sistemas y Tecnologías

de la Información (CISTI'2015). Universidade do

Porto. Available at: <https://repositorio-aberto.up.pt/bitstream/10216/75914/2/31898.pdf>.

Valle, P., et al., 2015. Um Mapeamento Sistemático

Sobre Ensino de Teste de Software. Anais do XXVI

Simpósio Brasileiro de Informática na Educação

CBIE-LACLO 2015. DOI:

10.5753/cbie.sbie.2015.7171.

Werbach, K., Hunter, D., 2012. For The Win: How Game Thinking Can Revolutionize Your Business. Philadelphia, Pennsylvania: Wharton Digital Press.

Zichermann, G., Cunningham, C., 2011. Gamification by Design. O'Reilly & Associates Inc. Available at: <http://scholar.google.com/scholar?hl=en&btnG=Search&q=intitle:Gamification+by+Design#3>.

ENASE 2019 - 14th International Conference on Evaluation of Novel Approaches to Software Engineering
