Animal Observation Support System based on Body Movements:

Hunting with Animals in Virtual Environment

Takaya Iio¹, Yui Sasaki², Mikihiro Tokuoka¹, Ryohei Egusa³, Fusako Kusunoki⁴, Hiroshi Mizoguchi¹, Shigenori Inagaki² and Tomoyuki Nogami⁵

¹ Department of Mechanical Engineering, Tokyo University of Science, 2641 Yamazaki, Noda-shi, Chiba-ken, Japan

² Department of Developmental Sciences, Kobe University, Hyogo, Japan

³ Department of Education and Child Development, Meiji Gakuin University, Tokyo, Japan

⁴ Department of Computing, Tama Art University, Tokyo, Japan

⁵ Professor Emeritus, Kobe University, Hyogo, Japan

Keywords: Ecological Learning, Body Movement, Kinect Sensor, Immersive Reality, Virtual Reality, Zoo.

Abstract: We have developed a preliminary learning support system for zoos with which children can learn about the ecologies of animals while moving their bodies. For children, the zoo is a place of learning where they can observe live animals carefully and learn about their ecology. However, some animals are exceptionally difficult to observe carefully, which makes such learning challenging. Therefore, in this research, we developed a learning support system that helps learners efficiently acquire knowledge about the ecologies of animals that are difficult to observe in zoos. The system uses a sensor to measure the body movements of the learner, and a virtual animal responds to these movements. In this way, the animal can be observed carefully in a virtual environment, and ecological learning is supported through virtual contact. As the first step towards realizing a learning support system for ecological learning through animal observation in zoos, we developed a prototype and describe the results of an experiment evaluating its usefulness.

1 INTRODUCTION

Every year, hundreds of millions of people

worldwide visit zoos, and most of these visitors are

children (Wagoner and Jensen, 2010). For children

visiting these zoos, the zoo is an important place of

learning outside the classroom where they can engage

with live animals (Wagoner and Jensen, 2010; Allen,

2002). The primary reason why the zoo is a popular

place of learning is that one can learn about animals

and their ecologies in a more detailed manner by

observing live animals in their own environments

(Braund and Reiss, 2006; Mallapur, Waran and Sinha,

2008). Therefore, it is quite meaningful for children

to observe animals in zoos. However, some live animals are difficult to observe for any length of time, for example because they move fast or remain hidden. In such cases, children cannot carefully observe the animals or learn efficiently about their ecologies. This challenge has to be addressed in order to improve the quality of learning. Various studies that support such learning have been reported, most of them using tablets and mobile phones. For instance, a penguin’s movement is difficult to observe when it is moving fast; a previously reported study helped children learn about the ecology of penguins by showing an animation of a slowly moving penguin on a tablet (Tanaka et al., 2017). Animals of small stature are generally difficult to detect and observe, and Ohashi, Ogawa and Arisawa (2008) used mobile phones to impart knowledge about such animals. These learning systems provide opportunities to acquire knowledge even if the actual animals are difficult to observe. However,

these systems have challenges as well. Systems based on electronic devices such as tablets and mobile phones require relatively little work to build, but the learning experience they offer children is limited. They provide little opportunity to actually engage with animals and fall short of the original purpose of observing living animals. Therefore, a system that addresses these problems is required: one that allows children at the zoo to observe lifelike animals at close range in a virtual environment. There is a need for a learning support system that helps learners efficiently acquire ecological knowledge, such as “what the animal eats” or “why it acts in a certain way”, by improving learning motivation via observation and touch.

Iio, T., Sasaki, Y., Tokuoka, M., Egusa, R., Kusunoki, F., Mizoguchi, H., Inagaki, S. and Nogami, T.
Animal Observation Support System based on Body Movements: Hunting with Animals in Virtual Environment.
DOI: 10.5220/0007753404210427
In Proceedings of the 11th International Conference on Computer Supported Education (CSEDU 2019), pages 421-427
ISBN: 978-989-758-367-4
Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

Visitors to zoos generally want to experience meaningful contact with the animals (Luebke et al., 2016). Research suggests that children exercise their ingenuity and become immersed when they are playing (Dau and Jones, 1999; Levin, 1996). When children use gestures and movements, the learning environment becomes more natural (Grandhi, Joue and Mittelberg, 2011; Nielsen et al., 2004; Villaroman, Rowe and Swan, 2011), and children are able to retain more of the knowledge being taught (Edge, Cheng and Whitney, 2013; Antle et al., 2009).

Based on these ideas, our work provides an opportunity to virtually experience contact with animals. We developed a learning support system in which learners study ecology while moving their bodies, with the aim of enhancing emotional engagement and the feeling of immersion in the virtual environment. In this system, a sensor acquires information about the person's movement, and the experience is driven by this information. The content is spread across multiple screens in the learner's field of vision and aims at realistic experiences with animals that are otherwise difficult to touch directly. The learner's own figure is also shown in this realistic virtual space, so that physical actions can serve as acts of contact. By improving the feeling of immersion in the virtual space and simulating an actual experience, the system is expected to help learners learn about the ecologies of animals and to improve their learning motivation. Through experiencing this virtual learning system and observing animals, we expect learners to achieve more effective learning of ecology.

In this paper, we describe the results of

evaluating the usefulness of the proposed system by

developing and evaluating prototypes as the first

step towards realizing a learning support system

that achieves ecological learning of animal

observations in zoos. The evaluation experiments

were conducted at the Hyogo Prefectural Oji Zoo.

2 BODY MOVEMENT BASED ANIMAL OBSERVATION SUPPORT SYSTEM

2.1 Concept of System

We aim to support the observations of animals in

zoos, and we have developed a "body movement

based animal observation support system" as an

observation support system to improve learning

motivation and its effect on better understanding

animals by using body movements. Among the

animals that are difficult to observe, we have created

a system with the theme “Wildcat”. A wildcat is a

carnivorous mammal that is associated with the

following features.

·

Lives in grasslands and forests

·

Hunts birds by jumping to great heights

·

Vigilant, has a strong heart, and interacts

poorly with human beings

·

Hiding behind grass

From the view of these characteristics, a wildcat

lives in grasslands and forests and is an animal having

excellent jumping capabilities to hunt birds. The

ecological environment changes the form of jumping

depending on where the birds are located during

hunting. If the bird is in the same height horizontally

from the wildcat, the wildcat jumps in the horizontal

direction to hunt. When a bird is at a higher position

from the wildcat, the wildcat jumps vertically to hunt.

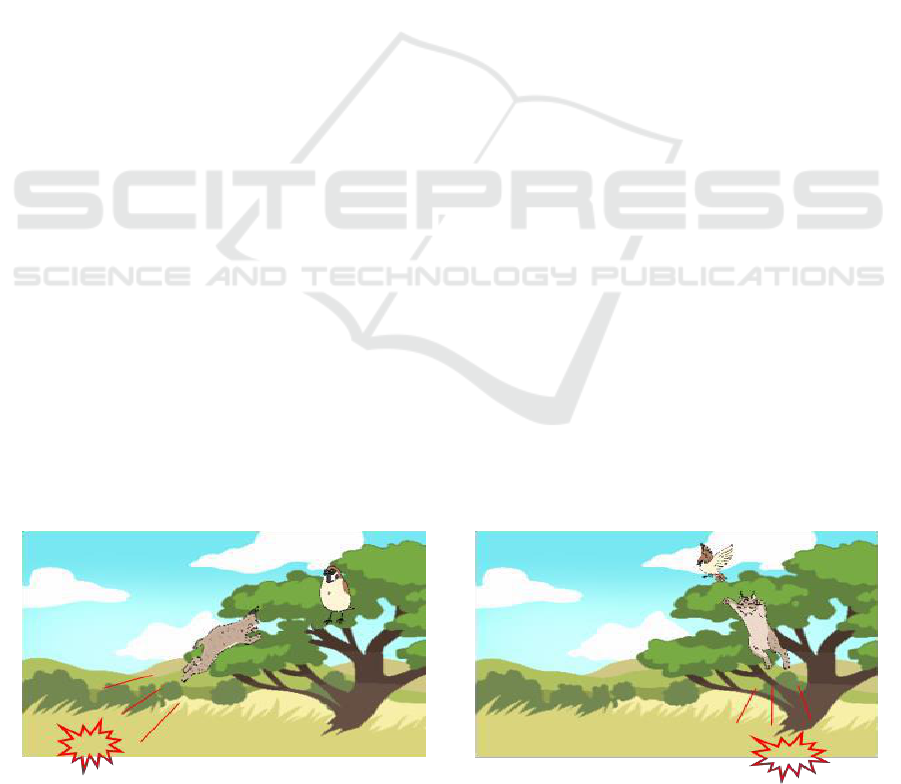

Fig. 1 is a concept diagram showing how the wildcat

jumps while hunting birds. However, the wildcat is

strong and alert and not good at interacting with

people. In addition, the wildcats hide behind grass.

Figure 1: Example of a jumping wildcat whose purpose is to hunt a bird.

CSEDU 2019 - 11th International Conference on Computer Supported Education


Therefore, the wildcat is difficult to observe even in the zoo, and it is correspondingly difficult to learn about its ecology through observation. We considered learning support for the wildcat's behavior of jumping to hunt birds, which is difficult to observe in zoos, and developed a system with which users observe wildcat jumps while moving their own bodies.

2.2 Necessary Functions

A learning support system that efficiently assists the learning of ecology should let the user do four things: become immersed in the environment where the wildcat lives, move their body together with the wildcat, touch the wildcat, and observe the ecology of the wildcat. To realize these features, the following four functions are necessary:

(a) A function that projects the experiencer (hereafter, the user) into the virtual environment to enhance the immersive feeling.

(b) A function that allows the user to select the type of jump through body movement.

(c) A function by which the wildcat moves in conjunction with the user's body movement in the virtual environment for efficient interaction.

(d) A function by which the user observes the ecology of a wildcat catching birds in a virtual environment for observation and learning.

By realizing (a), the user is projected into the virtual environment in which the wildcat is located and feels immersed in that environment.

By realizing (b), the user can choose which hunting behavior to learn about: the wildcat hunting birds in high places or in distant places. By selecting the type of jump, the user also comes to understand that the system is operated through body movement.

By realizing (c), the user learns how to operate the system with physical movements. Because the movement of the animal projected on the screen corresponds to the user's own body movement, it is expected to be perceived as the realistic movement of a living creature rather than that of a virtual one. Further, because the wildcat moves within the virtual environment, it is possible to touch the virtual wildcat.

By realizing (d), the user can observe and learn about the wildcat's behavior of jumping to hunt birds, which is otherwise difficult to observe in real life at the zoo.

2.3 Implementation

The authors used Microsoft’s Kinect sensor to

realize the four functions. Microsoft’s Kinect sensor

is a range image sensor originally developed as a

home video game device. The sensor records

advanced measurements with regard to the user’s

position. In addition, this sensor uses the software

development kit library for Kinect Windows to

recognize humans and human skeletons. Kinect

measures the position of a human body part such as

hands and feet and uses this function and position

information to identify the user’s pose and state.

Next is the extraction of the human region. In

Kinect’s human body database, various human body

posture patterns are realized through machine

learning. This is achieved by identifying the body

parts from the database.

This system comprises Microsoft’s Kinect sensor,

a computer for controlling the Kinect sensor, and a

projector projecting on the screen based on data from

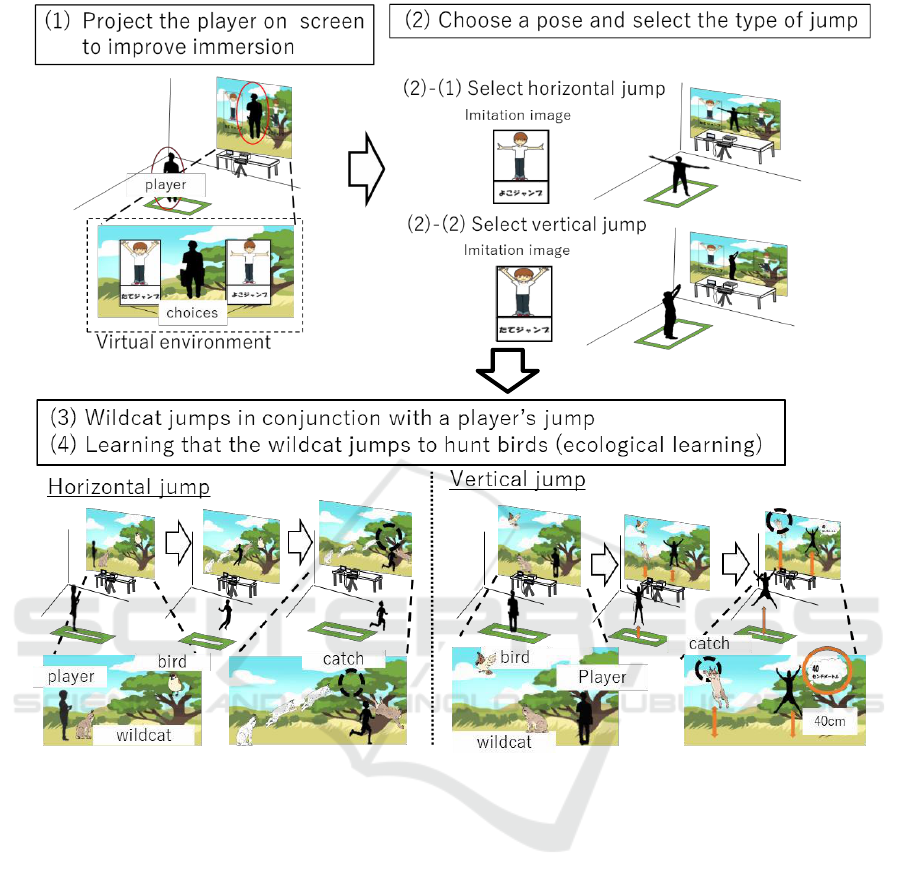

the computer. Fig. 2 shows a schematic of an actual

system.

Next, the flow of the system is explained. When the user stands in front of the sensor, the sensor recognizes the user's skeleton, and the system projects the figure of the user in the virtual environment. Fig. 3 (1) shows this state.

The user imitates the images projected on the screen using body movements. The sensor recognizes the user's posture, and the screen switches according to the type of jump selected. As the screen switches, a wildcat and birds appear. Depending on the type of jump selected, the birds are placed either above the wildcat or horizontally away from it on the screen. In this way, the environment in which the wildcat hunts birds is simulated. Fig. 3 (2) shows this state.
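The placement rule described here can be illustrated with a short Python sketch. This is not the authors' implementation: the names `JumpType`, `Point`, and `place_bird`, the screen coordinate convention, and the default offset are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class JumpType(Enum):
    """The two jump types a user can select (names are hypothetical)."""
    VERTICAL = "vertical"      # bird is placed above the wildcat
    HORIZONTAL = "horizontal"  # bird is placed level with the wildcat, farther away


@dataclass(frozen=True)
class Point:
    x: float  # horizontal screen position (assumed unit: pixels)
    y: float  # vertical screen position, larger values are higher (assumption)


def place_bird(jump_type: JumpType, wildcat: Point, offset: float = 300.0) -> Point:
    """Return a bird position relative to the wildcat for the selected jump type.

    `offset` is an illustrative distance, not a value taken from the paper.
    """
    if jump_type is JumpType.VERTICAL:
        return Point(wildcat.x, wildcat.y + offset)
    return Point(wildcat.x + offset, wildcat.y)
```

Keeping the jump type as an enumeration means the same value can drive both the scene layout and the later success check for the jump.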

Figure 2: Outline of the actual system.


Figure 3: System flow.

When the user jumps toward the bird from where they are standing, the wildcat moves according to the user's jump. The sensor obtains the position coordinates of the user's ankle, from which the height and width of the jump are determined.

When the user jumps higher than a certain height or farther than a certain width, the wildcat on the screen hunts the bird, and the birds placed on the screen disappear. The user can thus learn by observing a lifelike virtual wildcat while moving their body as if they were the wildcat jumping to catch birds. When the user jumps toward a bird placed overhead, the height of the user's jump is displayed on the screen. When the user jumps toward birds placed horizontally, the trajectory of the jumping wildcat is displayed. These states are shown in Fig. 3 (3) and 3 (4).
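A minimal Python sketch of this jump check follows. It assumes the ankle positions have already been read from the sensor as a sequence of (x, y) samples in metres, with the first sample taken while standing. The names `AnkleSample`, `measure_jump`, and `hunt_succeeds` and the threshold values are hypothetical; the paper does not state the thresholds or how the baseline is chosen.

```python
from typing import NamedTuple, Sequence, Tuple


class AnkleSample(NamedTuple):
    x: float  # horizontal ankle position in metres (assumed unit)
    y: float  # ankle height in metres (assumed unit)


def measure_jump(samples: Sequence[AnkleSample]) -> Tuple[float, float]:
    """Return (height, width) of a jump from a sequence of ankle positions.

    The first sample is treated as the standing baseline (an assumption).
    """
    if not samples:
        raise ValueError("at least one ankle sample is required")
    base = samples[0]
    height = max(s.y - base.y for s in samples)
    width = max(abs(s.x - base.x) for s in samples)
    return height, width


def hunt_succeeds(samples: Sequence[AnkleSample], jump_type: str,
                  min_height: float = 0.20, min_width: float = 0.50) -> bool:
    """Decide whether the wildcat catches the bird.

    The thresholds are illustrative placeholders, not values from the paper.
    """
    height, width = measure_jump(samples)
    if jump_type == "vertical":
        return height > min_height
    if jump_type == "horizontal":
        return width > min_width
    raise ValueError(f"unknown jump type: {jump_type!r}")
```

The measured height can also be shown to the user after a vertical jump, as the system flow describes.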

3 EVALUATION EXPERIMENT

3.1 Method

Participants: The participants were 20 first-grade students (12 girls and 8 boys) of an elementary school attached to a national university corporation.

Place: Hyogo Prefectural Oji Zoo. Date: October 18, 2018.

Evaluation: After individually experiencing the system, which was set up in the zoo’s hall, the participants evaluated it. The evaluation covered three viewpoints: immersion in and attention to the system, attention to the wildcat, and ecological learning about the wildcat. The first two were evaluated with a questionnaire, and the third with a free-response question. These questions

were adjusted according to the participants. The

responses to the questionnaire were classified based

on a five-point Likert scale.

3.2 Results

Among the responses obtained, we classified “Strongly Agree” and “Agree” as positive responses and “Neutral”, “Disagree”, and “Strongly Disagree” as neutral or negative responses. The significance of the bias between the numbers of responses in the two classes was examined by a 1 × 2 direct probability calculation. For reverse items (those denoted by “(-)”), the analysis was performed after reversing the scores. Table 1 shows the results of the questionnaire. A bias significant at the 1% level was observed for the three items “I enjoyed the system very much”, “This system is very interesting”, and “This system was very attractive” (p < 0.01), and the number of positive answers exceeded the number of neutral or negative answers. A bias significant at the 1% level was also seen for the two items “The system is boring” and “I was not interested in the system” (p < 0.01), for which negative answers outweighed neutral or positive answers. From these results, it can be said that the system attracted the interest of the participants. Moreover, a bias significant at the 1% level was recognized (p < 0.01) for the item “I experienced the system and got interested in real wildcats”. From this, it can be said that the system motivated the participants to learn about wildcats.

In addition, Table 2 shows the answers to the question of why a wildcat jumps. Fifteen out of 20 participants answered “To hunt” or “To catch prey”. This suggests that, through experiencing the system, most participants understood that the wildcat jumps in order to hunt birds. From these results, we draw the following three conclusions.

· The system attracts the interest of people.

· It motivates them to learn about wildcats.

· The user understands that a wildcat jumps with the purpose of catching prey.

Fig. 4 shows the experiment in progress, from which it can be seen that the child enjoys experiencing the system.

Figure 4: Experimental Situation.

Table 1: Results of the questionnaire about the created system.

| Statements | 5 | 4 | 3 | 2 | 1 |
|---|---|---|---|---|---|
| I enjoyed the system very much.** | 18 | 1 | 0 | 0 | 1 |
| The system is interesting.** | 16 | 3 | 0 | 0 | 1 |
| The system is boring. (-)** | 3 | 1 | 2 | 1 | 13 |
| When I was experiencing the system, I felt like I was really competing with the wildcat.** | 12 | 4 | 3 | 0 | 1 |
| When I was experiencing the system, the wildcat felt like a real thing.** | 10 | 6 | 1 | 1 | 2 |
| I was not interested in the system. | 4 | 2 | 2 | 0 | 12 |
| When I was experiencing the system, I felt like being in the grassland.** | 11 | 4 | 2 | 1 | 2 |
| When I was experiencing the system, I felt like I was in a different world than usual.** | 14 | 3 | 1 | 1 | 1 |
| The system was very attractive.** | 15 | 3 | 1 | 0 | 1 |
| I experienced the system and got interested in real wildcats.** | 14 | 2 | 2 | 1 | 1 |

N = 20. 5: Strongly Agree, 4: Agree, 3: Neutral, 2: Disagree, 1: Strongly Disagree. (-): reverse item. ** p < 0.01
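The 1 × 2 direct probability calculation can be read as an exact binomial test of whether the two response classes are equally likely. The Python sketch below collapses a row of Table 1 into the two classes and computes such a p-value. The function names are hypothetical, and the two-sided form with a 50/50 null hypothesis is an assumption: the paper does not state the sidedness or the exact procedure, so values computed this way need not agree with the significance marks in Table 1.

```python
from math import comb
from typing import Sequence, Tuple


def collapse(counts: Sequence[int], reverse: bool = False) -> Tuple[int, int]:
    """Collapse five-point counts, ordered 5,4,3,2,1, into (positive, other).

    For a reverse item the scale is flipped first, so that "Disagree" and
    "Strongly Disagree" form the favourable class.
    """
    if len(counts) != 5:
        raise ValueError("expected counts for the five scale points")
    ordered = list(reversed(counts)) if reverse else list(counts)
    positive = ordered[0] + ordered[1]
    return positive, sum(ordered) - positive


def exact_binomial_p(k: int, n: int) -> float:
    """Two-sided exact p-value for k of n responses under a 50/50 null."""
    extreme = max(k, n - k)
    tail = sum(comb(n, i) for i in range(extreme, n + 1))
    return min(1.0, 2 * tail / 2 ** n)


# First row of Table 1: "I enjoyed the system very much."
positive, other = collapse([18, 1, 0, 0, 1])
p_value = exact_binomial_p(positive, positive + other)
```

For this row the split is 19 positive to 1 other, and the p-value is 42/2^20, about 4.0 × 10⁻⁵.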


Table 2: Answers to the question why the wildcat jumps.

| Participant | Answer | Participant | Answer |
|---|---|---|---|
| Girl.1 | To hopscotch | Girl.11 | To catch prey |
| Girl.2 | To catch prey | Girl.12 | To hunt |
| Girl.3 | To catch prey | Boy.1 | To escape |
| Girl.4 | To hunt | Boy.2 | To catch prey |
| Girl.5 | Because I raised my legs high | Boy.3 | To catch prey |
| Girl.6 | Because it is dangerous; because it seems likely to be eaten | Boy.4 | To hunt |
| Girl.7 | Because it is high | Boy.5 | To catch prey |
| Girl.8 | To catch prey | Boy.6 | To catch prey |
| Girl.9 | To hunt | Boy.7 | To hunt |
| Girl.10 | To catch prey | Boy.8 | To catch prey |
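The count of hunting-related answers reported in the Results can be checked against Table 2 with a short tally. This is only an illustrative Python sketch; treating exactly “To hunt” and “To catch prey” as the hunting-related answers follows the text, while the variable names are arbitrary.

```python
from collections import Counter

# Answers transcribed from Table 2 (participant label -> answer).
answers = {
    "Girl.1": "To hopscotch",
    "Girl.2": "To catch prey",
    "Girl.3": "To catch prey",
    "Girl.4": "To hunt",
    "Girl.5": "Because I raised my legs high",
    "Girl.6": "Because it is dangerous; because it seems likely to be eaten",
    "Girl.7": "Because it is high",
    "Girl.8": "To catch prey",
    "Girl.9": "To hunt",
    "Girl.10": "To catch prey",
    "Girl.11": "To catch prey",
    "Girl.12": "To hunt",
    "Boy.1": "To escape",
    "Boy.2": "To catch prey",
    "Boy.3": "To catch prey",
    "Boy.4": "To hunt",
    "Boy.5": "To catch prey",
    "Boy.6": "To catch prey",
    "Boy.7": "To hunt",
    "Boy.8": "To catch prey",
}

HUNTING_ANSWERS = {"To hunt", "To catch prey"}

tally = Counter(answers.values())
hunting_total = sum(n for answer, n in tally.items() if answer in HUNTING_ANSWERS)
```

With the table as transcribed, `hunting_total` is 15 of the 20 answers, matching the figure given in the Results.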

4 CONCLUSIONS

In this paper, we proposed a learning support system for learning about the “ecology where wildcats jump and hunt birds” as the first step towards realizing a learning support system for ecological learning through animal observation in zoos. By learning about ecology while moving their body, the learner can gain a feeling of immersion in the virtual environment where the animals live and learn about that ecology. We reported the results of an evaluation experiment conducted to determine whether users were able to undergo ecological learning efficiently.

The experiment indicated that learners’ motivation to learn improved as they became immersed in the virtual environment and experienced the proposed system. Furthermore, most learners were shown to be able to learn about the wildcat's ecology. These results suggest that the proposed system provides an effective platform for observing and learning about wildcat ecology, which is otherwise difficult for children to do by observing wildcats directly at the zoo.

However, the answers to the question of why the wildcat jumps show that the system did not support learning for all users. For this reason, in the future, we will use multiple projectors and develop a system that further improves the immersive experience for learners.

ACKNOWLEDGEMENTS

This work was supported in part by Grants-in-Aid for Scientific Research (A), Grant Numbers JP16H01814 and JP18H03660. We are grateful to Kobe Municipal Oji Zoo, Kobe, Japan, which made this study possible.

REFERENCES

Wagoner, B., Jensen, E., 2010. Science learning at the zoo: Evaluating children’s developing understanding of animals and their habitats. Psychology & Society, pp.65–76.

Allen, S., 2002. Looking for learning in visitor talk: a methodological exploration. Learning Conversations in Museums, pp.259–303.

Braund, M., Reiss, M., 2006. Towards a more authentic science curriculum: The contribution of out-of-school learning. International Journal of Science Education, pp.1373–1388.

Mallapur, A., Waran, N., Sinha, A., 2008. The captive audience: the educative influence of zoos on their visitors in India. International Zoo Yearbook 42, pp.214–224.

Tanaka, Y., Egusa, R., Dobashi, Y., Kusunoki, F., Yamaguchi, E., Inagaki, S., Nogami, T., 2017. Preliminary evaluation of a system for helping children observe the anatomies and behaviors of animals in a zoo. Proceedings of the 9th International Conference on Computer Supported Education 2, pp.305–310.

Ohashi, Y., Ogawa, H., Arisawa, M., 2008. Making New Learning Environment in Zoo by Adopting Mobile Devices. Proceedings of the 10th International Conference on Human Computer Interaction with mobile devices and services, pp.489–490.

Luebke, J. F., Watters, J. V., Packer, J., Miller, L. J., Powell, D. M., 2016. Zoo Visitors' Affective Responses to Observing Animal Behaviors. Visitor Studies 19, pp.60–76.

Dau, E., Jones, E., 1999. Child’s Play: Revisiting Play in Early Childhood Settings. Brookes Publishing, Maple Press, pp.67–80.

Levin, D., 1996. Endangered play, endangered development: A constructivist view of the role of play in development and learning. In A. Phillips (Ed.), Topics in early childhood education 2: Playing for keeps. St. Paul, MN: Inter-Institutional Early Childhood Consortium, Redleaf Press, pp.73–88 & 168–171.

Grandhi, S. A., Joue, G., Mittelberg, I., 2011. Understanding naturalness and intuitiveness in gesture production: Insights for touchless gestural interfaces. Proceedings of CHI, pp.821–824.

Nielsen, M., Störring, M., Moeslund, T., Granum, E., 2004. A procedure for developing intuitive and ergonomic gesture interfaces for HCI. Gesture-Based Communication in HCI, pp.105–106.

Villaroman, N., Rowe, D., Swan, B., 2011. Teaching natural user interaction using OpenNI and the Microsoft Kinect Sensor. Proceedings of SIGITE, pp.227–232.

Edge, D., Cheng, K. Y., Whitney, M., 2013. SpatialEase: Learning language through body motion. Proceedings of CHI, pp.469–472.

Antle, A., Kynigos, C., Lyons, L., Marshall, P., Moher, T., Roussou, M., 2009. Manifesting embodiment: Designers’ variations on a theme. Proceedings of CSCL, pp.15–17.
