Direct Instruction and Its Extension with a Community of Inquiry:

A Comparison of Mental Workload, Performance and Efficiency

Giuliano Orru and Luca Longo

School of Computer Science,

Technological University Dublin, Ireland

Keywords: Direct Instruction, Community of Inquiry, Efficiency, Mental Workload, Cognitive Load Theory,

Instructional Design.

Abstract: This paper investigates the efficiency of two instructional design conditions: a traditional design based on the

direct instruction approach to learning and its extension with a collaborative activity based upon the

community of inquiry approach to learning. This activity was built upon a set of textual trigger questions to

elicit cognitive abilities and support knowledge formation. A total of 115 students participated in the

experiments and a number of third-level computer science classes were divided into two groups. A control

group of learners received the former instructional design while an experimental group also received the latter

design. Subsequently, learners of each group individually answered a multiple-choice questionnaire, from

which a performance measure was extracted for the evaluation of the acquired factual, conceptual and

procedural knowledge. Two measures of mental workload were acquired through self-reporting

questionnaires: one unidimensional and one multidimensional. These, in conjunction with the performance

measure, contributed to the definition of a measure of efficiency. Evidence showed the positive impact of the

added collaborative activity on efficiency.

1 INTRODUCTION

Cognitive Load Theory (CLT), relevant in

educational psychology, is based on the assumption

that the layout of explicit and direct instructions

affects working memory resources influencing the

achievement of knowledge in novice learners.

Kirschner and colleagues (2006) pointed out that

experiments, based on unguided collaborative

methodologies, generally ignore the human mental

architecture. As a consequence, these types of

methodologies cannot lead to instructional designs

aligned to the way humans learn, so they are believed

to have little chance of success (Kirschner, Sweller

and Clark, 2006). Under the assumptions of CLT,

learning is not possible without explicit instructions

because working memory cannot receive and process

information related to an underlying learning task.

This study focuses on a comparison of the efficiency

of a traditional direct instruction teaching method

against an extension with a collaborative activity

informed by the Community of Inquiry paradigm

(Garrison, 2007). The assumption is that the addition

of a highly guided collaborative and inquiring

activity, to a more traditional direct instruction

methodology, has a higher efficiency when compared

to the application of the latter methodology alone. In

detail, the collaborative activity based upon the

Community of Inquiry is designed as a collaborative

task based on explicit social instructions and cognitive

trigger questions (Orru et al., 2018).

The research question investigated is: to what

extent can a guided community of inquiry activity,

based upon cognitive trigger questions, when added

to a direct instruction teaching method, impact and

improve its efficiency?

The remainder of this paper is structured as

follows. Section 2 informs the reader on the

assumptions behind Cognitive Load Theory, its

working memory effect and the Community of

Inquiry paradigm that inspired the design of the

collaborative inquiry activity. Section 3 describes the

design of an empirical experiment and the methods

employed. Section 4 presents and critically discusses the

results, while Section 5 summarises the paper and

highlights future work.

Orru, G. and Longo, L.

Direct Instruction and Its Extension with a Community of Inquiry: A Comparison of Mental Workload, Performance and Efficiency.

DOI: 10.5220/0007757204360444

In Proceedings of the 11th International Conference on Computer Supported Education (CSEDU 2019), pages 436-444

ISBN: 978-989-758-367-4

Copyright © 2019 by SCITEPRESS – Science and Technology Publications, Lda. All rights reserved

2 RELATED WORK

2.1 The Cognitive Load Theory

Cognitive Load Theory (CLT) provides instructional

techniques aimed at optimising the learning phase.

These are aligned with the limitations of the human

cognitive architecture (Atkinson and Shiffrin, 1971)

(Baddeley, 1998) (Miller, 1956). An instructional

technique that considers the limitations of working

memory aims at reducing the cognitive load of

learners which is the cognitive cost required to carry

out a learning task. Reducing cognitive load means

increasing working memory spare capacity, thus

making more resources available. In turn this

facilitates learning that consists in transferring

knowledge from working memory to long-term

memory (Sweller, Van Merrienboer and Paas, 1998).

Instructional designs should not exceed the

working memory limits, otherwise transfer of

knowledge is hampered and learning affected.

Explicit instructional techniques are the necessary

premises for informing working memory on how to

process information and consequently building

schemata of knowledge. The research conducted in

the last 3 decades by Sweller and his colleagues

led to the definition of three types of load:

intrinsic, extraneous and germane loads (Sweller, van

Merriënboer and Paas, 2019). In a nutshell, intrinsic

load depends on the number of items a learning task

consists of (its difficulty), while extraneous load

depends on the characteristics of the instructional

material, of the instructional design and on the prior

knowledge of learners. Germane load is the cognitive

load on working memory elicited from an

instructional design and the learning task difficulty. It

depends upon the resources of working memory

allocated to deal with the intrinsic load. In order to

optimise these working memory resources, research

on CLT has generated a number of approaches to

inform instructional design. One of these is the

Collective Working Memory effect whereby sharing

the load of processing complex material among

several learners and their working memories leads to

more effective processing and learning. The level of

complexity of the task remains constant but the

working resources expand their limits because of

collaboration (Sweller, Ayres and Kalyuga, 2011). It

is assumed that collaborative learning fosters

understanding only when the task imposes a high load, that is,

when individual learners do not have sufficient

processing capacity to process the information (Paas

and Sweller, 2012). Results in empirical studies

comparing collaborative learning with individual

learning are mixed. Positive effects are found in

highly structured and highly scripted learning

environments where learners knew what they had to

do, how to do it, with whom and what they had to

communicate about (Dillenbourg, 2002) (Fischer et

al., 2002) (Kollar, Fischer and Hesse, 2006)

(Kirschner, Paas and Kirschner, 2009). In these

environments, students working collaboratively

become more actively engaged in the learning process

and retain the information for a longer period of time

(Morgan, Whorton and Gunsalus, 2000). The main

negative effect is the cognitive cost of information

transfer: the transactive interaction could generate

high cognitive load hampering the learning phase

instead of facilitating it. This depends upon the

complexity of the task: in tasks with a high level of

complexity, the cognitive cost of transfer is

compensated by the advantage of using several

working memory resources. In contrast, in tasks

with a low level of complexity, the individual working

memory resources are supposed to be enough and the

transfer costs of communication might hamper the

learning phase. It is hard to find unequivocal

empirical support for the premise that learning is best

achieved interactively rather than individually but the

assumption of the Collective Working Memory effect

is clear: joining human mental resources in a

collaborative task corresponds to expanding the

human mental load capacity (Sweller, Ayres and

Kalyuga, 2011).

2.2 The Community of Inquiry

John Dewey reconceptualised the dualistic

metaphysics of Plato, who split reality in two: ideal

on one side and material on the other. In this context,

Dewey suggests rethinking the semantic distinction

between ‘Technique’ as practice and ‘Knowledge’ as

pure theory. Practice, in fact, is not foundationalist in

its epistemology anymore. In other words, it does not

require a first principle as its theoretical foundation.

Technique, in the philosophy of Dewey, means an

active procedure aimed at developing new skills

starting from the redefinition of the old ones (Dewey,

1925). Therefore, the configuration of epistemic

theoretical knowledge is a specific case of technical

production and Knowledge as a theory is the result of

Technique as practice. Both are deeply interconnected

and they share the resolution of practical problems as

starting point for expanding knowledge (Dewey,

1925). The research conducted by Dewey inspired the

work of Garrison (2007) who further developed the

Community of Inquiry. This may be defined as a

teaching and learning technique. It is an instructional

technique designed for a group of learners who,

through the use of dialogue, examine the conceptual

boundary of a problematic concept, processing all its

components in order to solve it. Garrison provides a

clear exemplification of the cognitive structure of a

community of inquiry. Firstly, exploring a problem

means exchanging information on its constituent

parts. Secondly, this information needs to be

integrated by connecting related ideas. Thirdly, the

problem has to be solved by a resolution phase ending

up with new ideas (Garrison, 2007). The core ability

in solving a problem consists in connecting the right

tool to reach a specific aim. According to Lipman

(2003), who extended the Community of Inquiry with

a philosophical model of reasoning, the meaning of

inquiry should be connected with the meaning of

community. In this context, the individual and the

community can only exist in relation to one another

along a continuous process of adaptation that ends up

with their reciprocal, critical and creative

improvement (Lipman, 2003).

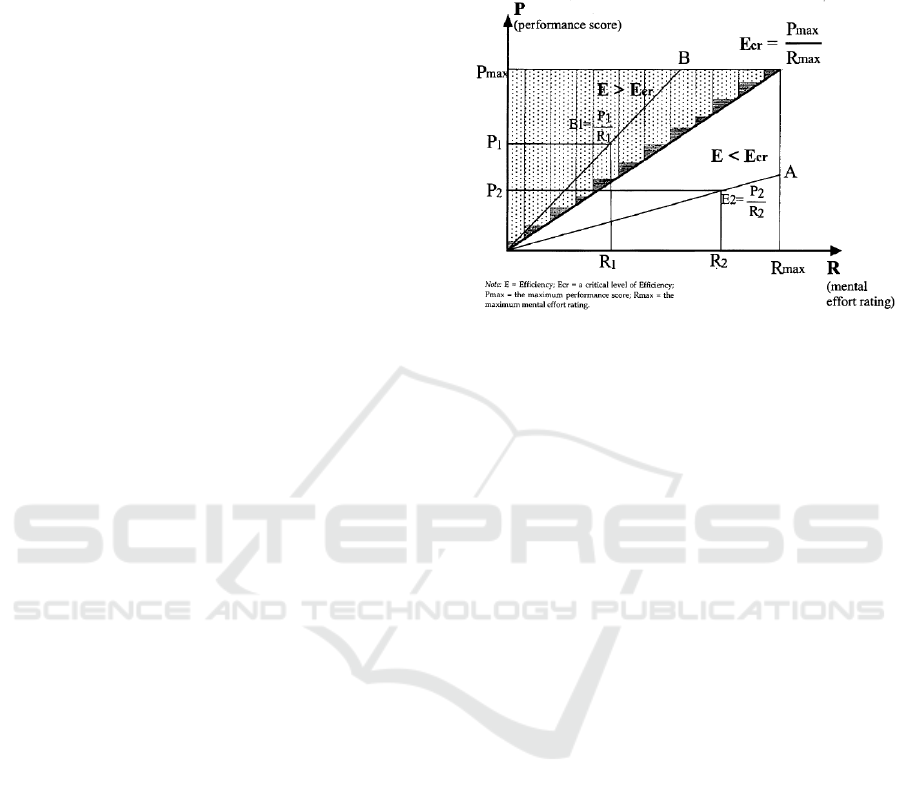

2.3 Instructional Efficiency and the

Likelihood Model

Efficiency of instructional designs in education is a

measurable concept. A high efficiency occurs when

learning outcomes, such as test results, are produced

at the lowest level of financial, cognitive or temporal

resources (Johnes, Portela and Thanassoulis, 2017).

One of the measures of efficiency developed within

Education is based upon a likelihood model (Hoffman

and Schraw, 2010). Efficiency here is the ratio of

work output to work input. Output can be identified

with learning, whereas input with work, time or

effort. These two variables can be replaced

respectively with a raw score of performance of a

learner or a learning outcome denoted as P, and a raw

score for time, effort or cognitive load denoted as R:

E=P/R

R can be gathered with any self-report scale of

effort or cognitive resources employed or an objective

measure of time (Hoffman and Schraw, 2010). An

estimation of the rate of change of performance is

calculated by dividing P by R and the result represents

the individual efficiency based on individual scores

(Hoffman and Schraw, 2010). Previously, Kalyuga

and Sweller (2005) employed the same formula

extended with a reference value: the critical level

under or above which the efficiency can be

considered negative or positive (Kalyuga and

Sweller, 2005). As shown in Figure 1, the authors

suggest dividing the maximum performance score

by the maximum effort exerted by learners in order

to establish whether a learner is competent or not.

Ecr = Pmax / Rmax

Figure 1: Critical level of efficiency (Kalyuga and Sweller,

2005).

A given learning task is considered efficient if E

is greater than Ecr, relatively inefficient if E is less

than or equal to the critical level Ecr. The ratio of the

critical level is based on the assumption that an

instructional design is not efficient if a learner invests

maximum mental effort in a task, without reaching

the maximum level of performance (Kalyuga and

Sweller, 2005). Instead, an instructional design is

efficient if a learner reaches the maximum level of

task performance with less than the maximum level

of mental effort. Intermediate values are supposed to

be evaluated in relation to the critical level (Kalyuga

and Sweller, 2005). This measure of efficiency has

been adopted in this research and measures of

performance, effort and mental workload have been

selected as its inputs, as described below.
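The efficiency ratio and its critical level described in this section can be sketched in a few lines of Python. This is only an illustrative sketch: the `Learner` class, the function names and the example learner are hypothetical and do not come from the original study; only the formulas E = P/R and Ecr = Pmax/Rmax and the decision rule are taken from the text above.

```python
from dataclasses import dataclass


@dataclass
class Learner:
    """Hypothetical container for one learner's raw scores."""
    performance: float  # P: raw performance score (e.g. an MCQ percentage)
    workload: float     # R: raw effort or mental workload score (must be > 0)


def efficiency(p: float, r: float) -> float:
    """Likelihood-model efficiency E = P / R."""
    if r <= 0:
        raise ValueError("the workload score R must be positive")
    return p / r


def critical_level(p_max: float, r_max: float) -> float:
    """Critical level Ecr = Pmax / Rmax."""
    return efficiency(p_max, r_max)


def is_efficient(learner: Learner, p_max: float, r_max: float) -> bool:
    """True when E > Ecr; E <= Ecr counts as relatively inefficient."""
    e = efficiency(learner.performance, learner.workload)
    return e > critical_level(p_max, r_max)


# Hypothetical learner: 60% on the test, effort of 75 on a 0-150 scale.
example = Learner(performance=60.0, workload=75.0)
e = efficiency(example.performance, example.workload)   # 60 / 75 = 0.8
ecr = critical_level(p_max=100.0, r_max=150.0)          # 100 / 150
print(f"E = {e:.3f}, Ecr = {ecr:.3f}, "
      f"efficient = {is_efficient(example, 100.0, 150.0)}")
```

A learner who scores exactly at the critical level is treated as relatively inefficient, which mirrors the "less than or equal to" wording of the rule above.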

2.4 The Bloom’s Taxonomy and

Multiple Choice Questionnaires

One way of designing instructional material is

through the consideration of the educational

objectives conceived in the Bloom’s Taxonomy

(Bloom, 1956). In educational research, this has been

modified in different ways and one of the most

accepted revisions was proposed by Anderson et al.

(2001). In connection to the layout of multiple choice

questions (MCQ), this adapted taxonomy assumes

great importance because it explains how a test

performance can be linked to lower or higher

cognitive processes depending on the way it is designed

(Scully, 2017). In other words, the capacity of a test

performance to evaluate ‘higher or lower cognitive

process’ may depend on the degree of alignment to

the Bloom’s Taxonomy. This revised version has

been adopted in this research to design an MCQ

performance test.

2.5 Measures of Mental Workload

Mental workload can be intuitively thought of as the

mental cost of performing a task (Longo, 2014)

(Longo, 2015). A number of measures have been

employed in Education, both unidimensional and

multidimensional (Longo, 2018). The modified

Rating Scale of Mental Effort (RSME) (Zijlstra and

Doorn, 1985) is a unidimensional mental workload

assessment procedure that is built upon the notion of

effort exerted by a human over a task. A subjective

rating is required by an individual through an

indication on a continuous line, within the interval 0

to 150 with ticks every 10 units (Zijlstra, 1993).

Examples of labels are ‘absolutely no effort’,

‘considerable effort’ and ‘extreme effort’. The overall

mental workload of an individual coincides with the

experienced exerted effort indicated on the line. The

NASA Task Load Index (NASA-TLX) is a mental

workload measurement technique that consists of six

sub-scales. These represent independent clusters of

variables: mental, physical, and temporal demands,

frustration, effort, and performance (Hart and

Staveland, 1988). In general, the NASA-TLX has

been used to predict critical levels of mental workload

that can significantly influence the execution of an

underlying task. Although widely employed in

Ergonomics, this has been rarely adopted in

Education. A few studies have confirmed its validity

and sensitivity when applied to educational contexts

(Gerjets, Scheiter and Catrambone, 2006) (Gerjets,

Scheiter and Catrambone, 2004) (Kester et al., 2006).
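To make the difference between the two measures concrete, the sketch below scores one hypothetical response on each instrument. It rests on stated assumptions: the paper does not say whether the sub-scales were weighted, so the code uses the unweighted mean of the six sub-scale ratings (often called the Raw TLX), with each rating assumed to lie between 0 and 100. The function names and the example ratings are invented for illustration.

```python
from typing import Mapping

# The six NASA-TLX sub-scales named in the text.
TLX_SUBSCALES = (
    "mental", "physical", "temporal",
    "frustration", "effort", "performance",
)


def raw_tlx(ratings: Mapping[str, float]) -> float:
    """Unweighted mean of the six sub-scale ratings (assumed range 0-100)."""
    missing = [name for name in TLX_SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing sub-scales: {missing}")
    for name in TLX_SUBSCALES:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"rating for '{name}' must be within 0-100")
    return sum(ratings[name] for name in TLX_SUBSCALES) / len(TLX_SUBSCALES)


def rsme_score(mark: float) -> float:
    """RSME: the mark on the 0-150 line is the workload score itself."""
    if not 0 <= mark <= 150:
        raise ValueError("an RSME mark must be within 0-150")
    return float(mark)


# Hypothetical responses from one learner.
ratings = {
    "mental": 70, "physical": 10, "temporal": 50,
    "frustration": 30, "effort": 60, "performance": 40,
}
print(f"Raw TLX = {raw_tlx(ratings):.2f}")   # (70+10+50+30+60+40) / 6
print(f"RSME    = {rsme_score(85):.2f}")
```

The unidimensional score is a single reading, whereas the multidimensional score aggregates six ratings, so the two scales have different maxima (150 and 100 here) and any efficiency ratio built on them needs its own critical level.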

2.6 Summary of Literature

Explicit instructional design is an inherent

assumption of Cognitive Load Theory. According to

this, information and instructions have to be made

explicit to learners to enhance learning (Kirschner,

Sweller and Clark, 2006). This is in contrast to the

features of the Community of Inquiry approach that,

instead, do not focus only on explicit instructions to

construct information, but on the learning connection

between cognitive abilities and knowledge

construction. The achievement of factual, conceptual

and procedural knowledge, in connection to the

cognitive load experienced by learners, is supposed to

be the shared learning outcome under evaluation in

the current experiment. Kirschner and colleagues

(Kirschner et al., 2006) affirmed that unguided

inquiring methodologies are set to fail because of

their lack of direct instructions. Jonassen (2009), in

a reply to Kirschner et al. (2006), stated that, in the

field of educational psychology, a comparison

between the effectiveness of constructivist inquiry

methods and direct instruction methods does not exist

(Jonassen, 2009). This is because the two approaches

come from different theories and assumptions,

employing different research methods. Moreover,

they do not have any shared learning outcome to be

compared. We argue that both approaches have

their own advantages and disadvantages for learning. This

research study tries to fill this gap and it aims at

joining the direct instruction approach to learning

with the collaborative inquiry approach, taking

maximum advantage from them.

3 DESIGN AND METHODOLOGY

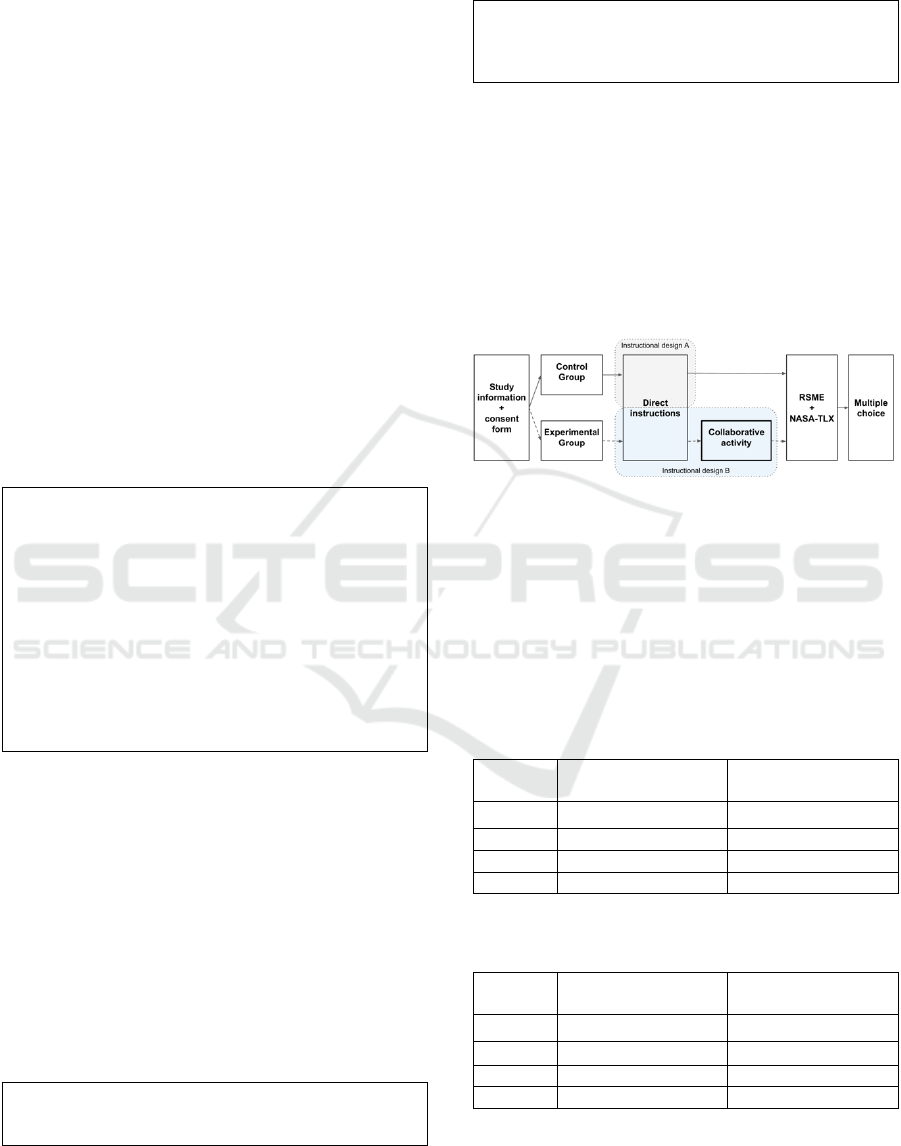

A primary research experiment has been designed

following the approach by Sweller et al. (2010) and

taking into consideration the cognitive load effects.

Two instructional design conditions were designed:

one merely following the direct instruction approach

to learning (A), and one that extends this with a

collaborative activity inspired by the community of

inquiry approach to learning (B). In detail, the former

involved a theoretical explanation of an underlying

topic, whereby an instructor presented information

through direct instructions. The latter involved the

extension of the former with a guided collaborative

activity based upon cognitive trigger questions. These

questions, aligned to the Bloom’s Taxonomy, are

supposed to develop cognitive skills in

conceptualising and reasoning, that stimulate

knowledge construction in working memory (Popov,

van Leeuwen and Buis, 2017).

An experiment has been conducted in third-level

classes at the Technological University Dublin and at

the University of Dublin, Trinity College involving a

total of 115 students, distributed across four classes as follows:

Semantic Web [S.W.]: 42 students;

Advanced Database [A.D.]: 26 students;

Research Methods [R.M.]: 26 students;

Amazon Cloud Watch Autoscaling [A.W.S.]: 21 students.

Each class was divided into two groups: the

control group received design condition A while the

experimental group received A followed by B. Each

student voluntarily took part in the experiment after

being provided with a study information sheet and

signing a consent form approved by the Ethics

Committee of the Technological University Dublin.

Because participation was voluntary, it can be

deduced that those who agreed to take part in the

experiment had a reasonable level of motivation,

unlike the students who declined to

participate. At the beginning of each class, lecturers

asked whether there was someone familiar with the

topic, but no evidence of prior knowledge was

observed. The Rating Scale Mental Effort (RSME)

and the NASA Task Load Index (NASA-TLX)

questionnaires were provided to students in each group

after the delivery of the two design conditions. After

these, students received a multiple-choice

questionnaire. The collaborative activity B was made

textually explicit and distributed to each student in the

experimental group which, in turn, was sub-divided

into smaller groups of 3 or 5 students. Table 1 lists the

instructions for executing the collaborative activity.

Table 1: Instructions for the collaborative activity.

SECTION 1: Take part in a group dialog considering the following democratic habits: free-risk expression, encouragement, collaboration and gentle manners.

SECTION 2: Focus on the questions below and follow these instructions:

Exchange information related to the underlying topic

Connect ideas in relation to this information

FIRST find an agreement about each answer collaboratively, THEN write the answer by each group member individually

Followed by trigger questions…

The first section explains the social nature of the

inquiry technique while the second section outlines

the cognitive process involved in answering the

trigger questions. Examples of the questions are

shown in Tables 2 and 3, and are adapted from the

work of Sátiro (2006). They are aimed at developing

cognitive skills of conceptualisation by comparing

and contrasting, defining, classifying, and reasoning

by relating cause and effect, tools and aims, parts and

whole and by establishing criteria (Sátiro, 2006).

Table 2: Examples of trigger questions employed during the

collaborative activity in the ‘Semantic Web’ class.

What does a Triple define? (Conceptualising)

How a Triple is composed of? (Reasoning)

Table 3: Examples of trigger questions employed during the

collaborative activity in the ‘Advanced Database’ class.

What is a Data-warehouse? (Conceptualising)

How is a date dimension defined in a dimensional model? (Reasoning)

With a measure of performance (the multiple-

choice score, percentage) and a mental workload

score (RSME or NASA-TLX), a measure of

efficiency was calculated using the likelihood models

described in Section 2.3 (Hoffman and Schraw, 2010).

Figure 2 summarises the layout of the experiment. The

research hypothesis is that the efficiency of the design

condition B is higher than the efficiency of the design

condition A. Formally: Efficiency B > Efficiency A.

Figure 2: Layout of the experiment.

4 RESULTS AND DISCUSSION

Tables 4 and 5 respectively list the descriptive

statistics of the Rating Scale Mental Effort and the

NASA Task Load Index scores associated with each group.

Table 4: Means and standard deviations of the Rating Scale Mental Effort responses.

| Topic | RSME mean (STD), Control Group | RSME mean (STD), Experimental Group |
| --- | --- | --- |
| A.D. | 36.00 (12.83) | 47.91 (13.72) |
| A.W.S. | 56.00 (25.64) | 68.57 (32.07) |
| R.M. | 47.08 (8.38) | 67.85 (23.67) |
| S.W. | 61.92 (29.19) | 66.31 (32.08) |

Table 5: Means and standard deviations of the NASA Task Load indexes.

| Topic | NASA mean (STD), Control Group | NASA mean (STD), Experimental Group |
| --- | --- | --- |
| A.D. | 43.61 (15.39) | 47.80 (9.91) |
| A.W.S. | 50.00 (8.19) | 54.45 (16.12) |
| R.M. | 49.38 (9.37) | 49.85 (8.96) |
| S.W. | 47.74 (10.98) | 50.62 (9.43) |

As can be seen from Tables 4 and 5, the

experimental group experienced, on average, more

effort (RSME) and more cognitive load (NASA-TLX)

than the control group. Intuitively this can be

attributed to the extra mental cost required by the

collaborative activity. Table 6 shows the performance

scores of the two groups. Also in this case, the

collaborative activity increased the level of

performance of the learners belonging to the

experimental group.

Table 6: Mean and standard deviation of MCQ.

| Topic | MCQ mean (STD), Control Group | MCQ mean (STD), Experimental Group |
| --- | --- | --- |
| A.D. | 42.92 (21.26) | 54.91 (14.27) |
| A.W.S. | 61.33 (15.52) | 66.42 (13.36) |
| R.M. | 68.41 (15.72) | 69.57 (18.88) |
| S.W. | 34.42 (18.10) | 47.12 (18.77) |

Despite this consistent increment across topics,

the results of a non-parametric analysis of variance

(reported in Table 7) show that the scores of

the control and experimental groups are, in most

cases, not statistically significantly different. Given

the dynamics of third-level classes and the

heterogeneity of students having different

characteristics such as prior knowledge and learning

strategy, this was not a surprising outcome.

Table 7: P-values of the non-parametric analysis of variance of the performance scores (MCQ), the perceived effort scores (RSME) and the workload scores (NASA-TLX).

| Topic | MCQ | RSME | NASA |
| --- | --- | --- | --- |
| A.D. | 0.2250 | 0.1164 | 0.3695 |
| A.W.S. | 0.3718 | 0.3115 | 0.3709 |
| R.M. | 0.9806 | 0.0103 | 0.6272 |
| S.W. | 0.0369 | 0.8319 | 0.1718 |
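The paper does not state which software produced these p-values. As an illustration of how a two-group non-parametric comparison of this kind works, the sketch below implements the Kruskal-Wallis H test in plain Python, with tie correction and the chi-square approximation for one degree of freedom. The function names and the two score lists are hypothetical and are not data from the study; with small samples the chi-square approximation is only rough.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(a, b):
    """Return (H, p) for two independent samples, using 1 degree of freedom."""
    if not a or not b:
        raise ValueError("both groups need at least one observation")
    pooled = list(a) + list(b)
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_sum_a = sum(ranks[:len(a)])
    rank_sum_b = sum(ranks[len(a):])
    h = (12 / (n * (n + 1))
         * (rank_sum_a ** 2 / len(a) + rank_sum_b ** 2 / len(b))
         - 3 * (n + 1))
    # Tie correction: divide H by 1 - sum(t^3 - t) / (n^3 - n).
    tie_counts = {}
    for value in pooled:
        tie_counts[value] = tie_counts.get(value, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in tie_counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    h = max(h / correction, 0.0)  # guard against tiny negative rounding errors
    # Chi-square survival function with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(h / 2))
    return h, p_value


# Hypothetical MCQ percentages for a control and an experimental group.
control = [30, 35, 40, 45, 50]
experimental = [55, 60, 65, 70, 75]
h, p = kruskal_wallis_two_groups(control, experimental)
print(f"H = {h:.3f}, p = {p:.4f}")
```

In this invented example the two groups do not overlap at all, so the test rejects equality at alpha = 0.05; with overlapping score distributions, as in most rows of Table 7, the p-value rises above that threshold.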

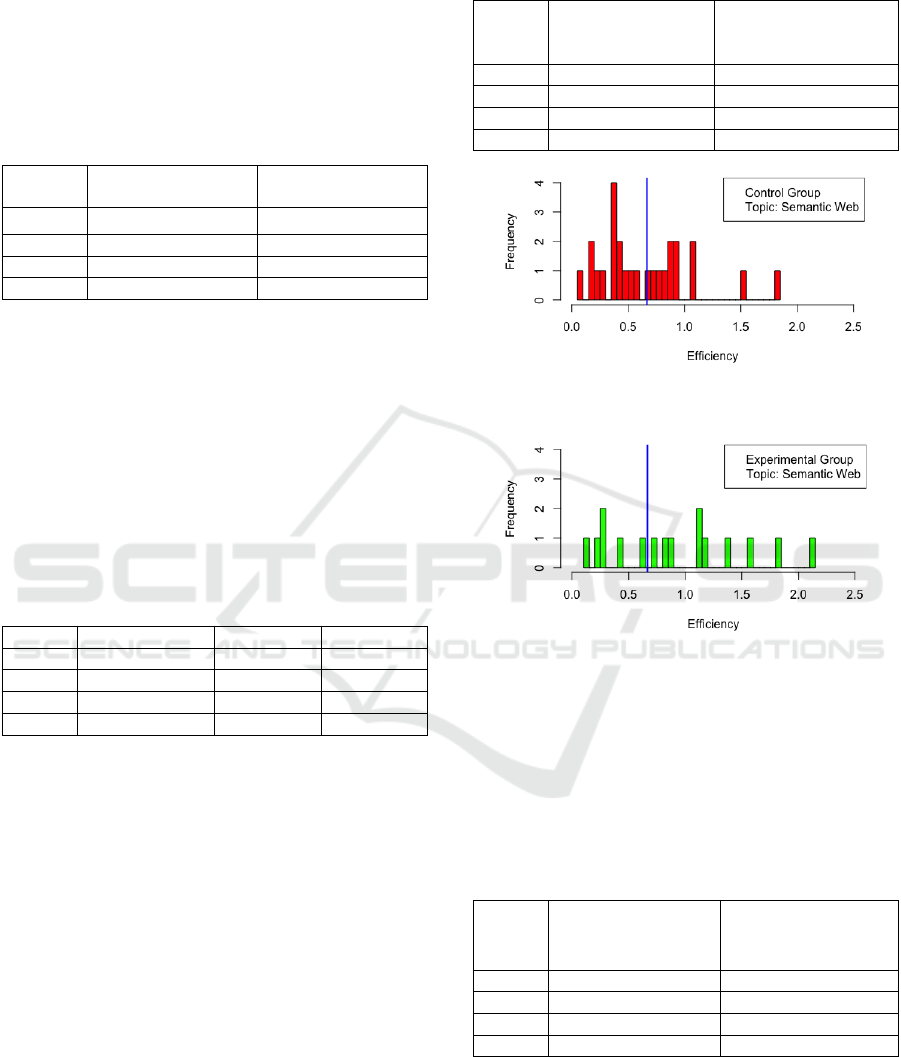

In order to test the hypothesis, an efficiency score

for each participant was computed according to the

likelihood models described in section 2.3 (Hoffman

and Schraw, 2010). Table 8 lists the efficiency scores

across groups and topics. Under the assumptions of

the likelihood model, the evidence of the positive

impact of the collaborative inquiry activity (design

condition B) is limited to the ‘Semantic Web’ class

where there is a marked difference in the efficiency of the design conditions when the RSME is used: the control group is on average below the critical level, while the experimental group is above it. In detail, as depicted in Figures 3 and 4, the distribution of the efficiency scores computed with the RSME reveals that 54% of the students in the control group had an efficiency below the critical level whereas 46% were above it. By contrast, in the experimental group, 66.6% of students had an efficiency above the critical level and 33.3% below it.

Table 8: Mean of efficiency computed with the RSME (+ is positive, - is negative). Critical Level 100/150=0.666.

| Topic | Mean efficiency (with RSME), Control Group | Mean efficiency (with RSME), Experimental Group |
| --- | --- | --- |
| A.D. | 1.304 > 0.666 (+) | 1.239 > 0.666 (+) |
| A.W.S. | 1.440 > 0.666 (+) | 1.336 > 0.666 (+) |
| R.M. | 1.505 > 0.666 (+) | 1.152 > 0.666 (+) |
| S.W. | 0.656 < 0.666 (-) | 0.922 > 0.666 (+) |

Figure 3: Distribution of the efficiency scores of learners in

the control group for the topic ‘Semantic Web’.

Figure 4: Distribution of the efficiency scores of learners in

the experimental group for the topic ‘Semantic Web’.

A similar analysis was conducted by using the

NASA-TLX as a measure of mental workload for the

likelihood model (Table 9) where a more coherent

picture emerges. In fact, the efficiency scores are on

average always higher in the experimental group.

Table 9: Mean of efficiency computed with the NASA-TLX (+ is positive, - is negative). Critical Level 100/100=1.

| Topic | Mean efficiency (with NASA), Control Group | Mean efficiency (with NASA), Experimental Group |
| --- | --- | --- |
| A.D. | 1.153 > 1 (+) | 1.244 > 1 (+) |
| A.W.S. | 1.241 > 1 (+) | 1.340 > 1 (+) |
| R.M. | 1.425 > 1 (+) | 1.440 > 1 (+) |
| S.W. | 0.750 < 1 (-) | 0.964 < 1 (-) |
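The comparison between Tables 8 and 9 can be checked mechanically. The sketch below copies the mean efficiency values from the two tables, lists the topics in which the experimental group has the higher mean, and recomputes the sign of each mean against its critical level. The dictionary layout and function names are illustrative choices, not part of the original analysis.

```python
# Mean efficiency per topic as (control, experimental), from Tables 8 and 9.
RSME_EFFICIENCY = {
    "A.D.": (1.304, 1.239),
    "A.W.S.": (1.440, 1.336),
    "R.M.": (1.505, 1.152),
    "S.W.": (0.656, 0.922),
}
NASA_EFFICIENCY = {
    "A.D.": (1.153, 1.244),
    "A.W.S.": (1.241, 1.340),
    "R.M.": (1.425, 1.440),
    "S.W.": (0.750, 0.964),
}

RSME_CRITICAL = 100 / 150  # maximum MCQ score over maximum RSME score
NASA_CRITICAL = 100 / 100  # maximum MCQ score over maximum NASA-TLX score


def topics_where_experimental_is_higher(table):
    """Topics whose experimental mean efficiency exceeds the control mean."""
    return [topic for topic, (control, experimental) in table.items()
            if experimental > control]


def sign(value, critical):
    """'+' when the efficiency is above the critical level, '-' otherwise."""
    return "+" if value > critical else "-"


print("RSME:", topics_where_experimental_is_higher(RSME_EFFICIENCY))
print("NASA:", topics_where_experimental_is_higher(NASA_EFFICIENCY))
for topic, (control, experimental) in RSME_EFFICIENCY.items():
    print(topic, sign(control, RSME_CRITICAL), sign(experimental, RSME_CRITICAL))
```

With the RSME, only S.W. shows a higher mean efficiency in the experimental group, whereas with the NASA-TLX all four topics do, although both S.W. means stay below the NASA-TLX critical level of 1.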

Despite the general increment of the efficiency

scores, these are not statistically significantly

different across design conditions. In fact, all the p-

values of Table 10 are greater than the significance

level (alpha=0.05).

Table 10: P-values of the Kruskal-Wallis test on the efficiency scores (with the NASA-TLX) and the Wilcoxon test of the efficiency scores (with the RSME).

| Topic | Kruskal-Wallis (NASA) | Wilcoxon (RSME) |
| --- | --- | --- |
| A.D. | 0.5865 | 0.9349 |
| A.W.S. | 0.6945 | 0.4191 |
| R.M. | 0.8977 | 0.1105 |
| S.W. | 0.1202 | 0.1778 |

The likelihood model behaves differently when used with different measures of mental workload. On the one hand, with the unidimensional Rating Scale Mental Effort, design condition B (experimental group) had on average a lower efficiency than design condition A (control group) in three of the four topics (A.D., A.W.S. and R.M.), with S.W. as the only exception. On the other hand, with the multidimensional NASA Task Load Index, the efficiency of design condition B (experimental group) was higher than that of design condition A (control group) in every topic. This raises the question

of the completeness of the unidimensional measure of

mental workload (RSME). In line with other

research in the CLT literature, the open question is

whether ‘effort’ is the main indicator of cognitive

load (Paas and Van Merriënboer, 1993) or whether other

mental dimensions influence it during problem

solving (De Jong, 2010). In this research, we believe

that a multidimensional model of cognitive load

seems to be more suitable than a unidimensional

model when used in the computation of the efficiency

of various instructional designs. We argue that a

multidimensional model, such as the NASA-TLX,

better grasps the characteristics of the learner and the

features of an underlying learning task. Results show

that the average performance (MCQ) is higher in the

experimental group than the control group. This can

be attributed to the layout of the collaborative activity

designed to boost the learning phase and enhance the

learning outcomes, namely the achievement of

factual, conceptual and procedural knowledge.

According to the collaborative cognitive load theory

(Kirschner et al., 2018), nine principles can be used

to define complexity. Among these, task

guidance/support, domain expertise, team size and

task complexity were the principles held under

control in the current experiments. In particular, three

factors were observed that can be used to infer task

complexity: 1) amount of content delivered; 2) time

employed for its delivery; 3) level of prior knowledge

of learners. In relation to 1 and 2, A.D. had 28 slides

(50 mins), A.W.S. 25 slides (25 mins), R.M. 20 slides

(35 mins), S.W. 55 slides (75 mins). In relation to

point 3, prior knowledge can be inferred from the year

the topic was delivered: S.W. (first year BSc in

Computer Science), A.W.S. (third year), A.D. (fourth

year) and R.M. (post-graduate). As can be

seen, S.W. was the learning task with the highest level

of complexity in terms of slides, delivery time and

prior knowledge. Results are in line with the

assumption of the collaborative cognitive load theory:

collaborative learning is more effective when the

level of the complexity of an instructional design is

high (Kirschner et al., 2018). In fact, on the one hand,

the complexity of A.D., A.W.S. and R.M. is too low to

justify the utility of a collaborative activity that

involves sharing of working memory resources from

different learners. On the other hand, the higher complexity

of S.W. justifies the utility of the collaborative

activity and the exploitation of extra memory

resources from different learners in processing

information and enhancing the learning outcomes.

5 CONCLUSIONS

A literature review showed a lack of studies aimed at

comparing the efficiency of instructional designs

based on direct instruction and those based on

collaborative inquiry techniques. Motivated by the

statement by Kirschner and colleagues

(2006) whereby inquiry techniques are believed to

be ineffective in the absence of explicit direct

instructions, an empirical experimental study has

been designed. In detail, a comparison of the

efficiency between a traditional instructional design,

purely based upon explicit direct instructions, and its

extension with a guided inquiry technique has been

proposed. The likelihood model of efficiency (Hoffman and Schraw, 2010),

with the critical level proposed by Kalyuga and Sweller (2005), was

employed. This is based upon the ratio of

performance and cognitive load. The former was

quantified with a multiple-choice questionnaire

(percentage) and the latter with a unidimensional

measure of effort first (the Rating Scale Mental

Effort) and a multidimensional measure of mental

workload secondly (the NASA Task Load Index).

Results suggest that extending the traditional

direct instruction approach with a collaborative

inquiry activity that employs direct instructions

in the form of trigger questions is potentially more

efficient. This is in line with the view of Popov, van

Leeuwen and Buis (2017) whereby the development

of cognitive abilities, through the implementation of

cognitive activities (here collaboratively answering

trigger questions and following direct instructions),

facilitates the construction and the achievement of

knowledge. Future empirical experiments are

necessary to demonstrate that this result holds statistically.
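The efficiency ratio summarised above (performance divided by cognitive load) can be illustrated with a minimal Python sketch. The function names, the assumption that both scores are on positive numeric scales, and the sample values are hypothetical and serve illustration only; they are not data or code from this study.

```python
from statistics import mean


def likelihood_efficiency(performance, load):
    """Return the ratio of performance to cognitive load.

    Assumption: performance is a percentage score and load is a
    strictly positive self-reported effort or workload score.
    """
    if load <= 0:
        raise ValueError("load must be strictly positive")
    return performance / load


def mean_efficiency(group):
    """Average the per-learner efficiency over (performance, load) pairs."""
    return mean(likelihood_efficiency(p, w) for p, w in group)


# Hypothetical learners: (performance in %, workload score).
control = [(60.0, 50.0), (70.0, 70.0)]
experimental = [(75.0, 50.0), (80.0, 40.0)]

control_eff = mean_efficiency(control)
experimental_eff = mean_efficiency(experimental)
print(f"control: {control_eff:.2f}, experimental: {experimental_eff:.2f}")
```

A higher ratio means more performance per unit of reported load. Whether a difference between two groups is meaningful would still need to be checked with an appropriate statistical test.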

CSEDU 2019 - 11th International Conference on Computer Supported Education

REFERENCES

Atkinson, R. C. and Shiffrin, R. M., 1971. The control

processes of short-term memory. Stanford University,

Stanford.

Baddeley, A., 1998. Recent developments in working

memory. Current Opinion in Neurobiology, 8(2),

pp.234–238.

Bloom, B. S., 1956. Taxonomy of educational objectives.

Vol. 1: Cognitive domain. New York: McKay, pp.20–24.

De Jong, T., 2010. Cognitive load theory, educational

research, and instructional design: some food for

thought. Instructional Science, 38(2), pp.105–134.

Dewey, J., 1925. What I believe. Boydston, Jo Ann (Ed.).

John Dewey: the later works, 1953, pp.267–278.

Dillenbourg, P., 2002. Over-scripting CSCL: The risks of

blending collaborative learning with instructional

design. Heerlen, Open Universiteit Nederland.

Fischer, F., Bruhn, J., Gräsel, C. and Mandl, H., 2002.

Fostering collaborative knowledge construction with

visualization tools. Learning and Instruction, 12(2),

pp.213–232.

Garrison, D. R., 2007. Online community of inquiry review:

Social, cognitive, and teaching presence issues. Journal

of Asynchronous Learning Networks, 11(1), pp.61–72.

Gerjets, P., Scheiter, K. and Catrambone, R., 2004.

Designing Instructional Examples to Reduce Intrinsic

Cognitive Load: Molar versus Modular Presentation of

Solution Procedures. Instructional Science, 32(1/2),

pp.33–58.

Gerjets, P., Scheiter, K. and Catrambone, R., 2006. Can

learning from molar and modular worked examples be

enhanced by providing instructional explanations and

prompting self-explanations? Learning and Instruction,

16(2), pp.104–121.

Hart, S. G. and Staveland, L. E., 1988. Development of

NASA-TLX (Task Load Index): Results of Empirical

and Theoretical Research. In: Advances in Psychology.

Elsevier, pp.139–183.

Hoffman, B. and Schraw, G., 2010. Conceptions of

efficiency: Applications in learning and problem

solving. Educational Psychologist, 45(1), pp.1–14.

Johnes, J., Portela, M. and Thanassoulis, E., 2017.

Efficiency in education. 68(4), pp.331–338.

Jonassen, D., 2009. Reconciling a human cognitive

architecture. In: Constructivist instruction. Routledge,

pp.25–45.

Kalyuga, S. and Sweller, J., 2005. Rapid dynamic

assessment of expertise to improve the efficiency of

adaptive e-learning. Educational Technology Research

and Development, 53(3), pp.83–93.

Kester, L., Lehnen, C., Van Gerven, P. W. and Kirschner, P.

A., 2006. Just-in-time, schematic supportive information

presentation during cognitive skill acquisition.

Computers in Human Behavior, 22(1), pp.93–112.

Kirschner, F., Paas, F. and Kirschner, P. A., 2009. Individual

and group-based learning from complex cognitive tasks:

Effects on retention and transfer efficiency. Computers in

Human Behavior, 25(2), pp.306–314.

Kirschner, P. A., Sweller, J. and Clark, R. E., 2006. Why

Minimal Guidance During Instruction Does Not Work:

An Analysis of the Failure of Constructivist, Discovery,

Problem-Based, Experiential, and Inquiry-Based

Teaching. Educational Psychologist, 41(2), pp.75–86.

Kirschner, P. A., Sweller, J., Kirschner, F. and Zambrano,

J., 2018. From Cognitive Load Theory to Collaborative

Cognitive Load Theory. International Journal of

Computer-Supported Collaborative Learning, pp.1–21.

Kollar, I., Fischer, F. and Hesse, F. W., 2006. Collaboration

scripts–a conceptual analysis. Educational Psychology

Review, 18(2), pp.159–185.

Lipman, M., 2003. Thinking in education. Cambridge

University Press.

Longo, L., 2014. Formalising Human Mental Workload as

a Defeasible Computational Concept. PhD Thesis.

Trinity College Dublin.

Longo, L., 2015. A defeasible reasoning framework for

human mental workload representation and assessment.

Behaviour and Information Technology, 34(8), pp.758–

786.

Longo, L., 2018. On the Reliability, Validity and

Sensitivity of Three Mental Workload Assessment

Techniques for the Evaluation of Instructional Designs:

A Case Study in a Third-level Course. In: 10th

International Conference on Computer Supported

Education (CSEDU 2018), pp.166–178.

Miller, G. A., 1956. The magical number seven, plus or

minus two: Some limits on our capacity for processing

information. Psychological Review, 63(2), p.81.

Morgan, R. L., Whorton, J. E. and Gunsalus, C., 2000. A

comparison of short term and long term retention:

Lecture combined with discussion versus cooperative

learning. Journal of Instructional Psychology, 27(1),

pp.53–53.

Orru, G., Gobbo, F., O’Sullivan, D. and Longo, L., 2018.

An Investigation of the Impact of a Social

Constructivist Teaching Approach, based on Trigger

Questions, Through Measures of Mental Workload and

Efficiency. In: CSEDU (2). pp.292–302.

Paas, F. and Sweller, J., 2012. An evolutionary upgrade of

cognitive load theory: Using the human motor system

and collaboration to support the learning of complex

cognitive tasks. Educational Psychology Review, 24(1),

pp.27–45.

Paas, F. G. and Van Merriënboer, J. J., 1993. The efficiency

of instructional conditions: An approach to combine

mental effort and performance measures. Human

Factors: The Journal of the Human Factors and

Ergonomics Society, 35(4), pp.737–743.

Popov, V., van Leeuwen, A. and Buis, S., 2017. Are you

with me or not? Temporal synchronicity and

transactivity during CSCL. Journal of Computer

Assisted Learning, 33(5), pp.424–442.

Sátiro, A., 2006. Jugar a pensar con mitos: este libro forma

parte del Proyecto Noria y acompaña al libro para niños

de 8-9 años: Juanita y los mitos. Octaedro.

Scully, D., 2017. Constructing Multiple-Choice Items to

Measure Higher-Order Thinking. Practical

Assessment, Research & Evaluation, 22.

Sweller, J., Ayres, P. and Kalyuga, S., 2011a. Cognitive

load theory, explorations in the learning sciences,

instructional systems, and performance technologies 1.

New York, NY: Springer Science+Business Media.

Sweller, J., Ayres, P. and Kalyuga, S., 2011b. Measuring

cognitive load. In: Cognitive load theory. Springer,

pp.71–85.

Sweller, J., van Merriënboer, J. J. and Paas, F., 2019.

Cognitive architecture and instructional design: 20

years later. Educational Psychology Review, pp.1–32.

Sweller, J., van Merriënboer, J. J. and Paas, F. G., 1998.

Cognitive architecture and instructional design.

Educational Psychology Review, 10(3), pp.251–296.

Zijlstra, F. R. H., 1993. Efficiency in Work Behavior: A

Design Approach for Modern Tools. Delft

University Press.

Zijlstra, F. R. H. and Doorn, L. van, 1985. The construction

of a scale to measure subjective effort. Delft,

Netherlands, p.43.
